From 2005 to 2020, interactive web applications fueled a massive wave of B2B SaaS companies, creating over 100 unicorns and capturing nearly half of all venture funding. Today, we’re on the cusp of a similar shift driven by vertical AI agents.

A vertical AI agent is specialized software built on top of LLMs and carefully tuned to automate real, high-value work. Unlike simple “ChatGPT wrappers,” these systems require deep domain expertise, real agentic architectures, and integration with legacy workflows to function in production.

A promising use case is financial report analysis, where a single LLM struggles to read long reports and extract the key information. Splitting the work across multiple AI agents, each handling a distinct task, can improve the overall results.

In this post, we’ll show you how to build a financial report analysis pipeline using ZenML, SmolAgents, and Langfuse. Managing AI agents can be tricky, so it’s important to have good visibility into how they work. Proper tracking lets us spot issues and understand how the agents are performing, and reproducible pipelines let teams keep track of their experiments, improve performance, and figure out what works best.

TL;DR

Financial document analysis is tedious. You've got hundreds of pages of dense filings, and somewhere in there are the insights that matter. We thought: what if AI could do the heavy lifting, but do it right?

What we built: A pipeline with 5 AI agents working together to analyze financial documents, like having a team of specialists (financial analyst, researcher, fact-checker, etc.) all working on the same report simultaneously.

What's interesting: Instead of just summarizing documents, we combine them with real-time web research to create insights that go well beyond the original filing. Everything is reproducible, and we track how each conclusion was reached.

How ZenML helps: It handles the messy reality of AI workflows by tracking inputs and results and keeping everything organized, so you can reproduce any analysis months later.

What are AI Agents?

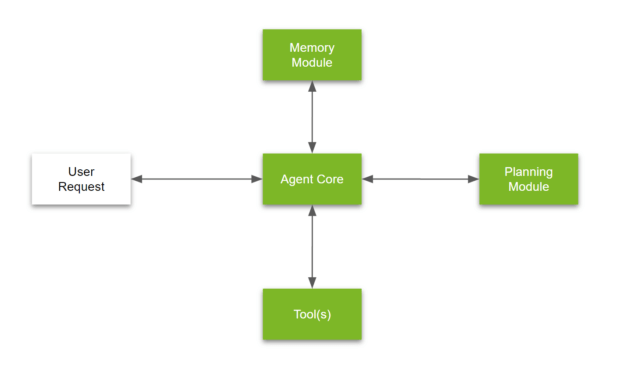

AI agents go beyond traditional LLMs by using tool calling, reasoning, and memory to complete complex tasks. Unlike standard chatbots that rely only on pre-trained data, AI agents can search the web, interact with APIs, and break problems into subtasks to improve accuracy.

For basic agent-like behavior, like simple routing or sequential task execution, you’re better off writing the code yourself. This keeps things faster, more transparent, and easier to debug. But as soon as you introduce tool calling (where an LLM executes functions) or multi-step reasoning (where an LLM determines the next step based on prior actions), things get tricky.
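To make the "write it yourself" advice concrete, here is a minimal sketch of simple routing in plain Python: a keyword router that sends a question to one of a few handlers. The handler names and keyword lists are hypothetical, and no LLM or framework is involved.

```python
from typing import Callable, List, Tuple


# Hypothetical handlers that just return a label, so the routing is easy to see.
def answer_metrics(question: str) -> str:
    return "metrics"


def answer_risk(question: str) -> str:
    return "risk"


def answer_general(question: str) -> str:
    return "general"


# Ordered rules: the first rule with a keyword found in the question wins.
ROUTES: List[Tuple[Tuple[str, ...], Callable[[str], str]]] = [
    (("revenue", "margin", "debt"), answer_metrics),
    (("risk", "regulatory", "lawsuit"), answer_risk),
]


def route(question: str) -> str:
    """Dispatch a question to a handler using plain keyword matching."""
    lowered = question.lower()
    for keywords, handler in ROUTES:
        if any(keyword in lowered for keyword in keywords):
            return handler(question)
    return answer_general(question)  # fallback when no rule matches
```

Every routing decision is an explicit rule, so a misrouted question can be traced by reading a few lines, which is the transparency argument for skipping a framework at this level.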

This is where SmolAgents comes in. It provides lightweight, composable building blocks for agentic workflows without unnecessary overhead. Instead of a heavyweight agent framework, SmolAgents helps structure key components like:

- A defined set of tools the agent can call

- A parser to extract tool calls from LLM output

- A structured system prompt that aligns with the parser

- Memory to track multi-step interactions

- Error handling & retries to manage LLM mistakes

However, for AI agents to be reliable, we need observability: the ability to monitor how agents make decisions. Tools like Langfuse provide real-time tracking, showing which tools an agent calls, what data it retrieves, and how its decisions evolve. Without observability, debugging agent behavior becomes a nightmare.

Overview

Financial reports are long, dense, and packed with critical details: exactly the kind of content that financial analysts, investors, and regulators need to digest quickly. But manually summarizing thousands of reports? That’s slow, expensive, and prone to human error. Traditional automation struggles too, since financial disclosures mix structured tables with unstructured narratives. Here’s an example of a bad summary:

“The company reported revenue of $2.3B in Q4, up from previous periods. Management discussed various operational challenges and market conditions. Risk factors include regulatory changes and competitive pressures. The company plans to focus on growth initiatives and operational efficiency.”

This summary falls short for several reasons:

- Lack of Specificity: Phrases like "up from previous periods" or "growth initiatives" are vague. Investors need numbers, not narratives.

- No Context: Without comparing performance to competitors, analyst expectations, or historical trends, the statement doesn’t help assess the company’s trajectory.

- No Judgment or Insight: The summary regurgitates management's talking points without challenging them. Which challenges are actually material? Are the growth plans feasible given debt levels, market conditions, or past execution?

- Fluff Over Substance: It sounds like a summary, but it's hollow. It leaves the reader with no actionable insight.

Solution

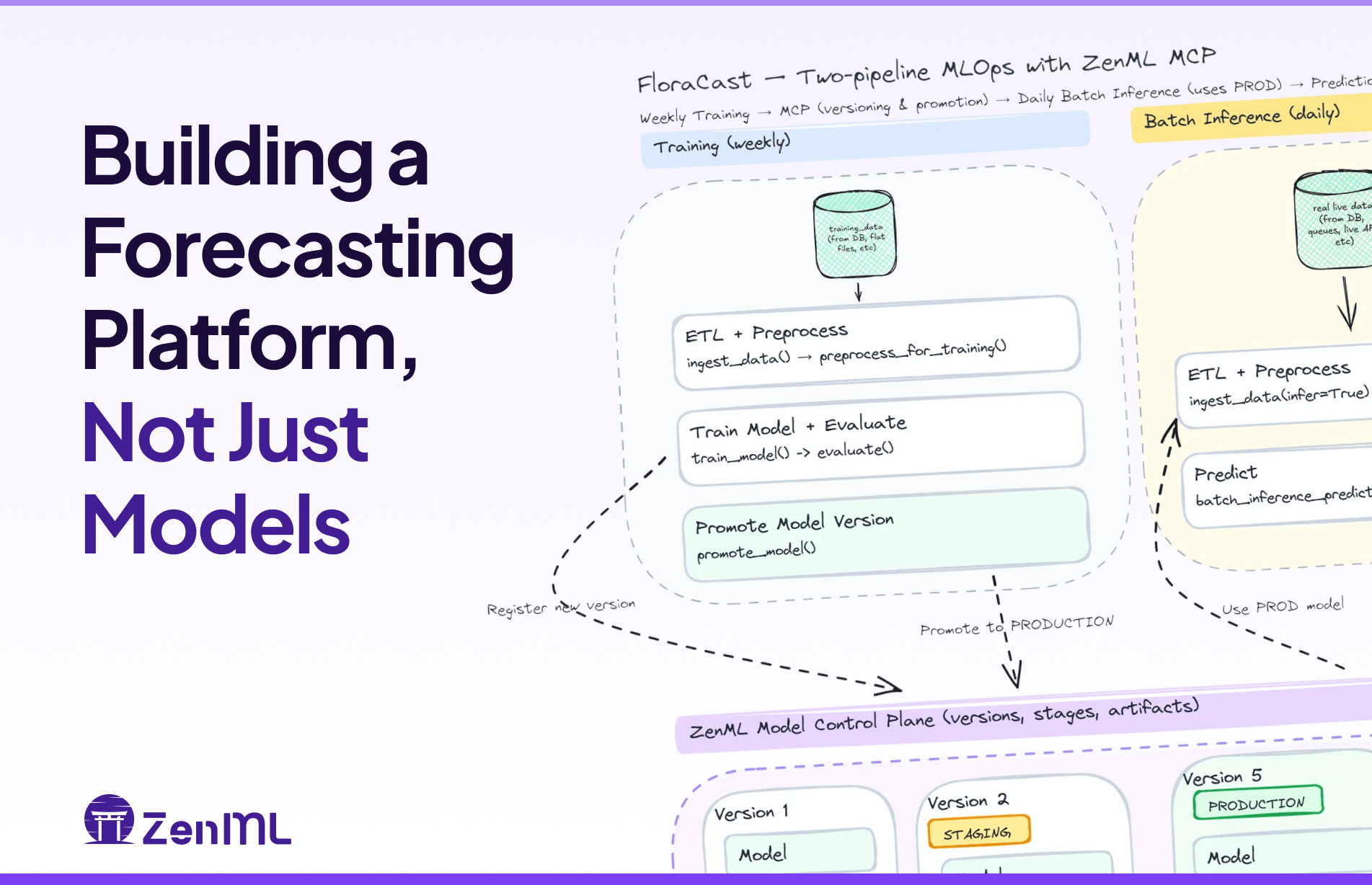

To tackle this, we’re building a financial report analysis pipeline using ZenML and SmolAgents. ZenML provides a structured way to manage our data pipelines, while SmolAgents offers lightweight, flexible AI agents that can process documents and extract key insights. Instead of running a single monolithic LLM call, we break the problem into chained steps: extracting, summarizing, and refining key insights across multiple stages.

Why Use Agents for This?

Traditional LLM workflows tend to be rigid: call the model, get a response, and move on. But financial document analysis requires more nuanced decision-making. SmolAgents allows us to:

- Chain multiple steps together: First, an agent extracts relevant sections. Another agent summarizes those sections, and a final agent refines the output.

- Adapt dynamically: Instead of a one-size-fits-all model, we can swap tools, adjust prompts, and integrate different models as needed.

- Work with structured and unstructured data: Financial reports include balance sheets, text explanations, and regulatory filings. Agents let us process each differently.

Why ZenML + SmolAgents?

ZenML helps orchestrate the pipeline, ensuring smooth data flow and reproducibility. SmolAgents keeps the AI logic simple, modular, and extensible, allowing us to run agents efficiently in a few lines of code. This combination gives us the flexibility of LLMOps with the intelligence of AI agents.

In the next sections, we’ll break down how this works in practice, from dataset handling to model execution.

Implementation

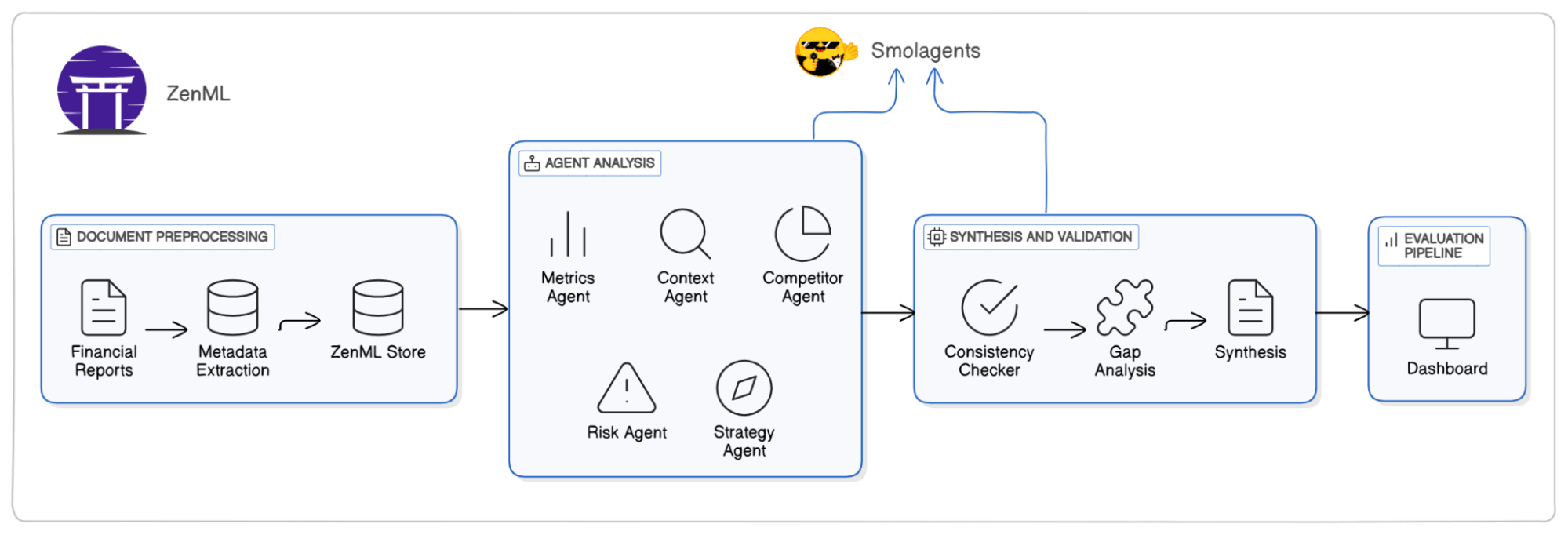

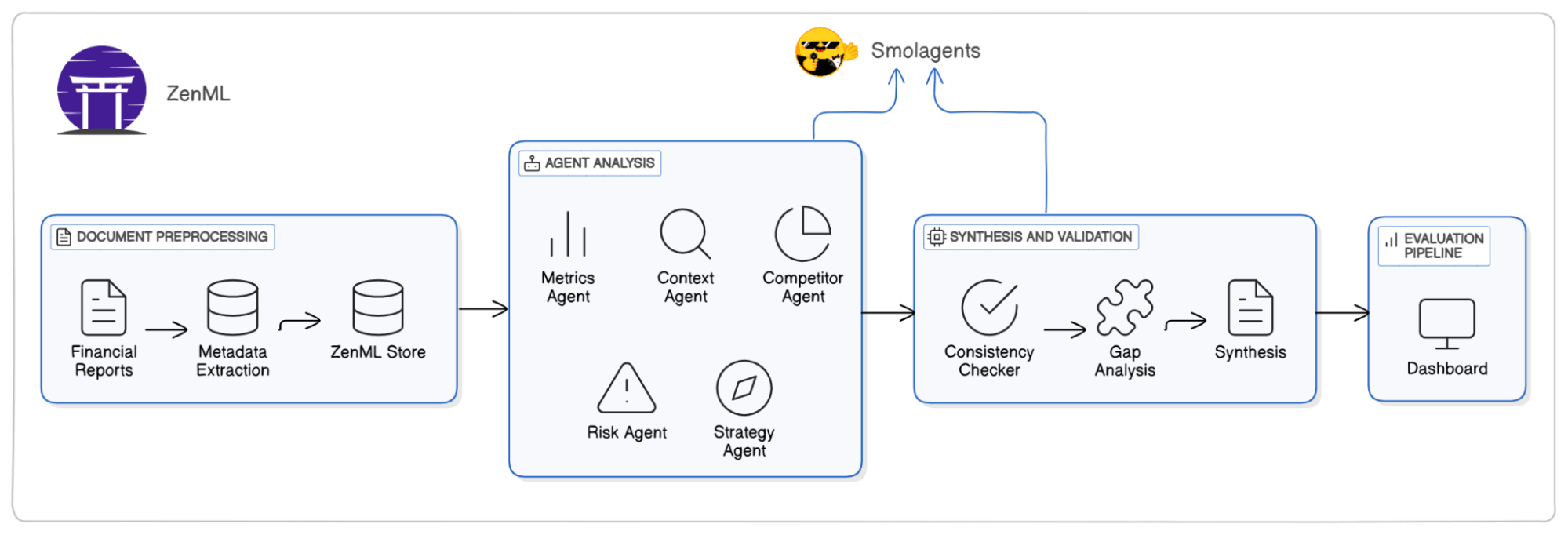

The diagrams above show the financial report analysis pipeline built with ZenML and SmolAgents.

This system is designed to process financial reports, extract insights, and validate data using AI-powered agents. Here’s how it works:

1. Document Preprocessing

Financial reports are ingested, and metadata extraction is performed. Next, the extracted metadata is stored in the ZenML Store, ensuring structured data management.

2. Agent Analysis

A suite of AI agents processes the data to extract key insights:

- Metrics Agent: Identifies and extracts financial metrics.

- Context Agent: Understands the broader financial landscape.

- Competitor Agent: Conducts comparisons with competitor reports.

- Risk Agent: Assesses financial risks.

- Strategy Agent: Provides strategic recommendations.

3. Evaluation

The processed insights undergo evaluation by:

- Consistency Checker: Ensures data accuracy.

- Gap Analysis: Detects missing or conflicting information.

- Synthesis: Compiles the final structured financial insights.

4. Dashboard

The validated insights are displayed in a dashboard, providing a clear and actionable summary for decision-makers.

ZenML ensures the reproducibility of experiments, from data storage to visualization on the dashboard. For observability, all agents are tracked with Langfuse, enabling failure detection and LLM cost analysis. Let’s start with the setup.

Setup

Before building our financial report analysis pipeline, we need to set up the required tools and dependencies. This section walks through installing and configuring ZenML, Langfuse, and SmolAgents, and setting up the necessary API keys. Some agents need to search the web, so you’ll also need a SearchAPI API key.

Installing ZenML

ZenML is a powerful MLOps framework that simplifies the development and deployment of machine learning pipelines. To get started, create a virtual environment and install ZenML:

ZenML supports Python versions 3.9 through 3.12, so ensure you use a compatible version before proceeding.

Logging into ZenML Pro

ZenML offers a Pro version that enhances pipeline orchestration and monitoring. To log in:

- Go to ZenML Pro and sign in.

- Run the following command to authenticate:

This will open a login window. Once authenticated, you’ll be connected to ZenML.

Setting Up Langfuse

Langfuse is a robust observability platform for LLM applications. It allows tracking and debugging of model interactions. To get started:

- Sign up at Langfuse.

- Create an organization and invite team members (the free tier supports up to 2 users).

- Create a project and generate both a public and a secret key.

Langfuse’s free plan includes:

- Access to all platform features (with limits)

- 50,000 observations per month

- 30 days of data retention

- Community support via Discord and GitHub

Configuring API Keys

To enable the required integrations, set up API keys for:

- OpenAI: Obtain an API key from OpenAI

- SearchAPI: Sign up at SearchAPI to receive a free API key (100 free searches available)

Once you have your keys, create an .env file and populate it with the following:
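Before running anything, it can help to confirm that the keys are actually loaded (for example after calling load_dotenv() from the python-dotenv package). This sketch assumes the variable names the OpenAI and Langfuse SDKs read by default; SEARCHAPI_API_KEY is a hypothetical name, so match it to whatever your code reads.

```python
import os
from collections.abc import Mapping
from typing import List

# OPENAI_API_KEY and the LANGFUSE_* names are the SDK defaults;
# SEARCHAPI_API_KEY is an assumed name for the web-search key.
REQUIRED_KEYS = (
    "OPENAI_API_KEY",
    "LANGFUSE_PUBLIC_KEY",
    "LANGFUSE_SECRET_KEY",
    "LANGFUSE_HOST",
    "SEARCHAPI_API_KEY",
)


def missing_keys(env: Mapping = os.environ) -> List[str]:
    """Return the required variables that are unset or empty."""
    return [key for key in REQUIRED_KEYS if not env.get(key)]
```

Calling missing_keys() at the start of a run turns a confusing authentication error deep inside an agent step into an immediate, readable message.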

Setting Up Your Environment with uv and virtualenv

To prepare your environment for building the financial report analysis pipeline using modern and performant tooling, follow these steps:

1. Create and Activate a Virtual Environment

First, create a new Python virtual environment and activate it:

2. Install uv

If you don’t already have uv installed, get it via pip:

3. Define Your requirements.txt

Create a requirements.txt file with the following contents:

4. Install Packages Using uv

Now use uv to install all the dependencies efficiently:

This will ensure all packages are installed into your virtual environment.

Dataset

The FINDSum dataset is designed for financial document summarization. Unlike traditional datasets that focus only on text, FINDSum integrates numerical values from tables, which makes summaries more informative.

It consists of 21,125 annual reports from 3,794 companies, divided into two subsets:

- FINDSum-ROO: Summarizes a company’s results of operations, comparing revenue and expenses over time.

- FINDSum-Liquidity: Focuses on liquidity and capital resources, assessing cash flow and financial stability.

Many critical financial figures appear exclusively in tables, making FINDSum a valuable resource for multi-modal document analysis. Combining structured and unstructured data enables more accurate financial summarization and evaluation.

How to Use the Dataset

The dataset is organized into text and table components. Text files contain segmented report texts, while table files hold numerical data. To use the dataset, load the text and table data, preprocess them, and apply summarization models. The data is hosted on Google Drive, and more details are available in the dataset’s GitHub repository.

How to Download the Dataset

The dataset is hosted on Google Drive and contains two main folders: text and table. To download these folders programmatically, we use the googledriver library, which simplifies downloading shared Google Drive files and folders. Use the following code to download the dataset:

This will create a data/ directory containing both text and table subfolders, each with its respective files. We have created a script that combines the table and text data for accurate financial summarization and evaluation:

The above script generates a CSV that consolidates all relevant structured and unstructured data, preparing it for the next stage in the pipeline: preprocessing.

Preprocessing

Before any meaningful analysis can take place, we need to preprocess the dataset by:

- Splitting the reports into logical sections (e.g., Management Discussion, Risk Factors, Financial Statements).

- Extracting key metadata such as company name, sector, fiscal year, and other metrics.

- Storing the structured document chunks in ZenML’s artifact store to track lineage and ensure reproducibility.

This section walks through the preprocessing pipeline, explaining how we extract and structure the data using ZenML and SmolAgents.

Loading and Extracting Financial Data

We start by loading the raw financial reports. These reports come in two parts:

- A CSV file containing structured financial information.

- A JSON text file with additional financial tables and qualitative summaries.

The following function loads a subset of the dataset for initial processing:

This function reads both the structured and unstructured data, returning them as a Pandas DataFrame and a list of JSON objects, respectively.

Extracting Key Financial Metrics

Once the data is loaded, we extract key financial figures such as total assets, liabilities, cash flow, and revenue. The extract_important_metrics function scans the report’s structured financial tables and retrieves the relevant figures:

Here, we:

- Define a helper function, safe_float(), to clean and convert financial values.

- Check different report sections and extract the relevant financial metrics.
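The exact layout of the FINDSum tables isn’t shown here, so the following is only a sketch of what safe_float() and extract_important_metrics might look like, assuming each report section is a flat {label: value} dictionary. The label aliases in METRIC_LABELS are hypothetical.

```python
import re
from typing import Any, Dict, Optional, Tuple


def safe_float(value: Any) -> Optional[float]:
    """Convert values like '$1,234.5', '(500)' or 12 to float; None if unparseable."""
    if value is None:
        return None
    if isinstance(value, (int, float)):
        return float(value)
    text = str(value).strip()
    # Accounting notation: a value wrapped in parentheses is negative.
    negative = text.startswith("(") and text.endswith(")")
    # Keep only digits, '.' and '-'. Scale words such as "million" are dropped,
    # so units must be normalized separately.
    cleaned = re.sub(r"[^0-9.\-]", "", text)
    try:
        number = float(cleaned)
    except ValueError:  # covers "", "-", "." and malformed input like "1.2.3"
        return None
    return -abs(number) if negative else number


# Hypothetical mapping from output field to table labels that may carry it.
METRIC_LABELS: Dict[str, Tuple[str, ...]] = {
    "total_assets": ("total assets",),
    "total_liabilities": ("total liabilities",),
    "operating_cash_flow": ("net cash provided by operating activities", "operating cash flow"),
    "revenue": ("total revenue", "revenues", "net sales"),
}


def extract_important_metrics(tables: Dict[str, Dict[str, Any]]) -> Dict[str, Optional[float]]:
    """Scan every section's {label: value} table and keep the first match per metric."""
    found: Dict[str, Optional[float]] = {name: None for name in METRIC_LABELS}
    for section in tables.values():
        for label, raw in section.items():
            normalized = label.strip().lower()
            for name, aliases in METRIC_LABELS.items():
                if found[name] is None and normalized in aliases:
                    found[name] = safe_float(raw)
    return found
```

Metrics that never appear stay None, so downstream steps can tell "missing" apart from a real zero.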

Saving Processed Data

Once extracted, the financial data is merged with the original dataset and saved as a CSV for downstream processing.

At this stage, we have a structured CSV file containing financial figures alongside the original text data.

Extracting Document Sections with an LLM

With the structured data saved, we now use a Large Language Model (LLM) to extract logical sections from the document text. ZenML provides a step-based workflow where each operation is defined as a step.

What Are ZenML Steps?

Steps in ZenML are Python functions annotated with the @step decorator. They encapsulate specific tasks, such as loading data or running an LLM-based extraction.

Example of a simple ZenML step:
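As a minimal illustration (not taken from the project), here is a step that counts distinct company names. ZenML uses the type annotations to decide how each output is stored as an artifact. The try/except fallback is only there so the sketch runs where ZenML isn’t installed.

```python
try:
    from zenml import step  # the real decorator when ZenML is installed
except ImportError:  # no-op stand-in so this sketch also runs without ZenML

    def step(func):
        return func


@step
def count_unique_companies(company_names: list) -> int:
    """Typed input in, typed output (stored as an artifact by ZenML) out."""
    return len({name.strip().lower() for name in company_names})
```

Inside a function decorated with ZenML’s @pipeline, calling count_unique_companies(...) adds the step to the DAG rather than executing it immediately.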

Now, let’s define our preprocessing steps.

Step 1: Load Data

The first step reads the preprocessed CSV and loads it into a Pandas DataFrame.

Step 2: Extract Document Sections with LLM

We use OpenAI’s GPT-4o model to break financial reports into sections like Management Discussion, Risk Factors, and Financial Statements.

The DocumentSections is as follows:
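The repository’s definition isn’t reproduced here. As a hedged stand-in, the sketch below models the three sections named above as a dataclass and parses the model’s JSON reply into it; the field names are assumptions, and the real code likely uses a Pydantic model instead.

```python
import json
from dataclasses import dataclass, fields


@dataclass
class DocumentSections:
    """Hypothetical schema: the text of each logical section of one report."""

    management_discussion: str = ""
    risk_factors: str = ""
    financial_statements: str = ""

    @classmethod
    def from_llm_json(cls, raw: str) -> "DocumentSections":
        """Build an instance from the LLM's JSON reply, ignoring unexpected keys."""
        data = json.loads(raw)
        known = {f.name for f in fields(cls)}
        return cls(**{key: str(value) for key, value in data.items() if key in known})
```

Defaulting every field to an empty string means a reply that omits a section still parses, and the gap shows up later as empty text rather than as a crash.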

Step 3: Extract Metadata

This step extracts structured metadata such as company name, fiscal year, and sector from the DataFrame.

Step 4: Store Structured Data in ZenML

Finally, we store the extracted sections and metadata in ZenML’s artifact store.

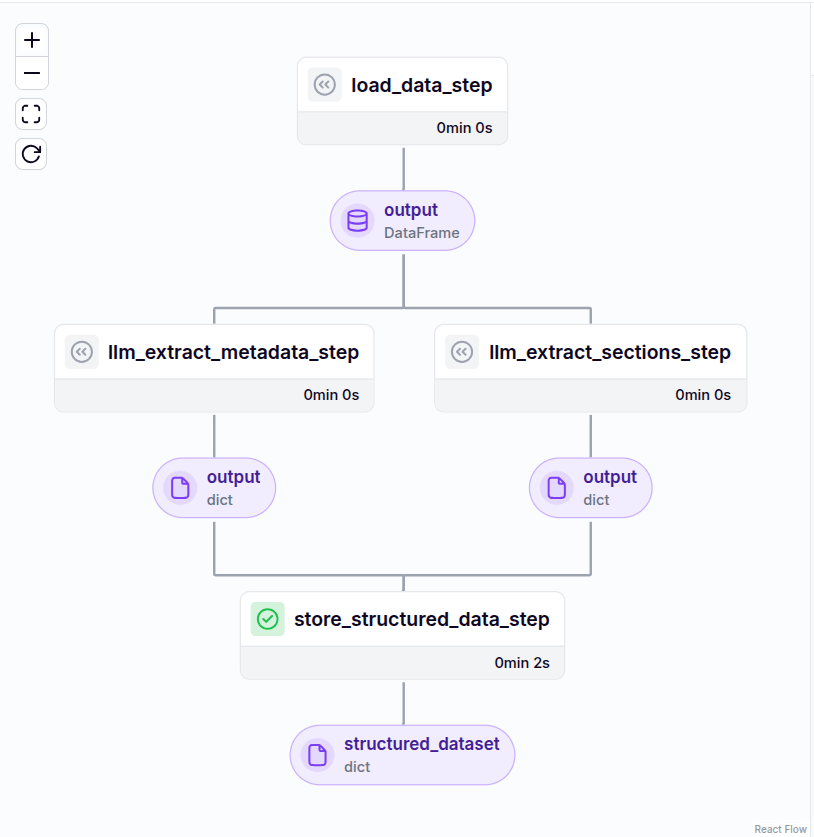

Orchestrating the Steps with a ZenML Pipeline

In ZenML, a pipeline defines the execution order of steps. The following pipeline loads the dataset, extracts sections and metadata, and stores the results.

This pipeline ensures each step runs sequentially and tracks outputs for lineage and reproducibility. Here is the DAG (directed acyclic graph) for the pipeline:

Agent Analysis

In this section, we break down the role of each agent in the Financial Report Analysis Pipeline, using ZenML and SmolAgents to extract, analyze, and contextualize financial data. Each agent operates as an independent ZenML step, focusing on specific analytical tasks. Let's explore how they function and interact.

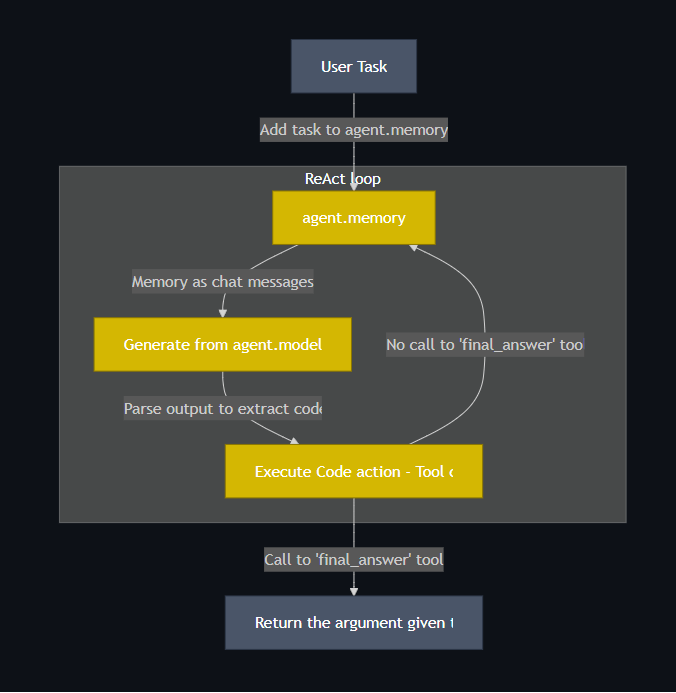

What is a Code Agent in SmolAgents?

In a multi-step agent system, an LLM (Large Language Model) writes an action at each step (typically as JSON instructions) containing tool names and arguments. For example:
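A JSON-style action names one tool and its arguments, and the framework parses and dispatches it. In this sketch, the "tool"/"arguments" keys and the stubbed search_recent_news tool are hypothetical; each framework defines its own format.

```python
import json


# Hypothetical stand-in for the real web-search tool.
def search_recent_news(company: str, max_results: int = 3) -> str:
    return f"{max_results} headlines about {company}"


TOOLS = {"search_recent_news": search_recent_news}

# A JSON action as an LLM might emit it: one tool name plus its arguments.
llm_output = '{"tool": "search_recent_news", "arguments": {"company": "Acme Corp", "max_results": 5}}'


def execute_json_action(raw: str) -> str:
    """Parse the JSON action, look up the tool and call it with the given arguments."""
    action = json.loads(raw)
    tool = TOOLS[action["tool"]]
    return tool(**action["arguments"])
```

Each JSON action triggers exactly one call, so combining results (say, comparing two companies) needs another round trip through the LLM.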

However, research suggests that expressing these actions in executable code leads to more effective tool execution.

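This approach aligns with the fact that programming languages are designed to express computational tasks, which makes them a better fit than JSON for action calls: code can store intermediate results in variables, loop, and combine several tool calls in a single step. For example: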
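With a code action, the model writes a small Python snippet instead, so it can store intermediate results, loop, and call tools in one step. In this sketch the net_margin tool and the figures are hypothetical, and plain exec() with trimmed builtins stands in for the executor. SmolAgents actually runs such code in its own restricted interpreter (or a remote sandbox), and a trimmed builtins dict is not a real security boundary.

```python
# Hypothetical tool the agent is allowed to call.
def net_margin(revenue: float, net_income: float) -> float:
    """Net income as a fraction of revenue."""
    return net_income / revenue


# What an LLM might emit as ONE action: variables, a loop and several tool calls.
code_action = """
quarters = {"Q3": (100.0, 12.0), "Q4": (120.0, 18.0)}
margins = {q: net_margin(rev, ni) for q, (rev, ni) in quarters.items()}
best_quarter = max(margins, key=margins.get)
"""

# Expose only the tool and the one builtin the snippet needs (illustration only).
sandbox_globals = {"__builtins__": {"max": max}, "net_margin": net_margin}
exec(code_action, sandbox_globals)
```

The same work as a JSON agent would take two tool calls plus a final comparison step, each one a separate LLM turn.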

Hugging Face’s SmolAgents builds on this idea with CodeAgent, which has several benefits over the JSON format:

- Fewer Steps: According to the SmolAgents authors, CodeAgents use about 30% fewer steps to complete tasks than traditional ReAct-style agents, which reduces the number of LLM calls and speeds up workflows.

- Higher Accuracy: Especially on complex, multi-step tasks, CodeAgents outperform traditional agents by chaining logic better through code.

- More Natural for Programmers: If you're already working in Python, CodeAgents just feel more intuitive.

This approach is inspired by ReAct (Reasoning + Acting) agents, a framework where the agent reasons out loud and performs actions to solve tasks. CodeAgents are like ReAct—but with Python code as the "act" part.

Now, let’s take a closer look at the different agents and the tools they use.

1. Financial Metrics Agent

The Financial Metrics Agent focuses on extracting and normalizing key financial indicators such as revenue, profit margins, and debt ratios. It uses a set of tools, each responsible for a different aspect of financial metric extraction.

Extracting Revenue Metrics

The extract_revenue_metrics function retrieves and normalizes revenue-related figures, calculating growth rates where applicable:

This function ensures a structured extraction of revenue data, including growth rates and three-year CAGR, for deeper trend analysis.
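The project’s implementation isn’t shown here; the following is a sketch of the calculation, assuming the tool receives a list of annual revenue figures, oldest first. The three-year CAGR follows the standard formula (end / start)^(1/3) - 1. Type hints plus a docstring with an Args: section are what SmolAgents’ @tool decorator expects when turning a function into a tool.

```python
from typing import Dict, List, Optional


def extract_revenue_metrics(revenues: List[float]) -> Dict[str, object]:
    """Compute year-over-year growth rates and a three-year CAGR.

    Args:
        revenues: Annual revenue figures, oldest first, in one consistent unit.
    """
    # Growth for each consecutive pair; None when the earlier year is zero.
    growth_rates = [
        (curr - prev) / prev if prev else None
        for prev, curr in zip(revenues, revenues[1:])
    ]
    cagr_3y: Optional[float] = None
    if len(revenues) >= 4 and revenues[-4] > 0 and revenues[-1] > 0:
        # CAGR over the last three years: (end / start) ** (1 / 3) - 1
        cagr_3y = (revenues[-1] / revenues[-4]) ** (1 / 3) - 1
    return {
        "latest_revenue": revenues[-1] if revenues else None,
        "growth_rates": growth_rates,
        "cagr_3y": cagr_3y,
    }
```

With fewer than four annual figures the CAGR is left as None instead of being computed over a shorter window.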

Analyzing Profit Margins

Similarly, analyze_profit_margins calculates various profit-related metrics:

This function ensures we capture critical profitability indicators for financial health assessment.
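A comparable sketch for margins, assuming the tool receives absolute figures in a single currency unit; the parameter names are hypothetical. A zero revenue returns None for every margin rather than raising.

```python
from typing import Dict, Optional


def analyze_profit_margins(
    revenue: float, gross_profit: float, operating_income: float, net_income: float
) -> Dict[str, Optional[float]]:
    """Return gross, operating and net margins as fractions of revenue.

    Args:
        revenue: Total revenue for the period.
        gross_profit: Revenue minus cost of goods sold.
        operating_income: Profit after operating expenses.
        net_income: Bottom-line profit.
    """
    if revenue == 0:
        return {"gross_margin": None, "operating_margin": None, "net_margin": None}
    return {
        "gross_margin": gross_profit / revenue,
        "operating_margin": operating_income / revenue,
        "net_margin": net_income / revenue,
    }
```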

Calculating Debt Ratios

Debt analysis is crucial in financial reporting. The calculate_debt_ratios function provides various leverage and liquidity ratios:

This tool ensures a structured breakdown of financial leverage using industry-standard debt metrics.
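And a sketch of the debt and liquidity ratios, using the standard definitions (debt-to-equity, debt-to-assets, interest coverage as EBIT over interest expense, and the current ratio). The signature is an assumption; the project’s tool may take different inputs.

```python
from typing import Dict, Optional


def calculate_debt_ratios(
    total_debt: float,
    total_equity: float,
    total_assets: float,
    ebit: float,
    interest_expense: float,
    current_assets: float,
    current_liabilities: float,
) -> Dict[str, Optional[float]]:
    """Compute common leverage and liquidity ratios; None when a denominator is zero."""

    def ratio(numerator: float, denominator: float) -> Optional[float]:
        return numerator / denominator if denominator else None

    return {
        "debt_to_equity": ratio(total_debt, total_equity),
        "debt_to_assets": ratio(total_debt, total_assets),
        "interest_coverage": ratio(ebit, interest_expense),
        "current_ratio": ratio(current_assets, current_liabilities),
    }
```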

The main advantage of the agent approach is that the agent itself extracts the arguments for these functions from the unstructured report data before calling them.

2. Market Context Agent

The Market Context Agent uses external data sources to gather news, market trends, and analyst opinions on a company. It relies on search_recent_news, search_market_trends, and search_analyst_opinions.
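The search tools wrap a web search API. The sketch below shows one possible shape for search_recent_news; to avoid guessing SearchAPI’s request and response format, the HTTP call is injected as a hypothetical fetch function that returns dicts with title, source, and date keys.

```python
from typing import Any, Callable, Dict, List

# Hypothetical client signature: takes a query string, returns result dicts.
SearchFn = Callable[[str], List[Dict[str, Any]]]


def search_recent_news(company: str, fetch: SearchFn, max_items: int = 5) -> str:
    """Return a bullet list of recent headlines about a company.

    Args:
        company: Name of the company to search for.
        fetch: Hypothetical search client; the real tool would call SearchAPI.
        max_items: Maximum number of results to inspect.
    """
    results = fetch(f"{company} latest news")
    lines = []
    for item in results[:max_items]:
        title = str(item.get("title", "")).strip()
        if not title:  # skip results without a usable headline
            continue
        source = item.get("source", "unknown source")
        date = item.get("date", "n.d.")
        lines.append(f"- {title} ({source}, {date})")
    return "\n".join(lines) if lines else f"No recent news found for {company}."
```

Because agent tool inputs have to be simple JSON-like types, the search client would likely be fixed in advance (for example, by defining the tool inside a closure that captures it), so the agent only supplies the company name and item limit.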

The search_recent_news tool retrieves the latest market news, helping analysts understand external influences on financial performance. Similarly, here are the other two tools:

Here’s how we combine them in a ZenML step:

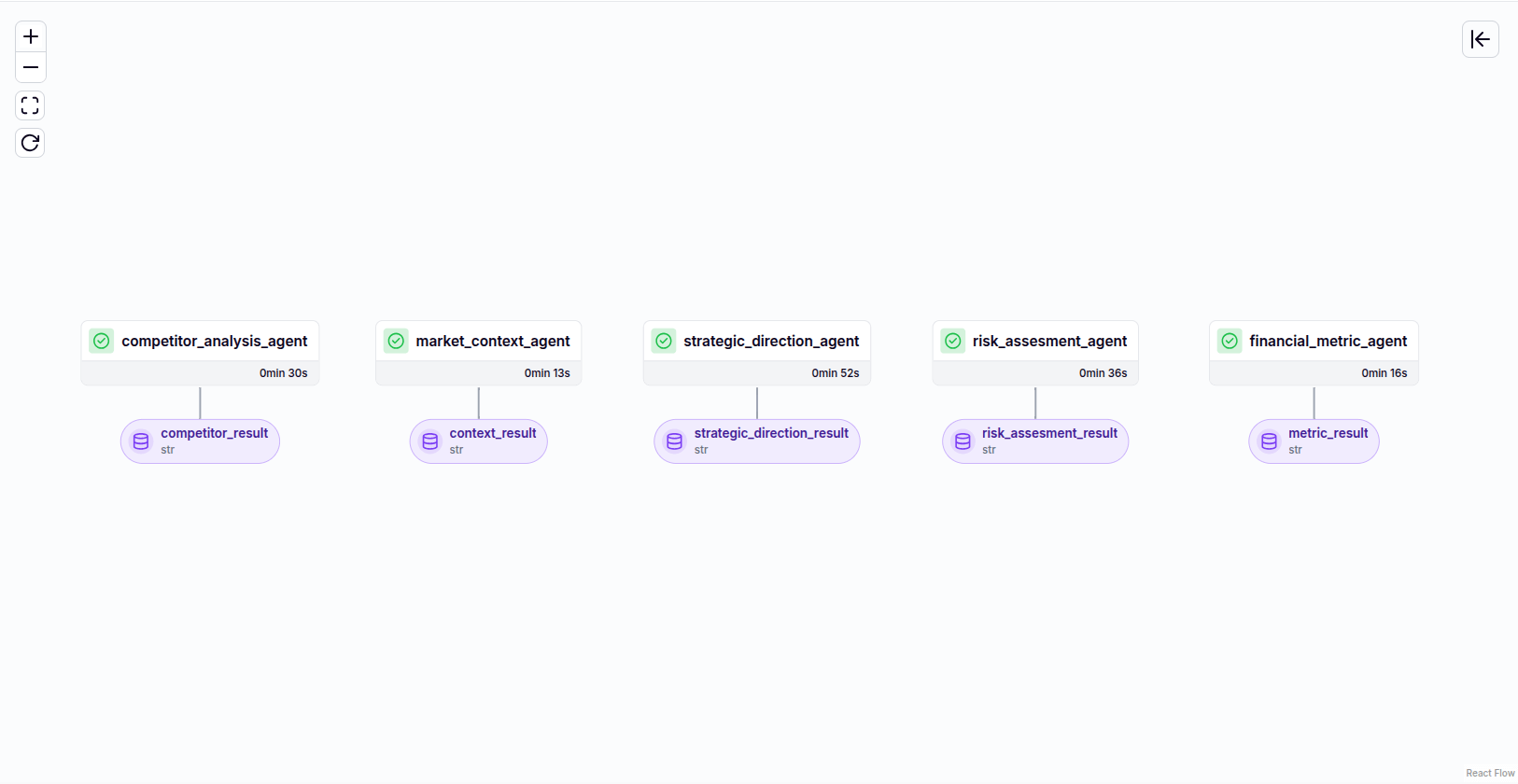

This structured approach ensures that financial insights are extracted, contextualized, and analyzed efficiently. Three more agents (Competitor Analysis, Risk Assessment, and Strategic Direction) further enrich the financial analysis pipeline. More details can be found in the GitHub repository.

Pipeline Integration

Here’s how to orchestrate the steps using the ZenML pipeline. It’s integrated with observability via OpenTelemetry (OTel) and Langfuse for tracing and monitoring. Multiple AI-powered agents perform financial and strategic assessments based on provided data.
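One common way to send OpenTelemetry traces to Langfuse is to point the OTLP exporter at Langfuse’s OTel endpoint with Basic auth built from the public and secret keys. The sketch below builds those settings; the endpoint path and header format mirror Langfuse’s OpenTelemetry documentation at the time of writing, so verify them against the current docs.

```python
import base64
from typing import Dict


def langfuse_otel_env(
    public_key: str, secret_key: str, host: str = "https://cloud.langfuse.com"
) -> Dict[str, str]:
    """Build OTLP exporter settings that send OpenTelemetry spans to Langfuse."""
    # Basic auth token: base64("public_key:secret_key")
    auth = base64.b64encode(f"{public_key}:{secret_key}".encode()).decode()
    return {
        "OTEL_EXPORTER_OTLP_ENDPOINT": f"{host.rstrip('/')}/api/public/otel",
        "OTEL_EXPORTER_OTLP_HEADERS": f"Authorization=Basic {auth}",
    }
```

You would apply these with os.environ.update(...) before creating the OTLP exporter and instrumenting the agents (for example with OpenInference’s SmolagentsInstrumentor), so each agent step shows up as a span in Langfuse.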

Here’s what the DAG looks like:

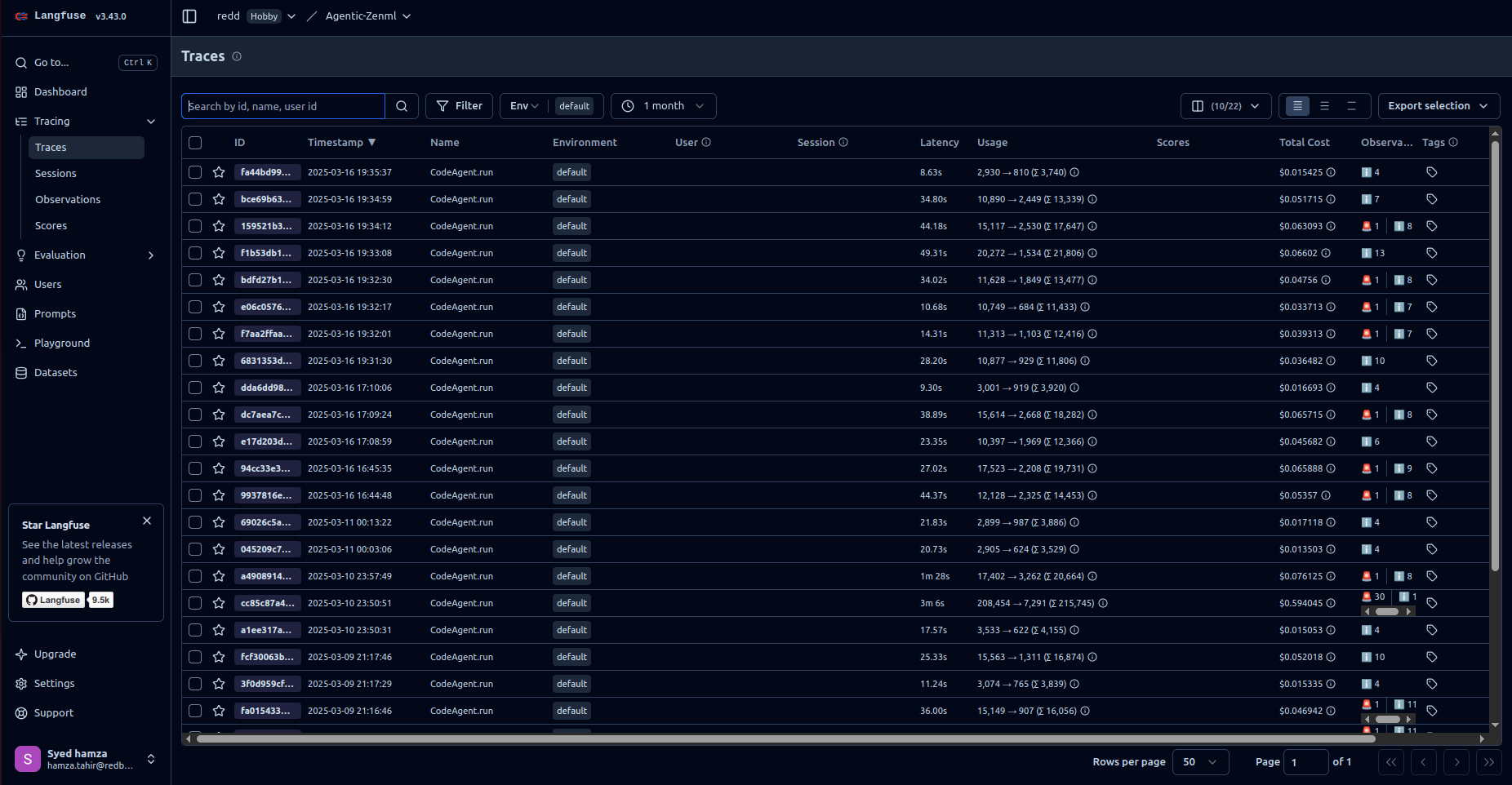

Let’s take a moment to look at what Langfuse offers. It provides comprehensive trace data that is invaluable for both debugging and cost analysis, and knowing how to interpret these traces can make your LLM applications noticeably more efficient and cost-effective.

Debugging with Langfuse

Each trace in Langfuse captures the flow of a request through various components, such as retrievers and LLMs. By examining these traces, developers can:

- Identify Errors: Pinpoint the exact stage where an error occurred, facilitating quicker resolution.

- Analyze Latency: Determine which components contribute most to processing time, aiding in performance optimization.

- Monitor Token Usage: Track input and output tokens to understand and optimize token consumption.

For instance, if a particular component consistently shows high latency or token usage, it may be a candidate for optimization or replacement.

Cost Analysis with Langfuse

Langfuse allows for detailed cost tracking by associating costs with traces. This is particularly useful when dealing with multiple LLM providers or custom models. By analyzing trace metadata, developers can:

- Estimate Costs: Calculate costs based on token usage and predefined rates.

- Identify Cost Drivers: Determine which components or queries are the most expensive.

- Optimize Resource Allocation: Make informed decisions about where to allocate resources for maximum efficiency.

By regularly reviewing these metrics, developers can maintain optimal performance and cost-efficiency in their LLM applications.
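Langfuse can compute costs automatically when model prices are configured; the sketch below only illustrates the arithmetic behind estimating costs and identifying cost drivers. The per-million-token prices are placeholders, not any provider’s actual rates.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Price:
    """Placeholder USD prices per one million tokens."""

    input_per_million: float
    output_per_million: float


def estimate_cost(input_tokens: int, output_tokens: int, price: Price) -> float:
    """Cost in USD for one call or one aggregated component."""
    return (
        input_tokens * price.input_per_million + output_tokens * price.output_per_million
    ) / 1_000_000


def cost_by_component(usages: Dict[str, Tuple[int, int]], price: Price) -> List[Tuple[str, float]]:
    """Rank components (e.g., agents) by estimated cost, most expensive first."""
    costs = [(name, estimate_cost(i, o, price)) for name, (i, o) in usages.items()]
    return sorted(costs, key=lambda item: item[1], reverse=True)
```

The token counts per component are exactly what a Langfuse trace exposes, so the ranking points straight at the agent worth optimizing first.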

After the pipeline run is completed, a trace of the run, including all the agents, will be produced. Here’s what it will look like:

To maintain separation of concerns, all prompts have been organized within the config folder. Below are the prompts used by each of the agents:

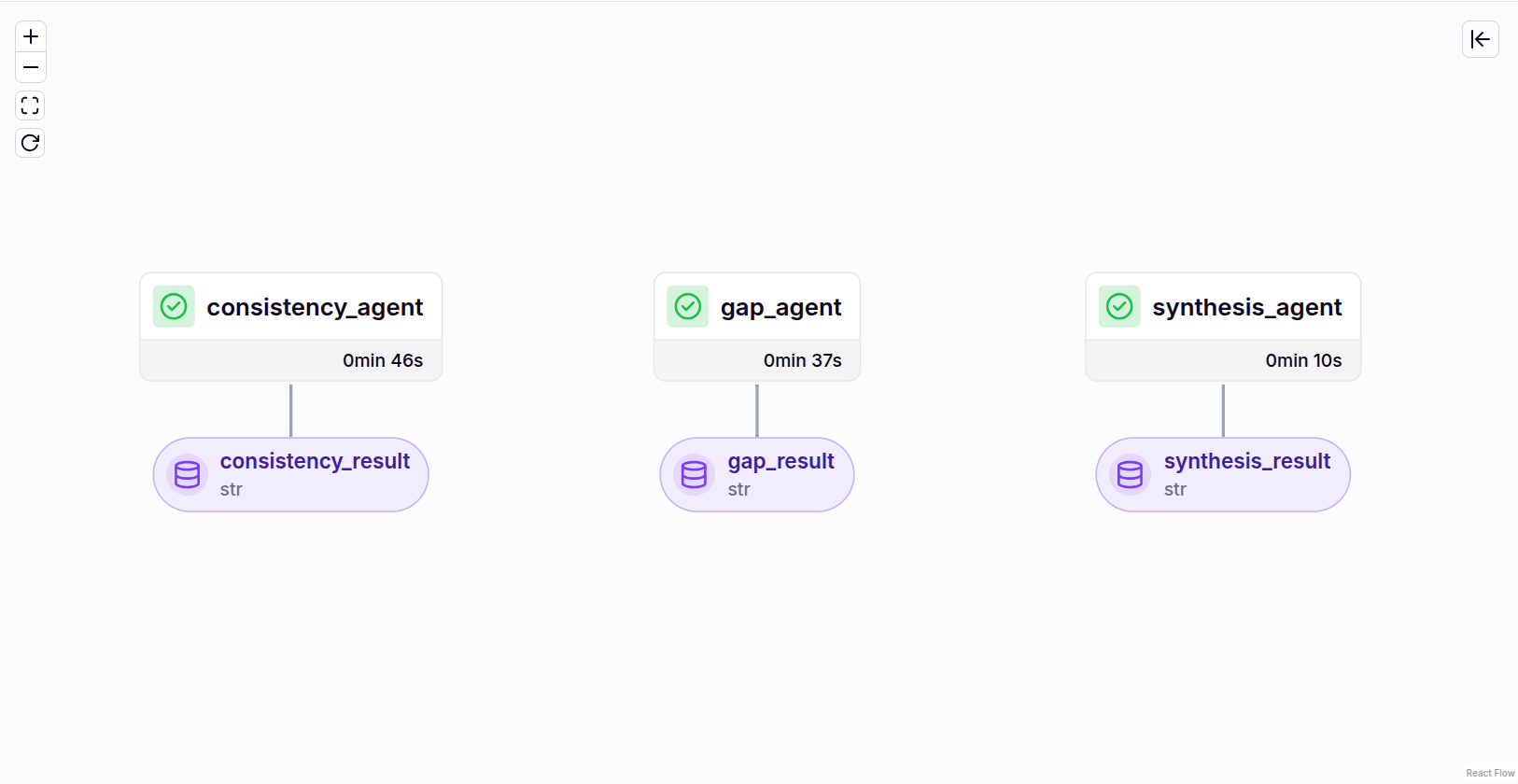

Synthesis & Validation

With many agents working together, it’s important to check the combined output for consistency and completeness against a common source of truth. To ensure high-quality financial analysis, we use a multi-agent system that verifies, enhances, and synthesizes financial reports. This approach involves three AI-driven agents:

- Consistency Checker: Cross-validates financial metrics, market context, and competitor data for contradictions.

- Gap Analysis Agent: Identifies missing critical information and suggests areas requiring further research.

- Synthesis Agent: Compiles all findings into a cohesive report with properly attributed sources.

Each agent plays a vital role in ensuring data accuracy and completeness. Here’s how:

Consistency Checker

The Consistency Checker detects contradictions in financial data by cross-referencing information from various sources, such as financial metrics, market trends, and company-reported data.

Using OpenAI’s GPT-4o, the agent checks financial insights for contradictions before they are used in decision-making.
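The project delegates this check to GPT-4o. A cheap deterministic pre-check could complement it: the sketch below flags any metric whose values from two sources differ by more than a relative tolerance. The input shape and the 5% default tolerance are assumptions, not part of the original pipeline.

```python
from itertools import combinations
from typing import Dict, List


def find_contradictions(reported: Dict[str, Dict[str, float]], rel_tol: float = 0.05) -> List[str]:
    """Flag metrics whose values differ by more than rel_tol between any two sources.

    `reported` maps a metric name to {source_name: value}.
    """
    issues = []
    for metric, by_source in reported.items():
        # Compare every pair of sources; sorting keeps the output order stable.
        for (src_a, a), (src_b, b) in combinations(sorted(by_source.items()), 2):
            scale = max(abs(a), abs(b))
            if scale and abs(a - b) / scale > rel_tol:
                issues.append(f"{metric}: {src_a}={a} vs {src_b}={b}")
    return issues
```

Flagged pairs could then be handed to the LLM-based checker, which is better placed to judge whether a difference reflects an error or, say, a different reporting period.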

Gap Analysis Agent

The Gap Analysis Agent identifies missing data points and suggests areas for further investigation. This ensures that all critical information is included before synthesis.

By addressing data gaps early, this agent enhances the reliability of financial reports.
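Gap analysis in the project is LLM-driven; the sketch below shows the underlying idea with a hypothetical checklist of required fields, reporting any that are missing or empty so they could be passed to the agent’s prompt for follow-up research.

```python
from typing import Dict, List, Tuple

# Hypothetical checklist of fields a complete analysis should contain.
REQUIRED_FIELDS: Dict[str, Tuple[str, ...]] = {
    "financial_metrics": ("revenue", "net_margin", "debt_to_equity"),
    "market_context": ("recent_news",),
    "risk": ("key_risks",),
}


def find_gaps(analysis: Dict[str, Dict[str, object]]) -> List[str]:
    """List 'section.field' entries that are missing or empty (0.0 counts as present)."""
    gaps = []
    for section, required in REQUIRED_FIELDS.items():
        present = analysis.get(section, {})
        for field in required:
            if present.get(field) in (None, "", [], {}):
                gaps.append(f"{section}.{field}")
    return gaps
```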

Synthesis Agent

The Synthesis Agent consolidates all findings into a well-structured financial report. It integrates data from the Consistency Checker and Gap Analysis Agent, ensuring clarity and proper attribution.

This step ensures the final report is comprehensive and well-structured.

Agent Validation Steps

We use the tools outlined above and the CodeAgent from SmolAgents to process the query. The query is formatted to invoke the appropriate functions and deliver the responses.

Each step ensures that data flows through a structured validation process.

Final Pipeline Execution

The pipeline integrates ZenML, Langfuse, and OpenTelemetry for end-to-end validation, observability, and tracking.

Similar to the agent analysis, here’s what the DAG looks like for agent validation:

Dashboard

A financial report is only as useful as its presentation. Raw data and insights, no matter how detailed, need a structured and visually accessible format to provide real value. In this section, we’ll build a simple dashboard that transforms extracted financial metrics and analysis reports into a well-organized HTML report.

At the core of this implementation is the financial_dashboard step, which takes a structured response containing financial insights and converts it into an interactive HTML dashboard. This step relies on utility functions to extract relevant data and generate HTML components for a clean, readable output.

Extracting Key Metrics

Before constructing the dashboard, we must parse the financial report’s response and extract key insights. The function extract_metrics() handles this by organizing the structured response into different categories: financial metrics, market context, competitor insights, contradictions, and additional context.

This function retrieves specific components from the response, ensuring that each category is properly structured. Financial metrics are extracted separately using parse_financial_metrics(), which applies regex patterns to identify numerical values such as revenue growth rate, net margin, and debt-to-equity ratio.
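The actual regex patterns aren’t reproduced here. The sketch below shows the general approach with hypothetical patterns for the three metrics mentioned above; real patterns have to match how the agents phrase their output.

```python
import re
from typing import Dict, Optional

# Hypothetical patterns: a label, an optional ':' or '=', then a number.
PATTERNS = {
    "revenue_growth_rate": r"revenue growth(?: rate)?\s*[:=]?\s*(-?\d+(?:\.\d+)?)\s*%",
    "net_margin": r"net (?:profit )?margin\s*[:=]?\s*(-?\d+(?:\.\d+)?)\s*%",
    "debt_to_equity": r"debt[- ]to[- ]equity(?: ratio)?\s*[:=]?\s*(-?\d+(?:\.\d+)?)",
}


def parse_financial_metrics(text: str) -> Dict[str, Optional[float]]:
    """Pull the first number that follows each metric label (case-insensitive)."""
    metrics: Dict[str, Optional[float]] = {}
    for name, pattern in PATTERNS.items():
        match = re.search(pattern, text, flags=re.IGNORECASE)
        metrics[name] = float(match.group(1)) if match else None
    return metrics
```

Percentages are returned as written (12.5 means 12.5%), so any consumer has to agree on that convention.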

With the data extracted and structured, the next step is transforming these insights into an HTML format.

Generating the Dashboard

The financial_dashboard step constructs an HTML report using ZenML’s HTMLString. It retrieves extracted data and then applies utility functions to format financial metrics and market context as structured HTML lists.

To organize the metrics, competitors, and additional context, we'll use an LLM to extract this information into a structured JSON format.

This function constructs an HTML structure where each section presents a specific type of insight. The financial metrics and market context are rendered using helper functions like generate_metrics_html(), which wraps key values in styled HTML elements.

Similarly, generate_context_html() follows the same approach for market insights.

Encapsulating the dashboard logic in a ZenML step ensures that this visualization integrates smoothly into a broader analysis pipeline.

Integrating with the Pipeline

To incorporate the dashboard into the pipeline, we define a simple ZenML pipeline function that executes the financial_dashboard step. This pipeline orchestrates the flow from raw report data to a finalized HTML dashboard, making financial insights easily interpretable. Here’s what the report looks like in the dashboard:


This completes our walkthrough of a complex workflow involving multiple agents, built with ZenML and SmolAgents.

Conclusion

The rise of vertical AI agents marks a new era in automating high-value tasks like financial report analysis. While a single LLM may struggle to process long documents and extract key insights, using multiple specialized AI agents can significantly enhance results.

This guide showed how to build such applications with far less friction. Tools like ZenML and Langfuse make the system more transparent to engineers, so developers can confidently reproduce their pipelines as the underlying technologies evolve.

Ready to start building your own AI pipelines? Sign up for ZenML today.

Full code for the project is available at https://github.com/haziqa5122/Financial-report-summarization-ZenML-and-SmolAgents