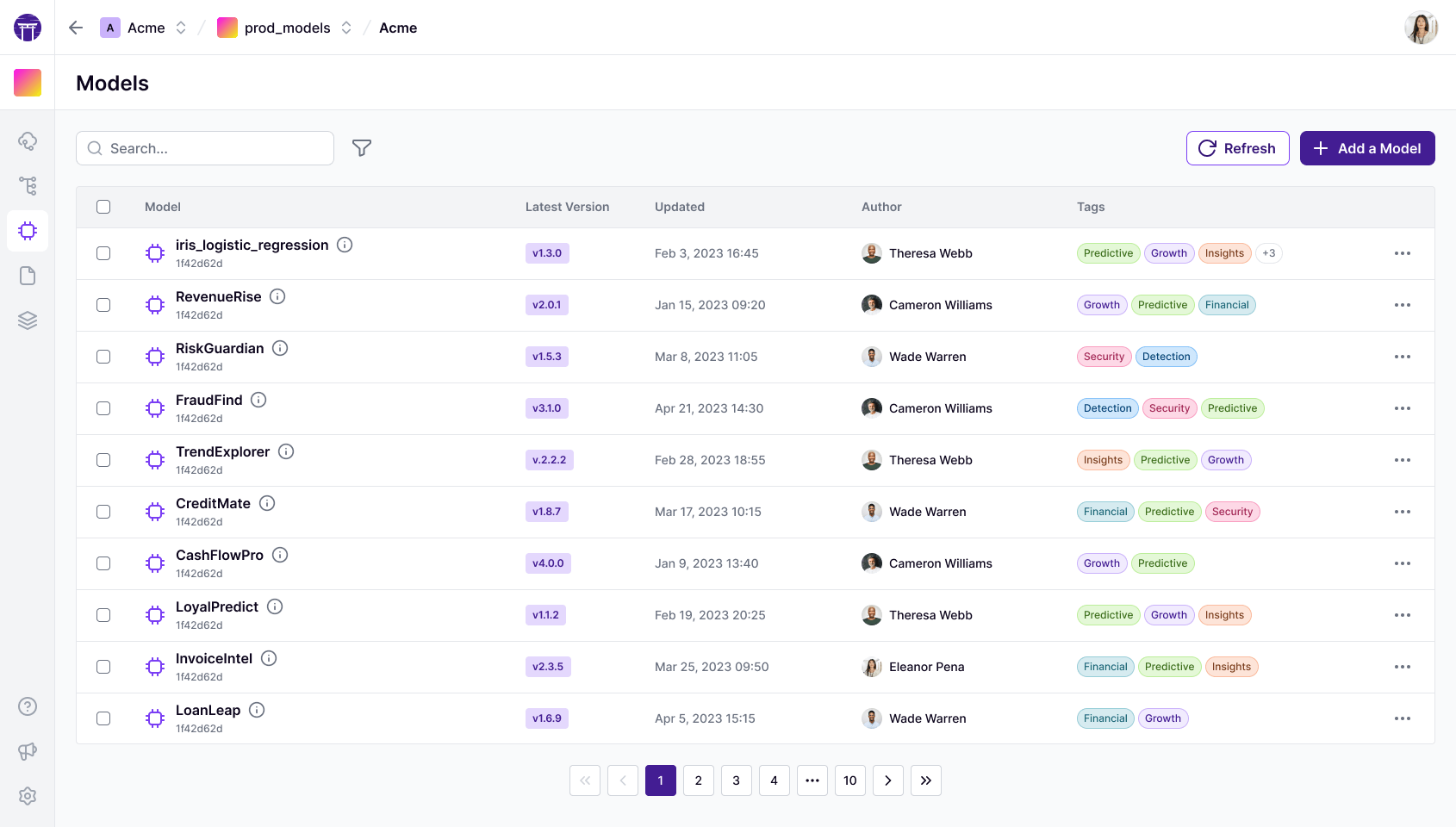

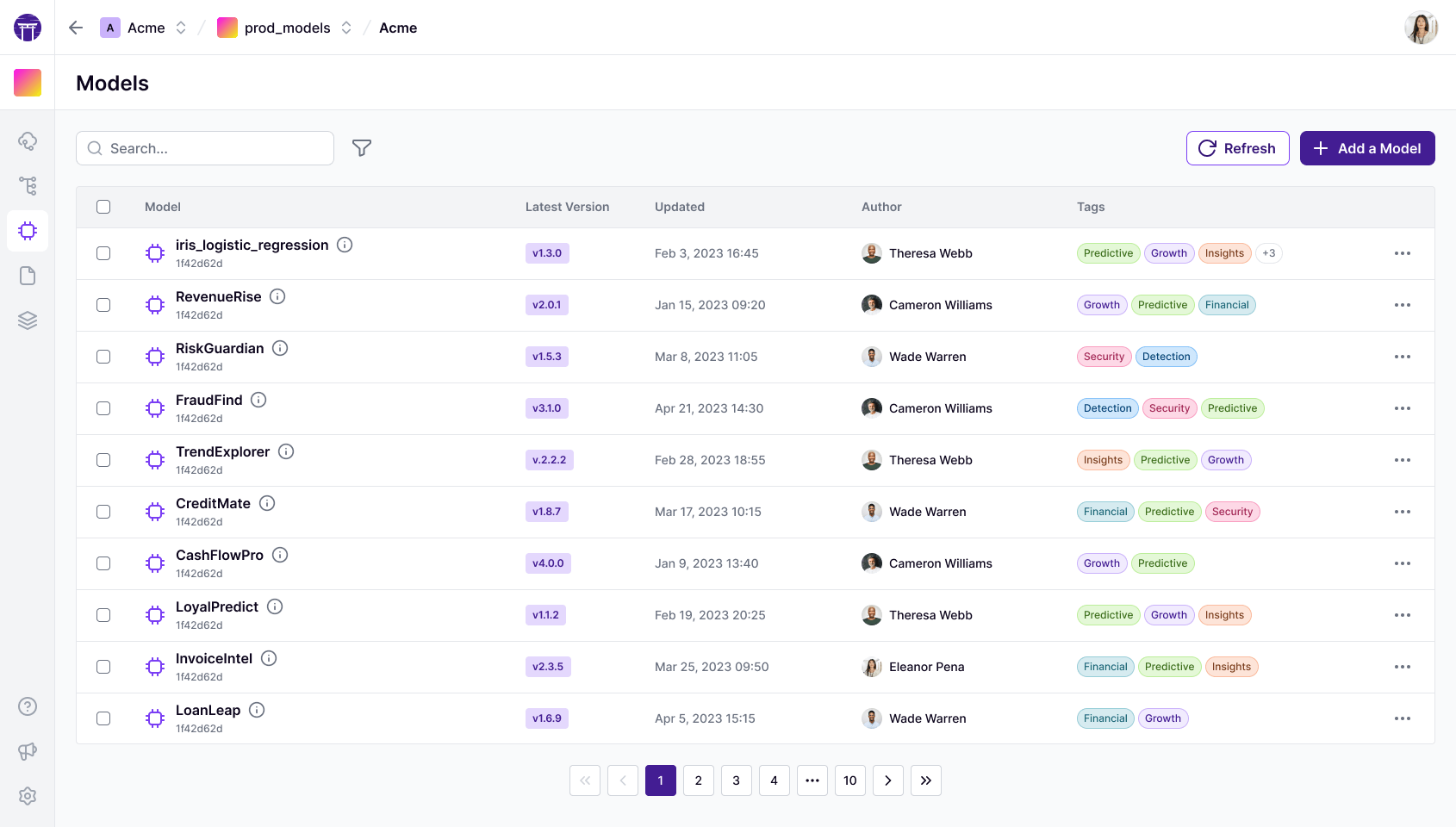

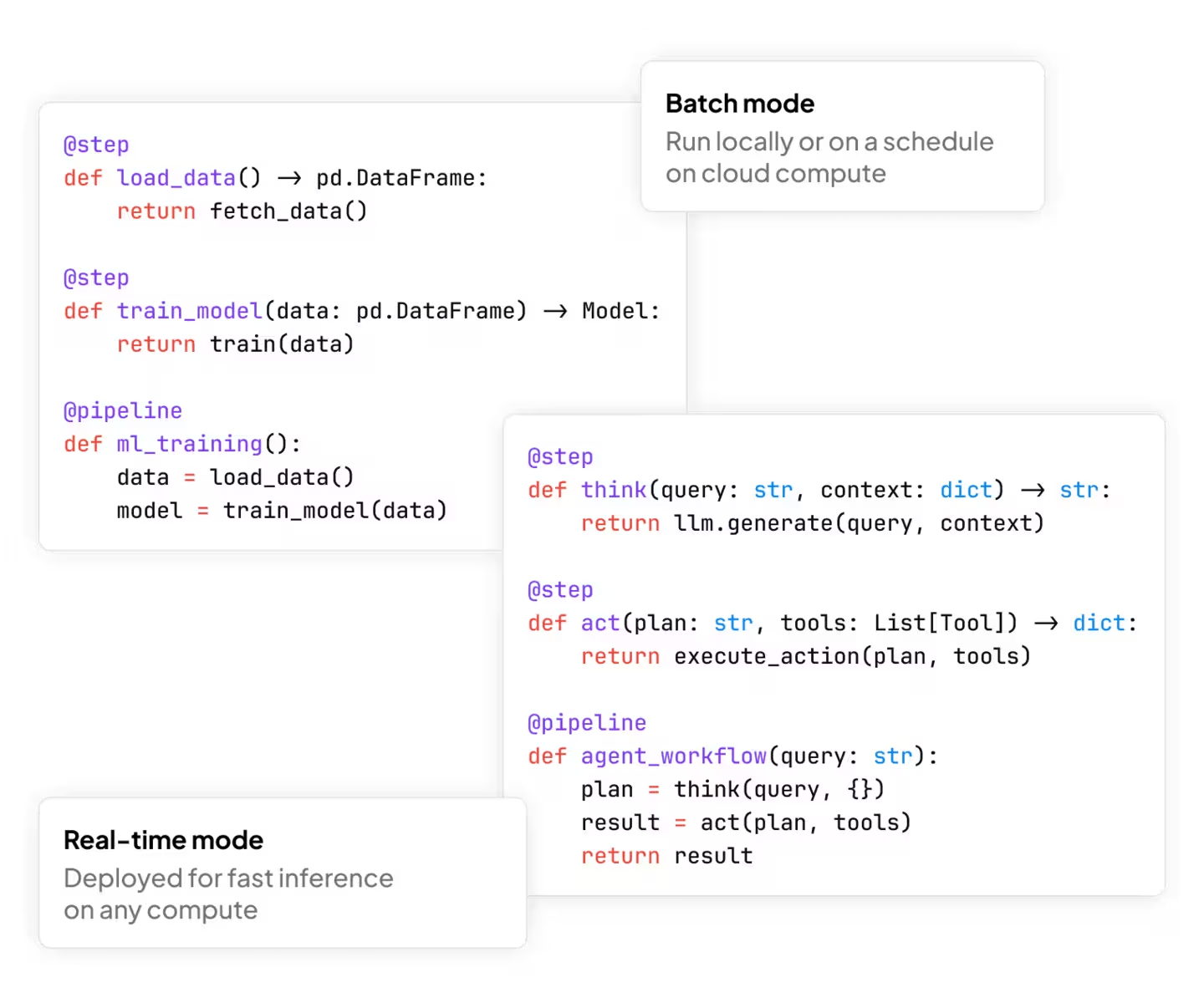

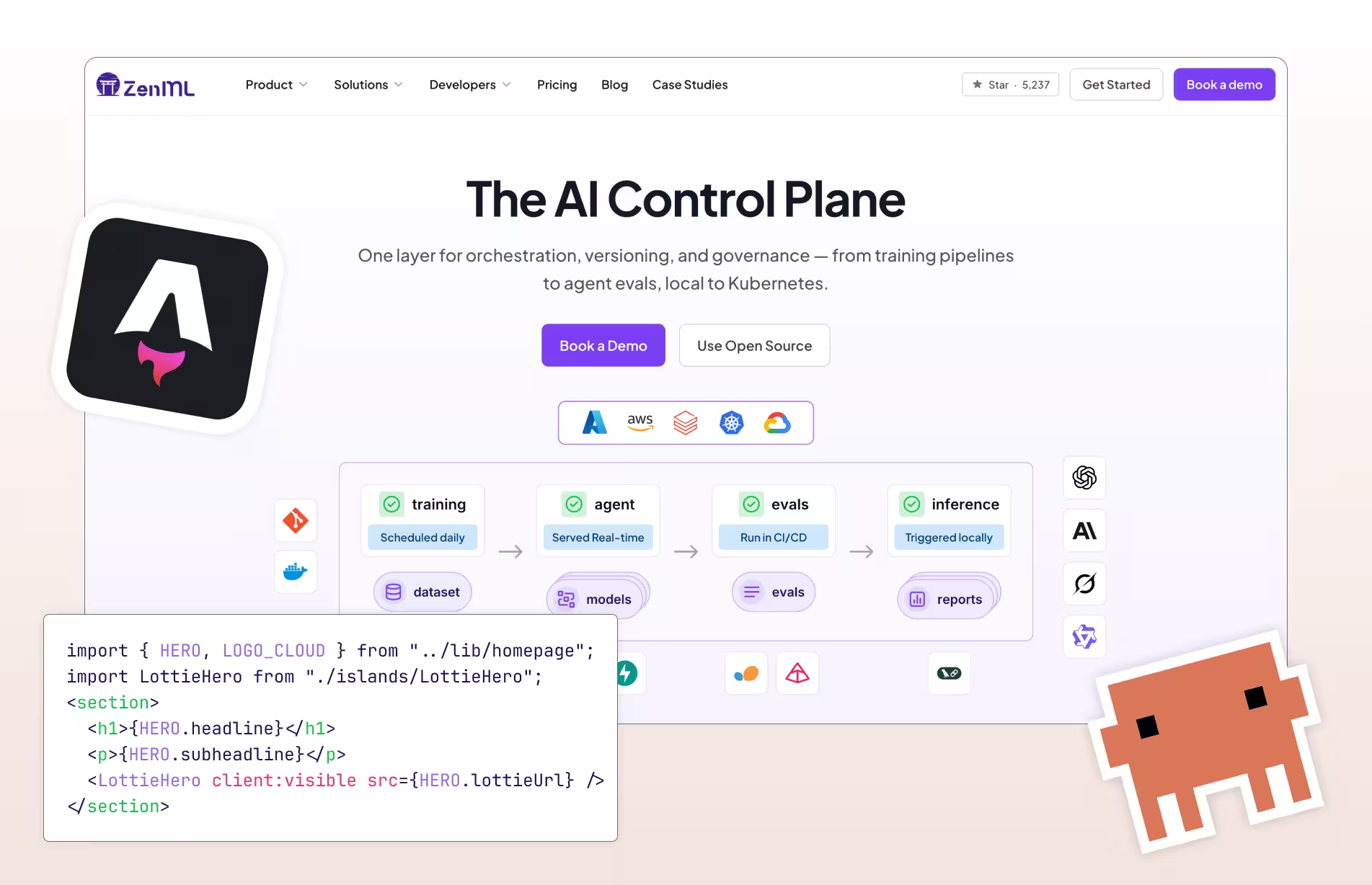

The AI Control Plane

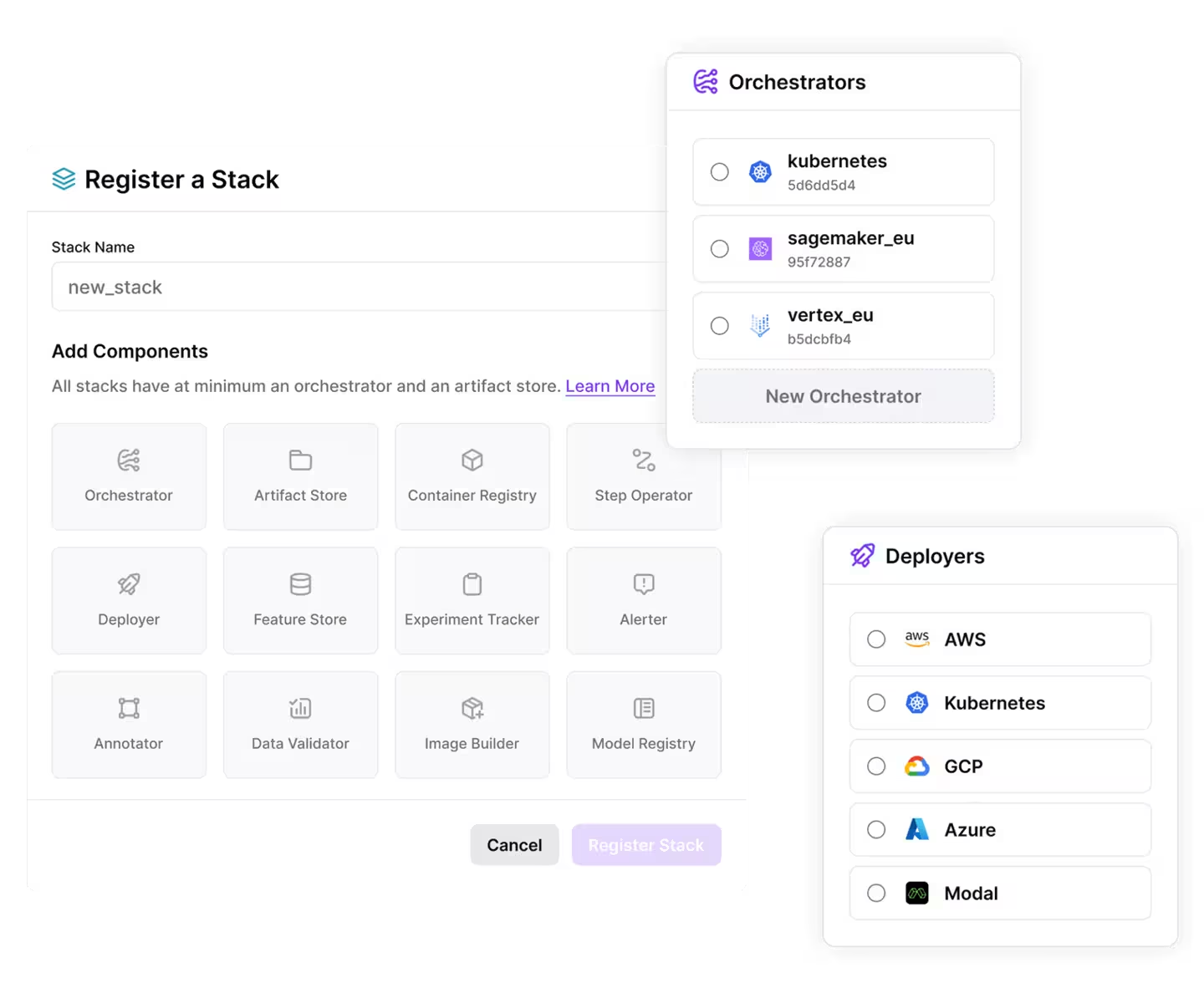

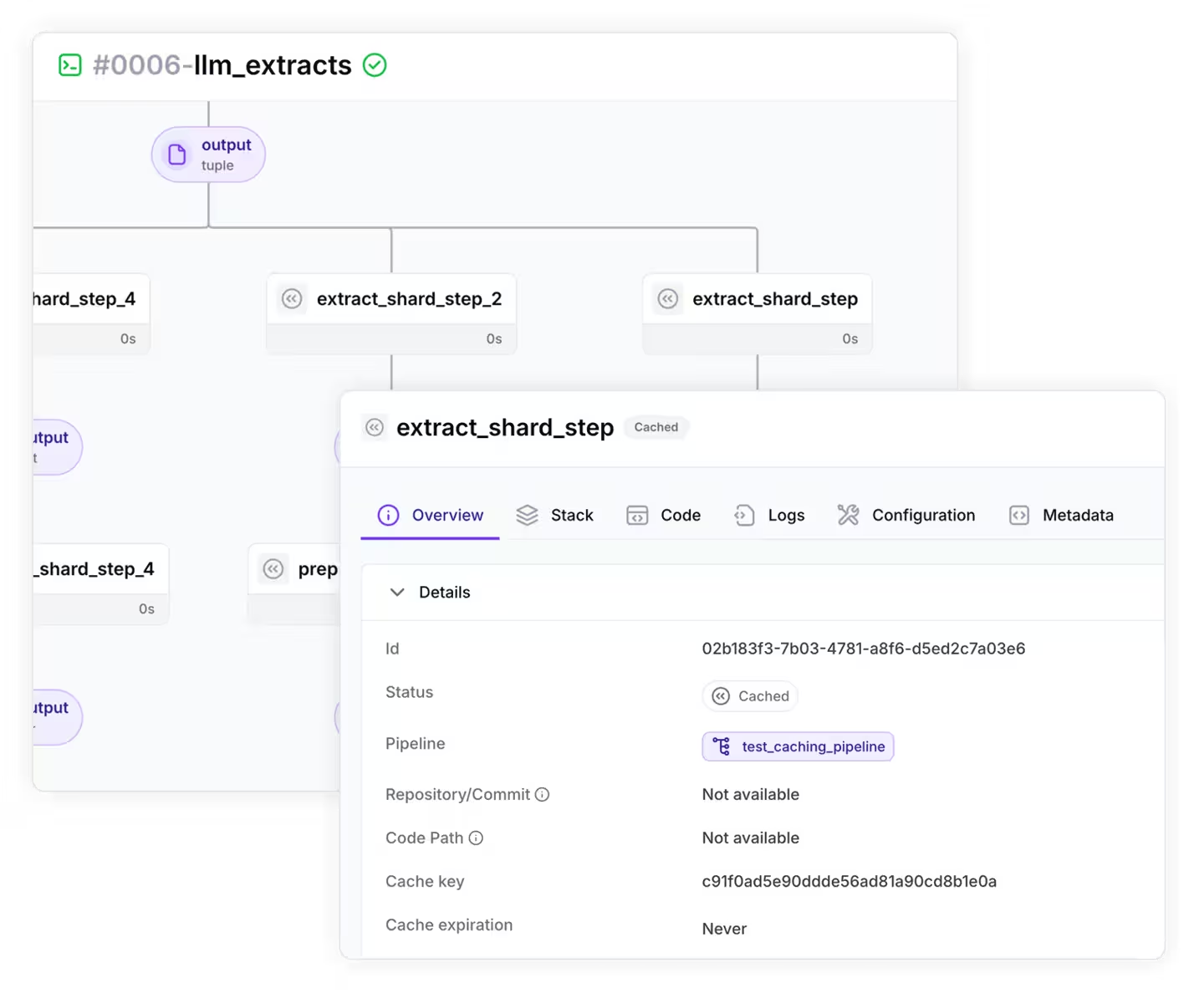

One layer for orchestration, versioning, and governance — from training pipelines to agent evals, local to Kubernetes.

Trusted by thousands of top companies to standardize their AI workflows

Ready to Unify Your AI Platform?

Join thousands of teams using ZenML to eliminate chaos and accelerate AI delivery

Use ZenML with any framework

60+ integrations across the AI ecosystem. From sklearn to LangGraph.

Whitepaper

ZenML as your Enterprise-Grade AI Platform

We have distilled our expertise in building production-ready, scalable AI platforms, drawing on insights from our top customers.

Customer Stories

Learn how teams are using ZenML to save time and simplify their MLOps.

Creating a Unified AI Platform: How JetBrains Centralizes ML on Kubernetes with ZenML

How ADEO Leroy Merlin decreased their time-to-market from 2 months to 2 weeks

How Brevo accelerated model development by 80% using ZenML

How Cross Screen Media Trains Models for 210 Markets in Hours, Not Weeks, with ZenML

ZenML tracks production AI deployments across the industry

See the LLMOps database here

ZenML offers the capability to build end-to-end ML workflows that seamlessly integrate with various components of the ML stack. This enables teams to accelerate their time to market by bridging the gap between data scientists and engineers.

Harold Gimenez

SVP R&D at HashiCorp

ZenML allows orchestrating ML pipelines independent of any infrastructure or tooling choices. ML teams can free their minds of tooling FOMO from the fast-moving MLOps space, with the simple and extensible ZenML interface.

Richard Socher

Former Chief Scientist at Salesforce and Founder of You.com

ZenML allowed us a fast transition between dev to prod. It's no longer the big fish eating the small fish – it's the fast fish eating the slow fish.

François Serra

ML Engineer / ML Ops / ML Solution architect at ADEO Services

Many teams still struggle with managing models, datasets, code, and monitoring as they deploy ML models into production. ZenML provides a solid toolkit for making that easy in the Python ML world.

Chris Manning

Professor of Linguistics and CS at Stanford

Thanks to ZenML we've set up a pipeline where before we had only Jupyter notebooks. It helped us tremendously with data and model versioning.

Francesco Pudda

Machine Learning Engineer at WiseTech Global

ZenML allows you to quickly and responsibly go from POC to production ML systems while enabling reproducibility, flexibility, and above all, sanity.

Goku Mohandas

Founder of MadeWithML

News

Latest ZenML Updates

Stay updated on the latest developments, announcements, and updates from the ZenML ecosystem.

See all news

No compliance headaches

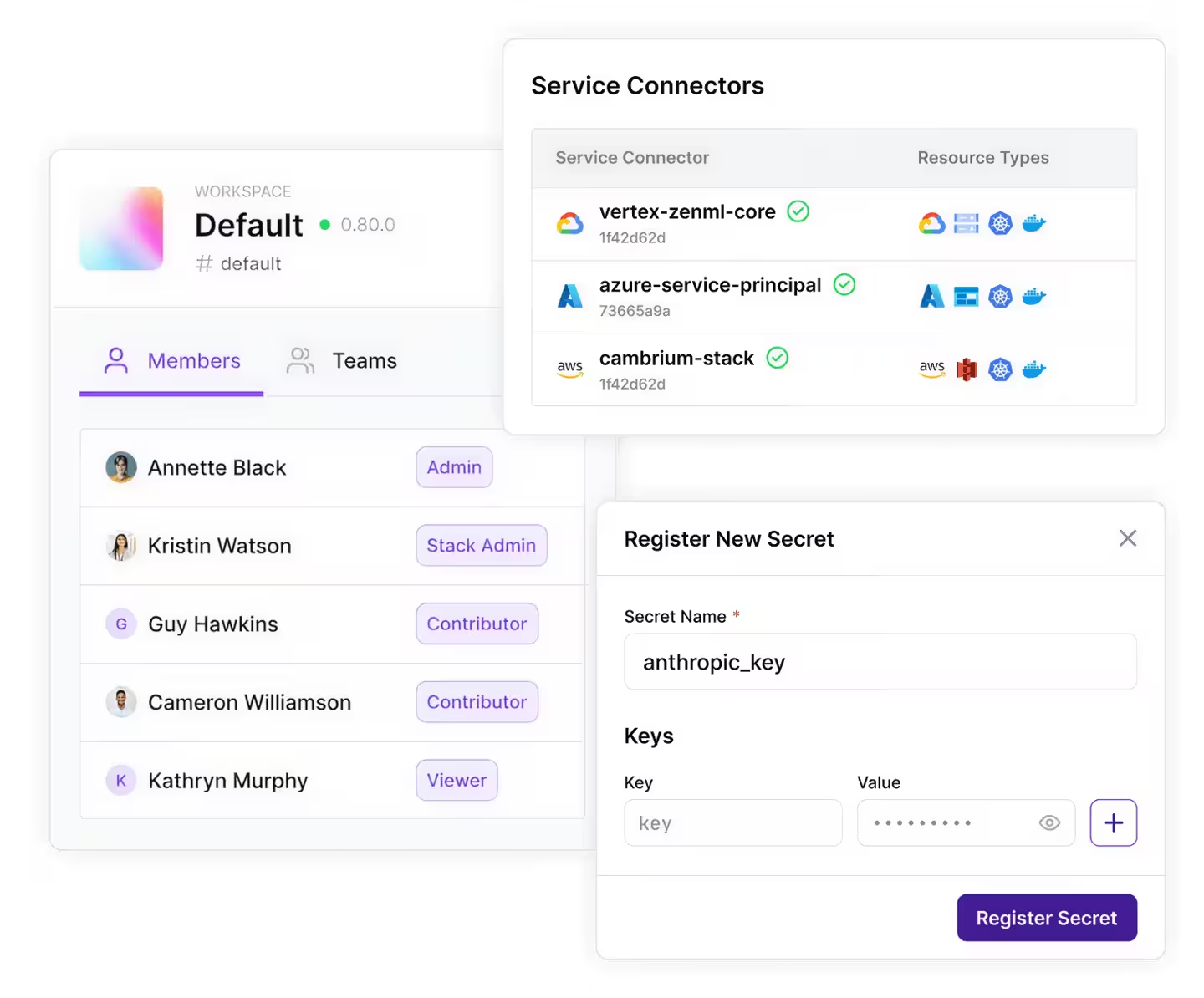

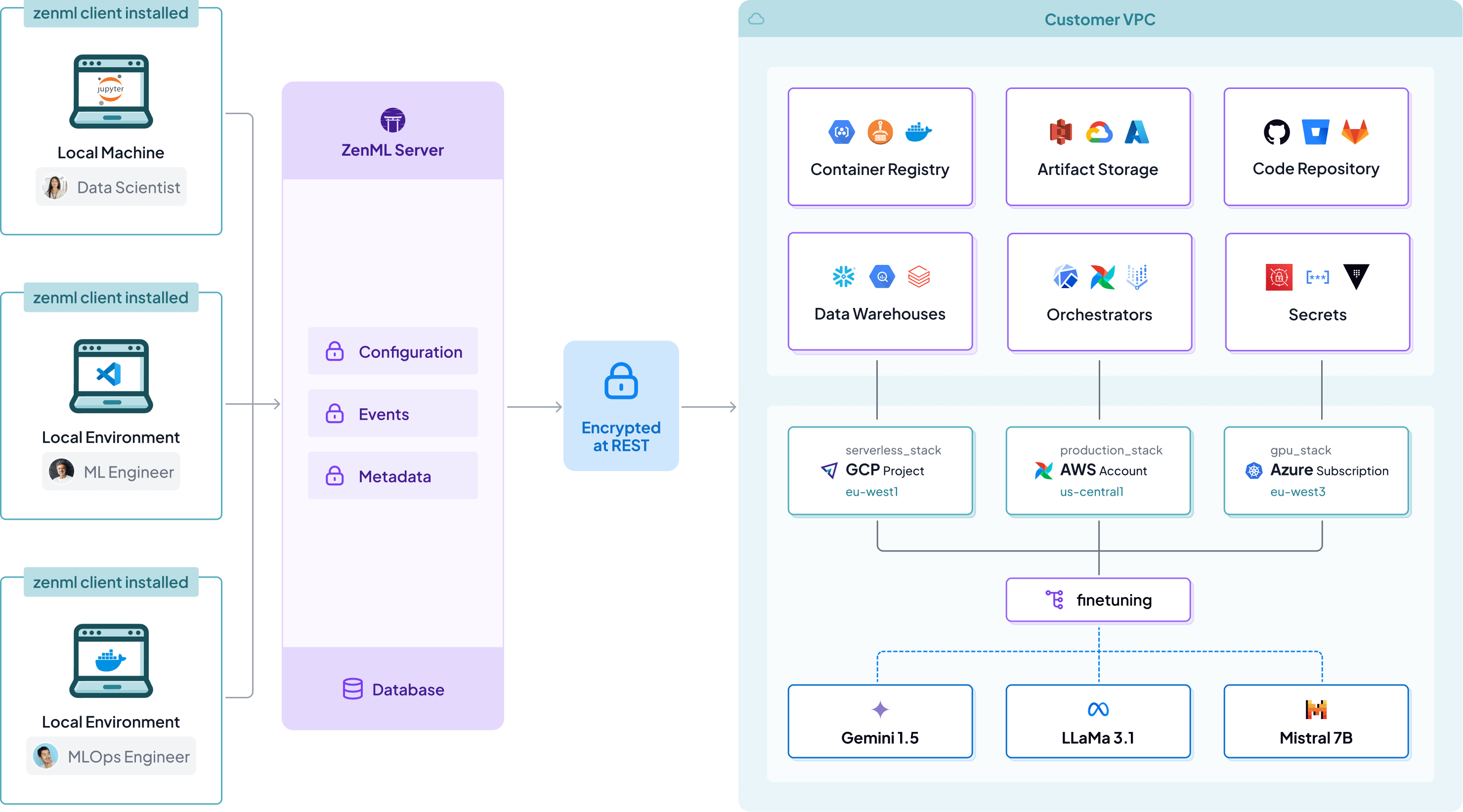

Your VPC, your data

ZenML is a metadata layer on top of your existing infrastructure, so all data and compute stay on your side.

ZenML is SOC2 and ISO 27001 Compliant

We Take Security Seriously

ZenML is SOC2 and ISO 27001 compliant. These certifications validate our adherence to industry-leading standards for data security, availability, and confidentiality, as part of our ongoing commitment to protecting your ML workflows and data.

Looking to Get Ahead in MLOps & LLMOps?

Subscribe to the ZenML newsletter and receive regular product updates, tutorials, examples, and more.

We care about your data. See our privacy policy for details.

Support

Frequently asked questions

Everything you need to know about the product.

What is the difference between ZenML and other machine learning orchestrators?

Does ZenML integrate with my MLOps stack?

Does ZenML help with GenAI and LLMOps use cases?

How can I build my MLOps/LLMOps platform using ZenML?

What is the difference between the open source and Pro product?

Unify Your ML and LLM Workflows

- Free, powerful MLOps open source foundation

- Works with any infrastructure

- Upgrade to managed Pro features