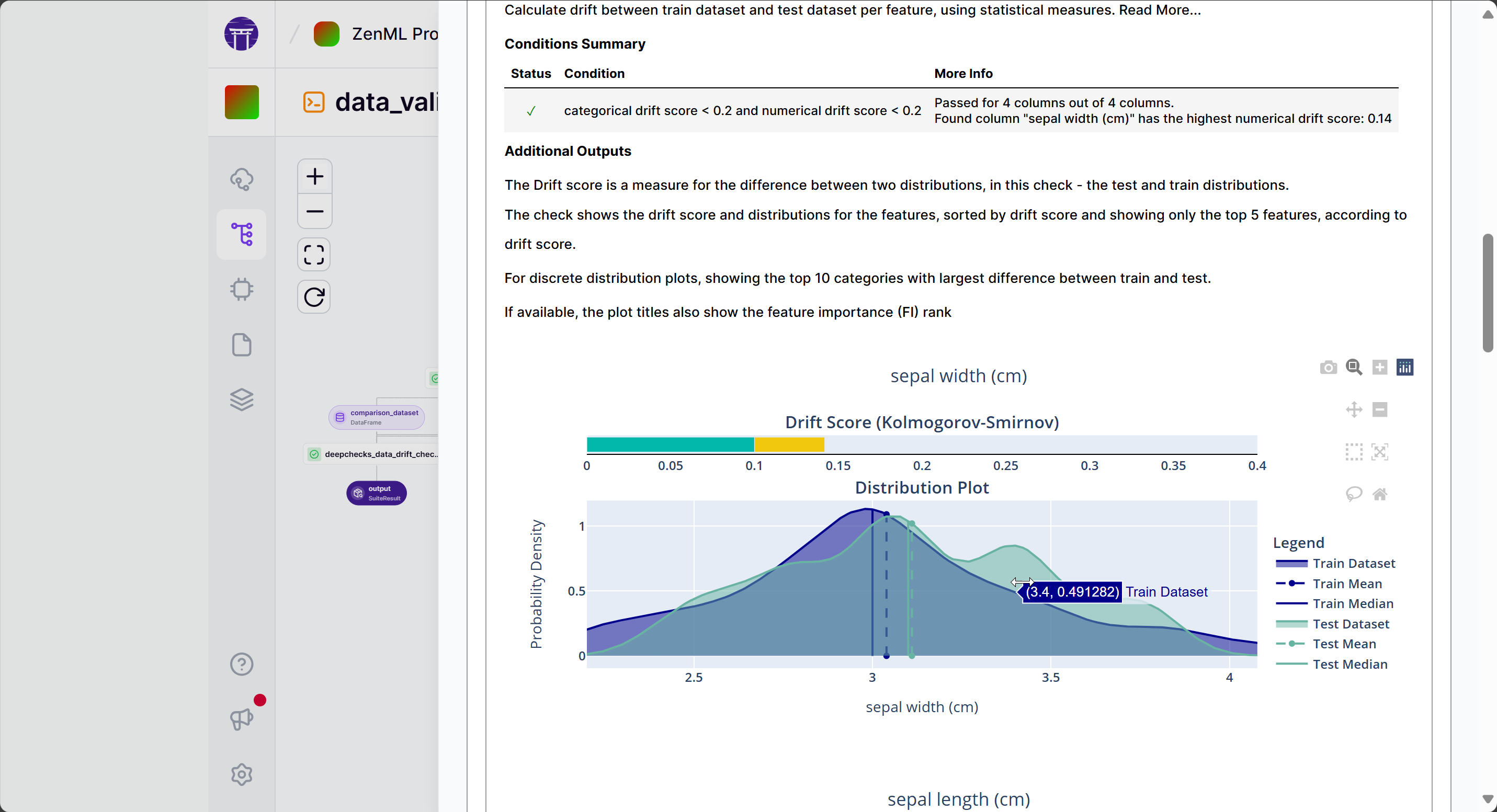

Automate robust data and model validation in your ML pipelines with Deepchecks and ZenML

The Deepchecks integration enables you to seamlessly incorporate comprehensive data integrity, data drift, model drift and model performance checks into your ZenML pipelines. By leveraging Deepchecks' extensive library of pre-configured tests, you can easily validate the quality and reliability of the datasets and models used in your ML workflows, ensuring more robust and production-ready pipelines.

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.base import ClassifierMixin

from sklearn.ensemble import RandomForestClassifier

from zenml import pipeline, step

from zenml.integrations.constants import DEEPCHECKS, SKLEARN

from deepchecks.tabular.datasets.classification import iris

from typing_extensions import Tuple, Annotated

from zenml.artifacts.artifact_config import ArtifactConfig

LABEL_COL = "target"

@step

def data_loader() -> Tuple[

Annotated[

pd.DataFrame, ArtifactConfig(name="reference_dataset")

],

Annotated[

pd.DataFrame,

ArtifactConfig(name="comparison_dataset"),

],

]:

"""Load the iris dataset."""

iris_df = iris.load_data(data_format="Dataframe", as_train_test=False)

df_train, df_test = train_test_split(

iris_df, stratify=iris_df[LABEL_COL], random_state=0

)

return df_train, df_test

@step

def trainer(df_train: pd.DataFrame) -> Annotated[ClassifierMixin, ArtifactConfig(name="model")]:

# Train Model

rf_clf = RandomForestClassifier(random_state=0)

rf_clf.fit(df_train.drop(LABEL_COL, axis=1), df_train[LABEL_COL])

return rf_clf

from zenml.integrations.deepchecks.steps import (

deepchecks_data_integrity_check_step,

)

data_validator = deepchecks_data_integrity_check_step.with_options(

parameters=dict(

dataset_kwargs=dict(label=LABEL_COL, cat_features=[]),

),

)

from zenml.integrations.deepchecks.steps import (

deepchecks_data_drift_check_step,

)

data_drift_detector = deepchecks_data_drift_check_step.with_options(

parameters=dict(dataset_kwargs=dict(label=LABEL_COL, cat_features=[]))

)

from zenml.integrations.deepchecks.steps import (

deepchecks_model_validation_check_step,

)

model_validator = deepchecks_model_validation_check_step.with_options(

parameters=dict(

dataset_kwargs=dict(label=LABEL_COL, cat_features=[]),

),

)

from zenml.integrations.deepchecks.steps import (

deepchecks_model_drift_check_step,

)

model_drift_detector = deepchecks_model_drift_check_step.with_options(

parameters=dict(

dataset_kwargs=dict(label=LABEL_COL, cat_features=[]),

),

)

from zenml.config import DockerSettings

docker_settings = DockerSettings(required_integrations=[DEEPCHECKS, SKLEARN])

@pipeline(enable_cache=True, settings={"docker": docker_settings})

def data_validation_pipeline():

"""Links all the steps together in a pipeline"""

df_train, df_test = data_loader()

data_validator(dataset=df_train)

data_drift_detector(

reference_dataset=df_train,

target_dataset=df_test,

)

model = trainer(df_train)

model_validator(dataset=df_train, model=model)

model_drift_detector(

reference_dataset=df_train, target_dataset=df_test, model=model

)

if __name__ == "__main__":

# Run the pipeline

data_validation_pipeline()

Expand your ML pipelines with more than 50 ZenML Integrations