Leverage the power of Kubernetes to orchestrate and scale your machine learning workflows using ZenML's Kubernetes integration. This lightweight, minimalist orchestrator enables you to run ML pipelines on Kubernetes clusters without the complexity of managing additional frameworks like Kubeflow.

# Step 1: Register a new Kubrnetes orchestrator

>>> zenml orchestrator register <ORCHESTRATOR_NAME> \

--flavor=kubernetes

# Step 2: Authernticate Sagemaker orchestrator

# Option 1 (recomended): Service Connector

>>> zenml orchestrator connect <ORCHESTRATOR_NAME> --connector <CONNECTOR_NAME>

# Option 2 (not recommended): Explicit authentication

>>> zenml orchestrator register <ORCHESTRATOR_NAME> \

--flavor=kubernetes \

--kubernetes_context=<KUBERNETES_CONTEXT>

# Step 3: Update your stack to use the Sagemaker orchestrator

>>> zenml stack update -o <ORCHESTRATOR_NAME>

from zenml import step, pipeline

from zenml.integrations.kubernetes.flavors.kubernetes_orchestrator_flavor import (

KubernetesOrchestratorSettings,

)

kubernetes_settings = KubernetesOrchestratorSettings(

pod_settings={

"resources": {

"requests": {"cpu": "1", "memory": "1Gi"},

"limits": {"cpu": "2", "memory": "2Gi"},

},

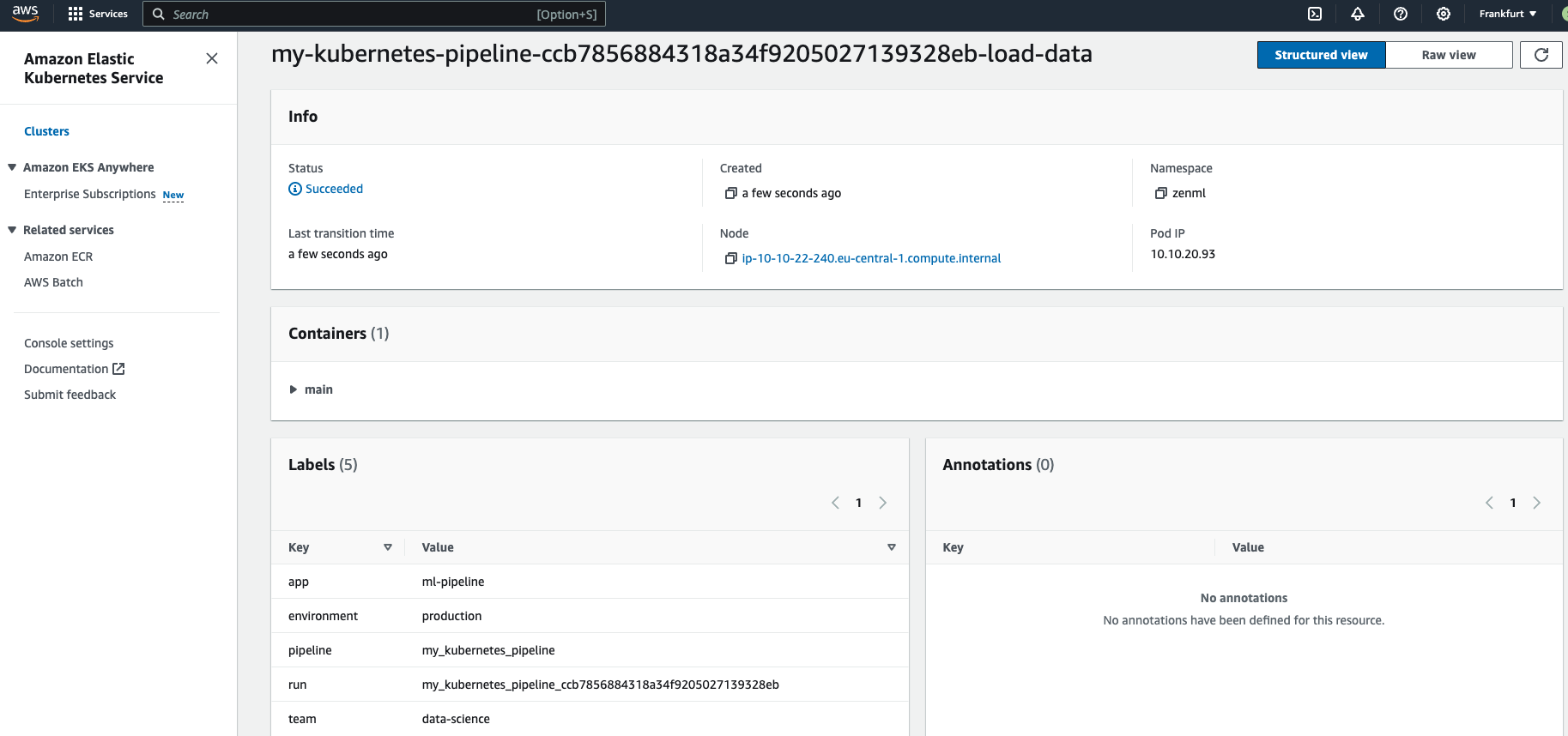

"labels": {

"app": "ml-pipeline",

"environment": "production",

"team": "mlops",

},

},

orchestrator_pod_settings={

"resources": {

"requests": {"cpu": "1", "memory": "1Gi"},

"limits": {"cpu": "2", "memory": "2Gi"},

},

"labels": {

"app": "zenml-orchestrator",

"component": "pipeline-runner",

"team": "mlops",

},

},

)

@step

def load_data() -> dict:

# Load data here

return {1: [1, 2], 2: [3, 4]}

@step

def preprocess_data(raw_data: dict) -> dict:

# Preprocess data here

return {k: v * 2 for k, v in raw_data.items()}

@pipeline(

settings={

"orchestrator.kubernetes": kubernetes_settings,

}

)

def my_kubernetes_pipeline():

# Pipeline steps here

raw_data = load_data()

preprocess_data(raw_data)

if __name__ == "__main__":

my_kubernetes_pipeline()

Expand your ML pipelines with more than 50 ZenML Integrations