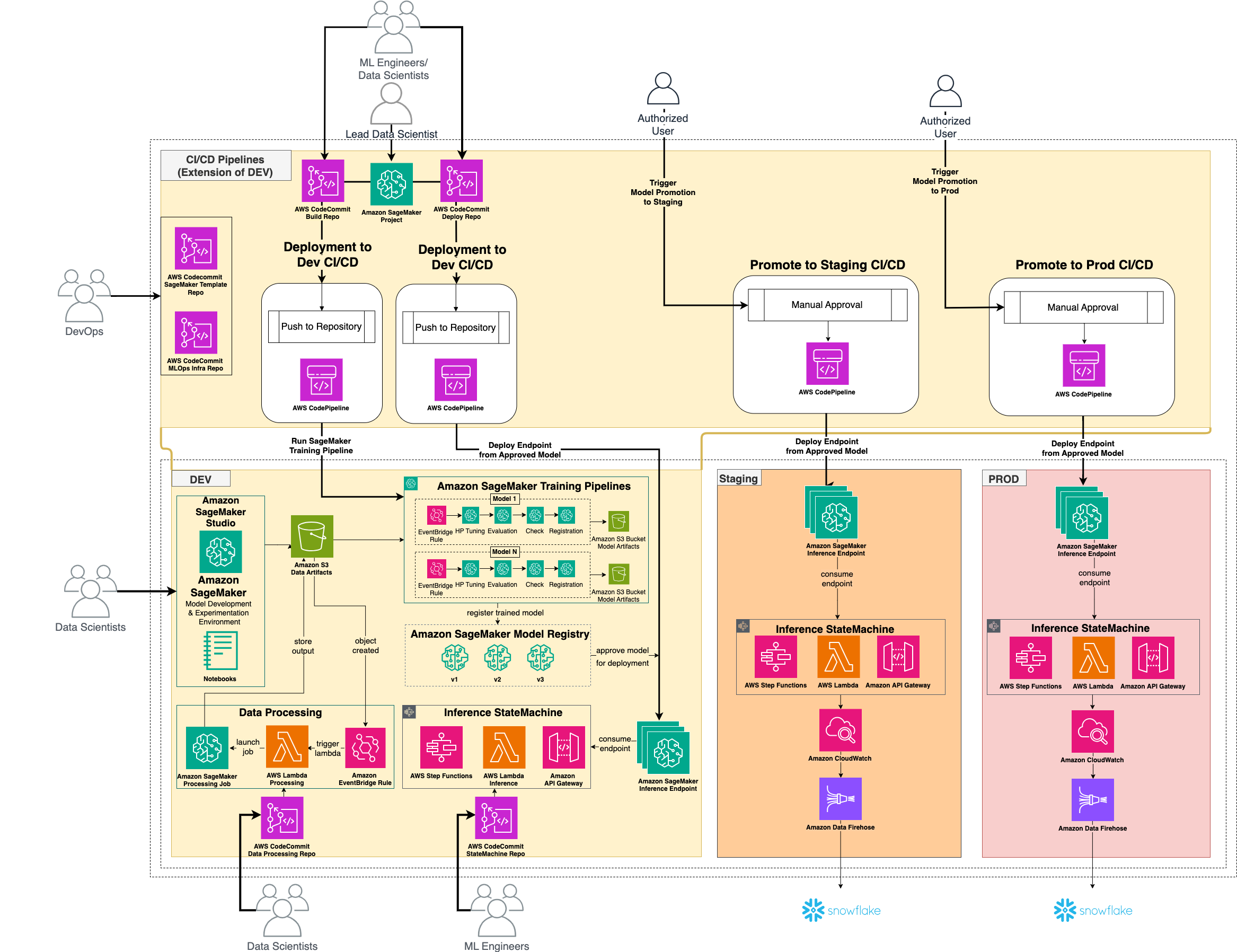

As enterprise machine learning initiatives scale, managing the lifecycle of models across separate AWS accounts for development, staging, and production environments becomes increasingly complex. This separation of environments—while essential for security, compliance, and resource isolation—creates significant operational challenges in the model promotion process.

Industry leaders like Aviva have implemented serverless MLOps platforms using Amazon SageMaker and the AWS Enterprise MLOps Framework, achieving remarkable cost reductions compared to on-premises solutions. However, the manual promotion process between AWS accounts often creates bottlenecks, delays model deployment, and introduces unnecessary operational overhead.

This article explores best practices and modern solutions for streamlining model promotion across multiple AWS accounts, ensuring governance without sacrificing agility.

The Cross-Account Challenge

Organizations with mature ML practices typically implement a multi-account strategy with distinct AWS accounts for:

- Development/Experimentation: Where data scientists build and test models

- Staging/QA: Where models undergo validation and compliance checks

- Production: Where approved models serve business-critical applications

While this separation provides essential guardrails, it introduces several challenges:

- Manual Model Promotion: Teams must manually export models from one account, validate them, and re-import them into the target account

- Inconsistent Environments: Configuration drift between accounts leads to "works in development, fails in production" scenarios

- Governance Complexity: Tracking model lineage and ensuring compliance across account boundaries

- Limited Visibility: No unified view of model performance across environments

- Resource Duplication: Redundant infrastructure and pipeline definitions across accounts

These challenges are especially acute for organizations managing dozens of models in production, where manual processes quickly become unsustainable.

AWS MLOps implementations often span several libraries, SDKs, and formats, from YAML to Python, which becomes hard to manage efficiently at scale. For example, creating a single training workflow can mean writing local scripts, pushing them to CodeCommit, and then writing a CI workflow that compiles them into a pipeline with the SageMaker SDK.

ZenML provides a simple Python SDK that feels natural to data scientists.
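As a rough illustration of that style, the sketch below defines three steps and a pipeline using ZenML's `@step` and `@pipeline` decorators. The toy data, the mean-threshold "model", and the step names are assumptions made for illustration, and the `ImportError` fallback exists only so the snippet also runs as plain Python when ZenML is not installed.

```python
from typing import List

try:
    # Real decorators when ZenML is installed (pip install zenml).
    from zenml import pipeline, step
except ImportError:
    # Fallback so the sketch still runs as plain Python without ZenML.
    def step(func):
        return func

    def pipeline(func):
        return func


@step
def load_data() -> List[float]:
    """Return a tiny, made-up dataset of scores."""
    return [0.2, 0.4, 0.6, 0.8]


@step
def train_model(data: List[float]) -> float:
    """'Train' a toy model: the decision threshold is the mean of the data."""
    return sum(data) / len(data)


@step
def evaluate(threshold: float, data: List[float]) -> float:
    """Return the fraction of samples at or above the threshold."""
    return sum(1 for x in data if x >= threshold) / len(data)


@pipeline
def training_pipeline():
    """Wire the steps together; passing outputs along defines the DAG edges."""
    data = load_data()
    threshold = train_model(data)
    evaluate(threshold, data)
```

With ZenML installed, calling `training_pipeline()` runs the steps on whichever stack is currently active. The step functions themselves contain no AWS-specific code.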

In traditional multi-account AWS setups, teams have no unified view of models across environments. Each AWS account has its own separate dashboards, metrics, and logs, making it difficult to track models through their entire lifecycle without custom solutions.

ZenML provides a central dashboard that shows all pipelines, models, and metrics across every environment. This unified view allows teams to:

- Track model lineage from development to production

- Compare model performance across environments

- Monitor deployment status across all accounts

- Manage approvals from a central location

Different team members (data scientists, ML engineers, DevOps) need different configurations across AWS accounts, creating significant overhead and often leading to environment inconsistencies.

ZenML's stack concept allows each team member to work with configurations tailored to their role.
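To make the idea concrete, here is a minimal plain-Python model of a stack registry. It is not ZenML's API: the `Stack` dataclass, the role names, and the component values (such as `s3://staging-bucket`) are hypothetical and only illustrate how a pipeline can stay unchanged while each role selects its own infrastructure bundle.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stack:
    """A named bundle of infrastructure choices (hypothetical model)."""
    name: str
    orchestrator: str
    artifact_store: str


# Hypothetical stacks, one per role/account; all values are made up.
STACKS = {
    "data_scientist": Stack("dev", "local", "./artifacts"),
    "ml_engineer": Stack("staging", "sagemaker", "s3://staging-bucket"),
    "devops": Stack("prod", "sagemaker", "s3://prod-bucket"),
}


def stack_for(role: str) -> Stack:
    """Look up the stack for a role, failing loudly on unknown roles."""
    try:
        return STACKS[role]
    except KeyError:
        raise ValueError(f"No stack registered for role {role!r}") from None


def describe_run(pipeline_name: str, role: str) -> str:
    """The pipeline name stays fixed; only the selected stack differs."""
    stack = stack_for(role)
    return f"{pipeline_name} -> {stack.orchestrator} @ {stack.artifact_store}"
```

In ZenML itself, stacks are registered once and then activated per user, for example with `zenml stack set <name>` on the command line, so the selection lives outside the pipeline code.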

Slight variations in package versions between AWS accounts can cause models to behave differently in production than in development, leading to unexpected failures and inconsistent results.

ZenML containerizes pipeline steps to ensure identical dependencies.


While AWS offers many powerful tools, SageMaker pipelines can be cumbersome for data scientists to author and maintain, especially across multiple AWS accounts. Business logic and infrastructure logic easily become entangled.

ZenML automatically generates proper DAGs from simple Python functions and keeps infrastructure-level configuration separate through the stack concept.
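The sketch below shows one way a DAG can be derived from ordinary function calls. It is not ZenML's implementation: `Node`, `traced_step`, and `build_dag` are hypothetical helpers, and the ordering relies on the standard library's `graphlib` (Python 3.9+).

```python
from graphlib import TopologicalSorter


class Node:
    """Placeholder for a step's future output; remembers its upstream nodes."""

    def __init__(self, name, upstream):
        self.name = name
        self.upstream = upstream


def traced_step(func):
    """Wrap a function so that calling it records a DAG node instead of running it."""
    def wrapper(*inputs):
        return Node(func.__name__, [i for i in inputs if isinstance(i, Node)])
    return wrapper


def build_dag(final_nodes):
    """Walk upstream from the final node(s) and collect {step: dependencies}.

    Simplification: nodes are keyed by step name, so each step is used once.
    """
    graph = {}
    pending = list(final_nodes)
    while pending:
        node = pending.pop()
        if node.name not in graph:
            graph[node.name] = {up.name for up in node.upstream}
            pending.extend(node.upstream)
    return graph


@traced_step
def load_data():
    """Business logic would go here."""


@traced_step
def preprocess(data):
    """Business logic would go here."""


@traced_step
def train(data):
    """Business logic would go here."""


@traced_step
def evaluate(model, data):
    """Business logic would go here."""


def training_pipeline():
    """Plain Python wiring; data flow between calls implies the graph."""
    raw = load_data()
    clean = preprocess(raw)
    model = train(clean)
    return evaluate(model, clean)


dag = build_dag([training_pipeline()])
# Dependencies come first: load_data, preprocess, train, evaluate
order = list(TopologicalSorter(dag).static_order())
```

The pipeline function contains no scheduling or infrastructure detail. The execution order falls out of which outputs are passed to which steps.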

Data scientists develop locally but deploy to AWS cloud environments, creating a significant gap between development and production workflows that leads to "works on my machine" problems.

ZenML allows the exact same code to run locally or in any AWS account.

Many organizations operate across multiple cloud providers or maintain hybrid environments, which creates complexity when trying to maintain consistent ML operations.

ZenML's stack abstraction layer allows the same pipelines to run across different cloud providers, with only the stack configuration changing. Stack components for different providers and regions can be configured with a few clicks, or via Terraform or the API.

Regulated industries like financial services and insurance companies face strict regulatory requirements that are difficult to enforce consistently across separate AWS accounts. This creates challenges for model reproducibility, audit trails, and compliance verification.

ZenML enables model governance through specialized pipeline steps that can be appended to any pipeline, generating compliance reports and visualizations automatically. Integrations with data validation tools like Evidently make this even easier.

This approach allows organizations to implement "data-quality gates & alerting easily," as one case study puts it, while Brevo has leveraged similar techniques to become "a safer platform, fighting against fraudsters and scammers." The generated visualizations and reports serve as documentation for audits while keeping governance consistent across all AWS environments.
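A data-quality gate of this kind can be sketched in a few lines of plain Python. This is a deliberately simple mean-shift check standing in for the richer reports a tool like Evidently produces. It does not use Evidently's API, and the function names and the `max_shift` threshold are assumptions.

```python
from statistics import mean


def quality_gate(reference, current, max_shift=0.1):
    """Compare a feature's mean between two samples and return a small report."""
    if not reference or not current:
        raise ValueError("Both samples must be non-empty")
    reference_mean = mean(reference)
    current_mean = mean(current)
    shift = abs(current_mean - reference_mean)
    return {
        "reference_mean": reference_mean,
        "current_mean": current_mean,
        "shift": shift,
        "passed": shift <= max_shift,
    }


def promote_if_healthy(reference, current, max_shift=0.1):
    """Block promotion when the gate fails; otherwise return the report for the audit trail."""
    report = quality_gate(reference, current, max_shift)
    if not report["passed"]:
        raise RuntimeError(
            f"Data-quality gate failed: mean shift {report['shift']:.3f} "
            f"exceeds {max_shift}"
        )
    return report
```

Because the gate returns a report on success and raises on failure, appending it as the last step before promotion both stops a bad model from advancing and leaves a record that can be stored for auditors.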

ML operations across multiple AWS accounts often result in inefficient resource allocation and unnecessary cloud costs.

ZenML enables fine-grained control over resource allocation for each step.

Tracking experiments across multiple AWS accounts and ensuring reproducibility is challenging without centralized systems.

ZenML provides consistent experiment tracking regardless of where experiments run.

You can then compare metadata easily in one interface across stacks and models.


Transforming MLOps Across AWS Accounts

Organizations implementing modern MLOps solutions across multiple AWS accounts have achieved remarkable improvements, as evidenced by real-world case studies. Brevo (formerly Sendinblue) reduced its ML deployment time by 80%, taking what was once a month-long development-to-deployment process and dramatically shortening it. Similarly, ADEO Leroy Merlin decreased their time-to-market from 2 months to just 2 weeks—a 75% reduction. Both companies have successfully deployed multiple models into production (5 models for Brevo and 5 for ADEO, with the latter targeting 20 by the end of 2024).

These implementations have yielded significant operational benefits: ADEO's data scientists gained autonomy in pipeline creation and deployment, eliminating bottlenecks between teams, while Brevo achieved enhanced team productivity with just 3-4 data scientists independently handling end-to-end ML use cases. Additionally, both organizations reported improved business outcomes, including better fraud targeting for Brevo and seamless cross-country model deployment for ADEO. These real-world results demonstrate how proper MLOps implementation can transform operations across multiple environments while maintaining necessary governance and security controls.

Modern MLOps for Multi-Account AWS

As organizations scale their ML operations across AWS accounts, they need solutions that address these 10 critical challenges. ZenML provides a comprehensive approach that allows data scientists to focus on ML innovation rather than infrastructure complexity.

ZenML's Python-first approach, stack-based architecture, and unified dashboard enable teams to maintain all the security and governance benefits of multi-account AWS setups while dramatically reducing operational overhead and accelerating time-to-value.

To learn more about how ZenML can transform your cross-account MLOps strategy, create a free account on ZenML Pro or try our open-source project on GitHub.