Building multi-agent systems means moving beyond simple response loops into complex, multi-step reasoning and collaboration. Agno and LangGraph both help you create multi-agent systems where LLM-powered agents can plan, act, and even work together autonomously.

But the two frameworks come from different lineages and take different approaches.

The question is: in an Agno vs LangGraph comparison, which one comes out as the better framework for you? Let’s see.

In this article, we compare both frameworks across key features, integrations, and pricing. We also discuss how you can use Agno and LangGraph together (with a tool like ZenML) to get the best of both worlds.

Agno vs LangGraph: Key Takeaways

🧑💻 Agno: High-performance, lightweight framework for building multi-agent systems. Its design philosophy centers on a ‘pure Python’ approach that avoids graphs and chains. It’s an excellent choice if you prioritize performance, resource efficiency, and development velocity.

🧑💻 LangGraph: LangGraph, from the LangChain team, is a low-level orchestration framework. It’s deeply integrated with the LangChain and LangSmith ecosystem and helps build stateful, multi-agent applications using graphs. It’s ideal if you require predictable, controllable systems and would like to use the broader LangChain universe.

Agno vs LangGraph: Framework Maturity and Lineage

The table below compares the framework maturity of Agno and LangGraph:

Agno is a young but quickly growing project in the agentic AI space. By contrast, LangGraph comes from a well-established lineage (LangChain) with a focus on enterprise readiness.

However, the data reveals a fascinating divide in community traction and real-world usage.

Agno's higher GitHub star count (33.5k) reflects its market pull and interest among developers, likely because of its community-first approach, performance benchmarks, and simpler API.

On the other hand, LangGraph's PyPI downloads (9.8 million) in the last 30 days hint at a powerful network effect of the LangChain ecosystem. A major portion of LangGraph's downloads likely stems from the LangChain user base.

Looking at commits, LangGraph’s 6,274 commits against Agno’s 4,107 suggest more accumulated development activity, though raw commit counts are only a rough proxy for velocity.

Agno vs LangGraph: Features Comparison

To understand how Agno and LangGraph stack up, let’s compare their capabilities across several core features. Before we dive in, here’s a quick comparison table:

Feature 1. Agent and Workflow Abstraction

The core design and abstraction choice of any agent framework is how it defines an agent and the sequence of tasks it performs.

Agno

Agno provides a high-level abstraction for defining agents. It encloses the agent’s entire reasoning loop in a single top-level class.

As a developer, you work with the Agent class as the core unit. It represents a complete AI agent with a given LLM model and a set of tools it can use. You can configure agents with standard Python objects for models, tools, memory, and knowledge.

For example, here’s how you can use Agno to build an agent that uses Anthropic’s Claude model and a HackerNews search tool to answer questions:
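A minimal sketch of such an agent follows. It assumes the `agno` and `anthropic` packages are installed and that an `ANTHROPIC_API_KEY` environment variable is set. The model id and the helper name `build_hackernews_agent` are illustrative assumptions, not something prescribed by Agno.

```python
# Sketch only: needs `pip install agno anthropic` and ANTHROPIC_API_KEY set.

def build_hackernews_agent():
    """Return an Agno agent that can search HackerNews to answer questions."""
    # Imports live inside the function so this module still loads
    # in environments where agno is not installed.
    from agno.agent import Agent
    from agno.models.anthropic import Claude
    from agno.tools.hackernews import HackerNewsTools

    return Agent(
        model=Claude(id="claude-sonnet-4-5"),  # assumed model id; use one you have access to
        tools=[HackerNewsTools()],
        markdown=True,
    )

# Usage (makes a live API call, so it is left as a comment):
#   agent = build_hackernews_agent()
#   agent.print_response("Summarize the top stories on HackerNews.", stream=True)
```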

In ~10 lines of Python, you’ve instantiated an AI agent and queried it. A recent evaluation also compared the startup time of an agent with one tool in Agno and in LangGraph, and Agno came out considerably faster.

Agno is Pythonic and developer-friendly. You write standard Python classes and functions to define agent logic, and it comes with a built-in runtime system, AgentOS, to turn agents into a working application in hours.

On top of that, Agno’s team abstraction simplifies building multi-agent workflows that would otherwise require writing a lot of coordination logic (more on this later).

LangGraph

LangGraph takes a lower-level, graph-based approach to define agent logic. Its core abstraction is the StateGraph object, used as a graph builder, which you populate with:

- State: A schema defining what information is tracked as the agent runs. For example, a state might include a messages list to keep conversation history, or other variables the agent updates.

- Nodes: Each node is a function that receives the current state of the graph, performs a task such as calling an LLM or executing a tool, and returns an update to the state.

- Edges: Each edge is a logic rule that connects nodes and directs the flow of execution. You can specify that after Node A, go to Node B, etc. Edges can be static or dynamic.

One might wonder: isn’t defining a whole graph more work than using something like Agno’s agent class?

It can be. It might feel more like programming an orchestration workflow than a typical conversational agent. But the benefit is predictability and control. Because the entire workflow is an explicit state machine, it’s easier to debug, visualize, and reason about.

A conceptual example of building a simple graph in LangGraph:
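The sketch below is deliberately library-free: it models state, nodes, and edges in plain Python so the control flow stays visible. The names `State`, `NODES`, `EDGES`, and `run_graph` are illustrative assumptions, not LangGraph's API. In LangGraph itself, the equivalent pieces are a `StateGraph` plus its `add_node`, `add_edge`, and `add_conditional_edges` methods.

```python
from typing import Callable, Dict, TypedDict

class State(TypedDict):
    question: str
    draft: str
    revisions: int

END = "__end__"  # sentinel marking the end of the graph

def write_draft(state: State) -> dict:
    # A real node would call an LLM here; this stub just builds a string.
    return {"draft": f"Answer to: {state['question']}", "revisions": 0}

def revise(state: State) -> dict:
    return {"draft": state["draft"] + " (revised)",
            "revisions": state["revisions"] + 1}

def after_revise(state: State) -> str:
    # Dynamic edge: loop back until two revisions have been made.
    return "revise" if state["revisions"] < 2 else END

NODES: Dict[str, Callable[[State], dict]] = {
    "write_draft": write_draft,
    "revise": revise,
}
EDGES: Dict[str, Callable[[State], str]] = {
    "write_draft": lambda state: "revise",  # static edge
    "revise": after_revise,                 # dynamic edge
}

def run_graph(state: State, entry: str = "write_draft") -> State:
    current = entry
    while current != END:
        update = NODES[current](state)
        state = {**state, **update}      # merge the node's partial update
        current = EDGES[current](state)  # pick the next node
    return state

result = run_graph({"question": "What is a state graph?", "draft": "", "revisions": 0})
print(result["draft"])
```

Because every transition goes through `EDGES`, the full set of possible paths can be read off without running the code. That is the debuggability argument made above.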

Bottom line: The choice of abstraction reflects a fundamental trade-off.

Agno optimizes for developer experience and speed by using familiar Python building blocks.

LangGraph optimizes for control and reliability in complex, non-linear workflows by making the state and control flow explicit.

For a simple, linear sequence of tasks, Agno's approach may require less boilerplate. For a sophisticated workflow with multiple decision points and potential loops, LangGraph's explicit graph structure provides a more manageable and scalable foundation.

Feature 2. Multi-Agent Composition

As tasks become complex, one agent isn’t enough. It becomes necessary to use multiple specialized agents that work together. And this is a core requirement for developers regardless of whichever framework they use.

Agno

Similar to the Agent class, Agno has a Team class for multi-agent systems. A team is a group of agents that can communicate and work together on tasks.

Agno's Team class supports three primary modes of interaction:

- route: The team leader acts as a simple router, analyzing the incoming task and sending it to the most appropriate agent based on its defined role and capabilities.

- coordinate or collaborate: These modes allow more dynamic conversations between agents. They can work together, share intermediate results, and collectively solve a problem that requires multiple skills. This is akin to a brainstorming session among multiple AIs.

Under the hood, Agno manages the message passing between agents according to the mode.

For example, in a Coordinator team, one agent acts as a leader, delegating subtasks to other specialized agents. In a Router team, an agent automatically routes each query to whichever specialist agent is best suited. In a Collaborator team, agents openly share information and iterate together on a solution. These modes are built in, so you can configure a team with a single flag.
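To make the difference between routing and coordinating concrete, here is a plain-Python sketch. It does not use Agno's Team class: the names `Member`, `TEAM`, `route`, and `coordinate` are hypothetical, and keyword matching stands in for the leader's LLM-based decision.

```python
from typing import Callable, Dict, List

Member = Callable[[str], str]  # a team member maps a task to a response

def researcher(task: str) -> str:
    return f"[research] notes on {task}"

def writer(task: str) -> str:
    return f"[writing] draft about {task}"

TEAM: Dict[str, Member] = {"research": researcher, "write": writer}

def route(task: str) -> str:
    """Route mode: pick one specialist and return its answer unchanged."""
    role = "write" if "write" in task.lower() else "research"
    return TEAM[role](task)

def coordinate(task: str) -> str:
    """Coordinate mode: the leader delegates to every member and merges the results."""
    parts: List[str] = [member(task) for member in TEAM.values()]
    return " | ".join(parts)

print(route("Write a launch post"))
print(coordinate("agent frameworks"))
```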

Also, if you spin up the AgentOS for a team, you get a chat interface where you can see each agent’s contributions identified by role. This visual trace is immensely helpful to understand how the agents are interacting.

LangGraph

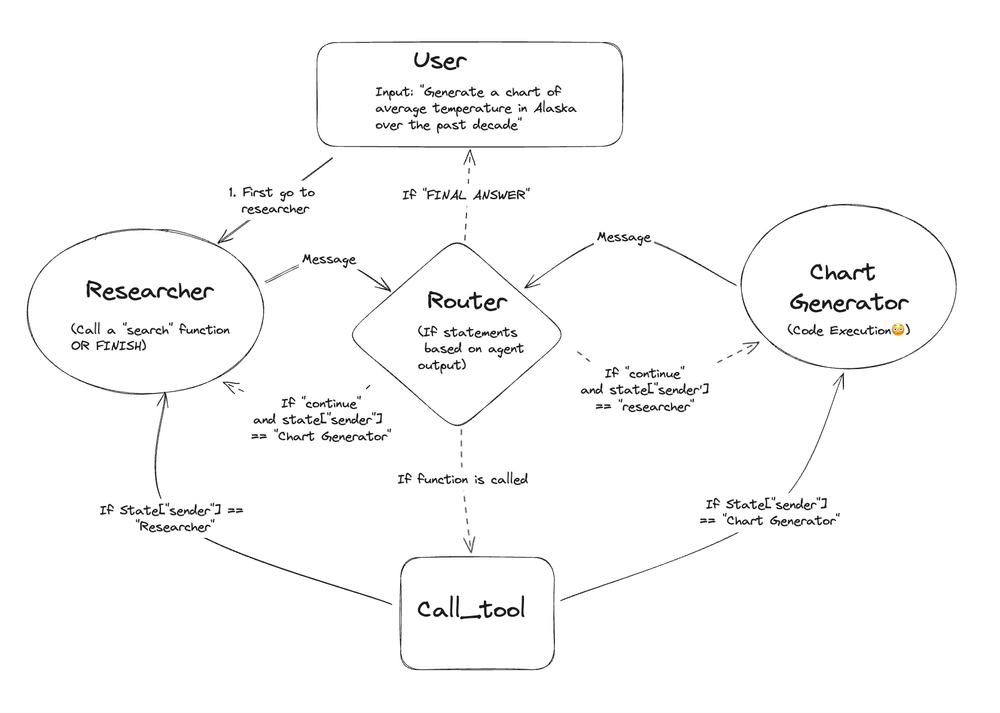

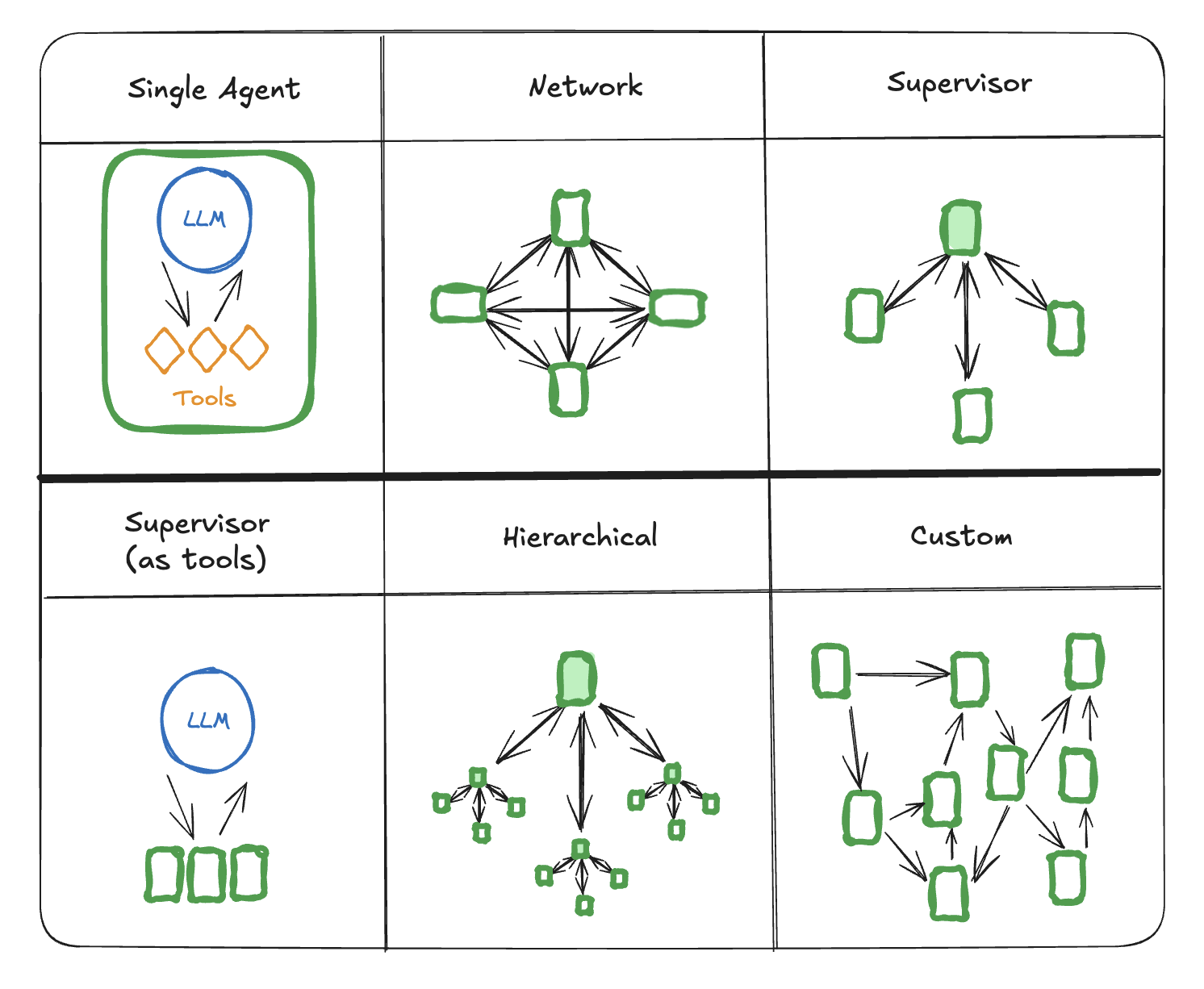

LangGraph uses a graph-based orchestration framework to build multi-agent systems. Unlike Agno’s single Team object, here you create nodes that represent distinct agents and connect them using different edges and architectures.

LangGraph supports various multi-agent architecture models, like a single agent, a fully connected network, a centralized supervisor, hierarchical supervision, and custom mixed structures.

A popular architectural pattern is the Supervisor-Worker model:

- A ‘supervisor’ node acts as the central router or orchestrator. It receives a task, analyzes it, and uses conditional edges to route the task to one or more specialized ‘worker’ agents.

- Each worker agent can be a complete sub-graph itself, with its own internal logic and tools.

- After a worker completes its sub-task, it returns control to the supervisor, who can then decide the next step. This could involve routing to another worker, aggregating results, or finishing the process.

For example, an agent node could return Command(goto="research_agent", update=payload) to hand control to a research_agent node, where payload is a state update that can include a question to research.

The decision of which agent to call can be made by an LLM’s output or fixed by the developer by defining edges. For example, the LLM’s response could contain a structured directive like ‘I need help from Agent B with X,’ and a conditional edge can parse that and route accordingly.

LangGraph also recently introduced utilities like LangGraph Supervisor, a separate helper library that provides templates for common multi-agent use cases. These templates are akin to pre-defined subgraphs you can plug in, so you don’t have to hand-craft everything.

Bottom line: Both frameworks support multi-agent compositions, but in different ways.

Agno has a native concept of ‘teams’ that can coordinate in different modes.

LangGraph is flexible and lets you build custom multi-agent topologies (network, supervisor, hierarchical, etc.) by linking agent nodes in a graph.

Notably, LangGraph is more suitable for complex multi-agent workflows that might not fit a standard pattern.

Feature 3. Memory Management

Memory is a critical aspect of any agent that needs to maintain context over time or learn from past interactions. Let’s see how both frameworks handle it.

Agno

Agno gives agents short and long-term memory. Every agent keeps a session state, which is essentially a conversation history and state within a single interaction, so it remembers prior turns by default. This is similar to how LangChain’s ‘memory’ works for chat agents.

For durable knowledge, you can attach knowledge bases and vector stores using helpers like PDFKnowledgeBase or VectorKnowledgeBase.

This means your agent can recall information it was taught previously or search a knowledge repository, not unlike Retrieval-Augmented Generation (RAG) patterns.

Beyond single sessions, Agno’s memory modules let agents store important findings in a database and load them again in later sessions.

Agno introduces the concept of ‘Agentic Memory,’ where you can configure your Agent with enable_agentic_memory=True to automatically let it create, update, or delete memories after each run.

In practice, using memory in Agno might look like: you initialize an agent with a vector memory, like ChromaDBVectorMemory or a LangChain retriever, and then during conversation, the agent’s chain-of-thought will automatically retrieve relevant info and incorporate it.

Here’s a simple example of using Memory in an Agent:

LangGraph

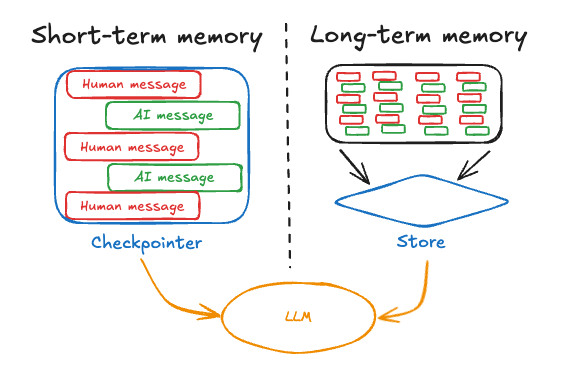

In LangGraph, state and memory are fundamentally linked. The entire graph state serves as the agent's memory for a given run.

Short-Term Memory: LangGraph manages short-term memory as a part of your agent's state, which typically includes a list of messages. The key component for persistence is the checkpointer.

When a checkpointer is configured, LangGraph serializes the current state after each step of graph execution and saves it through the checkpointer. This makes conversations durable and resumable.
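The idea behind checkpointing can be sketched without the library. In the example below, an in-memory dictionary stands in for a real checkpoint store. The names `CHECKPOINTS`, `STEPS`, and `run` are hypothetical and are not LangGraph's API.

```python
import json
from typing import Callable, Dict, List, Tuple

# thread_id -> (index of the next step to run, JSON-serialized state)
CHECKPOINTS: Dict[str, Tuple[int, str]] = {}

def add_greeting(state: dict) -> dict:
    return {**state, "messages": state["messages"] + ["hello"]}

def add_answer(state: dict) -> dict:
    return {**state, "messages": state["messages"] + ["42"]}

STEPS: List[Callable[[dict], dict]] = [add_greeting, add_answer]

def run(thread_id: str, initial: dict, stop_after: int = len(STEPS)) -> dict:
    """Run steps in order, saving a checkpoint after each one."""
    if thread_id in CHECKPOINTS:
        # Resume from the last saved checkpoint for this thread.
        next_step, saved = CHECKPOINTS[thread_id]
        state = json.loads(saved)
    else:
        next_step, state = 0, initial
    for i in range(next_step, min(stop_after, len(STEPS))):
        state = STEPS[i](state)
        CHECKPOINTS[thread_id] = (i + 1, json.dumps(state))
    return state

partial = run("thread-1", {"messages": []}, stop_after=1)  # simulate stopping after step 1
resumed = run("thread-1", {"messages": []})                 # picks up at step 2
print(resumed["messages"])
```

The second call ignores its `initial` argument because a checkpoint already exists for `thread-1`. That is the behavior that makes a run resumable.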

Long-Term Memory: LangGraph formalizes long-term memory into distinct types, providing a structured vocabulary for building agents that learn over time. These types include:

- Semantic Memory: Facts about the world or a user.

- Episodic Memory: A record of past experiences, often used for few-shot prompting.

- Procedural Memory: Learned rules or processes for how to perform tasks.

The structured approach requires the developer to manage writing to and retrieving from these memory stores, but it offers a powerful framework for building agents that truly learn.

Bottom line: LangGraph's checkpointer system offers a more robust and automatic solution for durable, resumable workflows.

Agno's ‘Agentic Memory’ provides a compelling, higher-level capability for long-term personalization where the agent takes a more active role in managing its own memory.

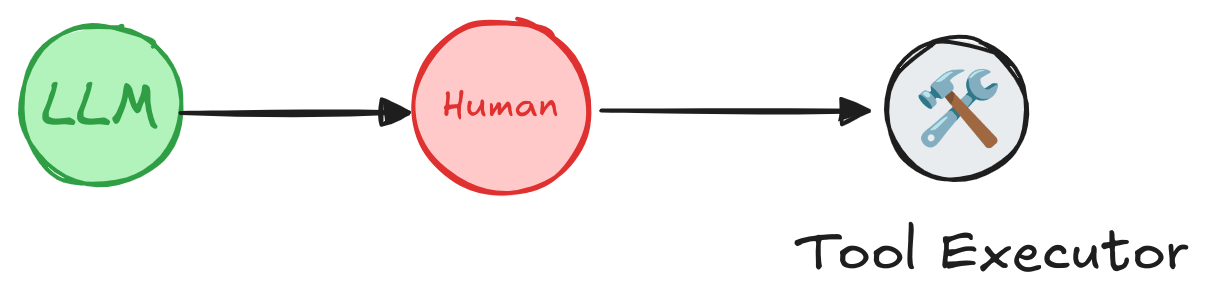

Feature 4. Human-in-the-Loop

For many real-world applications, even the best autonomous agents sometimes need human oversight to approve a critical action, validate decisions, and correct errors.

Agno

Agno incorporates human-in-the-loop (HITL) through confirmation requirements on agent actions.

For instance, when defining a tool or a step in a workflow, you can set a parameter like requires_confirmation=True. This tells Agno that before executing that action, it should pause and await human approval.

When the agent’s logic reaches that point, it pauses and hands the action to a human operator (likely through the AgentOS UI or a callback). The human can then approve the action to continue, or modify or abort it.
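The pause-then-approve flow can be sketched in plain Python. Only the `requires_confirmation` flag name comes from the description above. The `Tool`, `PendingAction`, and `call_tool` names are hypothetical stand-ins and do not reflect Agno's actual classes.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Union

@dataclass
class Tool:
    name: str
    func: Callable[[str], str]
    requires_confirmation: bool = False

@dataclass
class PendingAction:
    tool: Tool
    argument: str

def call_tool(tool: Tool, argument: str,
              approved: Optional[bool] = None) -> Union[str, PendingAction]:
    """Run the tool, or return a PendingAction while approval is still needed."""
    if tool.requires_confirmation and approved is None:
        return PendingAction(tool, argument)  # pause: hand off to a human
    if tool.requires_confirmation and not approved:
        return f"{tool.name} aborted by reviewer"
    return tool.func(argument)

delete_record = Tool("delete_record", lambda key: f"deleted {key}",
                     requires_confirmation=True)

pending = call_tool(delete_record, "user-17")  # pauses instead of running
result = call_tool(pending.tool, pending.argument, approved=True)  # human approved
print(result)
```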

Agno’s AgentOS UI plays a role here as well. Because it’s a web app interface for your agents, it can surface HITL prompts.

For example, if an agent is in a team and needs your input at some step, the UI will show a prompt or a decision button on your screen.

Agno can also integrate with frontend components, presumably through the control plane, so that when the agent pauses for human input, a message or form is displayed to the user.

LangGraph

LangGraph’s approach to human-in-the-loop is very much a natural consequence of its design: since you control the workflow, you can program in a pause whenever you want a human to step in. There are a couple of ways LangGraph facilitates HITL:

- Static Interrupts: When compiling a graph, developers can use the interrupt_before or interrupt_after arguments. These arguments automatically pause execution at a specific node, for example, before the ‘tools’ node executes a sensitive action. When paused, a human can inspect the entire graph state, modify it if necessary, and then resume execution.

- Dynamic Interrupts: LangGraph also supports dynamic interrupts from within a node's logic. This allows an agent to decide when to ask for help based on the current context or its own uncertainty.
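As a rough illustration of a static interrupt, the plain-Python runner below pauses before a named node, lets a human edit the state, and then resumes. It borrows only the `interrupt_before` name from LangGraph. The `run` function, `ORDER`, and the node functions are hypothetical and much simpler than the real library.

```python
from typing import Callable, Dict, List, Optional, Set, Tuple

def plan(state: dict) -> dict:
    return {**state, "tool_call": "send_email(to='all@example.com')"}

def tools(state: dict) -> dict:
    return {**state, "result": f"executed {state['tool_call']}"}

ORDER: List[str] = ["plan", "tools"]
NODES: Dict[str, Callable[[dict], dict]] = {"plan": plan, "tools": tools}

def run(state: dict, start: str = "plan",
        interrupt_before: Optional[Set[str]] = None) -> Tuple[dict, Optional[str]]:
    """Run nodes in order; return (state, paused_at), where paused_at is None when finished."""
    interrupt_before = interrupt_before or set()
    for name in ORDER[ORDER.index(start):]:
        if name in interrupt_before:
            return state, name  # pause before this node runs
        state = NODES[name](state)
    return state, None

state, paused_at = run({}, interrupt_before={"tools"})
# A human inspects the paused state and narrows the recipient list.
state["tool_call"] = "send_email(to='team@example.com')"
final, _ = run(state, start=paused_at)  # resume with no further interrupts
print(final["result"])
```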

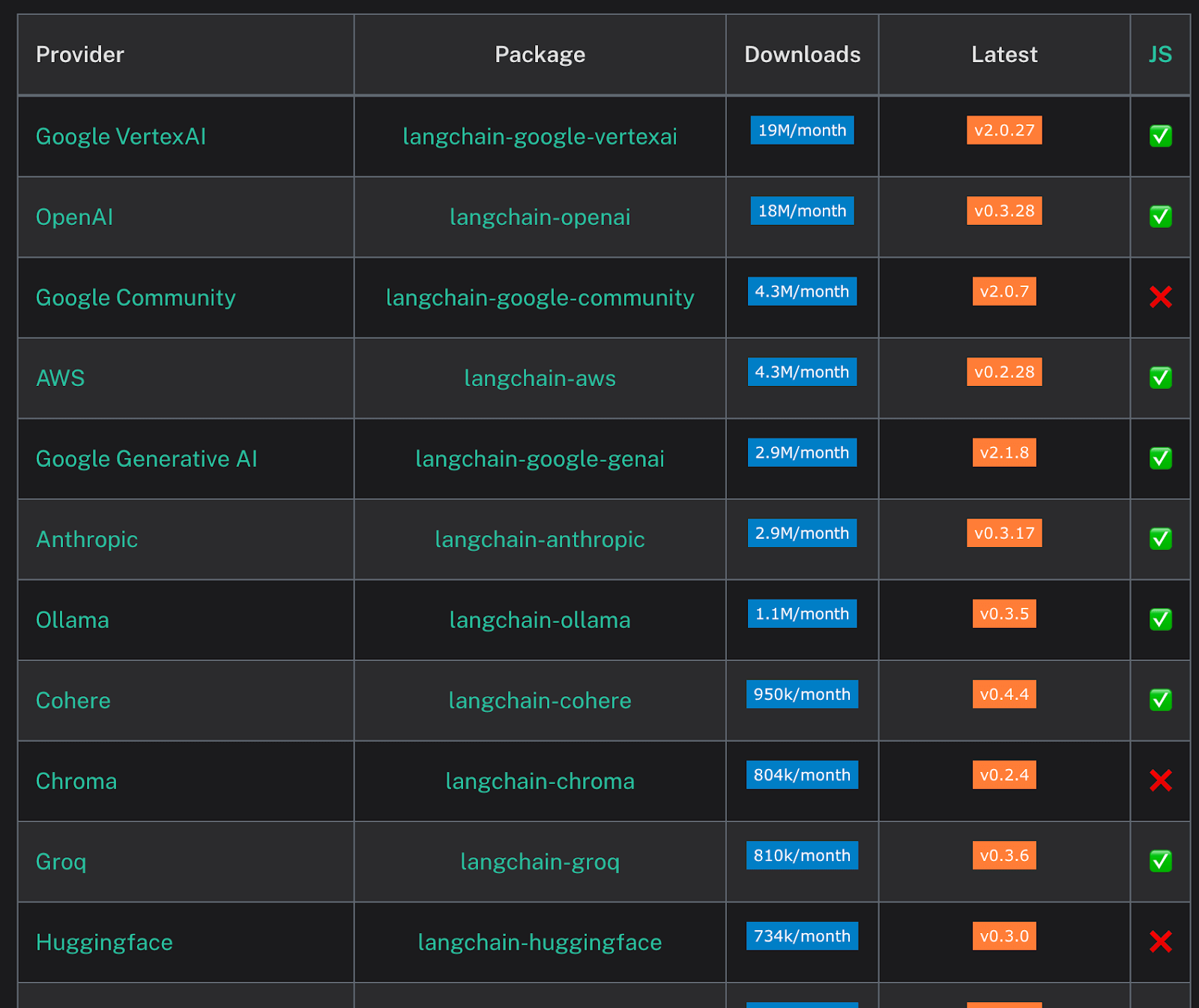

Agno vs LangGraph: Integration Capabilities

No agentic framework exists in a vacuum. Its ability to connect with LLMs, data sources, and other MLOps tools is critical for building real-world applications.

Agno

Agno is model-agnostic and integrates with over 23 major LLM providers, including OpenAI, Anthropic, Groq, Google, and Hugging Face. Switching models is simple since any API or SDK can be wrapped in its Model class.

On the tools side, Agno has built-in tools for web search, stock data, databases, and even image processing, while also allowing developers to create custom Python tools.

It integrates directly with vector databases like Weaviate, Qdrant, Couchbase, or Milvus, making it suitable for retrieval-augmented generation.

For observability, Agno integrates with third-party platforms like AgentOps and Langfuse.

A key integration feature is the AgentOS runtime that exposes agents through FastAPI endpoints. Agents can be connected to external systems or pipelines with REST or WebSocket calls.

Although its ecosystem is newer than LangChain’s, Agno’s Python base makes it easy to integrate with virtually any library.

LangGraph

LangGraph’s strength lies in its deep, native integration with the broader LangChain ecosystem. This gives developers immediate access to:

- LangChain Components: The vast library of LangChain integrations for LLMs, document loaders, text splitters, vector stores, and tools can be used directly as nodes within a LangGraph graph.

- LangSmith: A best-in-class platform for observability, tracing, and debugging. Traces from LangGraph are automatically visualized in LangSmith, showing the step-by-step execution of the graph, which is invaluable for understanding and improving complex agent behavior.

- Extensibility: As a Python library, LangGraph can be integrated with any other Python package or API, offering limitless extensibility for developers.

Agno vs LangGraph: Pricing

Agno

Agno’s core framework is open-source and free to use for self-hosting. The Agno team also provides the AgentOS control plane as part of the open-source package, and using it is free as well.

Apart from that, Agno has two paid plans:

- Starter: $150 per month

- Custom: Custom pricing

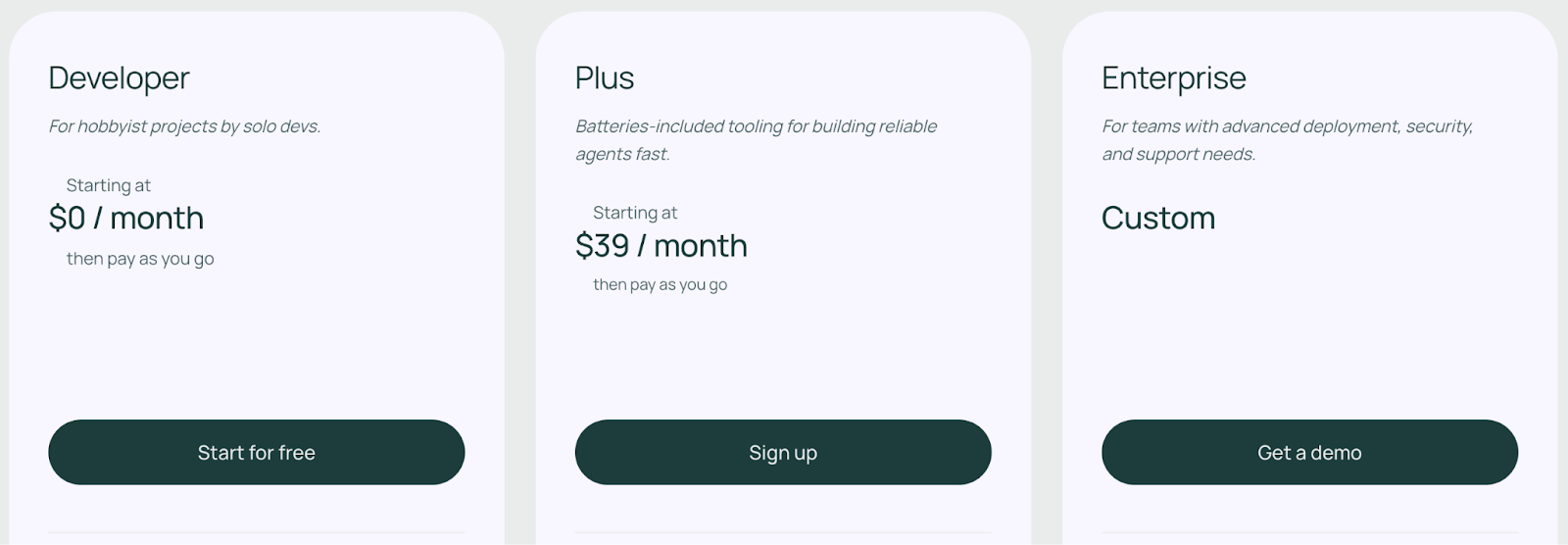

LangGraph

LangGraph’s open-source core is free, but it comes with an option of paid managed services. If you just use the LangGraph Python (or JS) library on your own, there’s no cost; it’s MIT licensed. You can design and run LangGraph agents in your environment freely.

LangChain offers three plans for LangGraph:

- Developer: Free

- Plus: $39 per month

- Enterprise: Custom

How ZenML Manages the Outer Loop when Building Multi-Agent Systems

Frameworks like Agno and LangGraph are excellent for the ‘inner loop’ of development: writing, debugging, and iterating on agent logic.

However, moving an agent into production requires solving the ‘outer loop,’ which involves the entire lifecycle of deployment, monitoring, evaluation, and reproducibility.

This is where a dedicated MLOps and LLMOps platform like ZenML complements both these frameworks.

ZenML is an open-source MLOps + LLMOps framework that serves as the unifying outer loop for your AI agents. It complements agents built with the Agno or LangGraph frameworks by governing the entire production lifecycle.

Here’s how ZenML adds value:

- Embed agents in end-to-end pipelines: Wrap Agno or LangGraph workflows inside ZenML pipeline steps. A single pipeline can handle data prep, agent execution, and evaluation, making the process reproducible, versioned, and schedulable across local or cloud infrastructure.

- Unified visibility and lineage: ZenML logs every prompt, response, model, and tool output. Its dashboard shows complete run histories, letting teams trace errors, compare outputs, and collaborate by reviewing runs together.

- Continuous quality checks: Add evaluation steps after the agent runs to measure output quality. ZenML can trigger alerts or fallbacks when failures occur, enabling A/B testing and long-term monitoring of agents in production.

- Mix and match tools (avoid lock-in): ZenML is framework-agnostic. You can combine Agno, LangGraph, and even traditional ML components in one pipeline, run on any infra, and avoid vendor lock-in by swapping frameworks as needed.

- Scalability and deployment: Pipelines can scale to many agents in parallel or run on orchestrators like Airflow, Kubernetes, or Tekton. ZenML bridges development code and production-grade infrastructure.

- Experimentation and versioning: Each tweak or config change becomes a versioned pipeline. This ensures reproducibility, easy comparisons, and a systematic ‘outer loop’ for improving agents over time.

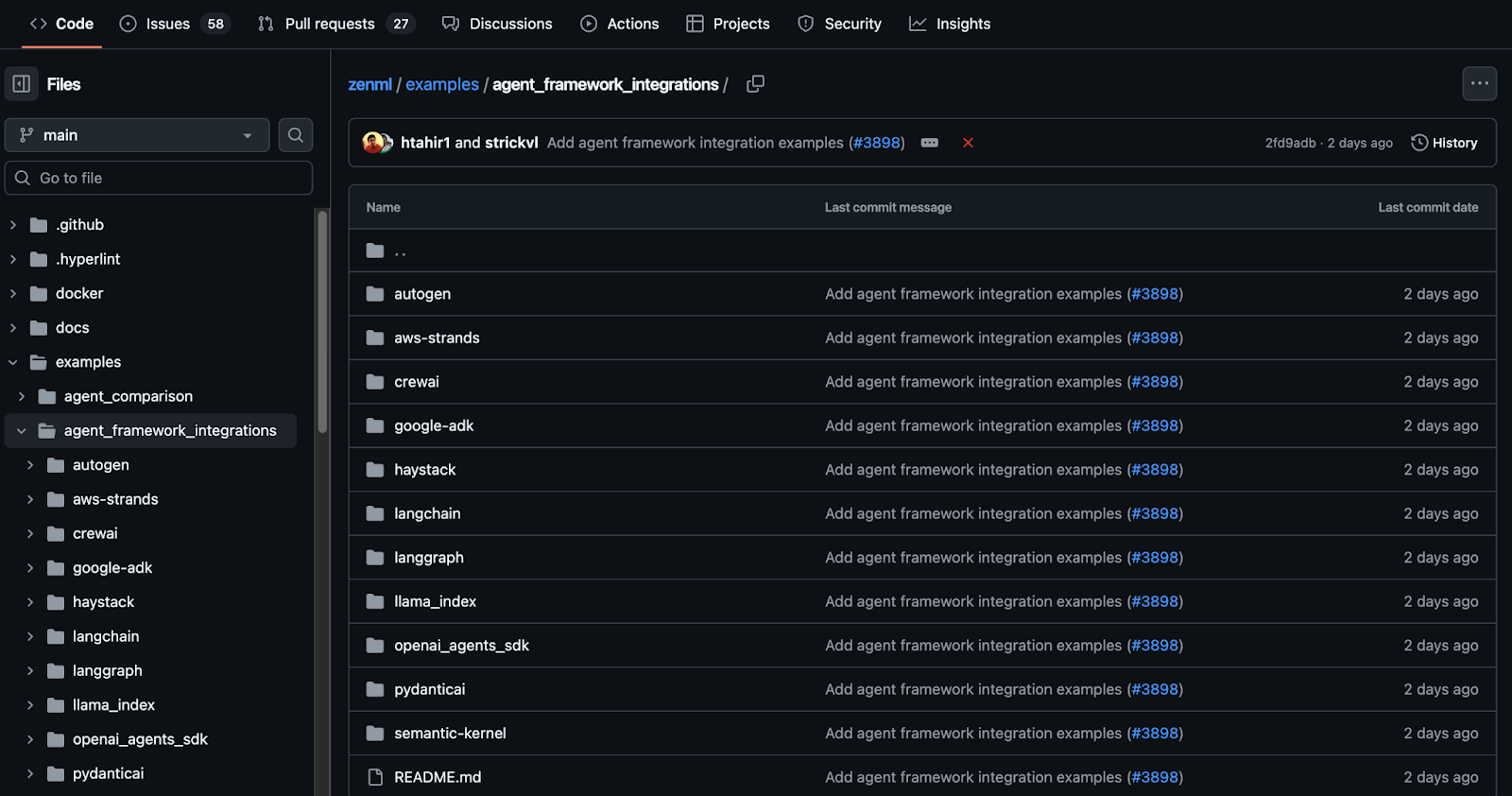

👀 Note: At ZenML, we have built several agent workflow integrations with tools like Semantic Kernel, LangGraph, LlamaIndex, and more. We are actively shipping new integrations that you can find on this GitHub page: ZenML Agent Workflow Integrations.


Which Framework of the Two Is Best to Build Multi-Agent Systems?

The choice between Agno and LangGraph is not about good versus bad, but about which one aligns best with a project's specific requirements for performance, control, and ecosystem integration.

✅ Choose Agno if you want a fast, Python-native framework that prioritizes performance and simplicity. It’s ideal for teams that need quick prototyping and self-hosted deployment with built-in runtime (AgentOS) support.

✅ Choose LangGraph if your workflow demands fine-grained control and reliability. It suits complex, branching agents where auditability, resumable execution, and tight integration with LangChain/LangSmith matter most.

✅ Use ZenML when you’re ready to take any agentic system into production. It manages pipelines, evaluation, and scaling, giving you versioned workflows and experiment tracking across cloud or enterprise infrastructure.

If you’re interested in taking your AI agent projects to the next level, consider joining the ZenML waitlist. We’re building out first-class support for agentic frameworks (like LangGraph, CrewAI, and more) inside ZenML, and we’d love early feedback from users pushing the boundaries of what AI agents can do. With ZenML, you can integrate whichever agent framework you choose into robust, production-grade workflows.