Building reliable, production-grade AI agent workflows requires more than just plugging into an LLM. You need a solid framework that structures your agent's reasoning, manages its tools, and orchestrates complex workflows.

Pydantic AI and CrewAI are two popular frameworks for creating production-grade workflows. While both help you create efficient GenAI workflows, they operate on fundamentally different philosophies. That raises the question: Which one’s better?

In this Pydantic AI vs CrewAI article, we compare both frameworks in terms of their maturity, core features, integrations, and pricing, and discuss how each performs in different scenarios.

Pydantic AI vs CrewAI: Key Takeaways

🧑💻 Pydantic AI: A framework that uses Pydantic’s robust data validation to build reliable and predictable AI agents. It’s ideal for cases where you want predictable, maintainable single-agent logic integrated into Python applications.

🧑💻 CrewAI: A high-level framework for orchestrating role-based, autonomous AI agents that work together as a ‘crew.’ It abstracts away the complexity of multi-agent collaboration, providing pre-built patterns for task delegation and role assignment, making it perfect for complex problem-solving workflows.

Pydantic AI vs CrewAI: Maturity and Lineage

Maturity matters when choosing an AI framework. CrewAI is the older project by roughly a year. It launched in late 2023 amid the GenAI boom, whereas Pydantic AI arrived in public beta in late 2024.

Below is a comparison of key metrics and lineage for the two projects:

CrewAI gained an impressive head start in adoption with ~36k GitHub stars within two years of launch and a large community of practitioners. It’s a relatively young project, but it has seen rapid iteration.

Pydantic AI is newer to the scene, but it reached a stable 1.0 release in late 2025 and quickly narrowed the feature gap.

In short, CrewAI currently has more community traction, whereas Pydantic AI benefits from the pedigree of the Pydantic ecosystem and a focus on stability now that it’s hit v1.

Pydantic AI vs CrewAI: Features Comparison

Let's see how Pydantic AI and CrewAI stack up against each other. Here's a quick peek:

Now, let's dive deep with a one-on-one feature comparison.

Feature 1. Primary Abstraction

What is an ‘agent’ in each framework? This fundamental difference in abstraction sets the tone for how you develop workflows with Pydantic AI vs CrewAI.

Pydantic AI

Pydantic AI's central abstraction is the `Agent` class, which you configure much like a FastAPI app: you specify which LLM it uses, write its instructions, define a structured output type, and register the tools (Python functions) it can call.

The agent automatically validates whether the LLM’s response conforms to the output schema. If validation fails, the error is sent back to the model so it can retry and self-correct. This data-centric approach makes agent interactions far more predictable.

For example, here’s a simplified Pydantic AI agent that produces a structured response and uses a custom tool:

CrewAI

CrewAI takes a more architectural view. Each agent in CrewAI has a role, a goal, and even a backstory/persona to guide its behavior.

However, a single agent is rarely used alone in CrewAI; the framework assumes your application is a multi-agent system, hence the name ‘Crew.’ Its primary abstractions let you design complex workflows in terms of team structure and responsibilities rather than low-level code. The fundamental building blocks are:

- Agent: A specialized worker with a specific `role`, `goal`, and `backstory`. This defines what the agent is an expert in.

- Task: A specific assignment for an agent to complete, including a `description` and the `expected_output`.

- Crew: A team of agents that work together to execute a series of tasks according to a defined `process`.

When you run a crew, the agents will each tackle their tasks in the defined order or hierarchy. CrewAI supports defining this setup via a YAML config or directly in Python code.

For example, a simple Crew with two agents and two sequential tasks can be defined in code like so:

Bottom line: Pydantic AI offers a data-first abstraction that guarantees structured, validated outputs, making it ideal for reliable data processing tasks.

CrewAI provides a role-based abstraction, which adds some overhead in setup but pays off when you need multiple specialized agents working together.

Feature 2. Multi-Agent Orchestration

Orchestration defines how multiple agents communicate and work together to achieve a larger goal. Let’s compare how Pydantic AI and CrewAI enable multi-agent workflows and what orchestration patterns they support.

Pydantic AI

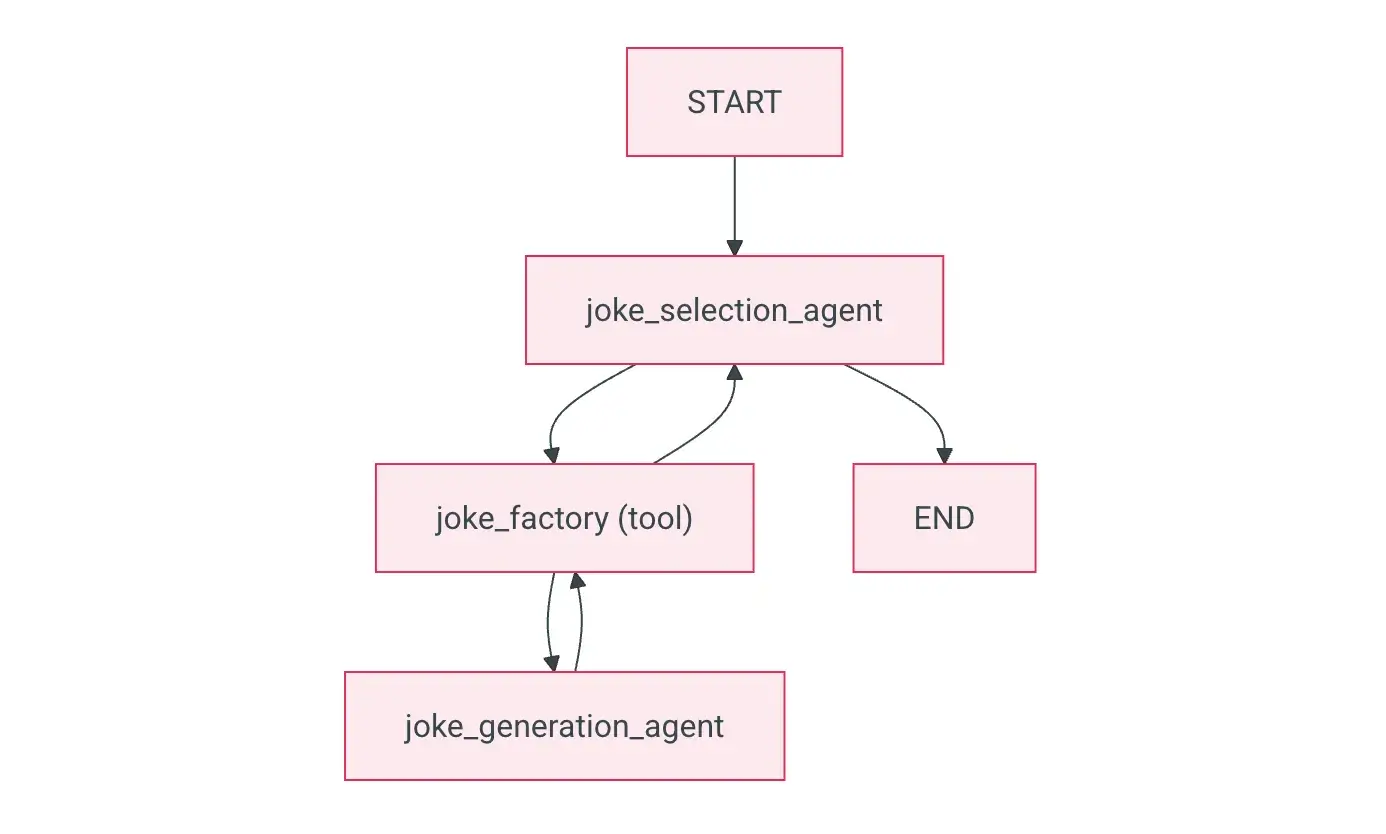

Pydantic AI started with a single-agent focus, but it provides several ways to orchestrate multiple agents when needed.

- Delegation via tools: Call another agent’s `run` method from inside a tool, so a primary agent hands off a subtask and continues when the result returns.

- Programmatic hand-off: Orchestrate agents directly in Python, chaining A → B → C with your own branching and loops.

- Graph workflows: Use the optional pydantic-graph state machine to model nodes and edges for complex control flow.

What you won’t find in Pydantic AI is a native concept of multi-agent orchestration. You’ll connect agents using one of the above methods. The approach offers high flexibility and favors teams that want tight control inside Python. However, it requires you to write the orchestration code yourself.

CrewAI

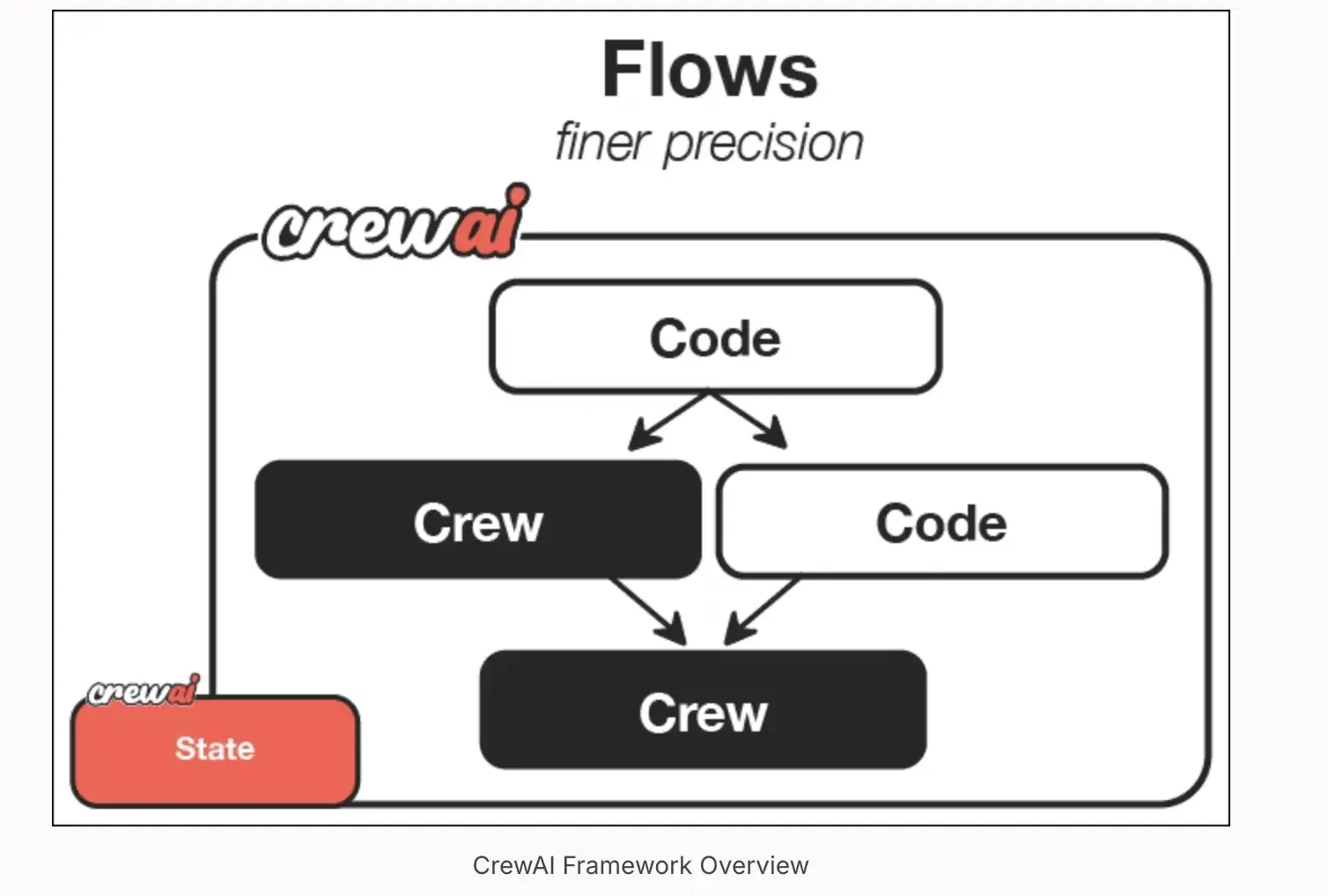

CrewAI excels at multi-agent orchestration by providing built-in, high-level patterns through its Process types:

- Sequential Process: Tasks are executed one after another in a linear pipeline. The output of one task automatically becomes the context for the next, creating a simple, linear workflow.

- Hierarchical Process: A ‘manager’ agent oversees a team of worker agents. It assigns and supervises worker tasks, keeping coordination structured.

Beyond that, CrewAI introduces Flows, which automate more complex or event-driven workflows. A Flow can trigger crews based on schedules or external events and even chain multiple crews together.

Bottom line: CrewAI provides powerful, out-of-the-box orchestration strategies like sequential and hierarchical processes, making it easy to set up collaborative agent teams.

Pydantic AI offers the building blocks for flexible, custom-coded orchestration, giving developers full control but requiring more manual implementation.

Feature 3. Memory Management

Real-world workflows often require remembering information. Memory allows agents to recall past interactions, maintain context, and learn over time. In short, agent memory is crucial for coherent and personalized conversations.

Let’s see how both tools stack up in terms of memory.

Pydantic AI

Pydantic AI includes a built-in Memory tool that lets agents recall and maintain conversational context, like remembering a user’s previous question or a past value.

However, the tool is focused on session-based memory rather than persistent, long-term knowledge. By default, Pydantic AI agents are stateless. That is, each run starts fresh unless you explicitly design persistence.

If you need longer-term memory, a workaround is to build custom tools that connect to vector databases such as FAISS or Pinecone, or use external stores for retrieval.

CrewAI

CrewAI treats memory as a first-class capability you can switch on with memory=True when creating a crew.

Once enabled, agents gain layered recall that improves reasoning and continuity without extra plumbing. It supports:

- Short-Term Context: Agents within a crew automatically share the context of ongoing tasks, allowing for seamless collaboration.

- Long-Term Memory (RAG): You can equip agents with tools to access external knowledge bases, such as vector stores. This allows an agent to retrieve relevant information from a persistent memory to inform its actions.

- Shared Memory: The framework is designed to allow agents to share learnings and context, enhancing the collective intelligence of the crew over time.

Bottom line: Both frameworks offer memory capabilities. Pydantic AI’s memory is a simple, effective tool for maintaining conversational context in single-agent scenarios.

CrewAI’s memory is more robust and deeply integrated into its collaborative model, supporting both short-term context sharing and long-term knowledge retrieval for entire teams.

Feature 4. Observability and Tracing

For production systems, the ability to observe, debug, and trace an agent's behavior is non-negotiable. Both Pydantic AI and CrewAI recognize this need and provide observability features, but they do so in different ways.

Pydantic AI

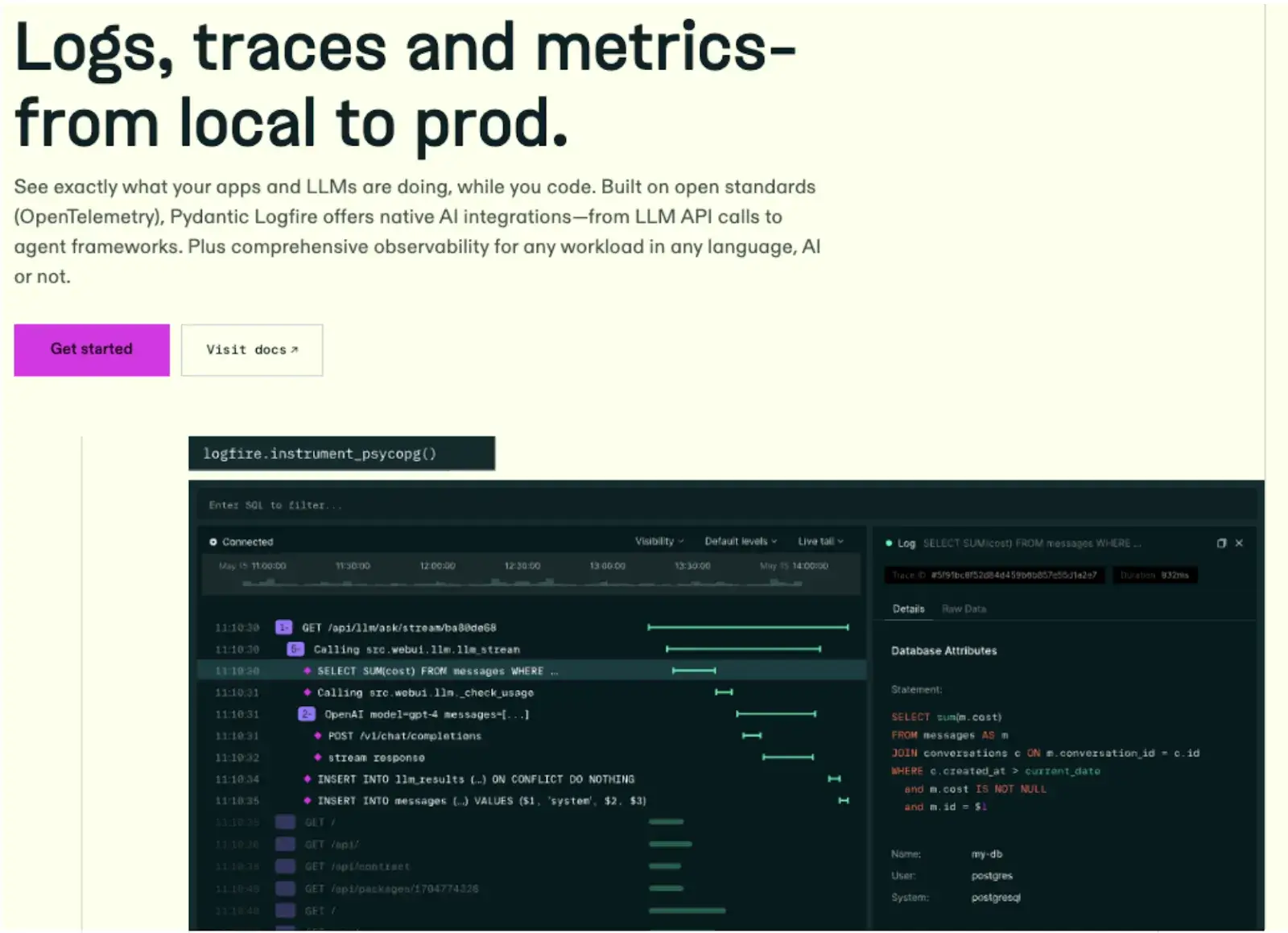

Pydantic AI integrates observability through Pydantic Logfire, a lightweight tracing and logging service built by the same team.

Logfire records every model call, prompt, tool invocation, and validation step, displaying them as spans (traceable events) with timing and metadata.

Each span logs inputs, outputs, latency, and token usage, helping diagnose validation failures or performance issues quickly.

Because Logfire is built on OpenTelemetry, you can also route traces to other OpenTelemetry-compatible backends such as Langfuse, Datadog, Jaeger, or Zipkin, including self-hosted ones.

CrewAI

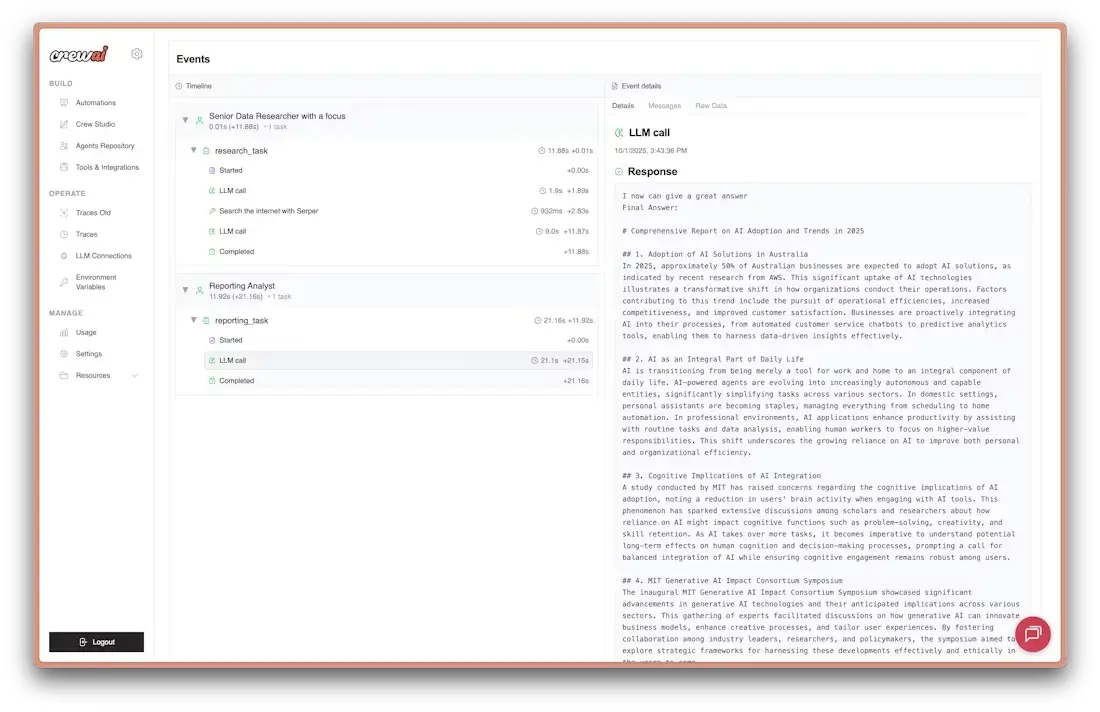

CrewAI provides built-in tracing that lets you monitor and debug your Crews and Flows in real time through its integrated observability platform.

The traces cover agent decisions, task execution timelines, tool usage, and LLM calls, all accessible through the CrewAI AMP platform.

Pydantic AI vs CrewAI: Integration Capabilities

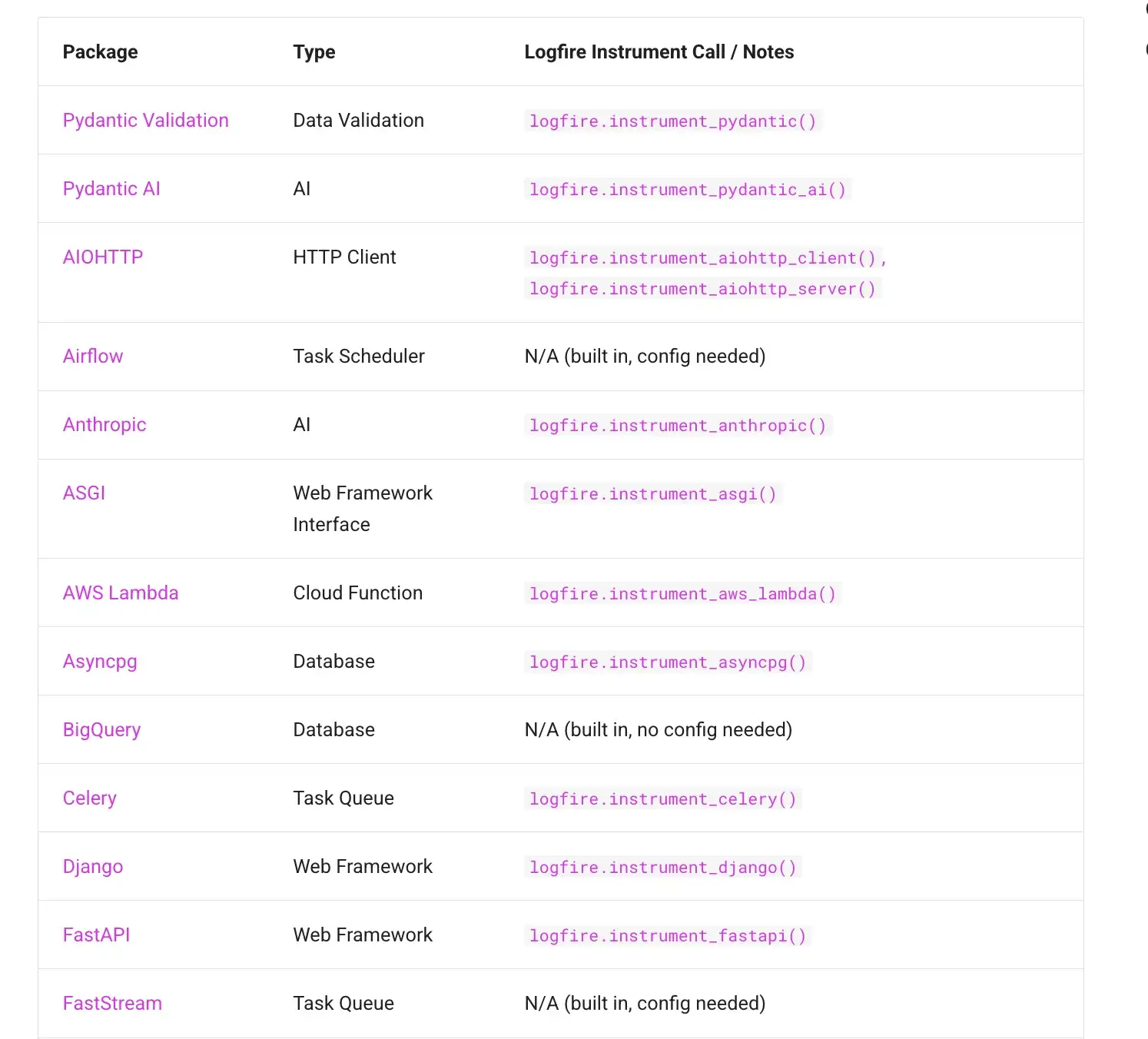

Pydantic AI

Pydantic AI's integration strategy is rooted in its Python-native design. It supports all major LLM providers like OpenAI, Anthropic, Google Vertex, Amazon Bedrock, and Cohere through a modular provider system, so developers can switch models with minimal setup.

Its tool integration is equally flexible. Any Python function can become an agent tool using decorators, making API or library connections straightforward.

For interoperability, Pydantic AI supports open protocols such as Model Context Protocol (MCP), Agent-to-Agent (A2A) communication, and AG-UI, allowing agents to connect to external tool servers or interactive UIs with minimal code.

It also supports durable execution with platforms like Temporal, DBOS, or Prefect, ensuring long-running workflows can resume after failures. Plus, its FastAPI compatibility makes it simple to expose agents as APIs or event-driven webhooks.

CrewAI

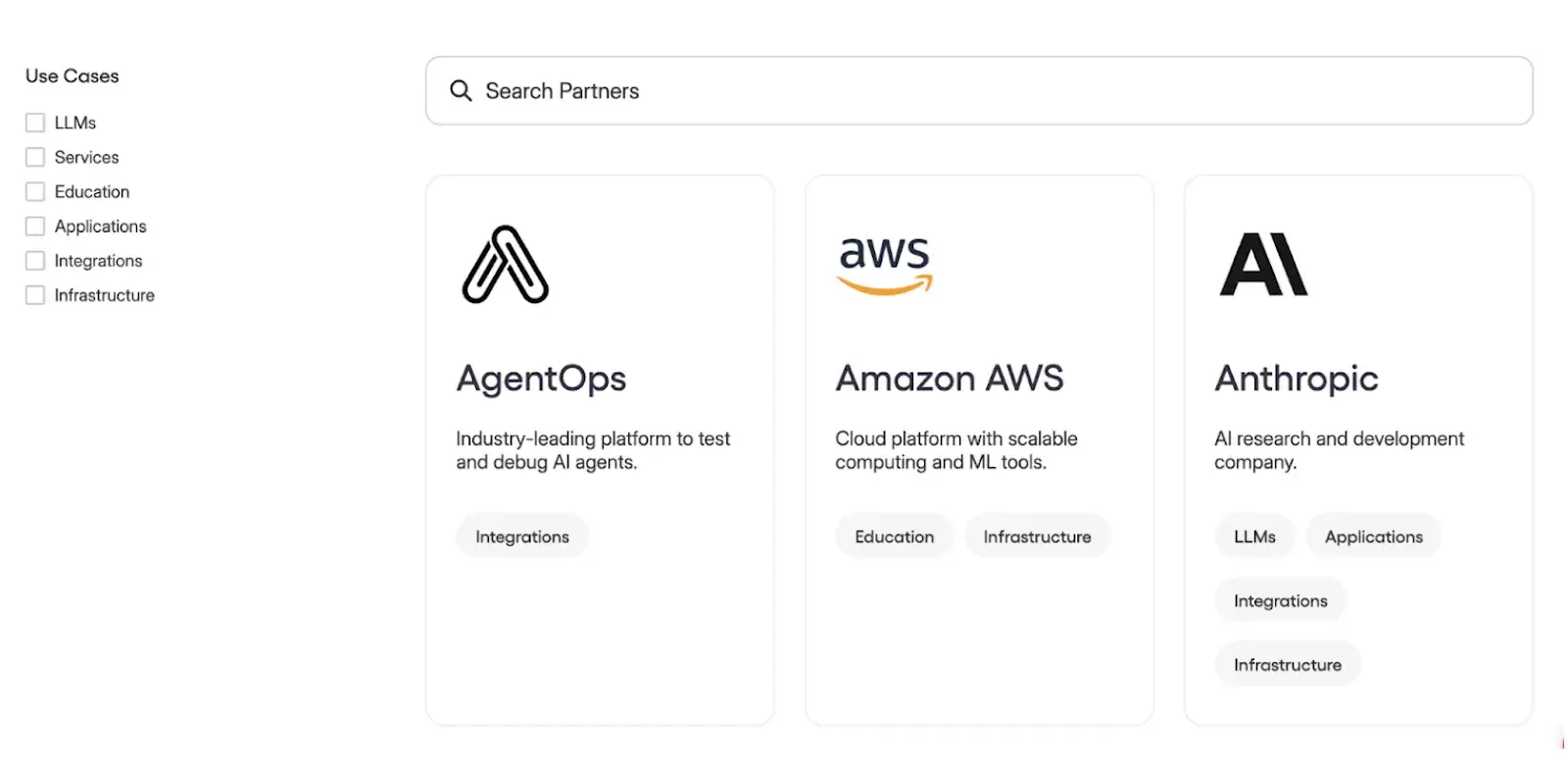

CrewAI ships with a library of over 40 built-in tools, and its broader ecosystem of integrations and partners spans:

- LLMs: Groq, OpenAI, Anthropic

- Services: Revium, RagaAI, StartSE

- Education: PWC, DeepLearning, K2 Consulting

- Applications: Composio, Chroma, Cloudera

- Integrations: Notion, Slack, Replit

- Infrastructure: Microsoft Azure, MongoDB, Nexla

Notably, while CrewAI was initially built on LangChain, it is now an independent framework, though it maintains compatibility with many tools from the broader AI ecosystem.

Pydantic AI vs CrewAI: Pricing

Pydantic AI

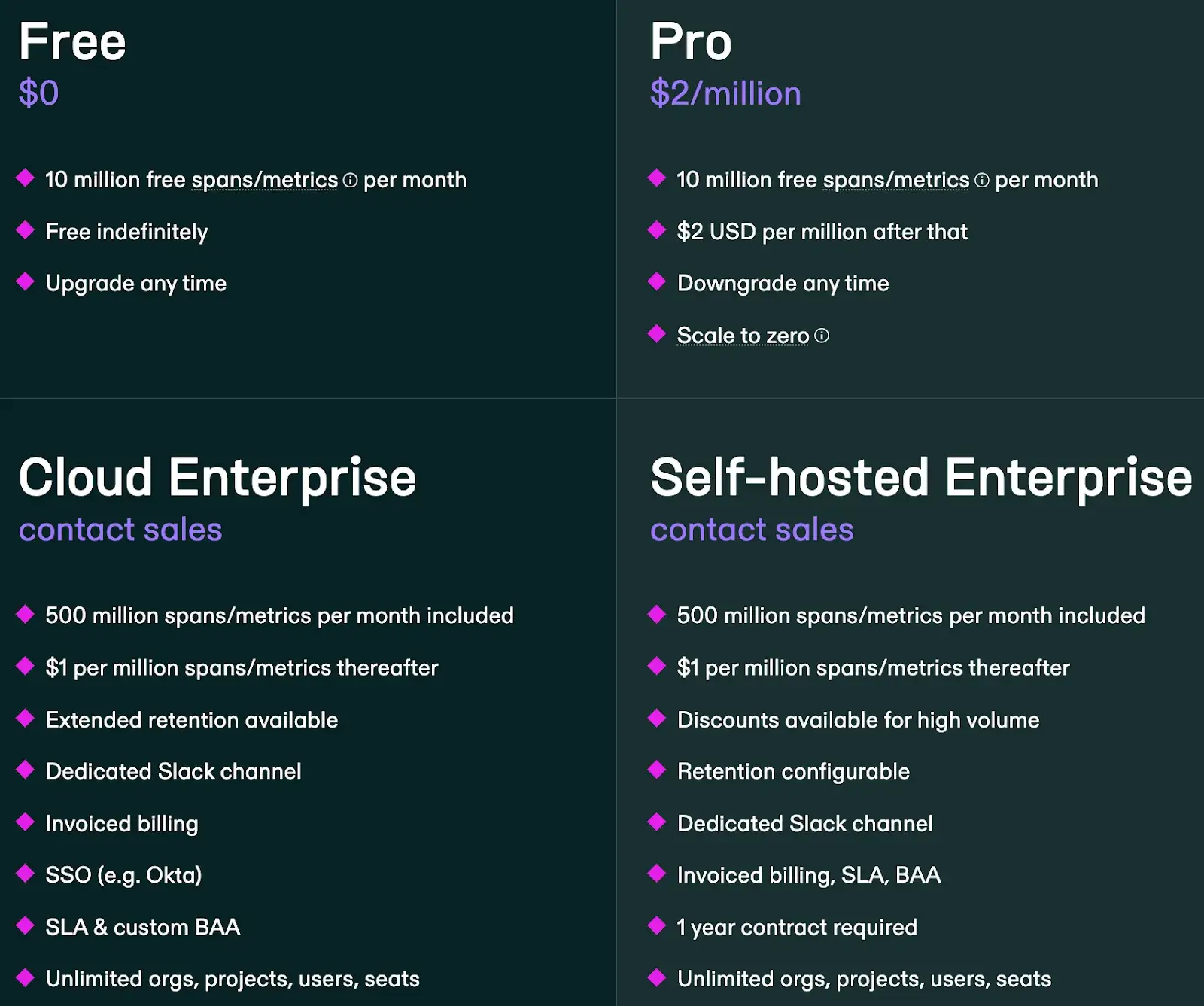

Pydantic AI is an open-source framework from the Pydantic team, released under the MIT license, and is completely free to use. The paid plans apply to Pydantic Logfire, the team’s observability platform, and raise the limits on ‘spans/metrics.’

Here are the Logfire plans:

- Pro: $2 per million spans/metrics

- Cloud Enterprise: Custom pricing

- Self-hosted Enterprise: Custom pricing

You can install it and build applications on your own infrastructure without any licensing fees or subscriptions. Your only costs will be for the underlying LLM API calls and your hosting infrastructure.

CrewAI

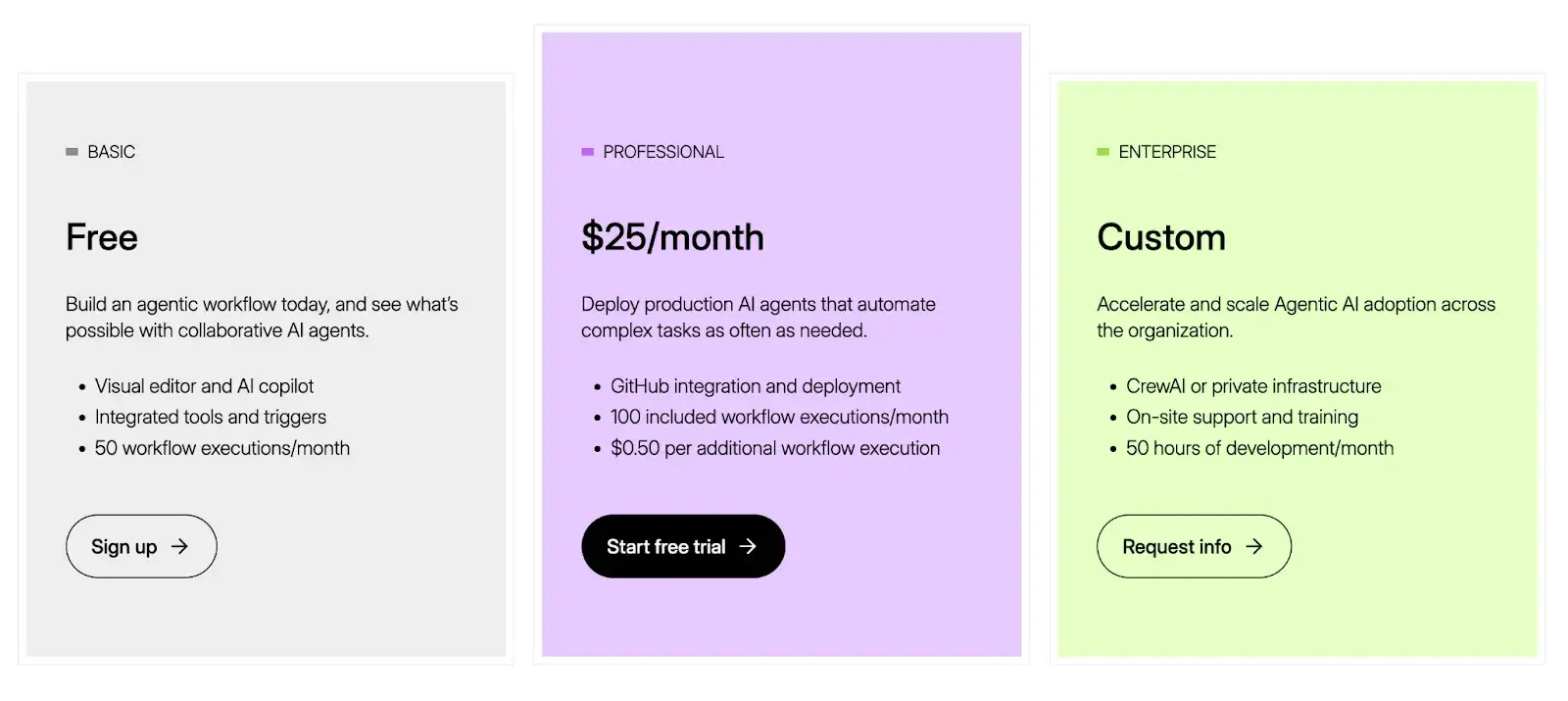

CrewAI's core framework is also open-source and free under an MIT license. For production deployments, CrewAI offers two paid plans:

- Professional: $25 per month

- Enterprise: Custom pricing

How ZenML Manages the Outer Loop when Deploying Agentic AI Workflows

Pydantic AI vs CrewAI sounds like an either-or choice. One is a type-safe agent, the other is a multi-agent crew. But what if you could actually use them together, or swap between them with a click?

That’s where ZenML comes in.

ZenML is an open-source MLOps + LLMOps framework that acts as the glue unifying the ‘outer loop’ for your AI agents.

Rather than competing with Pydantic AI or CrewAI, ZenML complements them by handling the surrounding infrastructure and lifecycle concerns. It governs the entire production lifecycle and adds value in several ways:

1. Pipeline Orchestration and Scheduling

ZenML helps you productionize your Pydantic AI or CrewAI logic by embedding it in a pipeline that runs reliably on a schedule or in response to events.

This means you can design a workflow where, for example, one step prepares data, the next step runs a Pydantic AI agent, and another step runs a CrewAI crew.

ZenML handles the orchestration automatically, ensuring steps run in the correct sequence, or even in parallel if configured. You can also schedule these pipelines using cron jobs or event triggers, and deploy them on scalable backends such as Kubernetes, Airflow, or cloud runners.

2. Unified Visibility and Lineage Tracking

ZenML automatically tracks and versions every component of your pipeline. For agent workflows, this is especially valuable: every prompt, every response, and every intermediate artifact gets recorded and versioned in ZenML’s metadata store.

So if a CrewAI agent made a decision that led to an error, you can trace back through the run logs and see exactly what happened. ZenML’s dashboard shows you these run histories, and you can compare outputs across different runs.

3. Continuous Quality Control and Feedback Loops

A ZenML pipeline can include steps that automatically run evaluations after each agent execution.

If the output quality is poor, ZenML will help you trigger alerts, route the output for human review, or even automatically invoke a fallback agent.
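The gating logic itself is framework-agnostic. Below is a plain-Python sketch of what such an evaluation step might do; the `evaluate` scorer, the 0.7 threshold, and the agent callables are hypothetical placeholders (in a ZenML pipeline, this function would sit inside a step).

```python
from dataclasses import dataclass
from typing import Callable

AgentFn = Callable[[str], str]  # hypothetical: any function that answers a query
Scorer = Callable[[str], float]  # hypothetical: returns a quality score in [0, 1]


@dataclass
class GatedResult:
    output: str
    score: float
    used_fallback: bool
    needs_review: bool


def run_with_fallback(
    query: str,
    primary: AgentFn,
    fallback: AgentFn,
    evaluate: Scorer,
    threshold: float = 0.7,
) -> GatedResult:
    """Run the primary agent; if its output scores below threshold, try the fallback.

    If the fallback also scores below threshold, flag the result for human review.
    """
    output = primary(query)
    score = evaluate(output)
    if score >= threshold:
        return GatedResult(output, score, used_fallback=False, needs_review=False)

    fallback_output = fallback(query)
    fallback_score = evaluate(fallback_output)
    return GatedResult(
        fallback_output,
        fallback_score,
        used_fallback=True,
        needs_review=fallback_score < threshold,
    )


def length_score(text: str) -> float:
    """Toy scorer: longer answers score higher, capped at 1.0."""
    return min(len(text) / 40, 1.0)


result = run_with_fallback(
    "What is ZenML?",
    primary=lambda q: "An MLOps tool.",
    fallback=lambda q: "ZenML is an open-source MLOps and LLMOps framework.",
    evaluate=length_score,
)
```

Here the primary answer scores 0.35, so the fallback runs, scores 1.0, and no review flag is raised.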

You can also implement an A/B testing scheme: run the same query through both a Pydantic AI agent and a CrewAI agent in parallel and compare the results.
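A framework-agnostic sketch of that A/B comparison: both variants get the same query concurrently through a thread pool, and a shared scorer ranks the results. The variant callables and the keyword scorer are hypothetical stubs; in practice each variant would wrap a Pydantic AI agent or a CrewAI crew, and the comparison could run as a ZenML step.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def ab_compare(
    query: str,
    variants: dict[str, Callable[[str], str]],
    score: Callable[[str], float],
) -> dict[str, tuple[str, float]]:
    """Run every variant on the same query in parallel; return (output, score) per variant."""
    with ThreadPoolExecutor(max_workers=len(variants)) as pool:
        futures = {name: pool.submit(run, query) for name, run in variants.items()}
        outputs = {name: future.result() for name, future in futures.items()}
    return {name: (out, score(out)) for name, out in outputs.items()}


def pick_winner(results: dict[str, tuple[str, float]]) -> str:
    """Return the name of the highest-scoring variant."""
    return max(results, key=lambda name: results[name][1])


# Toy usage with stubbed variants and a keyword-count scorer.
results = ab_compare(
    "Which framework suits multi-agent work?",
    variants={
        "pydantic_ai": lambda q: "Pydantic AI, with custom orchestration code.",
        "crewai": lambda q: "CrewAI, via its sequential and hierarchical processes.",
    },
    score=lambda text: float(text.count("process")),
)
winner = pick_winner(results)
```

Logging `results` per run (for example as ZenML artifacts) is what turns a one-off comparison into a trend you can track over time.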

This kind of automated evaluation loop helps ensure your agents maintain performance in production and allows you to systematically improve them.

Which One to Choose: Pydantic AI vs CrewAI?

The choice between Pydantic AI and CrewAI depends entirely on your project's goals and technical requirements.

✅ Choose Pydantic AI if you need to enforce strict, reliable, and machine-readable outputs from your LLMs.

✅ Choose CrewAI if your goal is to build sophisticated, multi-agent systems that can solve complex problems through collaboration.

Ultimately, these frameworks are not mutually exclusive. You could even use a Pydantic AI agent as a tool within a larger CrewAI workflow to handle a specific data validation task.

With a tool like ZenML, you can orchestrate, monitor, and evolve agents built with both frameworks inside a unified, production-grade MLOps pipeline.

If you’re interested in taking your AI agent projects to the next level, consider joining the ZenML waitlist. We’re building first-class support for agentic frameworks (like LangGraph, CrewAI, and more) inside ZenML, and we’d love early feedback from users pushing the boundaries of what AI agents can do. With ZenML, you can seamlessly integrate whichever agent framework you choose into robust, production-grade workflows. Join our waitlist to get started.👇