Everywhere you look, a new ‘agentic AI framework’ is being announced. Some claim to be the future of multi-agent systems. Others promise enterprise-grade reliability. And if you’ve tried following the conversation online, you know it’s a mess: features get conflated, buzzwords are thrown around, and very little clear thinking happens about what actually makes these frameworks useful.

Developers and ML engineers are left asking the same questions:

Which framework should I trust for production? How do I compare one tool’s orchestration to another’s? Why does it feel like every blog post contradicts the last?

Instead of clarity, the hype has only created noise.

We wrote this guide to cut through that noise. In this best agentic AI frameworks article, we break down 7 of the most important agentic AI frameworks. You’ll see what each one does well, where it falls short, how it’s priced, and where it fits in the real world.

By the end, you’ll have a clear picture of which framework makes sense for your workflows, and why ZenML deserves special attention as the backbone for production-ready agentic AI.

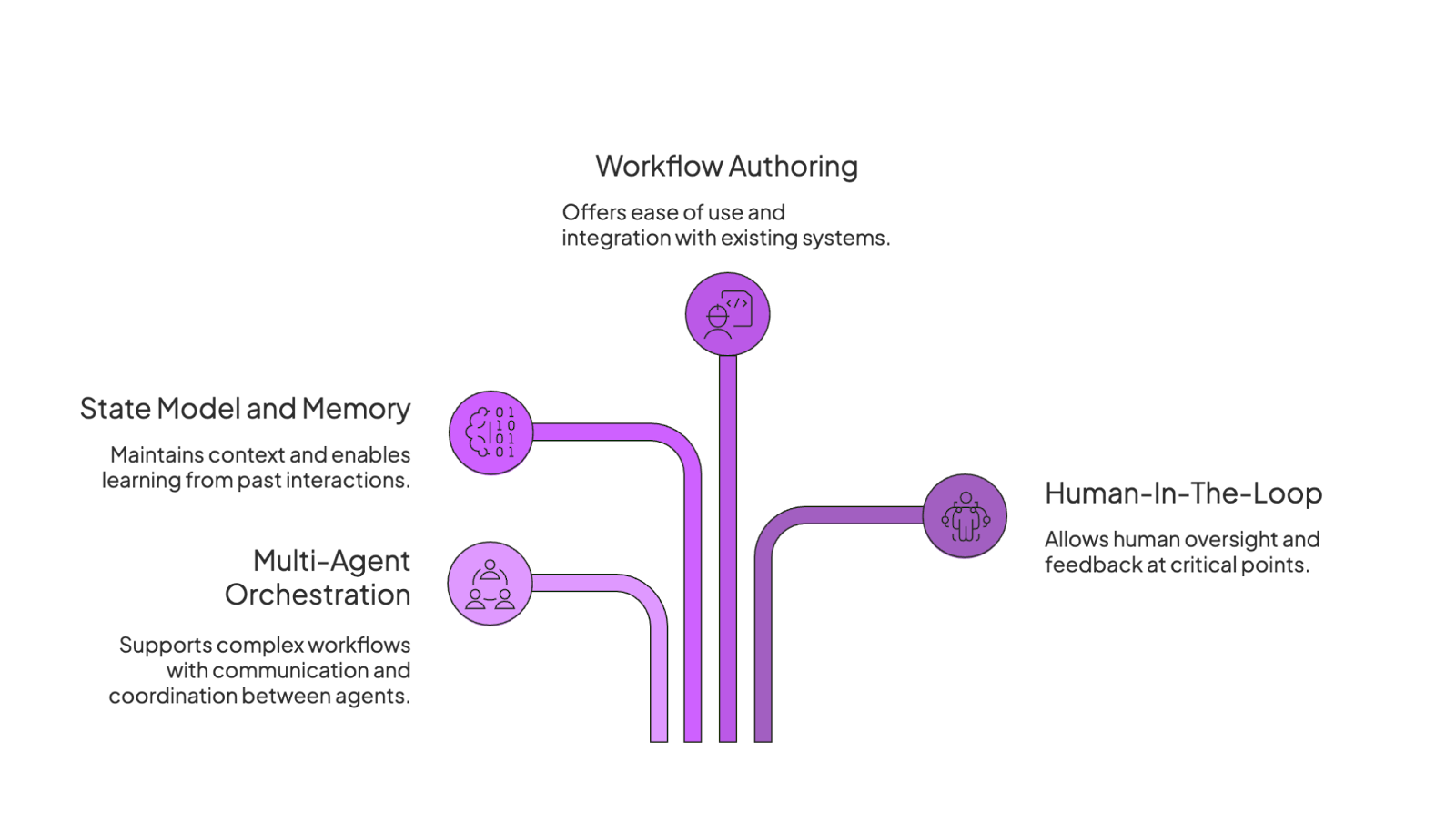

What to Look for In an Agentic AI Framework?

Before choosing a framework, consider how it handles the core requirements of agentic systems.

Below are key factors to evaluate:

1. Multi-Agent Orchestration

Agentic applications often involve multiple agents working in tandem – for example, a ‘researcher’ agent gathering information and a ‘writer’ agent composing a report.

A good framework should support orchestration of multi-agent workflows, whether through sequential pipelines, parallel branches, or graph-based flows. This includes letting agents communicate or pass outputs to each other and coordinate via defined logic or a central orchestrator.

Look for support for patterns like hierarchies of agents, role-based teams, or event-driven agent messaging. Robust multi-agent orchestration ensures complex tasks can be split among specialized agents without agents interfering or ‘talking over’ each other.

2. State Model and Memory

State management and memory are crucial so that agents remember context and learn from past interactions.

An effective framework provides a state model to maintain conversation history, intermediate results, or store long-term knowledge. This could include in-memory session state for multi-turn conversations, vector database integration for long-term semantic memory, or persistent storage of facts an agent has learned.

Evaluate how the framework lets you store and retrieve state – e.g., built-in memory objects, session contexts passed between steps, or connectors to external knowledge bases.

Solid memory handling prevents agents from forgetting important details as workflows progress, and it allows more coherent, context-aware behavior over time.

3. Workflow Authoring and Developer Experience

Agentic AI frameworks vary in how you author workflows and how developer-friendly they are. Some offer low-code or visual builders, while others require writing Python code or YAML configurations.

Consider the developer experience:

- Does the framework have clear abstractions like agents, tools, and steps that are easy to use?

- Is there support for debugging and tracing the agent’s reasoning, for example, logging each action or a visual trace of the agent’s decision flow?

- Also, look for how easily the framework integrates into your stack – can you plug in your own models, tools, and data sources without heavy custom code?

A good framework should be easy to start with, giving you quick results with built-in defaults, while still letting you customize it for bigger, more complex projects.

4. Human-In-The-Loop

Even autonomous agents sometimes need a human-in-the-loop for oversight on critical decisions or to provide feedback. The framework you decide to invest in should accommodate human interaction at key points.

This might mean the ability to pause an agent and await human approval, special ‘human’ agents that represent a user or moderator, or hooks for reinforcement learning from human feedback.

Human-in-loop capabilities are important for workflows that require validation of AI outputs, compliance checks, or collaborative decision-making. Frameworks that make it easy to inject human review steps will give you more control over autonomous systems, especially in high-stakes or customer-facing applications.

What are the Best RAG Tools for Agentic AI Currently On the Market?

Now, let’s examine 7 leading frameworks you can leverage to build agentic AI workflows.

1. ZenML

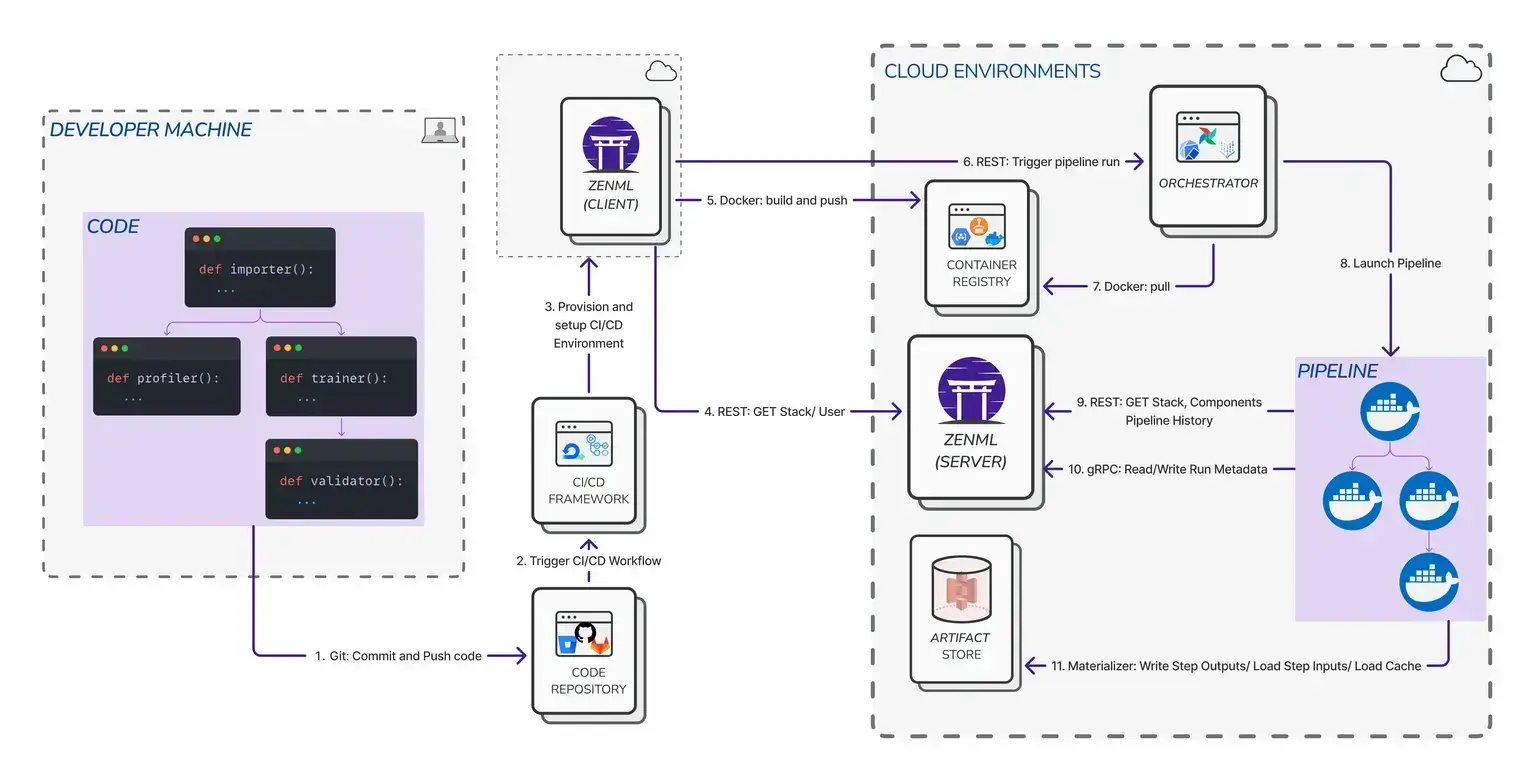

ZenML is an open-source MLOps + LLMOps framework that recently expanded into the agentic AI space. It provides a pipeline-centric approach to building intelligent workflows, treating agents and retrieval-augmented generation (RAG) tasks as steps in a reproducible pipeline.

The framework brings mature ML orchestration practices – versioning, deployment, experiment tracking – to LLM and agent workloads.

Features

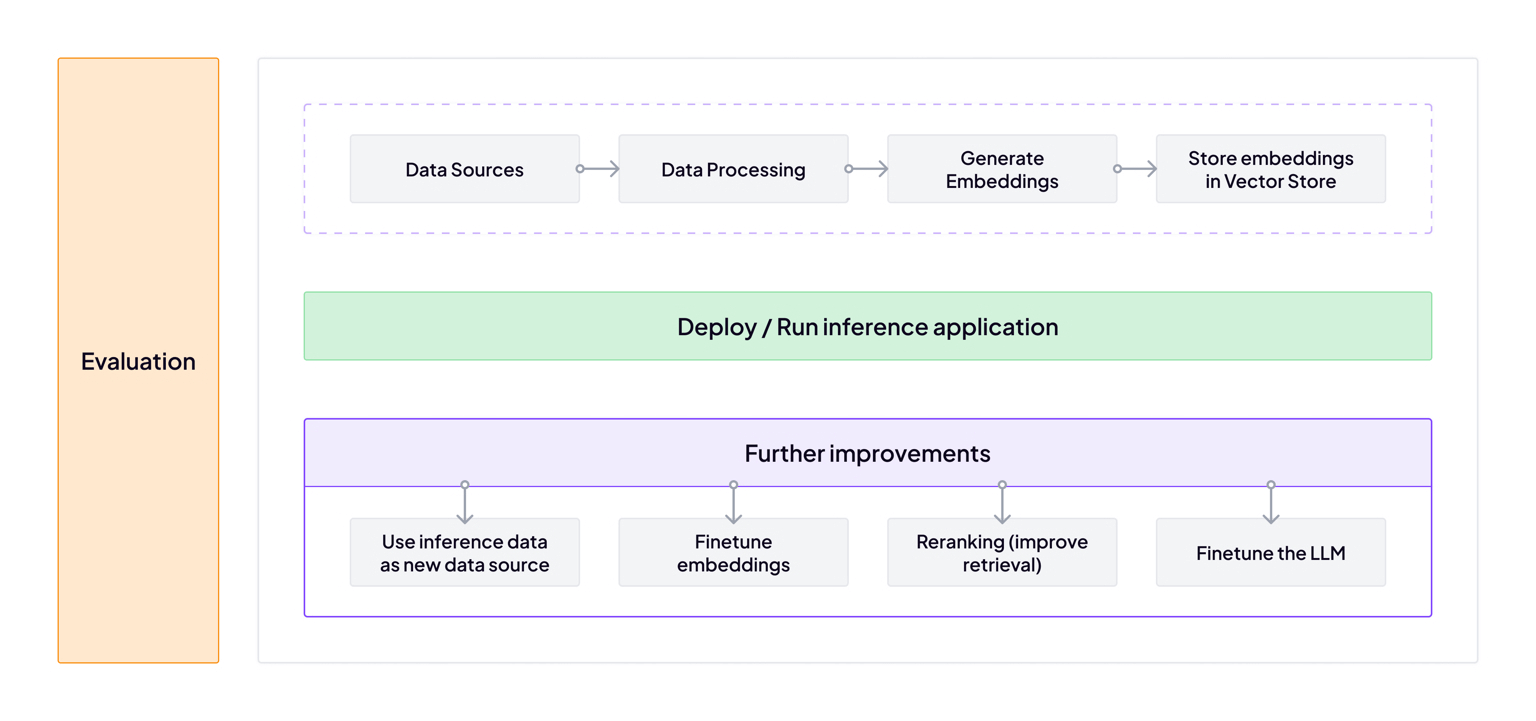

- Pipeline Components for RAG and Agents: Provides pre-built steps for data ingestion, embedding generation, vector store integration, LLM calls, etc., which you can chain to implement RAG pipelines. Agents can be incorporated as pipeline steps, allowing multi-step agent workflows to be orchestrated systematically.

- Orchestration and Reproducibility: Because ZenML treats everything as a pipeline, you can run agent workflows on various backends - local, Kubernetes, Airflow, and more, with each step tracked and versioned.

- Integration with Agent Libraries: Our platform lets you plug in external agent frameworks like LangGraph, CrewAI, etc., inside ZenML pipelines. This lets ZenML handle surrounding tasks like data prep or RAG, feeding context into an agent’s prompt or capturing the agent’s output downstream.

- Extensible Stack Components: ZenML is provider-agnostic – it supports many vector DBs (FAISS, Weaviate, Pinecone) and LLM providers (OpenAI, Anthropic, local models) via its ‘stack’ integrations. This flexibility means you can choose your own tools for embedding, memory, models, etc., rather than being locked in.

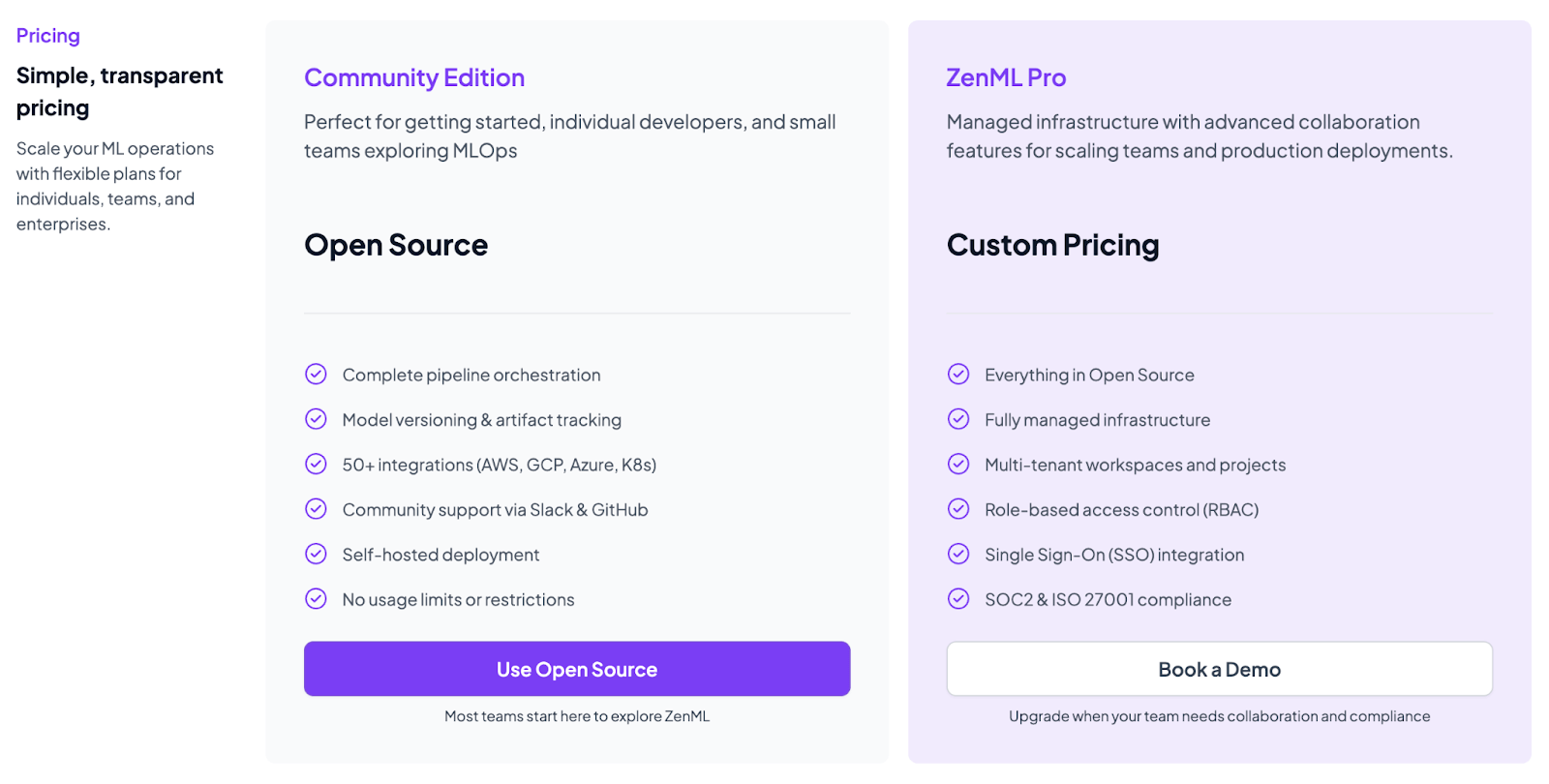

Pricing

We are upgrading our platform to bring every ML and LLM workflow into one place for you to run, track, and improve. Think of processes like data preparation, training, RAG indexing, agent orchestration, and more, all in one place.

Pros and Cons

ZenML’s biggest strength for agentic AI is that it bridges the gap between MLOps and agent orchestration. You get experiment tracking, artifact and data lineage, model/prompt versioning, and pipeline deployments out of the box, which brings much-needed discipline to AI agent workflows.

It’s highly extensible and tool-agnostic, allowing you to integrate any model or database via its modular stack.

On the downside, ZenML is not a specialized agent UI or a one-click solution for building an agent – it’s a framework and requires writing pipeline code. Teams must be willing to adopt ZenML’s abstractions (pipelines, stacks, steps), so using ZenML involves a learning curve.

2. LangGraph

LangGraph is a library from the creators of LangChain for building stateful, multi-agent applications by representing them as graphs. It extends the LangChain ecosystem with explicit, cyclical workflows that give developers precise control over an agent's reasoning loop.

Features

- Create graphs of agents or tool calls that run sequentially, in parallel, or conditionally. LangGraph supports single-agent flows, multi-agent collaborations, hierarchical task breakdowns, and other complex patterns by modeling them as directed graphs.

- Provides first-class memory objects to store state between nodes. This means an agent’s intermediate conclusions or conversation context can persist and be accessed by later nodes or agents in the graph, enabling long-term coherence.

- The framework includes components that allow you to insert human review or moderation steps.

- Offers token-by-token streaming of LLM outputs. This allows downstream nodes to start processing partial results without waiting for the full completion, enabling more responsive multi-agent interactions.

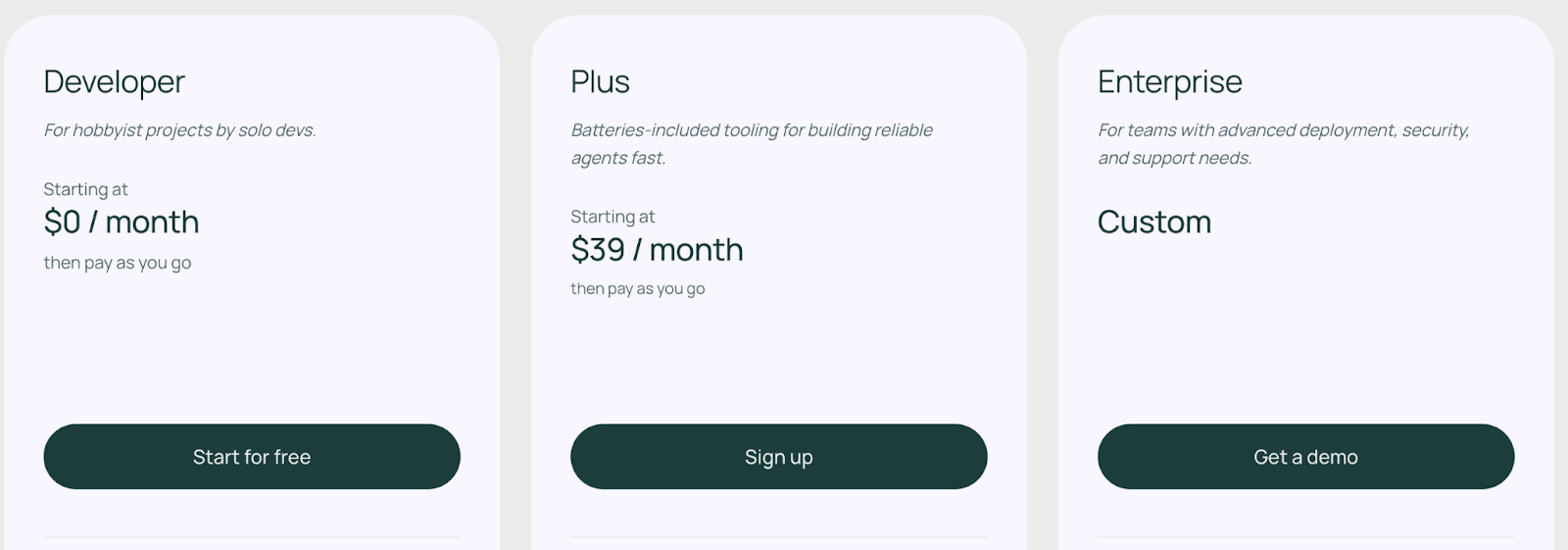

Pricing

LangGraph comes with an open source plan that lets you get started for free and offers basic features. But to collaborate with your team and unlock premium features, you can upgrade to one of the two paid plans it offers:

- Plus: $39 per month

- Enterprise: Custom pricing

Pros and Cons

LangGraph’s strength is in making the invisible visible. By defining your agent workflow as a graph of nodes and edges, you essentially get a blueprint of the entire reasoning process. This explicit structure makes it easier to understand complex agent logic at a glance and to debug issues – you can inspect each node’s input/output to pinpoint where a chain might be breaking.

The flip side of LangGraph’s flexibility is complexity. There is non-trivial boilerplate in defining graphs and nodes, especially for intricate workflows.

3. Agno

Agno (formerly called Phi-Data) is an open-source framework for building full-stack agentic systems with an emphasis on memory, knowledge, and reasoning. It enables you to create everything from single AI agents to teams of agents, complete with long-term memory stores, tool use, and even a web-based agent UI.

Features

- The framework supports the creation of agent teams with different collaboration modes, including

route,collaborate, andcoordinate. - This allows for the orchestration of specialized agents that can work together on complex tasks.

- Agno provides long-term memory via storage drivers and short-term memory via session context. Agents can remember facts across conversations and retrieve stored knowledge when needed, enabling continuous learning and context retention.

- Agno comes with a library of 20+ ready-made tools like web search, APIs like DuckDuckGo or yFinance, code execution, etc., and makes it easy to add new tools by simply writing a Python function with a decorator.

- Comes with an Agent Playground UI for chatting with your agents and a monitoring dashboard to track agent sessions in real time.

Pricing

Agno is completely open-source (licensed under MPL 2.0) and free to use. You install the Python package and run it on your own hardware or cloud.

The platform also offers an ‘Agno pro’ plan free of charge for students, educators, and startups with less than $2 million in funding. For more information or to access this discount, you can contact support@agno.com.

Pros and Cons

Agno covers a lot of ground: multi-agent orchestration, memory management, tool integration, UI, and deployment. We liked its ‘batteries included’ approach – you can spin up a fully functional agent (with memory and a web UI) in a few dozen lines of code.

One downside is that Agno’s breadth can translate to complexity. With so many features and levels of agent sophistication, the learning curve can be steep – especially for beginners who might be overwhelmed by concepts like custom reasoning models or building multi-modal agents.

4. CrewAI

CrewAI is an open-source framework for orchestrating role-playing, autonomous AI agents that work together as a collaborative team. It’s designed to foster collaborative intelligence by allowing agents to delegate tasks and work together to tackle complex objectives.

Features

- Configure each agent’s role, goal, and expertise explicitly via a YAML config or code. For example, one agent could be the ‘Brainstormer’ with the goal of generating ideas, and another the ‘Evaluator’ to critique and refine them.

- By default, CrewAI runs agents in a fixed sequence – one agent produces an output, then passes it to the next agent, and so on. This turn-by-turn execution is deterministic, making it easier to follow the workflow and debug.

- Comes with 40+ built-in tools that agents can use, including web search, code execution, web scraping, and more.

- The framework records each step an agent takes - every message, tool call, result, etc.- and it allows you to replay a session with modifications.

Pricing

CrewAI’s core framework is also MIT-licensed and open-source. But the platform offers several paid plans to choose from:

- Basic: $99 per month

- Standard: $6,000 per year

- Pro: $12,000 per year

- Enterprise: $60,000 per year

- Ultra: $120,000 per year

Pros and Cons

CrewAI’s structured approach brings clarity and reliability to multi-agent systems. Enforcing turn-taking and defined roles, it avoids the chaos that can often happen when multiple agents talk or act at once.

It’s also relatively easy to set up common workflows like a Q&A, where one agent finds info and another writes the answer using provided YAML templates.

The flip side is rigidity. CrewAI is optimized for workflows that naturally fit a sequential pipeline of roles. If your use case requires free-form dialogue between agents or dynamic reordering of steps, CrewAI might feel limiting.

5. Microsoft AutoGen

Microsoft AutoGen is an open-source framework from Microsoft Research for building AI applications with multiple interacting agents. It takes a conversational paradigm – agents communicate via messages and can collaborate or compete to solve tasks.

Features

- AutoGen agents communicate through an asynchronous messaging system. You can set up event-driven loops or a request/response style interaction. Multiple agents (and even a human participant) can send messages to each other in a chat session, making it easy to simulate conversations between AI ‘characters’ collaborating on a problem.

- The framework treats a human user or moderator as just another agent in the conversation. You can include a human agent that the system will pause and wait for, allowing for real-time human oversight or input during an agent run.

- AutoGen agents are capable of both using tools and executing code. A

UserProxyAgentcan be configured to automatically run Python code blocks sent by anAssistantAgentin a sandboxed environment, such as Docker. - As of version 0.4, AutoGen supports agents written in Python and .NET that can work together.

Pricing

AutoGen is released under an open-source license (MIT) as part of Microsoft’s research projects. It is completely free to use. You can install it via pip (pip install autogen), and there are no usage fees or paid tiers.

Pros and Cons

AutoGen provides a robust foundation for agent systems, especially if you need fine-grained control. Its asynchronous, event-driven design is great for agents that need to wait on external events or handle streaming data.

Compared to other agentic frameworks, AutoGen is more of a toolkit than a turnkey solution. There’s no high-level GUI or simple YAML config to spin up an agent – you’ll be writing Python code, subclassing agent classes, and managing message loops.

6. OpenAI Agents SDK

The OpenAI Agents SDK is a lightweight Python framework for building agentic AI applications. Evolving from OpenAI's experimental Swarm project, the SDK provides a set of production-ready primitives designed to simplify the creation of sophisticated, multi-agent workflows with a minimal learning curve.

Features

- The SDK is built around a few key components:

Agents- LLMs with instructions and tools,Handoffs- for delegating tasks between agents, andGuardrails- for validating inputs and outputs. - Instead of introducing complex abstractions, the SDK encourages the use of native Python language features for orchestrating and chaining agents.

- The SDK includes built-in session memory to automatically maintain conversation history across multiple agent runs.

- The framework automatically traces agent runs, which makes it easier to track, debug, and visualize the behavior of agents. This tracing is extensible and supports integration with various external monitoring tools.

Pricing

The OpenAI Agents SDK is free and open source (available on GitHub under the MIT license). There is no cost to use the SDK itself. However, it is designed to work closely with OpenAI’s APIs for the LLM calls and possibly certain integrated tools like browsing, so you will incur the normal usage fees for any OpenAI API calls your agent makes.

Pros and Cons

The OpenAI Agents SDK is arguably the easiest entry point into building an agent if you’re already using OpenAI models. It abstracts the messy parts of prompt engineering for tool use (thanks to function calling) and provides sensible defaults.

Being tightly coupled to OpenAI’s ecosystem can be a double-edged sword. If you want to use non-OpenAI models or more complex memory/storage backends, the SDK might feel limiting; it’s somewhat geared towards OpenAI’s way of doing things.

7. AWS Strands

AWS Strands is an open-source SDK for building autonomous AI agents with a model-first approach. Initially developed and production-tested by internal AWS teams, it provides a flexible framework that integrates seamlessly with AWS services while remaining open to third-party components.

Features

- Strands leverages the reasoning capabilities of the underlying LLM to plan, orchestrate tasks, and reflect on goals. This model-first philosophy reduces the need for rigid, hard-coded workflow logic.

- While optimized for Amazon Bedrock, Strands is designed to work with any LLM provider, including OpenAI, Anthropic, and local models via Ollama.

- The framework offers a rich set of native tools for interacting with AWS services. It also supports easy deployment to various AWS environments, including Lambda, Fargate, and Amazon Bedrock AgentCore.

- Comes with built-in observability features, including support for OpenTelemetry, metrics, logs, and distributed tracing. This makes it easier to monitor and debug agents in production environments.

Pricing

AWS Strands is an open-source framework released under the Apache 2.0 license. It is free to use, with costs arising from the use of AWS services like Amazon Bedrock and the underlying infrastructure.

Pros and Cons

Strands aims to significantly simplify agent development. In many cases, you just declare what the agent can do (tools) and give it a prompt, and the heavy lifting of planning is done by the model.

The model-driven approach, while simpler, means less deterministic control. You’re leaning on the LLM to make decisions, which might result in unpredictable behavior if the model isn’t well-guided.

How ZenML Helps In Closing the Outer Loop Around Agentic AI

Agentic AI frameworks help your agent reason, plan, and execute tasks. The outer loop is everything that keeps this reliable in production: orchestration, lineage, evaluation, and day-2 ops. ZenML provides that layer around your agentic AI stack.

Orchestrate the Full Agentic Flow

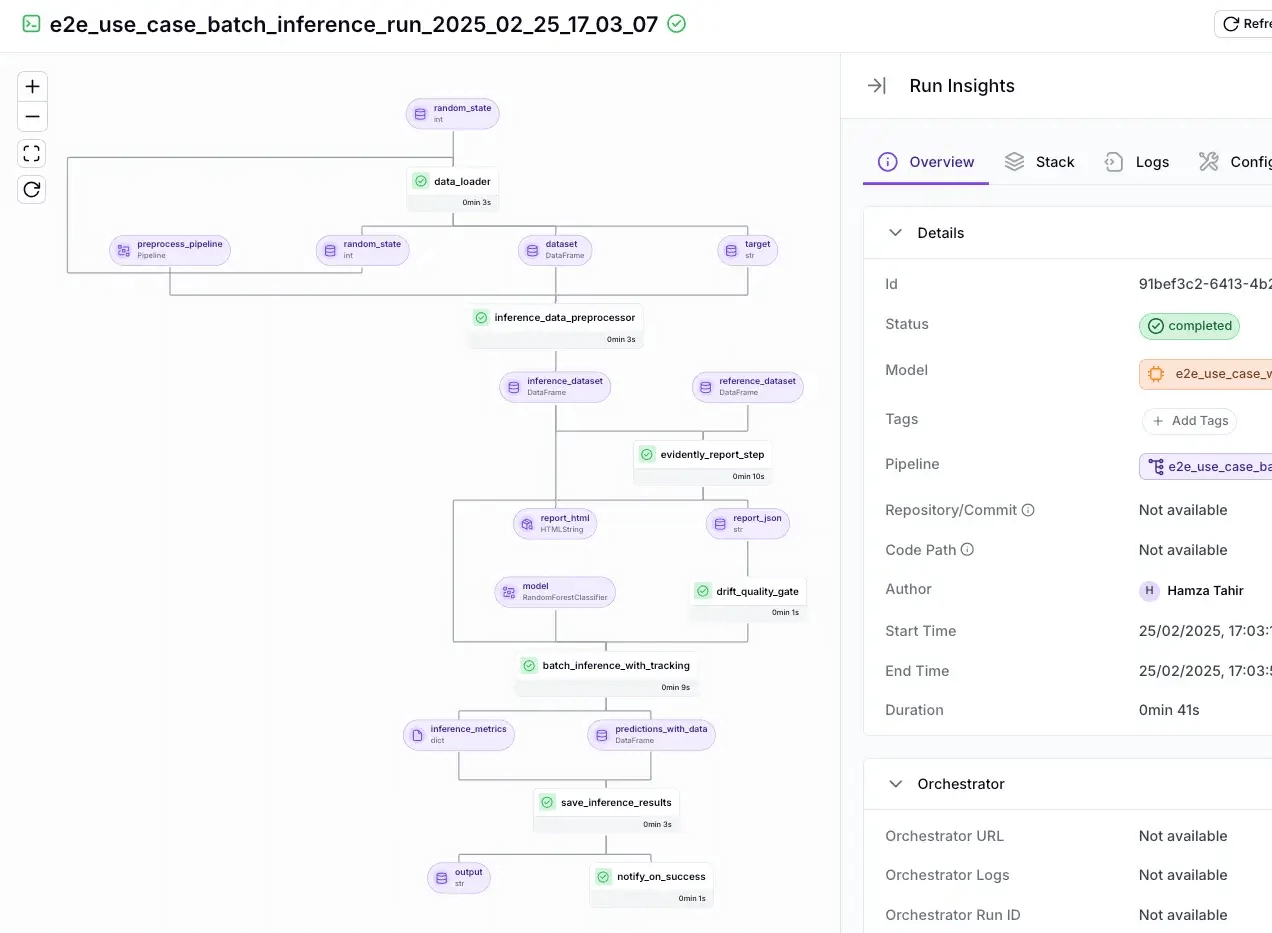

ZenML lets you define every part of your agentic system: from data ingestion and tool definition to agent execution and post-processing, as steps in a single pipeline.

You can run the same pipeline on a schedule, trigger it from CI/CD, or even from other pipelines. Parameters and YAML configs let you switch models or tools without touching code, which is handy for A/B testing different approaches.

Unified Visibility and Lineage

Every step in a ZenML pipeline produces versioned artifacts with full lineage. The ZenML dashboard lets you browse runs, inputs, and outputs so you can trace an agent's decision back to the data and code that produced it.

Our platform also records code repo commit hashes for runs, which helps when you need to compare behavior across revisions.

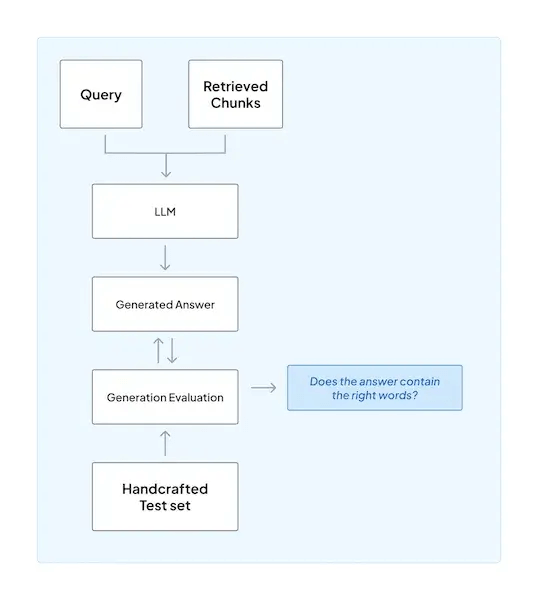

Continuous Evaluation and Feedback

ZenML ships with an evaluation workflow that can be adapted for agentic systems. You can use an LLM judge for automated evaluation and keep a human in the process for review and approval.

What’s more, you can also set up alerts that integrate with Slack or Discord to notify your team of failures or review requests.

Production Rollout and Scale

ZenML lets you choose an orchestrator that fits your environment - Kubernetes, Kubeflow, Airflow, and more are supported.

Secrets and cloud access are handled through ZenML’s centralized secrets store and Service Connectors, so your pipelines can securely access the resources they need.

ZenML does not replace your agentic AI framework. It wraps it. The idea is that you use your preferred agentic framework, tools, and evaluators - ZenML will tie them into one repeatable, observable process and track every agent you run.

Which Agentic AI Framework Should You Use?

Choosing the right agentic AI framework depends on your project’s needs and your team’s preferences.

- If you prioritize a robust MLOps + LLMOps foundation and reproducibility, ZenML offers a strong backbone to productionize agents (and you can integrate other tools into it).

- For developers who want explicit control and transparency, LangGraph might be appealing – the framework lets you design clear workflows or leverage enterprise plugins.

- If speed of iteration and ease of use are key, a platform like OpenAI’s Agents SDK (minimalistic code) could let you get something working in a day.

- Meanwhile, Agno provides an all-in-one powerhouse for those who want state-of-the-art features and are willing to handle a bit of complexity.

In many real-world cases, you might even combine these tools – for example, using ZenML to orchestrate a CrewAI agent as part of a larger pipeline, or designing an agent in LangGraph and deploying it with AWS Strands for scalability.

ZenML's upcoming platform brings every ML and LLM workflow - data preparation, training, RAG indexing, agent orchestration, and more - into one place for you to run, track, and improve. Type in your email ID below and join the early-access waitlist. Be the first to build on a single, unified stack for reliable AI. 👇