Building AI agents in Python has never been more exciting – or more challenging. As an experienced ML engineer, I’ve spent time exploring various agent frameworks to automate complex tasks with LLMs. I recently tested and compared LlamaIndex and CrewAI - both powerful frameworks for constructing agentic AI systems, yet they take very different approaches.

In this LlamaIndex vs CrewAI article, I break down the key differences between the two frameworks across features like their workflow models, multi-agent capabilities, human-in-the-loop support, and guardrails.

I also discuss integration options and pricing for each, and show how you can leverage ZenML (our product) to productionize agents built with either framework.

LlamaIndex vs CrewAI: Key Takeaways

🧑💻 LlamaIndex: A flexible agent framework that excels at connecting LLMs with your data and building agentic workflows. LlamaIndex provides robust tools for ingesting and indexing proprietary data, which makes it especially powerful for RAG pipelines where agents need to retrieve and reason over documents.

🧑💻 CrewAI: A role-based orchestration framework designed to make multiple AI agents collaborate as a structured ‘crew.’ Inspired by the analogy of a human team, CrewAI lets you define specialized agent roles - Researcher, Writer, Validator, and more - with distinct goals, and have them work together under an event-driven workflow.

Framework and Maturity

To set some context on adoption and maturity, here’s a quick comparison of the two frameworks:

👀 Note: Data as of mid-2025; these numbers may evolve.

Despite being the younger project, CrewAI has grown extraordinarily fast – it averages over 1.3 million installs per month on PyPI and has amassed a large community of users in under a year.

LlamaIndex, having launched about a year earlier, enjoys a slightly larger GitHub following and has a proven track record in enterprise settings.

Both frameworks are independent of LangChain, meaning they’re built from the ground up and don’t require LangChain as a dependency.

LlamaIndex vs CrewAI: Features Comparison

Before drilling into details, here’s a high-level feature comparison between LlamaIndex and CrewAI:

Now, let’s unpack each of these features in more detail and see how LlamaIndex and CrewAI approach them.

Feature 1. Workflow Model

The workflow model defines how tasks and logic are structured and executed. Both LlamaIndex and CrewAI have embraced event-driven architectures, but they arrived there from opposite starting points to solve different initial problems.

LlamaIndex

LlamaIndex's Workflow engine is an event-driven abstraction designed to overcome the limitations of more rigid, graph-based structures like Directed Acyclic Graphs (DAGs). While DAGs are effective for linear data pipelines, they cannot handle the loops and conditional logic essential for sophisticated agentic behaviors like self-correction and retries.

The Workflow model provides developers with a code-native way to build complex, stateful, and even cyclical agent logic. It operates on two core concepts:

- Steps: These are Python functions decorated with @step. Each step is a distinct unit of work in the workflow.

- Events: These are typed data classes that trigger steps. A step's function signature declares which event type it listens for and which event type it emits upon completion. The framework includes special StartEvent and StopEvent classes to manage the workflow's lifecycle.

This design allows developers to chain operations with a high degree of flexibility. For instance, a validation step can emit a ValidationErrorEvent that triggers an extraction step to run again, creating a self-correction loop that would be impossible in a traditional DAG.

LlamaIndex moved from a structured pipeline model to this flexible, event-driven one to better support the dynamic nature of agentic systems.
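To make the self-correction loop concrete, here is a minimal plain-Python sketch of the idea. It does not use LlamaIndex itself: the event classes, the HANDLERS table, and the run function are hypothetical stand-ins I made up to show how typed events can route control back to an earlier step, which a strict DAG cannot express.

```python
from dataclasses import dataclass

# Hypothetical event types for illustration only; these are NOT LlamaIndex classes.
@dataclass
class StartEvent:
    text: str

@dataclass
class ExtractedEvent:
    source: str
    value: str
    attempt: int

@dataclass
class ValidationErrorEvent:
    text: str
    attempt: int

@dataclass
class StopEvent:
    result: str

def extract(ev):
    """Handles StartEvent and ValidationErrorEvent; emits ExtractedEvent."""
    attempt = getattr(ev, "attempt", 0) + 1
    # Pretend the first attempt is sloppy and keeps surrounding whitespace.
    value = ev.text if attempt == 1 else ev.text.strip()
    return ExtractedEvent(source=ev.text, value=value, attempt=attempt)

def validate(ev):
    """Handles ExtractedEvent; loops back on failure, otherwise stops."""
    if ev.value != ev.value.strip() and ev.attempt < 3:
        return ValidationErrorEvent(text=ev.source, attempt=ev.attempt)
    return StopEvent(result=ev.value)

# Each event type maps to the step that listens for it.
HANDLERS = {
    StartEvent: extract,
    ValidationErrorEvent: extract,
    ExtractedEvent: validate,
}

def run(text):
    event, trace = StartEvent(text), []
    while not isinstance(event, StopEvent):
        trace.append(type(event).__name__)
        event = HANDLERS[type(event)](event)
    return event.result, trace
```

Calling run("  42 ") goes through extract twice: the first result fails validation, the error event sends control back to extraction, and the second attempt passes.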

📚 Also read about LlamaIndex vs LangGraph

CrewAI

CrewAI offers a dual approach to workflows, allowing developers to choose between high autonomy and high precision.

- Crews: This is the original, high-level abstraction for autonomous collaboration. A Crew consists of multiple agents that work together based on a predefined process, like Process.sequential or Process.hierarchical. In this model, the framework manages the task delegation and agent interaction implicitly, which is excellent for rapid prototyping of collaborative systems.

- Flows: For tasks that require deterministic control and auditability, CrewAI introduced Flows. This is a granular, event-driven orchestration layer that looks similar to LlamaIndex's model. It uses decorators like @start to initiate a workflow and @listen to have one step react to the output of another.

This dual system reflects CrewAI's evolution. It started with a highly autonomous agent model and later added a structured, event-driven layer to give developers the precise control needed for enterprise-grade automations.
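The decorator style used by Flows can be illustrated with a toy re-implementation. The start and listen functions and the MiniFlow class below are my own simplified stand-ins, not CrewAI's code, and they only handle a straight chain of steps. The point is to show how "this step listens to that one" can be declared next to the function it affects.

```python
# Toy stand-ins for the decorator style described above; NOT CrewAI's implementation.
def start(fn):
    fn._is_start = True
    return fn

def listen(upstream):
    def decorator(fn):
        # Remember which step's output this step reacts to.
        fn._listens_to = upstream.__name__
        return fn
    return decorator

class MiniFlow:
    def kickoff(self):
        steps = [v for v in vars(type(self)).values() if callable(v)]
        current = next(s for s in steps if getattr(s, "_is_start", False))
        output = current(self)
        while True:
            following = next(
                (s for s in steps if getattr(s, "_listens_to", None) == current.__name__),
                None,
            )
            if following is None:
                return output
            output = following(self, output)
            current = following

class ReportFlow(MiniFlow):
    @start
    def fetch_topic(self):
        return "agent frameworks"

    @listen(fetch_topic)
    def draft(self, topic):
        return f"Draft about {topic}"

    @listen(draft)
    def review(self, text):
        return text + " (reviewed)"

final_text = ReportFlow().kickoff()
```

Because every hop is an explicit function call, the execution order is deterministic and easy to audit, which is the trade-off Flows make against the more autonomous Crews.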

Bottom line: LlamaIndex suits developers who want maximum flexibility and code-native event loops for RAG-style or dynamic agent workflows.

CrewAI is better if you need a higher-level crew abstraction with clear roles and an optional Flows engine for precise orchestration in enterprise-grade, multi-agent processes.

📚 Also read about CrewAI vs AutoGen

Feature 2. Multi-Agent Functionality

How frameworks manage the interaction between multiple agents is a critical differentiator. LlamaIndex provides the building blocks for developers to construct their own systems, while CrewAI offers a more opinionated, out-of-the-box solution.

LlamaIndex

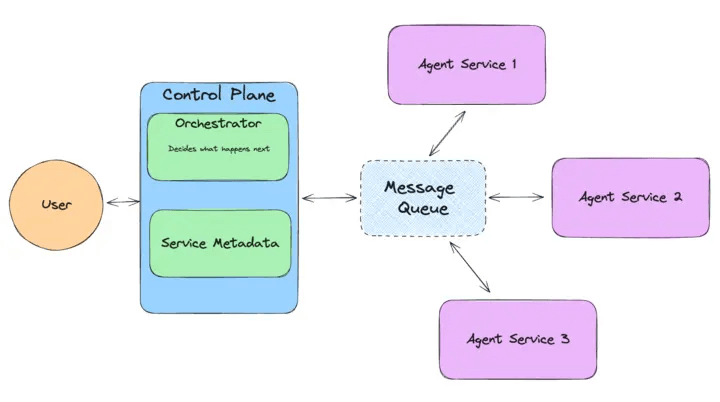

While LlamaIndex began primarily as a single-agent RAG framework, it has grown to support multi-agent scenarios through its AgentWorkflow and the newer LlamaDeploy module.

In LlamaIndex, you can certainly have multiple agents or tools working together, but the paradigm is a bit different from CrewAI’s crew-of-peers model.

Typically, LlamaIndex employs a central orchestrator agent that delegates tasks to other agents or tools as needed. This is similar to a manager-worker pattern: one agent decides what needs to be done, and calls on sub-agents to do it.

In June 2024, the LlamaIndex team announced llama-agents (now LlamaDeploy), which lets every agent run as an independent microservice, with a message queue for communication and an LLM-driven orchestrator to route messages.

Here's a simple example of how to set up a basic multi-agent system using llama-agents:

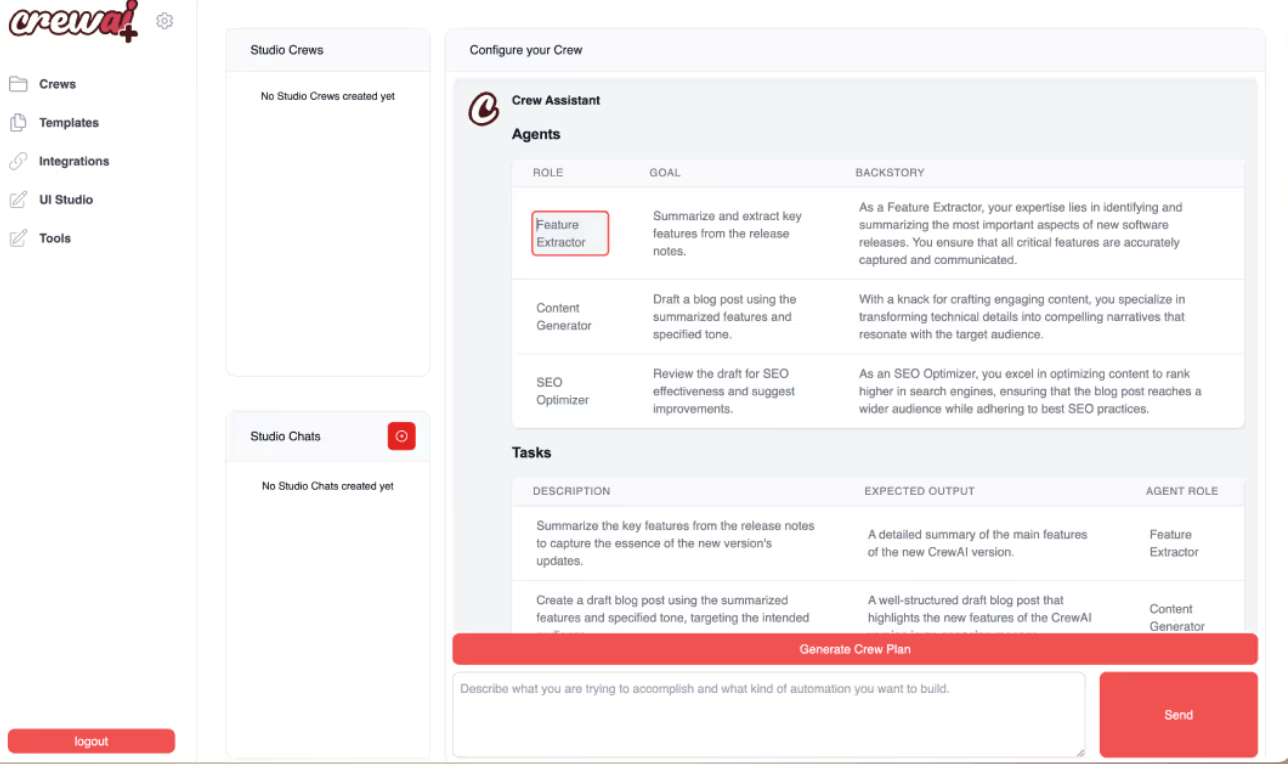

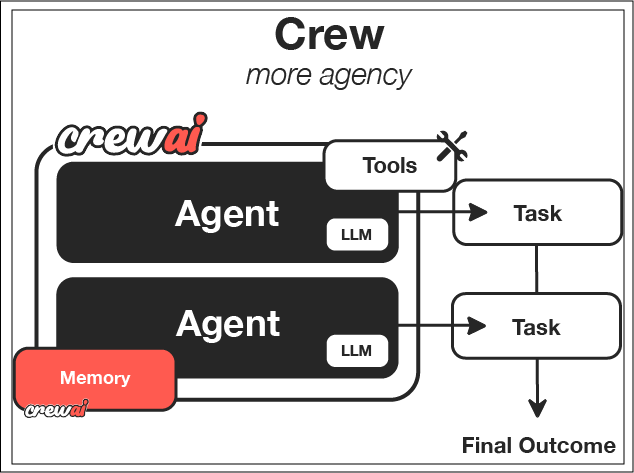
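For readers who want the orchestrator-plus-queue pattern in text form, here is a plain-Python sketch of the idea. It is not llama-agents or LlamaDeploy code: the agents are ordinary functions, the queue is an in-memory deque, and the route function is a keyword rule standing in for the LLM-driven orchestrator. All names are hypothetical.

```python
from collections import deque

# Worker "agents" are plain functions here; in a real deployment each would be a service.
def research_agent(payload):
    return f"notes on {payload}"

def math_agent(payload):
    return str(sum(int(part) for part in payload.split("+")))

AGENTS = {"research": research_agent, "math": math_agent}

def route(task):
    """Stand-in for the LLM-driven orchestrator: a keyword rule picks the worker."""
    return "math" if "+" in task else "research"

def run(tasks):
    # Every task first goes to the orchestrator, which re-queues it for a worker.
    queue = deque({"to": "orchestrator", "body": task} for task in tasks)
    results = []
    while queue:
        message = queue.popleft()
        if message["to"] == "orchestrator":
            queue.append({"to": route(message["body"]), "body": message["body"]})
        else:
            worker = AGENTS[message["to"]]
            results.append((message["to"], worker(message["body"])))
    return results

results = run(["2+3", "vector stores"])
```

The workers never call each other directly. They only see messages addressed to them, which is what makes it possible to move each one into its own process later.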

CrewAI

Multi-agent collaboration is the core of CrewAI. From the ground up, CrewAI is built on the idea that multiple agents will work together as a crew. This means when you use CrewAI, you’re inherently designing a system with multiple specialized agents.

Each agent in a crew has a role, some knowledge or toolset, and possibly a backstory or persona that informs its behavior. For instance, you might define one agent as a ‘Code Writer’, another as a ‘Code Reviewer’, and another as a ‘DevOps Deployer’ if you were automating a coding task. These agents then collaborate to fulfill the overall goal of the crew.

The way CrewAI orchestrates this is through an event-driven engine that manages agent interactions according to the workflow you defined.

You explicitly assign tasks to each agent role, and CrewAI ensures the right agent picks up the right task at the right time.

Agents can also communicate via shared memory or intermediate artifacts: for example, the Researcher agent might produce a report that the Writer agent then uses.

CrewAI encourages thinking of agents as team members in a project: they can even delegate tasks to each other if allowed, and they run in the order set by the crew's Process (sequential or hierarchical), with additional support for asynchronous and conditional tasks.
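The role-and-task model can be sketched without the library. The Agent and Task dataclasses and the run_sequential helper below are hypothetical simplifications (real CrewAI agents wrap an LLM, tools, and a backstory), but they show how each task is bound to a role and how one agent's output becomes the next agent's context.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical, simplified stand-ins for the crew concepts; not CrewAI classes.
@dataclass
class Agent:
    role: str
    goal: str
    act: Callable[[str, str], str]  # (task description, context) -> output

@dataclass
class Task:
    description: str
    agent: Agent

def run_sequential(tasks):
    """Run tasks in order, handing each output to the next task as context."""
    context, log = "", []
    for task in tasks:
        context = task.agent.act(task.description, context)
        log.append((task.agent.role, context))
    return context, log

researcher = Agent("Researcher", "Collect facts", lambda desc, ctx: f"facts for '{desc}'")
writer = Agent("Writer", "Write the report", lambda desc, ctx: f"report based on {ctx}")

final, log = run_sequential([
    Task("compare agent frameworks", researcher),
    Task("write summary", writer),
])
```

Here the Writer never sees the original request, only the Researcher's artifact, which mirrors the report hand-off described above.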

Bottom line: LlamaIndex is ideal for teams that prefer building custom multi-agent patterns around a central orchestrator or distributed microservices.

CrewAI is purpose-built for collaborative, multi-agent setups, offering role-based crews and structured task delegation, which makes it easier to model team-like agent behavior.

Feature 3. Human-In-the-Loop

Incorporating human oversight is crucial for building reliable and safe agentic systems. These two frameworks integrate Human-in-the-Loop (HITL) capabilities in ways that reflect their core design philosophies.

LlamaIndex

In real-world applications, full agent autonomy isn’t always desirable – sometimes you need a human check. LlamaIndex doesn’t have a one-click ‘pause for human’ feature, but it supports HITL interactions through its event system.

Essentially, you can create a special tool or step in a workflow that waits for a human response before proceeding.

You can emit an InputRequiredEvent when human confirmation is needed, and then halt the workflow until a corresponding HumanResponseEvent is received.

For example, imagine an agent that’s about to execute a potentially risky action, say, deleting records. You could have it emit an event ‘Are you sure? (yes/no)’ which no automated step consumes – effectively a signal that a human needs to intervene.

Your application could catch this event and present it to a user. When the user responds (yes or no), you send a HumanResponseEvent back into the workflow, which unblocks the agent and lets it continue based on the human input.

The main downside is that you have to write this wiring logic yourself.
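A rough sketch of that wiring, in plain Python rather than LlamaIndex, might look like the following. The InputRequired and HumanResponse classes are hypothetical stand-ins that mirror the idea of the two events, and a generator plays the role of the paused workflow. The ask_human callback is where your UI or CLI prompt would go.

```python
from dataclasses import dataclass

# Hypothetical stand-ins that mirror the idea of the two events; not LlamaIndex classes.
@dataclass
class InputRequired:
    prompt: str

@dataclass
class HumanResponse:
    response: str

def delete_records_workflow(record_ids):
    # Execution pauses at this yield until the application sends a HumanResponse.
    reply = yield InputRequired(f"Delete {len(record_ids)} records? (yes/no)")
    if reply.response.strip().lower() == "yes":
        return f"deleted {len(record_ids)} records"
    return "cancelled"

def run_with_human(workflow, ask_human):
    """Drive the workflow, forwarding every input request to a human callback."""
    try:
        event = next(workflow)
        while True:
            answer = ask_human(event.prompt)
            event = workflow.send(HumanResponse(answer))
    except StopIteration as done:
        return done.value

approved = run_with_human(delete_records_workflow([1, 2, 3]), lambda prompt: "yes")
rejected = run_with_human(delete_records_workflow([1, 2, 3]), lambda prompt: "no")
```

The risky action only runs after an explicit "yes", and nothing in the workflow itself knows how the human was asked.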

CrewAI

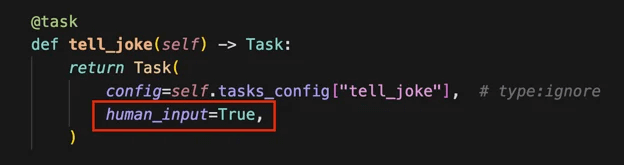

CrewAI takes a more out-of-the-box approach to human-in-the-loop functionality. The framework has a concept of tasks that can be designated for human input, which essentially means the agent crew will pause execution at that task and wait for a human to give the go-ahead or additional info.

Concretely, when you start a crew run, you can provide a webhook URL for human input. If a task requires human review, CrewAI will send a webhook notification containing the task details and output when it reaches that point, then put the crew in a ‘pending’ state.

At this point, your human operator (or perhaps a UI you built) can examine what the agents have done so far and decide how to proceed. The human might approve the result, modify it, or provide additional guidance. They then call a resume endpoint on the CrewAI system, essentially sending the human feedback and a signal to continue.

CrewAI will incorporate the feedback – it treats the human’s input as additional context for the task – and then resume the workflow from where it left off. This loop can repeat if the human gives negative feedback, causing the agents to retry the task with the new context. Once the human is satisfied and gives positive feedback, the crew moves on to subsequent tasks.
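The pause, review, and resume cycle is essentially a small state machine. The sketch below is a hypothetical model of it in plain Python, not CrewAI's API: ReviewableTask, its run and resume methods, and the notify callback (standing in for the webhook call) are all names I invented for illustration.

```python
class ReviewableTask:
    """Toy model of a task that waits for human review before completing."""

    def __init__(self, produce):
        self.produce = produce  # callable(feedback_history) -> output
        self.feedback = []
        self.state = "idle"
        self.output = None

    def run(self, notify):
        # Produce an output using all feedback so far, then wait for review.
        self.output = self.produce(self.feedback)
        self.state = "pending"
        notify({"output": self.output, "attempt": len(self.feedback) + 1})

    def resume(self, approved, feedback=""):
        if self.state != "pending":
            raise RuntimeError("task is not waiting for review")
        if approved:
            self.state = "done"
            return self.output
        # Negative feedback becomes extra context for the next attempt.
        self.feedback.append(feedback)
        self.state = "idle"
        return None

sent = []  # stands in for webhook deliveries
task = ReviewableTask(lambda history: f"draft v{len(history) + 1}")
task.run(sent.append)
task.resume(approved=False, feedback="too short")
task.run(sent.append)
result = task.resume(approved=True)
```

The first rejection triggers a retry with the reviewer's comment attached, and only the approval moves the task to its final state.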

Bottom line: LlamaIndex enables flexible HITL via custom events, giving developers fine-tuned control but requiring extra wiring.

CrewAI provides built-in human-review checkpoints through webhooks and task pauses, making it a stronger fit for teams that need immediate oversight and smoother human-agent collaboration with minimal setup.

Feature 4. Guardrails and Testing

Ensuring the quality and reliability of agent outputs is another critical aspect of building production-ready systems. LlamaIndex's tools are highly analytical and focus on information quality, whereas CrewAI's tools are more operational and focus on execution performance.

LlamaIndex

LlamaIndex provides a robust suite of LLM-based evaluation modules designed primarily for assessing the quality of RAG and agent outputs. These tools perform a post-hoc analysis to validate the information generated by the system. Key modules include:

- FaithfulnessEvaluator: Checks if the agent's response is factually grounded in the retrieved context, helping to detect hallucinations.

- RelevancyEvaluator: Measures whether the retrieved documents and the final response are relevant to the user's original query.

- CorrectnessEvaluator: Compares the agent's answer to a ground-truth or reference answer to determine its correctness.

These evaluation tools are invaluable for debugging RAG pipelines and systematically improving the factual accuracy of knowledge-based agents. LlamaIndex's approach essentially asks, ‘Is the answer correct and faithful to the source data?’
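To show what these checks measure, here is a deliberately crude sketch that swaps the LLM judge for simple token overlap. This is not how LlamaIndex's evaluators work internally, and the function names, thresholds, and scoring rules are my own assumptions. It only illustrates the difference between grading against the retrieved context and grading against a reference answer.

```python
import re

def _tokens(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def faithfulness(response, context, threshold=0.8):
    """Share of response tokens that also appear in the retrieved context."""
    resp = _tokens(response)
    score = len(resp & _tokens(context)) / len(resp) if resp else 0.0
    return {"score": score, "passing": score >= threshold}

def correctness(response, reference, threshold=0.8):
    """Jaccard overlap between the response and a reference answer."""
    a, b = _tokens(response), _tokens(reference)
    union = a | b
    score = len(a & b) / len(union) if union else 0.0
    return {"score": score, "passing": score >= threshold}

context = "LlamaIndex was released under the MIT license."
grounded = faithfulness("LlamaIndex uses the MIT license", context)
hallucinated = faithfulness("LlamaIndex costs 500 dollars", context)
exact = correctness("Paris", "paris")
```

A lexical score like this misses paraphrases and negations, which is exactly why the real evaluators rely on an LLM to make the judgment.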

CrewAI

CrewAI's approach to quality is more holistic and performance-oriented. It offers a dedicated testing framework through its command-line interface.

The crewai test command runs an entire crew for a specified number of iterations and generates a performance report. This report includes quality scores (from 1-10) for each task and agent, as well as the average execution time. This helps developers identify bottlenecks or underperforming agents within the collaborative workflow.

Additionally, CrewAI offers a crewai train command, which facilitates an interactive training session where a human can provide feedback to improve agent performance over multiple runs. CrewAI's testing framework asks, ‘Did the team perform its job well and efficiently?’ This reflects its focus on the overall success of a collaborative process.
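The kind of report described above (repeat the run, score each output, average the timings) can be approximated in a few lines. The evaluate_crew function below is a hypothetical stand-in, not the crewai test command. It takes a run_crew callable and a score callable as assumptions, since a real setup would call the crew and an LLM grader instead.

```python
import time
from statistics import mean

def evaluate_crew(run_crew, score, iterations=3):
    """Run the crew several times and average quality (1-10) and duration."""
    scores, durations = [], []
    for i in range(iterations):
        started = time.perf_counter()
        output = run_crew(i)
        durations.append(time.perf_counter() - started)
        # Clamp the grader's score into the 1-10 range used in the report.
        scores.append(max(1, min(10, score(output))))
    return {
        "iterations": iterations,
        "avg_score": mean(scores),
        "avg_seconds": mean(durations),
    }

# Toy crew whose output grows each iteration, graded by length.
report = evaluate_crew(lambda i: "x" * (i + 1) * 4, lambda out: len(out), iterations=3)
```

Averaging over several iterations matters because agent outputs are non-deterministic, so a single run says little about typical quality or latency.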

Bottom line: LlamaIndex emphasizes output quality and factual correctness with built-in evaluators, making it powerful for RAG debugging and knowledge validation.

CrewAI emphasizes workflow performance and iterative improvement, offering native testing and training commands, making it ideal for refining multi-agent task execution in production-ready settings.

LlamaIndex vs. CrewAI: Integration Capabilities

An agent framework's value is significantly enhanced by its ability to connect to external tools, data sources, and services.

LlamaIndex

LlamaIndex boasts one of the most extensive integration ecosystems in the agentic AI space. Its primary hub for integrations is LlamaHub, a community-driven repository with over 300 connectors. These integrations cover a vast range of categories:

- Data Loaders: Connectors for hundreds of data sources, including APIs (Slack, Notion, Salesforce), file formats (PDF, PowerPoint), and databases (SQL, NoSQL).

- LLMs and Embedding Models: Support for nearly every major provider, including OpenAI, Anthropic, Google, Mistral, and open-source models via Hugging Face and Ollama.

- Vector Stores: Dozens of integrations with vector databases like Pinecone, Weaviate, Chroma, and Milvus.

This makes LlamaIndex a powerful and flexible data integration layer, allowing developers to build agents on top of virtually any existing data stack.

CrewAI

CrewAI comes with its own integrations and also works with external Python libraries. It natively includes a library of 40+ built-in tools, and its listed integrations span the following categories:

- LLMs: Groq, OpenAI, Anthropic

- Services: Revium, RagaAI, StartSE

- Education: PWC, DeepLearning, K2 Consulting

- Applications: Composio, Chroma, Cloudera

- Integrations: Notion, Slack, Replit

- Infrastructure: Microsoft Azure, MongoDB, Nexla

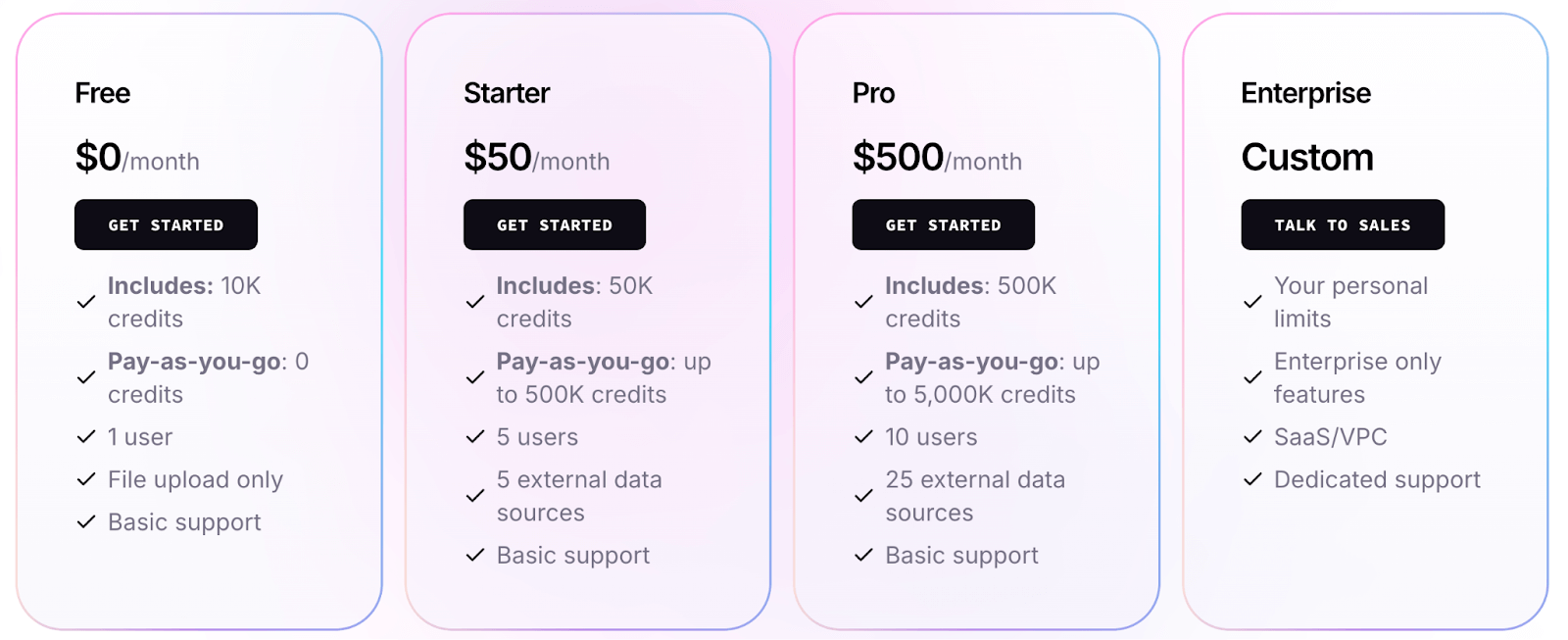

LlamaIndex vs CrewAI: Pricing

Both frameworks are built on a strong open-source foundation, but they also offer managed cloud platforms with different pricing models.

LlamaIndex

The open-source LlamaIndex library is MIT-licensed and free to use. You can pip install llama-index and build with it locally or on your own servers without paying anything (aside from costs of the underlying LLM API calls and infrastructure).

LlamaIndex also provides a hosted platform with additional managed features on top of the open-source library. Its pricing (as of 2025) is credit-based:

- Free tier: $0, includes 10k credits (enough to try it out on smaller workloads).

- Starter plan: $50 per month, includes 50k credits.

- Pro plan: $500 per month, includes 500k credits.

- Enterprise plan: Custom pricing
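A quick way to reason about these tiers is to compute the smallest plan that covers a monthly credit budget. The sketch below uses only the figures listed above and assumes, for simplicity, that credits cannot be topped up within a plan; the function names are my own.

```python
# (plan name, USD per month, included credits), taken from the list above.
PLANS = [
    ("Free", 0, 10_000),
    ("Starter", 50, 50_000),
    ("Pro", 500, 500_000),
]

def cheapest_plan(monthly_credits):
    """Return the first listed plan whose included credits cover the need."""
    for name, price, credits in PLANS:
        if monthly_credits <= credits:
            return name, price
    return "Enterprise", None  # custom pricing, so no number to report

def usd_per_1k_credits(price, credits):
    return price / (credits / 1000)

plan_for_30k = cheapest_plan(30_000)
starter_rate = usd_per_1k_credits(50, 50_000)
pro_rate = usd_per_1k_credits(500, 500_000)
```

Worth noticing: on these list prices the Starter and Pro plans both work out to $1 per 1,000 credits, so the jump between them buys volume rather than a lower unit price.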

CrewAI

CrewAI’s core framework is also MIT-licensed and open-source. Its managed platform, however, offers several paid plans:

- Basic: $99 per month

- Standard: $6,000 per year

- Pro: $12,000 per year

- Enterprise: $60,000 per year

- Ultra: $120,000 per year

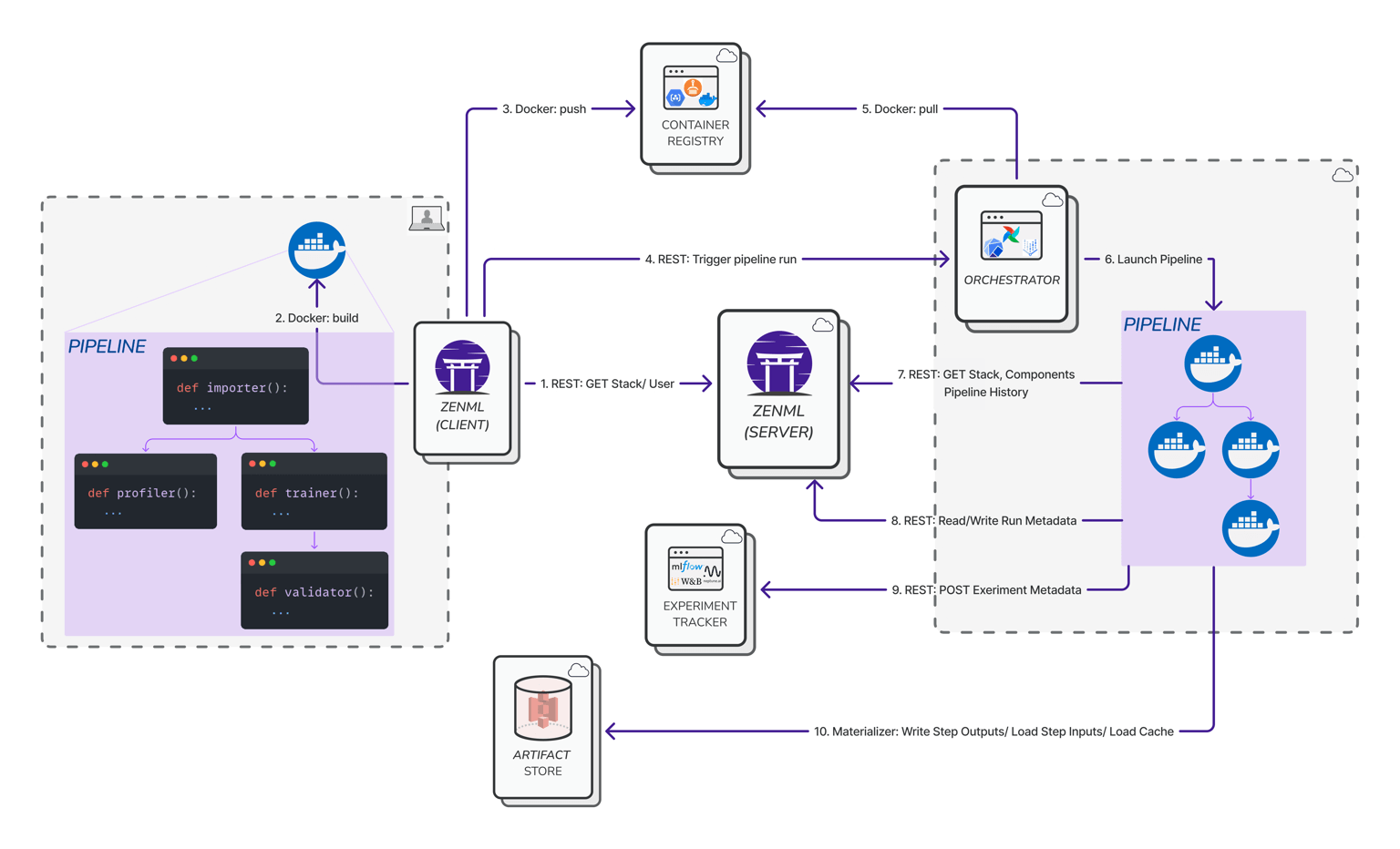

How ZenML Helps In Closing the Outer Loop Around Your Agents

By now, it’s clear that LlamaIndex and CrewAI can help you build sophisticated AI agents. However, they mainly tackle what I think of as the ‘inner loop’ of development – designing and running the agent’s reasoning process.

When it comes to the ‘outer loop’ – things like orchestrating the overall ML pipeline, deploying to different environments, data versioning, and monitoring over time – this is where ZenML comes into play.

Here are the features our product offers that will help you complete your agentic AI journey.

1. Pipeline Orchestration

ZenML lets you embed your LlamaIndex or CrewAI agents into a larger workflow (for data preprocessing, post-processing, model training, etc.) defined as a ZenML pipeline.

For example, you can have a pipeline step that spins up a CrewAI agent to analyze data and return an output, then feed that into another step for evaluation.

ZenML handles the scheduling and running of these pipelines so you can incorporate agentic steps alongside traditional ML steps seamlessly.
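Conceptually, the agent becomes just one step among others. The sketch below shows that shape in plain Python and deliberately avoids ZenML's own decorators: run_pipeline, the step functions, and the lambda standing in for a CrewAI or LlamaIndex agent are all hypothetical placeholders.

```python
def load_data():
    return ["latency is high", "costs are stable"]

def agent_step(docs, agent=lambda docs: f"summary of {len(docs)} notes"):
    # The default lambda is a placeholder for a real agent call.
    return agent(docs)

def evaluate(summary):
    return {"summary": summary, "non_empty": bool(summary)}

def run_pipeline(first, *rest):
    """Run steps in order, feed each output to the next, and keep every artifact."""
    value = first()
    artifacts = {first.__name__: value}
    for step in rest:
        value = step(value)
        artifacts[step.__name__] = value
    return artifacts

artifacts = run_pipeline(load_data, agent_step, evaluate)
```

Keeping each step's output as a named artifact is what later makes it possible to inspect or rerun a single stage without touching the agent code.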

2. Experiment Tracking and Visibility

When you run agents through ZenML, you get integrated experiment tracking. ZenML will log parameters, outputs, and metrics from your agents, which means you can compare different runs easily.

This is especially useful when you’re tweaking prompts or workflow designs – ZenML can help you keep track of which version of your agent performed better and why.

Essentially, you gain visibility over the entire process: not just what the agent did, but how it fits into the bigger picture.

3. Reproducibility and Versioning

ZenML’s philosophy is to make ML and LLM workflows reproducible. If you have a ZenML pipeline that includes your agent, you can version the pipeline and pipeline runs.

Later, if your agent starts behaving oddly, you can trace back to which pipeline (with which code, data, and model versions) produced the result.

LlamaIndex and CrewAI by themselves don’t track this outer context – they focus on the agent logic – so ZenML fills that gap, ensuring you know exactly what code and inputs went into an agent’s decision.
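One simple way to picture this outer context is as a fingerprint over everything that influenced a run. The sketch below is a generic illustration with hashlib, not ZenML's versioning mechanism; the fingerprint function and its fields are assumptions chosen for the example.

```python
import hashlib
import json

def fingerprint(prompt, model, inputs, code_version):
    """Derive a short, stable ID from everything that shaped an agent run."""
    payload = json.dumps(
        {"prompt": prompt, "model": model, "inputs": inputs, "code": code_version},
        sort_keys=True,  # key order must not change the hash
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]

run_a = fingerprint("Summarize the report", "model-v1", {"doc_id": 7}, "abc123")
run_b = fingerprint("Summarize the report", "model-v1", {"doc_id": 7}, "abc123")
run_c = fingerprint("Summarize the report briefly", "model-v1", {"doc_id": 7}, "abc123")
```

Identical prompt, model, inputs, and code give the same ID, while changing any one of them gives a different ID, which is the property you need to trace an odd result back to its cause.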

4. Combining Tools

Perhaps one of the coolest things is that you’re not forced to choose either LlamaIndex or CrewAI when using ZenML. You can actually use both together if it makes sense.

For example, I’ve experimented with using LlamaIndex as a data retriever within a CrewAI flow – ZenML can orchestrate a pipeline that initializes a LlamaIndex query and passes the result into a CrewAI agent for further analysis.

What’s more, our product provides a unifying layer where multiple frameworks can cooperate. Need to use LangChain or AutoGen alongside these?

ZenML can integrate those, too, in the same pipeline. This way, you can leverage each tool for what it’s best at and not get locked into one paradigm.

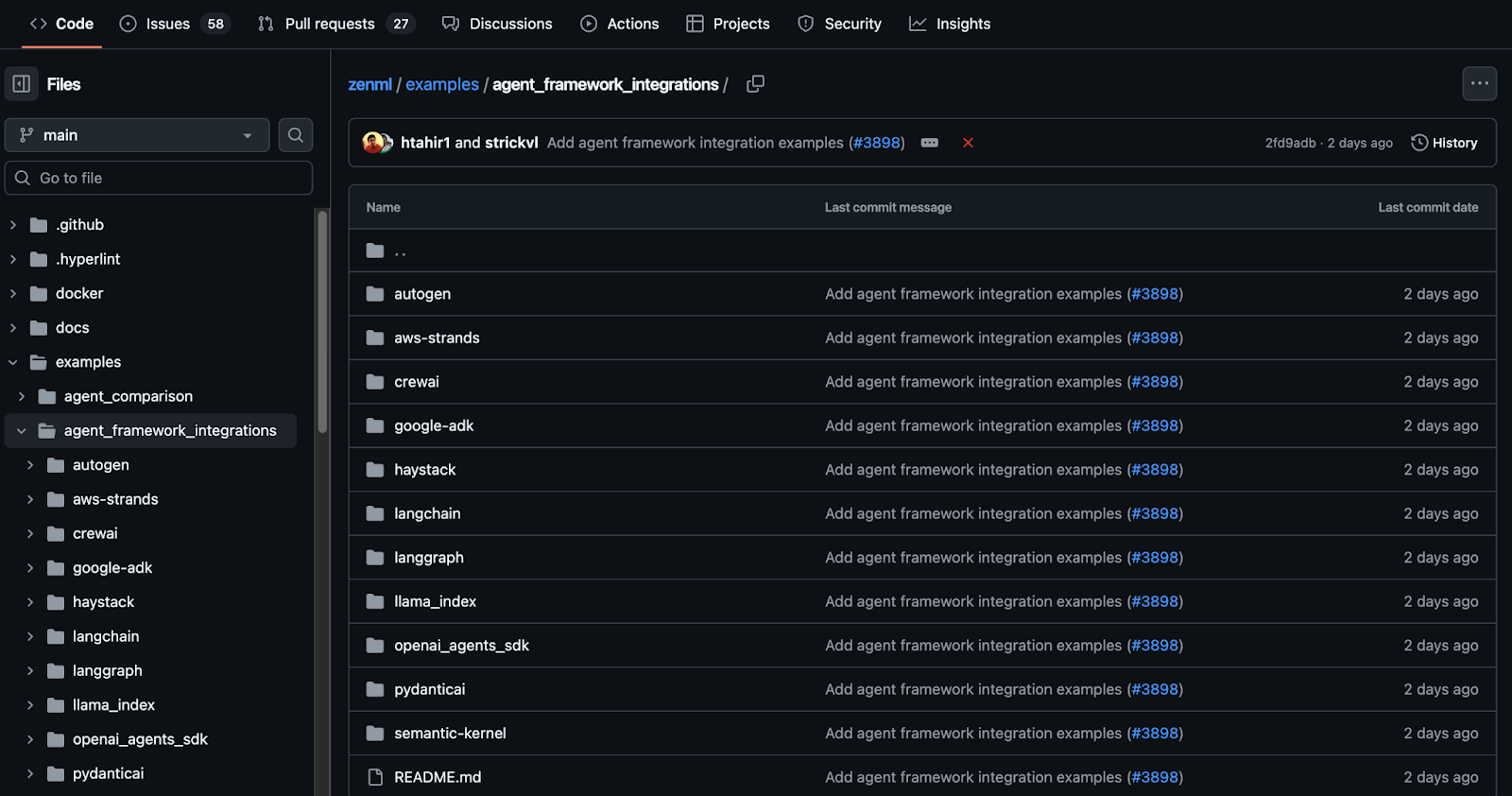

👀 Note: At ZenML, we have built several agent workflow integrations with tools like LlamaIndex, CrewAI, and more. We are actively shipping new integrations that you can find on this GitHub page: ZenML Agent Workflow Integrations.

In short, ZenML acts as the glue and guardrails around LlamaIndex and CrewAI. It doesn’t replace their functionality – rather, it complements it by managing the lifecycle and deployment of your agents.


Which AI Agent Builder Is the Best for You?

So, LlamaIndex or CrewAI? Ultimately, the best choice depends on your project’s needs and your preferred way of working. Here are some guidelines from my experience to help you decide:

✅ Choose LlamaIndex if your primary goal is to build data-aware LLM applications quickly and you need flexibility. It’s fantastic for scenarios like chatbots, Q&A systems, or assistants that need to pull information from custom documents and knowledge bases.

✅ Choose CrewAI if you need fine-grained control over multi-step reasoning or multi-agent interactions. For building complex agent workflows – say an AI system that plans tasks, calls various tools in parallel, iterates on results, and involves several AI specialists – CrewAI provides the structure and reliability to do it with confidence.

Of course, it’s not strictly either/or. You might start with LlamaIndex for a quick MVP and later introduce CrewAI if you need more agents working in tandem. Or use LlamaIndex inside a CrewAI agent for retrieval tasks. Thanks to ZenML, you can even run hybrid setups and see what works best, all within one unified pipeline.

One more thing: No matter which framework you pick, consider how you’ll manage the system in production. That’s where ZenML can help by bringing all your ML and LLM workflows – data prep, model tuning, RAG indexing, agent orchestration, monitoring – into one place.

If you’re interested in taking your AI agent projects to the next level, consider joining the ZenML waitlist. We’re building out first-class support for agentic frameworks (like LlamaIndex, CrewAI, LangGraph, and more) inside ZenML, and we’d love early feedback from users pushing the boundaries of what AI agents can do. With ZenML, you can seamlessly integrate whichever agent framework you choose into robust, production-grade workflows. Join our waitlist to get started.👇