Both LangGraph and AutoGen are application frameworks for building multi-agent systems and complex AI workflows. While both aim to simplify the creation of intelligent agent systems, they differ in design philosophy, feature sets, and integration options.

In this LangGraph vs AutoGen article, we break down the key differences in features, integrations, and pricing between the two platforms. We also discuss how you can leverage both LangGraph and AutoGen (with a tool like ZenML) to get the best of both worlds.

Let’s start by getting to know a little about both of these platforms.

LangGraph vs AutoGen: Key Takeaways

🧑💻 LangGraph: A framework from the LangChain team for building stateful, multi-agent AI applications as explicit graphs. The platform gives developers fine-grained control over agent workflows by representing each step as a node and connecting them with edges.

🧑💻 AutoGen: An open-source (MIT-licensed) multi-agent framework from Microsoft that treats agent interactions as a conversation rather than a static graph. It was one of the first frameworks for LLM agent collaboration and emphasizes dynamic, event-driven exchanges between agents.

Framework Maturity and Lineage

The maturity and development trajectory of these frameworks provide important context for adoption decisions:

👀 Note: The data in the table above is current as of 18 July 2025 and may change over time.

LangGraph launched a few months after AutoGen but has grown rapidly in adoption, as its higher monthly download numbers show. AutoGen has attracted more GitHub stars, while LangGraph shows more deployment activity, which suggests heavier production use.

Both frameworks maintain active development cycles, with LangGraph benefiting from the broader LangChain ecosystem and AutoGen receiving ongoing support from Microsoft Research. The choice between them often depends more on architectural preferences than maturity concerns.

LangGraph vs AutoGen: Features Comparison

The table below provides a high-level summary of the key differences between LangGraph and AutoGen, which will be explored in detail in the subsequent sections.

Feature 1. Multi-Agent Orchestration

Orchestration defines how agents, tools, and decisions flow within an application. LangGraph and AutoGen offer distinct models for managing these complex interactions.

LangGraph

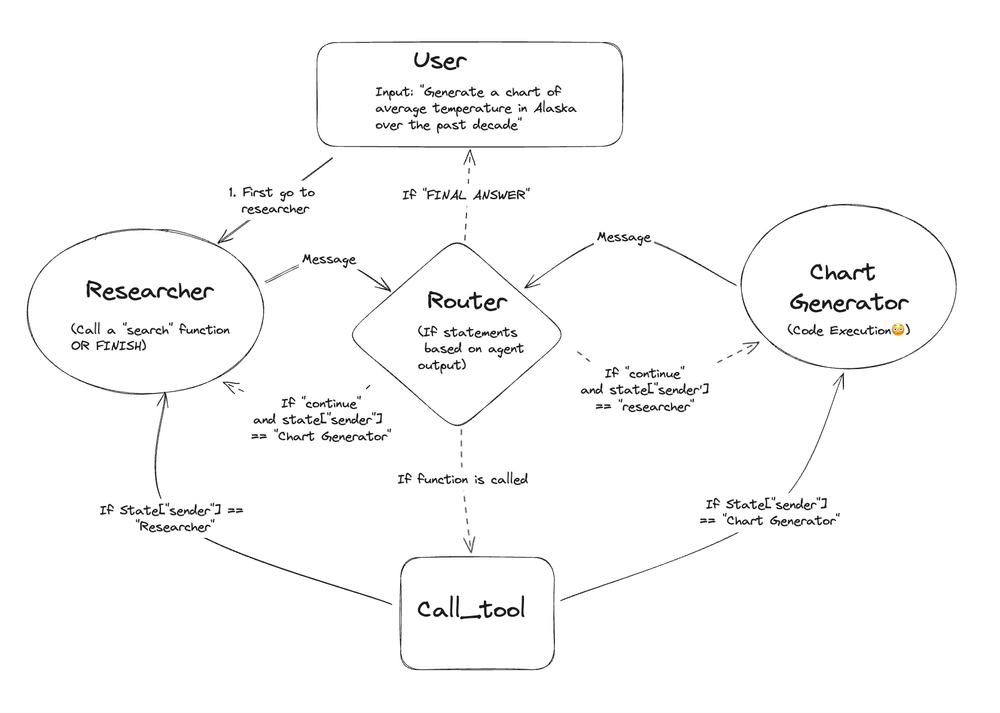

In LangGraph, a multi-agent system is constructed as an explicit state machine. Each agent, or a logical step, is represented as a node in a graph. The flow of control is managed by edges, which connect these nodes.

One particularly powerful feature of LangGraph is the conditional edge (added with add_conditional_edges), which acts as a router that directs the workflow to the next appropriate node based on the application's shared state. This paradigm is well suited to building hierarchical agent teams.

A common and effective pattern is to implement a ‘supervisor’ node that analyzes an incoming task and routes it to one of several specialized ‘worker’ nodes (for example, a researcher_agent or a coder_agent).

After a worker completes its sub-task, it returns control to the supervisor, which can then decide the next step, like routing to another worker or finishing the process. This architecture provides developers with full, predictable, and auditable control over the agentic workflow.

A conceptual implementation in LangGraph would involve defining the state, adding nodes for each agent, and wiring them together with edges.
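To make this concrete without depending on LangGraph, here is a plain-Python sketch of the same supervisor/worker pattern. It assumes a shared state dictionary, node functions that return partial state updates, and a `route` function that plays the role of a conditional edge out of the supervisor. All names are hypothetical and the workers are stubs rather than LLM calls; in LangGraph itself, the wiring would use `StateGraph.add_node`, `add_edge`, and `add_conditional_edges`.

```python
from typing import Callable, TypedDict

# Shared state passed between nodes (analogous to a LangGraph state schema).
class State(TypedDict):
    task: str
    done: list[str]
    log: list[str]

END = "__end__"

def supervisor(state: State) -> dict:
    # The supervisor does no work itself; it only records that it ran.
    return {"log": state["log"] + ["supervisor"]}

def researcher_agent(state: State) -> dict:
    # Stub worker: a real system would call an LLM or tools here.
    return {"done": state["done"] + ["research"], "log": state["log"] + ["researcher_agent"]}

def coder_agent(state: State) -> dict:
    return {"done": state["done"] + ["code"], "log": state["log"] + ["coder_agent"]}

def route(state: State) -> str:
    # Conditional edge: pick the next node from the shared state.
    if "research" not in state["done"]:
        return "researcher_agent"
    if "code" not in state["done"]:
        return "coder_agent"
    return END

NODES: dict[str, Callable[[State], dict]] = {
    "supervisor": supervisor,
    "researcher_agent": researcher_agent,
    "coder_agent": coder_agent,
}

def run_graph(task: str) -> State:
    state: State = {"task": task, "done": [], "log": []}
    current = "supervisor"
    while current != END:
        state = {**state, **NODES[current](state)}  # merge the node's update
        if current == "supervisor":
            current = route(state)  # conditional edge out of the supervisor
        else:
            current = "supervisor"  # fixed edge: workers report back
    return state

final = run_graph("Write a scraper for product prices")
print(final["log"])
# ['supervisor', 'researcher_agent', 'supervisor', 'coder_agent', 'supervisor']
```

Because every transition is decided either by a fixed edge or by `route`, the full set of possible paths can be read directly from the code, which is the predictability this section describes.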

📚 Related reading: LangGraph alternatives

AutoGen

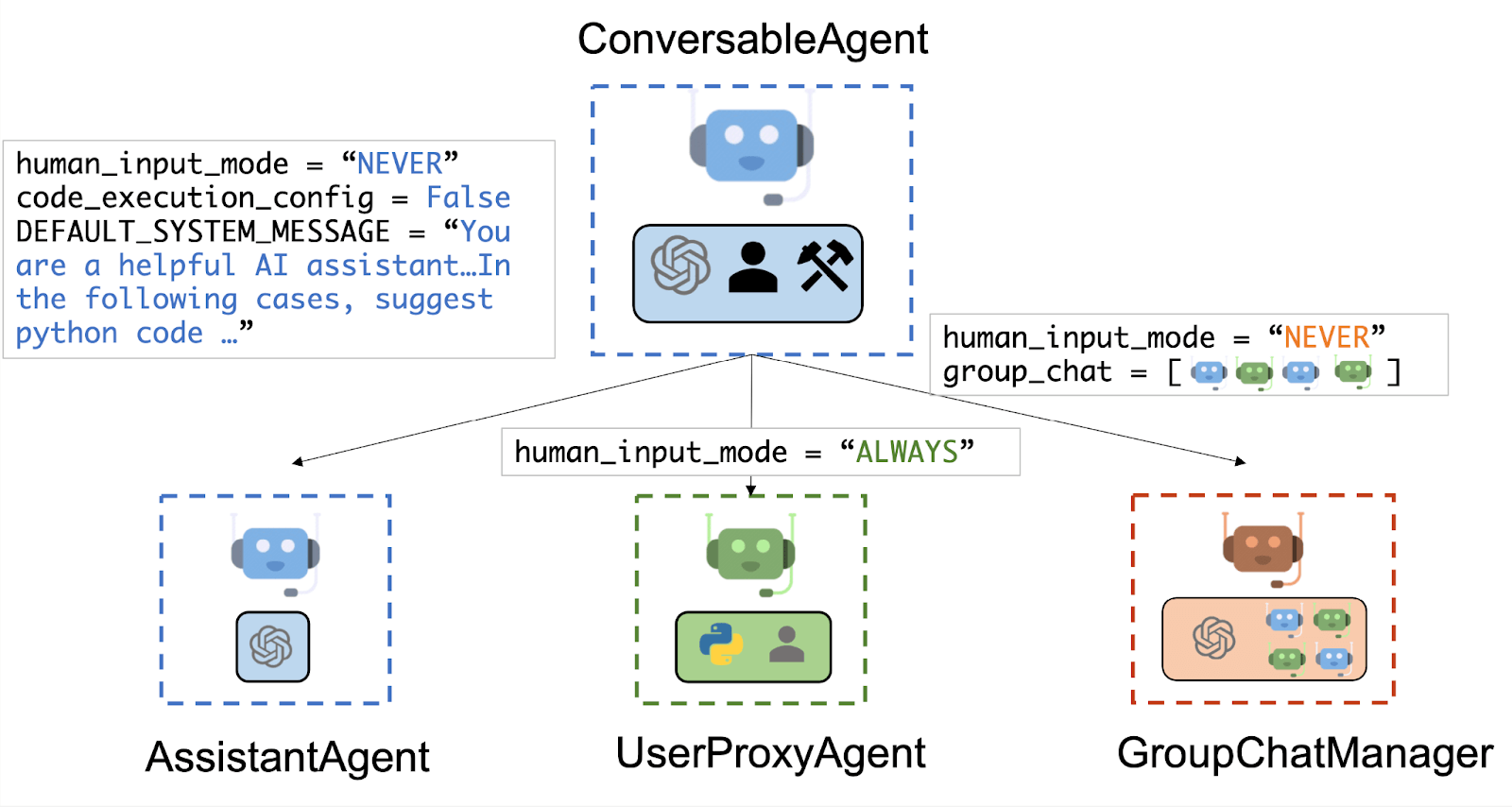

AutoGen orchestrates agents through structured conversational patterns rather than explicit graphs. The most common pattern is the GroupChat, which is managed by a GroupChatManager.

In this setup, you define a group of agents that take turns speaking as they work toward solving a task.

The framework relies on two primary agent types:

- AssistantAgent: the LLM-powered worker that performs tasks.

- UserProxyAgent: acts as a proxy for either a human user or an automated code executor.

The flow of the conversation is less about predefined routing and more about which agent is best suited to reply to the last message, a decision often made by the GroupChatManager (which can itself be powered by an LLM).

This allows for more dynamic and emergent problem-solving, where the path to a solution is discovered through collaboration rather than being hardcoded.

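A conceptual setup for a multi-agent chat in AutoGen involves defining the agents, adding them to a GroupChat, and handing that chat to a GroupChatManager, which selects the next speaker after each message.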
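The sketch below is not AutoGen code. It is a small, dependency-free model of the GroupChat idea: agents share one message history, and a manager function picks who speaks next after each message. All names are hypothetical, the agents are rule-based stubs instead of LLM-backed AssistantAgents, and the keyword-based `select_speaker` stands in for the LLM-driven speaker selection a GroupChatManager can perform.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Message:
    sender: str
    content: str

@dataclass
class Agent:
    name: str
    reply: Callable[[list[Message]], str]  # builds a reply from the shared history

def planner_reply(history: list[Message]) -> str:
    return "PLAN: fetch data, then write code"

def coder_reply(history: list[Message]) -> str:
    return "CODE: print('hello')"

def reviewer_reply(history: list[Message]) -> str:
    return "APPROVED"

def select_speaker(history: list[Message], agents: dict[str, Agent]) -> str:
    # Stand-in for LLM-based selection: react to the last message only.
    last = history[-1].content
    if last.startswith("PLAN"):
        return "coder"
    if last.startswith("CODE"):
        return "reviewer"
    return "planner"

def group_chat(task: str, agents: dict[str, Agent], max_rounds: int = 10) -> list[Message]:
    history = [Message("user", task)]
    for _ in range(max_rounds):
        speaker = agents[select_speaker(history, agents)]
        history.append(Message(speaker.name, speaker.reply(history)))
        if history[-1].content == "APPROVED":  # termination condition
            break
    return history

agents = {
    "planner": Agent("planner", planner_reply),
    "coder": Agent("coder", coder_reply),
    "reviewer": Agent("reviewer", reviewer_reply),
}
for msg in group_chat("Build a hello-world script", agents):
    print(f"{msg.sender}: {msg.content}")
```

The planner, coder, reviewer order is not wired anywhere; it emerges from how the selector reacts to each message, which is the conversational style described above.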

Bottom line: Choosing between the two orchestration models involves a trade-off:

LangGraph is ideal for building deterministic, reliable systems where the workflow must be predictable and auditable, such as in enterprise automation or regulated industries. Its state-machine nature makes it easier to debug and to predict how the system will behave.

AutoGen excels at building dynamic, emergent systems where the goal is to leverage the collective intelligence of agents without pre-defining every possible path. This is powerful for creative problem-solving, complex research, and scenarios where the solution path is unknown at the outset.

Feature 2. Human-in-the-loop Controls

When building AI agents, human oversight is crucial to validate, correct, and steer agentic systems. LangGraph and AutoGen provide this capability, but their implementations reflect their core philosophies.

LangGraph

LangGraph's approach to Human-in-the-Loop (HITL) is built directly on its persistence and state management capabilities.

It allows for surgical intervention at any node in the graph's execution. By passing interrupt_before or interrupt_after when compiling the graph, you can configure it to pause before or after specific nodes; inside a node, the interrupt() function pauses execution at that exact point.

When the graph is interrupted, a human can inspect the entire graph state: every message, every variable, every piece of data. They can then modify this state if needed and resume execution.

This is an incredibly powerful mechanism for debugging, course-correction, and adding approval gates for sensitive operations like executing code or calling a paid API.

In practice, LangGraph’s HITL capabilities let you implement patterns like:

- Approve or Reject: A human must approve an AI decision before proceeding.

- Edit state: A human can correct the agent’s intermediate results.

- Review tool outputs: A human can review outputs before they are used.
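Here is a plain-Python model of the approve/edit/resume cycle behind these patterns. It is not LangGraph's API: the `Runner` class, its `interrupt_before` set, and the snapshot dictionary are hypothetical stand-ins for a compiled graph, its interrupt configuration, and its checkpointer. The run pauses before a sensitive `send_payment` node (a stub; no real API is called), a human edits the saved state, and execution resumes from the pause point.

```python
import copy
from typing import Callable, Optional

Node = Callable[[dict], dict]

class Runner:
    """Runs nodes in a fixed order and can pause before selected nodes."""

    def __init__(self, nodes: list[tuple[str, Node]], interrupt_before: set[str]):
        self.nodes = nodes
        self.interrupt_before = interrupt_before
        self.snapshot: Optional[dict] = None  # saved state and position while paused

    def run(self, state: dict, start: int = 0, resuming: bool = False) -> dict:
        for i in range(start, len(self.nodes)):
            name, fn = self.nodes[i]
            # Pause before a guarded node, unless we are resuming at exactly that node.
            if name in self.interrupt_before and not (resuming and i == start):
                self.snapshot = {"state": copy.deepcopy(state), "next": i}
                return state
            state = {**state, **fn(state)}  # merge the node's update into the state
        self.snapshot = None  # finished: nothing left to resume
        return state

    def resume(self, edited_state: Optional[dict] = None) -> dict:
        if self.snapshot is None:
            raise RuntimeError("graph is not paused")
        state = self.snapshot["state"] if edited_state is None else edited_state
        return self.run(state, start=self.snapshot["next"], resuming=True)

def draft_payment(state: dict) -> dict:
    return {"amount": 500}  # stub for an LLM-proposed amount

def send_payment(state: dict) -> dict:
    return {"result": f"sent ${state['amount']}"}  # stub: no real API call

runner = Runner([("draft_payment", draft_payment), ("send_payment", send_payment)],
                interrupt_before={"send_payment"})
paused = runner.run({"invoice": "INV-1"})
print(paused)  # {'invoice': 'INV-1', 'amount': 500}

# A human reviews the proposal, corrects the amount, and approves.
edited = {**runner.snapshot["state"], "amount": 50}
final = runner.resume(edited)
print(final["result"])  # sent $50
```

Rejecting would simply mean never calling `resume`. In LangGraph, the comparable pause depends on a checkpointer being configured, since the saved state is what makes inspection, editing, and resumption possible.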

AutoGen

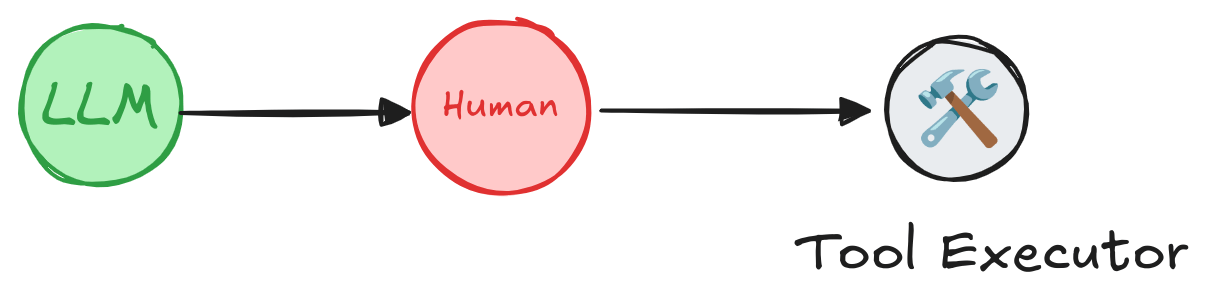

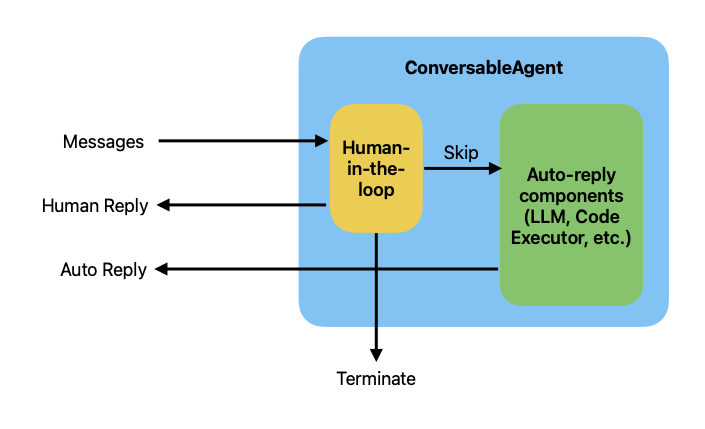

AutoGen also supports human involvement, but its model is different. Rather than pausing arbitrary code, AutoGen includes a special agent type, UserProxyAgent (effectively a human agent), that can be part of the agent team.

The idea is that one of the ‘agents’ in the conversation represents the human user or operator. When the system reaches a point where human input is needed, it hands control to this UserProxyAgent, which waits for actual user input before continuing.

Using a UserProxyAgent means you include a human in the agents' turn-taking.

For example, in a round-robin chat with [AssistantAgent, UserProxyAgent], the assistant eventually hands the turn to the user for a decision, and the chat waits for that input.

Once the human responds, the control returns to the AI agents, and the conversation proceeds.
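The round-robin idea can be sketched without AutoGen. Below, a hypothetical `HumanProxy` agent wraps an input function (Python's built-in `input` by default) so the chat blocks on the human's turn; an `Assistant` stub proposes a draft, and the conversation ends when the human replies "APPROVE". This mirrors the [AssistantAgent, UserProxyAgent] arrangement above, but the classes and the termination keyword are assumptions, not AutoGen's API.

```python
from itertools import cycle
from typing import Callable

class Assistant:
    name = "assistant"

    def reply(self, history: list[str]) -> str:
        # Stub for an LLM call: number the draft by how many turns have passed.
        return f"Draft v{len(history) // 2 + 1}: shall I send this email?"

class HumanProxy:
    name = "human"

    def __init__(self, ask: Callable[[str], str] = input):
        self.ask = ask  # blocks until the human answers

    def reply(self, history: list[str]) -> str:
        return self.ask(f"{history[-1]}\n> ")

def round_robin(task: str, agents: list, max_turns: int = 10) -> list[str]:
    history = [task]
    for agent, _ in zip(cycle(agents), range(max_turns)):
        message = agent.reply(history)
        history.append(f"{agent.name}: {message}")
        if agent.name == "human" and message.strip().upper() == "APPROVE":
            break  # human approved: control does not return to the assistant
    return history

# Scripted answers stand in for a real person so the example runs unattended.
answers = iter(["make it shorter", "APPROVE"])
log = round_robin("Email the weekly report",
                  [Assistant(), HumanProxy(lambda _prompt: next(answers))])
print(log[-1])  # human: APPROVE
```

For an interactive session, construct `HumanProxy()` with no arguments so each human turn reads from the terminal.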

Bottom line: From a developer’s perspective, LangGraph’s approach makes HITL feel like a natural extension of coding workflows. In fact, LangGraph’s interrupt() function is deliberately similar to Python’s built-in input(), except that it works asynchronously and is designed for production environments.

AutoGen’s human-in-the-loop method is suited to short interventions or interactive sessions where the user is actively monitoring the agent. It works best for immediate feedback, such as clicking ‘Approve’ or providing a quick answer.

Feature 3. State and Memory Management

An agent's ability to remember past interactions and learned facts is what keeps it useful across turns and sessions. LangGraph and AutoGen approach this critical feature from different angles.

LangGraph

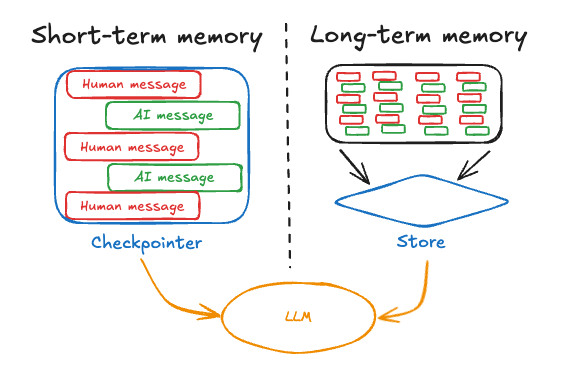

The state is a first-class citizen in LangGraph. The entire application is modeled as a StateGraph, and the state is explicitly defined and managed. The key to its power is the checkpointer, a component that automatically persists the graph's state after each step.

For development, you can use an InMemorySaver, but for production, robust backends like PostgresSaver or RedisSaver ensure that the agent's state is durable and can survive restarts or failures.
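The checkpointer idea is easy to model: after every step, the full state is written under a thread identifier, so a later process can pick up where an earlier one stopped. The sketch below uses Python's built-in `sqlite3` as the durable backend. The `SqliteCheckpointer` class, its `put`/`latest` methods, and `run_steps` are hypothetical and only illustrate the pattern that LangGraph's savers implement; `":memory:"` gives development-style behavior, while a file path would survive restarts.

```python
import json
import sqlite3
from typing import Callable, Optional

class SqliteCheckpointer:
    """Persists one state snapshot per (thread_id, step)."""

    def __init__(self, path: str = ":memory:"):
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS checkpoints ("
            "thread_id TEXT, step INTEGER, state TEXT, PRIMARY KEY (thread_id, step))"
        )

    def put(self, thread_id: str, step: int, state: dict) -> None:
        with self.conn:  # commits the transaction on success
            self.conn.execute(
                "INSERT OR REPLACE INTO checkpoints VALUES (?, ?, ?)",
                (thread_id, step, json.dumps(state)),
            )

    def latest(self, thread_id: str) -> Optional[tuple[int, dict]]:
        row = self.conn.execute(
            "SELECT step, state FROM checkpoints WHERE thread_id = ? "
            "ORDER BY step DESC LIMIT 1",
            (thread_id,),
        ).fetchone()
        return None if row is None else (row[0], json.loads(row[1]))

def run_steps(steps: list[Callable[[dict], dict]], cp: SqliteCheckpointer, thread_id: str) -> dict:
    # Resume after the last saved step for this thread, if there is one.
    saved = cp.latest(thread_id)
    start, state = (saved[0] + 1, saved[1]) if saved else (0, {"messages": []})
    for i in range(start, len(steps)):
        state = steps[i](state)
        cp.put(thread_id, i, state)  # persist after every step
    return state

def greet(state: dict) -> dict:
    return {"messages": state["messages"] + ["hello"]}

def answer(state: dict) -> dict:
    return {"messages": state["messages"] + ["42"]}

cp = SqliteCheckpointer()          # ":memory:" for development
run_steps([greet], cp, "thread-1")  # simulate a run that stopped after one step
final = run_steps([greet, answer], cp, "thread-1")
print(final)  # {'messages': ['hello', '42']}
```

On the second call, `greet` is not re-executed: the run resumes from the saved step, which is what makes state durable across failures.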

LangGraph also formalizes the concept of memory, distinguishing between:

- Short-Term Memory: The history of messages and data within a single conversational thread, managed automatically by the checkpointer.

- Long-Term Memory: Persistent knowledge stored across threads, categorized into semantic (facts), episodic (past experiences), and procedural (learned rules) memory.
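Long-term memory differs from the checkpointer in scope: it is keyed by something like a user rather than a thread, so any conversation can read it. The sketch below is a hypothetical in-memory store with namespaces; the `MemoryStore` class, the `(user, kind)` namespace layout, and the helper `build_system_prompt` are illustrative assumptions, and only semantic and procedural memories are shown.

```python
from collections import defaultdict
from typing import Optional

Namespace = tuple[str, ...]

class MemoryStore:
    """Cross-thread memory keyed by namespaces such as ("user-1", "semantic")."""

    def __init__(self) -> None:
        self._data: dict[Namespace, dict[str, str]] = defaultdict(dict)

    def put(self, namespace: Namespace, key: str, value: str) -> None:
        self._data[namespace][key] = value

    def get(self, namespace: Namespace, key: str) -> Optional[str]:
        return self._data.get(namespace, {}).get(key)

    def get_all(self, namespace: Namespace) -> dict[str, str]:
        return dict(self._data.get(namespace, {}))

store = MemoryStore()
user = "user-1"

# Thread A: the agent learns a fact (semantic) and a rule (procedural).
store.put((user, "semantic"), "diet", "vegetarian")
store.put((user, "procedural"), "tone", "answer briefly")

def build_system_prompt(store: MemoryStore, user: str) -> str:
    # Thread B, later: a new conversation reads the same memories.
    facts = store.get_all((user, "semantic"))
    rules = store.get_all((user, "procedural"))
    lines = [f"Known fact: {k} = {v}" for k, v in facts.items()]
    lines += [f"Rule: {v}" for v in rules.values()]
    return "\n".join(lines)

print(build_system_prompt(store, user))
```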

This structure is designed for building truly stateful agents that can maintain context over long periods and learn from interactions.

AutoGen

AutoGen's memory system is primarily designed to support Retrieval-Augmented Generation (RAG) workflows. It uses a flexible Memory protocol that allows agents to retrieve relevant information from a knowledge source and add it to their context before generating a response.
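The retrieve-then-respond flow can be sketched with a `typing.Protocol`. The interface below is a simplified, synchronous stand-in loosely shaped like a memory protocol with add and query operations; it does not reproduce AutoGen's actual signatures. `KeywordMemory` and `update_context` are hypothetical, and the naive word-overlap scoring replaces the embedding search a vector-store-backed memory would use.

```python
import re
from typing import Protocol

def _words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

class Memory(Protocol):
    def add(self, content: str) -> None: ...
    def query(self, text: str, k: int = 2) -> list[str]: ...

class KeywordMemory:
    """Toy memory: ranks stored snippets by word overlap with the query."""

    def __init__(self) -> None:
        self.items: list[str] = []

    def add(self, content: str) -> None:
        self.items.append(content)

    def query(self, text: str, k: int = 2) -> list[str]:
        q = _words(text)
        scored = [(len(q & _words(item)), item) for item in self.items]
        ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: -s[0])
        return [item for _, item in ranked[:k]]

def update_context(memory: Memory, messages: list[dict], user_msg: str) -> list[dict]:
    # Inject retrieved snippets as a system message before the model is called.
    hits = memory.query(user_msg)
    if hits:
        messages = messages + [{"role": "system", "content": "Relevant notes:\n" + "\n".join(hits)}]
    return messages + [{"role": "user", "content": user_msg}]

kb = KeywordMemory()
kb.add("Refunds are processed within 5 business days.")
kb.add("Passwords can be reset from the account settings page.")
kb.add("Our office is closed on public holidays.")

context = update_context(kb, [], "How do I reset my password?")
for m in context:
    print(m["role"], "->", m["content"])
```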

While the state of agents and teams can be explicitly saved to a file or database using save_state() and load_state(), this is a more manual process for persistence rather than an automatic, built-in feature of the core execution engine.
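Here is what that manual step looks like in outline. The `ChatTeam` class is a hypothetical stand-in for an AutoGen agent or team; AutoGen's `save_state()` and `load_state()` are awaited, which the sketch mirrors with `asyncio`. The point is that persistence happens only where your code explicitly calls it, unlike a checkpointer that saves after every step.

```python
import asyncio
import json
import os
import tempfile

class ChatTeam:
    """Hypothetical team whose only state is its message history."""

    def __init__(self) -> None:
        self.messages: list[str] = []

    async def run(self, task: str) -> None:
        self.messages += [f"user: {task}", f"assistant: done with '{task}'"]

    async def save_state(self) -> dict:
        return {"messages": list(self.messages)}

    async def load_state(self, state: dict) -> None:
        self.messages = list(state["messages"])

async def main(path: str) -> list[str]:
    team = ChatTeam()
    await team.run("summarize the report")
    # Persistence is an explicit step the developer chooses to take.
    with open(path, "w") as f:
        json.dump(await team.save_state(), f)

    restored = ChatTeam()  # e.g. after a process restart
    with open(path) as f:
        await restored.load_state(json.load(f))
    return restored.messages

path = os.path.join(tempfile.mkdtemp(), "team_state.json")
restored_messages = asyncio.run(main(path))
print(restored_messages)
```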

The emphasis is less on durable execution and more on providing the agent with the right external knowledge at the right time. This is evident in its integrations with vector stores (for example, through ChromaDBVectorMemory) and with external memory services like Mem0.

The design philosophies here lead to different application types.

Bottom line: LangGraph is engineered to build agents that learn and evolve their internal state over time, like a personal assistant that remembers user preferences across months of conversations.

AutoGen is optimized to build agents that are experts at retrieving and using external knowledge on demand, like a customer support bot that can instantly find the correct article in a vast knowledge base.

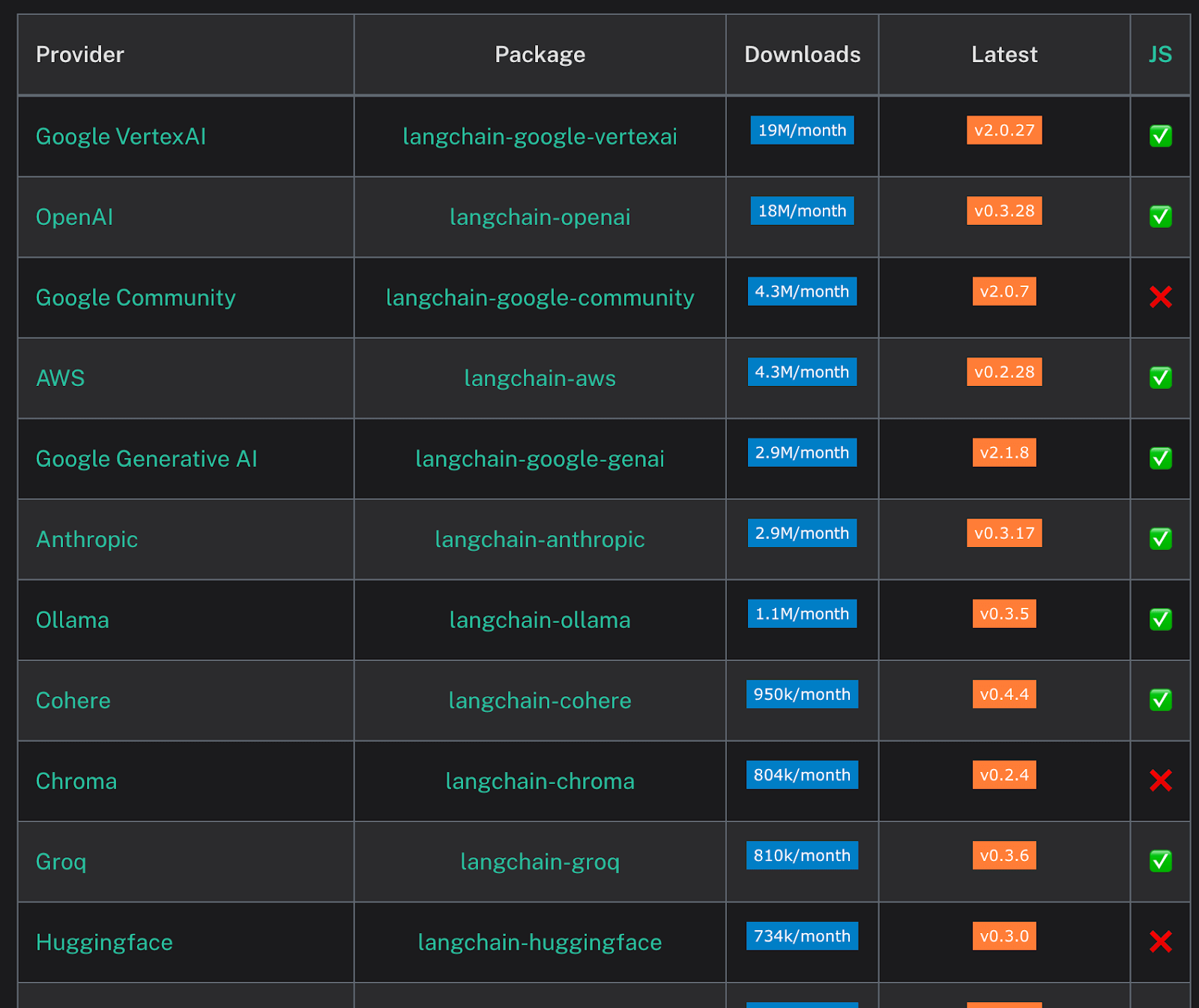

LangGraph vs. AutoGen: Integration Capabilities

No LLMOps framework exists in a vacuum. The ability to integrate with other models, data sources, and tools is crucial for building real-world applications.

LangGraph

LangGraph's primary strength is its deep, native integration with the broader LangChain ecosystem. This gives developers immediate access to:

- LangSmith: A best-in-class platform for observability, tracing, debugging, and evaluating LLM applications. Traces from LangGraph are visualized in LangSmith, showing the step-by-step execution of the graph.

- LangChain Components: The vast library of LangChain integrations for LLMs, document loaders, text splitters, vector stores, and tools that you can use directly as nodes within a LangGraph graph.

- LangGraph Platform: A commercial, managed service for deploying, hosting, and scaling stateful LangGraph agents, providing a complete, production-ready stack.

This creates a vertically integrated, cohesive experience where all components are designed to work seamlessly together.

AutoGen

AutoGen, while a Microsoft project, is positioned as a more neutral integration hub. Its layered architecture (Core, AgentChat, Extensions) is designed to be extensible and encourages third-party integrations.

Its ecosystem is broad and diverse, and has integrations for:

- Other Agent Frameworks: LlamaIndex, CrewAI, and even LangChain itself.

- Observability Tools: AgentOps, Weave, and Phoenix/Arize.

- Data and Memory: Numerous vector databases (Chroma, PGVector), data platforms (Databricks), and memory services (Zep, Mem0).

- Prototyping: AutoGen Studio provides a no-code/low-code UI for building and testing agent workflows.

This positions AutoGen as a central orchestrator in a flexible, best-of-breed MLOps stack. The choice is a strategic one for development teams: LangGraph offers a polished, vertically integrated experience, while AutoGen offers maximum flexibility and choice in a horizontally integrated ecosystem.

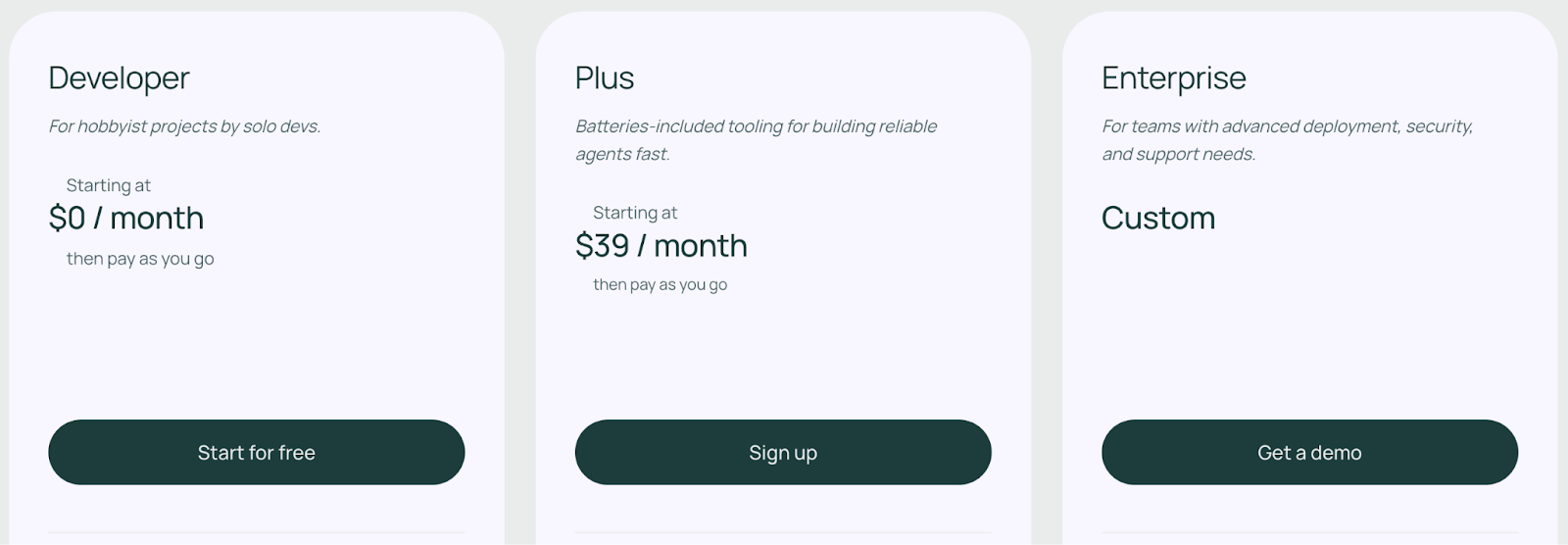

LangGraph vs. AutoGen: Pricing

Both frameworks are open-source, but the total cost of ownership differs based on their approach to managed services and infrastructure.

LangGraph

LangGraph comes with an open-source plan that’s free to use. If you install the LangGraph Python or JS package, you get the MIT-licensed code to design agents with no licensing cost or usage fees. This open-source plan has a limit of executing 10,000 nodes per month.

Apart from the free plan, LangGraph offers three paid plans to choose from:

- Developer: Includes up to 100K nodes executed per month

- Plus: $0.001 per node executed + standby charges

- Enterprise: Custom-built plan tailored to your business needs
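As a rough worked example for the Plus plan, node charges scale linearly: 250,000 node executions at $0.001 each come to $250 for the month. Standby charges are billed on top and are not quantified here, so the helper below takes them as an input; the function name and the standby figure in the usage line are hypothetical.

```python
def plus_plan_monthly_cost(nodes_executed: int, standby_charges: float = 0.0,
                           price_per_node: float = 0.001) -> float:
    """Estimate a monthly bill: per-node fee plus separately billed standby charges."""
    if nodes_executed < 0 or standby_charges < 0:
        raise ValueError("inputs must be non-negative")
    return round(nodes_executed * price_per_node + standby_charges, 2)

print(plus_plan_monthly_cost(250_000))                        # 250.0
print(plus_plan_monthly_cost(250_000, standby_charges=40.0))  # 290.0 (hypothetical standby figure)
```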

📚 Related article: LangGraph pricing guide

AutoGen

The AutoGen framework is also open-source (MIT license) and completely free, with no paid ‘Pro’ or SaaS version offered by Microsoft.

The costs associated with using AutoGen are indirect and stem from the infrastructure that you must provision and manage yourself. These costs include:

- LLM API Calls: Fees for using models from providers like OpenAI or Anthropic.

- Cloud Compute: The cost of virtual machines or containers needed to host the agents and execute code.

- Third-Party Services: Subscription fees for any managed vector databases, observability platforms (like AgentOps), or other tools integrated into the stack.

How ZenML Helps In Closing the Outer Loop Around Your Agents

While LangGraph and AutoGen are powerful frameworks for the ‘inner loop’ of development (defining, writing, and iterating on agentic behavior), they don’t, by themselves, solve the challenges of the ‘outer loop.’

The outer loop encompasses the entire lifecycle of productionizing, deploying, monitoring, and improving these agents over time. This is where a dedicated MLOps and LLMOps framework like ZenML becomes essential.

ZenML acts as the unifying outer loop that governs the entire production lifecycle of your agents, regardless of whether they are built with LangGraph, AutoGen, or any other tool. Here’s how ZenML complements these frameworks:

- Embed agents in end-to-end pipelines: A ZenML pipeline can wrap an entire agentic workflow. Steps in the pipeline can handle data preparation for RAG, the execution of the LangGraph or AutoGen agent itself, and subsequent evaluation of the agent's output. This makes the entire process versioned, reproducible, and executable on any connected infrastructure.

- Unified visibility and lineage: ZenML automatically tracks and versions every part of your pipeline run, including the input prompts, agent responses, LLMs used, and data sources. It provides a single dashboard that visualizes the lineage of all artifacts, giving your team a complete picture of how your systems behave and making it possible to debug failures systematically.

- Continuous quality checks: Inner-loop tools help define agent behavior, but ZenML helps you understand whether that behavior is good or bad. A ZenML pipeline can include steps that automatically run evaluations after each agent execution, flagging bad runs and enabling continuous quality monitoring in production.

- Combine tools and avoid lock-in: ZenML's component-and-stack model decouples your code from the underlying infrastructure. This means you can mix and match tools, even using a LangGraph agent and an AutoGen agent within the same pipeline, and run it all on your chosen cloud stack. This avoids vendor lock-in and lets you use the best tool for every part of the job.
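The pattern in these bullets (run the agent as one step among others, record every artifact, and gate each run on an evaluation) can be sketched in plain Python. This is not ZenML's API: `run_pipeline`, the content-hash artifact IDs, and the pass/fail rule in `evaluate` are hypothetical illustrations, and `agent_step` is a stub where a LangGraph graph or AutoGen team would be invoked. In ZenML itself, the steps would be decorated functions, and the framework would handle versioning and lineage.

```python
import hashlib
import json
from typing import Callable

def artifact_id(value) -> str:
    # Content hash, so identical outputs get identical IDs (a simple form of versioning).
    return hashlib.sha256(json.dumps(value, sort_keys=True).encode()).hexdigest()[:12]

def prepare_data(question: str) -> dict:
    return {"question": question, "context": "Paris is the capital of France."}

def agent_step(inputs: dict) -> dict:
    # Stub: invoke a LangGraph graph or an AutoGen team here instead.
    answer = "Paris" if "France" in inputs["context"] else "unknown"
    return {"answer": answer}

def evaluate(output: dict, expected: str) -> dict:
    return {"passed": output["answer"] == expected}

def run_pipeline(question: str, expected: str) -> dict:
    lineage = []  # ordered record of (step name, artifact id)
    steps: list[tuple[str, Callable]] = [
        ("prepare_data", lambda _: prepare_data(question)),
        ("agent_step", agent_step),
        ("evaluate", lambda out: evaluate(out, expected)),
    ]
    value = None
    for name, fn in steps:
        value = fn(value)
        lineage.append((name, artifact_id(value)))
    return {"passed": value["passed"], "lineage": lineage}

good = run_pipeline("What is the capital of France?", expected="Paris")
bad = run_pipeline("What is the capital of France?", expected="Lyon")
print(good["passed"], bad["passed"])  # True False
print([name for name, _ in good["lineage"]])
```

Both runs share the same agent-output artifact ID and differ only at the evaluation step, which is the kind of comparison that lineage tracking makes possible.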

In short, LangGraph and AutoGen define what the agent does; ZenML governs how that agent lives, scales, and evolves in a production environment.

📚 Related comparison article: LangGraph vs CrewAI

Which Framework Is Best For You?

The choice between LangGraph and AutoGen depends entirely on your project's goals, your team's expertise, and your requirements for control versus flexibility.

✅ Choose LangGraph if your primary goal is to build highly reliable, auditable, and complex agentic systems. It’s the superior choice when you need fine-grained control over every step of the workflow, require cyclical logic for self-correction or iteration, and are comfortable working within the powerful, vertically integrated LangChain ecosystem.

✅ Choose AutoGen if your primary goal is rapid prototyping and exploring the emergent, collaborative capabilities of multi-agent systems. AutoGen abstracts away low-level orchestration logic, lets you focus on defining agent roles and tools, and gives you a broad, flexible ecosystem of integrations.

✅ Use ZenML when you are ready to move any agentic application from a research notebook to a robust production system. It’s the perfect choice when you need reproducibility, scalability, automated evaluation, and a unified platform to manage the entire lifecycle of your AI agents, regardless of the framework used to build them.

ZenML's upcoming platform brings every ML and LLM workflow (data preparation, training, RAG indexing, agent orchestration, and more) into one place for you to run, track, and improve.
