LangGraph is an agent orchestration framework from the LangChain team. It comes in two forms: an open-source library, which is MIT-licensed and free to use, and the hosted LangGraph Platform, with paid tiers for deploying and scaling agentic workflows.

This LangGraph pricing article answers one question: is LangGraph affordable or expensive for ML teams? We break down every plan, the key cost factors, and how LangGraph’s value stacks up.

We also compare it to ZenML, an MLOps alternative, so you can decide which fits your needs best.

TL;DR

Here's a quick summary of LangGraph's pricing tiers and whether they make sense for your team:

LangGraph is worth considering when:

✅ You want a reliable way to orchestrate complex LLM agent flows without building infrastructure from scratch.

✅ You already use the LangChain ecosystem and need more control over your agents, especially if a single Developer seat or the usage-based Plus fees comfortably cover your team’s needs.

✅ Small teams can get started on LangGraph Platform essentially for free (100k node executions included), and LangGraph Plus can be reasonable if you only deploy modest workloads.

However, you might consider an alternative (or sticking to free tools) if:

❌ You expect to run very large volumes of agent actions: usage fees of $0.001 per node executed add up quickly in high-scale deployments.

❌ You need a fully on-premises or BYOC (bring-your-own-cloud) deployment without signing an Enterprise contract. LangGraph’s lower tiers don’t support that; the fully self-hosted and hybrid modes are available only in Enterprise.

❌ You need more than agent orchestration. Many teams that build agents soon realise they also have to fine-tune models, run complex evaluations, and much more. LangGraph is not an all-rounder, so if you still use it, you’d need to integrate additional tools (and potentially pay for them separately) to cover those capabilities. In such cases, an alternative like ZenML might provide more value.

Recently Updated (November 2025): This pricing guide reflects LangGraph's evolution following its 1.0 stable release in October 2025 and the product's rebranding to "LangSmith Deployment." The competitive landscape analysis now includes major 2025 entrants like Microsoft's Azure AI Foundry Agent Service, Google's Agent Development Kit (ADK), and OpenAI's Agents SDK. All pricing tiers and usage calculations remain current as of November 2025.

LangGraph Pricing Plans Overview

LangGraph uses a tiered pricing model that's closely integrated with LangSmith, LangChain's observability and evaluation platform. The pricing structure distinguishes between development and production usage, with different deployment options available at each tier.

Unlike traditional SaaS pricing, LangGraph's model combines seat-based licensing with usage-based billing for compute resources. The platform measures usage in ‘nodes executed’ for workflow complexity and deployment minutes for infrastructure costs.

Understanding LangGraph's pricing requires considering both the LangSmith subscription costs and the additional usage charges for running agents in production. This dual-cost structure can make budgeting more complex, but it provides flexibility for teams with varying workload patterns.

LangGraph Pricing Factors to Consider

Before deciding if LangGraph fits your budget, consider a few key factors that influence the true cost:

1. Usage Volume (Nodes Executed and Uptime)

The hosted LangGraph Platform uses a usage-based model: as mentioned above, you pay $0.001 per node executed, plus a small fee for deployment uptime. The Developer plan includes the first 100k node executions each month for free; beyond that, you pay as you go.

This means the more complex or frequent your agent’s tasks, the higher the bill. For instance, executing 1 million nodes would cost around $1,000 in usage fees alone. Standby time (keeping an agent deployment running) is also metered on Plus.

Bottom line: if you only run occasional agent workflows, costs stay low, but heavy, continuous workloads rack up significant fees over time. Estimate your agent’s volume up front: a high-volume automation that executes many nodes (for example, one node per LLM or tool call) directly increases your LangGraph bill.
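To see how the per-node fee scales, here is a minimal Python sketch. It assumes a flat $0.001 per node executed, an optional monthly free allowance (100k on Developer, none for pure pay-as-you-go), and no volume discounts or uptime fees; `node_fees` is an illustrative name, not part of any LangChain API.

```python
# Minimal sketch: monthly node-execution fees at the flat rate quoted in this article.
# Assumptions: $0.001 per node, an optional free allowance, no discounts, no uptime fees.

NODE_RATE_USD = 0.001  # $ per node executed


def node_fees(nodes_executed: int, free_nodes: int = 0) -> float:
    """Return the node-execution fee in USD for one month, rounded to cents."""
    if nodes_executed < 0 or free_nodes < 0:
        raise ValueError("node counts must be non-negative")
    billable = max(0, nodes_executed - free_nodes)
    return round(billable * NODE_RATE_USD, 2)


if __name__ == "__main__":
    for nodes in (50_000, 100_000, 1_000_000, 10_000_000):
        paygo = node_fees(nodes)
        with_free = node_fees(nodes, free_nodes=100_000)
        print(f"{nodes:>12,} nodes: ${paygo:>10,.2f} pay-as-you-go, "
              f"${with_free:>10,.2f} after 100k free")
```

Fees grow linearly with volume: 1 million nodes costs $1,000 at the flat rate, and 10 million costs $10,000.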

2. Team Size and Seats

LangGraph’s pricing isn’t just about API calls; it also depends on how many people use the platform. The open-source library has no seat limits.

However, LangGraph’s Plus plan requires a LangSmith Plus subscription at $39 per user per month, capped at 10 seats.

The free Developer plan covers a single seat, but if, say, 5 or 8 developers collaborate, seat fees ($39 × number of users, so $195 or $312 a month) add to the total. Teams larger than 10 users would likely need an Enterprise plan.

3. Deployment and Support Requirements

LangGraph Platform’s Plus plan is a fully managed SaaS (cloud deployment in LangChain’s service), which may be fine for many.

But if you want your agents to run in your own cloud or on-premises for data privacy or latency reasons, that pushes you to the Enterprise tier. Enterprise offers flexible deployment options – cloud, hybrid (control plane SaaS + data plane in your VPC), or fully self-hosted in your infrastructure. However, these come at a premium price.

If you can live with the standard cloud service and community support, the lower tiers will suffice; if not, be prepared for Enterprise-level costs.

All Pricing Plans that LangGraph Offers (Open Source + Paid)

This section provides a detailed breakdown of each plan offered by LangGraph, from the completely free open-source library to the various paid tiers.

Plan 1. Open Source (LangGraph Framework)

LangGraph (open-source) is a free framework for building agentic applications. If you install the LangGraph Python or JS package, you get the MIT-licensed code to design agents with no licensing cost or usage fees.

This gives you full control: you can run agents on your own infrastructure, modify the code, and there are no artificial caps.

The open-source LangGraph provides the core primitives for agents – defining tools, memory, and control flows – but you manage execution and scaling yourself. No managed server or web UI is included.

It’s important to note what you don’t get with just the open-source framework. Unlike the Platform, the OSS version doesn’t come with a persistent agent server or turnkey scaling infrastructure.

For example, pure open-source LangGraph has no built-in scheduling (no cron or triggers), and monitoring is minimal: you can log traces to LangSmith for observability, but you have to configure that yourself.

Using LangGraph OSS can mean writing more custom code for persistence and concurrency, or pairing it with other LangChain tools, which adds engineering overhead.

That said, for many developers, the open-source route is attractive: $0 cost and complete autonomy. LangGraph’s open library is free forever for any use.

Plan 2. Developer Plan

The Developer plan is an entry-level plan that LangGraph offers. It's essentially a free ‘lite’ tier of the hosted platform.

Although it is part of the platform, this plan involves self-hosting: you deploy a LangGraph server in your own environment, such as a Docker container or a Kubernetes cluster, rather than on LangChain’s cloud. You incur no platform charges as long as you stay within the limits, which include up to 100,000 nodes executed per month for free.

In other words, you can orchestrate up to 100k agent actions on your own infrastructure before any usage fees kick in. This makes it ideal for personal projects or initial prototyping in a startup.

Here are the features this plan offers:

- Debugging traces: Drill into every call in a chain or agent to spot wrong inputs, prompts, or model outputs fast.

- Dataset collection: Capture prompt/response pairs to turn real traffic into a reusable test set.

- Testing and evaluation: Run structured checks or regression tests against those datasets to prove changes work.

- Prompt management: Version prompts, compare variants, and roll back when a change hurts quality.

- Security and deployment: OAuth sign-in with Google and GitHub, hosting in the US or EU, and basic admin roles.

- Support: Community Discord only.

Keep these limits in mind as well:

- You get 1 seat and 1 personal workspace.

- The free usage is limited to 5K base traces a month; pay $0.50 per 1K after that. Extended-retention traces cost $4.50 per 1K.

- Throughput: up to 50K events per hour and 500 MB of traces stored per hour.
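Trace overage is easy to underestimate, so here is a small Python sketch of one month’s bill under the limits above. It assumes charges are prorated per trace (real billing granularity may differ), that the 5K free allowance covers base traces only, and that extended-retention traces are billed at the quoted $4.50 per 1K on their own.

```python
# Sketch of Developer-plan trace overage using the figures quoted above.
# Assumptions: prorated per trace, free allowance applies to base traces only,
# extended-retention traces billed at the quoted rate with no allowance.

FREE_BASE_TRACES = 5_000
BASE_RATE_PER_1K = 0.50      # $ per 1K base traces beyond the free allowance
EXTENDED_RATE_PER_1K = 4.50  # $ per 1K extended-retention traces


def trace_overage(base_traces: int, extended_traces: int = 0) -> float:
    """Return one month's trace overage in USD, rounded to cents."""
    billable_base = max(0, base_traces - FREE_BASE_TRACES)
    cost = billable_base / 1_000 * BASE_RATE_PER_1K
    cost += extended_traces / 1_000 * EXTENDED_RATE_PER_1K
    return round(cost, 2)


print(trace_overage(25_000))                          # → 10.0
print(trace_overage(25_000, extended_traces=10_000))  # → 55.0
```

At these rates, extended retention costs nine times as much per trace as base retention, so it pays to reserve it for traces you actually need to keep.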

LangGraph Developer is a generous free tier to get started: you pay nothing to use the platform locally up to 100k actions. It’s perfect for trying out LangGraph in a self-managed way or running a small-scale project. Just remember that it’s not intended for production-scale usage or team collaboration – it’s a bridge to the paid Plus plan once you grow.

Plan 3. Plus Plan

The Plus plan is LangGraph’s primary paid offering for teams, and it unlocks the fully managed cloud experience. With Plus, you deploy agents on LangChain’s Cloud SaaS (with data residency options in the US or EU) - meaning LangChain hosts the infrastructure for you.

This plan is designed for teams who want to quickly go from development to production without worrying about servers, and to have their agent apps accessible from anywhere via the cloud.

LangGraph Plus uses metered usage pricing. There is no fixed monthly fee for the platform itself; instead, you pay for what you use.

The cost is $0.001 per node executed (each agent action) plus a small per-minute charge for standby time, i.e., when your agent deployment is live but idle. Standby minutes are priced at $0.0007 per minute for dev environment deployments and $0.0036 per minute for production deployments.

But here’s the main catch: to use Plus, you must have LangSmith Plus, which costs $39 per user per month.

The Plus plan includes one free deployment (development environment) with some usage bundled, but beyond that, it’s pure pay-as-you-go.
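Putting the Plus plan’s three cost drivers together, here is a rough Python estimator. It is a sketch under stated assumptions, not LangChain’s billing logic: a 30-day month, every minute a deployment is up billed at the standby rate (an upper bound, since standby applies to idle time), no bundled free usage, and trace overage ignored. `PlusUsage` and `plus_monthly_cost` are hypothetical names.

```python
# Rough Plus-plan monthly estimate from the rates quoted in this article.
# Assumptions (not LangChain's billing logic): 30-day month, every deployment-minute
# billed at the standby rate (upper bound), no bundled free usage, no trace overage.

from dataclasses import dataclass

NODE_RATE = 0.001                               # $ per node executed
STANDBY_RATE = {"dev": 0.0007, "prod": 0.0036}  # $ per deployment-minute
SEAT_RATE = 39.0                                # $ per LangSmith Plus seat per month
MAX_PLUS_SEATS = 10
MINUTES_PER_MONTH = 30 * 24 * 60                # 43,200 minutes


@dataclass
class PlusUsage:
    nodes_executed: int
    seats: int
    prod_deployments: int = 0
    dev_deployments: int = 0


def plus_monthly_cost(usage: PlusUsage) -> dict:
    """Break a month's estimated Plus bill into nodes, standby, and seats (USD)."""
    if not 1 <= usage.seats <= MAX_PLUS_SEATS:
        raise ValueError("Plus covers 1-10 seats; larger teams need Enterprise")
    standby = MINUTES_PER_MONTH * (
        usage.prod_deployments * STANDBY_RATE["prod"]
        + usage.dev_deployments * STANDBY_RATE["dev"]
    )
    parts = {
        "nodes": round(usage.nodes_executed * NODE_RATE, 2),
        "standby": round(standby, 2),
        "seats": round(usage.seats * SEAT_RATE, 2),
    }
    parts["total"] = round(sum(parts.values()), 2)  # summed before "total" is added
    return parts


print(plus_monthly_cost(PlusUsage(nodes_executed=200_000, seats=3, prod_deployments=1)))
```

For one always-on production deployment, 200k nodes, and three seats, this comes to roughly $472.52 a month: $200 in node fees, $155.52 in standby, and $117 in seats. Note how standby alone rivals the node fees at this volume.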

What do you get for these costs? All the features of the Developer tier, and more. The Plus plan unlocks critical production features:

- Managed cloud deployment: No need to host your own servers. LangChain runs and auto-scales the agent infrastructure for you in their cloud.

- Cron scheduling: Schedule agents to run on intervals or at specific times via built-in cron triggers.

- Authentication and authorization: Provides an auth layer so you can securely call LangGraph APIs from your applications.

- Smart caching: Caches LLM calls to reduce redundant hits to the LLM API. In essence, if your agent asks the same question or performs the same step repeatedly, LangGraph can reuse previous results to save tokens.

- LangGraph Studio (Cloud): A web-based studio for prototyping and debugging agents, which is available in the Plus tier (the Developer tier has a local-only version).

- Everything in the Developer plan: state management APIs, memory, streaming, and more, with support for up to 10 seats.

The Plus plan’s appeal is that it offers scalability without upfront investment. You don’t pay a large subscription; you pay in proportion to usage. For a team deploying an agent-powered app, this can be cost-efficient if usage is moderate or variable, since you’re essentially charged for each node your workflows execute.

Plan 4. Enterprise Plan

The Enterprise plan is LangChain’s top-tier offering for LangGraph, tailored to companies with advanced needs around security, scale, and support.

Unlike the self-serve Plus plan, Enterprise involves contacting the LangChain sales team for a custom agreement – pricing is not publicly disclosed (we think it’s typically negotiated on a case-by-case basis).

What does Enterprise include? In short, everything in Plus, and then some more:

- Flexible deployment options: Enterprise customers can deploy LangGraph wherever they need – this includes fully self-hosted on your own cloud or on-premises, or a hybrid model (LangChain runs the control plane while your infrastructure handles the data plane).

- Enterprise-grade support: LangGraph Enterprise comes with a formal Service Level Agreement (SLA) for uptime on managed offerings. You also get designated support staff, like a dedicated Customer Success Engineer, architectural guidance consultations, and even a shared Slack channel with the LangChain team for fast help.

- Team training and onboarding: The Enterprise package includes training sessions to help your team use LangGraph effectively.

- Enterprise admin features: Things like SSO integration (SAML/Okta login), granular role-based access control, audit logging, and other compliance-related features typically come with the Enterprise plan.

- Custom billing and procurement: Annual invoicing, ACH payments, vendor onboarding support – basically, the sales process is adapted to enterprise procurement needs.

Is LangGraph Expensive?

Now for the big question: How much value do you get for LangGraph’s cost, and is it expensive? The answer depends on your scale and perspective:

If you’re a small-scale user, LangGraph can be cost-effective. The combination of an open-source core and the free Developer tier means you might not pay anything at all.

Even the Plus plan, if used sparingly, won’t break the bank. Suppose you execute 50k nodes in a month (about $50 in usage fees) and pay for two developer seats (about $78): roughly $128 a month is quite reasonable for a business.
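Ignoring standby minutes and trace overage, that estimate works out as follows:

```latex
\begin{aligned}
\text{usage} &= 50{,}000 \text{ nodes} \times \$0.001 = \$50 \\
\text{seats} &= 2 \times \$39 = \$78 \\
\text{total} &= \$50 + \$78 = \$128 \text{ per month}
\end{aligned}
```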

In that sense, LangGraph’s pricing is accessible; it lowers the barrier to start orchestrating agent workflows. The flip side comes with scale.

LangGraph’s costs scale linearly with usage, so large workloads can become expensive.

In our research, we came across a Reddit user who said the $0.001 per-node fee effectively ‘doubles my COGS’ for generating content, since each piece involved ~10 model calls (10 × $0.001 = $0.01 of overhead) on top of the model API costs.

The same user wrote, “I just don’t think the current pricing is reasonable for any system at scale,” suggesting it was about 10x higher than they had anticipated.
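Whether a per-node fee “doubles” COGS depends on how cheap the underlying model calls are. The Python sketch below makes that explicit. It takes the ~10 nodes per piece from the example above; the model API cost per piece is a hypothetical input, since the quote only implies it was roughly equal to the $0.01 overhead.

```python
# Per-item orchestration overhead in the Reddit example: ~10 node executions per
# generated piece at $0.001 each. The model API cost per piece is a hypothetical
# input; the quote only implies it was roughly equal to the overhead.

NODE_RATE = 0.001  # $ per node executed


def orchestration_overhead(nodes_per_item: int, items: int = 1) -> float:
    """Node fees in USD for `items` pieces of content."""
    return round(nodes_per_item * items * NODE_RATE, 2)


def cogs_multiplier(nodes_per_item: int, model_cost_per_item: float) -> float:
    """Total per-item cost (model + node fees) divided by the model cost alone."""
    overhead = nodes_per_item * NODE_RATE
    return (model_cost_per_item + overhead) / model_cost_per_item


print(orchestration_overhead(10))                     # $ per piece
print(orchestration_overhead(10, items=100_000))      # $ per 100k pieces
print(cogs_multiplier(10, model_cost_per_item=0.01))  # hypothetical $0.01 model cost
print(cogs_multiplier(10, model_cost_per_item=0.10))  # hypothetical $0.10 model cost
```

With a $0.01 model cost the multiplier is 2 (COGS doubles); with a $0.10 model cost it is about 1.1. The per-node fee hurts most when each node wraps a cheap model call.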

So if you plan to scale, you will likely need an alternative to LangGraph. Here’s one that we suggest.

The Agent Orchestration Landscape in Late 2025

The agent orchestration market has intensified dramatically since LangGraph's launch. The agentic AI market is projected to grow from $6.96 billion in 2025 to $42.56 billion by 2030, a 43.6% CAGR, reflecting rapid enterprise adoption across industries.

This explosive growth has attracted major players who launched competitive frameworks throughout 2025:

Microsoft consolidated its offerings in early 2025, unifying Semantic Kernel and AutoGen into the Azure AI Foundry Agent Service, now generally available with Agent-to-Agent (A2A) protocol support and backing from 50+ technology partners including Salesforce, MongoDB, and ServiceNow.

Google introduced the Agent Development Kit (ADK) at Google Cloud NEXT 2025, designed specifically for multi-agent systems with native GCP integration. ADK powers Google's internal tools like Agentspace and offers sequential, loop, and parallel agent orchestration patterns.

OpenAI's Agents SDK launched in early 2025 and quickly gained traction, emphasizing production-grade deployments with streamlined integration for teams already using OpenAI models. Despite being only months old, it has gathered nearly 10,000 GitHub stars.

Open-source alternatives are gaining ground too. CrewAI (30,000+ GitHub stars) offers role-based team orchestration without LangChain dependencies, while Agno claims 50x less memory usage than LangGraph, addressing a common complaint about resource consumption at scale.

LangGraph's response: The October 2025 release of LangGraph 1.0 marks the first stable major release in the agent orchestration space, signaling production readiness with a commitment to API stability until 2.0. The platform also added Model Context Protocol (MCP) support, node-level caching, and deferred execution capabilities.

The pricing implication: with intensifying competition from well-funded corporate alternatives and lightweight open-source options, LangGraph's usage-based pricing faces more scrutiny. Teams now weigh the $0.001 per node execution fee against free alternatives from Microsoft and Google that bundle agent orchestration into existing cloud contracts, or against ultra-lightweight frameworks that eliminate the "abstraction tax" entirely.

For context, 85% of organizations have now integrated AI agents in at least one workflow, but only 2% have deployed agentic AI at scale. This gap between experimentation and production deployment makes cost predictability increasingly important as teams evaluate which framework will carry them from pilot to production.

ZenML – An Affordable LangGraph Alternative

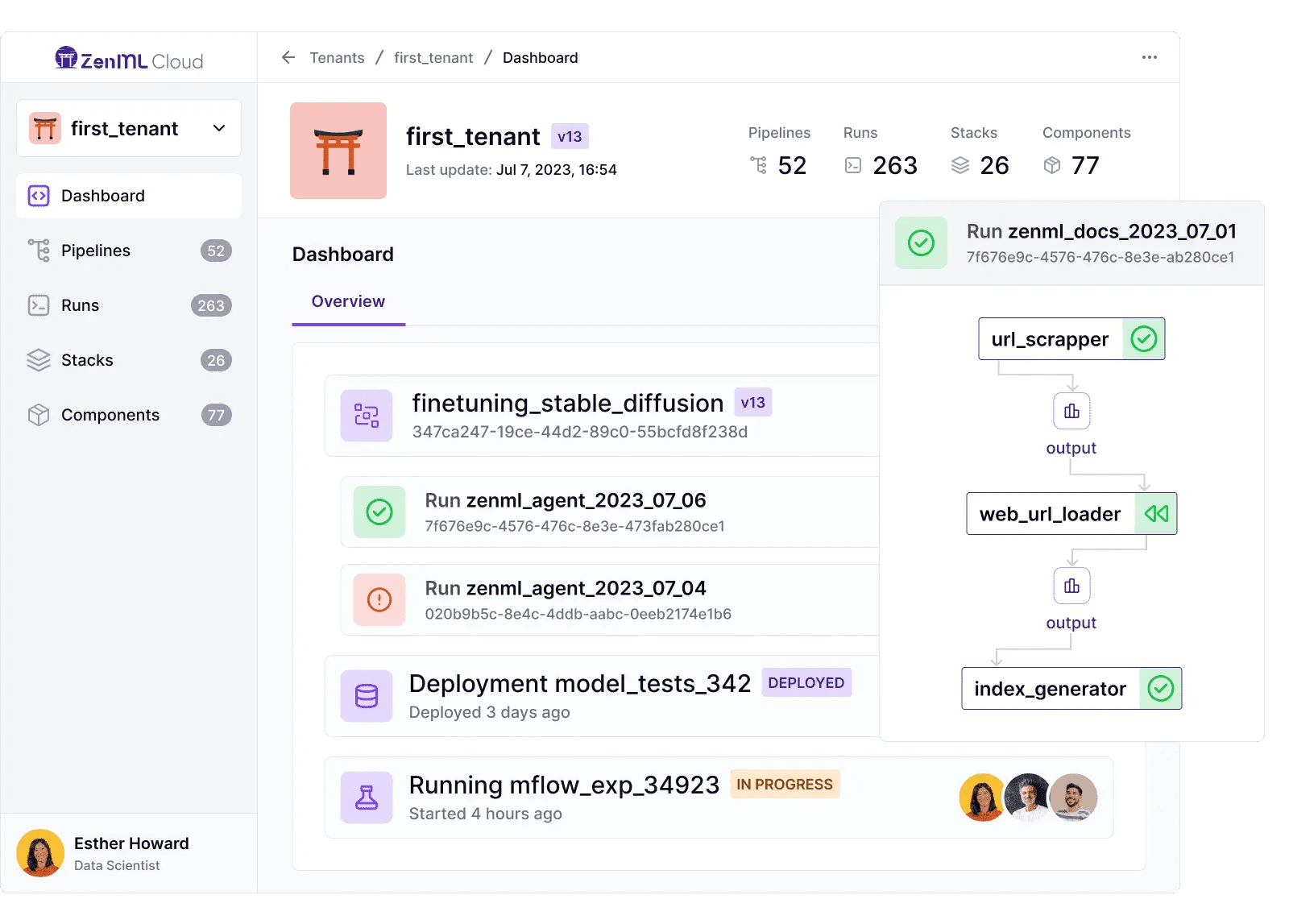

ZenML is an MLOps framework that provides pipeline orchestration, experiment tracking, and model deployment capabilities at a fraction of the cost.

Unlike LangGraph's focus on agent orchestration, ZenML takes a broader approach to ML workflows. It can handle agent development while also providing comprehensive MLOps features that LangGraph lacks, potentially eliminating the need for multiple tools.

Let’s compare LangGraph and ZenML across some key aspects:

Feature 1. Deployment Flexibility

One clear gap shows up at deployment time: LangGraph puts “deploy in your own cloud or on-prem” behind the Enterprise tier, and even there, you get only a few opinionated templates. If your stack or security rules diverge, you are on your own; there is no promise that the pre-built scripts will match your use case or keep pace with future changes.

ZenML goes the other way. The core framework is open source and cloud-agnostic, so you can self-host on any target: AWS, GCP, Azure, bare-metal Kubernetes, or your laptop, without buying an extra license. A managed SaaS option (ZenML Pro) exists for teams that want zero-ops convenience, but it is not required.

This BYOC model keeps you in control. Need to live inside a locked-down VPC? Want a multi-cloud or hybrid setup? ZenML’s stack abstraction handles that from day one, so you avoid vendor lock-in and can adapt the deployment to your exact security and compliance rules.

Feature 2. Artifact Store

LangGraph is focused on agent logic and state, but it doesn’t offer an integrated artifact store concept for general ML artifacts.

ZenML, however, treats artifact management as a first-class concern. Every ZenML pipeline comes with an Artifact Store component that automatically saves and versions the outputs of each pipeline step.

Creating an artifact takes only a few lines of code, because any value a ZenML step returns is saved as an artifact automatically.

Whether it’s a trained model, a dataset split, or an evaluation report, ZenML will persist it in a location of your choice - S3 bucket, GCS, Azure Blob, MinIO, etc. These artifacts are indexed and trackable by ZenML, enabling reproducibility and easy reuse.

ZenML acts as your artifact and metadata store by default, which can simplify your life when building end-to-end workflows.

All your outputs are safe, queryable, and in your control. If you need to incorporate traditional ML steps around your LLM agents, ZenML will handle the data movement and tracking seamlessly – something you’d have to assemble yourself if using LangGraph alone.
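To make the idea of automatically saving and versioning every step’s output concrete, here is a toy, in-memory Python sketch. It is not ZenML’s API or storage layer: `ToyArtifactStore` and `versioned_step` are hypothetical names, and a real artifact store persists to S3, GCS, or similar rather than to a Python dict.

```python
# Toy sketch of "save and version every step output". Hypothetical names throughout;
# this is not ZenML's API, and real stores persist to object storage, not a dict.

import hashlib
import pickle
from functools import wraps
from typing import Any, Callable, Dict, List, Optional, Tuple


class ToyArtifactStore:
    def __init__(self) -> None:
        # artifact name -> [(version, content hash, value), ...]
        self._versions: Dict[str, List[Tuple[int, str, Any]]] = {}

    def save(self, name: str, value: Any) -> int:
        """Store `value` as the next version of `name` and return that version."""
        digest = hashlib.sha256(pickle.dumps(value)).hexdigest()[:12]
        history = self._versions.setdefault(name, [])
        history.append((len(history) + 1, digest, value))
        return len(history)

    def load(self, name: str, version: Optional[int] = None) -> Any:
        """Return a specific version, or the latest one if `version` is None."""
        history = self._versions[name]
        return history[-1][2] if version is None else history[version - 1][2]


store = ToyArtifactStore()


def versioned_step(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Run the step, then save its return value as a new version named after the step."""
    @wraps(fn)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        result = fn(*args, **kwargs)
        store.save(fn.__name__, result)
        return result
    return wrapper


@versioned_step
def split_dataset(rows: list) -> dict:
    cut = int(len(rows) * 0.8)
    return {"train": rows[:cut], "test": rows[cut:]}


split_dataset(list(range(10)))  # saved as version 1
split_dataset(list(range(20)))  # saved as version 2
print(store.load("split_dataset", version=1)["test"])  # → [8, 9]
```

Keeping every version instead of overwriting is what lets you reproduce an earlier run; the stored content hash is the kind of fingerprint a real store could use to detect identical outputs.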

Feature 3. Observability and Interactive Debugging

Monitoring and debugging matter for complex agent pipelines. LangGraph leans on LangSmith for trace logging; usage beyond the free tier adds cost.

ZenML ships its own observability stack. The Dashboard shows live runs, step logs, and artifacts in one place without extra fees. You can still keep LangSmith if you want. Import the langsmith SDK in a ZenML step, and the pipeline records traces like any other artifact; the ZenML blog includes a working example.

📚 Read more about it here - LLM Evaluation & Prompt Tracking Showdown

ZenML also integrates with popular experiment tracking and monitoring tools if needed, with native integrations for Weights & Biases, MLflow, Neptune, whylogs, and others. So if you want advanced experiment analytics or data drift monitoring, you can plug those in, often with just a few lines of code or configuration.

LangGraph doesn’t offer such integrations; you’d have to write code in your agent’s nodes to send data to those systems yourself.

What’s more, ZenML offers additional features enhancing its value as an MLOps framework and making it an attractive alternative for agentic workflows:

- End-to-end LLMOps Toolkit: Ready-made toolkit with pre-built steps, templates, and guides for RAG pipelines, evaluation, reranking, finetuning, and more.

- Scheduling and Triggers: Robust scheduling and triggers for pipelines automate ML workflows based on time or events, ensuring up-to-date models and efficient applications.

- Smart Caching: Automatically caches pipeline step outputs, preventing redundant computations. Speeds up development iterations and reduces compute costs, especially during experimentation.

Common Questions About LangGraph Pricing

Is LangGraph's pricing competitive with Microsoft and Google's agent frameworks? Microsoft's Azure AI Foundry and Google's ADK are bundled into existing cloud service contracts, making direct price comparison difficult. LangGraph's transparent usage-based model ($0.001 per node execution) can be more cost-effective for smaller workloads, but enterprise teams with existing Azure or GCP commitments may find better value in their platform-native solutions. The key differentiator is deployment flexibility: LangGraph's open-source core allows running agents anywhere, while Microsoft's and Google's frameworks are optimized for their respective clouds.

What changed with LangGraph 1.0 in October 2025? LangGraph 1.0 represents the first stable major release in the agent orchestration space, with a commitment to no breaking changes until version 2.0. The release included node-level caching to reduce redundant computation, deferred node execution for complex workflows, Model Context Protocol (MCP) endpoint support, and significant performance optimizations. Additionally, LangGraph Platform was rebranded to "LangSmith Deployment" to better reflect its integration with the broader LangSmith ecosystem.

How does LangGraph pricing compare to lightweight alternatives like Agno or CrewAI? Both Agno and CrewAI offer open-source frameworks with no usage fees, similar to LangGraph's open-source library. The pricing difference emerges when you need managed infrastructure. CrewAI requires you to handle your own deployment and scaling infrastructure entirely. Agno emphasizes extreme efficiency (it claims 50x less memory usage than LangGraph), potentially reducing your compute costs if you self-host. LangGraph's Plus and Enterprise tiers charge for the convenience of managed infrastructure, observability, and production features: you're paying to avoid building that operational layer yourself.

Are there hidden costs beyond the $0.001 per node execution fee? Yes, several. First, LangGraph Plus requires a LangSmith Plus subscription at $39 per user per month, even if you only use LangGraph. Second, production deployments incur standby time charges ($0.0036 per minute) while your agent is live but idle. Third, trace storage beyond the included limits costs $0.50 per 1K base traces or $4.50 per 1K extended-retention traces. For high-availability production systems running continuously, these charges can significantly exceed the per-node execution costs, especially for agents with variable workload patterns.
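To see why continuously running production deployments can cost more in standby than in executions, consider one production deployment kept up for a 30-day month, assuming (as an upper bound) that every minute is billed at the $0.0036 standby rate:

```latex
\begin{aligned}
\text{minutes per month} &= 30 \times 24 \times 60 = 43{,}200 \\
\text{standby} &= 43{,}200 \times \$0.0036 = \$155.52 \\
\text{break-even volume} &= \$155.52 \div \$0.001 = 155{,}520 \text{ nodes}
\end{aligned}
```

An agent that executes fewer than about 155k nodes a month would pay more for standby than for node executions, before any seat fees.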

Is LangGraph free to use if I self-host the open-source version? Yes, the LangGraph open-source library is MIT-licensed and completely free to use, modify, and deploy. You'll need to provide your own infrastructure (servers, databases, monitoring), implement your own scheduling and scaling, and handle persistence and memory management yourself. The Developer plan offers a middle ground with 100,000 free node executions per month and basic managed features, making it viable for personal projects or early-stage startups before you need production-scale infrastructure.

Book a Personalized Demo with ZenML to Create AI Agentic Workflows

LangGraph offers powerful capabilities for building stateful AI agents, but its pricing model might not be perfect for all team sizes. The combination of seat-based licensing through LangSmith and usage-based infrastructure charges can quickly escalate costs, especially for growing teams or high-volume deployments.

While LangGraph excels at complex agent orchestration, teams should carefully evaluate whether they need its specialized features or if a more general-purpose MLOps framework like ZenML could meet their needs at a lower cost.

The good news is that you can still use LangGraph inside ZenML.

You can pip-install the open-source langgraph package inside a ZenML step, build an agent graph exactly as usual, then let ZenML orchestrate the run on any stack: local, Kubernetes, Airflow, AWS, GCP, or a locked-down VPC.

This lets you keep LangGraph’s node-based interface while gaining ZenML’s scheduling, caching, artifact tracking, and BYOC deployment freedom.

Real-world teams already follow this path:

- Chaos Labs: its Edge AI Oracle used LangGraph for the multi-agent logic and ZenML for the surrounding LLMOps workflow.

- Jockey: this conversational video agent combined LangGraph graphs with ZenML infrastructure to scale video understanding pipelines.

So if you like LangGraph’s abstractions but want a portable, production-ready backbone, just run the OSS framework inside ZenML and deploy anywhere.

If you're looking to build agentic workflows without the complexity and cost of LangGraph, schedule a demo with ZenML. Our team can show you how to create sophisticated ML pipelines, including agent-based systems, while maintaining full control over your infrastructure and costs.

📚 Relevant helpful articles: