Integrate your Haystack RAG pipelines with ZenML

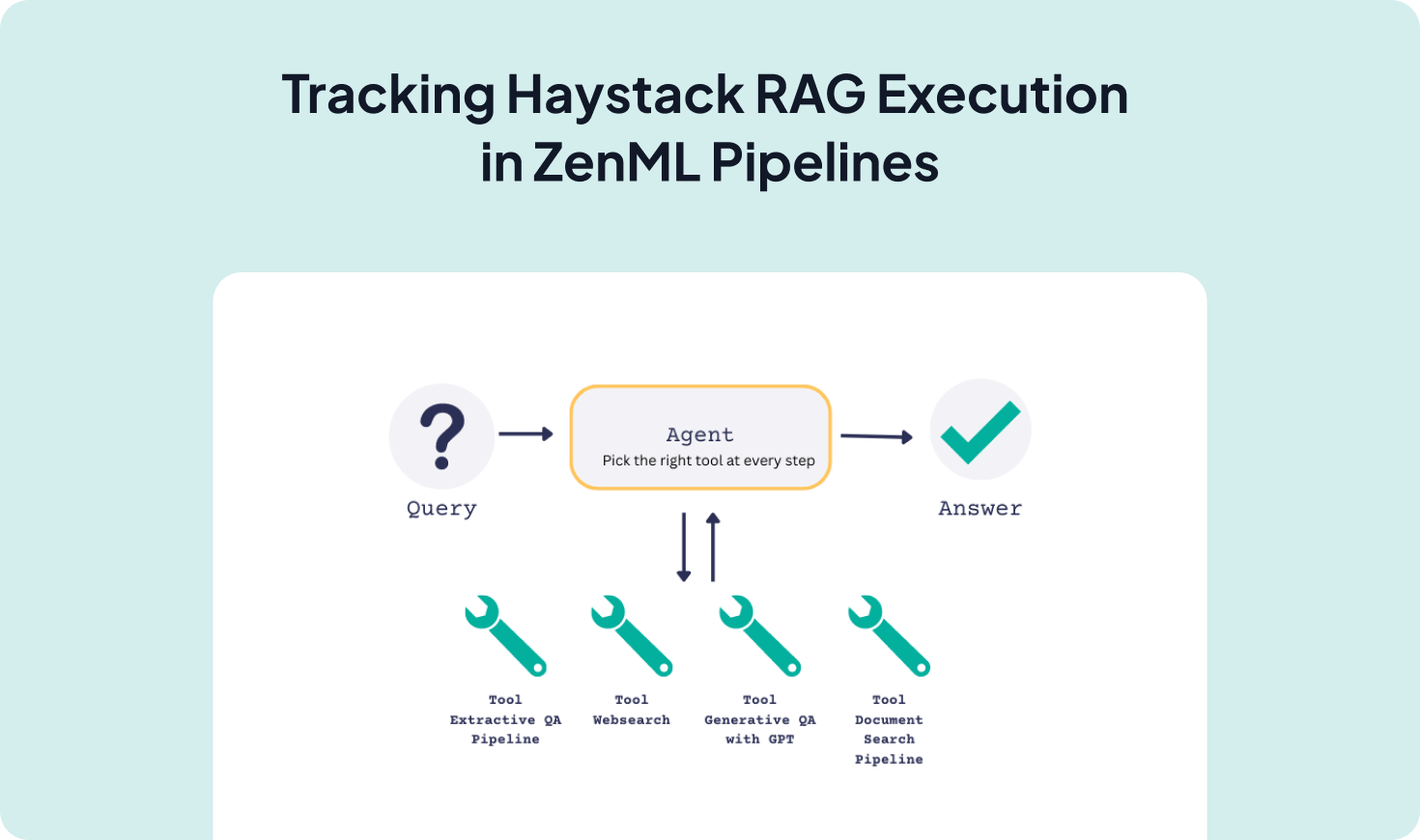

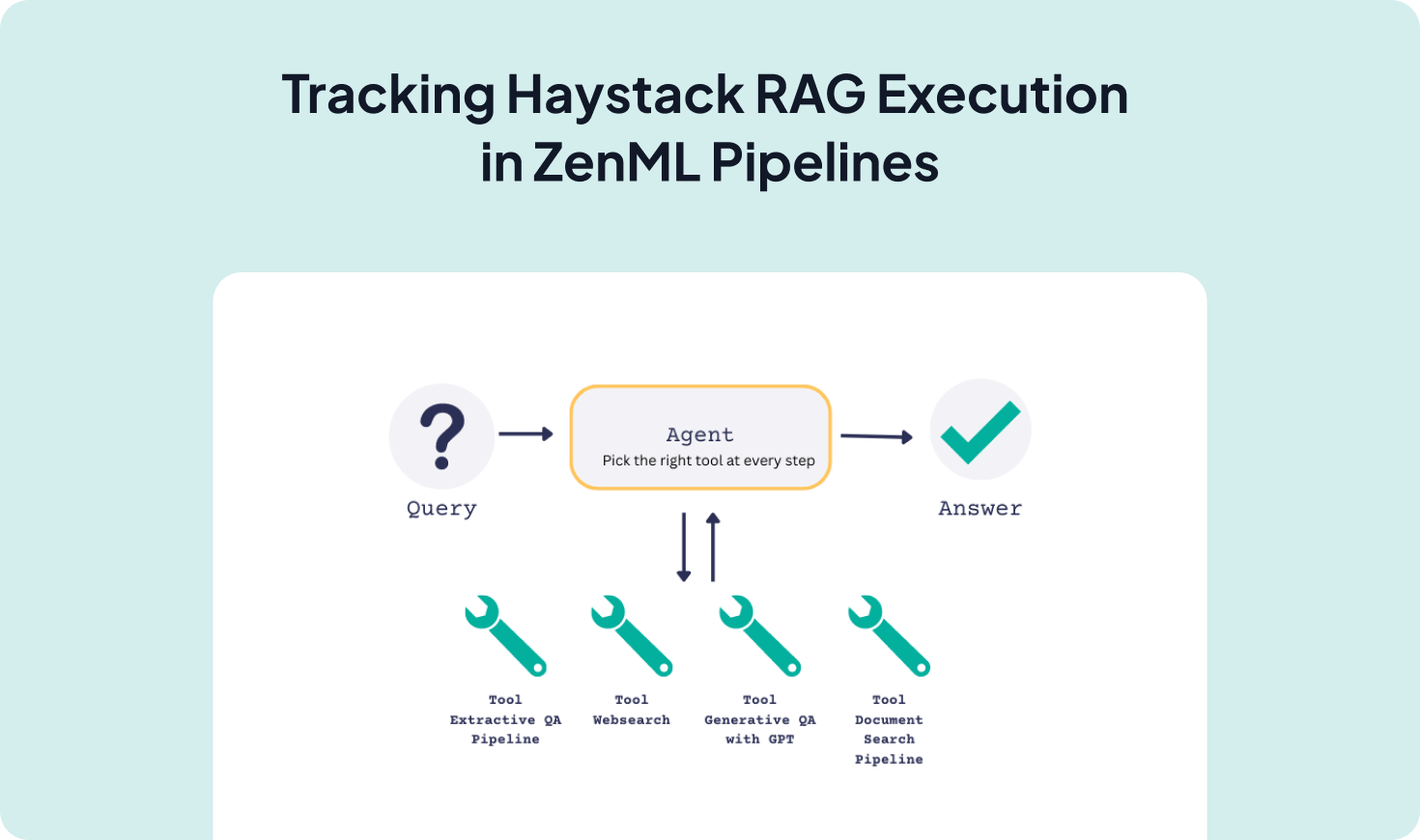

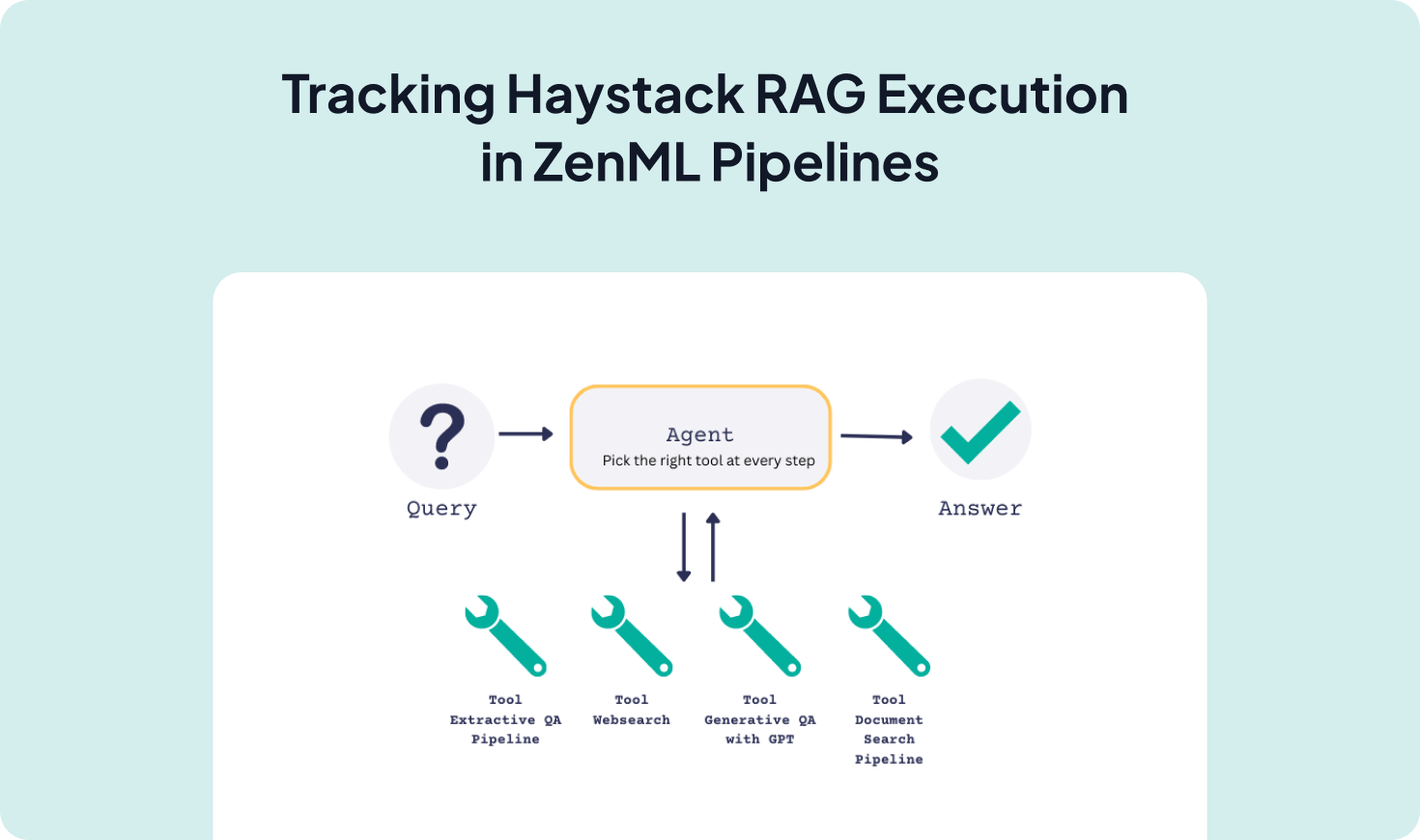

Haystack provides a component-based framework for Retrieval-Augmented Generation (RAG). Integrating Haystack with ZenML wraps your retrievers, prompt builders, and LLM calls in reproducible pipelines with artifact tracking, observability, and a clean path from local experiments to production.

Features with ZenML

- End-to-end RAG orchestration. Run complete Haystack retrieval and generation pipelines inside ZenML.

- Unified artifact tracking. Automatically log queries, retrieved documents, and answers as ZenML artifacts.

- Custom evaluation workflows. Add eval steps to measure answer quality, latency, or retrieval accuracy.

- Flexible retriever integration. Swap in-memory retrievers for vector databases or cloud backends within the same pipeline.

- Production-ready pipelines. Scale Haystack RAG from local dev to production orchestrators like Kubernetes or Airflow with no code changes.
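The custom evaluation bullet above can be made concrete with a small, framework-free sketch. The helper below times a question-answering callable and checks whether an expected source document was retrieved. `EvalCase`, `evaluate_rag`, `fake_answer_fn`, and the `answer_fn` contract are hypothetical names chosen for illustration; they are not part of ZenML or Haystack. In a real pipeline, a function like this would likely form the body of a ZenML `@step`.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class EvalCase:
    question: str
    expected_doc_id: str  # ID of the document the retriever should surface


# Hypothetical contract: answer_fn returns (answer_text, retrieved_doc_ids).
AnswerFn = Callable[[str], Tuple[str, List[str]]]


def evaluate_rag(answer_fn: AnswerFn, cases: List[EvalCase]) -> Dict[str, float]:
    """Return the retrieval hit rate and mean latency over the given cases."""
    if not cases:
        return {"hit_rate": 0.0, "mean_latency_s": 0.0}
    hits = 0
    total_latency = 0.0
    for case in cases:
        start = time.perf_counter()
        _answer, doc_ids = answer_fn(case.question)
        total_latency += time.perf_counter() - start
        if case.expected_doc_id in doc_ids:
            hits += 1
    return {
        "hit_rate": hits / len(cases),
        "mean_latency_s": total_latency / len(cases),
    }


def fake_answer_fn(question: str) -> Tuple[str, List[str]]:
    # Stand-in for a real RAG call so the sketch runs without an LLM.
    if "Eiffel" in question:
        return "Paris", ["doc-paris"]
    return "unknown", []
```

Returning a flat dictionary of floats keeps the metrics easy to compare across runs, whichever tracking mechanism ends up storing them.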

Main Features

- Retrieval-Augmented Generation. Build RAG flows with retriever, prompt builder, and LLM.

- Component architecture. Swap or configure components without changing your pipeline shape.

- In-memory store. Use a simple in-memory document store and retriever for quick starts.
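To illustrate what swapping components without changing the pipeline shape means, here is a plain-Python sketch. It deliberately does not use Haystack's API: `Retriever`, `KeywordRetriever`, `StaticRetriever`, and `build_prompt` are hypothetical names, and the word-overlap scorer is a naive stand-in for a real in-memory or vector retriever.

```python
from typing import List, Protocol


class Retriever(Protocol):
    """Anything with a matching retrieve() method can be plugged in."""

    def retrieve(self, query: str, top_k: int = 2) -> List[str]: ...


class KeywordRetriever:
    """Naive in-memory retriever: ranks documents by shared lowercase words."""

    def __init__(self, documents: List[str]) -> None:
        self.documents = documents

    def retrieve(self, query: str, top_k: int = 2) -> List[str]:
        query_words = set(query.lower().split())
        scored = [
            (len(query_words & set(doc.lower().split())), doc)
            for doc in self.documents
        ]
        # Keep only documents with at least one overlapping word, best first.
        ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: -s[0])
        return [doc for _, doc in ranked[:top_k]]


class StaticRetriever:
    """Stand-in for a remote backend; always returns the same documents."""

    def __init__(self, documents: List[str]) -> None:
        self.documents = documents

    def retrieve(self, query: str, top_k: int = 2) -> List[str]:
        return self.documents[:top_k]


def build_prompt(retriever: Retriever, question: str) -> str:
    # The prompt-building code never changes when the retriever is swapped.
    context = "\n".join(retriever.retrieve(question))
    return f"Context:\n{context}\n\nQuestion: {question}"
```

Because `build_prompt` depends only on the `retrieve` method, either retriever can be passed in without touching the rest of the flow, which is the property the component architecture bullet describes.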

How to use ZenML with Haystack

    from zenml import ExternalArtifact, pipeline, step

    # Assumes a local haystack_agent.py module that exposes a configured
    # Haystack Pipeline object named `pipeline`.
    from haystack_agent import pipeline as haystack_pipeline


    @step
    def run_haystack(question: str) -> str:
        # Execute the Haystack pipeline and extract the first LLM reply
        result = haystack_pipeline.run(
            {
                "retriever": {"query": question},
                "prompt_builder": {"question": question},
            },
            include_outputs_from={"llm"},
        )
        replies = result.get("llm", {}).get("replies", [])
        return replies[0] if replies else "No response generated"


    @pipeline
    def haystack_rag_pipeline() -> str:
        q = ExternalArtifact(value="What city is home to the Eiffel Tower?")
        return run_haystack(q.value)


    if __name__ == "__main__":
        print(haystack_rag_pipeline())
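The reply-extraction logic in `run_haystack` can be checked without calling an LLM. The sketch below factors it into a helper, here called `first_reply` (a hypothetical name, as is the `FALLBACK` constant), and is meant to be fed hand-written dictionaries shaped like the `{"llm": {"replies": [...]}}` result that the step reads.

```python
from typing import Any, Dict

FALLBACK = "No response generated"


def first_reply(result: Dict[str, Any], component: str = "llm") -> str:
    """Return the first reply of `component`, or a fallback string if none exist."""
    replies = result.get(component, {}).get("replies", [])
    return replies[0] if replies else FALLBACK
```

For example, `first_reply({"llm": {"replies": ["Paris"]}})` returns `"Paris"`, while an empty result dictionary yields the fallback string. Keeping this logic in a plain function makes the fallback path testable in isolation from the pipeline run.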

Additional Resources

ZenML Agent Framework Integrations (GitHub)

ZenML Documentation

Haystack Documentation