LlamaIndex has established itself as a powerful framework for building AI agents that understand and process your data. Its ability to create context-augmented research assistants and handle complex document processing tasks has made it popular among developers.

However, teams expanding into complex, multi-agent workflows often find LlamaIndex’s scope too narrow. In production use, it excels at indexing and retrieval, but brings only basic support for orchestrating agents or running pipelines.

LlamaIndex has other drawbacks too, covered in detail below, which is why you might consider switching to a LlamaIndex alternative.

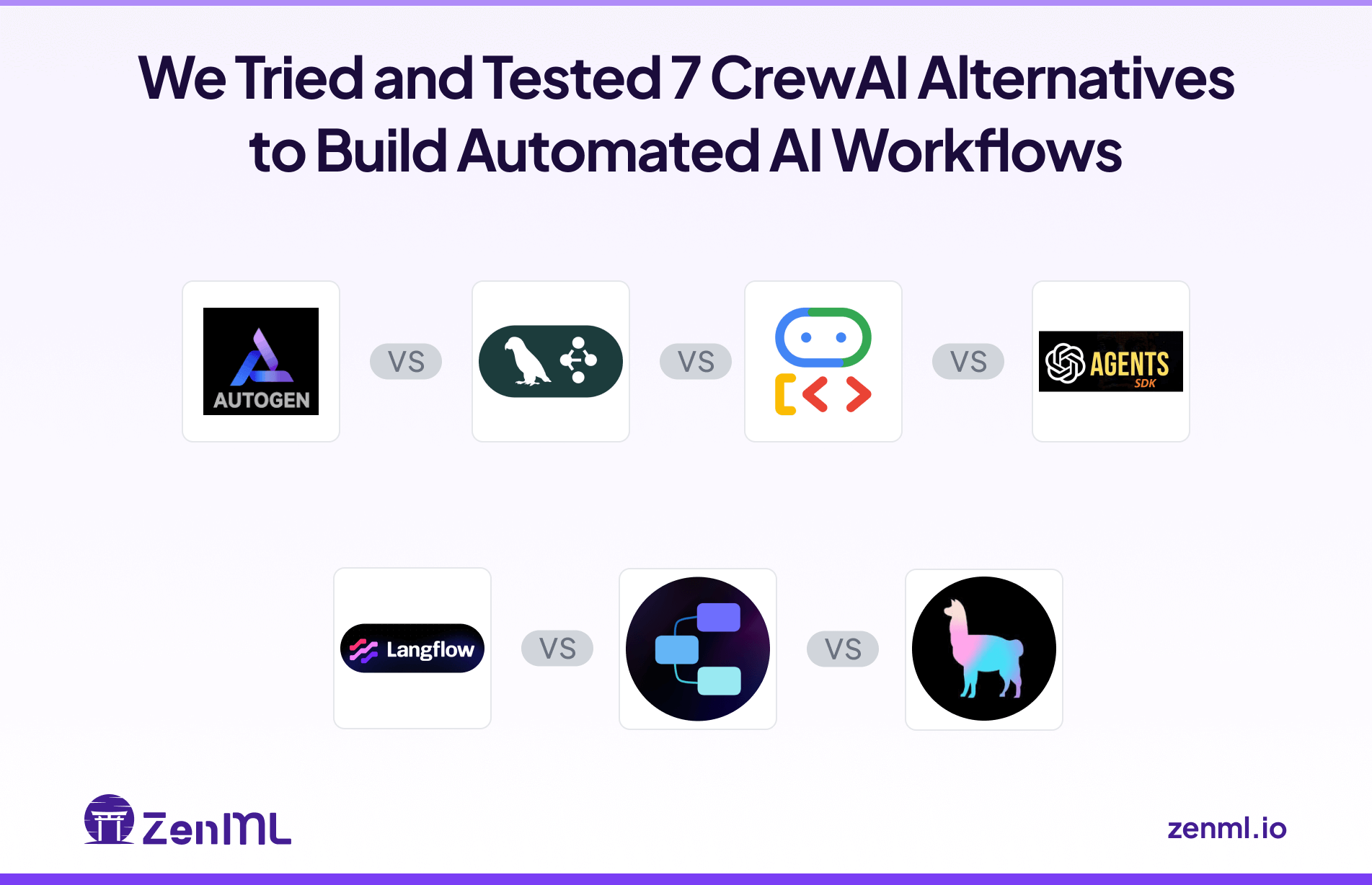

This article surveys the best LlamaIndex alternatives to build agentic AI systems. We cover why you might seek another tool, what evaluation criteria to use, and then dive into seven leading open-source and paid frameworks and platforms.

TL;DR

- Why Look for Alternatives: LlamaIndex shines at RAG, but it’s not designed as a full-fledged agent or workflow engine. It lacks built-in orchestration for complex multi-step or multi-agent pipelines and can be hard to debug due to its heavy abstractions.

- Who Should Care: Machine learning engineers, MLOps teams, and AI product developers who need more than just index-and-retrieve. If you’re building multi-turn applications, chained prompts, agent teams, or integrated pipelines with monitoring, alternatives that provide end-to-end pipelines, agent frameworks, or stronger observability may serve you better.

- What to Expect: We evaluate each alternative on its workflow coverage (built-in agent concepts, pipelines, components), performance/scalability (support for distributed or event-driven execution), and cost (open-source vs hosted, usage fees).

Why Look for a LlamaIndex Alternative?

Although LlamaIndex makes knowledge retrieval easy, there are compelling reasons to consider alternatives when building complex AI agents or production systems.

Reason 1. LlamaIndex is Great at RAG but Thin Elsewhere

LlamaIndex is conceived as a knowledge assistant framework – its core focus is transforming unstructured data (documents, webpages, PDFs) into embeddings and fast indexes, then utilizing LLMs to query them. The emphasis is on indexing and retrieval.

However, for agent orchestration (having multiple steps, decision logic, or tool calls in sequence), LlamaIndex offers very little beyond basic workflows. While the new AgentWorkflow module introduces some planning and tools for agents, it remains largely centered on calling LLMs with tools as pure functions.

Reason 2. Over-Abstracted Design Makes Debugging Hard

LlamaIndex uses layers of abstraction, such as Pydantic data models and asyncio event loops, to define skills and workflows. This makes for a nice high-level API, but it can hide errors. Asynchronous execution and a strict type scheme can produce confusing validation errors or unresponsive agents.

In simple terms, if an agent step fails or hangs, it’s hard to trace the reason behind it. Debugging often means delving into LlamaIndex internals or adding verbose logging.

Some alternative frameworks opt for more explicit graphs or logs of every step, which can make diagnosing issues more straightforward.
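To make the "explicit logs of every step" idea concrete, here is a minimal sketch that uses only Python's standard library. It is not LlamaIndex code or any framework's API: `run_step`, `retrieve`, and `hang_forever` are hypothetical names invented for illustration. The sketch wraps each async step in a timeout and logs its start, its end, and any failure, so a hung step surfaces as a named error instead of a silent stall.

```python
import asyncio
import logging
import time

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("agent.trace")


async def run_step(name, coro_fn, payload, timeout_s=5.0):
    """Run one async step with a timeout and explicit start/end logging."""
    started = time.perf_counter()
    log.info("step=%s start payload=%r", name, payload)
    try:
        result = await asyncio.wait_for(coro_fn(payload), timeout=timeout_s)
    except asyncio.TimeoutError:
        log.error("step=%s timed out after %.1fs", name, timeout_s)
        raise
    except Exception:
        log.exception("step=%s failed", name)
        raise
    log.info("step=%s ok in %.3fs", name, time.perf_counter() - started)
    return result


# Hypothetical steps standing in for real LLM or tool calls.
async def retrieve(query):
    return {"query": query, "docs": ["doc-1", "doc-2"]}


async def hang_forever(_payload):
    await asyncio.sleep(3600)  # simulates a step that never returns


async def main():
    context = await run_step("retrieve", retrieve, "refund policy")
    try:
        await run_step("summarize", hang_forever, context, timeout_s=0.1)
    except asyncio.TimeoutError:
        return "summarize step hung"
    return "ok"


outcome = asyncio.run(main())
print(outcome)
```

The log output names the step that stalled, which is the kind of visibility the frameworks below aim to provide out of the box.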

Reason 3. Licensing and Compliance Headaches

LlamaIndex’s core library is Apache-2.0, a permissive open-source license. But some of its integration modules, especially around specialized data readers, are GPL-3.0.

For example, the llama-index-readers-pdf-marker plugin is explicitly GPL-3.0 licensed. This mix can trigger compliance concerns for your company if you redistribute the software or embed it in proprietary products.

In contrast, many alternatives use permissive licenses throughout (e.g., MIT or Apache), making them safer bets for corporate use. Even if licensing isn’t a showstopper, having clear, uniform licensing simplifies audits and legal reviews. Thus, if open-source licensing is a factor, you may lean toward tools with consistently permissive licenses.

Evaluation Criteria

Not all agent frameworks are built the same. Here are the key criteria to consider as you evaluate LlamaIndex alternatives:

1. Workflow and Feature Coverage

Look at how end-to-end the framework is. Does it just perform data retrieval, or does it also provide agents and pipelines? Key questions to ask:

- Does it offer built-in agent abstractions? Example: planners, dialogs, chain-of-thought, and tool execution.

- Can you define multi-agent workflows like pipelines or graphs of multiple LLM calls?

- Does it support features like memory, human-in-the-loop steps, or approval processes?

- Are there ready-made components? Retrievers, embedders, tools, or UI helpers like dashboards and log viewers.

- Is the architecture modular, allowing you to plug in your own LLMs and databases, or is it tightly coupled to specific systems?

Ideally, alternatives should at least match LlamaIndex’s feature set (RAG plus basic agent tools) and go beyond with orchestration, monitoring, and end-to-end pipelines.

2. Performance and Scalability

AI agents, particularly those interacting with users or critical business processes, must be responsive and capable of handling varying loads without performance degradation.

Performance considerations include response time or latency for real-time applications, and assessing if performance degrades when processing huge volumes of data or multiple concurrent requests.

Scalability involves considering how the framework scales as the business grows, including the management of computational resources.

3. Cost Efficiency

Finally, consider cost, both financial and developmental. Many alternatives are fully open-source, whereas some also offer commercial services. When selecting an alternative, decide if you need:

- Open-source or a hosted platform.

- A framework that charges based on your usage.

- A platform that offers Enterprise plans to cover everything from the number of users to security.

What are the Best LlamaIndex Alternatives to Choose From?

Here are the top LlamaIndex alternatives, compared one by one:

1. LangGraph

LangGraph is a framework for building stateful, multi-agent applications with Large Language Models. Developed by LangChain, it provides developers with precise control over agent workflows through graph-based orchestration.

Features

- Supports single-agent, multi-agent, hierarchical, and sequential workflows. You can create graphs of agents that react to conditions or run in parallel.

- Has human-in-the-loop and moderation capabilities. Built-in components let you pause an agent to get human feedback or reroute the flow based on quality checks.

- Provides token-by-token streaming for agents’ responses, so downstream steps can act on partial results.

- Has first-class memory objects to store long-term context, making multi-turn conversations stateful across agents.

Pros and Cons

LangGraph’s strength is explicit workflow transparency. By defining a clear graph of nodes and edges, you get a single ‘map’ of the entire agent pipeline, which makes understanding and sharing complex logic easier. This structure also aids debugging: you can examine each node’s inputs and outputs in order.
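The node-and-edge idea can be sketched in plain Python. This is an illustration of the concept only, not LangGraph's actual API: the `Graph` class, its methods, and the `draft`/`review` nodes are hypothetical names invented for this example. Each node is a function from state to state, edges decide which node runs next, and the runner records every node's input and output so the run can be inspected in order.

```python
from typing import Any, Callable, Dict, List, Tuple

State = Dict[str, Any]
END = "__end__"


class Graph:
    """Toy graph runner: nodes transform state, routers pick the next node."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Callable[[State], State]] = {}
        self.routers: Dict[str, Callable[[State], str]] = {}

    def add_node(self, name: str, fn: Callable[[State], State]) -> None:
        self.nodes[name] = fn

    def add_edge(self, src: str, dst: str) -> None:
        self.routers[src] = lambda _state: dst

    def add_conditional_edge(self, src: str, router: Callable[[State], str]) -> None:
        self.routers[src] = router

    def run(self, start: str, state: State, max_steps: int = 20):
        trace: List[Tuple[str, State, State]] = []
        current, steps = start, 0
        while current != END:
            if steps >= max_steps:
                raise RuntimeError("max_steps exceeded; possible cycle")
            before = dict(state)
            state = self.nodes[current](state)
            trace.append((current, before, dict(state)))  # node, input, output
            current = self.routers[current](state)
            steps += 1
        return state, trace


def draft(state: State) -> State:
    attempt = state.get("attempts", 0) + 1
    return {**state, "attempts": attempt, "text": f"draft v{attempt}"}


def review(state: State) -> State:
    # Stand-in quality check: approve from the second attempt onward.
    return {**state, "approved": state["attempts"] >= 2}


graph = Graph()
graph.add_node("draft", draft)
graph.add_node("review", review)
graph.add_edge("draft", "review")
graph.add_conditional_edge("review", lambda s: END if s["approved"] else "draft")

final_state, trace = graph.run("draft", {})
for name, before, after in trace:
    print(name, before, "->", after)
```

Because the trace holds every node's input and output, a failed run can be read step by step without digging through framework internals.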

On the flip side, LangGraph’s richness comes with complexity. There is boilerplate in defining graph structures and agents; you need to ‘fully buy in’ to its architecture.

Pricing

LangGraph comes with an open-source plan that’s free to use. If you install the LangGraph Python or JS package, you get the MIT-licensed code to design agents with no licensing cost or usage fees. This open-source plan has a limit of executing 10,000 nodes per month.

Apart from the free plan, LangGraph offers three paid plans to choose from:

- Developer: Includes up to 100K nodes executed per month

- Plus: $0.001 per node executed + standby charges

- Enterprise: Custom-built plan tailored to your business needs

2. Haystack (by deepset)

Haystack is an open-source framework by deepset for building production-ready LLM applications. It focuses on modular pipeline construction, making it easy to combine different components for search, question answering, and agent workflows.

Features

- Helps you build composable pipelines. Mix and match built-in components like dense/sparse retrievers, LLM generators, rankers, etc., to handle retrieval, question answering, RAG, PDF parsing, and more.

- You can insert conditional branches and loops into pipelines for advanced flows.

- Connect easily to many LLM providers, vector databases, knowledge graphs, and AI tools via its modular architecture.

- Has built-in tracing, logging, and evaluation (QA metrics) so you can monitor performance and find bottlenecks.
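The composable-pipeline idea with a conditional branch can be illustrated with a small standard-library sketch. It does not use Haystack and does not mirror its API: the `Pipeline` class, the component functions, and the toy corpus are all hypothetical and exist only to show how a retriever, ranker, and generator might be chained, with a fallback branch when nothing is retrieved.

```python
from typing import Any, Callable, Dict, List, Optional, Tuple

Data = Dict[str, Any]
Component = Callable[[Data], Data]
Condition = Callable[[Data], bool]

# Toy corpus, invented for this example.
DOCS = [
    "LlamaIndex focuses on retrieval.",
    "Haystack builds pipelines.",
    "Pipelines can branch and loop.",
]


def _words(text: str) -> set:
    return set(text.lower().replace(".", "").split())


def keyword_retriever(data: Data) -> Data:
    terms = _words(data["query"])
    return {**data, "documents": [d for d in DOCS if terms & _words(d)]}


def overlap_ranker(data: Data) -> Data:
    terms = _words(data["query"])
    ranked = sorted(data["documents"], key=lambda d: len(terms & _words(d)), reverse=True)
    return {**data, "documents": ranked}


def stub_generator(data: Data) -> Data:
    # Stand-in for an LLM call: echo the top-ranked document.
    return {**data, "answer": f"Based on: {data['documents'][0]}"}


def fallback(data: Data) -> Data:
    return {**data, "answer": "No relevant documents found."}


class Pipeline:
    """Runs components in order; a step is skipped when its condition is false."""

    def __init__(self) -> None:
        self.steps: List[Tuple[str, Component, Optional[Condition]]] = []

    def add(self, name: str, component: Component, when: Optional[Condition] = None) -> "Pipeline":
        self.steps.append((name, component, when))
        return self

    def run(self, data: Data) -> Tuple[Data, List[str]]:
        trace: List[str] = []
        for name, component, when in self.steps:
            if when is None or when(data):
                data = component(data)
                trace.append(name)  # record which components actually ran
        return data, trace


def has_docs(data: Data) -> bool:
    return bool(data["documents"])


pipeline = (
    Pipeline()
    .add("retriever", keyword_retriever)
    .add("ranker", overlap_ranker, when=has_docs)
    .add("generator", stub_generator, when=has_docs)
    .add("fallback", fallback, when=lambda d: not d["documents"])
)

result, trace = pipeline.run({"query": "haystack pipelines"})
print(trace, result["answer"])
```

Swapping the keyword retriever for an embedding-based one would only change a single `add` call, which is the practical benefit of keeping components behind one shared interface.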

Pros and Cons

Haystack’s biggest pro is its flexibility, backed by solid engineering. It truly offers everything from data ingestion to deployment hooks. Also, you get end-to-end control: plug in any model or database, branch logic as you like, and use the observability toolkit.

However, because Haystack tries to be a universal framework, you might end up writing more code/configuration compared to purpose-built agent libraries.

Pricing

Haystack itself is completely free and open-source under Apache-2.0. But it also has commercial plans. Deepset offers a managed platform for developing and deploying LLM applications and agents.

- Studio: This is a free plan for individuals prototyping, offering 1 workspace, 1 user, 100 pipeline hours, and community support.

- Enterprise: This is a custom-priced plan for teams building production-grade AI applications, providing unlimited workspaces, users, pipeline hours, and dedicated support, with cloud or custom deployment options.

3. CrewAI

CrewAI is a framework for orchestrating collaborative AI agents that work together like human teams. It emphasizes role-based agent design where each agent has specific responsibilities and expertise.

Features

- Offers role-based agents where you can define each agent’s persona, role, goal, and expertise via YAML or code. These agents have fixed responsibilities.

- Comes with several common tools like web search, code execution, scraping, and more, and lets you add custom ones by writing Python functions.

- Executes agents in a deterministic sequence; after one finishes, the next takes over, ensuring clear task handoff.

- Has a mechanism to record each agent step; you can replay with modifications to debug mistakes or tweak behavior.
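The sequential handoff and record-and-replay behavior described above can be sketched in a few lines of standard-library Python. This is a conceptual illustration, not CrewAI's API: `Agent`, `StepRecord`, and `run_crew` are hypothetical names, and the `act` lambdas stand in for real LLM calls.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Agent:
    role: str
    goal: str
    act: Callable[[str], str]  # hypothetical stand-in for an LLM call


@dataclass
class StepRecord:
    role: str
    input: str
    output: str


def run_crew(
    agents: List[Agent],
    task: str,
    start_at: int = 0,
    seed_input: Optional[str] = None,
) -> List[StepRecord]:
    """Run agents strictly in order; each agent's output is the next one's input."""
    records: List[StepRecord] = []
    current = task if seed_input is None else seed_input
    for agent in agents[start_at:]:
        output = agent.act(current)
        records.append(StepRecord(agent.role, current, output))
        current = output
    return records


crew = [
    Agent("researcher", "collect notes", lambda topic: f"notes on {topic}"),
    Agent("writer", "draft an article", lambda notes: f"article from [{notes}]"),
    Agent("editor", "polish the draft", lambda draft: draft.upper()),
]

first_run = run_crew(crew, "vector databases")

# Replay from the writer onward with a manually edited researcher output.
edited_notes = "notes on graph databases"
replay = run_crew(crew, "vector databases", start_at=1, seed_input=edited_notes)

print(first_run[-1].output)
print(replay[-1].output)
```

Because every step is stored with its input and output, a replay can restart from any agent without rerunning the earlier ones.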

Pros and Cons

CrewAI’s strength is clarity and ease of use for multi-step tasks. By enforcing a turn-taking structure, it makes flows predictable and the agent's responsibilities clear. This reduces the chance of agents ‘talking over each other’ or running off the rails. The use of YAML configs also means non-developers can set up agents without deep coding.

On the downside, this structure is rigid. CrewAI is optimized for workflows where tasks naturally form a sequence. It’s less suited for freeform ‘all-agents-chat’ scenarios.

Pricing

CrewAI’s core framework is also MIT-licensed and open-source. But the platform offers several paid plans to choose from:

- Basic: $99 per month

- Standard: $6,000 per year

- Pro: $12,000 per year

- Enterprise: $60,000 per year

- Ultra: $120,000 per year

👀 Note: To see CrewAI’s pricing plans, you must sign up for its free plan.

4. Microsoft AutoGen

AutoGen is Microsoft's open-source framework for building LLM applications through multi-agent conversations. It enables agents to collaborate autonomously or with human oversight to accomplish complex tasks.

Features

- Agents in AutoGen communicate asynchronously via messages, either in event-driven loops or request/response. They form chat sessions where multiple agents can participate dynamically.

- Lets you include a human participant in the conversation (as a special agent). Instead of auto-replying, the framework will wait for actual human input, enabling review steps.

- The framework is modular: you can plug in custom agents, tools, memory stores, or models.

- AutoGen has built-in support for tracking and tracing interactions (including OpenTelemetry support), so you can log what messages went between agents.

- Current release supports Python and .NET agents, with interfaces enforcing type checks at compile time (improving reliability).

Pros and Cons

AutoGen’s flexibility is its main draw. It imposes very little structure beyond asynchronous chat, so it excels at open-ended, complex tasks where agents negotiate the solution among themselves. This can make prototyping easy: just tweak a system prompt and get going without writing new pipeline code. Another plus is Microsoft’s engineering: AutoGen v0.4 introduces a robust, event-driven architecture with improved observability and scalability in mind.

However, this freedom and flexibility have a price. Because control is handed over to the agents’ conversation, the workflow can become unpredictable or inefficient. There’s a risk of agents looping indefinitely or failing to converge on a solution.
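One common guard against endless agent loops is a hard cap on turns combined with an explicit stop signal. The sketch below shows that guard with plain asyncio. It is not AutoGen's API: `Message`, `run_chat`, and the `proposer` and `critic` agents are hypothetical, and the approval rule is invented so the example has a deterministic outcome.

```python
import asyncio
from dataclasses import dataclass
from typing import Awaitable, Callable, List, Tuple


@dataclass
class Message:
    sender: str
    content: str


AgentFn = Callable[[List[Message]], Awaitable[Message]]


async def proposer(history: List[Message]) -> Message:
    n = sum(1 for m in history if m.sender == "proposer") + 1
    return Message("proposer", f"proposal #{n}")


async def critic(history: List[Message]) -> Message:
    last = history[-1].content
    # Invented rule: the critic approves the third proposal.
    verdict = "APPROVE" if last.endswith("#3") else "revise"
    return Message("critic", f"{verdict}: {last}")


async def run_chat(
    agents: List[AgentFn],
    task: str,
    max_turns: int = 10,
    stop_word: str = "APPROVE",
) -> Tuple[List[Message], str]:
    """Round-robin chat that ends on a stop word or when max_turns is hit."""
    history = [Message("user", task)]
    for turn in range(max_turns):
        agent = agents[turn % len(agents)]
        reply = await agent(history)
        history.append(reply)
        if reply.content.startswith(stop_word):
            return history, "converged"
    return history, "max_turns_reached"


history, status = asyncio.run(run_chat([proposer, critic], "design a schema"))
print(status, len(history))
```

Returning the status alongside the history lets the caller tell a real agreement apart from a conversation that was simply cut off.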

Pricing

AutoGen is fully open-source (MIT license). All of the code is available on GitHub, and there are no usage fees.

5. Semantic Kernel Agent Framework

Semantic Kernel is Microsoft's SDK for integrating LLMs into applications, with a recently matured Agent Framework for building both single and multi-agent systems. It emphasizes enterprise readiness and seamless integration with existing business processes.

Features

- Offers multiple collaboration patterns like pipeline (sequential), concurrent (parallel), group chat, handoff, and Magentic (flexible, dynamic) orchestration models.

- Provides base classes for different agent kinds - ChatCompletionAgent, OpenAIAssistantAgent, AzureAIAgent, etc., making it easy to instantiate agents with various model backends.

- Built-in support for human-in-the-loop chats where you can interject or override agent turns.

- All orchestration patterns use a consistent async API. You can define an agent, create an AgentChat orchestration, and call InvokeAsync() regardless of the pattern.
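To show why a consistent async entry point matters, here is a standard-library sketch of two orchestration patterns that share one `invoke` method. It is not Semantic Kernel code: `SequentialOrchestration`, `ConcurrentOrchestration`, and the two toy agents are hypothetical names chosen for illustration.

```python
import asyncio
from typing import Awaitable, Callable, List

Agent = Callable[[str], Awaitable[str]]


async def summarizer(text: str) -> str:
    return f"summary({text})"


async def translator(text: str) -> str:
    return f"translation({text})"


class SequentialOrchestration:
    """Each agent receives the previous agent's output."""

    def __init__(self, agents: List[Agent]) -> None:
        self.agents = agents

    async def invoke(self, task: str) -> str:
        result = task
        for agent in self.agents:
            result = await agent(result)
        return result


class ConcurrentOrchestration:
    """Every agent receives the same task; results are joined in agent order."""

    def __init__(self, agents: List[Agent]) -> None:
        self.agents = agents

    async def invoke(self, task: str) -> str:
        results = await asyncio.gather(*(agent(task) for agent in self.agents))
        return " | ".join(results)


async def main() -> List[str]:
    agents = [summarizer, translator]
    outputs = []
    # The calling code is identical for both patterns.
    for orchestration in (SequentialOrchestration(agents), ConcurrentOrchestration(agents)):
        outputs.append(await orchestration.invoke("report"))
    return outputs


outputs = asyncio.run(main())
print(outputs)
```

Since the caller only depends on `invoke`, switching from a pipeline to a parallel fan-out is a one-line change.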

Pros and Cons

The advantage of this platform is high quality and integration: it feels consistent with other Microsoft AI tooling, especially if you already use Azure services. The predefined patterns cover the most common agent designs, from simple pipelines to multi-agent group chats. This means you don’t have to wire everything from scratch.

On the downside, it’s brand new (as of 2025), so the agent features may not be as battle-tested or feature-rich as more mature projects. Also, because SK started in .NET, the Python API is sometimes experimental. If your team is not familiar with C# or .NET, there might be a learning curve.

Pricing

Semantic Kernel itself is open-source and free (MIT license) – the SDK and Agent Framework have no license cost. It’s a developer kit. However, to use certain services, for example, Azure OpenAI models or Azure AI Search for retrieval, you will incur those cloud costs.

6. Weaviate

Weaviate is an open-source vector database that goes beyond simple similarity search to enable AI-native applications. While not exclusively an agent framework, it provides powerful primitives for building intelligent search and retrieval systems.

Features

- Weaviate stores both objects and vectors, functioning as a vector database crucial for applications that rely on understanding the semantic meaning of data. It supports embeddings, which are numerical representations of data capturing meaning and relationships.

- Has built-in modules for RAG queries: it can take your query, find top documents, and even call a generative model to produce an answer, all within a single query.

- Built-in ML model integrations include support for OpenAI, Cohere, and Hugging Face models. Vectorize data automatically without managing separate embedding pipelines.

- Comes with support for complex filters, backup options, and even vector index compression to optimize memory usage.
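What a filtered vector search does can be shown with a brute-force sketch in plain Python. It is not Weaviate's client API, and it scans every object, whereas a production vector database relies on an approximate index instead. The `OBJECTS` data, the `search` function, and the `where` parameter are hypothetical names made up for this example.

```python
import math
from typing import Any, Callable, Dict, List, Optional


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Toy objects with hand-written 3-dimensional vectors (invented data).
OBJECTS: List[Dict[str, Any]] = [
    {"id": "a", "lang": "en", "text": "refund policy", "vector": [1.0, 0.0, 0.0]},
    {"id": "b", "lang": "en", "text": "shipping times", "vector": [0.0, 1.0, 0.0]},
    {"id": "c", "lang": "de", "text": "Rueckgaberecht", "vector": [0.9, 0.1, 0.0]},
]


def search(
    query_vector: List[float],
    k: int = 2,
    where: Optional[Callable[[Dict[str, Any]], bool]] = None,
) -> List[Dict[str, Any]]:
    """Apply the metadata filter first, then rank the rest by cosine similarity."""
    candidates = [o for o in OBJECTS if where is None or where(o)]
    ranked = sorted(
        candidates,
        key=lambda o: cosine(query_vector, o["vector"]),
        reverse=True,
    )
    return ranked[:k]


unfiltered = search([1.0, 0.0, 0.0])
english_only = search([1.0, 0.0, 0.0], where=lambda o: o["lang"] == "en")
print([o["id"] for o in unfiltered], [o["id"] for o in english_only])
```

The filter changes which objects are eligible before ranking happens, which is why the English-only query returns a less similar object in second place.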

Pros and Cons

Weaviate’s main advantage is its mature, production-ready vector search engine. It offloads much of the indexing and retrieval work from your code, which can simplify building the retrieval steps of an agent. The built-in RAG and ML modules mean you often don’t have to write extra glue: for instance, you can ask Weaviate to ‘answer from docs’ in one call.

The flip side is that Weaviate is not itself an agent orchestrator. You’d still need to glue it to an agent framework if you want multi-step logic. In terms of complexity, operating a database adds overheads like setup and resource planning.

Pricing

Weaviate offers free access to learning and prototyping with a 14-day trial, after which you will have to choose from the multiple paid plans it offers:

- Serverless Cloud (SaaS): Starts from $25 per month, with storage costing $0.095 per 1 million vector dimensions stored per month (Standard SLA). Higher SLA tiers, Professional and Business Critical, are more expensive.

- Enterprise Cloud (Managed Dedicated Instances): Starts from $2.64 per AI Unit (AIU), with flexible storage tiers priced per GB per hour or GBvCPU per month. Contact sales for custom pricing.

- Bring Your Own Cloud (BYOC): Custom pricing for a fully-managed solution or 24/7 support within your Virtual Private Cloud (VPC). Contact sales.

- Weaviate Embeddings: Provides access to various embedding models hosted in Weaviate Cloud, priced per million tokens, for example, Snowflake arctic-embed-m-v1.5 at $0.025 per 1 million tokens.
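As a rough worked example of the Serverless Cloud storage figure listed above, the sketch below estimates a monthly bill from the object count and vector size. It rests on two assumptions that should be checked against the current price list: that cost scales linearly with stored dimensions, and that the $25 starting price acts as a monthly floor. The function name is hypothetical.

```python
PRICE_PER_MILLION_DIMS = 0.095  # USD per month, Standard SLA, figure quoted in this article
MONTHLY_MINIMUM = 25.0  # assumption: "starts from $25" treated as a floor


def estimate_monthly_storage_cost(num_objects: int, dims_per_vector: int) -> float:
    """Estimate monthly storage cost in USD under the assumptions stated above."""
    total_dims = num_objects * dims_per_vector
    usage_cost = total_dims / 1_000_000 * PRICE_PER_MILLION_DIMS
    return max(usage_cost, MONTHLY_MINIMUM)


# 1,000,000 objects x 768 dimensions = 768 million dimensions stored.
large = estimate_monthly_storage_cost(1_000_000, 768)
# 100,000 objects x 384 dimensions = 38.4 million dimensions stored.
small = estimate_monthly_storage_cost(100_000, 384)
print(round(large, 2), round(small, 2))
```

Under these assumptions the larger collection costs about $72.96 per month (768 x $0.095), while the smaller one falls below the floor and is billed at $25.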

7. Jina AI Flow

Jina AI Flow is a framework for building neural search systems and AI applications with a focus on multi-modal data processing. It provides a unique approach to orchestrating AI services through event-driven pipelines.

Features

- Flow-based orchestration connects Executors into processing pipelines. Each Executor handles a specific task, and Flows define how data moves between them.

- Cloud-native design with built-in support for containerization and distributed deployment. Export Flows as Docker Compose or Kubernetes configurations with one command.

- Multi-modal processing handles text, images, video, and audio within the same pipeline. Build agents that can understand and generate different types of content.

- Streaming capabilities support real-time data processing and response generation. Process large documents or media files without loading everything into memory.

- Hub ecosystem provides pre-built Executors for common tasks. Share and reuse components across projects through Jina Hub.
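The Executor-and-Flow idea, combined with streaming, can be approximated with Python generators. This sketch does not use Jina or its API: `chunker`, `normalizer`, and `flow` are hypothetical names. Each executor consumes a stream lazily and yields results one at a time, so a large input never needs to sit fully in memory.

```python
from typing import Callable, Iterable, Iterator, List

Executor = Callable[[Iterable[str]], Iterator[str]]


def chunker(docs: Iterable[str], size: int = 20) -> Iterator[str]:
    """Split each document into fixed-size chunks, yielding one at a time."""
    for doc in docs:
        for start in range(0, len(doc), size):
            yield doc[start:start + size]


def normalizer(chunks: Iterable[str]) -> Iterator[str]:
    """Lowercase each chunk and collapse runs of whitespace."""
    for chunk in chunks:
        yield " ".join(chunk.lower().split())


def flow(executors: List[Executor], source: Iterable[str]) -> Iterator[str]:
    """Chain executors so that data moves through them lazily."""
    stream: Iterable[str] = source
    for executor in executors:
        stream = executor(stream)
    return iter(stream)


documents = ["Hello   World", "A" * 45]
results = list(flow([chunker, normalizer], documents))
print(results)
```

Nothing is processed until the final stream is iterated, which is the property that makes this style suitable for large documents or media files.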

Pros and Cons

Jina's focus on neural search and multi-modal processing makes it powerful for specific use cases. The cloud-native design simplifies deployment and scaling in production environments. Its streaming capabilities excel at handling large-scale data processing tasks.

However, the framework's specialization in search means it lacks general-purpose agent features. Building conversational agents or complex reasoning systems requires significant custom development. The learning curve can be steep for teams unfamiliar with neural search concepts.

Pricing

Jina AI Flow, as part of the Jina framework, is open-source and free to use. The platform also offers Jina AI Reader API, a component for web scraping and content extraction, which is free with generous rate limits.

The Best LlamaIndex Alternatives to Build AI Production Agents with Ease

There's no single ‘best’ alternative to LlamaIndex; the right choice depends entirely on your specific use case and requirements. Here are three standout options, each excelling in different scenarios:

- LangGraph: Perfect for teams that need transparent, debuggable workflows with complex orchestration. Its graph-based approach makes it ideal for production systems that require explicit control over agent behavior and clear visibility into execution paths.

- CrewAI: Excellent for structured, role-based workflows where agents have defined responsibilities. Choose this when building applications that mirror human team dynamics with sequential task handoffs.

- Microsoft AutoGen: Best for open-ended, collaborative AI systems where agents need to negotiate solutions dynamically. Its flexible conversation framework suits research applications and complex problem-solving scenarios.

The path forward is clear: evaluate your specific needs, whether that’s orchestration transparency, structured workflows, or collaborative flexibility, then choose the framework that aligns with your production requirements. That’s how you decide which LlamaIndex alternative fits best.
