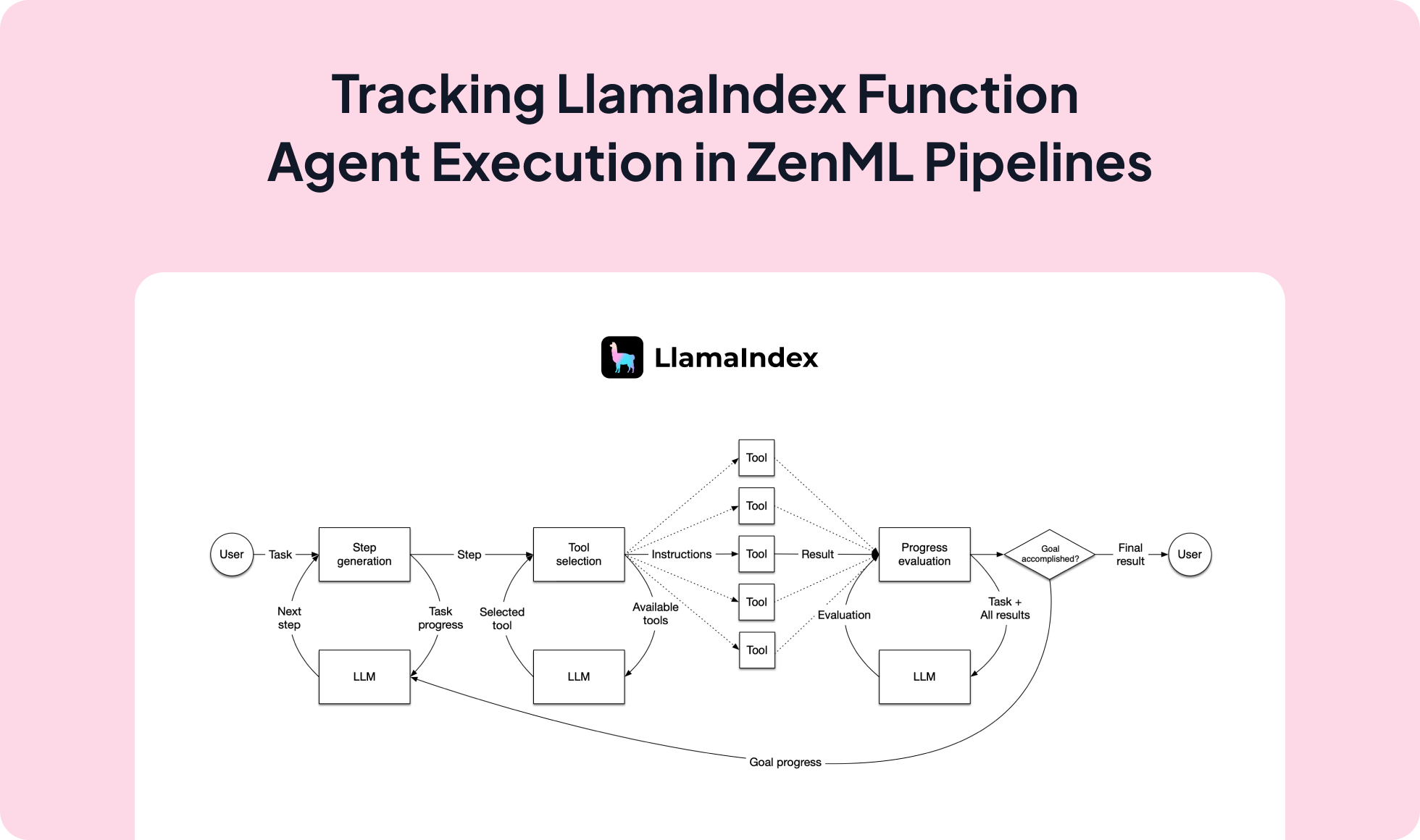

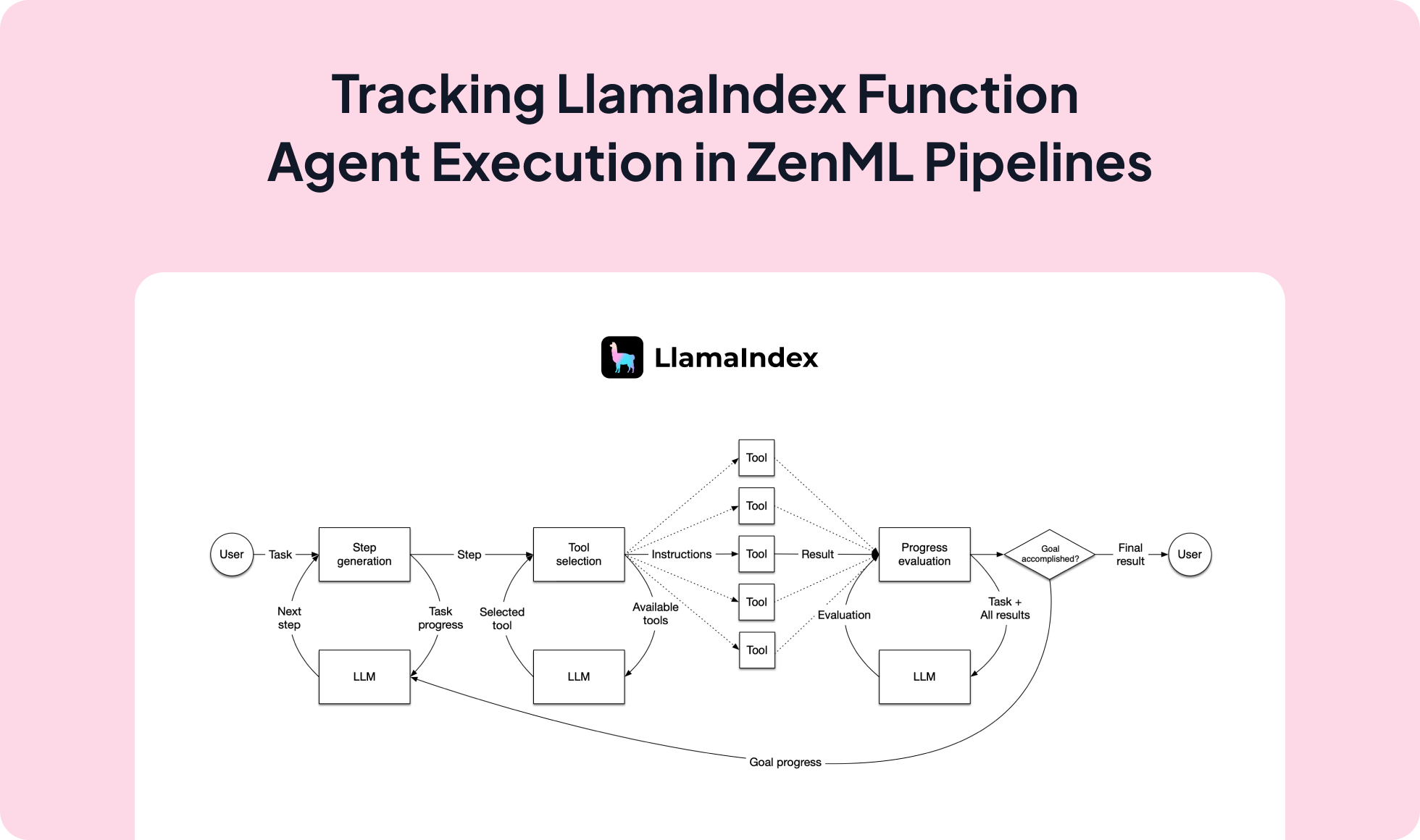

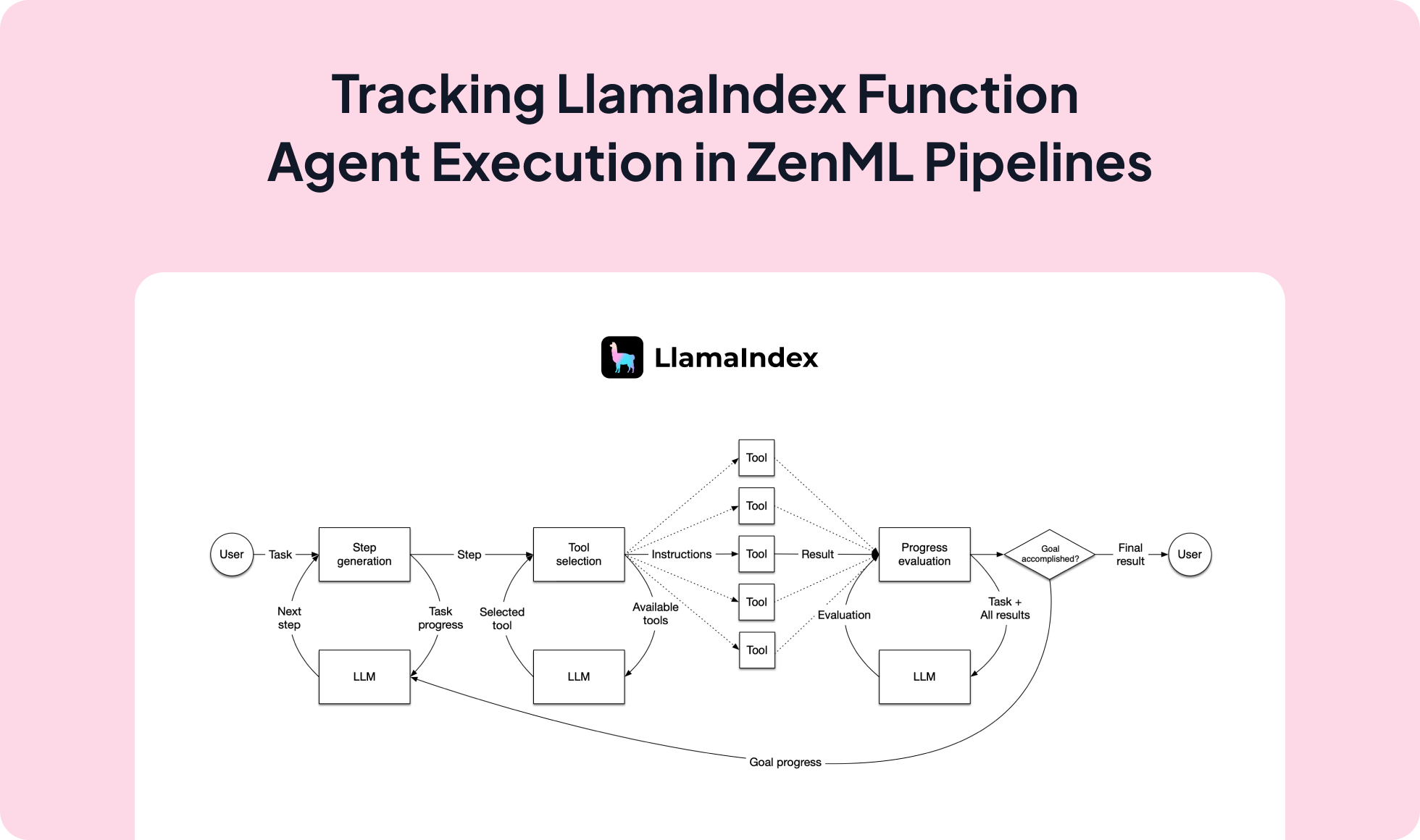

LlamaIndex Function Agent integrated with ZenML

LlamaIndex lets you build function agents that call multiple tools and often run asynchronously. Integrating it with ZenML runs those agents inside reproducible pipelines, adding artifact lineage, observability, and a clean path from local development to production.

Features with ZenML

- Async-friendly orchestration. Run async LlamaIndex function agents inside ZenML steps, awaiting them there without changing agent code.

- Tool call lineage. Track queries, intermediate tool outputs, and final responses as versioned artifacts.

- Composable pipelines. Chain agents with retrieval, evals, and deployment in one DAG.

- Evaluation ready. Add post-run checks to score response quality, latency, and tool accuracy.

- Portable execution. Move the same pipeline from local runs to Kubernetes or Airflow via ZenML stacks.
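The lineage and evaluation points above can be illustrated with a small, framework-free sketch. It assumes a run is captured as a plain record (query, tool calls, response, latency) and then checked after the fact. The names `ToolCall`, `AgentRunRecord`, and `evaluate_run` are hypothetical and not part of ZenML or LlamaIndex. In a pipeline, a record like this would be returned from a step so that it is stored as an artifact.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ToolCall:
    """One tool invocation made by the agent (hypothetical record shape)."""
    tool_name: str
    arguments: Dict[str, object]
    output: str


@dataclass
class AgentRunRecord:
    """Everything worth keeping from a single agent run."""
    query: str
    response: str
    latency_seconds: float
    tool_calls: List[ToolCall] = field(default_factory=list)


def evaluate_run(
    record: AgentRunRecord,
    expected_tools: List[str],
    max_latency_seconds: float = 10.0,
) -> Dict[str, bool]:
    """Post-run checks: non-empty answer, latency budget, expected tools used."""
    called = {call.tool_name for call in record.tool_calls}
    return {
        "has_response": bool(record.response.strip()),
        "within_latency": record.latency_seconds <= max_latency_seconds,
        "used_expected_tools": set(expected_tools) <= called,
    }
```

The checks here are deliberately simple booleans. Scoring response quality would need a real evaluator, which this sketch does not attempt.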

Main Features

- Function agents. Define agents that call Python tools to solve tasks.

- Async execution. Properly await agent.run(...) for non-blocking workflows.

- Multiple tools. Plug in weather, tip calculator, and custom utilities.
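As a rough illustration of the tools mentioned above, the following sketch defines a weather lookup and a tip calculator as plain Python functions. The function names, the canned weather data, and the `TOOLS` list are assumptions made for this example. The source does not show how its tools are implemented.

```python
def get_weather(city: str) -> str:
    """Return a canned weather report; a real tool would call a weather API."""
    canned = {"new york": "Sunny, 72F"}
    return canned.get(city.lower(), f"No weather data for {city}")


def calculate_tip(bill_amount: float, tip_percent: float) -> float:
    """Return the tip for a bill, rounded to cents."""
    if bill_amount < 0 or tip_percent < 0:
        raise ValueError("bill_amount and tip_percent must be non-negative")
    return round(bill_amount * tip_percent / 100, 2)


# Hypothetical tool list; an agent framework would receive callables like these.
TOOLS = [get_weather, calculate_tip]
```

Typed signatures and docstrings matter here because agent frameworks commonly derive a tool's description from them. How these functions are registered with the LlamaIndex agent depends on the agent module, which is not shown in this page.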

How to use ZenML with LlamaIndex

    import asyncio

    from zenml import ExternalArtifact, pipeline, step

    from agent import agent  # LlamaIndex function agent with tools


    @step
    def run_llamaindex(query: str) -> str:
        # LlamaIndex agent.run is async; await it inside the step
        async def _run():
            return await agent.run(query)

        resp = asyncio.run(_run())
        return str(getattr(resp, "response", resp))


    @pipeline
    def llamaindex_agent_pipeline() -> str:
        q = ExternalArtifact(
            value="What's the weather in New York and calculate a 15% tip for $50?"
        )
        return run_llamaindex(q.value)


    if __name__ == "__main__":
        print(llamaindex_agent_pipeline())
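The step above relies on asyncio.run, which raises a RuntimeError if an event loop is already running in the current thread (for example, in a notebook). The following is a minimal sketch of one way to handle both cases. `run_async_from_sync` and `StubAgent` are hypothetical names for illustration. The stub only mimics an awaitable run method and is not the LlamaIndex agent.

```python
import asyncio
import threading
from typing import Any, Callable, Coroutine, Dict


def run_async_from_sync(make_coro: Callable[[], Coroutine[Any, Any, Any]]) -> Any:
    """Run a coroutine to completion from synchronous code.

    Uses asyncio.run directly when no loop is running in this thread;
    otherwise runs the coroutine in a helper thread with its own loop.
    """
    try:
        asyncio.get_running_loop()
    except RuntimeError:
        # No loop is running here, so asyncio.run is safe to call.
        return asyncio.run(make_coro())

    result: Dict[str, Any] = {}

    def worker() -> None:
        try:
            result["value"] = asyncio.run(make_coro())
        except BaseException as exc:  # re-raised in the calling thread
            result["error"] = exc

    # Note: join() blocks the caller (and its loop) until the work finishes.
    thread = threading.Thread(target=worker)
    thread.start()
    thread.join()
    if "error" in result:
        raise result["error"]
    return result["value"]


class StubAgent:
    """Stand-in for the real agent; only mimics an awaitable run()."""

    async def run(self, query: str) -> str:
        await asyncio.sleep(0)
        return f"echo: {query}"


stub_agent = StubAgent()


async def ask_stub() -> str:
    # Wrapping the call in a coroutine mirrors the _run helper in the step.
    return await stub_agent.run("hi")
```

Calling `run_async_from_sync(ask_stub)` returns the stub's reply whether or not a loop is already running. The thread fallback trades simplicity for blocking the calling thread, which is usually acceptable inside a pipeline step.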

Additional Resources

ZenML Agent Framework Integrations (GitHub)

ZenML Documentation

LlamaIndex Documentation