Microsoft Semantic Kernel integrated with ZenML

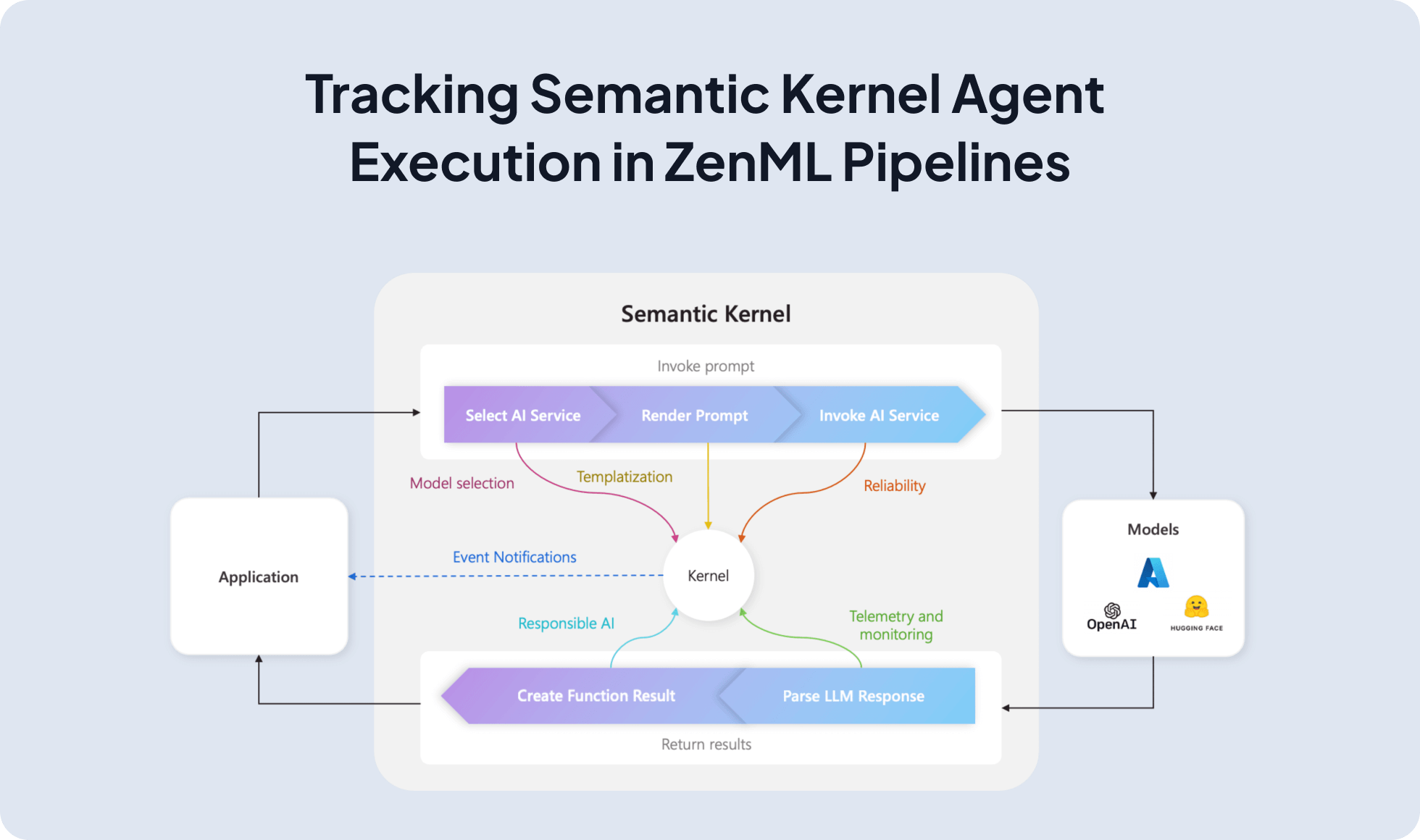

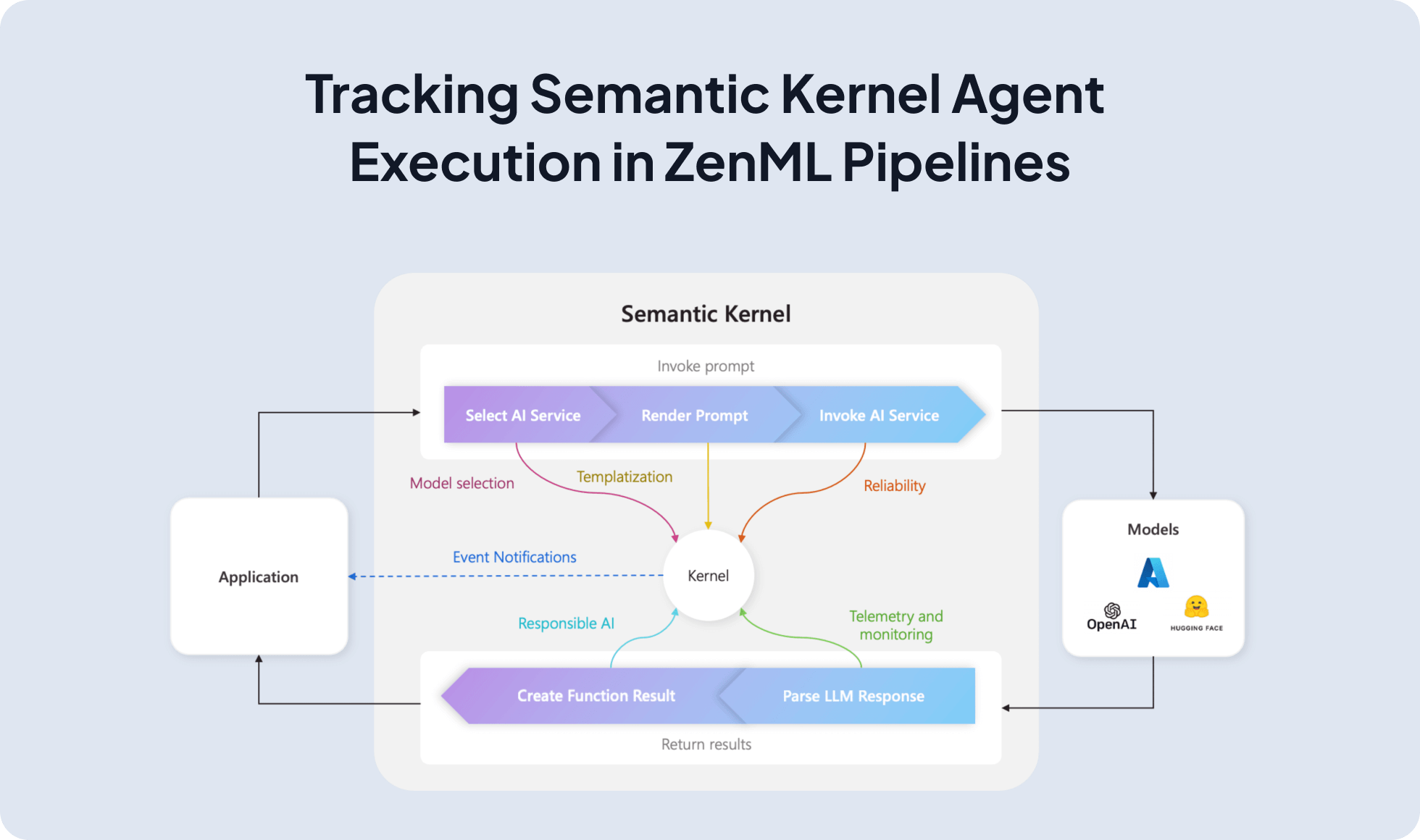

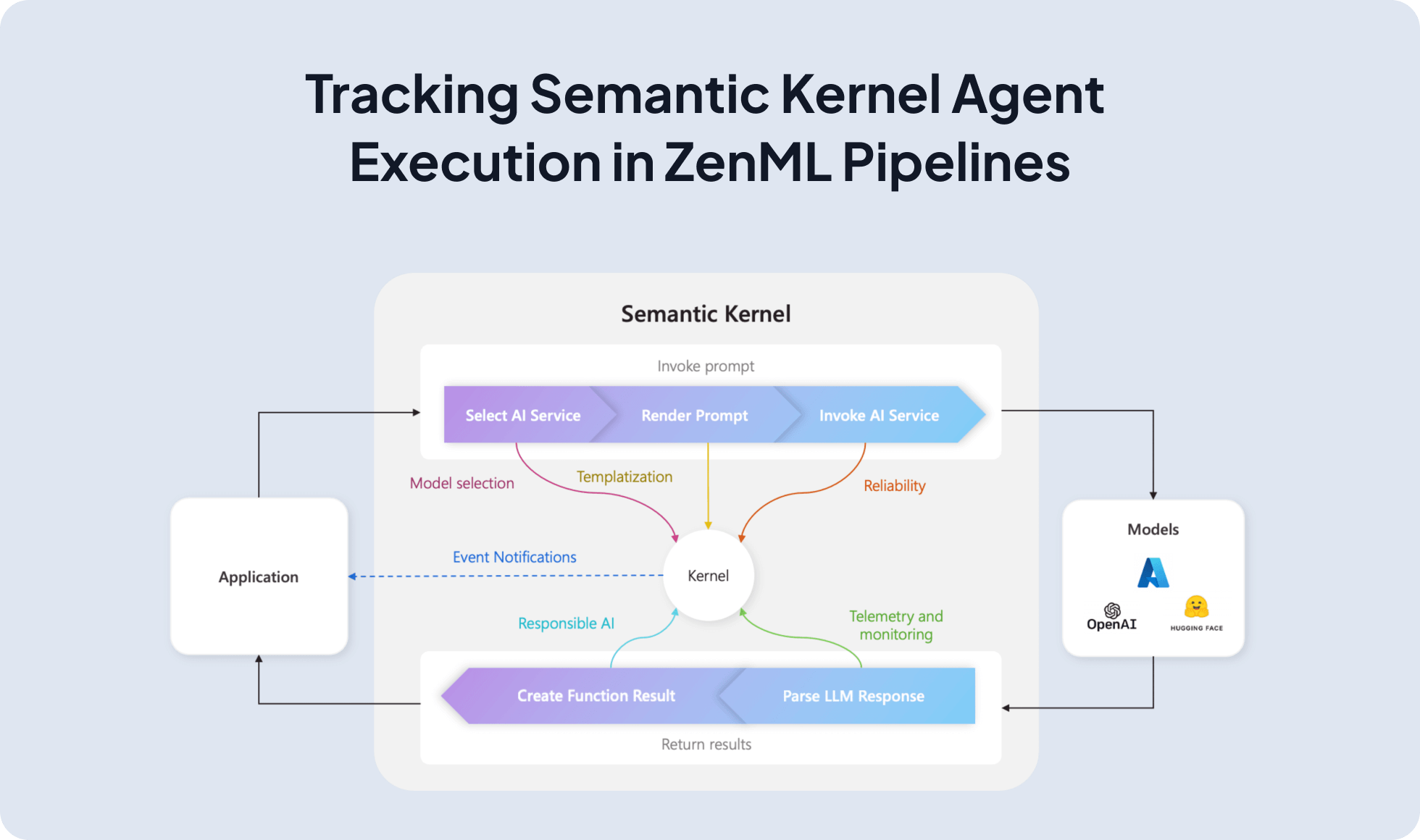

Semantic Kernel lets you build plugin-based agents using @kernel_function, automatic function calling, and async chat with OpenAI. Integrating it with ZenML runs those agents inside reproducible pipelines with artifact lineage, observability, and a smooth path from notebooks to production.

Features with ZenML

- Function-calling orchestration. Execute SK agents with automatic function calling inside versioned ZenML pipelines.

- Message and tool lineage. Track user prompts, tool invocations, and responses as artifacts for audit and replay.

- Async-first execution. Run async chat workflows cleanly inside steps with proper event-loop handling.

- Evaluation ready. Add post-run checks to score response quality, tool accuracy, latency, or cost.

- Portable deployment. Move the same agent pipeline from local to Kubernetes or Airflow without code changes.
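To make the lineage and evaluation points concrete, here is a minimal, library-free sketch of a run record plus a few post-run checks. Everything in it is an assumption made for illustration: `AgentRunRecord`, `timed_run`, `evaluate`, and the stub `fake_agent` are hypothetical names, not part of ZenML or Semantic Kernel. In a real pipeline, functions like these could be wrapped as steps so the record and the report are stored as artifacts.

```python
import json
import time
from dataclasses import asdict, dataclass, field
from typing import Callable, Dict, List


@dataclass
class AgentRunRecord:
    """Hypothetical record of one agent run; field names are illustrative."""

    prompt: str
    response: str
    tool_calls: List[str] = field(default_factory=list)
    latency_s: float = 0.0


def timed_run(agent: Callable[[str], str], prompt: str) -> AgentRunRecord:
    """Call any prompt -> response function and measure wall-clock latency."""
    start = time.perf_counter()
    response = agent(prompt)
    elapsed = time.perf_counter() - start
    return AgentRunRecord(prompt=prompt, response=response, latency_s=elapsed)


def evaluate(
    record: AgentRunRecord,
    expected_tools: List[str],
    max_latency_s: float = 10.0,
) -> Dict[str, object]:
    """Post-run checks: non-empty response, tool accuracy, latency budget."""
    expected = set(expected_tools)
    called = set(record.tool_calls)
    # Fraction of expected tools that were actually called.
    tool_accuracy = len(expected & called) / len(expected) if expected else 1.0
    return {
        "has_response": bool(record.response.strip()),
        "tool_accuracy": tool_accuracy,
        "within_latency_budget": record.latency_s <= max_latency_s,
    }


def fake_agent(prompt: str) -> str:
    """Stand-in for a real agent call so the sketch runs offline."""
    return f"Stub answer to: {prompt}"


record = timed_run(fake_agent, "What is the weather in Tokyo?")
record.tool_calls.append("get_weather")  # pretend the agent used this tool
report = evaluate(record, expected_tools=["get_weather"])
serialized = json.dumps(asdict(record))  # JSON-friendly form for storage
```

Keeping the record as a plain dataclass means it serializes to JSON without custom code, which makes it easy to store, diff, and replay later.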

Main Features

- Plugin-based architecture. Build skills with @kernel_function and compose them as plugins.

- Automatic function calling. Let the model choose and call functions when needed.

- Async chat completion. Stream or await chat responses with OpenAI-backed connectors.
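The idea behind plugins and automatic function calling can be illustrated without the library. The sketch below is not Semantic Kernel's API: `tool`, `REGISTRY`, and `dispatch` are hypothetical names that mimic the general pattern, where decorated functions are registered with a description and a model-issued tool call is resolved to one of them by name.

```python
import inspect
from typing import Any, Callable, Dict

# Hypothetical registry standing in for a kernel's plugin collection.
REGISTRY: Dict[str, Dict[str, Any]] = {}


def tool(description: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Hypothetical stand-in for a decorator such as @kernel_function."""

    def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
        REGISTRY[fn.__name__] = {
            "fn": fn,
            "description": description,
            # Parameter names are what a model would see in the tool schema.
            "parameters": list(inspect.signature(fn).parameters),
        }
        return fn

    return decorator


@tool("Return a canned weather report for a city.")
def get_weather(city: str) -> str:
    return f"Sunny in {city}"


def dispatch(tool_call: Dict[str, Any]) -> Any:
    """Run the function a model asked for, rejecting unknown names."""
    name = tool_call["name"]
    if name not in REGISTRY:
        raise KeyError(f"Unknown tool: {name}")
    return REGISTRY[name]["fn"](**tool_call.get("arguments", {}))


# A tool call shaped roughly like what a chat model might emit.
result = dispatch({"name": "get_weather", "arguments": {"city": "Tokyo"}})
```

In the real library, the kernel handles registration, schema generation, and the call loop for you; the sketch only shows why functions need names, descriptions, and typed parameters for a model to choose among them.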

How to use ZenML with Semantic Kernel

```python
import asyncio

from semantic_kernel.connectors.ai.function_choice_behavior import FunctionChoiceBehavior
from semantic_kernel.connectors.ai.open_ai.prompt_execution_settings.open_ai_prompt_execution_settings import (
    OpenAIChatPromptExecutionSettings,
)
from semantic_kernel.contents.chat_history import ChatHistory
from zenml import ExternalArtifact, pipeline, step

# Assumed to be a local module exposing a configured Kernel that has
# its plugins registered and a chat service with the id "openai-chat".
from semantic_kernel_agent import kernel


@step
def run_semantic_kernel(query: str) -> str:
    async def _run():
        chat = kernel.get_service("openai-chat")
        history = ChatHistory()
        history.add_user_message(query)

        # Let the model decide when to call registered kernel functions.
        settings = OpenAIChatPromptExecutionSettings()
        settings.function_choice_behavior = FunctionChoiceBehavior.Auto()

        resp = await chat.get_chat_message_content(
            chat_history=history, settings=settings, kernel=kernel
        )
        return str(getattr(resp, "content", resp))

    # The step body is synchronous, so run the coroutine to completion here.
    return asyncio.run(_run())


@pipeline
def semantic_kernel_agent_pipeline() -> str:
    q = ExternalArtifact(value="What is the weather in Tokyo?")
    return run_semantic_kernel(q.value)


if __name__ == "__main__":
    print(semantic_kernel_agent_pipeline())
```

Additional Resources

ZenML Agent Framework Integrations (GitHub)

ZenML Documentation

Semantic Kernel Documentation