Your CrewAI demos looked great. Production did not.

How do you make it better? You need strict state control, clean handoffs, real human approvals, memory that survives jobs, and deploy targets that match your stack and budget. Without that, agents drift, runs cannot be reproduced, and audits stall.

We tried and tested 7 real alternatives and ran the same tasks across each one. In this CrewAI alternatives guide, you will see exactly which CrewAI jobs these alternatives replace, where they win, and where they fall short.

We close with a practical path to operate agents at scale by wrapping your chosen framework with ZenML for the outer loop: versioning, lineage, evaluations, and dependable deployment.

TL;DR

- Why look for alternatives: Managed plans can sting, some teams need fine-grained control, and extra prompt layers can add latency and confusion for the model.

- Who should care: Machine learning engineers, MLOps teams, and AI developers shipping agentic apps where auditability, human review, and stable deployment matter.

- What to expect: A side-by-side view of seven options, mapped to features you’d normally use CrewAI for – multi-agent orchestration, workflow authoring, memory, HITL, observability/evals, RAG depth, deployment, and logging.

Why You Might Need a CrewAI Alternative

Why might you need a CrewAI alternative in the first place? While CrewAI is a solid foundation for multi-agent systems, there are a few reasons teams may hit its limits and seek other solutions.

Reason 1. Managed plans are extremely expensive

If you’re scaling concurrent agents with heavy tool use, per-step or per-node pricing stacks quickly. Open-source cores look free until you add tracing, evals, storage, and background jobs. Teams that can self-host usually do so to keep variable costs under control and to integrate with existing observability.

Reason 2. You need fine-grained control or specialized tooling

Agentic apps are no longer single-model chats. You need threads, state snapshots, resumable runs, event logs, and first-class interrupts.

You might also want MCP integration for tool servers, or a ‘hard’ workflow language, so not every routing decision is left to the LLM.

Alternatives like LangGraph give you a stateful graph runtime with checkpoints and time travel; others like Google’s ADK bring workflow agents for sequential, loop, and parallel patterns.

Reason 3. Quality degradation caused by extra prompt layers

Stacking wrappers and meta-prompts can bloat context windows, slow plans, and sometimes muddy instructions.

In our own dry runs, long wrappers around simple tools led to off-target tool selection.

Where possible, prefer frameworks that let you author deterministic control flow in code and keep prompts focused on the parts that actually need model judgment.
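A minimal sketch of that advice, in plain Python rather than any particular framework. `fake_llm` and `lookup_order` are hypothetical stand-ins (a real app would call a model and a real tool); the point is only that structured requests are routed by code and the prompt is reserved for open-ended input.

```python
from typing import Callable


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a model call.
    return f"[model answer to: {prompt}]"


def lookup_order(order_id: str) -> str:
    # Hypothetical tool: deterministic, no model involved.
    return f"Order {order_id}: shipped"


def handle_request(text: str, llm: Callable[[str], str] = fake_llm) -> str:
    """Route with plain code first; use the model only for open-ended input."""
    words = text.lower().split()
    if len(words) == 2 and words[0] == "order":
        # Structured request: no prompt, no routing decision left to the model.
        return lookup_order(words[1])
    # Only this branch needs model judgment, so only this branch gets a prompt.
    return llm(f"Answer the customer briefly: {text}")


status = handle_request("order 1234")
fallback = handle_request("Where is my refund?")
```

Because the order lookup never touches the model, it cannot pick the wrong tool, and the one remaining prompt stays short.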

Evaluation Criteria

To provide a fair and comprehensive comparison, we evaluated all CrewAI alternatives against a consistent set of criteria that reflect the needs of developers building production-ready agentic systems.

1. Core Capabilities

Examine what built-in features each framework provides for agent-based workflows. This includes:

- Agent orchestration model: Graph-based, conversational, sequential, and more

- Support for memory: Short-term context and long-term knowledge

- Tool-calling abilities: Integrating external functions or APIs

- Human-in-the-loop (HITL) mechanisms

Essentially, how well can the tool replicate or extend CrewAI’s core features like multi-agent coordination, memory management, tool integration, and moderated oversight?

2. Performance and Cost

Consider the runtime performance and scaling of each alternative, as well as the cost model.

Ask questions like:

- Does the framework support high-throughput or distributed execution for complex workflows?

- Is it completely open-source (no usage fees) or tied to a paid service?

- If it’s a cloud service, are the costs usage-based or subscription-based?

The goal is to understand both the efficiency and the budget impact of adopting the tool.

3. Production Readiness

Assess how ready each framework is for real-world production deployment. This covers stability and maturity (Is it a well-tested project or a beta release?), the quality of documentation and community support, and features for observability, logging, and debugging in production.

Also, look at deployment options – can you self-host it on your infrastructure? Does it provide integrations with cloud platforms or container orchestration for scaling?

Production readiness means having not just fancy demos, but the reliability and support needed for long-term maintenance.

What are the Best Alternatives to CrewAI?

We tested seven promising alternatives to CrewAI, each offering a different approach to building AI workflows. The table below gives a quick overview of these tools and their key strengths:

1. AutoGen

AutoGen is an open-source framework from Microsoft Research for creating AI agents that collaborate through conversations. Instead of following a fixed sequence of tasks (like CrewAI’s crew workflow), AutoGen lets multiple agents interact in a free-form chat loop, even allowing a human to join the conversation when needed.

Features

- AutoGen replaces CrewAI's sequential process with a flexible, many-to-many communication model. Agents interact via asynchronous messaging, typically within a `GroupChat` managed by an orchestrator agent that decides which agent speaks next.

- Agents in AutoGen communicate asynchronously by exchanging messages. They can collectively solve problems by talking to each other, which allows for dynamic, emergent problem-solving rather than a rigid script.

- Lets you include a human agent in the chat. Certain steps can pause for human input or approval, providing oversight or guidance at critical junctures.

- AutoGen is highly modular; you can plug in custom tools (Python functions, APIs), define new agent roles, and integrate memory modules. It also logs every message and action, which makes debugging multi-agent interactions much easier.
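The conversation pattern described above can be sketched without any framework. This is not AutoGen's API: `ChatAgent`, `GroupChatSketch`, the round-robin speaker selection, and the `APPROVED` stop word are all assumptions made for illustration, and the human reviewer is scripted so the example runs unattended.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Message:
    sender: str
    content: str


@dataclass
class ChatAgent:
    name: str
    reply: Callable[[List[Message]], str]  # reads the transcript, returns a reply


@dataclass
class GroupChatSketch:
    agents: List[ChatAgent]
    transcript: List[Message] = field(default_factory=list)

    def run(self, task: str, max_turns: int = 6) -> List[Message]:
        self.transcript.append(Message("user", task))
        for turn in range(max_turns):
            # Round-robin speaker selection; an orchestrator could choose instead.
            speaker = self.agents[turn % len(self.agents)]
            text = speaker.reply(self.transcript)
            self.transcript.append(Message(speaker.name, text))  # every message is logged
            if "APPROVED" in text:  # hypothetical termination condition
                break
        return self.transcript


# The writer numbers its drafts by counting its own earlier messages.
writer = ChatAgent(
    "writer",
    lambda t: f"Draft v{sum(m.sender == 'writer' for m in t) + 1}",
)
# A real human participant would type a reply here; this one is scripted.
reviewer = ChatAgent(
    "human_reviewer",
    lambda t: "APPROVED" if t[-1].content == "Draft v2" else "Please revise",
)

log = GroupChatSketch([writer, reviewer]).run("Write a release note")
```

The transcript doubles as the audit trail: every turn, including the human's, is a `Message` you can inspect after the run.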

Pros and Cons

AutoGen’s biggest strength is its flexibility. It imposes very little structure on how agents interact – essentially, it’s just messaging, so you can compose any conversation pattern. Another plus is Microsoft’s engineering heft behind it: AutoGen has evolved with robust features – the v0.4 release introduced an event-driven core with scalability and better observability in mind.

However, the freedom AutoGen provides comes at the cost of guidance. With no predefined workflow structure, developers must design the conversation logic and ensure coherence themselves. In CrewAI, the rigid sequence can be limiting, but it does give you a clear blueprint; in AutoGen, an agent chat that goes off track might be harder to rein in.

2. LangGraph

LangGraph is a stateful orchestration framework. Every step writes a checkpoint you can rewind to or branch from, which means you can pause for human input and later resume without losing context. Studio gives you a debugging view and integrates tightly with LangSmith for traces and evals.

Features

- LangGraph replaces CrewAI's linear process with a `StateGraph`, where nodes represent agents or tools and edges define conditional transitions between them. This architecture allows for the creation of complex workflows with cycles, branches, and parallel execution paths, providing a level of control that is difficult to achieve in CrewAI.

- Provides a global state or memory that all agents/nodes in the graph can access. Agents can write to and read from this shared context, allowing information from one part of the process to inform others.

- Because it builds on LangChain, LangGraph has access to a large ecosystem of tools and integrations (search engines, databases, APIs, etc.).

- You aren’t limited to a linear sequence. Nodes can have conditions to branch into different subgraphs, or even form loops for iterative reasoning.
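To make the checkpoint-and-rewind idea concrete, here is a framework-free sketch. It is not LangGraph's API: `CheckpointedGraph`, its `add_node` signature, and the `resume` helper are hypothetical names that only illustrate a graph with a loop, shared state, and replay from a saved snapshot.

```python
import copy
from typing import Any, Callable, Dict, List, Optional, Tuple

State = Dict[str, Any]


class CheckpointedGraph:
    """Nodes transform shared state; routers pick the next node (or None to stop)."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Callable[[State], State]] = {}
        self.routers: Dict[str, Callable[[State], Optional[str]]] = {}
        self.checkpoints: List[Tuple[str, State]] = []

    def add_node(self, name: str, fn: Callable[[State], State],
                 router: Callable[[State], Optional[str]]) -> None:
        self.nodes[name] = fn
        self.routers[name] = router

    def run(self, start: str, state: State) -> State:
        current: Optional[str] = start
        while current is not None:
            # Snapshot the state before each node so any step can be replayed.
            self.checkpoints.append((current, copy.deepcopy(state)))
            state = self.nodes[current](state)
            current = self.routers[current](state)
        return state

    def resume(self, index: int, patch: Optional[State] = None) -> State:
        """Rewind to a checkpoint, optionally edit the state, and continue."""
        node, snapshot = self.checkpoints[index]
        return self.run(node, {**copy.deepcopy(snapshot), **(patch or {})})


def draft(state: State) -> State:
    attempts = state["attempts"] + 1
    return {**state, "attempts": attempts, "draft": f"draft {attempts}"}


def review(state: State) -> State:
    return {**state, "approved": state["attempts"] >= 2}


graph = CheckpointedGraph()
graph.add_node("draft", draft, lambda s: "review")
# Conditional edge: loop back to "draft" until the review passes.
graph.add_node("review", review, lambda s: None if s["approved"] else "draft")

final = graph.run("draft", {"attempts": 0})
# Branch from the first review checkpoint with an edited state, as a human might.
replayed = graph.resume(1, {"attempts": 5})
```

The original run loops through `draft` twice before approval, while the replay from checkpoint 1 approves the first draft because the patched state already satisfies the reviewer.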

Pros and Cons

The major pro of LangGraph is workflow transparency and control. By defining a clear graph of nodes and edges, you end up with a single visual map of your entire agent pipeline, which makes understanding and debugging much easier.

The downside is that LangGraph’s richness comes with complexity. There is some boilerplate in defining graph structures and nodes – you have to ‘buy into’ its way of modeling problems. Simple tasks that might be done with a quick script could require setting up nodes, edges, state handlers, etc., which can feel like overhead.

3. Agent Development Kit (Google)

Google’s Agent Development Kit (ADK) is a flexible, modular framework for developing and deploying AI agents. Announced as part of the Vertex AI ecosystem, it’s Google’s answer to making agent-building more like standard software development.

Features

- ADK is designed for creating modular and scalable applications by composing multiple specialized agents in a hierarchy. It supports both deterministic `Workflow Agents` (like `SequentialAgent` and `ParallelAgent`) for predictable pipelines and dynamic, LLM-driven routing for more adaptive behavior.

- Includes content moderation and guardrail features to keep agent outputs in check. It also makes it easy to register skills (tools/functions) that agents can use; wrapping a Python function or a Vertex API call as a skill is straightforward.

- ADK treats sessions and memory as first-class concepts. It has abstractions for maintaining conversation state, storing long-term memory, etc. You can configure a Session for an agent (with short-term memory) and attach external memory modules (like a vector store for long-term facts).

- Being on Vertex AI, ADK works seamlessly with Google’s models (like Gemini) and services. It offers enterprise necessities like IAM-based access control, audit logs, and multiple deployment options.
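The sequential and parallel workflow patterns mentioned in the feature list can be sketched in plain Python. This is not ADK code: `run_sequential`, `run_parallel`, and the three step functions are hypothetical, and each "agent" is reduced to a function that reads the shared state and returns new keys.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

State = Dict[str, str]
Step = Callable[[State], State]


def run_sequential(steps: List[Step], state: State) -> State:
    """Run steps one after another; each step sees the previous step's output."""
    for step in steps:
        state = {**state, **step(state)}
    return state


def run_parallel(steps: List[Step], state: State) -> State:
    """Run independent steps concurrently on the same input, then merge results."""
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(lambda step: step(state), steps))
    merged = dict(state)
    for result in results:
        merged.update(result)
    return merged


# Hypothetical steps standing in for specialized agents.
def fetch_news(state: State) -> State:
    return {"news": f"news about {state['topic']}"}


def fetch_prices(state: State) -> State:
    return {"prices": f"prices for {state['topic']}"}


def summarize(state: State) -> State:
    return {"summary": f"{state['news']} | {state['prices']}"}


result = run_sequential(
    [lambda s: run_parallel([fetch_news, fetch_prices], s), summarize],
    {"topic": "GPUs"},
)
```

Nesting a parallel block inside a sequential one mirrors the hierarchy idea: the two fetch steps fan out, and the summarizer runs only after both have written to the shared state.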

Pros and Cons

The ADK’s primary advantage is that it brings software engineering discipline to agent development. It was ‘designed to make agent development feel more like software development,’ meaning you get clearer abstractions, versioning, and testing practices. For organizations already using Google Cloud, ADK is a natural fit – it’s optimized for Google’s models (Gemini, etc.) and easily plugs into GCP for data and deployment.

In terms of downsides, ADK is relatively new (version 0.1.x) and evolving. As such, some features might be in preview or not be as polished. There may be a learning curve to grasp all its concepts (workflow agents, sessions, state, etc.), especially if you’re not familiar with Google’s terminology.

4. OpenAI Agents SDK

The OpenAI Agents SDK is a lightweight toolkit to build agents powered by OpenAI’s models (like GPT-4) that can use tools and perform multi-step reasoning. It’s essentially OpenAI’s in-house answer to frameworks like LangChain or CrewAI, streamlined for their API.

Features

- Define agents and their available tools with only a few lines of code. The SDK uses OpenAI’s function-calling under the hood, so agents can invoke your Python functions or API calls as needed without heavy boilerplate.

- Agents created with this SDK can use tools (via function calls) and even delegate tasks to other agents in a simple manager-worker style.

- It offers a lean, code-first experience with a few core primitives like `Agent` and `Runner`. Workflows are orchestrated using standard Python control flow (loops, conditionals), which avoids heavy abstractions and gives developers direct control over the agent's logic.

- State is managed through a `Context` system and `Sessions`, but the SDK is less opinionated about persistence compared to other frameworks. It is up to you to implement how the state is saved and loaded across runs, providing flexibility but requiring more manual setup.
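If you do end up handling persistence yourself, the simplest version is a small store keyed by session ID. The sketch below is an assumption-laden illustration, not part of the OpenAI Agents SDK: `JsonSessionStore` is a hypothetical helper that keeps one JSON file of chat messages per session in a temporary directory.

```python
import json
import tempfile
from pathlib import Path
from typing import Dict, List


class JsonSessionStore:
    """Hypothetical helper: one JSON file of chat messages per session ID."""

    def __init__(self, root: Path) -> None:
        self.root = root
        self.root.mkdir(parents=True, exist_ok=True)

    def _path(self, session_id: str) -> Path:
        return self.root / f"{session_id}.json"

    def load(self, session_id: str) -> List[Dict[str, str]]:
        path = self._path(session_id)
        # An unknown session starts with an empty history.
        return json.loads(path.read_text()) if path.exists() else []

    def append(self, session_id: str, role: str, content: str) -> None:
        history = self.load(session_id)
        history.append({"role": role, "content": content})
        self._path(session_id).write_text(json.dumps(history))


store = JsonSessionStore(Path(tempfile.mkdtemp()))
store.append("run-42", "user", "Summarize the Q3 report")
store.append("run-42", "assistant", "Here is the summary.")

# A later run can build a fresh store on the same directory and pick up the history.
restored = JsonSessionStore(store.root).load("run-42")
```

A production setup would more likely use a database and handle concurrent writers, but the contract is the same: load the history before a run, append after it.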

Pros and Cons

The OpenAI Agents SDK’s major advantage is simplicity and tight integration with OpenAI models. If you are already using GPT-5 or earlier OpenAI models, this SDK feels very natural – it’s basically an extension of the API’s function-calling mechanism. You can get an agent up and running with just a few lines of code.

On the downside, the Agents SDK is somewhat opinionated towards the OpenAI way of doing things. It assumes a chat-centric agent that decides on function calls (tools) as needed. If your needs fall outside that paradigm, say, complex parallel workflows or long-running agents that need checkpoints, you might hit limitations.

5. Langflow

Langflow is an open-source visual builder for LLM workflows; a no-code interface on top of LangChain. It lets you design chains of prompts, tools, and logic by drawing diagrams instead of writing code, which can dramatically speed up prototyping and collaboration.

Features

- Provides a canvas UI where you can drag-and-drop nodes representing components (LLMs, prompts, tools, memory stores, etc.) and connect them to define the flow of information. This visual approach makes it intuitive to map out an agent’s reasoning.

- Comes with a library of pre-built nodes for common tasks (LLMs, web search, database queries, conditional logic, etc.). You can configure each node’s parameters through the UI, saving time since you don’t need to implement these from scratch.

- Lets you run a workflow step by step, tweaking prompts or parameters on the fly to refine behavior. Once you're satisfied, export the workflow to a Python script for integration or version control.

- Memory is handled through dedicated components, like `Message History` or vector store integrations, that you can add to your flow just like any other component.

Pros and Cons

Langflow’s obvious advantage is accessibility. It lowers the barrier to entry – even those with limited programming skills can set up a complex agent workflow. This makes it perfect for rapid prototyping and for communication between technical and non-technical team members. The visual nature means easy iteration; you can modify prompts, switch models, add a new tool, and immediately test the effect.

On the downside, visual tools can sometimes be limiting for very complex logic. Large flows might become messy on a canvas, and certain conditional logic or looping isn’t as easy to represent. In CrewAI or code, you could write an if-statement or a loop; in Langflow, you will need workarounds as the UI primarily represents directed acyclic graphs (DAGs) of tasks.

6. FlowiseAI

Flowise is another open-source visual workflow builder for LLMs, similar to Langflow. It lets you create, test, and deploy AI agent chains through a browser UI. Flowise’s focus is on the quick deployment of these workflows.

Features

- Offers a node-and-connection editor in which you assemble your agent’s logic. You’ll find nodes for LLM interactions, tools, data sources, and control flow, making it simple to design an agent’s behavior without coding.

- After designing a workflow, you can deploy it as a live REST API endpoint with a single click. This means an agent pipeline you built in the morning can be running as a service by the afternoon, without writing any server code.

- Comes with connectors for popular models and services. It supports major LLM APIs, integrates with common data sources (files, databases), and includes basic memory to maintain context within a conversation flow.

- FlowiseAI provides a dedicated `Agentflow` builder designed for creating multi-agent systems and orchestrating complex workflows. It supports common patterns like supervisor-worker hierarchies, where a central agent delegates tasks to specialized agents.

Pros and Cons

FlowiseAI’s strengths lie in its production-oriented design. It is arguably more geared towards enterprise use than some other visual builders. Features like built-in monitoring hooks, horizontal scaling, and an API for integration suggest it is built to serve real users at scale, not just to support experiments.

While FlowiseAI supports multi-agent setups and API deployment, its memory management is limited to short-term conversational context out of the box. Persistent, long-term memory or advanced knowledge integration often requires custom modules or external services, adding development overhead.

7. LlamaIndex

LlamaIndex (formerly GPT Index) is an open-source framework focused on connecting LLMs with external data sources for retrieval-augmented generation (RAG). It’s not a full agent orchestrator like CrewAI, but it can augment or replace parts of CrewAI’s functionality when your agents need to work with a lot of knowledge or documents.

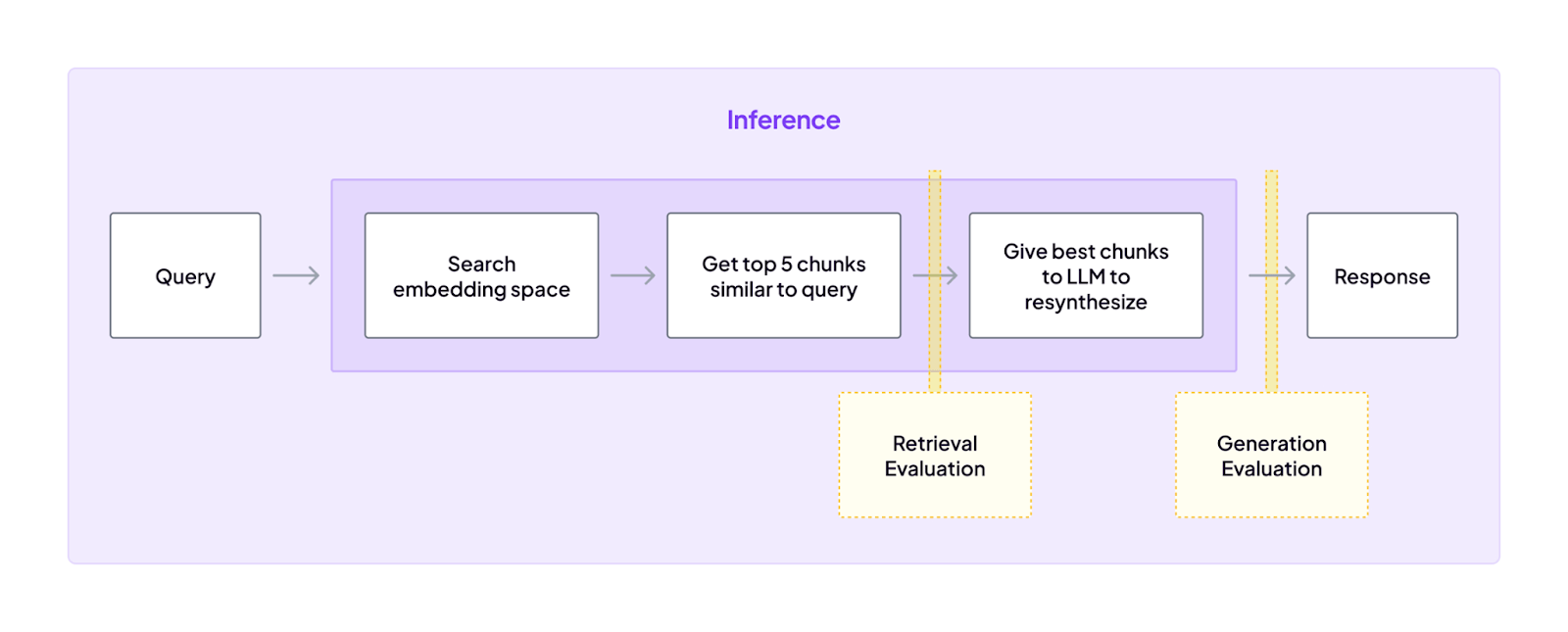

Features

- Offers several patterns for multi-agent systems. The most direct replacement for CrewAI's functionality is `AgentWorkflow`, a high-level abstraction that manages hand-offs between a set of agents in a linear ‘swarm’ pattern.

- Makes it easy to ingest documents, PDFs, websites, or databases and turn them into queryable indexes. It uses embeddings and other techniques so an LLM can efficiently retrieve relevant information when answering questions.

- Recent versions introduced an agent interface in LlamaIndex, allowing an LLM to perform multi-step actions involving data. An agent using LlamaIndex could first retrieve relevant documents, then call a calculator or API, then compose a final answer.

- Use LlamaIndex alongside other frameworks. Many teams plug LlamaIndex into LangChain or even into CrewAI flows to handle the knowledge retrieval aspect. It’s designed to integrate well, fetching data when needed and handing it back to whatever agent logic orchestrates the overall workflow.

Pros and Cons

The main strength of LlamaIndex is depth in data-centric tasks. If your application is about question answering, report generation, or chatbots over proprietary data, LlamaIndex is tailor-made for that. It excels at building knowledge-aware agents – for instance, a research assistant that can cite sources from your document repository.

However, LlamaIndex is narrower in scope than the other alternatives here. It was not originally designed for orchestrating multiple independent agents or complex tool use; it was designed to augment an LLM with knowledge. As a result, some things are rudimentary. For example, tool use in LlamaIndex is largely about picking the right index or doing a search – it’s not as general-purpose as, say, AutoGen or the OpenAI Agents SDK, where an agent can plan an arbitrary sequence of diverse API calls.

How ZenML Helps in Closing the Outer Loop Around CrewAI and Its Alternatives

All the alternatives above (and CrewAI itself) focus on the ‘inner loop’ of agent development – designing how agents think, interact, and solve tasks. However, building a clever agent is only half the battle.

The other half is the ‘outer loop’: everything needed to put that agent into production use and keep it running reliably.

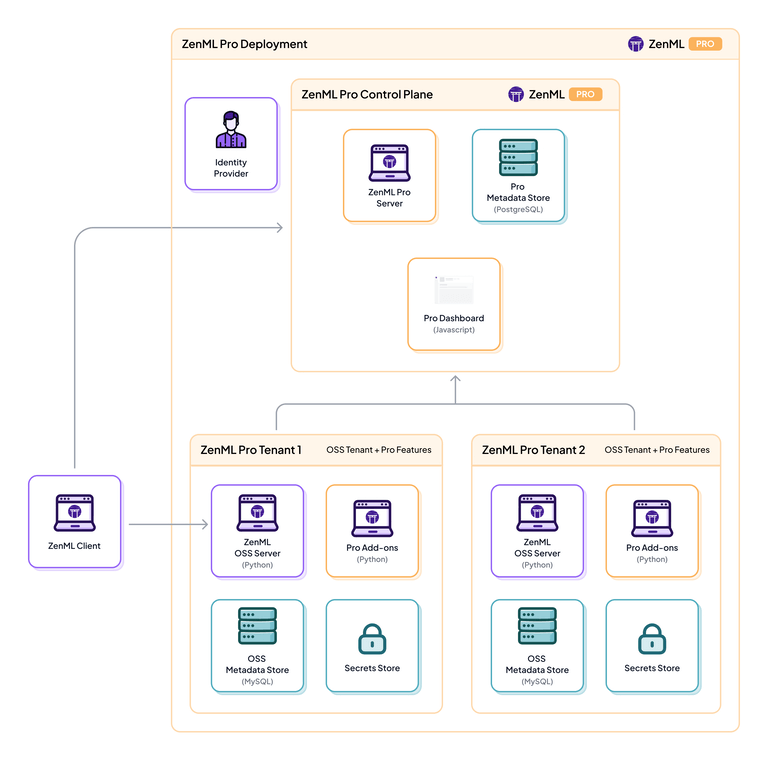

This is where ZenML – an open-source MLOps + LLMOps framework – comes in. ZenML is not an agent framework by itself; rather, it acts as a complement to whichever agent or workflow framework you choose (CrewAI or any alternative).

It provides the plumbing to manage your agents’ lifecycle, from deployment to monitoring to evaluation. In practical terms, ZenML fills the gaps around agent frameworks.

Here are a few ways our platform helps close the outer loop:

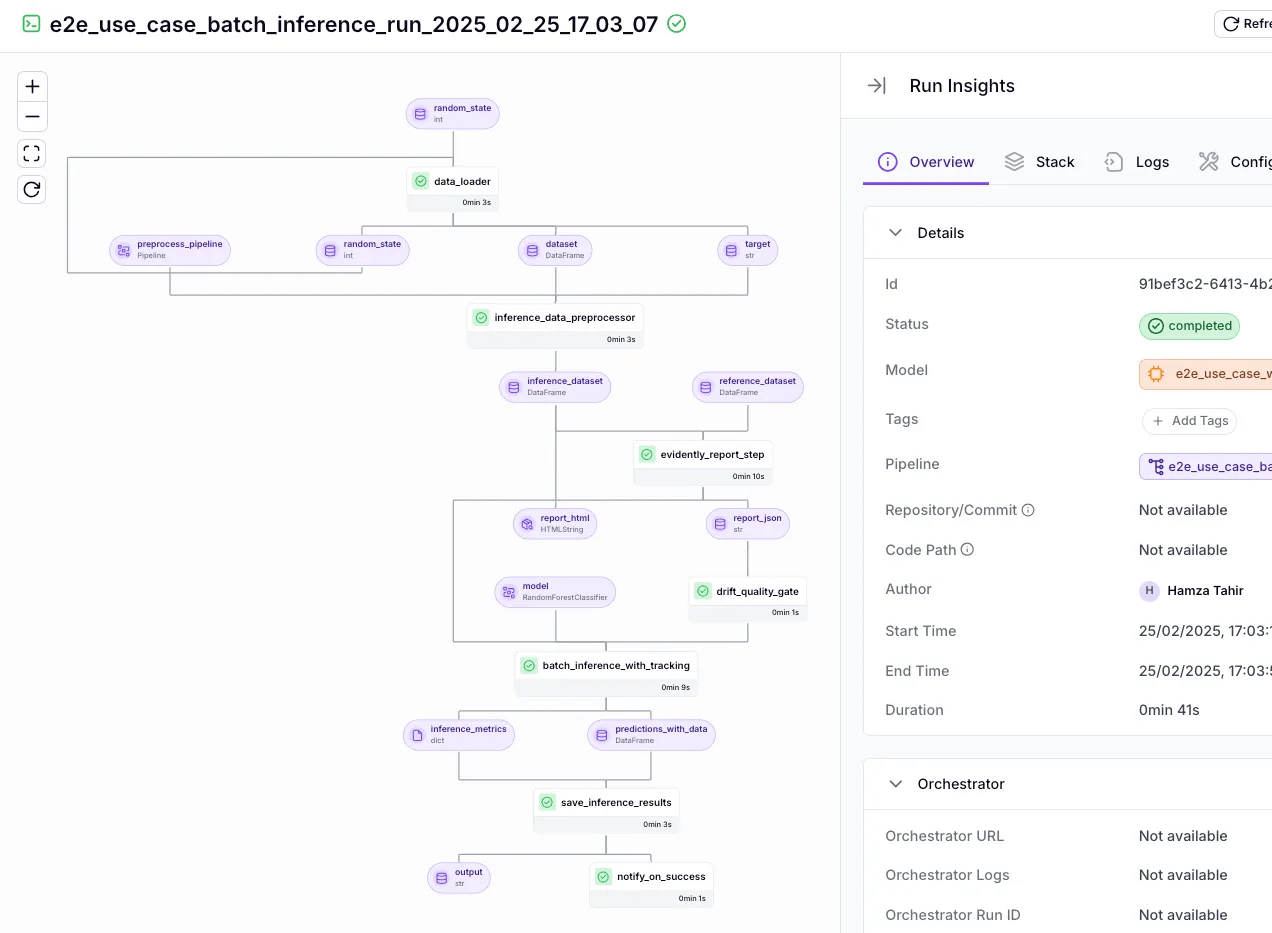

1. End-to-End Orchestration

ZenML allows you to embed your agent as one step in a larger pipeline.

For example, a ZenML pipeline might preprocess incoming data, then call your AI agent, then post-process or store the results. These pipelines are portable across environments – you can run them on a local machine for testing, then schedule them on Kubernetes or a cloud runner for production.

Steps and Pipelines ensure that moving from a prototype to a deployed workflow is seamless, addressing the engineering tasks around your agent.

2. Unified Visibility and Lineage

Once your agent is in a ZenML pipeline, everything it does can be automatically logged and tracked.

ZenML maintains a metadata store where inputs, outputs, and intermediate artifacts of each pipeline run are recorded. You get a central dashboard to inspect runs, compare them, and trace results back to their source.

For an agent, this means every decision, every tool used, each prompt and response can be traced as part of the pipeline run record. If something goes wrong (say the agent gave a faulty answer), you can pinpoint exactly which step and even which model version or prompt caused it.

This kind of lineage is crucial for debugging and for compliance (e.g., auditing why an AI made a certain decision).
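To illustrate what a lineage record captures, here is a framework-free sketch. It does not use ZenML's API: `fingerprint`, `StepRecord`, and `run_with_lineage` are hypothetical names, and the "agent" is a toy function. The idea is simply that each step's input and output get a content hash, so a result can be traced back through the chain.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Tuple


def fingerprint(value: Any) -> str:
    """Short, stable content hash of any JSON-serializable value."""
    payload = json.dumps(value, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]


@dataclass
class StepRecord:
    step: str
    input_hash: str
    output_hash: str


def run_with_lineage(
    steps: List[Tuple[str, Callable[[Dict[str, str]], Dict[str, str]]]],
    data: Dict[str, str],
) -> Tuple[Dict[str, str], List[StepRecord]]:
    records: List[StepRecord] = []
    for name, fn in steps:
        output = fn(data)
        # Record what went in and what came out of every step.
        records.append(StepRecord(name, fingerprint(data), fingerprint(output)))
        data = output
    return data, records


# Hypothetical pipeline: a cleaning step followed by a toy "agent".
steps = [
    ("clean", lambda d: {"text": d["text"].strip()}),
    ("agent", lambda d: {**d, "answer": d["text"].upper()}),
]
output, records = run_with_lineage(steps, {"text": "  hello  "})
```

Each record's `output_hash` matches the next record's `input_hash`, which is what lets you walk backwards from a faulty answer to the step that produced it.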

3. Continuous Evaluation and Feedback

ZenML integrates evaluation steps as first-class citizens in the pipeline. After the agent produces an output, you can have ZenML automatically run quality checks or feedback routines.

For instance, you might include an LLM-based judge to grade the agent’s answer, or a set of unit tests if the agent generates code. If an output fails criteria, ZenML can trigger alerts or even kick off a model retraining workflow.

This closes the feedback loop by not just deploying an agent and forgetting it, but constantly monitoring its performance and improving it.

What’s more, you can also incorporate human feedback at scale – e.g., pipe low-confidence cases to a human review step and log their corrections for retraining later.
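The gate described in this section can be sketched as a plain function. Nothing here is ZenML-specific: `keyword_judge` is a toy stand-in for an LLM-based judge, and the `0.7` threshold and `Evaluated` record are assumptions chosen for the example.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Evaluated:
    question: str
    answer: str
    score: float
    needs_human_review: bool


def keyword_judge(question: str, answer: str) -> float:
    """Toy judge: fraction of question words that reappear in the answer."""
    q_words = set(question.lower().split())
    a_words = set(answer.lower().split())
    return len(q_words & a_words) / len(q_words) if q_words else 0.0


def evaluate_batch(
    pairs: List[Tuple[str, str]],
    judge: Callable[[str, str], float] = keyword_judge,
    threshold: float = 0.7,  # assumed cut-off; tune it on real data
) -> List[Evaluated]:
    results = []
    for question, answer in pairs:
        score = judge(question, answer)
        # Low-scoring answers are flagged for a human instead of shipped.
        results.append(Evaluated(question, answer, score, score < threshold))
    return results


results = evaluate_batch([
    ("reset password steps", "To reset password follow these steps"),
    ("refund policy", "I am not sure"),
])
review_queue = [r.question for r in results if r.needs_human_review]
```

In a pipeline, the flagged items would go to a review step and the reviewer's corrections would be logged alongside the scores for later retraining.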

4. Production Deployment and Scaling

ZenML abstracts away the infrastructure details when deploying your agents. You define your pipeline with the agent and supporting steps, and then you choose an execution ‘stack’ for it – be it a simple Docker environment, a managed cloud service, or a Kubernetes cluster.

ZenML handles packaging your code, shipping it to the environment, managing secrets (like API keys), and running it on a schedule or in response to events.

Need to scale out to handle more load? ZenML can run parallel instances of your pipeline or distribute the work.

In short, while frameworks like CrewAI, LangGraph, AutoGen, etc., help you define what your AI agents do (the inner loop), ZenML focuses on how those agents are put into real-world operation (the outer loop). It provides the missing pieces for reliability, reproducibility, and maintainability.


The Best CrewAI Alternatives to Build Automated AI Workflows

There’s no single best CrewAI alternative; the right pick depends on your use case, team skills, and production needs. Based on our testing, here’s how they stack up:

- For maximum control and production reliability → LangGraph: Ideal for building auditable, debuggable workflows with explicit state management, checkpoints, and replay.

- For open-ended problem-solving and research → AutoGen: Best for dynamic, multi-agent collaboration where the solution path isn’t fixed and agents can negotiate tasks in free-form conversation.

- For rapid, visual prototyping and non-technical collaboration → Langflow: A UI-first builder that speeds up experimentation and communication between technical and business teams.

- For fast prototyping and production deployment → FlowiseAI: Combines a visual editor with REST API deployment, scaling, and monitoring, making it a strong bridge from prototype to production.

- For RAG-heavy applications → LlamaIndex: Best-in-class for retrieval, indexing, and enabling agents to work over large, complex, or private knowledge bases.

If you’re interested in taking your AI agent projects to the next level, consider joining the ZenML waitlist. We’re building out first-class support for agentic frameworks (like CrewAI and AutoGen) in ZenML, and we’d love early feedback from users pushing the boundaries of what AI agents can do. With ZenML, you can integrate whichever agent framework you choose into robust, production-grade workflows.