When it comes to building complex agentic AI workflows with little to no code, Langflow and n8n are two widely used platforms.

Langflow caters to developers who want a visual playground for building LLM applications and agents, while n8n appeals to those seeking low-code automation with deep integration across business tools.

In this Langflow vs n8n guide, we compare their features, integration, and pricing to determine which one is best suited for specific use cases.

Langflow vs n8n: Key Takeaways

- Langflow: An open-source, Python-based framework for visually building AI applications and agents. It provides a drag-and-drop canvas to connect LLMs, tools, prompts, and data sources into flows. Langflow’s no-code interface (built atop LangChain concepts) lets developers prototype chatbots, RAG workflows, and multi-agent systems without writing glue code, while still allowing Python customization if needed.

- n8n: A general-purpose, low-code workflow automation platform built in Node.js. It combines a visual, node-based editor with 1,180+ pre-built integrations and the option to inject custom code. n8n is a powerful tool for creating end-to-end business process automations that can now include native AI and agent-building capabilities.

Langflow vs n8n: Maturity and Lineage

Comparing the features of Langflow and n8n is important. But equally important is to compare their history and community adoption. These offer important context about both frameworks' stability, development velocity, and long-term viability.

n8n, founded in 2019, is a more mature platform. It built its reputation in the general automation space with tools like Zapier and Make, before the recent generative AI explosion.

On the contrary, Langflow comes from an established lineage rooted in the LangChain ecosystem. Langflow is younger but laser-focused on AI workflows, riding the wave of LLM enthusiasm.

n8n’s whopping 145k GitHub stars reflect its broader appeal. It crossed 100k stars by mid-2025 and continues to grow. Langflow, despite being three years younger, has also amassed a large community seeking no-code LLM tools.

Langflow’s 16.7k commits showcase it as a very active project among developers. n8n has seen steady growth since its launch and has six times more forks than Langflow, with significantly wider industry adoption.

Langflow vs n8n: Features Comparison

Feature 1. Visual Flow Builder

The visual canvas is the core of both platforms, but their design philosophies cater to different modes of thinking and problem-solving.

Langflow

In Langflow, you create a flow by dragging components onto a canvas and connecting them together in a directed graph. A component in Langflow is a building block of an LLM application. For example, there’s a component for:

- Chat Input/Output: UI interface for a user prompt or agent response

- LLM model: OpenAI, local model, etc.

- Prompt Template or Tool: An action the agent can take, like a web search

- RAG: Memory store, VectorStore retriever, etc.

Basically, you can visually connect prompts, LLMs, data sources, and agents. This flow-building style is intuitive for anyone familiar with LangChain concepts. You can start from scratch or use one of many pre-built templates as a starting point.

A key feature is the interactive Playground, a real-time testing and debugging area. You can enter a query or prompt and observe how it traverses the nodes. You can adjust parameters like prompt wording or model temperature on the fly and immediately re-run.

n8n

n8n also uses a node-and-connection paradigm on a visual canvas. You drag-and-drop nodes from a palette and connect them using edges to define a call flow.

n8n provides 400+ ready-made and thousands of community-created nodes. For example, to use an LLM, you might add an ‘OpenAI’ node configured for the GPT-4 model.

Similarly, each node performs some operation: calling an API, transforming data, executing custom code, etc.

n8n also includes nodes for control logic, such as If (conditional branching), Merge (converging branches), Wait (pause execution until some external signal), and Loop constructs.

For complex workflows with multiple collaborators, n8n lets you add sticky notes. These notes can be placed on the canvas to document complex sections of a workflow for other team members.

See the example below. Your notes can be extensive, as if explaining every node or step to a 10-year-old.

Feature 2. AI Agents

Langflow

Langflow provides a fundamental Agent node that encapsulates the typical Reasoning + Acting loop found in frameworks like LangChain.

When you add an Agent node to your flow, you can configure its type (e.g., a ReAct agent or Conversational agent) and connect Tools that it can invoke.

What’s powerful is that Langflow lets you turn any flow or component into a tool for an agent.

For instance, you might build a custom function using Python and then add it as an agent tool. This design allows for creative and flexible agent architectures. You can create a multi-agent system by simply configuring one Agent component to be a tool for another.

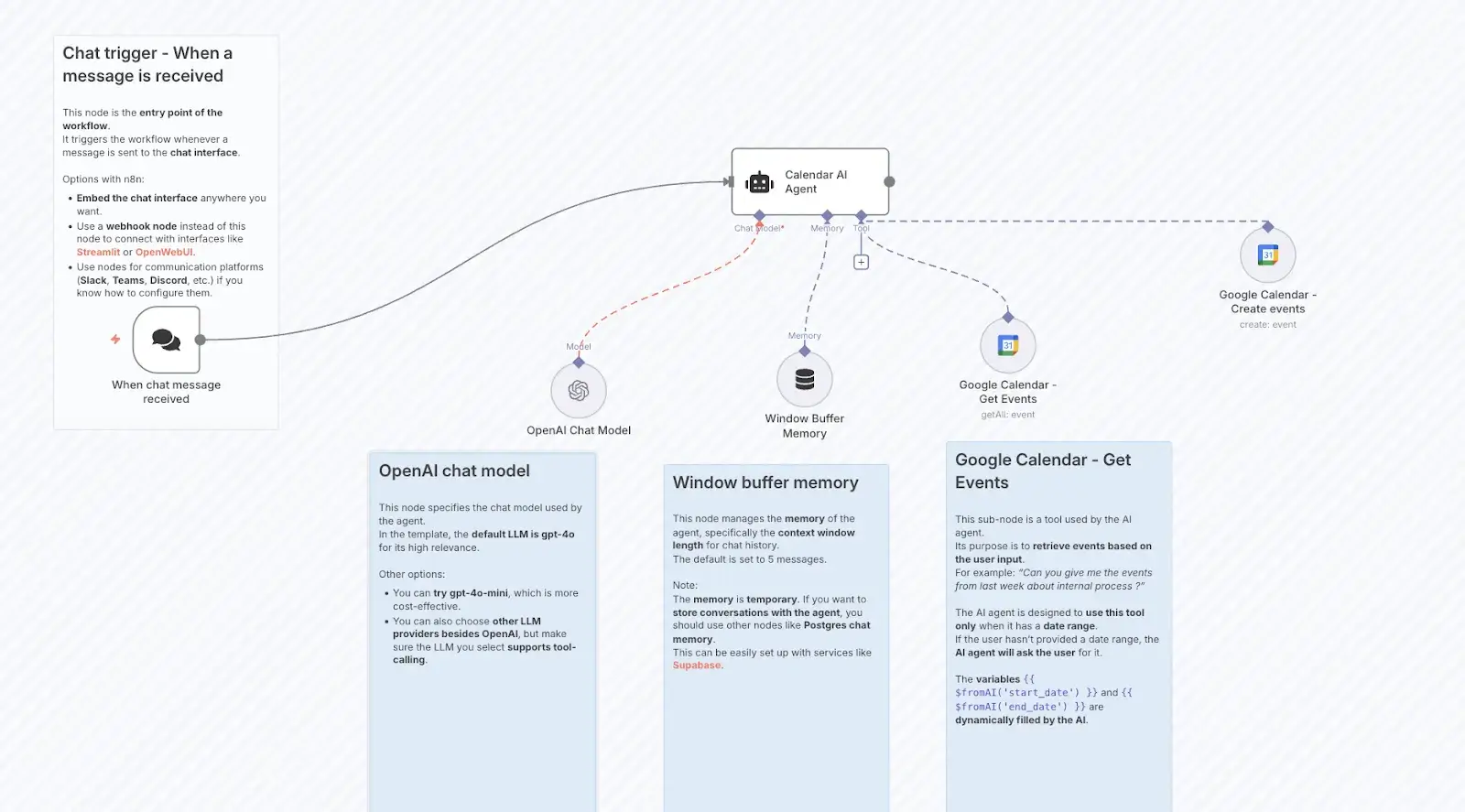

n8n

Similar to Langflow, n8n provides AI agents as a powerful node that is integrable in any workflow. However, the core paradigm is different.

n8n’s AI agent node serves as a container for an LLM along with some tools. But the way tools are provided is through adjacent nodes in the workflow. For example, n8n has dedicated nodes for common AI tools, and you can wire those into the agent’s execution path.

Conceptually, the node acts like a router that takes a user query and decides which actions to take among a set of Tools, then returns a final answer.

In practice, this requires creating a sub-workflow that bridges the agent’s decisions and tool executions. It’s not as one-click as Langflow’s agent configuration.

The best part is, n8n categorizes its AI agent nodes into two types:

- Tools Agent: They can use different tools to work on a query and even carry out actions across apps.

- Chat Agent: Best for chat-based interactions requiring memory and retrieval capabilities.

Below is a perfect example of a Tools Agent that acts as a Google Calendar Assistant.

Similarly, you can build different types of agentic workflows, like a single agent with multiple tools, a hierarchical system with a ‘gatekeeper’ agent that delegates tasks to specialists, or collaborative multi-agent teams.

You can also inspect the agent’s reasoning steps in the execution logs. Moreover, you can configure agents with sub-components, like your choice of LLM, Tools, and accessible memory.

Feature 3. RAG Building Blocks

Retrieval-Augmented Generation (RAG) is a typical pattern where an LLM is supplemented with external knowledge like company documents and databases.

Let’s see how Langflow and n8n enable RAG-first agents.

Langflow

Langflow is well-suited for building RAG pipelines. You can visually chain together data connectors, vector DB integrations, and LLM interfaces, all wired in a unified flow.

Essentially, a RAG flow in Langflow involves assembling a workflow from these fundamental components. The approach offers control over every step and encourages experimentation with advanced or custom RAG architectures.

The fact that Langflow is agnostic to specific LLMs or databases means you can mix and match. For example, use OpenAI’s text embedding model with a Pinecone vector DB, or use a local embedding model with Chroma.

Also, Langflow’s starter templates lower the barrier for Python devs to implement things like ‘Chat with your docs’ without writing boilerplate code. And since Langflow follows LangChain patterns, you can customize prompts, refine styles, add citations, and even run fully offline with local models and embeddings.

n8n

n8n treats RAG as a first-class, guided workflow. It offers a dedicated RAG Starter Template that provides two pre-built workflows: one for data ingestion and another for querying.

First, an ‘ingestion’ workflow uses a Vector Store node with an Insert Documents operation to process and store data. Second, a ‘query’ workflow uses an agent or another Vector Store node to retrieve information and generate a response.

Furthermore, n8n provides nodes and guidance for advanced RAG techniques. This includes using rerankers to improve the relevance of retrieved documents and enriching metadata to provide better context to the LLM.

One notable advantage of n8n is that you can incorporate real-time or external info retrieval easily because of its integration capabilities. However, n8n does not come with an internal vector store component. You will rely on external services for vector similarity search.

Feature 4. Human-in-the-Loop

Langflow and n8n offer distinct mechanisms for implementing human-in-the-loop workflows.

Langflow

Langflow does not have a dedicated ‘human approval’ or wait step within a flow. But it can implement sophisticated Human-in-the-Loop (HITL) capabilities in conjunction with Langgraph. It does so via two core methods:

- Checkpointers: LangGraph's checkpointers, which can be seen as a form of database, store the state of the graph, allowing it to be interrupted and resumed later. This is crucial for enabling human intervention.

- Interrupt Functionality: LangGraph provides an interrupt function that can be called within a node to pause execution. Humans can then provide feedback or input, and the execution can be resumed with this new information.

Both methods allow a workflow to pause at any node and wait for human input.

n8n

n8n provides a direct and practical solution for HITL with its core Wait node. This node can pause a workflow's execution until it receives an external signal.

This signal can be a time-initiated trigger, a specific time, a webhook call from another service, or a manual submission form.

Overall, n8n's HITL is designed for process control, which makes it easy to add simple approval gates to any automation. However, it does require thinking through how to notify the human and receive input. But since n8n integrates with email, chat apps, etc., you have many options.

Langflow vs n8n: Integration Capabilities

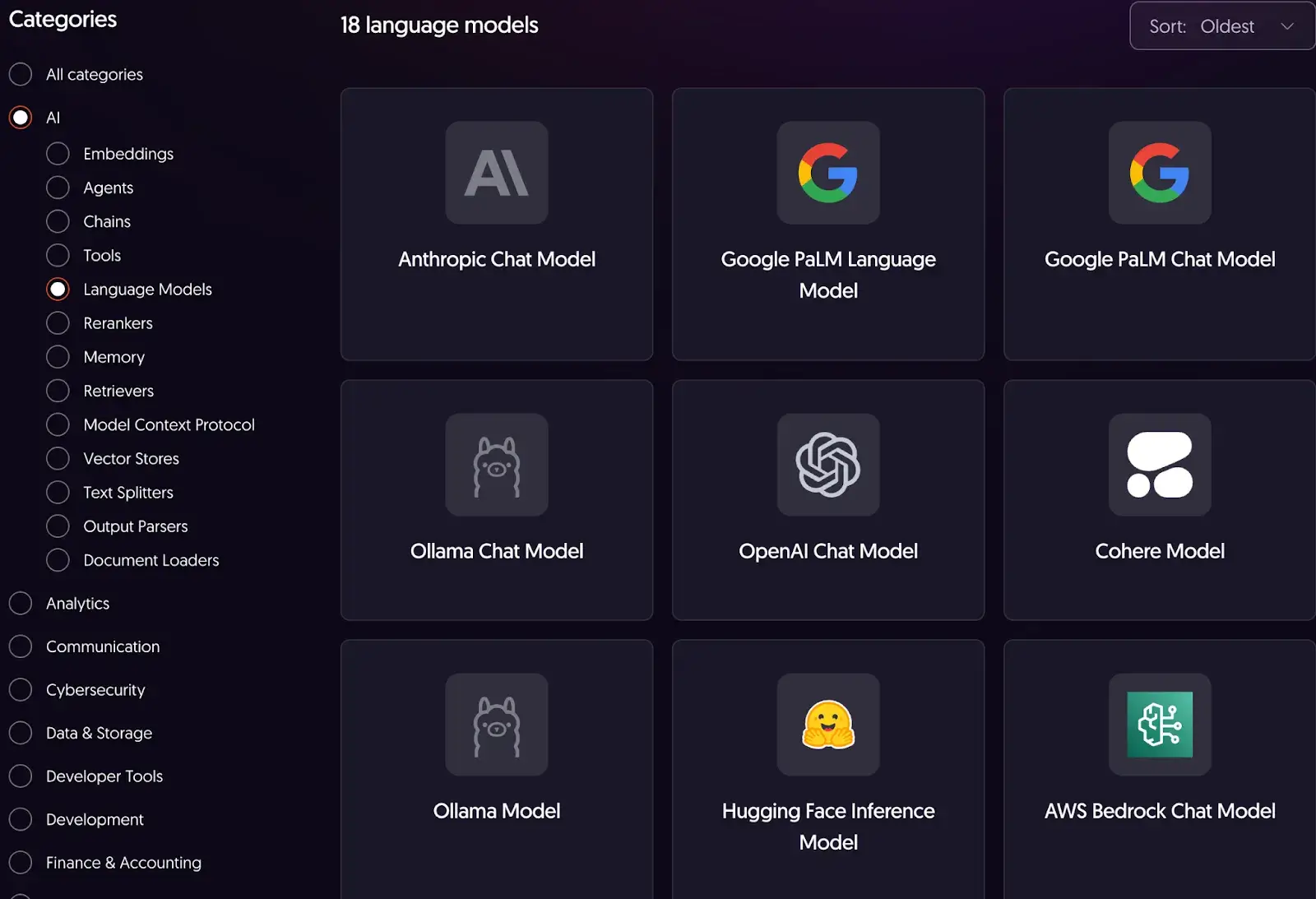

Langflow

Langflow's integration is tightly focused on the AI development stack. It integrates with:

- Major vector databases, including Chroma, Pinecone, Weaviate, Qdrant, and more.

- Cloud platforms, like Google, AWS, and more.

- Leading AI model providers, like OpenAI, Anthropic, Mistral, Hugging Face, and more.

Because Langflow is open-source, the community can contribute new integrations as custom components. It also allows Python-level custom connectors and APIs and integrates with other agent frameworks like CrewAI.

For broader connectivity to business applications, Langflow can connect to middleware platforms like Zapier.

n8n

n8n's primary strength is its vast ecosystem of over 1,000 pre-built integrations that span SaaS like CRMs, communication apps like Slack, developer tools like GitHub, APIs, and databases.

If a pre-built node does not exist for a specific service, the generic HTTP Request node allows n8n to connect to virtually any REST API.

One area both tools address is connecting to internal databases and custom apps. Langflow can use LangChain’s SQL or CSV agents to let an LLM query a database; n8n can directly query the database with its DB nodes and then feed results to the LLM.

Overall, n8n offers a broader integration palette. It is well-suited when your AI workflow needs to interface with various business applications or services beyond the typical AI toolkit.

Langflow vs n8n: Pricing

Both Langflow and n8n have open-source offerings, free to use. n8n offers commercial plans.

Langflow

The core Langflow framework is open-source. It’s completely free to use, modify, and self-host. The costs associated with using Langflow are indirect and stem from the infrastructure you provision to run it:

- API Usage: You pay your chosen providers for any LLM API calls or other external services your flows use.

- Cloud Hosting: When deploying Langflow from a cloud marketplace (e.g., AWS, Azure), you are billed for the underlying compute, memory, and storage resources.

The total cost of ownership is therefore a function of the infrastructure and API services you choose to manage yourself.

n8n

n8n uses a multi-faceted pricing model. For its managed service, n8n Cloud, it offers tiered plans that are priced based on the number of workflow executions per month.

- Starter: € 24 per month

- Pro: € 60 per month

- Business: € 800 per month

- Enterprise: Custom pricing

How ZenML Manages the Outer Loop when Deploying Agentic AI Workflows

By far, we discussed Langflow and n8n in terms of designing and running AI agent workflows. However, deploying agentic AI systems in production is a whole other realm.

This is where we use ZenML. To cover the outer loop: everything around the agent, such as orchestration in pipelines, observability, data tracking, evaluation, and scaling in different environments.

ZenML is an open-source MLOps + LLMOps framework that can integrate with Langflow or n8n workflows to provide a robust production pipeline.

Here’s how ZenML complements these tools:

1. Unified Orchestration

You can wrap a Langflow flow or an n8n workflow as steps in a ZenML pipeline.

For example, a ZenML pipeline could have steps: data preparation → run Langflow agent → post-process output → evaluation.

ZenML ensures that each step is reproducible and can run on different infrastructure using the same code and configurations.

This means you design your agent in Langflow or n8n, and then ZenML takes over to run it reliably as part of a larger automated process.

2. End-to-End Visibility and Lineage

ZenML automatically tracks and versions every component of your pipeline run. When your agent runs, ZenML can log the input prompts, outputs, the model used, and any intermediate artifacts.

Every trace is visualized using a central dashboard where you can see the entire lineage. If an agent makes a faulty decision, ZenML helps trace back exactly what happened, even if the inner logic was a black-box agent.

The bottom line: Your team gets a complete and auditable picture of how your systems are behaving, which makes debugging and compliance much simpler.

3. Continuous Quality Checks

A ZenML pipeline can include steps that automatically run evaluations after each AI output. These steps can be an automated metric like BLEU score, LLM-as-a-judge, or even a human feedback step integrated in the pipeline.

Neither Langflow nor n8n on their own has a built-in evaluation store for AI outputs; that’s the gap ZenML fills. ZenML closes the loop by running the agent, checking it, and feeding those checks back into improvement cycles.

👀 Note: At ZenML, we have built several such integrations with tools like CrewAI, LangGraph, LlamaIndex, and more. We are actively shipping new integrations that you can find on this GitHub page: ZenML Agent Workflow Integrations.

📚 Related comparison articles to read:

Which framework to choose: Langflow vs n8n?

Neither is universally superior; they are optimized for different tasks. The choice depends on your project's goals, your team's expertise, and the nature of the problem at hand. But here’s a rule of thumb that will help:

✅ Choose Langflow if: Your primary goal is to rapidly prototype and build the AI-native core of an application with maximum architectural freedom.

✅ Choose n8n if: You value a guided development experience with powerful built-in logic nodes and prefer a predictable, all-inclusive pricing model for cloud deployment or self-hosted enterprise features.

Ultimately, these frameworks are not mutually exclusive. With a robust MLOps platform like ZenML, you can orchestrate and evolve agents built on both frameworks within a unified production environment.

If you’re interested in taking your AI agent projects to the next level, consider joining the ZenML waitlist. We’re building out first-class support for agentic frameworks (like CrewAI, LangGraph, and more) inside ZenML, and we’d love early feedback from users pushing the boundaries of what AI agents can do. With ZenML, you can seamlessly integrate whichever agent framework you choose into robust, production-grade workflows. Join our waitlist to get started.👇