To build the most efficient AI agents and workflows, the choice of framework becomes a big decision.

The AI market is now flooded with new AI agentic framework options; some good, some not so good. Two emerging options you must have heard of are Langflow and LangGraph, each taking a different approach to agentic AI development.

In this Langflow vs LangGraph article, we break down the key differences in features, integrations, and use cases. We also discuss how you can potentially use both together (with a tool like ZenML) to get the best of both worlds. Let’s dive in.

Langflow vs LangGraph: Key Takeaways

🧑💻 Langflow: Langflow is a powerful visual tool for building and deploying AI-powered agents and workflows. It provides an intuitive canvas to connect LLMs, tools, prompts, and memory into flows that define an agent’s behavior. With Langflow’s no-code/low-code interface, developers can prototype multi-step or multi-agent applications quickly.

🧑💻 LangGraph: LangGraph is a newer framework from the LangChain ecosystem for building stateful, multi-agent systems as directed graphs. It gives developers fine-grained, code-level control over agent workflows by representing each step (LLM call, tool invocation, decision point, etc.) as a node in a graph and connecting these nodes with explicit edges.

Framework Maturity and Lineage

The table below compares the framework maturity and lineage of Langflow and LangGraph:

👀 Note: The data in the table above is written as of mid-2025 and may vary over time.

Langflow’s earlier launch and visually impressive interface have earned it a massive following on GitHub, with over five times the stars of LangGraph. The ‘wow-factor’ of its drag-and-drop canvas makes it easy for developers to experiment with LLM concepts and share their creations visually.

In contrast, LangGraph's adoption metrics tell a different story. Despite being a much newer framework with fewer stars, its PyPI download volume is orders of magnitude higher. This staggering number, exceeding 7 million monthly downloads, is a strong indicator of its use in automated, production-oriented environments.

This difference can be attributed to their lineage. Langflow is an independent project that has built its community from the ground up. LangGraph, however, is a direct extension of the vast and mature LangChain ecosystem.

Langflow vs LangGraph: Features Comparison

To better understand how Langflow and LangGraph stack up, let’s compare their capabilities across four core feature areas:

Now, let’s dive deeper into each feature and see how Langflow and LangGraph differ in practice.

Feature 1. Workflows and Agents

The way a framework allows you to define and execute logic is its most fundamental characteristic. Langflow and LangGraph represent two opposing philosophies: visual abstraction vs. code-based explicit control.

Langflow

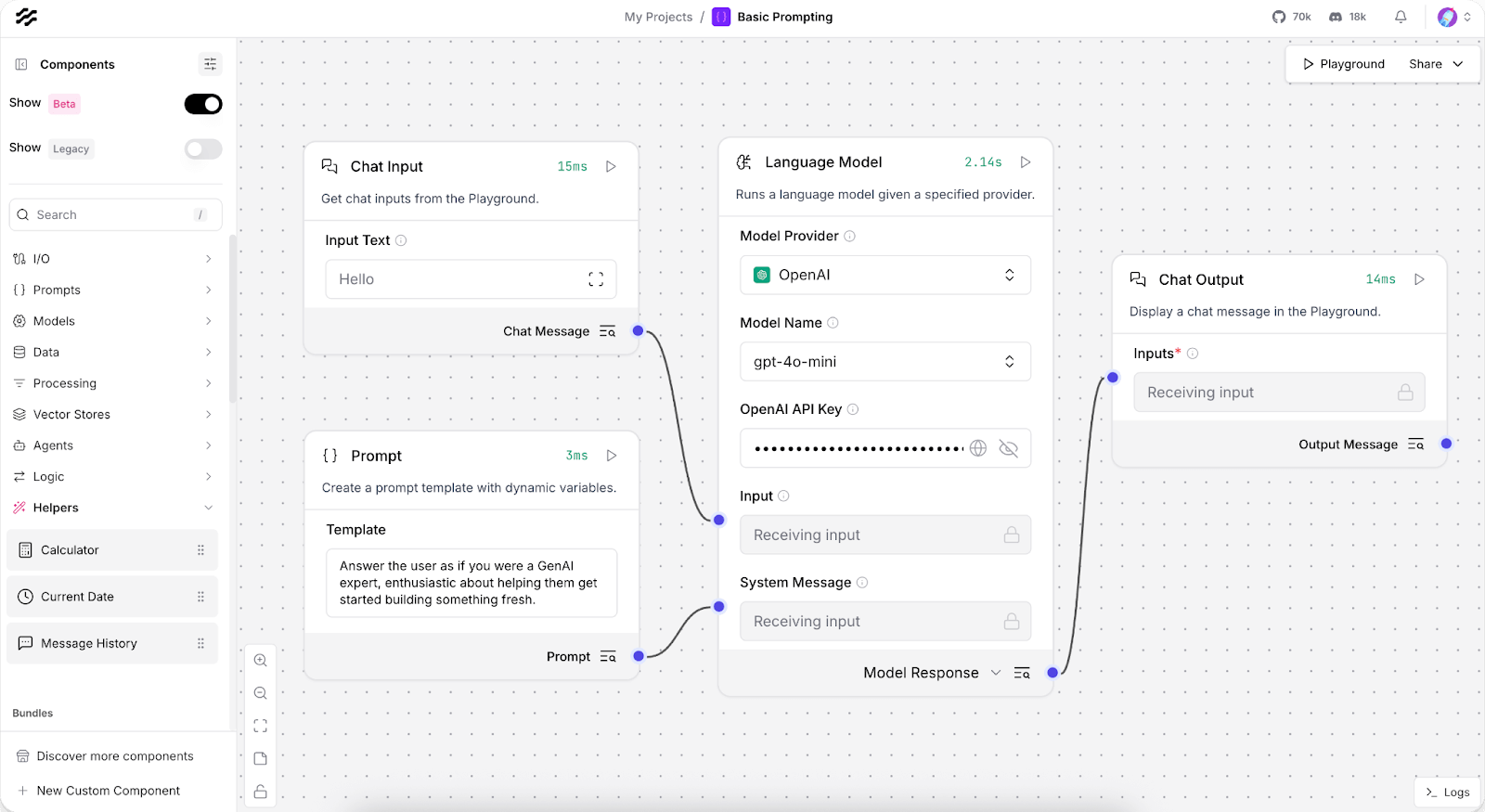

Langflow is designed primarily for low-code and no-code builders, using the concept of ‘flows’ to define an AI application’s workflow. A flow is a directed pipeline of components that can include LLMs, prompts, tools (functions/actions), and memory stores.

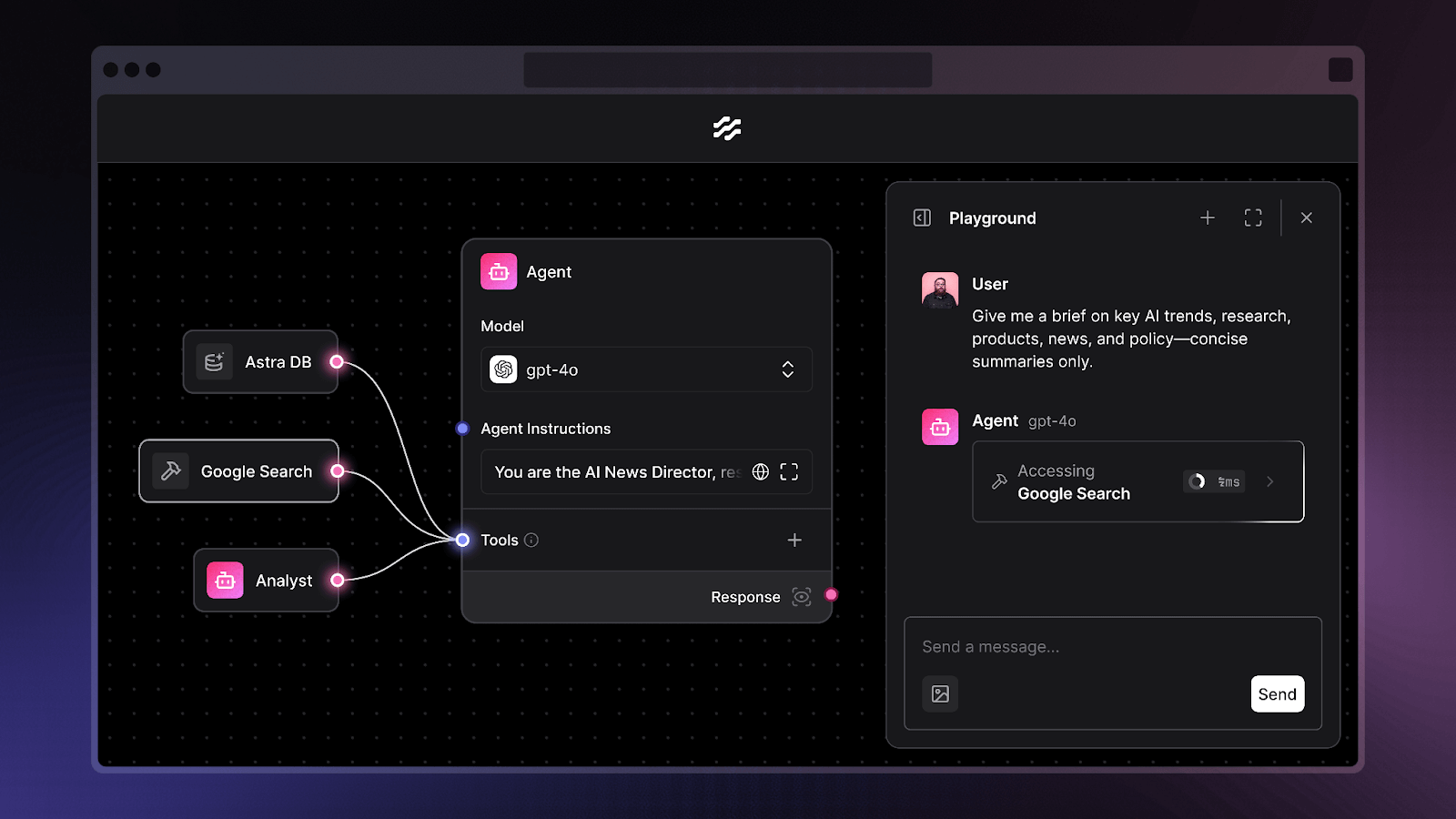

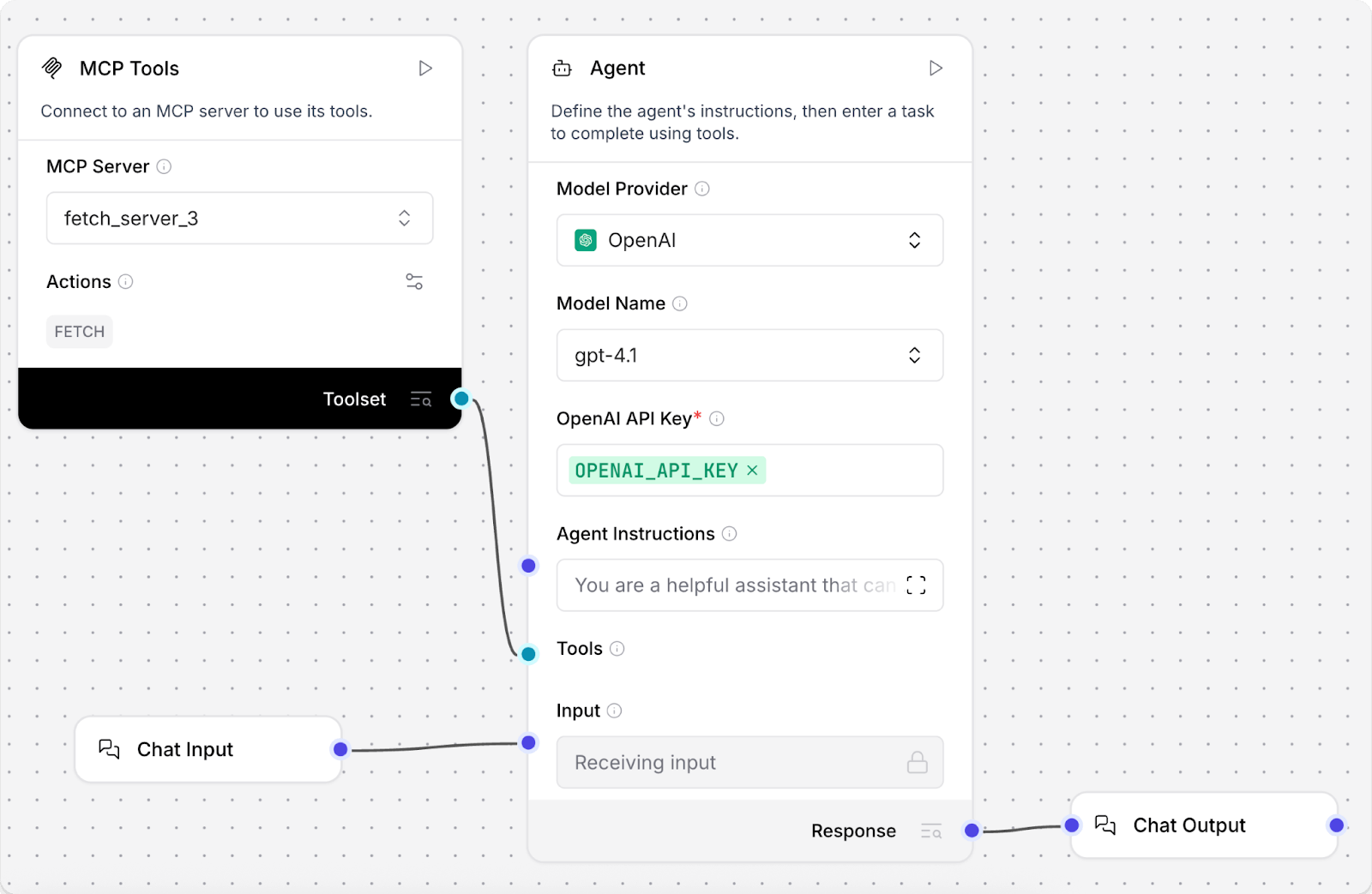

The UI: A Visual, Low-Code Canvas

The core of Langflow is its user interface, which provides a drag-and-drop canvas for building applications. This visual-first approach is its key differentiator.

You can select components from a menu and place them on the canvas, then visually wire them together to define the flow of data and logic. This empowers you to construct and iterate on complex chains and agentic workflows without writing extensive code, making AI development more accessible.

The Langflow Playground further enhances this by providing an interactive interface to run flows step-by-step. This allows you to test queries, observe agent behavior, and tweak prompts on the fly.

An Agent component in Langflow encapsulates an LLM ‘brain’ that autonomously decides which connected tool to use.

You visually connect an Agent to tools like web search or calculators, and Langflow handles the underlying logic.

What’s more, the framework also lets you build multi-agent workflows by including multiple Agent components that interact in sequence, or achieve conditional branching using logic components.

LangGraph

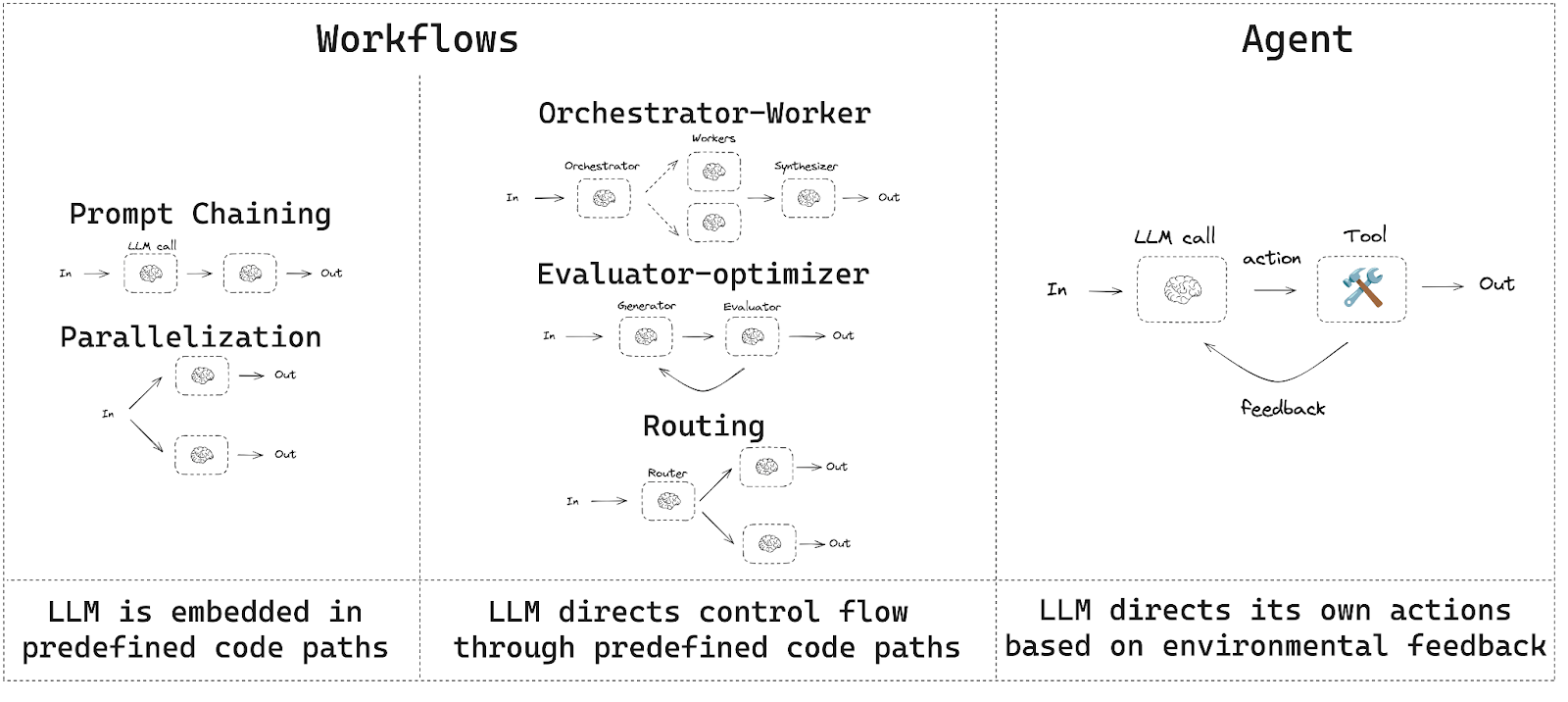

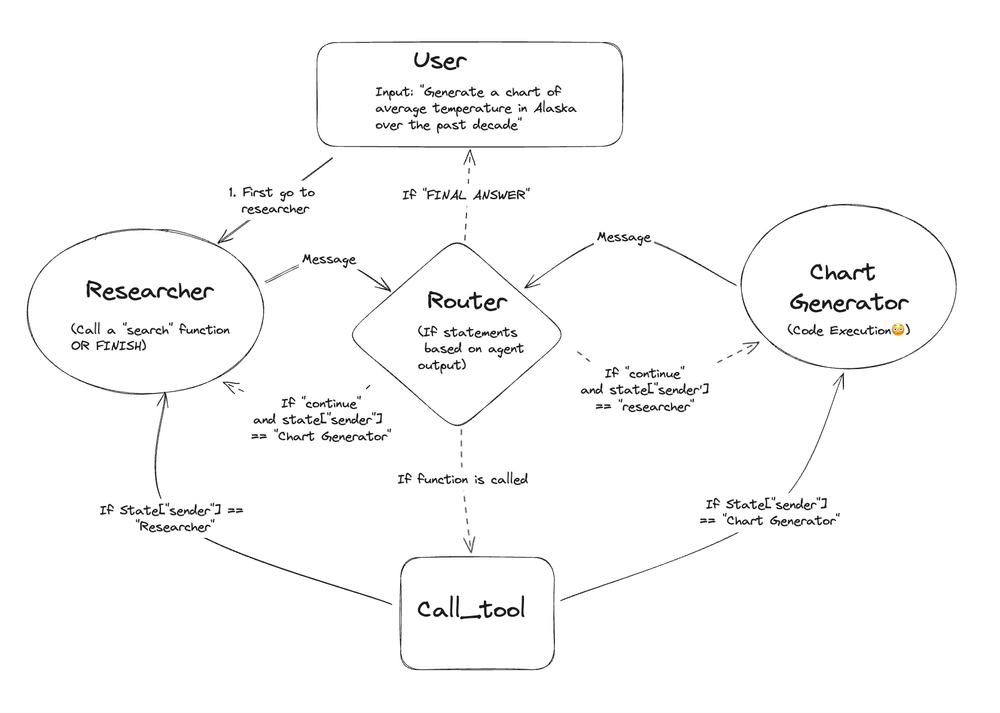

LangGraph approaches workflows from a code-centric angle. Instead of a visual canvas, you define an agent workflow by writing code that specifies a graph of nodes and their connections.

Each node represents a step – an LLM call, a tool execution, or a conditional branch – and you explicitly define the edges that direct the flow of information.

This design treats the agent’s decision process as a state machine that you, the developer, configure with precision.

The benefit? You get granular control to create sophisticated behaviors like cyclical loops (revisiting a task until a condition is met) or complex routing.

Multi-agent collaboration is where LangGraph excels. Since any node can be a sub-graph or another agent, it’s straightforward to implement patterns like an Agent Supervisor overseeing other agents or hierarchical teams. This explicit, code-based structure allows for robust and auditable agent architectures.

📚 Related reading: LangGraph alternatives

Bottom Line: The choice between them comes down to ease-of-use vs. expressive power.

Langflow empowers low-code builders to stand up powerful agent workflows quickly through a visual UI.

LangGraph offers developers the fine-grained control to craft bespoke, complex agent architectures that can handle intricate logic, at the cost of writing more code.

Feature 2. Agent Architecture

The underlying architecture defines how flexible, configurable, and powerful an agentic system can become. Langflow and LangGraph offer distinctly different architectural approaches: abstracted simplicity versus granular control.

Langflow

In Langflow, an ‘Agent’ is a concrete, pre-built component that you add to your flow from the component menu.

Its internal architecture, which typically follows a standard pattern like ReAct (Reason+Act), is largely abstracted away from the user.

A developer's job is not to build the agent's reasoning loop but to configure the agent component by connecting its inputs: the LLM to use for thinking, the tools it can access, a memory object for conversation history, and a system prompt to guide its behavior.

This component-based approach makes it incredibly simple to get a standard agent running. The complexity of the agent's internal workings is hidden, which allows you to focus on the inputs and outputs.

While this is great for speed and simplicity, it offers less flexibility if you need to fundamentally alter the agent's decision-making process or create a novel collaborative architecture.

LangGraph

LangGraph doesn’t provide a monolithic ‘Agent’ component. Instead, it provides the low-level building blocks to construct any agent architecture you can design in code. This allows for a much wider range of sophisticated patterns:

- Routers: A simple but powerful architecture where an LLM's primary job is to act as a router. Based on the input and current state, it selects the next node to execute from a predefined set of options, often using structured output to make reliable decisions.

- Tool-Calling Agents: The classic ReAct loop is implemented as a cycle in the graph. A node containing the LLM decides which tool to call, a

toolnode executes it, and the result is fed back into the LLM node for the next step of reasoning. This loop continues until the agent has solved your request. - Hierarchical Agent Teams: LangGraph truly excels at creating complex multi-agent systems. A developer can build a ‘supervisor’ graph that orchestrates other, more specialized ‘worker’ graphs. These worker graphs, or subgraphs, operate with their own isolated state and tools, reporting their results back to the supervisor.

Bottom line: This difference in approach speaks directly to the target use case. Langflow operates at a higher level of abstraction, offering agents-as-a-service within its UI. It’s a tool for using agents.

LangGraph operates at a lower level, providing the primitives for building agent runtimes. If a standard agent implementation meets your needs, Langflow is faster. If you need to invent a new way for agents to handle failures, collaborate, or reason, LangGraph has the necessary power and control.

Feature 3. State Management and Memory

An agent's ability to remember, learn, and maintain context over time is what separates a simple chatbot from a truly intelligent system. Langflow and LangGraph handle this critical aspect differently; one highlights a focus on temporary context and the other on durable statefulness.

Langflow

In Langflow, state management is relatively straightforward. Each flow execution is typically treated as a single session (especially for chat agents).

Langflow includes Memory components that you can add to your flow to enable persistence of conversation state.

For example, you can drag in a ‘Message History’ component and a ‘Store Messages’ component along with a memory backend like a Redis-based chat memory.

By connecting these to your Agent, you give it the ability to retain the last N interactions or store/retrieve messages by a session_id. This allows your agent to have short-term memory of the conversation – crucial for chatbots that need context from previous user prompts.

That said, Langflow’s approach to memory is largely through external stores. It leverages LangChain’s memory abstractions like ConversationBufferMemory, under the hood.

You configure a memory component with, say, a vector database or an in-memory database, and Langflow will use that to fetch relevant past dialogues or facts when the agent runs.

The Memory is scoped per session, and if you reset or start a new session (or if you don’t include memory), the agent doesn’t remember earlier queries. There isn’t an automatic global long-term memory in Langflow unless you explicitly wire one via a vector store.

That being said, Langflow does offer some observability of state during development. The interactive execution mode lets you inspect the state at each step.

LangGraph

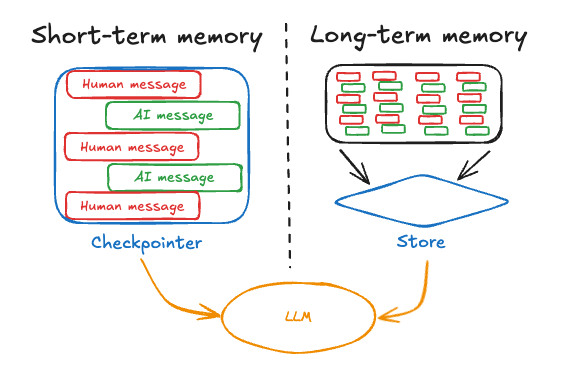

State management is a core strength of LangGraph. By design, LangGraph treats the agent’s execution as a stateful process. Every time an agent (graph) runs, it maintains a State object that can carry information from node to node.

What’s more, LangGraph can persist this state using a checkpointer mechanism. After each node (or at configurable intervals), the current state can be saved to a database or storage. This enables a few powerful capabilities:

- Resume/Recovery: If an agent workflow is interrupted, say due to server crashes or the process is stopped, LangGraph can reload the last checkpoint and resume from that point.

- Step Replay (Time Travel): LangGraph allows you to jump back to a prior state, alter something, and then continue the execution.

- Parallel state: Because LangGraph supports parallel branches, it manages multiple state copies that later merge.

Regarding memory, LangGraph distinguishes between short-term and long-term memory in a very structured way. Short-term memory is basically the conversation or task state within the current thread of execution. This is automatically kept in the State and saved so it can be restored. LangGraph’s short-term memory, being part of the agent state, is always accessible to any node that needs context.

For long-term memory, LangGraph provides constructs for semantic, episodic, and procedural memory: these align with storing knowledge that persists across sessions or is more permanent.

Bottom line: Langflow's memory system is designed to solve the problem of a chatbot forgetting the last turn of a conversation.

LangGraph's state management system is designed to solve the problem of a production agent losing hours of work if a server restarts.

The former is about context; the latter is about true statefulness, a non-negotiable for many real-world applications.

Feature 4. Model Context Protocol (MCP)

The Model Context Protocol (MCP) is an emerging standard to allow AI applications and agents to discover and use each other's capabilities as tools. The frameworks' support for MCP reveals their strategic positioning within the broader AI ecosystem.

Langflow

Langflow fully embraces MCP by functioning as both a server and a client.

- As an MCP Server: Any Langflow project can automatically expose all of its constituent flows as tools over a standard MCP endpoint. This means another MCP-compatible application, like the Cursor code editor, can dynamically discover and orchestrate these flows.

- As an MCP Client: Langflow also includes an

MCP Toolscomponent. This component can connect to any external MCP server, allowing a flow to import and use tools exposed by other applications.

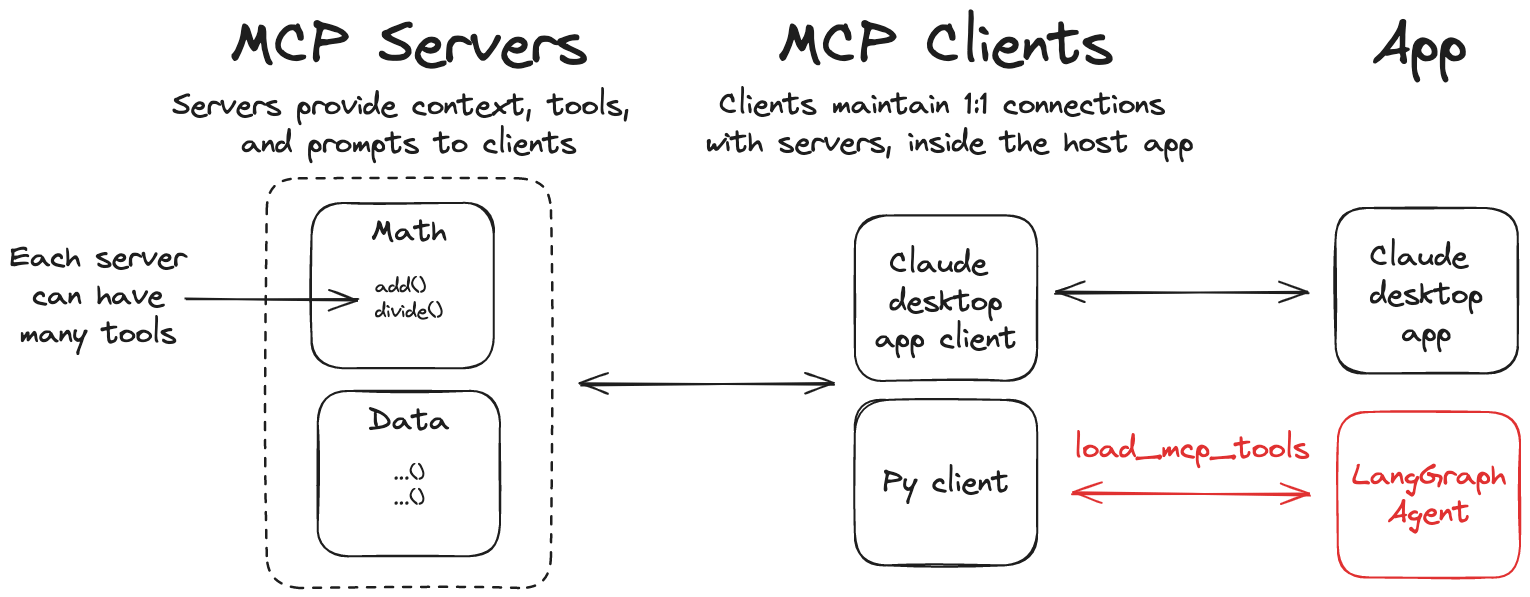

LangGraph

LangGraph's primary role within the MCP ecosystem is that of a powerful client.

It provides adapters and functions that allow a LangGraph agent to connect to MCP servers and load their exposed resources, i.e., tools and prompts, into its workflow. This creates a powerful, synergistic relationship between the two frameworks.

Your team can use Langflow's visual interface to rapidly build and expose a specific capability, like a complex document query engine, as an MCP tool. Then, a core engineering team using LangGraph could build a sophisticated supervisor agent that consumes this tool as part of a larger, more complex business process.

Bottom line: Langflow becomes the tool factory, and LangGraph becomes the assembly line. This shows that the frameworks are not merely competitors but can be complementary parts of a modern, interoperable AI stack.

Langflow vs LangGraph: Integration Capabilities

No framework exists in a vacuum. The ability to connect to other models, data sources, and tools is crucial for building real-world applications.

Langflow

Langflow has a horizontal integration strategy that positions the platform as a neutral hub that can connect to a wide and diverse ecosystem of third-party services. This ‘best-of-breed’ approach provides developers with maximum flexibility.

Key integration categories include:

- LLM Providers: OpenAI (GPT-4o, o3), Anthropic (Claude), Google (Gemini), Meta’s LLaMA models, Hugging Face models (via API or local), Cohere, etc.

- Vector Databases: Langflow includes components for popular vector stores like Pinecone, Weaviate, Qdrant, Milvus, Vectara, Redis, Cassandra/Astra DB, and more.

- Data Connectors and Tools: Via its Tools and Data components, Langflow offers integrations for common services. Need a web search? There’s likely a Bing or Google search tool. Need to interact with PDFs or Notion? Langflow has template flows and components.

- Custom Python logic: If a certain integration isn’t provided, Langflow allows custom code components. You can write a small Python function directly in the UI or by modifying the code and using it as a component.

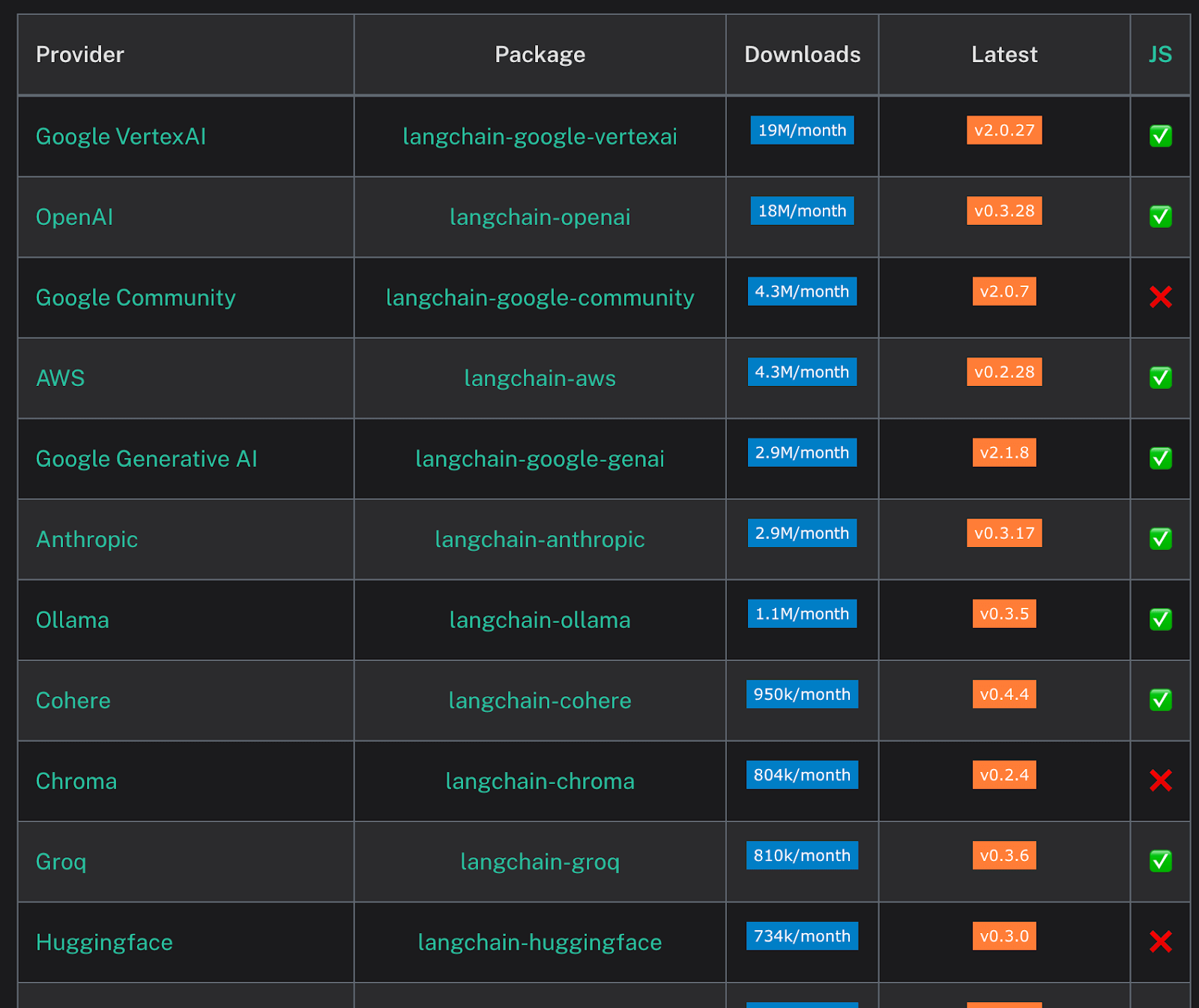

LangGraph

LangGraph’s strength lies in its deep, vertical integration with the LangChain ecosystem. This provides a highly cohesive and powerful development experience for those invested in its stack.

- LangChain Components: As a LangChain library, LangGraph can natively use any LangChain-compatible LLM, tool, vector store retriever, or memory module as a node within a graph.

- LangSmith: This is LangGraph's premier integration. Traces from LangGraph executions are visualized step-by-step in LangSmith, offering an unparalleled view into the agent's reasoning process, state transitions, and tool calls.

- LangGraph Platform and Studio: The ecosystem is completed by a commercial platform for deploying and scaling agents, which includes LangGraph Studio, a visual IDE for prototyping, debugging, and interacting with graphs.

Bottom line: The choice here is between flexibility and cohesion. Langflow offers the breadth to connect with a diverse, existing stack.

LangGraph offers the depth of a purpose-built, vertically integrated toolset designed specifically for building and debugging stateful agents.

Langflow vs LangGraph: Pricing

Both frameworks are open-source, but the total cost of ownership depends on their approach to managed services and infrastructure.

Langflow

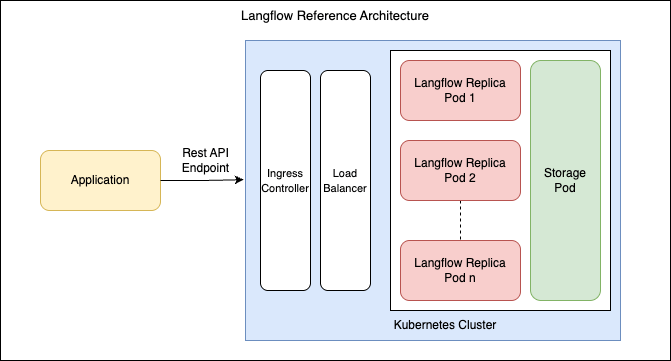

The Langflow software is free and open-source under an MIT license. There are no direct licensing fees or subscriptions.

The costs associated with using Langflow are indirect and stem from the infrastructure you provision to run it:

- API Usage: You pay your chosen providers for any LLM API calls or other external services your flows use.

- Cloud Hosting: When deploying Langflow from a cloud marketplace (e.g., AWS, Azure), you are billed for the underlying compute, memory, and storage resources.

The total cost of ownership is therefore a function of the infrastructure and API services you choose to manage yourself.

LangGraph

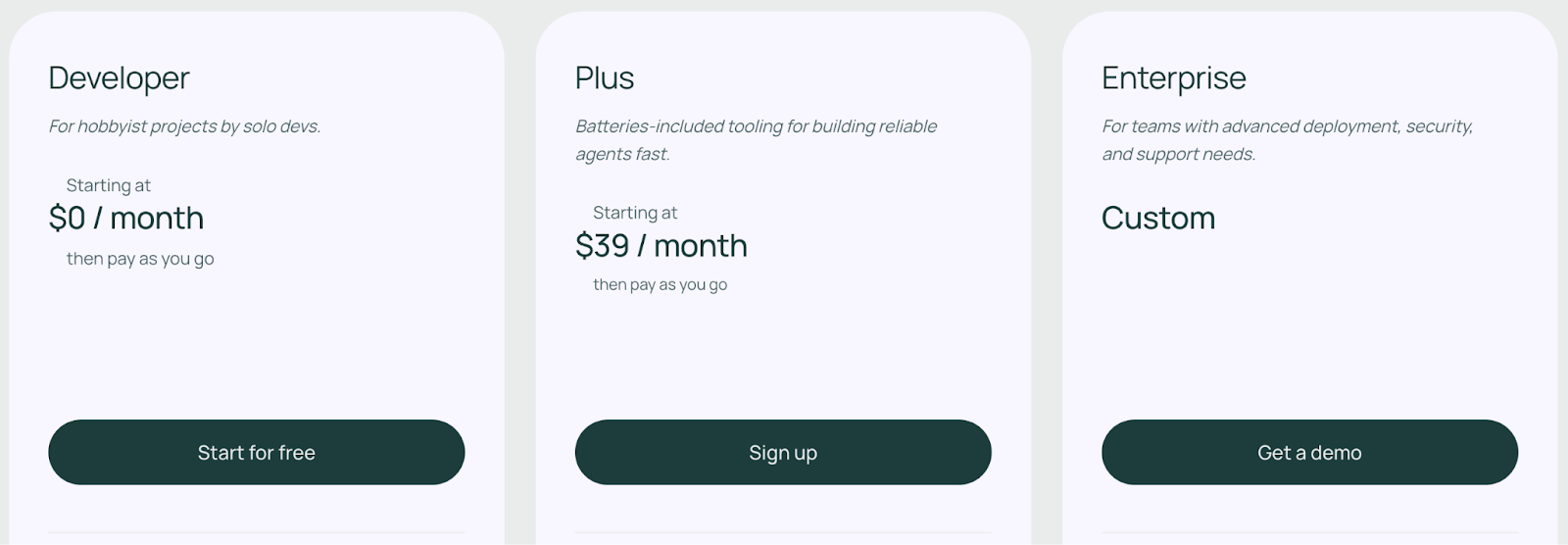

LangGraph employs a freemium model that separates the open-source library from its commercial platform.

The langgraph Python and JavaScript libraries are completely free (MIT license) and can be self-hosted without any usage limits from LangChain.

LangGraph Platform

This is the managed commercial offering with a tiered structure designed to scale with your needs and has three plans to choose from:

- Developer: Includes up to 100K nodes executed per month

- Plus: $0.001 per node executed + standby charges

- Enterprise: Custom-built plan tailored to your business needs

📚 Related article:LangGraph pricing guide

How ZenML Helps In Closing the Outer Loop Around Your Agents

Langflow and LangGraph solve the inner loop: they let you define agents, connect tools, and run reasoning steps. Real production systems still need an outer loop that handles everything around the agent. ZenML fills that gap and wraps any agent framework in a repeatable, observable process. Here’s how:

End‑to‑End Orchestration

ZenML treats a Langflow flow or a LangGraph graph as a step in a ZenML pipeline. The platform can pre‑process data, call the agent, post‑process the output, and push results to downstream services. Pipelines run on local machines, CI runners, Kubernetes, or managed clouds using the same code, so promotion from dev to prod is trivial.

Unified Visibility and Lineage

ZenML records every run automatically. Inputs, prompts, model versions, intermediate artifacts, and final outputs are stored in a central metadata store. A single dashboard lets engineers trace a decision back to the raw data and see exactly which node in the agent graph produced it.

Continuous Evaluation and Feedback

Quality checks are first‑class steps. After the agent finishes, ZenML can launch LLM‑as‑judge evaluators, rule‑based tests, or human review tasks. Failures raise alerts or trigger a retraining pipeline, bringing a true feedback loop to agent development.

Production, Deployment, and Scaling

ZenML abstracts infrastructure details. Choose a stack - Docker, VM, serverless, or Kubernetes - and ZenML provisions resources, manages secrets, and handles parallel execution. Pipelines can run hourly or in response to events without code changes.

In short, ZenML turns experimental agents into reliable services by adding orchestration, observability, evaluation, and scalable deployment; all without locking you into a single vendor.

📚 Related comparison articles to read:

Which Agentic AI Platform Is Best For You?

The choice between Langflow and LangGraph depends entirely on your project's goals, your team's expertise, and your requirements for control versus flexibility.

✅ Choose Langflow if:

- Your primary goal is rapid prototyping and visual development.

- You need to build and demonstrate LLM workflows quickly for stakeholders.

- Your application involves straightforward, linear (DAG-based) logic.

✅ Choose LangGraph if:

- Your primary goal is to build reliable, auditable, and production-grade agentic systems.

- You require fine-grained control over the agent's logic, including cycles for self-correction and iteration.

- You are building complex, long-running, or multi-agent applications that require durable, persistent state.

✅ Use ZenML when:

- You are ready to move any agentic application from a research notebook to a robust production system.

- You need reproducibility, scalability, automated evaluation, and a unified platform to manage the entire lifecycle of your AI agents, regardless of the framework used to build them.

ZenML's upcoming platform brings every ML and LLM workflow - data preparation, training, RAG indexing, agent orchestration, and more - into one place for you to run, track, and improve. Type in your email ID below and join the early-access waitlist. Be the first to build on a single, unified stack for reliable AI. 👇