LangChain agent chains integrated with ZenML

LangChain provides a powerful framework for building chains and agents that combine LLMs with tools like search, retrieval, or calculators. By connecting LangChain to ZenML, you can run these chains inside reproducible pipelines with full artifact tracking, observability, and seamless scaling from notebooks to production orchestrators.

Features with ZenML

- Orchestrated runnable chains. Run LangChain chains as reproducible ZenML pipeline steps.

- Tracked outputs. Store step inputs and outputs, such as prompts, summaries, and tool results, as versioned artifacts for lineage and debugging.

- Custom evaluation hooks. Add eval steps to score generations, monitor latency, or validate correctness.

- Composable workflows. Combine LangChain with RAG, agents, or deployment steps in a single DAG.

- Production-ready pipelines. Move from prototyping to scalable workflows on Kubernetes, Airflow, or other stacks.
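The evaluation-hook idea above could look roughly like the sketch below. It assumes plain Python functions that you would decorate with ZenML's `@step` in a real pipeline. The names `timed_invoke` and `score_output` are hypothetical, and the checks (a character budget and a keyword match) are placeholder metrics, not a ZenML or LangChain API.

```python
import time
from typing import Any, Callable, Dict, Tuple


def timed_invoke(fn: Callable[[Any], str], arg: Any) -> Tuple[str, float]:
    """Call fn(arg) and return (output, latency in seconds).

    Hypothetical helper: in practice `fn` could be a chain's `invoke` method.
    """
    start = time.perf_counter()
    output = fn(arg)
    return output, time.perf_counter() - start


def score_output(output: str, keyword: str, max_chars: int = 500) -> Dict[str, bool]:
    """Hypothetical correctness checks on one generation (placeholder metrics)."""
    return {
        "within_length": len(output) <= max_chars,
        "mentions_keyword": keyword.lower() in output.lower(),
    }


if __name__ == "__main__":
    # Stand-in generator so the sketch runs without calling an LLM
    fake_chain = lambda inputs: "LangChain is a framework for LLM apps."
    output, latency = timed_invoke(fake_chain, {"url": "https://example.com"})
    print(score_output(output, "framework"), f"{latency:.6f}s")
```

Because `score_output` takes a string and returns a dictionary, it maps naturally onto a step that consumes the summary artifact. Latency is measured around the call itself, so it belongs wherever the chain is invoked.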

Main Features of LangChain

- Runnable chains. Execute LangChain chains with integrated tools and components.

- Math and search. Extend workflows with calculators, web search, and retrieval helpers.

- Composable architecture. Build flexible pipelines using chain composition and pipe operators.
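To illustrate how pipe-operator composition works, here is a toy sketch in plain Python. The `Runnable` class below is a hypothetical stand-in written for this illustration, not LangChain's own class. It only mimics the pattern where `a | b` builds a new runnable that feeds `a`'s output into `b`. The `fake_llm` stage is a placeholder that returns a fixed string instead of calling a model.

```python
from typing import Any, Callable


class Runnable:
    """Toy stand-in for a LangChain runnable (illustrative only)."""

    def __init__(self, func: Callable[[Any], Any]) -> None:
        self.func = func

    def invoke(self, value: Any) -> Any:
        return self.func(value)

    def __or__(self, other: "Runnable") -> "Runnable":
        # a | b returns a new Runnable that passes a's output to b
        return Runnable(lambda value: other.invoke(self.invoke(value)))


# Hypothetical stages of a summarization chain
build_prompt = Runnable(lambda inputs: f"Summarize the page at {inputs['url']}")
fake_llm = Runnable(lambda prompt: f"[model output for: {prompt}]")  # no real model call
to_text = Runnable(lambda text: text.strip())

chain = build_prompt | fake_llm | to_text

if __name__ == "__main__":
    print(chain.invoke({"url": "https://python.langchain.com/docs/introduction/"}))
```

Each stage only needs an `invoke` method. This is why a composed chain can itself be dropped into a single ZenML step and called with one input dictionary.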

How to use ZenML with LangChain

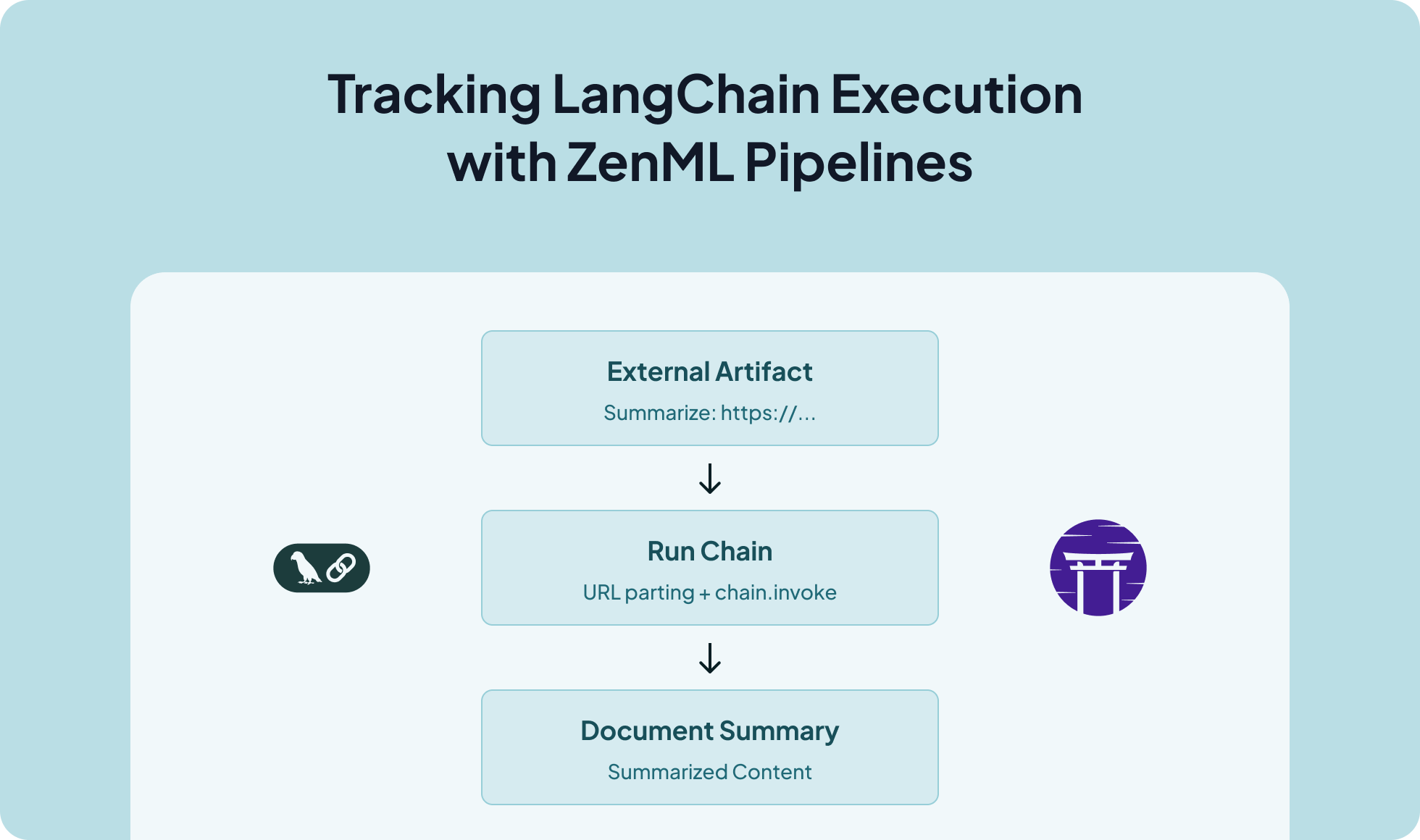

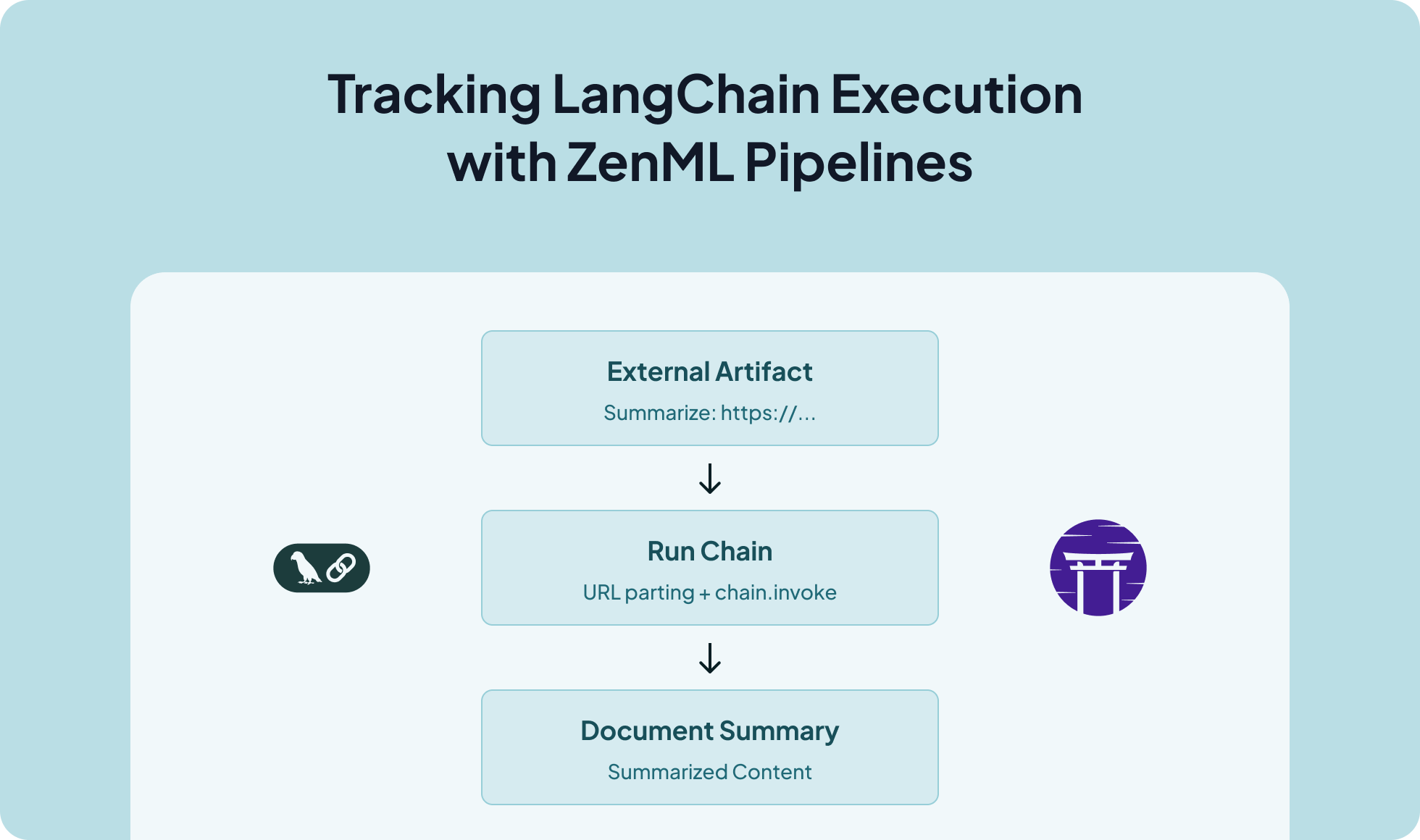

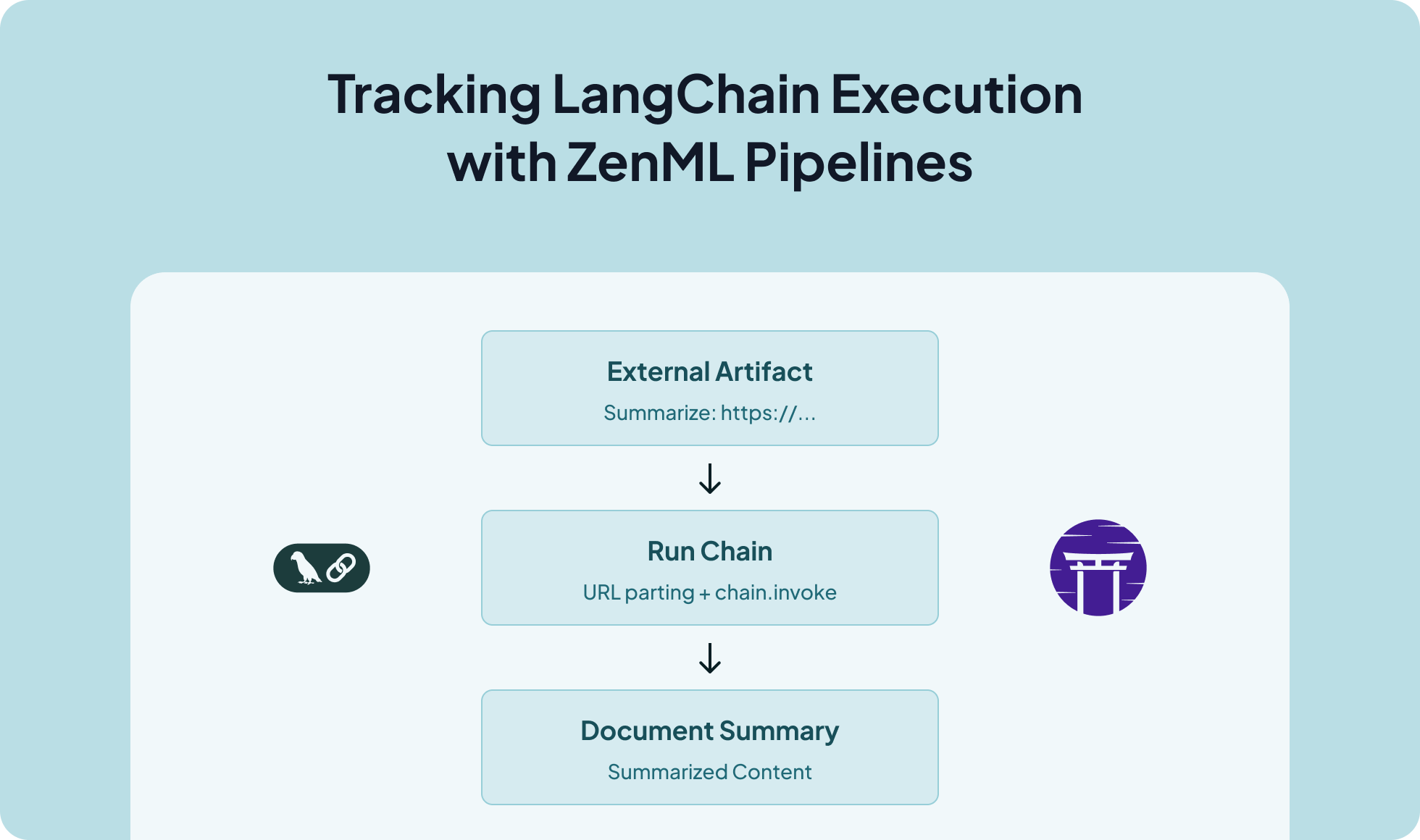

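from zenml import ExternalArtifact, pipeline, step

# `langchain_agent` is assumed to be a local module (not a PyPI package) that defines the LangChain `chain`
from langchain_agent import chain


@step
def run_chain(url_input: str) -> str:
    # Extract the URL from input in the "Summarize: URL" format
    if ":" in url_input and url_input.startswith("Summarize"):
        url = url_input.split(":", 1)[1].strip()
    else:
        url = url_input  # Fall back to treating the whole input as the URL
    # Invoke the chain with its expected input dict and return the summary
    return chain.invoke({"url": url})


@pipeline
def langchain_summarization_pipeline() -> str:
    # Wrap the input in an ExternalArtifact so ZenML stores and versions it
    doc = ExternalArtifact(
        value="Summarize: https://python.langchain.com/docs/introduction/"
    )
    # Pass the artifact itself (not doc.value) so it is tracked as a step input artifact
    return run_chain(doc)


if __name__ == "__main__":
    # Calling the pipeline triggers a run and returns the run object, not the summary,
    # so load the step's output artifact to print the summary itself
    run = langchain_summarization_pipeline()
    print(run.steps["run_chain"].output.load())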
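The URL parsing inside `run_chain` can be pulled out into a plain function so it can be unit-tested without running a pipeline. The sketch below uses a hypothetical helper, `extract_url`, that is not part of the original example. It is also slightly stricter than the inline check: it strips only an exact `Summarize:` prefix, so an input like `Summarize https://example.com` is returned unchanged instead of being split at the colon in `https:`.

```python
PREFIX = "Summarize:"


def extract_url(url_input: str) -> str:
    """Return the URL from a "Summarize: URL" string, or the input unchanged."""
    if url_input.startswith(PREFIX):
        return url_input[len(PREFIX):].strip()
    # Fall back to treating the whole input as the URL
    return url_input


if __name__ == "__main__":
    print(extract_url("Summarize: https://python.langchain.com/docs/introduction/"))
```

With this helper, the step body reduces to `return chain.invoke({"url": extract_url(url_input)})`.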

Additional Resources

- ZenML Agent Framework Integrations (GitHub)

- ZenML Documentation

- LangChain Documentation