LlamaIndex (formerly GPT Index) is a framework and platform to build agentic AI systems – in simpler terms, it helps you create GPT-powered knowledge assistants on top of your own data.

It’s open-source at its core (meaning you can self-host it for free), but the company also offers a managed cloud service with extra features and support. Naturally, that raises the question of cost: is LlamaIndex’s pricing considered high, low, or reasonable?

In this LlamaIndex pricing guide, we break down the framework’s pricing plans and the key factors that affect your bill, so you can decide if it’s worth the investment for your projects.

Finally, since running AI agents in production involves more than just LlamaIndex itself, we’ll show how ZenML - our open-source MLOps + LLMOps framework - can complement LlamaIndex to manage the complete lifecycle of your AI agents.

TL;DR

Here’s a quick summary of LlamaIndex’s pricing tiers, followed by guidance on when LlamaIndex is a good choice (and when it isn’t):

When LlamaIndex is worth considering:

✅ You want a reliable way to connect LLMs with your proprietary data. LlamaIndex provides built-in document parsing, indexing, and an agent framework, saving you from writing all that glue code yourself.

✅ You appreciate having an open-source core with optional cloud scaling. You can start for free with the open-source Python library (or the free tier on LlamaIndex Cloud) and only begin paying as your usage grows.

✅ You need an agent framework and document QA in one solution. LlamaIndex isn’t just a vector database or an LLM API – it combines data connectors, an indexing engine, and an agent orchestration framework. For teams that want an all-in-one toolkit for Retrieval-Augmented Generation (RAG) and agent workflows, investing in LlamaIndex can replace a bunch of separate tools.

When you might not need LlamaIndex:

❌ If you plan to build everything in-house with basic open-source tools. For simple Q&A over documents, you might get by with a vector database and direct LLM calls without LlamaIndex’s advanced parsing. Budget-conscious projects could stick to purely free libraries (though you’d be writing a lot of the logic manually).

❌ If you expect to operate at an extremely large scale right away. LlamaIndex’s usage fees can add up for high-volume deployments. For instance, using the high-accuracy Premium parsing mode costs about 60 credits per page – meaning 100,000 pages would consume 6 million credits (roughly $6,000).

📚 Also read: LlamaIndex vs LangGraph

LlamaIndex Pricing Plans Overview

LlamaIndex offers two types of plans: open-source (self-managed) and LlamaIndex Cloud (managed).

The open-source llama-index library is completely free to use under the MIT license – you can run it locally or on your servers, and you’ll only pay the underlying LLM provider (e.g., OpenAI API costs) for any model calls.

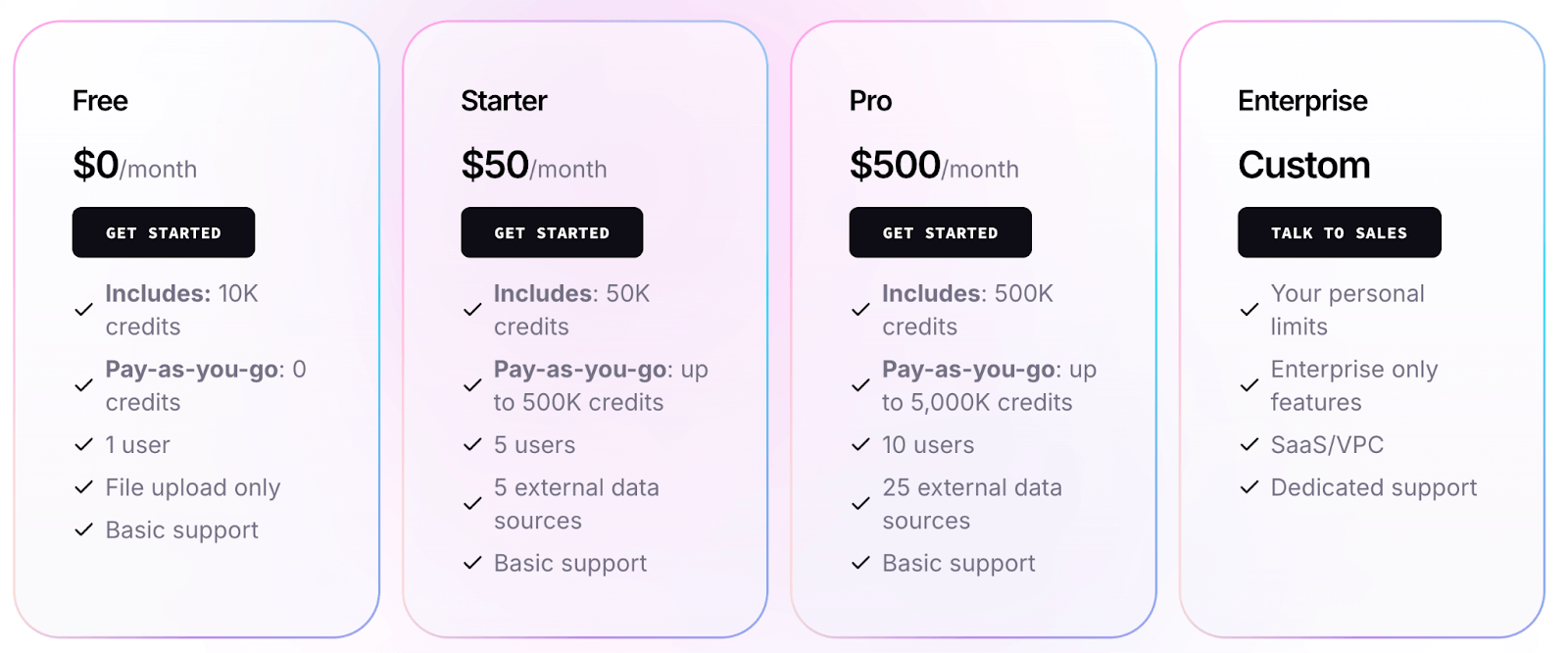

In contrast, LlamaIndex Cloud is the hosted platform that provides a web UI, scalable backend, and extra services (like document parsing and data connectors) on top of the core framework. The cloud service uses a credit-based pricing model:

- Every operation on LlamaIndex Cloud – parsing a document, creating an index, running a query, extracting data, etc. – consumes credits. Credits are basically the platform’s internal currency for compute/LLM usage. By definition, 1,000 credits = $1.00 (in the default region). So using 1 credit is $0.001 of value.

- Each subscription plan (Free, Starter, Pro, Enterprise) includes a monthly allotment of credits. If you exceed the included credits, you can pay-as-you-go for additional credits (up to certain limits on Starter/Pro) at the same rate ($0.001 per credit).

- The plans mainly differ in scale and collaboration features: higher tiers give more credits, support for more users, and the ability to connect more data sources and manage more projects/indexes.

LlamaIndex Pricing Factors to Consider

When planning your LlamaIndex usage, it’s important to understand the factors that drive cost. The actual cost you incur will depend on how you use the framework.

Here are the key pricing factors to consider:

1. Credit Price and Region Multipliers

The standard rate of $1.00 per 1,000 credits is the baseline, but it is not universal. A crucial factor that can significantly alter your costs is the geographical region where your data is processed.

LlamaIndex applies a regional multiplier for its services. While processing in North America adheres to the standard $1.00 rate, processing in Europe incurs a 1.5x multiplier, raising the cost to $1.50 per 1,000 credits.

This is not a minor detail. For companies operating under strict data residency regulations like GDPR, this multiplier can increase operational costs by 50% for the exact same workload.

2. Model Choice During Parsing

LlamaIndex's managed services, particularly its powerful LlamaParse tool, are not monolithic. The number of credits consumed per page of a document is highly dependent on the underlying model and the specific parsing mode selected for the task. This choice represents a direct trade-off between cost, speed, and the quality of the output.

The table below details the credit cost per page for various LlamaParse modes and models, illustrating how quickly costs can escalate.

As the table shows, the cost differential is substantial.

A simple text extraction without AI assistance (Parse without AI) costs only one credit per page. Opting for the recommended ‘Cost-effective’ setting with a basic LLM costs 3 credits.

However, leveraging a powerful agent with a state-of-the-art model like Anthropic's Sonnet 4.0 for parsing can consume 90 credits per page, a 90-fold increase over the most basic option.

This means that the statement ‘using LlamaParse’ can have vastly different financial implications depending on the configuration.

3. Index Build and Query Token Budget

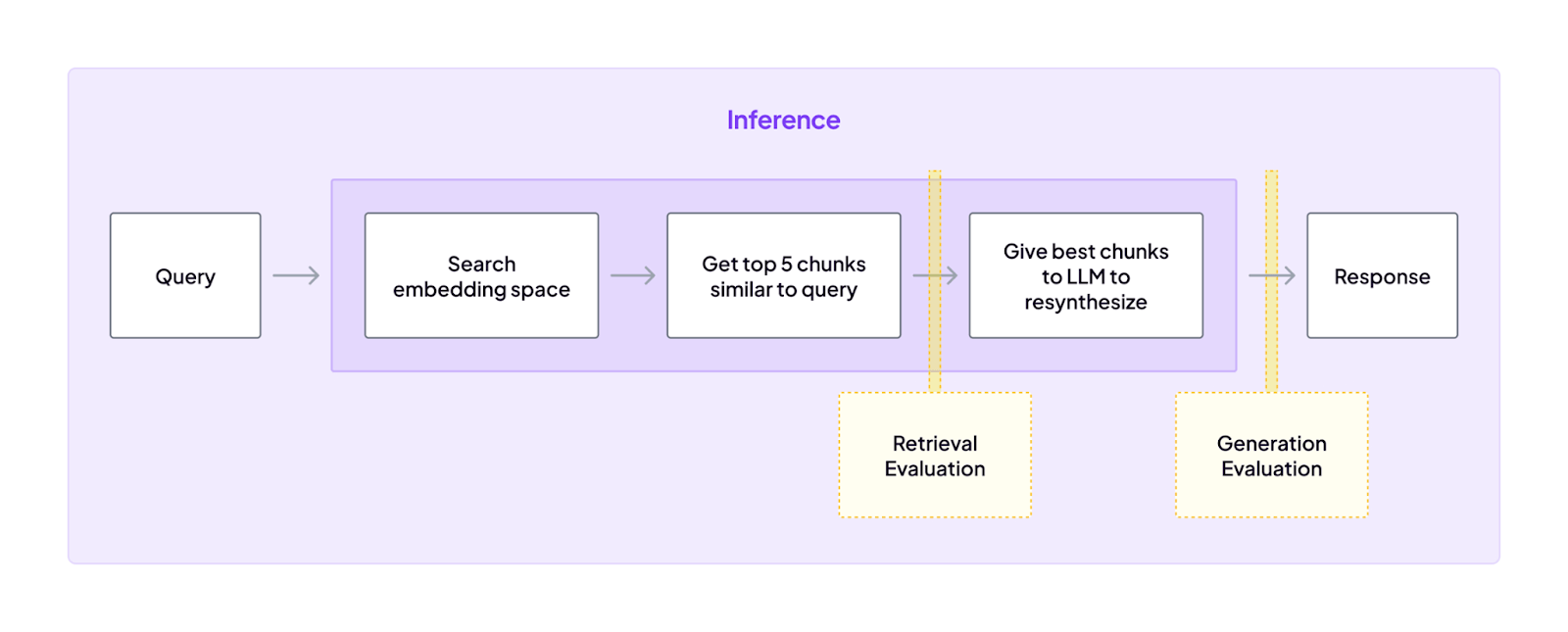

Costs on LlamaIndex are not confined to the initial document parsing stage. The entire Retrieval-Augmented Generation (RAG) pipeline, from building an index to querying it, has cost implications that are primarily driven by LLM and embedding model API calls.

Index Construction Costs: The cost to build an index varies by type. Some indices, like the common VectorStoreIndex, have minimal build costs, which are limited to the price of generating embeddings. Since embedding models like text-embedding-3-small are relatively inexpensive, this cost is often negligible.

Query Time Costs: Querying is often the most significant and recurring expense in an RAG system. The cost of a single query depends on several parameters, including similarity_top_k, which determines how many data chunks are retrieved, and response_mode, which dictates how the LLM synthesizes an answer from those chunks.

For instance, the default response mode, known as ‘create and refine,’ makes a separate LLM call for each retrieved document node, which can be costly. The compact mode is more economical because it attempts to fit as much context as possible into a single prompt, reducing the number of LLM calls.

All Pricing Plans that LlamaIndex Offers

LlamaIndex currently offers one free tier - the open-source, self-hosted option plus a free cloud allowance, and three paid plans. Let’s look at each and what they provide:

Open Source – $0 per Month (Free Tier)

Open-source LlamaIndex is completely free to use. You can install the LlamaIndex Python package and integrate it into your application without paying anything to LlamaIndex.

This free tier also extends to the LlamaIndex Cloud platform in a limited form: when you sign up, there’s a Free plan on the cloud that costs $0 and includes 10,000 credits per month at no charge. Essentially, they give everyone a small bucket of usage to experiment with the hosted services.

The free tier is best for individual developers, researchers, or small projects just getting started.

It allows 1 user and 1 project in the cloud, so you’re not meant to have a team collaborating there.

And there are notable limitations:

- On the free cloud plan, you can only upload files directly for ingestion; no connectors to external data sources or cloud storage.

- You can create up to 5 indexes with up to 50 documents each – enough to toy around, but not for a production workload.

- Support is basic, meaning community forums or documentation (don’t expect a support engineer on call).

Despite these constraints, the free tier is quite useful for evaluation and prototyping. You could parse a handful of documents and build a toy QA bot without spending a penny.

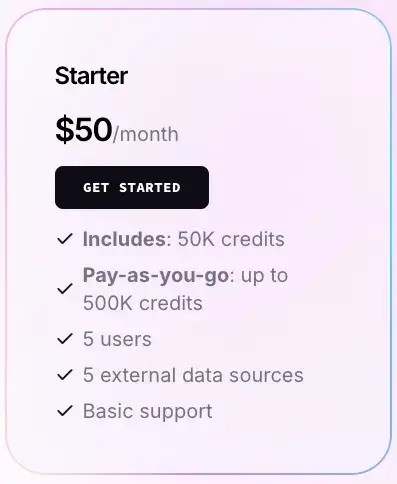

Starter – $50 per Month

The Starter plan costs $50 per month and is the entry-level paid offering for LlamaIndex Cloud. This plan is built for small teams or projects that have grown beyond tinkering and need to support multiple users and a larger dataset.

What do you get for $50?

In a nutshell: a bigger allowance of everything.

Here’s what the plan offers:

- 50,000 monthly credits included (your subscription essentially prepays $50 worth of usage).

- Pay-as-you-go: up to 500,000 credits per month at $0.001 per credit before requiring a Pro upgrade.

- Up to 5 users in a shared cloud workspace (vs. 1 on Free).

- Lets you integrate with 50+ external data sources like S3, Google Drive, SharePoint, and more. (max 5 connectors allowed).

- 50 indexes allowed (vs. 5 on Free).

- 250 files per index capacity.

- Up to 5 concurrent extraction agents (special agents for automated data extraction).

- Cloud-based collaboration features suitable for small teams/startups.

It’s worth noting that the $50 price point is relatively low in the world of enterprise software.

LlamaIndex seems to intentionally price this tier to be accessible to independent developers or tiny companies.

For $50, if you’re getting 50K credits, you could parse on the order of 5,000 pages in cost-effective mode or maybe 800 to 1,000 pages in the very expensive premium mode, per month.

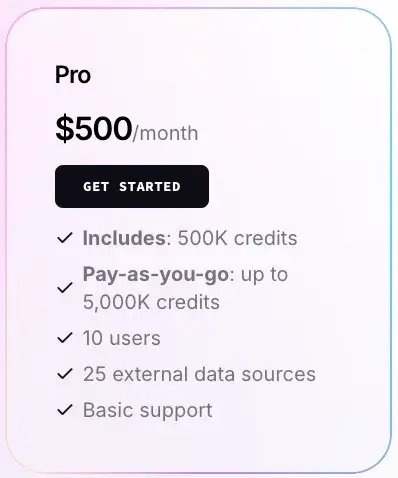

Pro – $500 per Month

The Pro plan is a big step up in capacity (and price). At $500 per month, it’s aimed at larger teams or applications in active development or early production. Here’s what Pro includes:

- 500,000 credits per month included: This is 10 times the Starter credits, corresponding to 10X the price. It effectively gives you $500 worth of usage included. Beyond that, you can pay-as-you-go up to 5 million extra credits. Five million credits would cost an additional $5,000 if you somehow used them all in a month.

- Up to 10 users: A larger team can collaborate under the Pro workspace. More team members might be needed if you have multiple engineers, data scientists, etc., working on different aspects of your LlamaIndex project.

- 100 external data sources: Pro significantly expands how much data you can connect. You could, for example, hook up dozens of SharePoint sites, databases, or cloud buckets. It’s unlikely a single project needs 100 distinct connectors, but Pro doesn’t really limit you on that front for practical purposes.

- More indexes and files: Pro plan allows up to 5 projects with 100 indexes and up to ~1,250 files per index. That’s a lot of documents (over 100k files total if fully used). If you are managing multiple separate knowledge bases or agent use-cases, Pro gives you the flexibility to compartmentalize them into different projects and indexes.

Pro is the plan for when you’re moving from experiment to production pilot. It can handle serious workloads - parsing and indexing tens of thousands of pages or serving a moderate user base of an LLM-powered app.

At $6,000 per year, it’s a non-trivial expense, so you’d want to be sure your project is delivering value at that scale.

Enterprise – Custom Pricing (Contact Sales)

For organizations with advanced needs, LlamaIndex offers an Enterprise plan. There’s no published price – it’s a custom-negotiated contract. Typically, this is for larger companies or use cases where the provided limits of Pro are insufficient or certain features or compliance are needed. Here’s what the plan offers:

- Customized limits and pricing: Enterprise customers can usually negotiate higher credit allowances, more users (unlimited users are listed), and any specific usage-based pricing that makes sense. If you anticipate using tens of millions of credits or have unpredictable spikes, LlamaIndex’s sales team might be able to work out a volume discount or a special rate.

- Enterprise-only features: This could include things like single sign-on (SSO) integration, audit logs, advanced security compliance (SOC2, HIPAA, etc.), and features like on-premises deployment - SaaS/VPC - meaning you can choose to have LlamaIndex deploy a private instance of their cloud in your Virtual Private Cloud or on-prem environment.

- Dedicated support and SLA: Enterprise comes with priority support, likely a dedicated support engineer or account manager, guaranteed response times, and uptime SLAs for the service. If you are running a production system that can’t go down, this support is important. They also often include training or onboarding help for your team.

In short, Enterprise is a ‘let’s make a deal’ territory for when your needs surpass the self-serve plans. The cost could range widely, presumably in the thousands to tens of thousands per month, depending on scale.

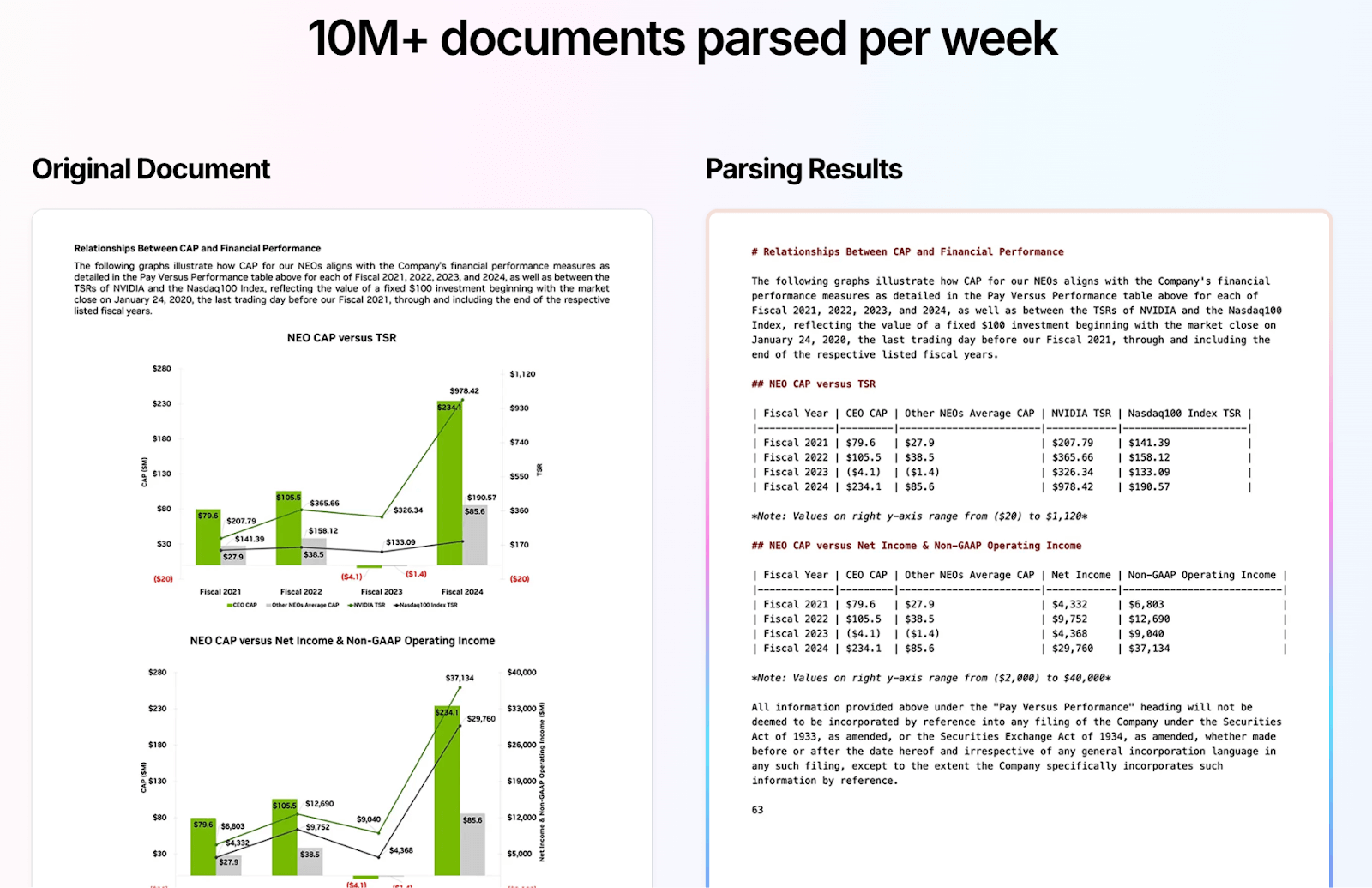

LlamaParse

Aside from the core plans, LlamaIndex offers LlamaParse as a product feature.

LlamaParse is the ‘GenAI-native’ document parsing service, designed to handle complex PDFs, images, and more.

It’s not a separate plan you buy – it’s available to use on any LlamaIndex Cloud plan (including free, which gives some free parsing credits), but it will consume credits when you use it.

To give a sense of cost: parsing a document with LlamaParse will cost credits per page based on the mode or model.

For example, using the default Cost-effective mode might cost ~3 credits per page.

When to use LlamaParse? If your documents have a lot of non-text elements (tables, forms, scans) that standard PDF text extraction might mess up, LlamaParse is likely worth it. It uses LLMs to intelligently extract and structure content, giving much better results for downstream querying. The accuracy of things like financial reports, scientific papers with formulas, or images with text can be far superior with LlamaParse.

Practically, you and your team should try LlamaParse on your documents during the free credits phase to gauge how many credits it consumes and how much better the data quality is. If it saves developer time and improves answer accuracy for your agent, you can justify the cost.

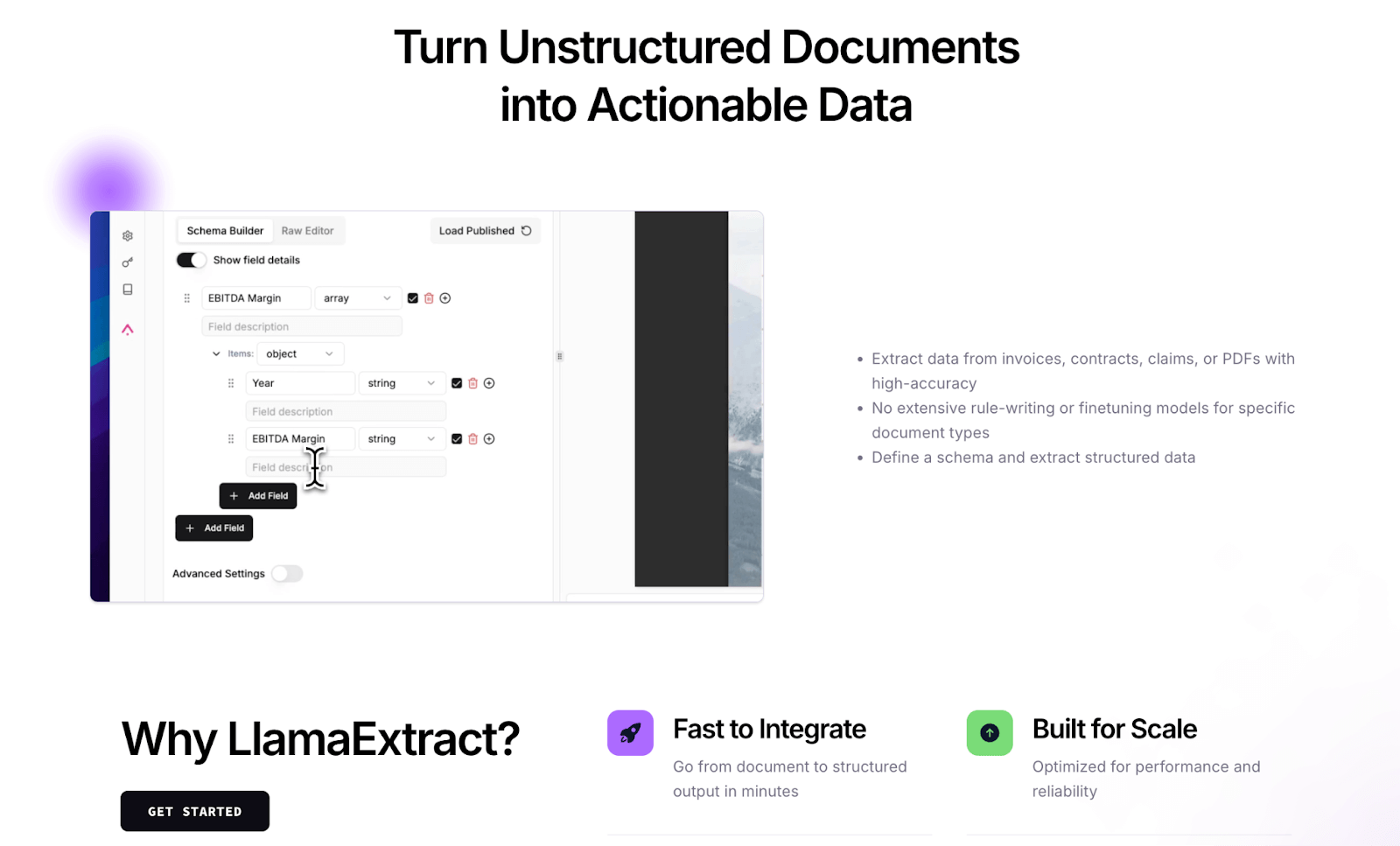

LlamaExtract

LlamaExtract is another add-on feature of LlamaIndex Cloud – it’s a structured data extraction tool.

While LlamaParse focuses on turning documents into LLM-readable text and JSON, LlamaExtract aims to pull specific fields or facts from documents according to a schema you define.

For example, extracting invoice numbers, dates, and names from contracts, etc., using an LLM under the hood.

Like LlamaParse, LlamaExtract usage is priced by credits. The platform offers different extraction modes - Fast, Balanced, Multimodal, Premium - which trade off speed/cost for accuracy. Fast might be 5 credits per page, Premium 60 credits per page.

Who needs LlamaExtract? Any workflow where you have unstructured documents but need structured outputs (key-value pairs, database records).

Instead of writing a complex regex or training a custom model, you let LlamaExtract’s LLM-based engine do it. This can drastically speed up building automations for things like invoice processing, forms processing, etc.

You define a JSON schema for the info you want, and the system populates it from the text.

From a pricing perspective, if your use case is extracting the same fields from thousands of documents, definitely factor in the per-page credit cost.

For instance, extracting 1,000 receipts in Premium mode would be ~60,000 credits = $60 (or $20 if they were cached from parsing). That might be acceptable given the value of automating that work.

On a Pro plan with 500k credits, you could theoretically extract data from ~8,300 pages in premium mode within the included credits.

Is LlamaIndex Expensive?

Now for the big question: is LlamaIndex worth the money, or is it expensive for what it offers? The answer truly depends on your perspective and scale. Let’s break down a few scenarios:

1. For small-scale or individual use, LlamaIndex can be extremely cost-effective

The free open-source library lets you do a lot without any platform fees at all – you’re only paying the underlying LLM provider (OpenAI, etc.) for calls. Even the cloud’s free tier gives a decent amount of usage to play with. If you’re a solo developer building a prototype, you might incur $0 in platform costs and maybe just a few dollars in OpenAI API fees. That’s a big win compared to some enterprise tools that have high minimum prices.

LlamaIndex’s Starter plan at $50 is also within reach of an individual or small startup budget, and it can carry a surprisingly non-trivial workload.

2. For moderate usage by a team, LlamaIndex’s pricing is in line with similar SaaS offerings.

At the Pro tier ($500 per month), you are supporting a team and a production app. This cost is comparable to other cloud services in the AI/ML space, and is likely a fraction of what you’re spending on the LLM API calls themselves if you’re doing heavy NLP work.

Many teams find the hosted service worth it because it speeds up development (no need to build a parsing pipeline from scratch) and covers a lot of maintenance (scaling, caching, updates to new models) automatically.

3. At a large scale, costs will scale too – but consider the alternatives.

If you are parsing millions of pages or answering tens of thousands of queries, you will certainly be spending significant money on LlamaIndex (likely via an Enterprise contract). However, doing the same in-house would mean paying either a team of engineers to maintain custom pipelines or paying for other services.

Large language model applications in general tend to incur significant costs as LLMs themselves aren’t cheap to run. In context, LlamaIndex’s fees might be only a small add-on to your overall AI budget.

For example, if your application consumes $10,000 worth of OpenAI API calls monthly, paying another $2,000 to LlamaIndex for orchestration and data handling could be reasonable.

LlamaIndex is generally affordable for the functionality it delivers, especially at small and medium scales. It doesn’t impose exorbitant flat fees; you can start free, and your spending naturally grows with your usage.

📚 Also read: LlamaIndex Alternatives

Deploy AI Agents with LlamaIndex and Handle the Complete Lifecycle with ZenML

Building an AI agent with LlamaIndex is only half the story. LlamaIndex excels at the ‘inner loop’ of agent development – things like parsing documents, indexing knowledge, and defining how an agent retrieves information or calls tools.

However, once you have such an agent, you face an ‘outer loop’ of challenges: deploying the agent in a production environment, scheduling its runs or keeping it live as a service, monitoring its performance over time, handling version upgrades, and ensuring reliability.

This is where ZenML comes into play as a powerful complement to LlamaIndex.

ZenML is an open-source MLOps + LLMOps framework that helps orchestrate and manage the entire lifecycle of machine learning and LLM workflows, including agent-based systems.

(Disclosure: ZenML is our product, and we’re passionate about its ability to help in scenarios like this. The key is that with ZenML handling the outer loop, you free yourself to focus on building the agent logic with LlamaIndex, knowing that deployment, scheduling, and observability are taken care of.)

Here are the key ways ZenML helps you productionize LlamaIndex agents:

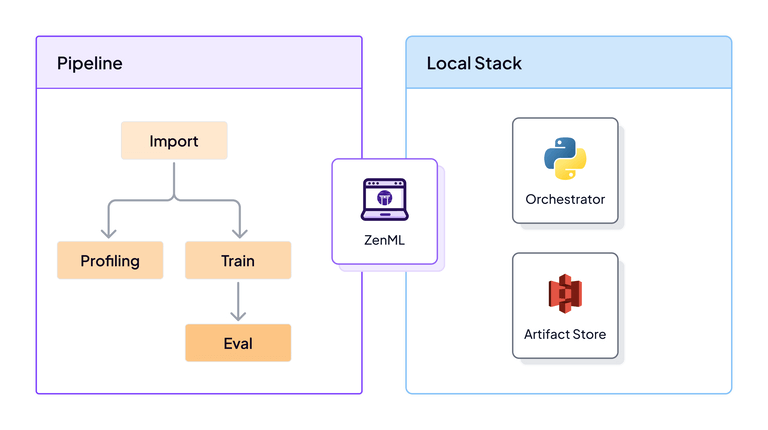

1. Orchestration and ‘Outer’ Pipeline

ZenML lets you embed your LlamaIndex agent into a larger automated workflow. For example, you might have a ZenML pipeline that periodically fetches new data, uses LlamaIndex to update an index, then triggers an agent to answer any pending queries or generate reports. ZenML treats the agent as just one step in a reproducible pipeline.

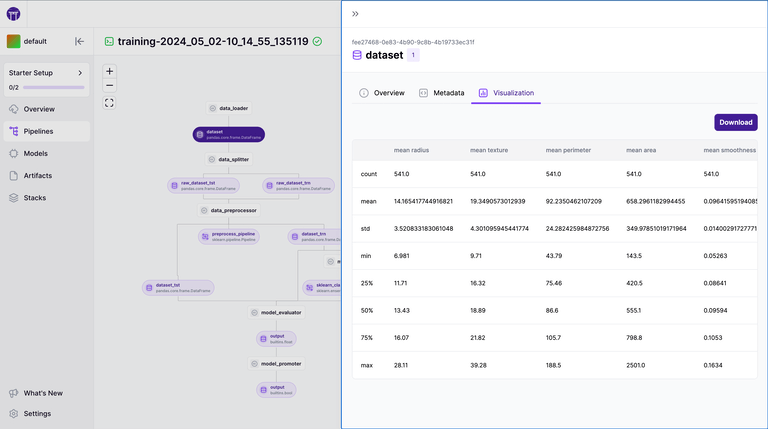

2. Experiment Tracking and Visibility

During development and even after deployment, you want to know how your agent is doing.

ZenML provides built-in experiment tracking and logging – every time your agent pipeline runs, it can log metrics like number of tokens used, response time, accuracy of answers if you have a way to measure that, and store artifacts like the model prompts, responses, etc.

ZenML’s dashboard lets you see all your pipeline runs in one place, compare different runs, and even trace through steps.

3. Continuous Evaluation and Quality Control

ZenML can help you keep an eye on whether your agent is behaving as expected. For instance, you can integrate evaluation steps or tests into a ZenML pipeline that runs your LlamaIndex agent on a set of sample queries and checks the outputs.

Over time, this can alert you if a change in the LLM or data causes quality to drop. ZenML makes it easier to answer ‘is my agent still good?’ by tracking performance metrics across pipeline runs.

4. Integration and Combining Tools

In real-world scenarios, LlamaIndex might be one of several tools you use. You might also use a vector database, external APIs, or have a training job for a custom model.

ZenML’s framework is designed to integrate diverse tools and libraries into one coherent workflow. You can combine LlamaIndex, plus your own Python code, plus other MLOps, all under ZenML’s orchestration.

All in all, ZenML wraps around LlamaIndex to productionize it. LlamaIndex defines what your agent does (how it uses data and LLMs to answer questions). ZenML defines how that agent is deployed, monitored, and managed over time.

👀 Note: ZenML is open-source, and you can get started with it for free.

📚 Relevant Pricing articles you must read:

Is LlamaIndex Worth Investing In to Build AI Agents?

LlamaIndex is an undeniably powerful and popular framework for the core task of building RAG and other agentic applications. Its specialized tools, like LlamaParse, are considered best-in-class for handling the complex, unstructured documents commonly found in enterprise environments.

However, an investment in LlamaIndex is not purely monetary. The open-source path requires a significant investment in engineering time to manage infrastructure, handle scaling, and maintain the system.

The LlamaCloud path requires a direct financial investment that can scale unpredictably with usage and still presents a learning curve for advanced customization and non-standard use cases.

Ultimately, LlamaIndex is worth investing in if your primary challenge is connecting LLMs to complex data sources, and you are prepared for the associated costs, whether they are in engineering hours or cloud fees.

The combination of LlamaIndex and ZenML represents a complete, end-to-end solution. LlamaIndex provides the specialized, data-centric tools for building the agent, while ZenML provides the essential production backbone for managing its entire lifecycle. This synergy allows teams to leverage the best of both worlds: advanced agent capabilities and a reliable path to production.

ZenML's upcoming platform brings every ML and LLM workflow - data preparation, training, RAG indexing, agent orchestration, and more - into one place for you to run, track, and improve. Type in your email ID below and join the early-access waitlist. Be the first to build on a single, unified stack for reliable AI. 👇