If you’re looking to build agentic AI systems, choosing the right framework makes all the difference in shaping their entire development lifecycle.

Both Google ADK and Langgraph assist well in building complex AI agents, but their core philosophies, architectures, and feature sets are fundamentally different.

In this article, we compare Google ADK vs LangGraph across their core features, integration capabilities, and pricing models to help you find the optimal tool for your next agentic workflow.

We’ll also discuss how you can use both together (with a tool like ZenML) to get the best of both worlds in production.

Google ADK vs LangGraph: Key Takeaways

🧑💻 Google ADK: An open-source, code-first Python toolkit from Google designed to build agentic AI workflows. It provides higher-level abstractions and pre-built workflow patterns that promote a modular, testable architecture. It’s optimized for Gemini/Vertex AI yet model-agnostic with strong logging, tracing, and built-in eval.

🧑💻 LangGraph: A library from LangChain for building stateful, multi-agent applications as an explicit graph of nodes/edges. It offers tight control over agent workflows and integrates with the LangChain ecosystem for LLMs, tools, memory, plus LangSmith/Langfuse for tracing and eval.

Google ADK vs LangGraph: Framework Maturity and Lineage

The table below compares the framework maturity of Google ADK and LangGraph:

Of the two, Google ADK is the newer framework, and so its stars and commits trail behind LangGraph’s mammoth numbers. By contrast, LangGraph leads in community signals with ~ 5.7k more stars and ~5084 commit count.

For downloads, LangGraph shows higher recent PyPI pulls, suggesting broader day-to-day use across projects. ADK’s downloads are growing quickly as teams trial it alongside Gemini and Vertex AI.

Google ADK vs LangGraph: Features Comparison

Let’s see how ADK and LangGraph stack up against each other. Here’s a quick peek:

Now, let’s dive deep with one-on-one feature comparison:

Feature 1. Orchestration Model

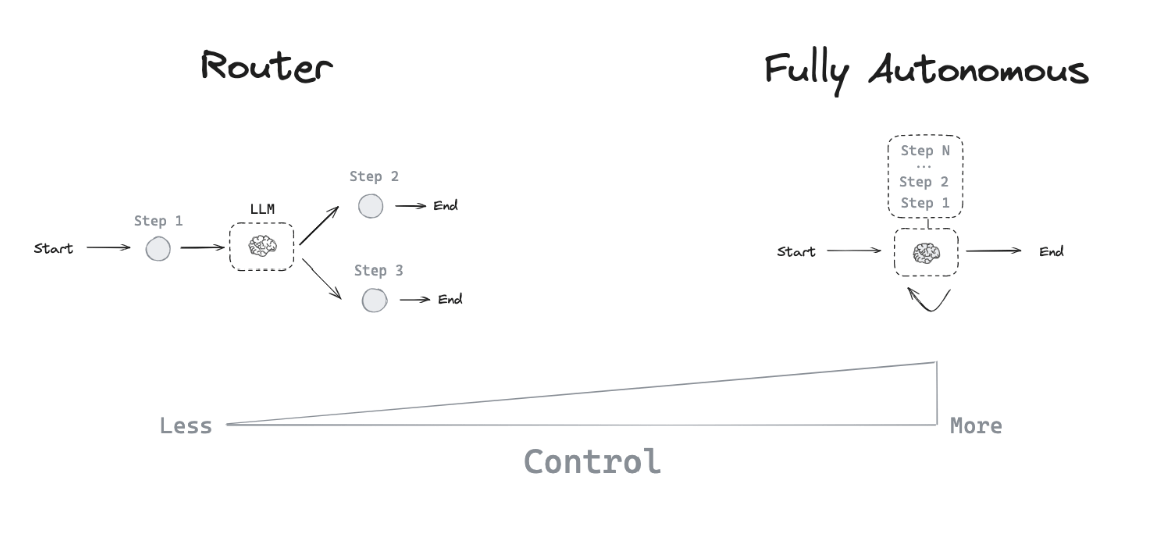

Orchestration is how you define an agent’s overall workflow: the sequence of steps, logic branches, and concurrency that transform a basic LLM prompt into a multi-step agent.

LangGraph and Google ADK take fundamentally different approaches here: explicit graphs vs event-driven workflows.

LangGraph

LangGraph uses explicit graph orchestration. Rather than one monolithic agent deciding everything on the fly, you lay out a state machine workflow with a graph of nodes and edges that the agent follows.

Every agent workflow is enclosed as a StateGraph with three core components:

- State: A central, shared object (often a Python

TypedDict) that holds all the data and context for a given workflow. This state is passed to every node and is persisted between steps, providing a robust mechanism for memory. - Nodes: Individual computational units, represented as Python functions. A node can be an LLM call, tool use, human approval, or any custom logic. Each node receives the current state and can return updates to it.

- Edges: Directed connections between nodes that define the flow of execution. LangGraph supports both standard edges and

conditional_edges, which act as routers to dynamically determine the next step based on the current state.

Unlike traditional Directed Acyclic Graphs (DAGs), LangGraph manages a shared state like TypedDict that travels with the run in real-time so other nodes can read prior outputs, preserve memory, and support checkpoints for pause, debug, and replay.

The benefit is full control and predictability. You can implement complex flows like: ‘Node A splits into Node B and Node C in parallel, and once both complete, merge into Node D.’

Google ADK

Google ADK approaches orchestration with a more code-driven workflow engine. Instead of drawing out nodes and edges, you write Python classes/functions to define how the agent proceeds.

Essentially, ADK’s orchestration model is two-fold:

You can compose agents via pre-built Workflow agents like Sequential, Parallel, and Loop classes to enforce a defined, static structure.

For instance, a SequentialAgent(sub_agents=[A, B, C]) will call Agent A, then B, then C in order. A ParallelAgent(sub_agents=[X, Y]) would run X and Y concurrently.

Next, you also have LLM-driven dynamic routing via the LlmAgent and agent transfer mechanism. An LlmAgent acts like an intelligent router that automatically transfers controls from one agent to another based on the user’s request or the current context.

Let’s see a conceptual example that uses both orchestration models for getting a task done.

Suppose you created a CoordinatorAgent for a travel planning task that has three sub-agents, say, a FlightAgent, HotelAgent, and SightseeingAgent. (see the code below):

In the first approach above, itinerary_planner will always call the FlightAgent, then the HotelAgent in sequence.

In the second, the TripPlanner LLM agent is free to decide whether a given user query requires the flight agent, the hotel agent, or both (and in what order). ADK’s flexibility lies in this ability to mix fixed workflows with dynamic decision-making.

Bottom line: While both can achieve similar outcomes, the developer experience differs: drawing a map vs. writing a script.

ADK workflows might be less visual than LangGraph’s, but more familiar to Python developers who prefer writing code over configuring graphs.

LangGraph gives you an explicit graph (state machine) to control agent flows with precision, ideal for complex or highly-regulated workflows. Google ADK provides a flexible programming model with both structured and dynamic orchestration.

Feature 2. Agent Primitives and Patterns

Agent primitives and patterns define the fundamental building blocks and architectural patterns Google ADK and LangGraph use for constructing agents.

LangGraph

LangGraph builds on LangChain’s rich collection of primitives like LLM wrappers, tools, memory modules, etc., but repackages them into a graph paradigm. It’s up to you to wire them into patterns.

Each node in a LangGraph workflow can be considered an agent step with a specific primitive.

For example, you could have: an LLM Call node, a Tool node to trigger web search or code execution, a Human node to insert human review and checkpoints, or even a Chain node (embedding a LangChain chain as a single node).

Though the most popular patterns are the classic ReAct (Reason+Act) loop, where an LLM reasons and decides on a tool, then acts, then repeats until a ‘done’ condition.

For more complex multi-agent systems, the ‘supervisor’ pattern is a common and powerful architecture. A ‘supervisor’ node acts as a high-level router extending specialized ‘worker’ nodes, each skilled for a specific task (e.g., searching the web, writing code).

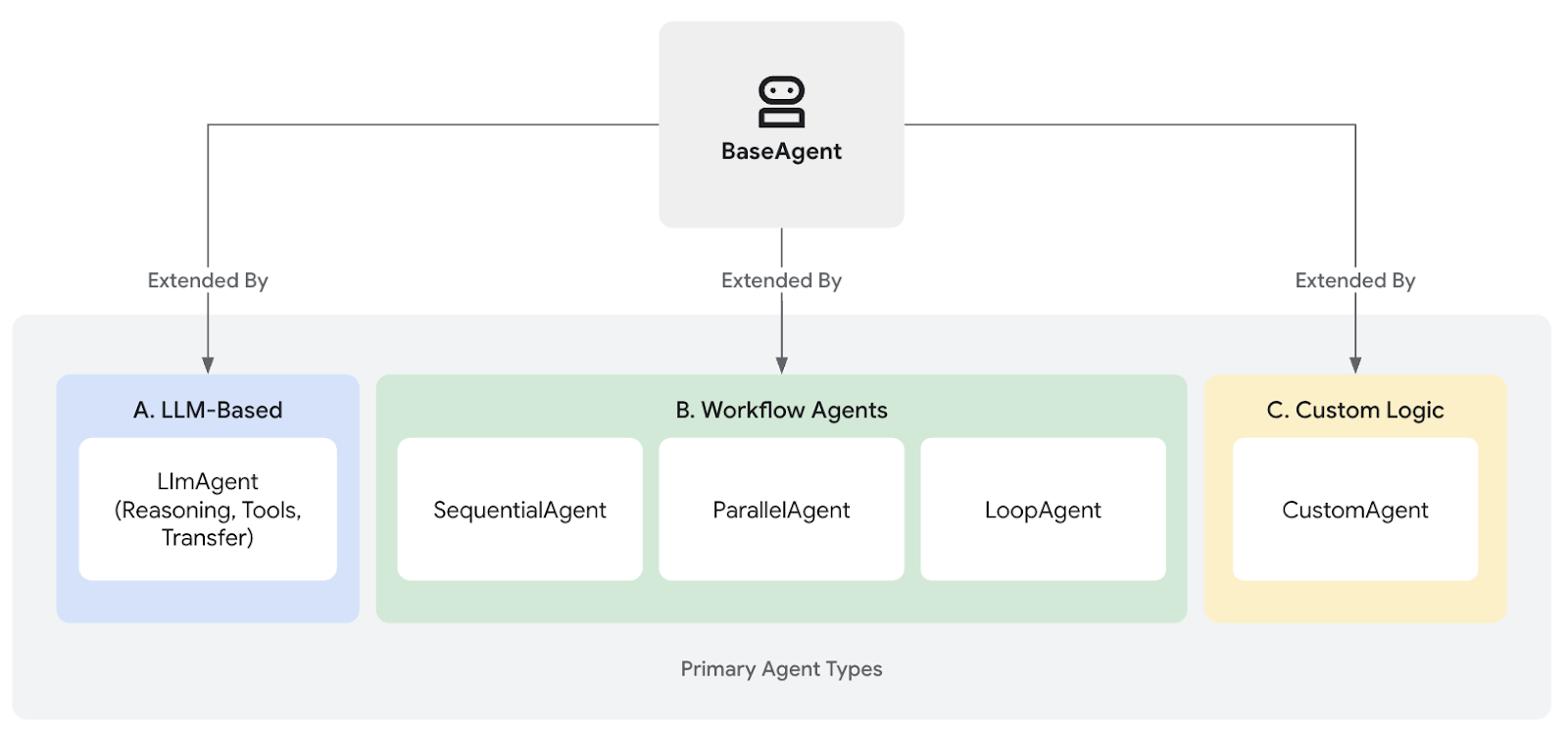

Google ADK

In ADK, the fundamental primitives are the BaseAgent and LlmAgent classes. You can create specialized agents by either extending BaseAgent for custom logic or configuring an LlmAgent with specific instructions, tools, and data schemas.

On top of primitives, ADK’s multi-agent patterns are influenced by its design. Essentially, a parent-client hierarchy with a high-level primitive extended using the sub_agents. A parent agent can delegate tasks to its children in two primary ways:

- LLM-Driven Delegation: The parent

LlmAgentcan dynamically decide which sub-agent to invoke based on the user's query and thedescriptionfield of each sub-agent. The LLM itself acts as the router, providing a flexible and adaptive delegation mechanism. - Explicit Invocation: A sub-agent can be wrapped in an

AgentTool, allowing the parent to call it directly just like any other tool. This provides more deterministic control over the workflow.

For example, you could write a custom agent that performs a specific API call or deterministic calculation; this agent can then be inserted into a workflow alongside LLM-based agents.

A nice-to-have feature is that you can integrate external agent frameworks, like LangChain or Crew AI, as tools within an ADK agent. This speaks to ADK’s cross-app operability. Google knows you might want to reuse agents built elsewhere, so ADK can treat them as just another callable tool.

Bottom line:

Both frameworks support complex multi-agent designs like hierarchical teams or iterative self-improvement, but they package the concepts differently.

- If you prefer a more declarative, visual breakdown of an agent’s logic, LangGraph wins.

- If you prefer code-level control and pattern libraries (and maybe plan to integrate with cloud infrastructure), ADK might feel more natural.

Feature 3. Observability, Tracing, and Evaluation

For any production-ready agentic system, the ability to observe, debug, and evaluate its behavior is non-negotiable. Both Google ADK and LangGraph frameworks provide robust solutions, but the implementation style is different.

LangGraph

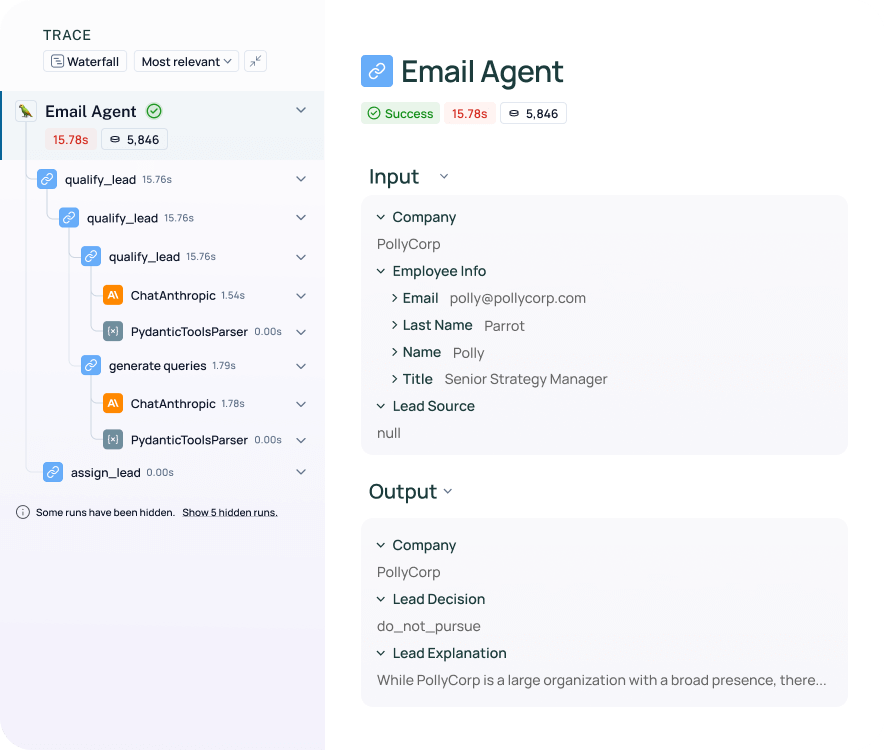

LangGraph integrates with LangSmith, LangChain’s observability and evaluation platform. With LangSmith, you can instrument your LangGraph workflows to log each step (node) execution, input, and output.

Because LangGraph’s execution is deterministic given the same state, you can take a trace from LangSmith and replay it step by step, even injecting fixes. For instance, if an agent took a wrong turn at a conditional edge, you can simulate a different decision and see how it would propagate.

All this information can be visualized in LangSmith’s UI for debugging. A rich visual interface allows you to:

- Trace Execution: Visualize the step-by-step path an agent takes through the graph, inspecting the inputs and outputs of each node.

- Debug Trajectories: ‘Time-travel’ through an agent's run to understand its reasoning process and identify the root cause of errors or unexpected behavior.

- Evaluate Performance: Save production traces to datasets and run evaluations against them using LLM-as-judge evaluators or human feedback to measure metrics like correctness and relevance.

While LangSmith is the flagship integration, LangGraph's callback system also allows it to connect with other observability tools like Langfuse.

Google ADK

ADK is built with production monitoring in mind. It comes instrumented with OpenTelemetry, which means ADK can integrate with any OpenTelemetry-compatible backend and allow you to use your existing observability stack.

ADK has documented one-click integrations with several LLM observability platforms, including:

- Google Cloud Trace

- Phoenix (by Arize AI)

- Weights & Biases Weave

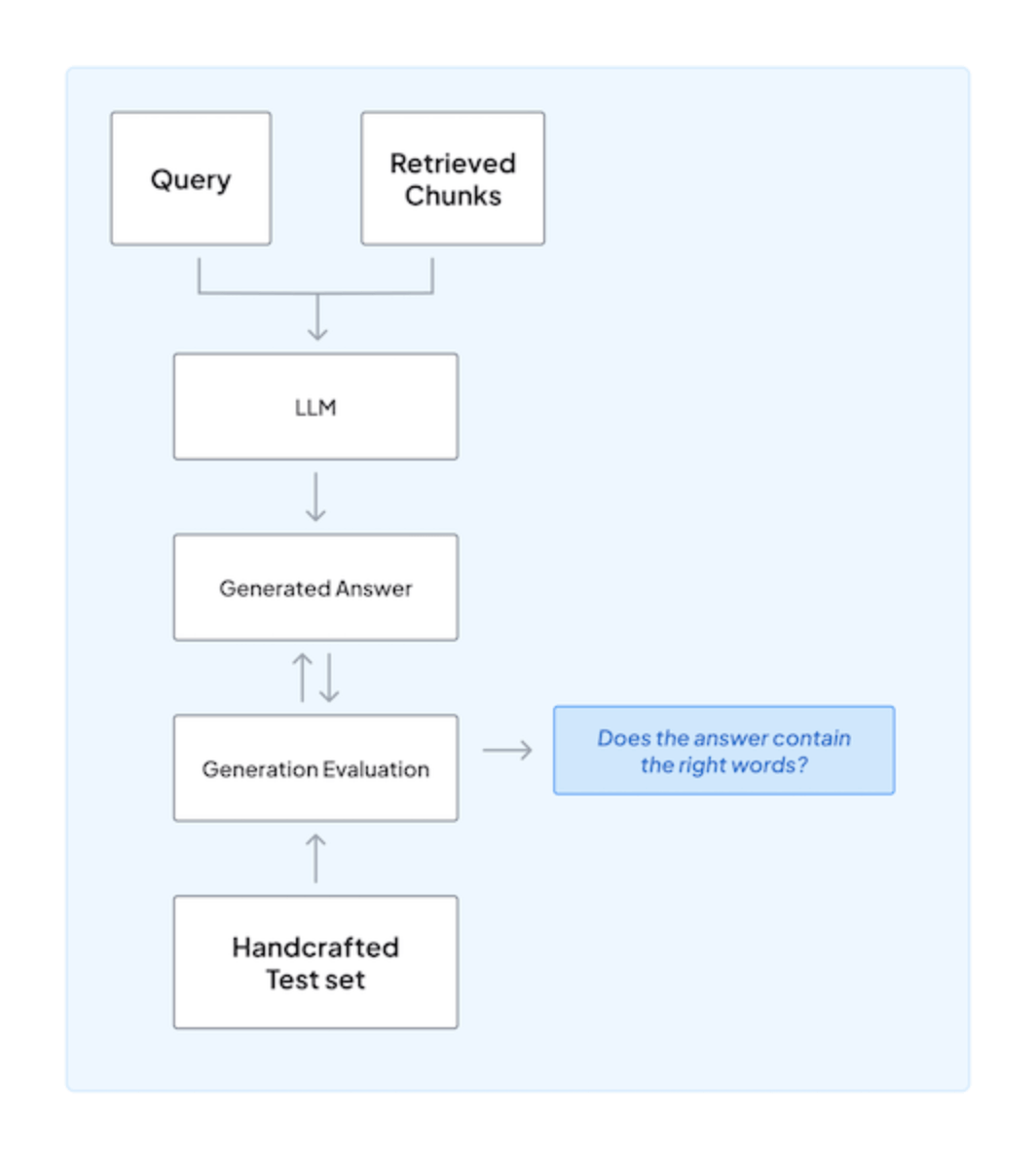

Given Google’s focus on enterprise, ADK has a built-in evaluation framework that ties into Google’s Model Context Protocol (MCP). You can create test cases and run evaluations via a command-line interface, Pytest, or an interactive web UI.

This is similar to LangSmith’s evaluation but integrated into ADK. Also, ADK’s evaluation can check both final answers and intermediate steps

Bottom line:

Both frameworks recognize that observability is crucial for agentic systems, which can be nondeterministic and complex. LangGraph leans on LangChain’s mature tooling (LangSmith/Langfuse) to deliver this, emphasizing stepwise trace and debug. ADK's OpenTelemetry-first strategy gives more breathing space and avoids vendor lock-in.

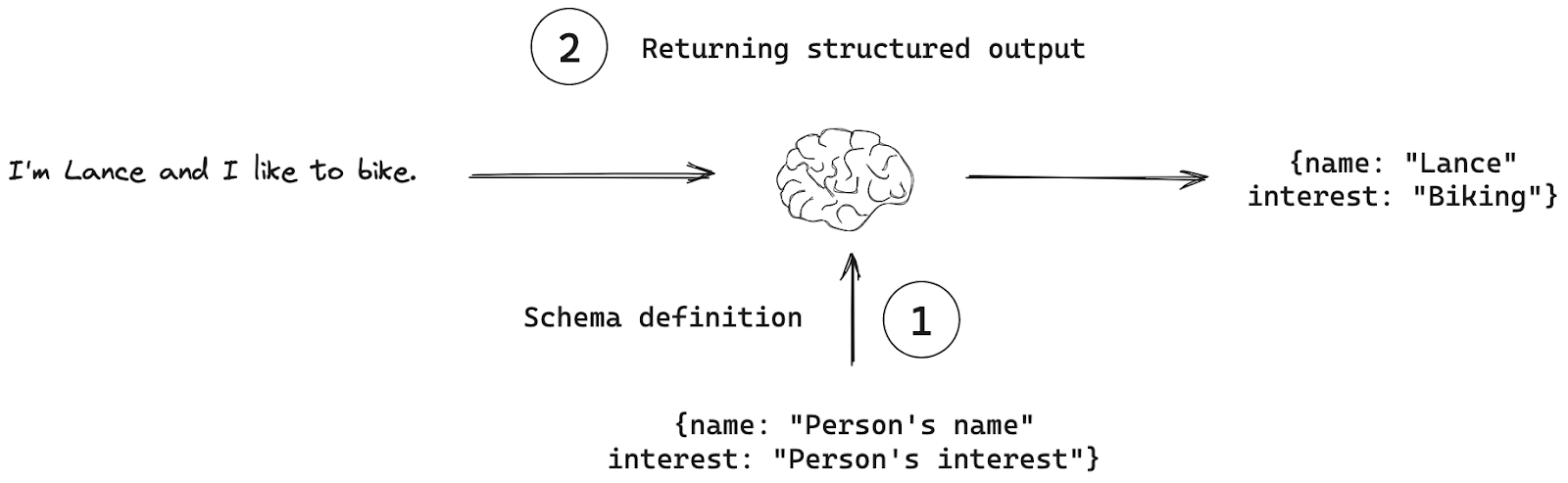

Feature 4. Structured Output (Pydantic, JSON Mode)

A common need when building AI agents is to get agents to produce predictable, machine-readable outputs rather than free-form text. Let’s see how LangGraph and ADK handle structured outputs and output parsing.

LangGraph

LangGraph inherits LangChain’s with_structured_output() method. It allows you to bind a schema, like a Pydantic model or JSON schema, to the LLM call, so that the LLM’s response is automatically parsed into that structure if possible.

If not, the parsers can attempt to correct or call the LLM again with clarification as part of LangChain’s around output parsing for better error handling.

Google ADK

ADK supports structured outputs via output_schema (Pydantic/JSON) and function-calling across backends.

When a Pydantic model is passed to this parameter, ADK configures the agent's underlying LLM calls to produce a JSON response that strictly matches the schema.

ADK can work with model-native function calling. If you’re using OpenAI through ADK or a model that offers function calling, ADK can define functions (like JSON schema functions) that the model will use to format output.

What’s more, ADK lets you choose which approach to use for structured data – either prompt-based parsing or the model’s API. You could prompt the LLM with instructions like ‘You must respond in JSON only.’

Bottom line: Both Google ADK and LangGraph frameworks excel at turning the sometimes-messy text outputs of LLMs into clean data structures:

LangGraph gives you with_structured_output() and output parsers that work across many models.

Google ADK offers Pydantic program support and multi-backend structured output handlers. Whether you use OpenAI, Gemini, or others, ADK can coerce outputs into a Python dataclass/Pydantic model for you.

Google ADK vs LangGraph: Integration Capabilities

Google ADK

ADK is well-integrated within the Google Cloud ecosystem. It features native integrations with services like Vertex AI Agent Engine, Gemini models, Google Search grounding, and BigQuery. This makes it a natural choice for teams already invested in Google Cloud.

Given its enterprise focus, ADK integrates with services like databases, APIs, etc., through its plugin system. On top of that, you can integrate OpenAI models, Anthropic’s models, open-source models, etc., by configuring the appropriate endpoints or SDKs.

It also provides dedicated wrappers, such as LangchainTool and CrewaiTool, to integrate components from other popular frameworks, and ADK supports the Model Context Protocol (MCP), an open standard (introduced by Anthropic), via built-in MCP tools, so agents can talk to external toolservers.

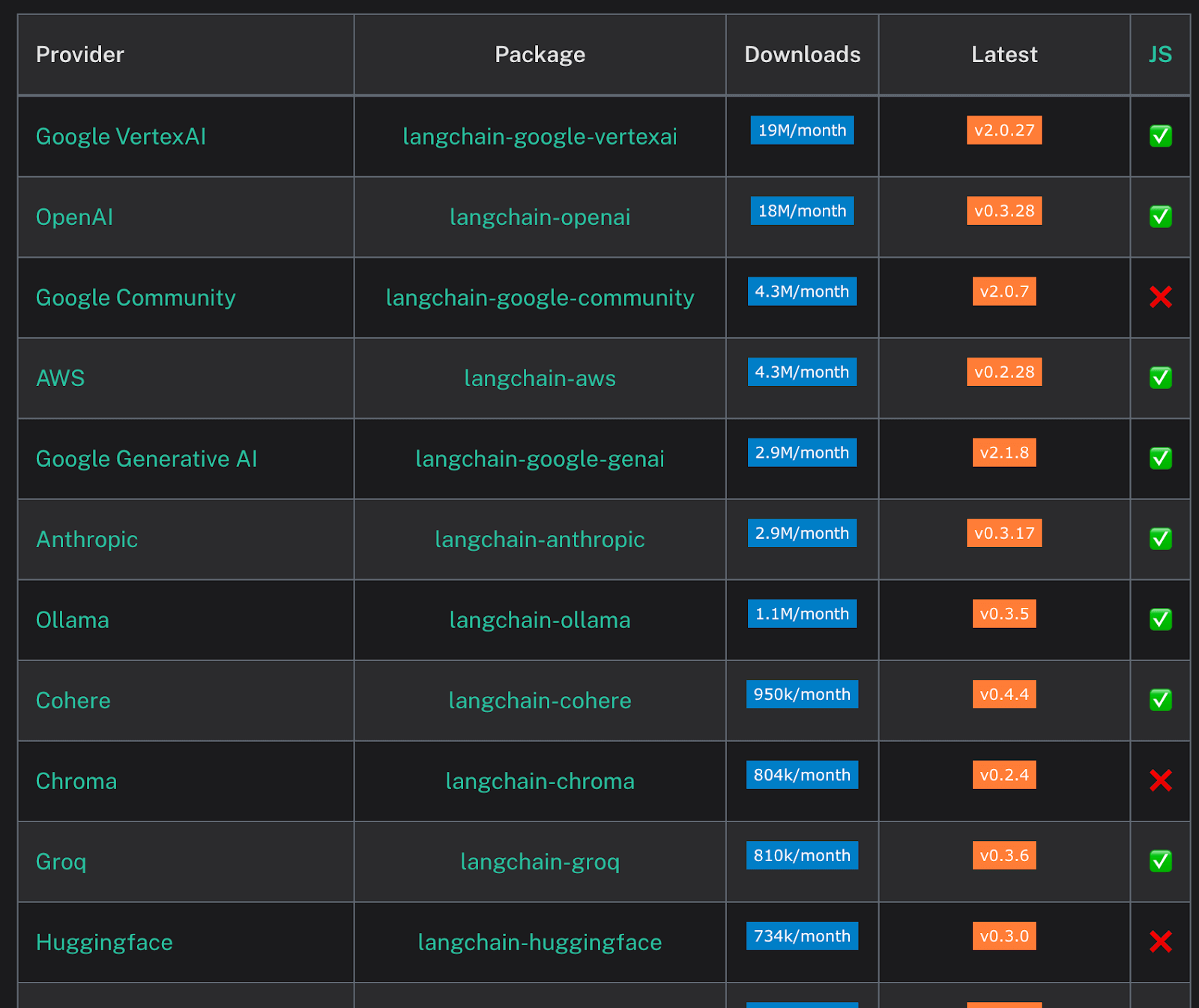

LangGraph

As a library built by LangChain, agentic AI workflows built on LangGraph can use any component that LangChain supports.

You’ve access to hundreds of pre-built integrations for:

- LLMs and Embedding Models: OpenAI, Google, Anthropic, AWS, Cohere, Hugging Face, and many more.

- Vector Stores: Chroma, Pinecone, Weaviate, Qdrant, Milvus, and dozens of others.

- Tools and Data Loaders: A vast library of connectors for APIs, databases, and file formats, from Google Search and Tavily to Notion and Slack.

Put simply, if a component exists in LangChain, it can be integrated as a node or tool within a LangGraph workflow.

LangGraph can also serve as an orchestration layer for agents built with other frameworks like AutoGen or CrewAI

Google ADK vs LangGraph: Pricing

On the pricing front, here’s how Google ADK and LangGraph compare:

Google ADK

Google ADK is open-source (Apache 2.0 license) and free to use. There is no license fee or subscription required to develop with ADK or to run it on your own infrastructure. You can pip install it and get started.

Obviously, if you go with Google’s fully managed route (ADK + Vertex AI + Cloud deploy), you’ll be paying cloud provider bills, but you get convenience and scalability in return.

LangGraph

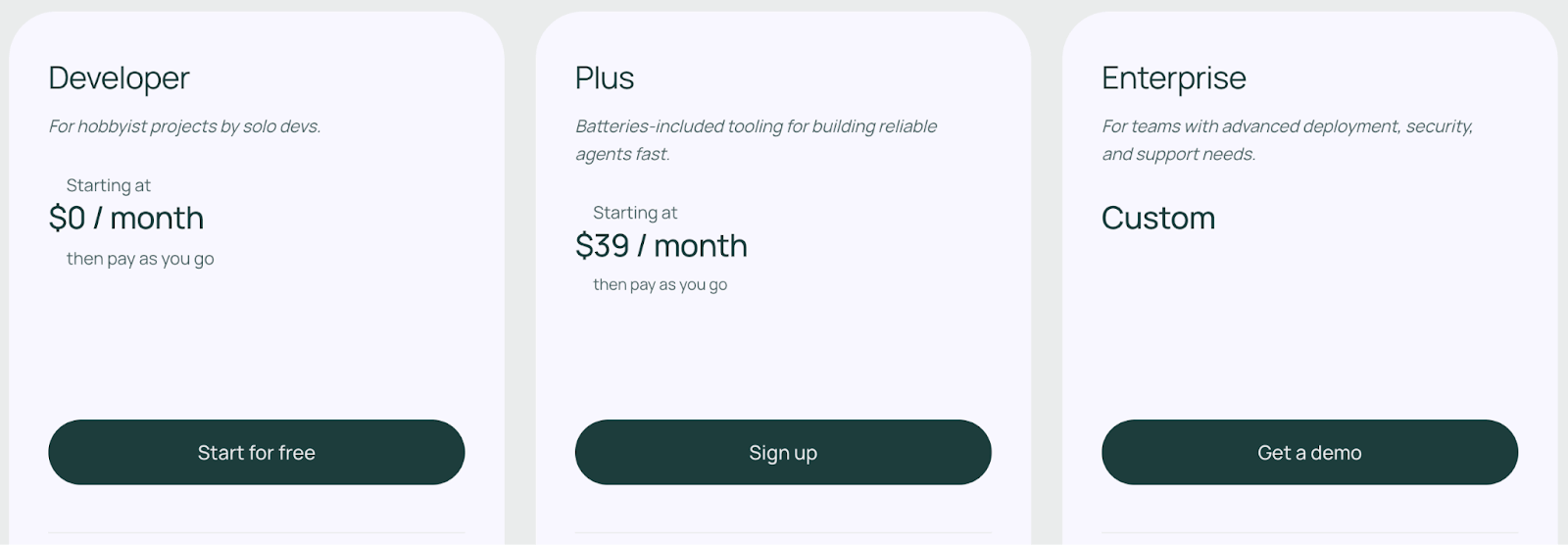

The LangGraph library is open-source under the MIT license and is free to use.

You can install the Python or JavaScript package and build and run agents on your own infrastructure without any licensing fees. LangChain offers two paid plans for LangGraph:

- Plus: $39 per month

- Enterprise: Custom

How Does ZenML Help You Use Both These Platforms with Ease

Both Google ADK and LangGraph are powerful for building the inner loop of intelligent agents. With both, you can design how agents reason, act, and produce results.

However, when it comes to the outer loop of deploying and managing these agents in production, you'll need additional tools.

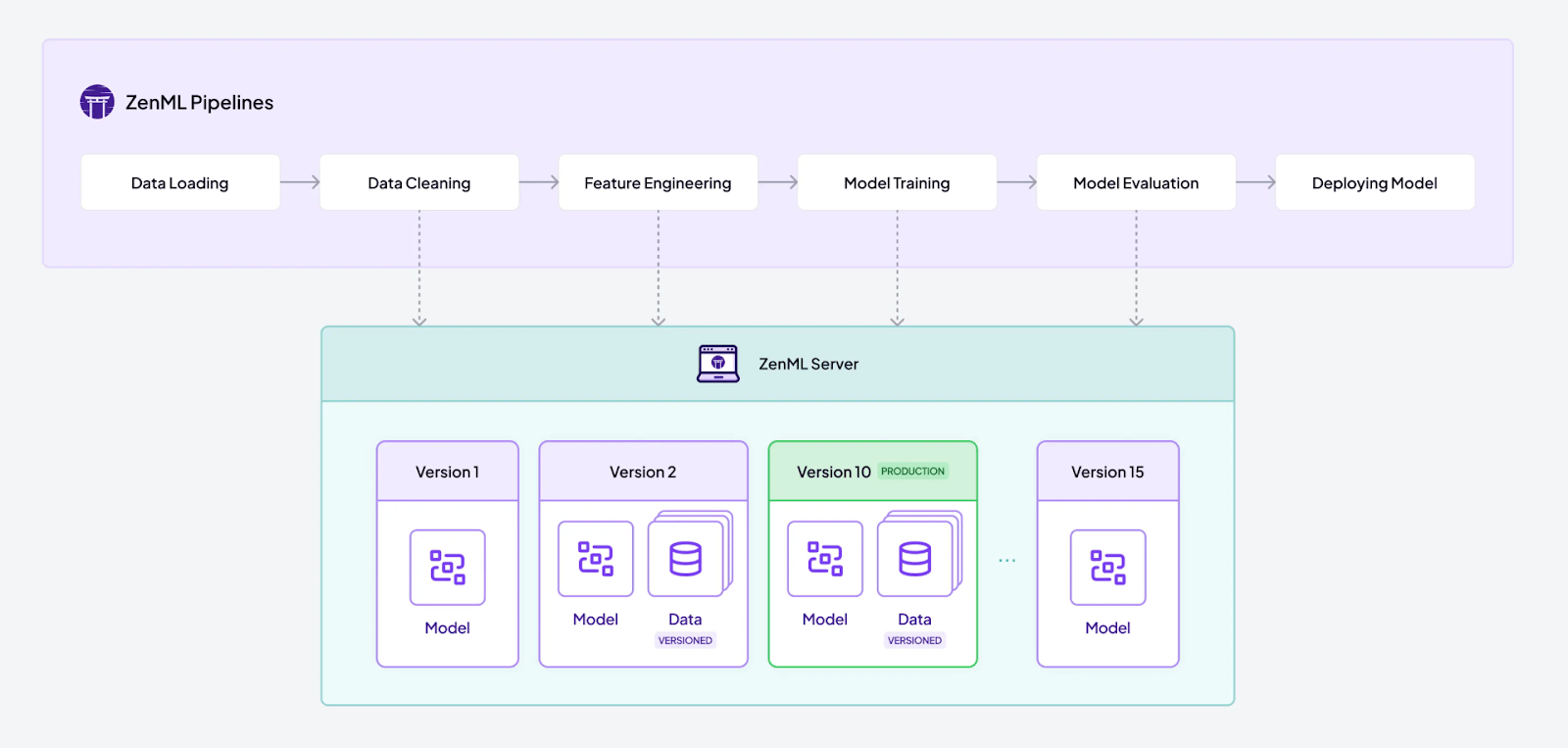

ZenML is an MLOps + LLMOps framework that complements ADK and LangGraph by handling the end-to-end lifecycle of your AI workflows.

Here’s a quick peek at how ZenML helps:

1. Unified Orchestration

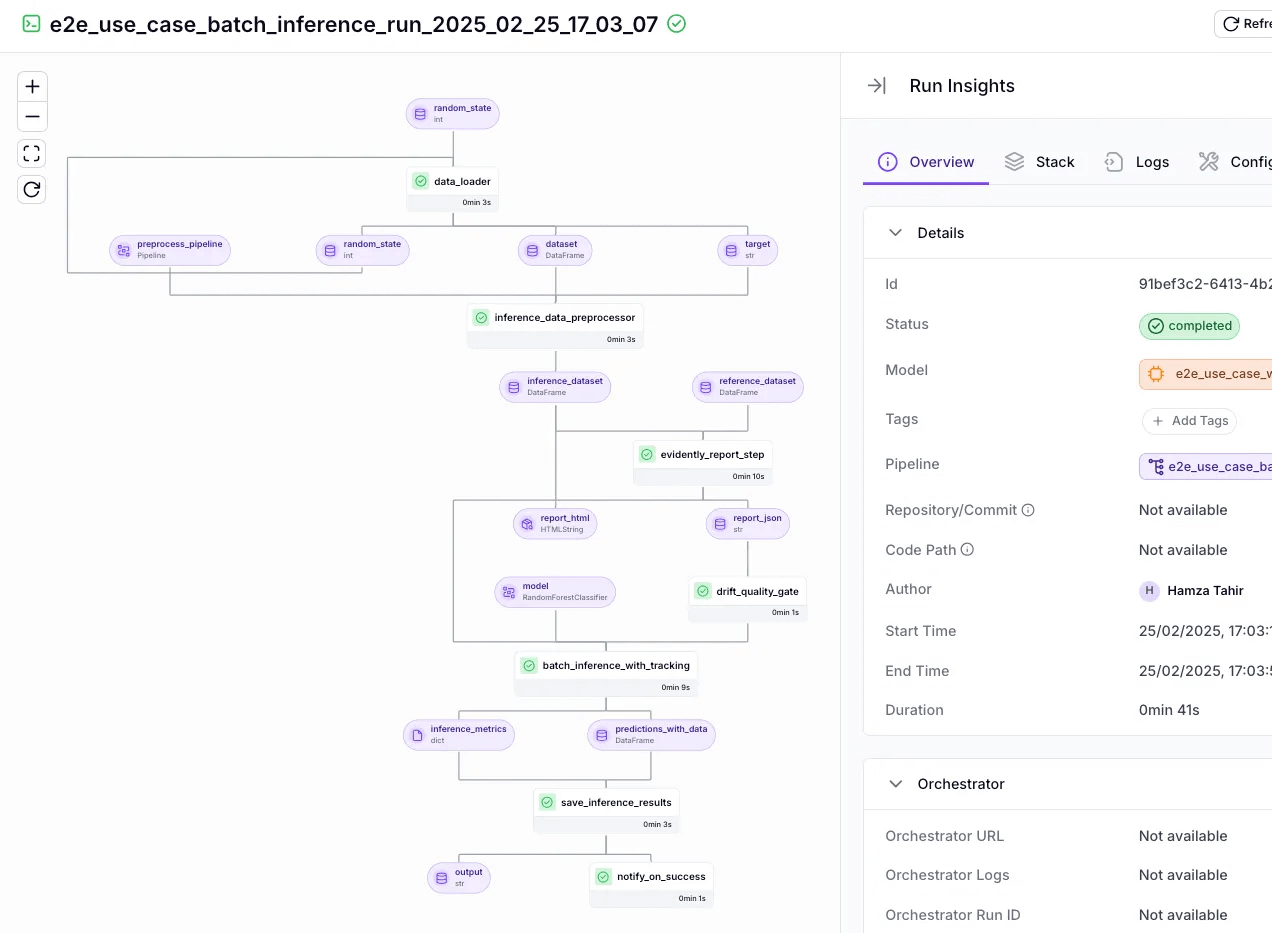

You can embed your ADK or LangGraph agent as a step in a larger ZenML pipeline. For example, pipeline steps can handle data preparation for RAG, the execution of the ADK or LangGraph agent itself, and evaluation of the agent's output.

ZenML handles scheduling, running, and retrying these pipelines, ensuring the entire process is versioned, reproducible, and automated on any infrastructure.

📚 Also read: How Brevo accelerated model development by 80% using ZenML.

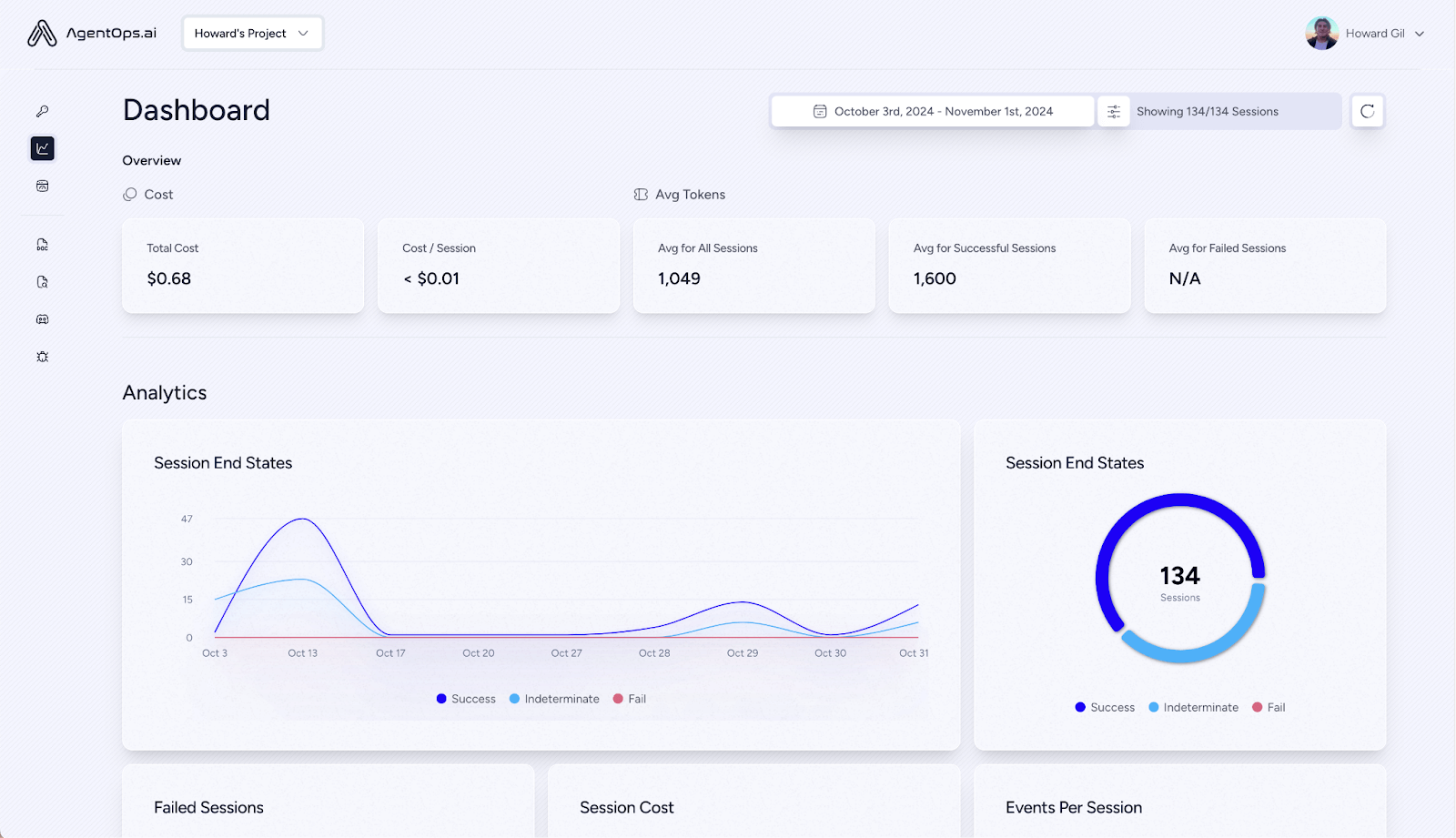

2. End-to-End Visibility and Lineage

ZenML automatically tracks and versions every component of your pipeline run, including the input prompts, agent responses, LLM used, and data sources.

It provides a single dashboard that visualizes the lineage of all artifacts and gives your team a complete and auditable picture of how your systems behave, making them easier to debug.

3. Continuous Quality Checks

Inner-loop tools help you define an agent's behavior, but ZenML helps you understand if that behavior is good or bad over time.

A ZenML pipeline can include steps that automatically run evaluations after each agent execution. These steps can flag bad runs, detect performance regressions, and enable continuous quality monitoring in a production environment.

4. Combine Tools and Avoid Lock-in

ZenML's component-and-stack model decouples your code from the underlying infrastructure. This means you can mix and match tools, even using a LangGraph agent and a Google ADK agent within the same pipeline, and run it all on your chosen cloud stack. This approach avoids vendor lock-in and lets you use the best tool for every part of the job.

In short, Google ADK and LangGraph define what the agent does. ZenML governs how that agent lives, scales, and evolves in a production environment.

📚 More comparison articles for you to read:

Which Framework of the Two Is Best for Building Agentic AI Workflows?

The choice between Google ADK and LangGraph depends on your project's goals, your team's expertise, and your requirements for control versus convention.

✅ Choose Google ADK if you value a code-first framework tightly integrated with cloud deployment and need fine control with an enterprise polish

✅ Choose LangGraph if you want explicit control over complex agent logic with the vast LangChain ecosystem at your fingertips, and you prefer the transparency of graph-defined flows.

At the end of the day, these frameworks aren’t mutually exclusive. You might use LangGraph to prototype a novel agent workflow and Google ADK to productionize a different use case.

With ZenML, you don’t have to pick one; you can orchestrate, monitor, and evolve agents built on both within a unified MLOps pipeline. Rest assured, ZenML ensures that regardless of which agent framework you experiment with, you have a clear path to take it from a research demo to production-ready.

If you’re interested in taking your AI agent projects to the next level, consider joining the ZenML waitlist. We’re building out first-class support for agentic frameworks (like LangGraph, CrewAI, and more) inside ZenML, and we’d love early feedback from users pushing the boundaries of what AI agents can do. With ZenML, you can seamlessly integrate whichever agent framework you choose into robust, production-grade workflows. Join our waitlist to get started.👇