Building reliable, production-grade AI agents requires more than just prompting a large language model. It demands robust frameworks for defining logic, managing state, and orchestrating complex workflows.

Pydantic AI and LangGraph are two frameworks that can help Python developers and ML engineers build efficient, agentic AI workflows.

In this Pydantic AI vs LangGraph article, we compare both in terms of their maturity, core features, integrations, and pricing, and highlight how each shines in different scenarios. By the end, you’ll understand which framework might be the better fit for your needs, and how you can even use both together in production with ZenML’s orchestration.

Pydantic AI vs LangGraph: Key Takeaways

🧑‍💻 Pydantic AI is a Python-native framework that brings type safety and validation to AI agent development. Built by the team behind Pydantic, it treats agents as Python objects with strongly-typed inputs and outputs. The framework emphasizes developer experience with features like dependency injection, structured outputs, and native async support.

🧑‍💻 LangGraph is a stateful graph framework from the LangChain team that models agent workflows as explicit state machines. Each step in the workflow is a node, connected by edges that define control flow. LangGraph excels at building complex, multi-step agent systems where you need precise control over execution order and state management.

Framework Maturity and Lineage

Maturity matters. LangGraph has simply been around longer (early 2024) and matured inside the broader LangChain ecosystem, which is why you’ll see more production references and an earlier push to a v1.0 alpha (Sep 2, 2025).

Pydantic AI is newer. It arrived publicly in late 2024 and, for a while, lacked counterparts to several LangGraph features. That gap has narrowed fast: the team shipped V1 on Sep 4, 2025, along with a commitment to keep the API stable over the following months.

Bottom line: LangGraph is the more mature, graph-first option with earlier enterprise uptake; Pydantic AI is newer but hit V1 in Sept 2025 and caught up on core features quickly.

Pydantic AI vs LangGraph: Features Comparison

Let’s compare Pydantic AI and LangGraph across four core feature areas that are vital for agentic AI development:

- Agent Abstraction

- Multi-Agent Orchestration

- Human-in-the-Loop

- Graph-Based Workflow Modeling

For each area, we show you how the frameworks approach the problem and what that means for developers building with them.

Feature 1. Agent Abstraction

What is an ‘agent’ in each framework? This fundamental question reveals a philosophical difference between Pydantic AI and LangGraph.

Pydantic AI

In Pydantic AI, an agent is a first-class Python object (the Agent class) that you instantiate and configure. It behaves a lot like a FastAPI app or a Python class: you declare which LLM model it uses, define its instructions (system prompt), specify the structure of its outputs, and register any tools or functions it can call.

Every agent in Pydantic AI is parameterized by types; you assign a Pydantic BaseModel to be the output schema, and specify a dependencies dataclass to inject resources into the agent at runtime.

The agent will validate that the LLM’s answer conforms to the output_type model, automatically retrying or correcting if validation fails.

For example, here’s a simplified look at defining an agent in Pydantic AI with a structured output and a tool:

In the code above, support_agent is a self-contained agent. The framework uses the SupportOutput model to parse and validate the LLM’s answer, ensuring it returns a message, a boolean, and an integer. If the model’s first attempt doesn’t produce valid JSON for SupportOutput, the agent can detect it and prompt the model to correct itself.
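To make the validate-and-retry idea concrete, here is a minimal, framework-free sketch. It does not use Pydantic AI's API: `SupportOutput`'s field names, `parse_output`, `run_with_retries`, and the scripted `fake_model` are all hypothetical stand-ins, and a plain dataclass plays the role of the Pydantic model.

```python
import json
from dataclasses import dataclass


@dataclass
class SupportOutput:
    """Hypothetical schema: a message, a boolean flag, and an integer score."""
    message: str
    escalate: bool
    risk: int


def parse_output(raw: str) -> SupportOutput:
    """Parse JSON and enforce field types; raise ValueError on any mismatch."""
    data = json.loads(raw)  # JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    expected = {"message": str, "escalate": bool, "risk": int}
    for name, typ in expected.items():
        if name not in data:
            raise ValueError(f"missing field: {name}")
        value = data[name]
        # bool is a subclass of int, so reject booleans where an int is expected
        if not isinstance(value, typ) or (typ is int and isinstance(value, bool)):
            raise ValueError(f"field {name!r} must be {typ.__name__}")
    return SupportOutput(**{name: data[name] for name in expected})


def run_with_retries(model, prompt: str, max_retries: int = 2) -> SupportOutput:
    """Call the model, validate its answer, and feed the error back on failure."""
    for _ in range(max_retries + 1):
        raw = model(prompt)
        try:
            return parse_output(raw)
        except ValueError as err:
            prompt = f"{prompt}\nYour last answer was invalid ({err}). Reply with valid JSON."
    raise RuntimeError("model never produced a valid SupportOutput")


# Stand-in for an LLM: the first reply is incomplete, the second is valid.
replies = iter([
    '{"message": "hi"}',
    '{"message": "Card blocked", "escalate": true, "risk": 8}',
])


def fake_model(prompt: str) -> str:
    return next(replies)


result = run_with_retries(fake_model, "My card was stolen")
```

The first reply fails validation, so the loop re-prompts with the error message and accepts the second reply. A real framework would do the same kind of work with richer schemas and error reporting.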

LangGraph

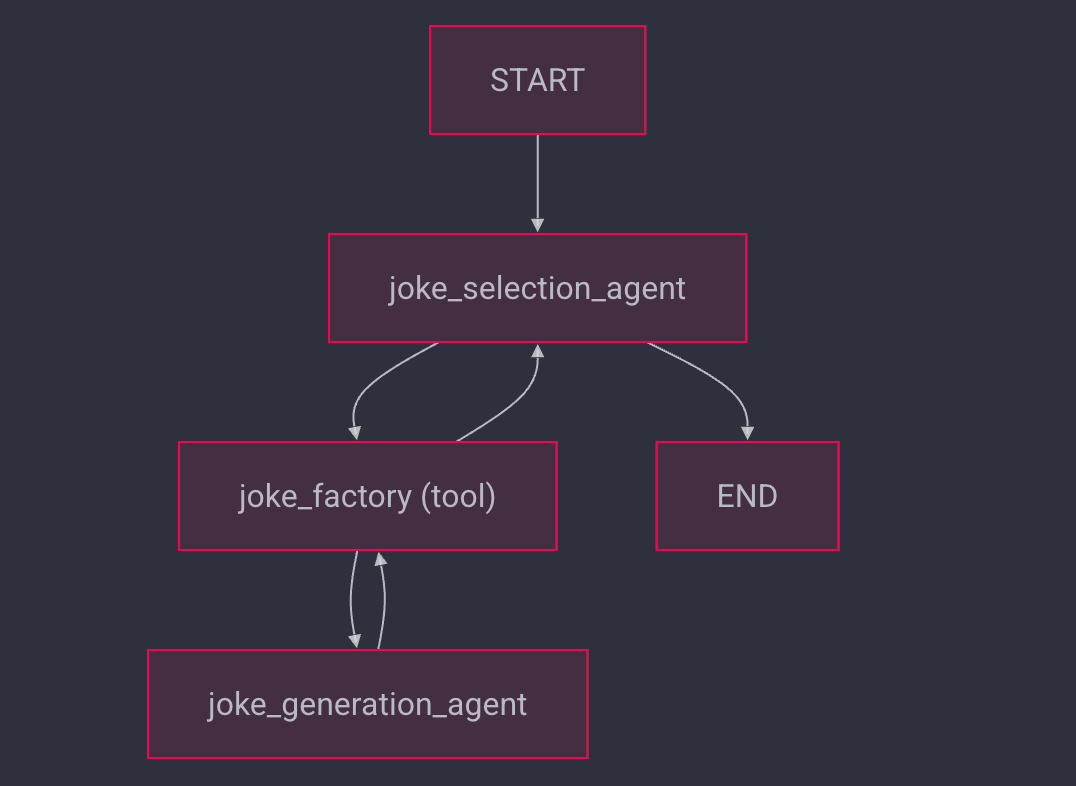

LangGraph takes a lower-level view. Rather than representing an agent as a single Python object, LangGraph defines it as a graph of steps.

In practice, you construct a set of nodes, each corresponding to an LLM call, a tool invocation, or even another agent, and then connect them with edges.

The simplest case can resemble a single-agent loop, but LangGraph shines when you need multiple steps or multiple agents working together. The abstraction here is closer to a state machine than a class instance.

For example, LangGraph provides utilities like create_react_agent to quickly spin up a single agent with some tools using LangChain’s standard ReAct prompting under the hood, but it also allows building completely custom agent architectures by writing your own nodes and defining the transitions between them.

To illustrate a basic LangGraph usage, here’s how you can create a simple agent with a tool using a LangGraph prebuilt function:

The agent created here is managing a conversation loop with the Claude model, deciding when to call get_weather based on the ReAct logic.

If we step beyond this convenience function, LangGraph’s core abstraction is that an agent’s logic is a directed graph. Each node can be an LLM call or a decision point; edges dictate the flow from one node to the next.

This makes LangGraph extremely flexible; you can implement complex plans or loops that would be hard to express in a linear prompt. However, it also means LangGraph is more verbose and developer-driven: you explicitly orchestrate every transition.
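The "logic as a directed graph" idea can be sketched in a few lines of plain Python. This is not LangGraph's API: the `nodes` and `edges` dictionaries, the `END` sentinel, and `run_graph` are hypothetical names chosen for illustration, and the two nodes are stubs instead of LLM calls.

```python
END = "END"


def draft(state):
    # Node: produce one more draft.
    return {**state, "drafts": state["drafts"] + 1}


def review(state):
    # Node: approve once at least two drafts exist.
    return {**state, "approved": state["drafts"] >= 2}


nodes = {"draft": draft, "review": review}

# Each edge function inspects the state and names the next node.
edges = {
    "draft": lambda s: "review",
    "review": lambda s: END if s["approved"] else "draft",  # loop until approved
}


def run_graph(entry, state, max_steps=20):
    """Walk the graph from `entry`, recording which nodes ran."""
    current, trace = entry, []
    for _ in range(max_steps):
        if current == END:
            return state, trace
        trace.append(current)
        state = nodes[current](state)
        current = edges[current](state)
    raise RuntimeError("step limit reached")


final_state, trace = run_graph("draft", {"drafts": 0, "approved": False})
```

Every transition is spelled out in `edges`, which is both the strength and the cost of the approach: the loop back to `draft` is explicit and easy to audit, but nothing happens unless you wire it.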

Bottom line: Pydantic AI treats an agent as a high-level construct defined by data schemas and Python functions, whereas LangGraph treats an agent as a graph of states and transitions.

Feature 2. Multi-Agent Orchestration

Both frameworks can handle multiple agents working together, but the approach differs.

Pydantic AI

Pydantic AI doesn’t spawn multiple agents by itself in a conversation; it’s not like some frameworks where two agents automatically chat. Instead, it provides patterns for you to orchestrate agents if you need more than one. Here are the multi-agent patterns you get with this framework:

- Agent delegation: One agent can call another agent as if it were a tool. This means you can register a function tool that internally invokes a second agent. The first agent delegates a subtask to the second agent and waits for the result.

- Programmatic hand-off: Your application code can run one agent, then, based on its output or some condition, hand control to another agent. This is a simple sequential orchestration done in Python code.

- Graph-based control flow: For the most complex scenarios, Pydantic AI actually lets you define a workflow using a Pydantic Graph via the pydantic_graph module (more on this later).
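The first two patterns above can be sketched with plain callables standing in for agents. Nothing here is Pydantic AI's API: the agent functions, the `joke_factory` tool name, and `handle` are hypothetical, and each "agent" is a stub that returns a canned string.

```python
from typing import Callable, Dict

Agent = Callable[[str], str]


def joke_agent(topic: str) -> str:
    # Sub-agent: does one narrow job.
    return f"joke about {topic}"


def make_selector_agent(tools: Dict[str, Agent]) -> Agent:
    """Parent agent that delegates by calling another agent as a tool."""
    def run(request: str) -> str:
        joke = tools["joke_factory"](request)  # delegation: wait for the sub-agent
        return f"selected: {joke}"
    return run


# Pattern 1: agent delegation (the second agent is wrapped as a tool).
selector = make_selector_agent({"joke_factory": joke_agent})
delegated = selector("graphs")


# Pattern 2: programmatic hand-off (application code picks the next agent).
def triage_agent(query: str) -> str:
    return "billing" if "invoice" in query.lower() else "general"


def billing_agent(query: str) -> str:
    return f"billing team handles: {query}"


def general_agent(query: str) -> str:
    return f"general team handles: {query}"


def handle(query: str) -> str:
    route = triage_agent(query)
    next_agent = billing_agent if route == "billing" else general_agent
    return next_agent(query)


handed_off = handle("Where is my invoice?")
```

In delegation the parent agent stays in control and consumes the result; in a hand-off the first agent is finished and ordinary Python decides who runs next.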

LangGraph

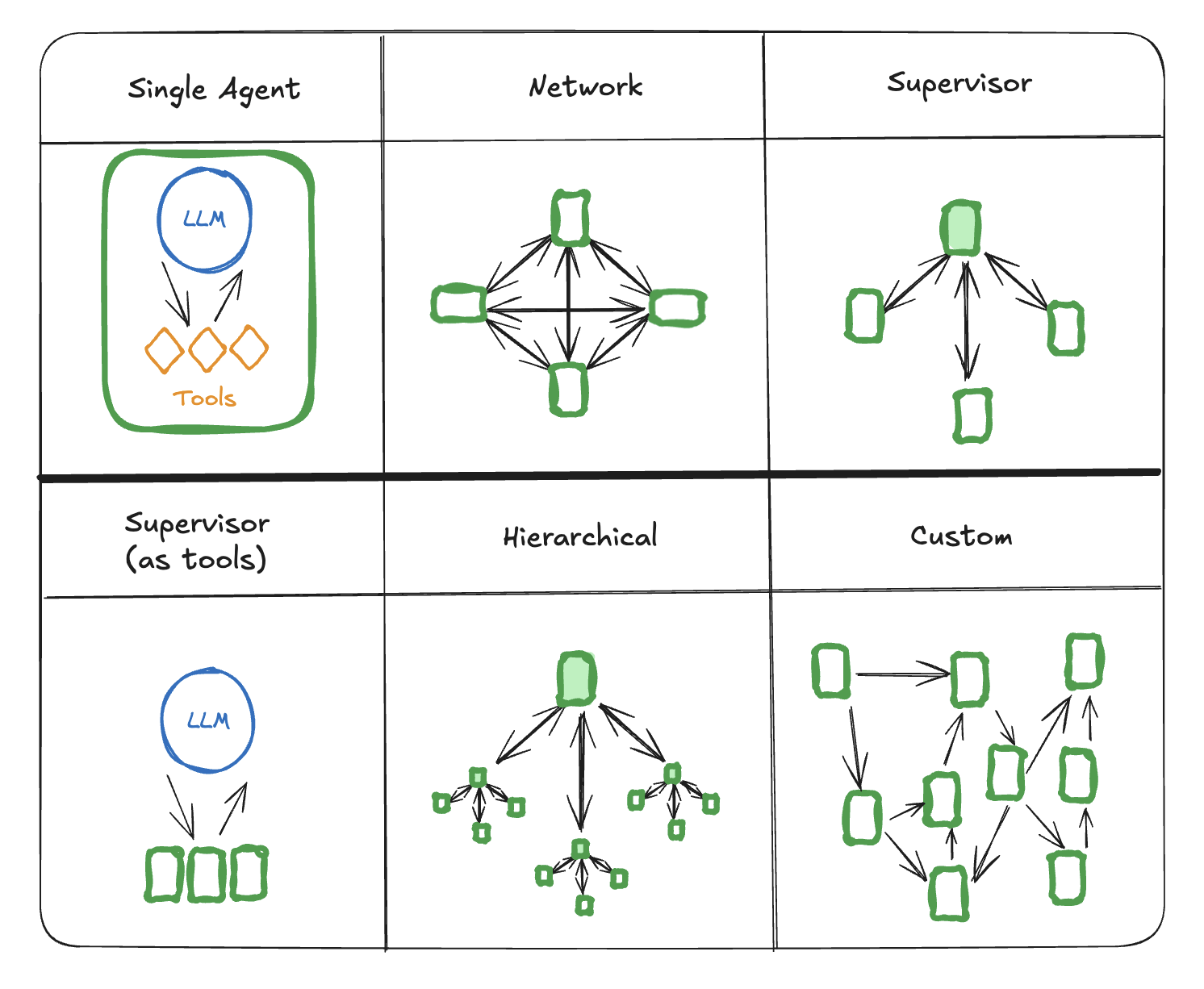

Multi-agent orchestration is LangGraph’s home turf. The framework was explicitly designed to coordinate teams of agents solving a task.

In LangGraph, you create several agent nodes within one graph, e.g., a node for a ‘Planner’ agent, nodes for various expert agents, etc. The graph’s edges manage how these agents interact.

One particularly powerful construct is the conditional edge, which can route the workflow dynamically based on the state or content of the conversation. This makes hierarchical agent teams straightforward to implement.

For instance, a Supervisor agent node can analyze an incoming query and then dispatch it via different edges to one of several Worker agent nodes, like a Researcher agent or a Coder agent. Once the worker finishes, control returns to the supervisor node, which can decide the next step. Because LangGraph expresses this in a graph structure, such multi-agent coordination is declarative in the workflow.

The execution engine handles invoking each agent node and passing along the shared state.

LangGraph also supports parallel branches (two agents working concurrently when their tasks don’t depend on each other) and loops or cycles, where an agent is re-invoked until a condition is met.
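Here is a small, framework-free sketch of the supervisor pattern described above. It is an illustration only and not LangGraph code: `supervisor`, the worker functions, and `run_team` are hypothetical, and the routing decision that an LLM would normally make is replaced by a simple "next pending task" rule.

```python
def supervisor(state):
    """Pick the next worker from the tasks still pending, or finish."""
    pending = [t for t in state["tasks"] if t not in state["results"]]
    return {**state, "next": pending[0] if pending else "finish"}


def researcher(state):
    return {**state, "results": {**state["results"], "research": "3 sources found"}}


def coder(state):
    return {**state, "results": {**state["results"], "code": "script written"}}


workers = {"research": researcher, "code": coder}


def run_team(state, max_turns=10):
    """Alternate between the supervisor and whichever worker it selects."""
    visited = []
    for _ in range(max_turns):
        state = supervisor(state)
        visited.append("supervisor")
        if state["next"] == "finish":
            return state, visited
        # Conditional edge: the target node is chosen at runtime from the state.
        state = workers[state["next"]](state)
        visited.append(state["next"])
    raise RuntimeError("turn limit reached")


final, visited = run_team({"tasks": ["research", "code"], "results": {}})
```

Control returns to the supervisor after every worker, and the shared `state` dictionary is what carries results between them.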

Bottom line: Pydantic AI supports multiple agents, but it does so by letting you compose agents through code patterns or by dropping down into an explicit graph if needed.

LangGraph makes multi-agent orchestration a core concept: from the start, you think in terms of how agents (nodes) connect and cooperate. If you know your problem requires multiple specialized LLMs or a complex back-and-forth, LangGraph provides a built-in framework for that.

Feature 3. Human-in-the-Loop

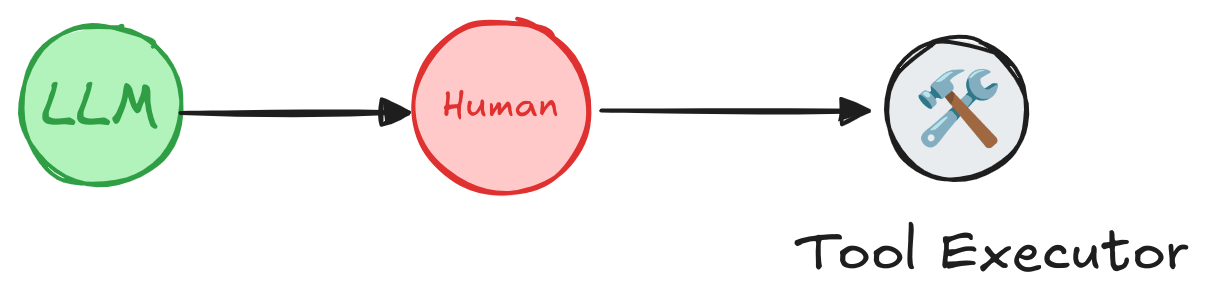

Real-world AI systems often need a human in the loop to approve certain actions, provide input, or correct the course if the AI is going astray. Both Pydantic AI and LangGraph acknowledge this need, but implement it differently:

Pydantic AI

Pydantic AI implements HITL primarily for tool approval using a feature called ‘deferred tools.’ The mechanism is driven by Python's exception handling.

A tool can be designed to raise an ApprovalRequired exception, which ends the current agent run with a list of the tool calls awaiting approval (a DeferredToolRequests output) instead of a final answer. The application code is responsible for presenting each pending action to a human operator for review, and then resuming the agent with a follow-up run that carries the human's approval or denial.

This mechanism is relatively straightforward to set up, especially since it’s integrated with the Pydantic Logfire UI for monitoring. Logfire can show a live trace of the agent’s actions and provide a prompt to approve/reject at the right time.
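The approve-then-resume flow can be sketched without the framework. In the example below, the `ApprovalRequired` class is a locally defined stand-in (not imported from Pydantic AI), and `delete_file`, `run_tool_call`, and `ask_human` are hypothetical; a small runner plays the part of the agent loop.

```python
class ApprovalRequired(Exception):
    """Local stand-in for an 'approval needed' signal raised by a tool."""

    def __init__(self, tool_name, tool_args):
        super().__init__(f"{tool_name} needs approval")
        self.tool_name = tool_name
        self.tool_args = tool_args


def delete_file(path: str, approved: bool = False) -> str:
    # Sensitive paths need a human decision before the tool does anything.
    if path.startswith("/etc/") and not approved:
        raise ApprovalRequired("delete_file", {"path": path})
    return f"deleted {path}"  # no real file is touched in this sketch


def run_tool_call(path, ask_human):
    """Run the tool; if approval is required, pause, ask, then resume or deny."""
    try:
        return delete_file(path)
    except ApprovalRequired as pending:
        if ask_human(pending.tool_name, pending.tool_args):
            return delete_file(path, approved=True)
        return f"denied {pending.tool_name}"


auto = run_tool_call("/tmp/cache.txt", ask_human=lambda name, args: False)
approved = run_tool_call("/etc/hosts", ask_human=lambda name, args: True)
denied = run_tool_call("/etc/passwd", ask_human=lambda name, args: False)
```

Only the calls that trip the approval check ever reach the human; everything else runs straight through.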

LangGraph

In LangGraph, HITL is not just a feature; it is an emergent property of its core architecture: durable, checkpointed state machines. The primary mechanism is the interrupt() function.

Any node in the graph can call interrupt(), which pauses the graph's execution indefinitely. The entire state of the application is automatically saved by a ‘checkpointer.’

At this point, a human can inspect the complete state, provide feedback, edit variables, or decide on the next course of action. The graph is then resumed by invoking it with a special Command object containing the human's input.
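A rough, framework-free sketch of this pause, checkpoint, and resume cycle follows. It mimics the shape of the mechanism only: the `Interrupt` exception, the `checkpoints` dictionary, and `invoke` are hypothetical stand-ins for LangGraph's interrupt(), checkpointer, and Command-based resume.

```python
import copy

checkpoints = {}  # thread_id -> saved state (stand-in for a checkpointer)


class Interrupt(Exception):
    """Raised by a node that needs human input before it can continue."""

    def __init__(self, question):
        super().__init__(question)
        self.question = question


def draft_node(state):
    return {**state, "draft": f"Refund {state['amount']} USD"}


def approval_node(state):
    # Pause unless a human decision was supplied when the run was resumed.
    if "decision" not in state:
        raise Interrupt(f"Approve '{state['draft']}'?")
    status = "sent" if state["decision"] == "yes" else "cancelled"
    return {**state, "status": status}


NODES = [draft_node, approval_node]


def invoke(thread_id, state=None, resume=None):
    """Start a run, or resume a paused one from its checkpoint with human input."""
    start = 0
    if resume is not None:
        saved = checkpoints[thread_id]
        state, start = {**saved["state"], **resume}, saved["index"]
    for index in range(start, len(NODES)):
        try:
            state = NODES[index](state)
        except Interrupt as pause:
            checkpoints[thread_id] = {"state": copy.deepcopy(state), "index": index}
            return {"paused": pause.question}
    return state


first = invoke("thread-1", {"amount": 40})
second = invoke("thread-1", resume={"decision": "yes"})
```

Because the whole state is saved at the pause, the human could just as easily edit `amount` or `draft` before resuming, not only answer the question.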

This state-based approach is more general-purpose than Pydantic AI's exception-based method.

You can pause the graph for any reason, not just tool approval, and the human operator has the power to inspect and modify the entire application state.

This makes LangGraph better suited for building truly collaborative human-agent systems where intervention is a core part of the workflow.

Bottom line: Pydantic AI provides a convenient switch for human approval on tools, making it easy to prevent unchecked autonomous actions.

LangGraph offers a more expansive toolkit for human interaction, appropriate for building complex workflows that might require multiple human touchpoints or oversight of an agent’s reasoning process at arbitrary junctures.

Feature 4. Graph-Based Workflow Modeling

One of the headline differences between these frameworks is the role of graphs in designing the workflow. Let’s see how both frameworks approach this functionality differently.

Pydantic AI

Pydantic AI provides graph-based workflow capabilities through a separate but tightly integrated library called pydantic-graph.

This library is a pure, asynchronous graph state machine that uses Python dataclasses to define nodes (BaseNode) and return type hints on a node's run method to define edges. It has its own concepts of state, context, and persistence, and can function independently of Pydantic AI.
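The "return type hints define edges" idea is easy to demonstrate with standard-library dataclasses. The sketch below is a simplified, synchronous imitation and not pydantic-graph itself: the node classes, `End`, `edges_of`, and `run_graph` are hypothetical, and the real library's nodes are asynchronous and receive a run context.

```python
from dataclasses import dataclass
from typing import Union, get_args, get_type_hints


@dataclass
class End:
    result: str


@dataclass
class Publish:
    text: str

    def run(self) -> End:
        return End(f"published: {self.text}")


@dataclass
class Validate:
    text: str

    def run(self) -> Union[Publish, End]:
        # Two possible successors, both visible in the return annotation.
        return Publish(self.text) if self.text.strip() else End("rejected: empty")


@dataclass
class Fetch:
    source: str

    def run(self) -> Validate:
        return Validate(self.source.upper())


def edges_of(node_cls):
    """Read a node's outgoing edges from the return annotation of run()."""
    hint = get_type_hints(node_cls.run)["return"]
    targets = get_args(hint) or (hint,)
    return sorted(t.__name__ for t in targets)


def run_graph(node):
    """Keep running nodes until one of them returns End."""
    while not isinstance(node, End):
        node = node.run()
    return node.result


validate_edges = edges_of(Validate)
outcome = run_graph(Fetch("hello"))
```

Since the edges live in the type hints, a type checker or a diagram generator can discover the graph's shape without executing any node.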

Within the Pydantic AI ecosystem, pydantic-graph is positioned as an advanced tool for ‘the most complex cases’ where standard procedural control flow becomes difficult to manage. It is not the default abstraction for building a simple agent; rather, it is an escape hatch for when orchestration requirements grow in complexity.

LangGraph

LangGraph represents agent logic as a graph of nodes. This is not an optional feature; it’s the fundamental paradigm. Each node in a LangGraph could be a call to an LLM or a logical operation, and edges determine the sequence of execution. This explicit graph approach brings a few advantages:

- Clarity and Auditability: Visualize the entire agent workflow as a directed graph. It’s clear what happens first, what can happen next, and under what conditions.

- Complex Control Flow: Graphs make it natural to implement branching (if/else logic), looping, or even concurrent flows. LangGraph supports conditional edges: an edge can carry a condition that is evaluated at runtime to decide whether it should be followed.

- Durable State: Because the agent’s state is explicitly managed at each node, LangGraph can checkpoint the state between nodes. In fact, it includes persistent state management via Checkpointers; you can snapshot the state at certain nodes so that if the process crashes or needs to pause, it can resume from the last checkpoint without redoing everything.
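The durable-state point in the list above can be illustrated with a toy runner that snapshots its state after every node. This is a sketch under simplifying assumptions, not LangGraph's checkpointer: the `store` dictionary stands in for persistent storage, and `PIPELINE`, `run`, and the `fail_at` switch are hypothetical.

```python
import json


def load(state):
    return {**state, "rows": [1, 2, 3]}


def total(state):
    return {**state, "total": sum(state["rows"])}


def report(state):
    return {**state, "report": f"total={state['total']}"}


PIPELINE = [("load", load), ("total", total), ("report", report)]


def run(store, state=None, fail_at=None):
    """Run nodes in order, saving a JSON snapshot after each one."""
    calls, start = [], 0
    if "checkpoint" in store:
        snapshot = json.loads(store["checkpoint"])
        state, start = snapshot["state"], snapshot["next_index"]
    for index in range(start, len(PIPELINE)):
        name, node = PIPELINE[index]
        if name == fail_at:
            raise RuntimeError(f"simulated crash in {name}")
        state = node(state)
        calls.append(name)
        store["checkpoint"] = json.dumps({"state": state, "next_index": index + 1})
    return state, calls


store = {}
try:
    run(store, {}, fail_at="report")  # first attempt dies before the last node
except RuntimeError:
    pass

final, calls = run(store)  # second attempt resumes from the saved snapshot
```

On the second attempt only `report` runs; `load` and `total` are skipped because their results were already captured in the snapshot.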

The downside to this graph-everywhere approach is the learning curve and verbosity.

Bottom line: LangGraph treats graph-based modeling as the norm: every LangGraph solution is inherently a directed graph of operations. Pydantic AI treats graph modeling as a powerful tool in the toolbox, but not the default mode. You start simple with just an agent and some tools, and only introduce graphs if the scenario demands it.

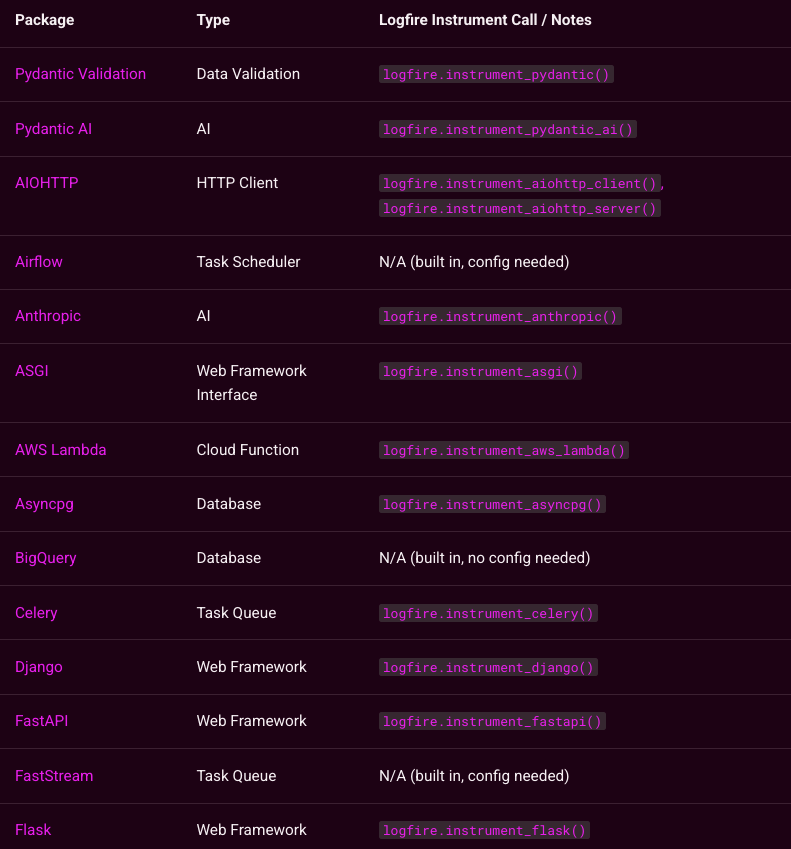

Observability and Logging Integrations

It’s worth noting how each framework ties into a logging/observability stack, as this can be critical in production.

Both Pydantic AI and LangGraph come with natural integrations for monitoring agent behavior, typically pairing with the platforms created by their parent projects:

Pydantic AI + Logfire: Pydantic AI is designed to work seamlessly with Pydantic Logfire, which is an OpenTelemetry-based observability platform that the team built alongside the framework. With minimal setup, Pydantic AI will emit traces of each agent run, including model calls, tool calls, and validations, to Logfire.

LangGraph + LangSmith: Similarly, LangGraph naturally integrates with LangSmith, LangChain’s debugging and monitoring service. LangSmith provides tools for tracing agent executions, visualizing the chain/graph, and evaluating performance.

Pydantic AI vs LangGraph: Integration Capabilities

An agent framework is only as powerful as the ecosystem it connects to. Both frameworks offer extensive integrations, but they prioritize different strategies.

Pydantic AI

Pydantic AI's integration strategy is focused on strategic depth, prioritizing open standards and tools that reinforce its core value proposition of building robust, interoperable, and production-grade systems.

- LLMs: It is model-agnostic, with built-in support for all major providers like OpenAI, Anthropic, Gemini, and Cohere, as well as a wide range of OpenAI-compatible endpoints.

- Observability: It offers native integration with Pydantic Logfire and supports any other OpenTelemetry-compatible tool, such as Langfuse.

- Interoperability: It is built around open standards like the Model Context Protocol (MCP) for accessing external tools, Agent2Agent (A2A) for inter-agent communication, and AG-UI for connecting to interactive frontends.

- Durable Execution: For long-running and fault-tolerant workflows, Pydantic AI integrates with production-grade workflow engines like Temporal and DBOS.

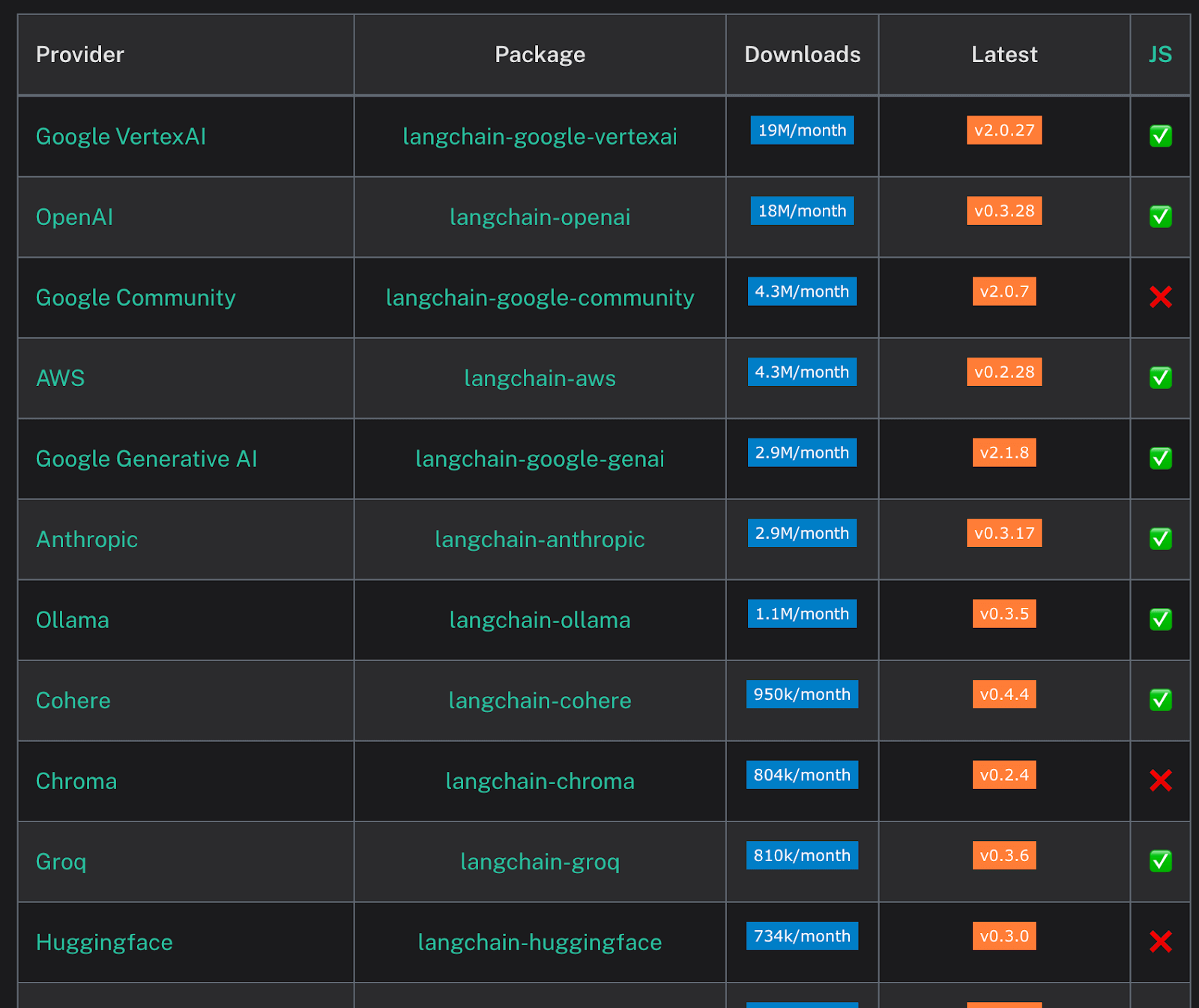

LangGraph

LangGraph’s strength lies in its deep, native integration with the broader LangChain ecosystem. This gives developers immediate access to:

- LangChain Components: The vast library of LangChain integrations for LLMs, document loaders, text splitters, vector stores, and tools can be used directly as nodes within a LangGraph graph.

- LangSmith: A best-in-class platform for observability, tracing, and debugging. Traces from LangGraph are automatically visualized in LangSmith, showing the step-by-step execution of the graph, which is invaluable for understanding and improving complex agent behavior.

- Extensibility: As a Python library, LangGraph can be integrated with any other Python package or API, offering limitless extensibility for developers.

Pydantic AI vs LangGraph: Pricing

Both Pydantic AI and LangGraph are open-source frameworks you can use for free. The pricing comes into play for the hosted/enterprise services associated with each and any usage-based limits if you choose those services:

Pydantic AI

Pydantic AI is completely open-source under the MIT license. There are no paid tiers or commercial offerings for the framework itself.

However, Pydantic Logfire, the observability platform that pairs with it, offers paid plans that raise the limits on ‘spans/metrics.’

A span is the building block of a trace: a single row in Logfire's live view. To picture a span, imagine you were measuring how many birds cross a specific river. If you instrumented one bank of the river with a counter, you would receive one span every time the sensor was triggered.

A metric is a single data point, sometimes called a "sample" or "metric recording".

Here are the paid plans Logfire offers:

- Pro: $2 per million spans/metrics

- Cloud Enterprise: Custom pricing

- Self-hosted Enterprise: Custom pricing

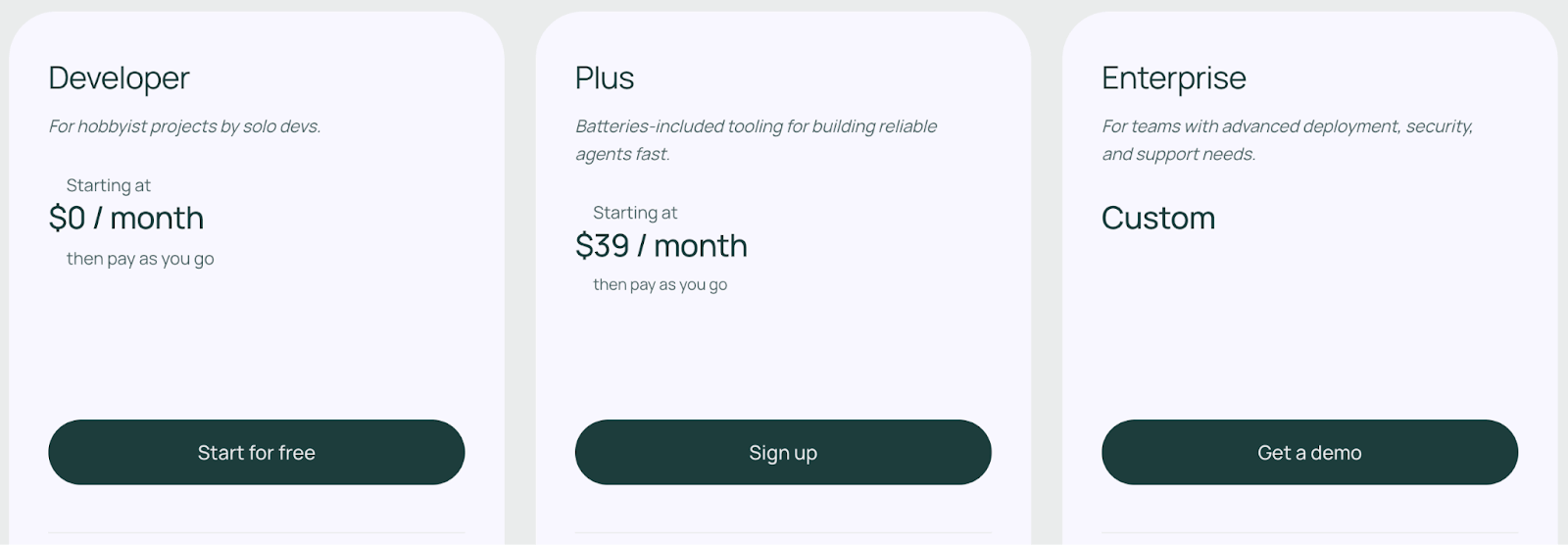

LangGraph

LangGraph employs a freemium model that separates the open-source library from its commercial platform.

The langgraph Python and JavaScript libraries are completely free (MIT license) and can be self-hosted without any usage limits from LangChain.

LangGraph Platform

This is the managed commercial offering. It has three tiered plans designed to scale with your needs:

- Developer: Includes up to 100K nodes executed per month

- Plus: $0.001 per node executed + standby charges

- Enterprise: Custom-built plan tailored to your business needs
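For a rough feel of what the usage-based rates quoted in this article mean, here is a back-of-the-envelope calculator. It uses only the two per-unit prices stated above and ignores anything not quoted here (included allowances, standby charges, enterprise terms), so treat the results as an approximate lower bound and check the vendors' pricing pages for current figures.

```python
LOGFIRE_PRO_USD_PER_MILLION = 2.00   # "Pro: $2 per million spans/metrics"
LANGGRAPH_PLUS_USD_PER_NODE = 0.001  # "Plus: $0.001 per node executed"


def logfire_pro_cost(spans_and_metrics: int) -> float:
    """Usage charge at the quoted Pro rate, with no allowances modeled."""
    return spans_and_metrics / 1_000_000 * LOGFIRE_PRO_USD_PER_MILLION


def langgraph_plus_node_cost(nodes_executed: int) -> float:
    """Node charges only; standby charges are billed separately and not modeled."""
    return nodes_executed * LANGGRAPH_PLUS_USD_PER_NODE


logfire_monthly = logfire_pro_cost(25_000_000)        # 25M spans/metrics
langgraph_monthly = langgraph_plus_node_cost(500_000)  # 500K node executions
```

At these rates, 25 million spans or metrics come to about $50, and 500,000 node executions come to about $500 before standby charges.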

📚 Related article: LangGraph pricing guide

How ZenML Helps In Closing the Outer Loop Around Your Agents

You might be wondering: Pydantic AI vs LangGraph sounds like an either/or choice, but what if you could use them together or interchangeably as needed? That’s where ZenML comes in.

ZenML is an open-source MLOps + LLMOps framework that acts as the glue for integrating various AI tools into production workflows.

Rather than competing with Pydantic AI or LangGraph, ZenML complements them by handling the surrounding infrastructure and lifecycle concerns. Here’s how ZenML can help you leverage either or both frameworks seamlessly:

Feature 1. Pipeline Orchestration

With ZenML, you can encapsulate an agent-based solution into a reproducible pipeline. This means you could have a ZenML pipeline that first prepares some data, then runs a Pydantic AI agent step, then perhaps runs a LangGraph agent in another step (or even in parallel), and finally evaluates the results.
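The shape of such a pipeline can be sketched with plain functions. This is not ZenML code: in a ZenML project the functions below would typically be decorated as steps and the composing function as a pipeline, and the two agent steps would call real agents. Here every function name is hypothetical and the agent calls are stubbed so the example runs with the standard library alone.

```python
def prepare_data():
    return ["refund request", "login issue"]


def structured_agent_step(tickets):
    # Stand-in for a step that would call a Pydantic AI agent.
    return [
        {"ticket": t, "priority": "high" if "refund" in t else "low"}
        for t in tickets
    ]


def graph_agent_step(tickets):
    # Stand-in for a step that would invoke a LangGraph graph.
    return [f"researched: {t}" for t in tickets]


def evaluate(labels, notes):
    return {
        "tickets": len(labels),
        "high_priority": sum(item["priority"] == "high" for item in labels),
        "notes": len(notes),
    }


def agent_pipeline():
    """Prepare data, run both agent steps, then evaluate their outputs."""
    tickets = prepare_data()
    labels = structured_agent_step(tickets)
    notes = graph_agent_step(tickets)  # independent of `labels`, so it could run in parallel
    return evaluate(labels, notes)


report = agent_pipeline()
```

Because each step only exchanges plain data with the next, either agent step can be swapped for a different framework without touching the rest of the pipeline.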

Feature 2. Reproducibility and Lineage

ZenML automatically tracks the artifacts and lineage of each pipeline run. For agentic applications, this is a blessing: it records which prompts were used, what outputs were generated, and what tools the agent invoked, and it versions all of them.

In a multi-agent scenario with implicit state, ZenML’s tracking can save you from a lot of headaches. You get a central dashboard to visualize how data and decisions flow through the agents.

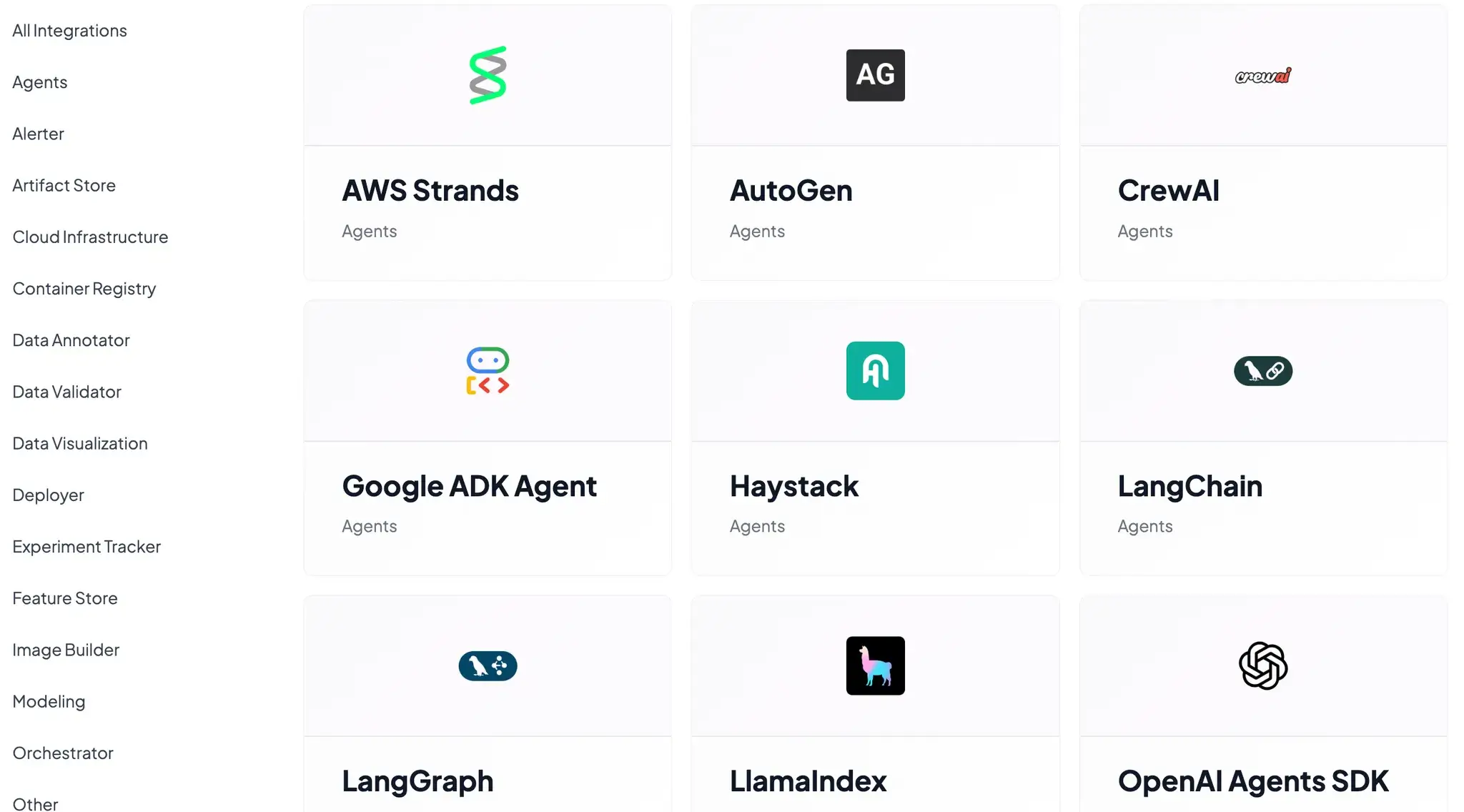

Feature 3. Mixed Framework Flexibility

Perhaps one of the coolest benefits is that ZenML frees you from vendor lock-in or framework lock-in.

You can use CrewAI, AutoGen, LangGraph, Pydantic AI, etc., in different parts of your project and still manage them uniformly.

For instance, ZenML would allow you to orchestrate a pipeline where one step uses a LangGraph agent to do a complex multi-agent research task, and another step uses a Pydantic AI agent to take that research and format it into a report.

ZenML acts as the production backbone for whichever agentic framework you choose. Pydantic AI and LangGraph are about what the agents do. ZenML is about how those agents are deployed, monitored, and kept reliable in the wild.

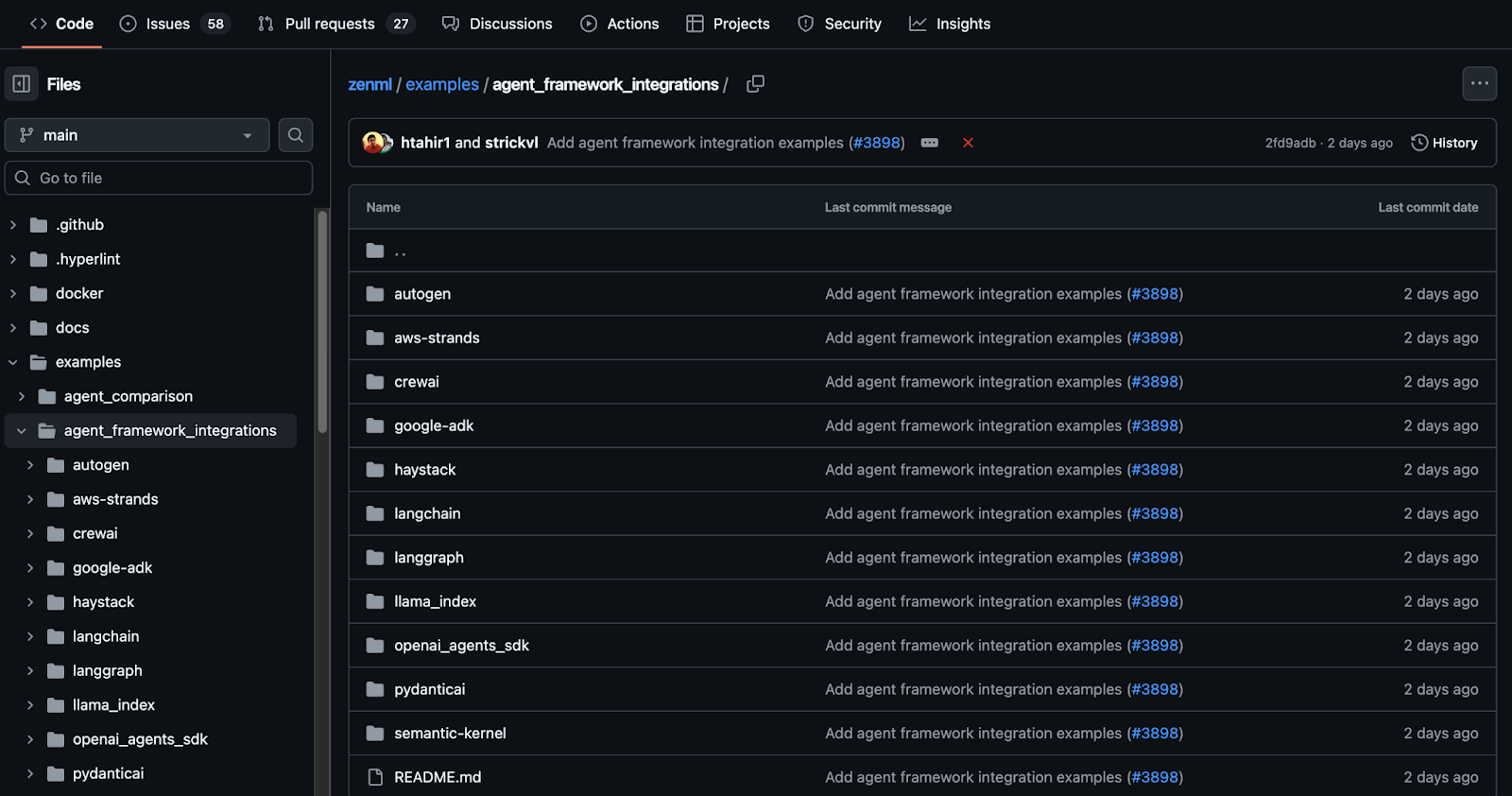

👀 Note: At ZenML, we have built several agent workflow integrations with tools like Semantic Kernel, LangGraph, LlamaIndex, and more. We are actively shipping new integrations that you can find on this GitHub page: ZenML Agent Workflow Integrations.

Which Agentic AI Framework Should You Choose?

Pydantic AI and LangGraph represent two excellent but different approaches to building AI agents.

Pydantic AI brings software engineering rigor to agent development with its focus on type safety, validation, and Python-native patterns. It's the ideal choice when you want predictable, maintainable agents that integrate naturally with existing Python applications.

LangGraph excels when you need fine-grained control over complex workflows. Its graph-based architecture makes it easy to build sophisticated multi-agent systems with cycles, branching, and human oversight.

The choice between them often comes down to your specific requirements.

✅ Choose Pydantic AI for type-safe, maintainable agents in Python-centric environments.

✅ Choose LangGraph when you need explicit workflow control and want to leverage the broader LangChain ecosystem.

Regardless of your choice, consider using ZenML to handle the production deployment challenges. It provides the infrastructure, monitoring, and lifecycle management that both frameworks need to succeed in real-world applications.

If you’re interested in taking your AI agent projects to the next level, consider joining the ZenML waitlist. We’re building out first-class support for agentic frameworks (like LangGraph, CrewAI, and more) inside ZenML, and we’d love early feedback from users pushing the boundaries of what AI agents can do. With ZenML, you can seamlessly integrate whichever agent framework you choose into robust, production-grade workflows. Join our waitlist to get started.👇