In modern MLOps, choosing the right orchestration and pipeline tool can make a huge difference in productivity and reliability. There are several MLOps platforms on the market with unique approaches to building and running pipelines.

This Prefect vs Airflow vs ZenML comparison analyzes three leading platforms that take different approaches to ML pipeline orchestration.

Understanding how these platforms handle workflow orchestration, data versioning, scheduling, and integrations will help you make an informed decision for your ML infrastructure. We'll explore each platform's strengths, limitations, and ideal use cases.

Prefect vs Airflow vs ZenML: Key Takeaways

🧑💻 Prefect: An open-source, Python-native workflow orchestrator that turns Python functions into production data workflows. The platform is easy to use (define flows with @flow and @task), has robust state handling (retries, caching), and offers flexible scheduling. Prefect runs anywhere Python runs and provides automatic monitoring and failure recovery. It’s ideal for teams that need flexible, Python-native workflow orchestration.

🧑💻 Apache Airflow: Dominates the enterprise orchestration space with its mature ecosystem and extensive community support. Its DAG-based approach and hundreds of pre-built operators enable teams to connect virtually any data source or service. Airflow is a general-purpose tool and works well if your pipelines involve complex ETL or if you already use it for data engineering.

🧑💻 ZenML: Focuses specifically on ML pipelines with built-in experiment tracking, model versioning, and deployment capabilities. The platform works as a higher-level abstraction layer that uses other orchestrators (including Airflow) as execution backends while providing ML-specific features. ZenML is ideal when you want a Pythonic, ML-centric workflow with built-in tracking and flexible integrations.

Prefect vs Apache Airflow vs ZenML: Feature Comparison

Here’s a TL;DR of comparable features of Prefect, Airflow, and ZenML:

If you want to learn more about how each of the above features compares for the three MLOps platforms, read ahead.

In this section, we compare Prefect, Apache Airflow, and ZenML across the three most important MLOps features:

- Workflow Orchestration

- Artifact and Data Versioning

- Built-in Scheduling

Feature 1. Workflow Orchestration

Workflow orchestration is the coordination of the steps in a pipeline: how you define, schedule, and run your tasks in order.

Prefect

Prefect is a Pythonic orchestrator; you write data pipeline logic in plain Python. Using the @flow and @task decorators, you define a flow (pipeline) and its tasks. It then builds the dependency graph automatically.

Because it’s code-based, you can use standard Python features like loops, conditionals, exceptions, etc., to make dynamic workflows.

Prefect manages the state of each task and flow run, tracking success, failure, and retries out of the box. For example, Prefect lets you restart a failed pipeline from the last successful state and cache task outputs to skip re-computation.

What’s more, Prefect’s engine can run flows locally, in containers, on Kubernetes, or in the Cloud – it’s flexible. In short, the platform handles the scheduling, logging, and retries, so you can focus on writing the logic in Python.
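To make the retry and caching behavior described above concrete, here is a minimal plain-Python sketch of the idea. It is not Prefect's implementation or API: `resilient_task`, `flaky_double`, and the in-memory cache are hypothetical names used only for illustration, and the cache is keyed on positional arguments as a simplifying assumption.

```python
import functools
import time


def resilient_task(retries=2, retry_delay_seconds=0.0):
    """Hypothetical decorator: retry on failure and cache results by arguments."""
    def decorator(fn):
        cache = {}  # in-memory stand-in for an orchestrator's result store

        @functools.wraps(fn)
        def wrapper(*args):
            if args in cache:  # cache hit: skip re-computation
                return cache[args]
            for attempt in range(retries + 1):
                try:
                    result = fn(*args)
                    break
                except Exception:
                    if attempt == retries:  # out of retries: surface the failure
                        raise
                    time.sleep(retry_delay_seconds)
            cache[args] = result
            return result

        return wrapper

    return decorator


calls = {"count": 0}


@resilient_task(retries=2)
def flaky_double(x):
    # Fails on the first call only, to simulate a transient error.
    calls["count"] += 1
    if calls["count"] == 1:
        raise RuntimeError("transient failure")
    return x * 2


first = flaky_double(21)   # fails once, then succeeds on the retry
second = flaky_double(21)  # served from the cache; the function body is not re-run
```

An orchestrator applies the same two ideas per task, but persists state and results outside the process so a failed run can be resumed later.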

Apache Airflow

Apache Airflow established the standard for workflow orchestration with its Directed Acyclic Graph (DAG) model. Each workflow is a DAG where nodes represent tasks and edges define dependencies. This explicit dependency declaration ensures tasks execute in the correct order and enables sophisticated scheduling patterns.
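The way explicit dependencies determine execution order can be sketched with Python's standard-library `graphlib`. This is a conceptual illustration, not Airflow code: the task names and the `has_cycle` helper are hypothetical, and a real scheduler also handles timing, retries, and parallelism.

```python
from graphlib import CycleError, TopologicalSorter

# Each key is a task; the set lists the tasks it depends on (hypothetical names).
dag = {
    "extract": set(),
    "validate": {"extract"},
    "train": {"validate"},
    "evaluate": {"train"},
    "report": {"validate", "evaluate"},
}

# A valid execution order runs every task after all of its dependencies.
order = list(TopologicalSorter(dag).static_order())


def has_cycle(graph):
    """Return True if the graph is not acyclic and so cannot be scheduled."""
    try:
        TopologicalSorter(graph).prepare()
        return False
    except CycleError:
        return True
```

The "acyclic" requirement matters because a cycle leaves no task that can run first, which is why the graph is validated before anything executes.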

Airflow's operator model provides incredible flexibility for task execution. The platform includes operators for common tasks like running Python functions, executing SQL queries, or triggering external systems. The extensive operator ecosystem covers major cloud providers, databases, and ML platforms. When existing operators don't meet your needs, creating custom operators is easy with Airflow.

The Airflow scheduler continuously monitors DAG definitions and triggers tasks when their dependencies are met. The scheduler handles complex scenarios like task retries, timeouts, and SLA monitoring.

Airflow's UI provides comprehensive visibility into pipeline execution. It lets you monitor task progress, view logs, manually trigger runs, and analyze historical performance. The grid view (the tree view in older releases) and graph view offer different perspectives on pipeline execution, helping you quickly identify bottlenecks or failures.

ZenML

ZenML approaches orchestration through the concept of pipelines and steps. You build an ML pipeline by defining Python functions with @step and assembling them with an @pipeline decorator. ZenML itself then orchestrates the execution of those steps.

More importantly, the platform is orchestrator-agnostic. This means you choose a stack component like Kubernetes, Docker, or even Airflow to run your pipeline.

Under the hood, ZenML will create the necessary workflow, like a Kubernetes Job or an Airflow DAG, to execute each step in order. This design means you get a simple Python API for your pipeline, but ZenML handles running the pipeline on your infrastructure.

ZenML also automatically logs outputs and metadata for each step. In practice, our platform makes it easy to define end-to-end ML pipelines in Python without having to write low-level DAG definitions. Its built-in metadata store and artifact tracking give you visibility into the run, even though you’re using an external orchestrator.

Bottom Line

Prefect is excellent for dynamic, Python-centric workflows, particularly beneficial for smaller to medium-sized teams who prefer straightforward orchestration.

Apache Airflow is the mature, reliable choice for larger, data engineering-centric teams already familiar with DAG concepts and needing robust scheduling.

ZenML suits ML-focused teams looking to standardize and simplify their pipelines, ideal for rapidly iterating ML projects that can plug into different orchestration backends easily.

Feature 2. Artifact and Data Versioning

Tracking data artifacts and model versions is critical for reproducibility. In this section, we compare how each tool handles artifacts and data versioning.

Prefect

Prefect supports artifacts that you can attach to tasks. In Prefect v3, you can call APIs like create_link_artifact or create_markdown_artifact from within a task to register outputs (for example, output files or summary links).

These artifacts are then visible in the platform’s UI. Prefect lets you publish multiple versions under the same artifact key (by passing the same key argument), which gives you a simple lineage: the UI shows the chain of artifact versions with timestamps and associated task runs.

For instance, if two tasks create artifacts with key ‘irregular-data’, Prefect will track both versions under that key, along with descriptions and a link to the data.

What’s more, Prefect also logs each task’s state - success, failure, inputs, outputs - in its database. This provides some replay and recovery; the tool can then cache and resume tasks based on their inputs.

However, Prefect doesn’t automatically version arbitrary data – you must explicitly create artifacts or configure caching. Its focus is on providing hooks so you can manage artifacts via the UI if desired.

Apache Airflow

Airflow itself does not provide built-in data versioning. It is purely a workflow engine: tasks run and produce outputs that live wherever you configure them, such as databases or object storage.

Airflow’s internal metadata DB tracks DAG and task state (for scheduling) but doesn’t store task outputs. The only built-in mechanism for passing data between tasks is XComs, which are designed for small pieces of metadata (typically a few KB), not for datasets or model files.

In practice, model files or large datasets are typically written to external storage (S3, GCS, databases) by Airflow tasks. Airflow will not automatically record or version those outputs. If you need lineage or version control, you must build it yourself. In short, Airflow leaves artifact management entirely up to you.
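The usual pattern that follows from this is to write the large output to external storage and pass only a small reference between tasks. The sketch below illustrates that pattern in plain Python, under stated assumptions: a temporary directory stands in for S3 or GCS, the returned dictionary stands in for an XCom value, and `train_task` and `evaluate_task` are hypothetical functions rather than Airflow operators.

```python
import json
import tempfile
from pathlib import Path


def train_task(storage_dir: Path) -> dict:
    """Write a large output to storage and return only a small reference to it."""
    model_path = storage_dir / "model.json"
    model_path.write_text(json.dumps({"weights": list(range(1000))}))
    return {"model_path": str(model_path)}  # small enough to pass between tasks


def evaluate_task(ref: dict) -> int:
    """Load the artifact from storage using the reference passed downstream."""
    model = json.loads(Path(ref["model_path"]).read_text())
    return len(model["weights"])


with tempfile.TemporaryDirectory() as tmp:
    ref = train_task(Path(tmp))
    n_weights = evaluate_task(ref)
```

Note that nothing here records which run produced `model.json` or keeps older copies of it; that bookkeeping is the part you would have to build yourself.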

ZenML

ZenML’s standout feature is its automatic artifact tracking and lineage. Every output produced by a ZenML step becomes a tracked artifact. These artifacts are stored in the configured artifact store, like S3, GCS, or a local filesystem, and recorded in ZenML's metadata store.

ZenML automatically manages these artifacts and their lineage, capturing the relationship between each artifact and the steps that produced it, thereby creating a full data lineage. This means every dataset, model, or file generated during a pipeline run is versioned by the platform.

In practice, this makes ZenML pipelines reproducible: rerunning a pipeline with the same code and inputs will find matching artifacts and reuse them.
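The reuse behavior can be pictured as a lookup keyed on a fingerprint of the step's code and inputs. The following is a simplified sketch of that idea, not ZenML's actual caching logic: `cache_key`, `run_step`, and the dictionary-backed `artifact_store` are hypothetical, and hashing only the function bytecode and JSON-serializable inputs is an assumption made to keep the example short.

```python
import hashlib
import json


def cache_key(step_fn, inputs: dict) -> str:
    """Fingerprint a step by its compiled bytecode and its JSON-serializable inputs."""
    digest = hashlib.sha256()
    digest.update(step_fn.__code__.co_code)  # simplification: ignores constants and dependencies
    digest.update(json.dumps(inputs, sort_keys=True).encode())
    return digest.hexdigest()


artifact_store = {}  # stands in for S3, GCS, or a local filesystem
executions = []      # records which steps actually ran


def run_step(step_fn, inputs: dict):
    """Run a step only if no artifact exists for this code and input combination."""
    key = cache_key(step_fn, inputs)
    if key not in artifact_store:
        executions.append(step_fn.__name__)
        artifact_store[key] = step_fn(**inputs)
    return artifact_store[key]


def normalize(values):
    top = max(values)
    return [v / top for v in values]


a = run_step(normalize, {"values": [1, 2, 4]})
b = run_step(normalize, {"values": [1, 2, 4]})  # same code and inputs: artifact reused
c = run_step(normalize, {"values": [1, 2, 8]})  # new input: step executes again
```

Because every stored output is addressed by what produced it, the same record doubles as lineage: given an artifact, you can tell which step and inputs generated it.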

Furthermore, the platform offers a Model Control Plane in its managed dashboard, which acts as a centralized model registry.

This Model Control Plane ties together related artifacts, including weights, metrics, and training data, for a specific use case, linking models to pipeline versions and artifacts for end-to-end lineage.

This lets you see precisely which code, data, and parameters generated any model version.

Bottom Line

Prefect provides moderate built-in artifact management suitable for teams needing simple version tracking linked to tasks.

Airflow leaves artifact management entirely manual, which makes it less suitable for teams prioritizing built-in reproducibility and versioning.

ZenML offers comprehensive artifact and lineage tracking automatically, making it the best choice for ML teams requiring end-to-end reproducibility without manual intervention.

Feature 3. Built-In Scheduling

Built-in scheduling functionalities allow ML pipelines to be automated and run at predefined intervals or based on specific triggers, which is critical for continuous training, model retraining, and deployment in production environments. In this section, we compare how scheduling works for these three platforms.

Prefect

Prefect allows you to specify one or more schedules for any deployed flow, dictating when and how new flow runs are automatically created. It supports three main types of schedules: Cron, Interval, and RRule.

The Cron schedule is suitable for tasks tied to specific clock times and dates, like ‘every Monday at 9:00 AM,’ using a standard cron string and allowing for timezone specification to handle Daylight Saving Time (DST).

The Interval schedule is designed for deployments that need to run at a consistent cadence, not strictly tied to absolute clock time, for example, ‘every 10 minutes,’ creating new flow runs at regular intervals measured in seconds.

The RRule is the most powerful option for complex scheduling needs, suitable for calendar-based logic like ‘the last weekday of each month,' supporting iCal recurrence rules.
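The difference between a fixed-cadence schedule and a calendar rule is easier to see with the arithmetic written out. This standard-library sketch does not use Prefect's schedule classes; `interval_runs` and `last_weekday_of_month` are hypothetical helpers that compute the kind of run times an interval schedule and a "last weekday of each month" rule would produce.

```python
import calendar
from datetime import date, datetime, timedelta


def interval_runs(anchor: datetime, every: timedelta, count: int) -> list[datetime]:
    """Interval-style schedule: runs at a fixed cadence measured from an anchor time."""
    return [anchor + i * every for i in range(count)]


def last_weekday_of_month(year: int, month: int) -> date:
    """Calendar-style rule: the last Monday-to-Friday day of the given month."""
    day = date(year, month, calendar.monthrange(year, month)[1])
    while day.weekday() >= 5:  # 5 = Saturday, 6 = Sunday
        day -= timedelta(days=1)
    return day


runs = interval_runs(datetime(2025, 1, 1, 9, 0), timedelta(minutes=10), 3)
month_end = last_weekday_of_month(2025, 5)  # 31 May 2025 falls on a Saturday
```

The interval result depends only on elapsed time, while the calendar rule depends on how each month happens to fall, which is why it needs a recurrence rule rather than a simple period.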

The core of Prefect's scheduling mechanism is the Scheduler service, which evaluates each deployment’s schedules and creates new runs. This service starts automatically when prefect server start is executed and is a built-in service of Prefect Cloud.

Apache Airflow

The Apache Airflow scheduler is a built-in component designed to monitor all tasks and Directed Acyclic Graphs (DAGs) and trigger task instances once their dependencies are complete.

It operates as a persistent service within a production Airflow environment. The scheduler initiates a subprocess to monitor and synchronize with DAGs, gathering parsing results and checking for active tasks ready to be triggered.

Initial DAG Runs are generated based on the minimum start_date of tasks, with subsequent runs created according to the DAG's timetable. For time-based DAGs, tasks are triggered only after the period they cover has concluded, ensuring data completeness.
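The "run after the period has concluded" rule is a common source of confusion, so here is a small sketch of the timing for a daily schedule. It is plain Python rather than Airflow code, and `daily_runs` is a hypothetical helper; it assumes a fixed one-day interval starting at the given start date.

```python
from datetime import datetime, timedelta


def daily_runs(start_date: datetime, count: int):
    """Return (interval_start, interval_end, earliest_trigger) for a daily schedule."""
    runs = []
    for i in range(count):
        interval_start = start_date + timedelta(days=i)
        interval_end = interval_start + timedelta(days=1)
        # The run covering [interval_start, interval_end) fires once the interval closes.
        runs.append((interval_start, interval_end, interval_end))
    return runs


runs = daily_runs(datetime(2025, 1, 1), 2)
```

With a start date of 1 January, the first run covers 1 January and is triggered at midnight on 2 January, once that day's data is complete.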

Airflow supports running multiple schedulers concurrently to enhance both performance and resiliency, known as High Availability (HA). This HA scheduler leverages the existing metadata database for operational simplicity, avoiding complex consensus algorithms between schedulers.

To start a scheduler, simply run the command:

airflow scheduler

Your DAGs will start executing once the scheduler is running successfully.

ZenML

ZenML’s scheduling support comes via the orchestrators in your stack. Many ZenML orchestrator integrations (Kubeflow, AWS SageMaker Pipelines, Tekton, or even the Airflow Orchestrator) support Cron or interval triggers.

In ZenML, you can create a Schedule object for a pipeline that specifies a frequency (cron or interval), and it will register that with the chosen orchestrator.

For example, if you use the Kubernetes or Airflow orchestrator, ZenML will configure a Kubernetes CronJob or an Airflow DAG schedule behind the scenes.

In general, ZenML makes it straightforward to schedule pipelines, but the exact capabilities depend on the orchestrator. For many teams, this means you can use ZenML to define your pipeline once and then run it on a schedule using your preferred orchestration engine.

ZenML's decision to wrap existing orchestrator schedulers comes from its meta-orchestrator philosophy. This allows ZenML to inherit the robustness and features of underlying schedulers, without having to build and maintain its own complex scheduling engine.

The benefit is that you get a consistent ZenML API for scheduling while retaining the flexibility to choose your preferred underlying orchestrator, leading to simplified management and broader compatibility.

Prefect vs Airflow vs ZenML: Integration Capabilities

Integration capabilities determine how well the platform connects to data stores, compute resources, and other tools.

Prefect

Prefect is Kubernetes-native, which facilitates easy integration with cloud and container platforms. Any Kubernetes cluster can serve as its execution environment and use any cloud object storage, like S3 or GCS, for data persistence.

Prefect offers connectors for various tools across the ML lifecycle. These include integrations for:

- DataFrames: Hugging Face, Vaex, Polars

- Data Validation: Great Expectations, Pandera

- Model Training: AWS Sagemaker

- Model Deployment: ONNX, TensorFlow, PyTorch, Scikit Learn

- Monitoring: MLflow, Whylogs

Prefect's integrations are deeply tied to its Kubernetes-native architecture, making it a strong choice for teams already invested in or planning to adopt a Kubernetes-based infrastructure for their ML workloads.

Apache Airflow

Apache Airflow has a vast and mature integration ecosystem, primarily through its extensive provider packages, operators, and hooks. It is highly tool-agnostic and can orchestrate actions across various systems.

Airflow offers deep integration with major cloud platforms, including AWS, Google Cloud (GCP), and Microsoft Azure, with dedicated providers for services like SageMaker, Databricks, BigQuery, and Azure ML.

For ML/AI tools, it integrates with MLflow, Weights & Biases, Cohere, OpenAI, Weaviate, OpenSearch, and various vector databases, which allows your engineering team to automate the end-to-end model lifecycle.

Airflow also provides connectors for numerous databases and data systems, like PostgreSQL, MySQL, MSSQL, Oracle, and MongoDB, and robust data transfer capabilities.

ZenML

ZenML is designed to be integration-friendly at every layer. Its key abstraction is a ‘stack,’ where separate components for orchestration, artifact stores, metadata stores, and experiment trackers are plugged in.

ZenML has more than 50 built-in connectors across various MLOps categories, including orchestrators, artifact stores, container registries, experiment trackers, model deployers, and cloud infrastructure.

- Artifact Store: S3, Azure Blob Storage, GCS

- Cloud Infrastructure: AWS, Google Cloud, Azure

- Container Registry: Azure, Elastic, GitHub, Google Artifact Registry

- Data Visualization: Facets

- Experiment Tracker: Comet, MLflow, Neptune, Weights & Biases

- Orchestrator: Docker, HyperAI, Kubeflow, Modal, Tekton

Prefect vs Airflow vs ZenML: Pricing

Understanding the pricing models, including open-source availability and managed service options, is crucial for cost-effective MLOps platform selection, as it directly impacts the total cost of ownership and operational overhead.

Prefect

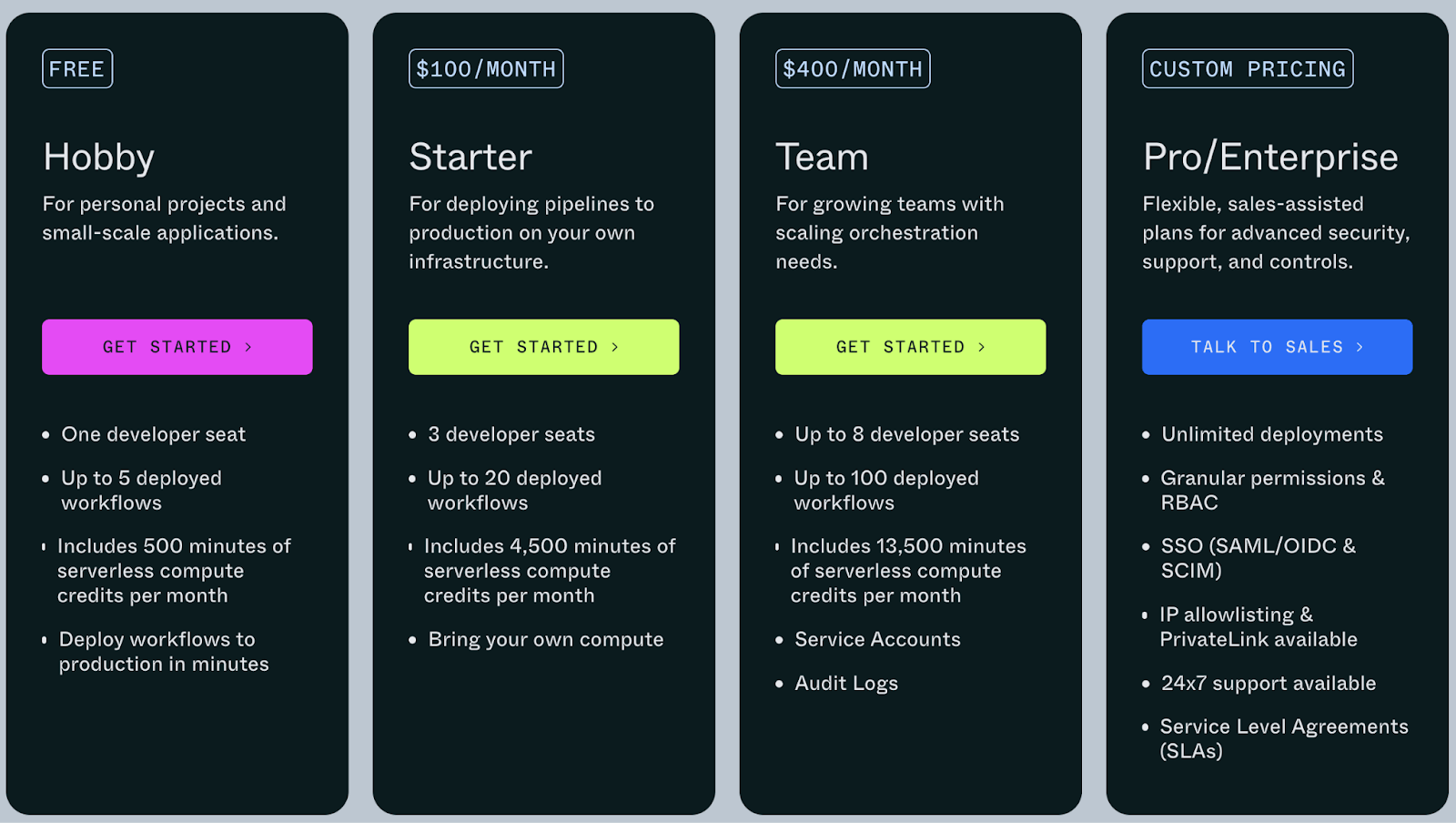

Prefect’s pricing is straightforward; it offers one free plan and three paid plans:

- Hobby: $0 per month - For personal projects and small-scale applications.

- Starter: $100 per month - For deploying pipelines to production on your own infrastructure.

- Team: $400 per month - For growing teams with scaling orchestration needs.

- Pro/Enterprise: Custom pricing - Flexible, sales-assisted plans for advanced security, support, and controls.

👀 Note: We have already written a guide that covers all the plans Prefect offers.

Apache Airflow

The open-source Airflow software is free to use under Apache 2.0. You can run it on your own servers without license fees. However, you do incur costs for compute, storage, and maintenance.

Managed Airflow services (AWS MWAA, Google Composer, Astronomer) charge based on usage. Airflow has no software cost, but you will have to pay for managed hosting or infrastructure.

ZenML

ZenML’s core framework is open-source and free. You can self-host the ZenML metadata store, container registries, etc., without license costs.

Apart from the open-source free plan, the platform offers a hosted control plane (ZenML Pro) for collaboration. Its pricing is transparent: the Basic Pro plan is $25 per month (1 workspace, 1 project, 1 pipeline, 30 runs).

The Scale plan is custom-priced and lets you customize everything - workspace, projects, pipelines, models, and pipeline runs per month.

The Scale plan also offers advanced deployment options, authentication, service accounts, compliance features, and more.

Prefect vs Airflow vs ZenML: Which One Should You Choose?

Selecting between Prefect, Airflow, and ZenML requires understanding your team's specific needs and constraints. Each platform excels in different scenarios.

✅ Choose Prefect when your team values Python-native development and needs dynamic workflows. Prefect shines for data engineering teams building complex pipelines with varying computational requirements. The platform's modern architecture and developer experience make it ideal for businesses starting fresh without legacy orchestration infrastructure.

✅ Choose Apache Airflow when you need battle-tested reliability and extensive integrations. Airflow's maturity and community support make it the safe choice for enterprise deployments. If your organization already uses Airflow for data pipelines, extending it for ML workflows leverages existing expertise and infrastructure.

✅ Choose ZenML when you want purpose-built ML pipeline orchestration with flexibility in execution backends. ZenML's ability to run on top of Airflow or other orchestrators while adding ML-specific features provides a unique value proposition. Teams focused on the end-to-end ML lifecycle from experimentation to production should give ZenML a try.

Many successful ML teams use combinations of these tools. You might use Airflow for data pipelines, Prefect for dynamic feature engineering workflows, and ZenML for model training and deployment orchestration. The key is choosing tools that complement your team's skills and integrate with your existing infrastructure.

Ready to turn your ML chaos into MLOps magic? Book a ZenML demo today and discover how to build production-ready pipelines in minutes, not months.

