You might have seen this happen: a training pipeline that should take two hours suddenly runs for sixteen. No clear logs. No obvious failure. Just...slower. Your team scrambles to debug, only to discover that someone changed a parameter three weeks ago and no one documented it. This is how data and ML teams break down: not through complex failures, but through quiet erosion.

The core problem isn't just execution; it's reproducibility, visibility, and control. When you can't clearly answer ‘what ran, why it ran, and how to run it again,’ you don't have a workflow system. You have technical debt with a cron job.

Prefect, Temporal, and ZenML each attack this problem from different angles. In this Prefect vs Temporal vs ZenML article, we explain how they get the job done.

Remember, this isn't about picking the ‘best’ tool. It's about understanding which architectural philosophy matches your team's actual problem. In this comparison, we break down how each tool works, what it optimizes, and where it fits in your stack.

Prefect vs Temporal vs ZenML: Key Takeaways

🧑‍💻 Prefect: A workflow orchestrator designed for data pipelines. It excels at scheduling, retries, and observability without forcing users into a DSL or heavy infrastructure. The framework does not provide ML-native lifecycle concepts such as model versioning or dataset lineage.

🧑‍💻 Temporal: A durable execution platform designed for backend services. It’s great at running mission-critical code that must run to completion, even if the server crashes or the network goes down. Temporal offers strong guarantees but requires teams to either operate a Temporal cluster themselves or depend on a managed service such as Temporal Cloud.

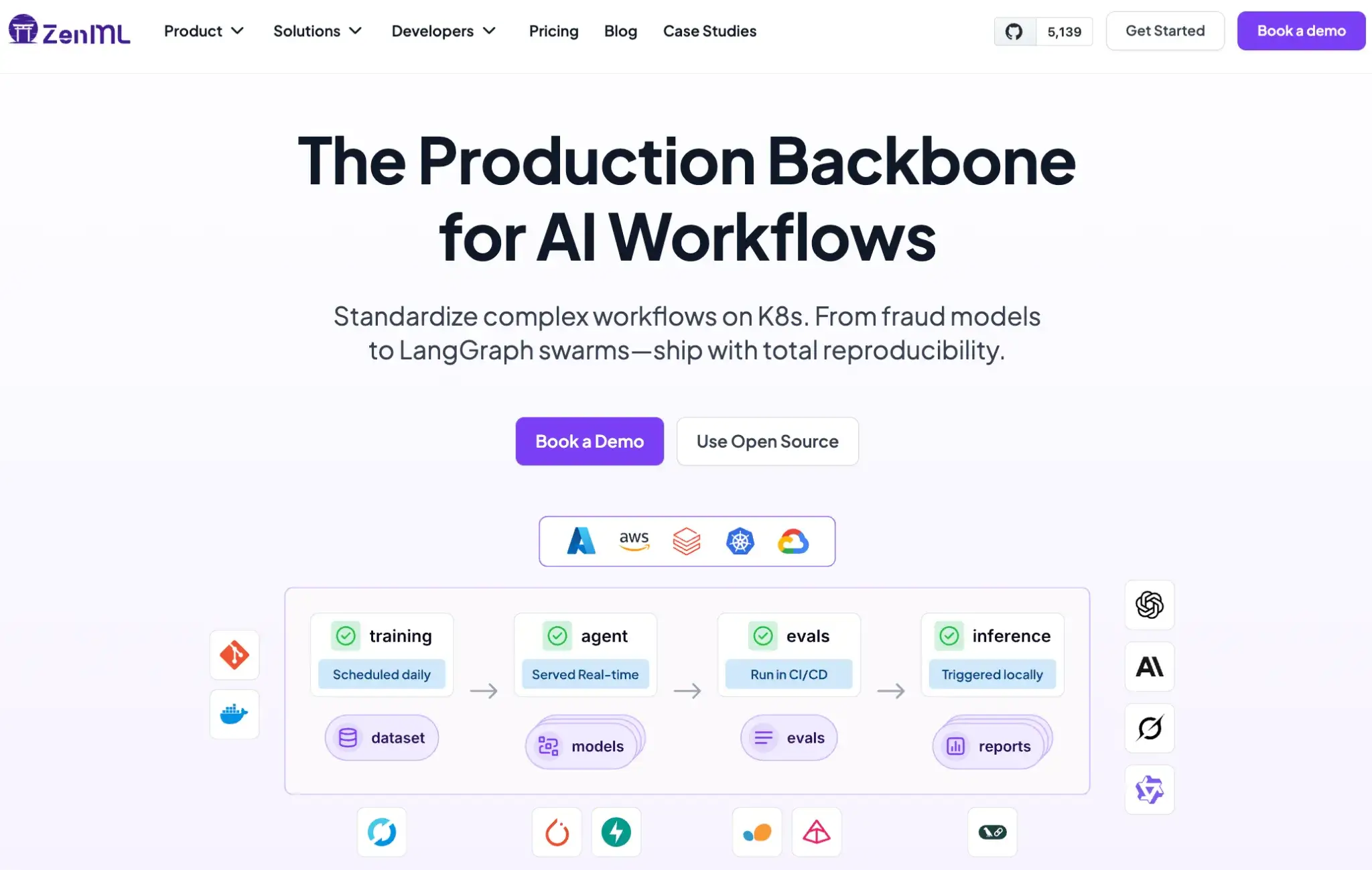

🧑‍💻 ZenML: An MLOps + LLMOps framework designed for machine learning lifecycles. It runs on top of existing orchestrators and manages metadata, artifacts, and lineage. Our product lets you write pipeline code once and deploy it across supported orchestrators and environments (e.g., Airflow, Kubeflow, Tekton, Kubernetes, SageMaker, Vertex AI, AzureML, and local).

Prefect vs Temporal vs ZenML: Features Comparison

While all three tools run code, the mechanisms they use are radically different. Let’s walk through the key features one by one, starting with each tool’s core abstraction.

Feature 1. Core Concept

Prefect

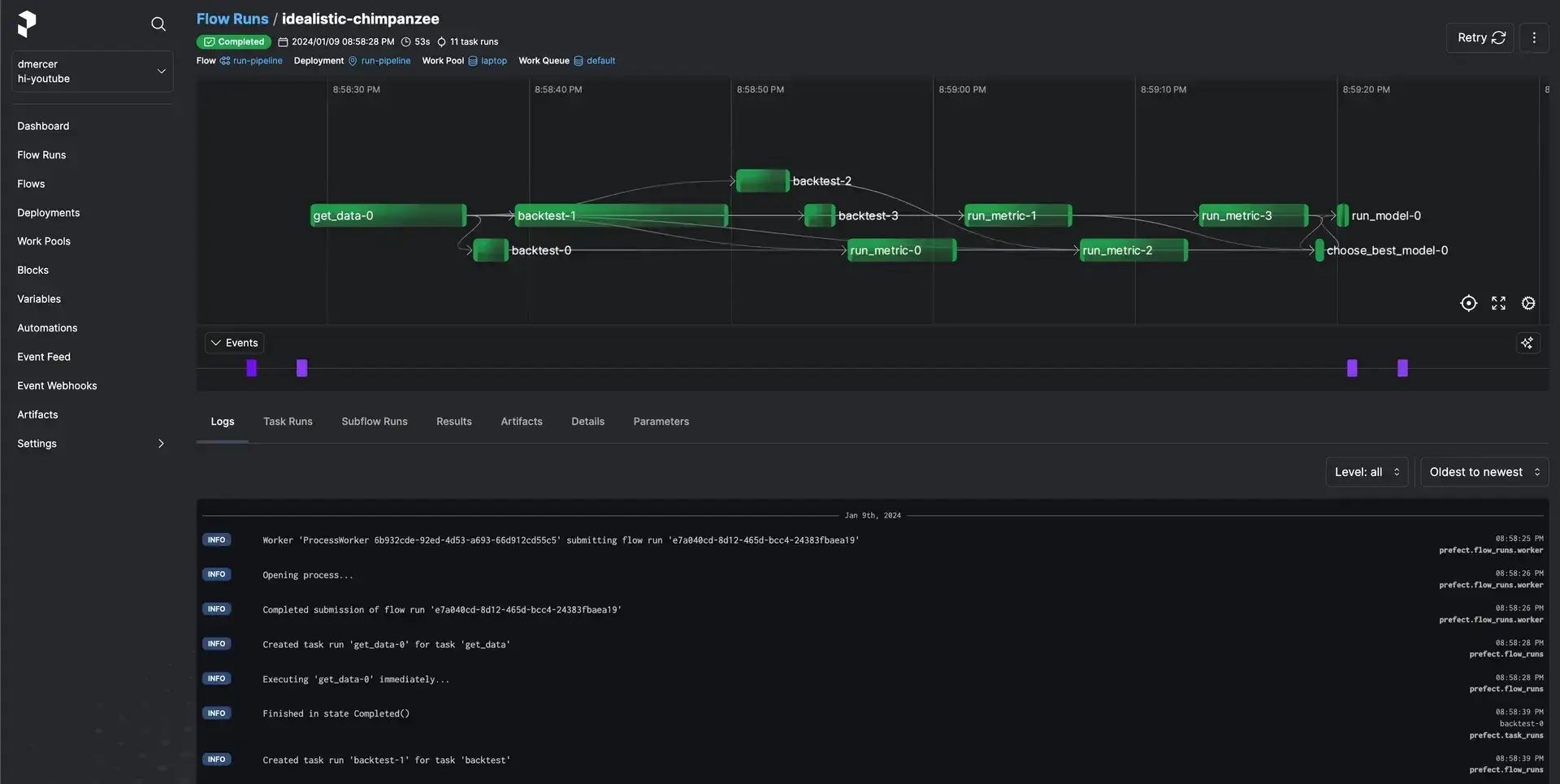

Prefect uses flows and tasks as its primary concepts. You write standard Python code and add the @flow and @task decorators to mark the orchestration logic. A function decorated with @flow encapsulates a workflow, and functions decorated with @task are the smaller units of work inside that flow.

For example, a simple Prefect workflow might be:

In this snippet, say_hello is a task and greeting_flow is the flow orchestrating it. Prefect tracks execution order and run state, and features like retries and caching are switched on through task options, all without requiring you to rewrite your logic in a DSL (domain-specific language).

Temporal

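Temporal uses workflows and activities. Unlike Prefect’s plain decorated functions, these are defined through a Temporal SDK: in Python, a workflow is typically a decorated class with a run method, and activities are decorated functions.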

Think of a workflow as the master sequence and activities as its steps. Activities are similar to tasks but more strongly isolated: they run on workers that can live on different machines, and they can even be written in a different language from the workflow.

Using Temporal’s Python SDK, for example, one would write something like:

Here, GreetingWorkflow.run is the workflow definition, and say_hello is an activity. Temporal records the workflow’s progress as an event history, one event at a time, so after a failure a worker can replay that history and resume the workflow exactly where it left off.

In other words, durability is built in. You write your workflow as if the process running it will never crash, and Temporal ensures that crashes, restarts, and network outages don’t lose its progress. It’s a powerful model, though it requires more engineering setup than the other tools.
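To make the replay idea concrete, here is a toy, stdlib-only model of event-sourced execution. It is a deliberate simplification for illustration, not how Temporal is implemented: completed step results are appended to a history list, and a re-run after a simulated crash reuses the recorded results instead of executing those steps again. All names are hypothetical.

```python
from typing import Callable, List, Optional


class ToyDurableRun:
    """Toy model of event-sourced replay (illustration only, not Temporal's internals)."""

    def __init__(self, history: Optional[List[str]] = None) -> None:
        # The history stands in for the event log a durable backend would persist.
        self.history = history if history is not None else []
        self.executed: List[str] = []  # steps actually executed during this attempt
        self._cursor = 0

    def activity(self, name: str, fn: Callable[[], str]) -> str:
        if self._cursor < len(self.history):
            # Replay: this step already completed, so reuse the recorded result.
            result = self.history[self._cursor]
        else:
            # New step: execute it and record the result before moving on.
            result = fn()
            self.executed.append(name)
            self.history.append(result)
        self._cursor += 1
        return result


def training_workflow(run: ToyDurableRun, crash_before: Optional[int] = None) -> List[str]:
    results = []
    for i, step in enumerate(["extract", "train", "deploy"]):
        if crash_before == i:
            raise RuntimeError("worker crashed")  # simulated process failure
        results.append(run.activity(step, lambda s=step: f"{s}: done"))
    return results


if __name__ == "__main__":
    history: List[str] = []
    try:
        training_workflow(ToyDurableRun(history), crash_before=2)
    except RuntimeError:
        pass
    resumed = ToyDurableRun(history)
    print(training_workflow(resumed))  # → ['extract: done', 'train: done', 'deploy: done']
    print(resumed.executed)  # → ['deploy']
```

Replay like this is only safe if the workflow code is deterministic, which is why Temporal asks you to keep non-deterministic work (network calls, randomness, clocks) inside activities.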

ZenML

In ZenML, the central concept is an ML pipeline composed of one or more steps. You define a pipeline with the @pipeline decorator and each step with @step, much like Prefect’s flows and tasks, but with a focus on ML use cases.

Each step is a Python function that performs one task, such as data preprocessing, model training, or evaluation. ZenML passes each step’s outputs to the inputs of downstream steps and automatically tracks them as artifacts.

Here’s a basic example:

When you run basic_pipeline(), ZenML orchestrates the execution of basic_step and stores its output in an artifact store behind the scenes.

ZenML automatically tracks metadata for each pipeline run. Thanks to this ML-centric design, ZenML’s pipeline and step abstractions carry more built-in context, such as dataset and model versioning, than the generic task abstractions in Prefect or Temporal.

Bottom Line:

- Prefect wins for general Python automation due to its simplicity and low barrier to entry.

- Temporal is the clear winner for backend reliability, where systems must recover from failures seamlessly.

- ZenML leads the way for ML teams by treating data and models as first-class citizens rather than mere side effects of code execution.

Feature 2. Scheduling and Triggers

Scheduling determines when workflows run and what triggers them, which makes it a core capability for MLOps pipelines. All three tools support scheduling, but each handles it differently.

Prefect

Prefect treats scheduling as a first-class citizen. You can define schedules in your deployment configuration (YAML or Python) using cron strings, intervals, or RRules.

Prefect also supports event-driven scheduling, where a flow runs in response to a webhook or an internal event. These are managed through deployments and visible in the UI.

This means you could schedule a pipeline to run daily at 9 AM and also configure it to run when a new file lands in cloud storage or when an API call is received. For most data and training jobs, this covers daily retraining, backfills, and ad hoc runs without additional systems.

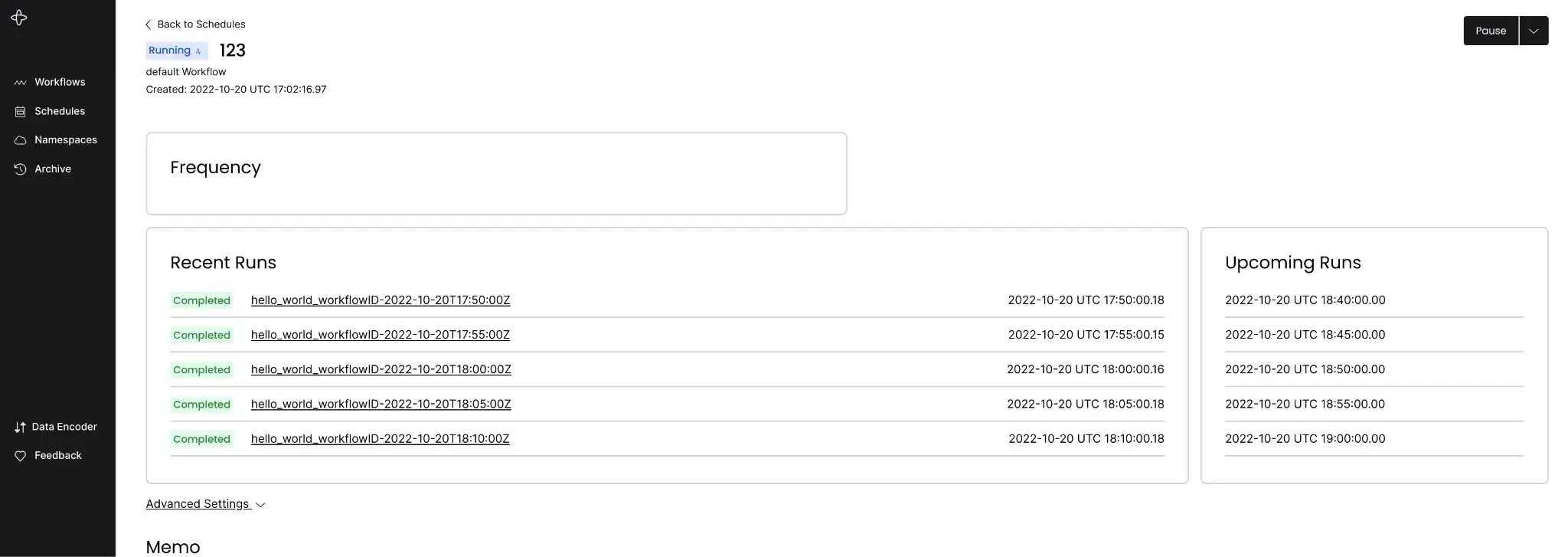

Temporal

Temporal includes native Schedules for time-based workflows. Unlike a standard external cron system, its built-in scheduler is durable and state-aware. Inside a workflow, you can also use durable timers (workflow sleeps) to wait for long periods without relying on external cron jobs.

Workflows can wait for external input, resume as soon as it arrives, and retry failed steps without losing state. The Temporal server also manages scheduled executions itself, so you can pause, backfill, or update schedules programmatically.

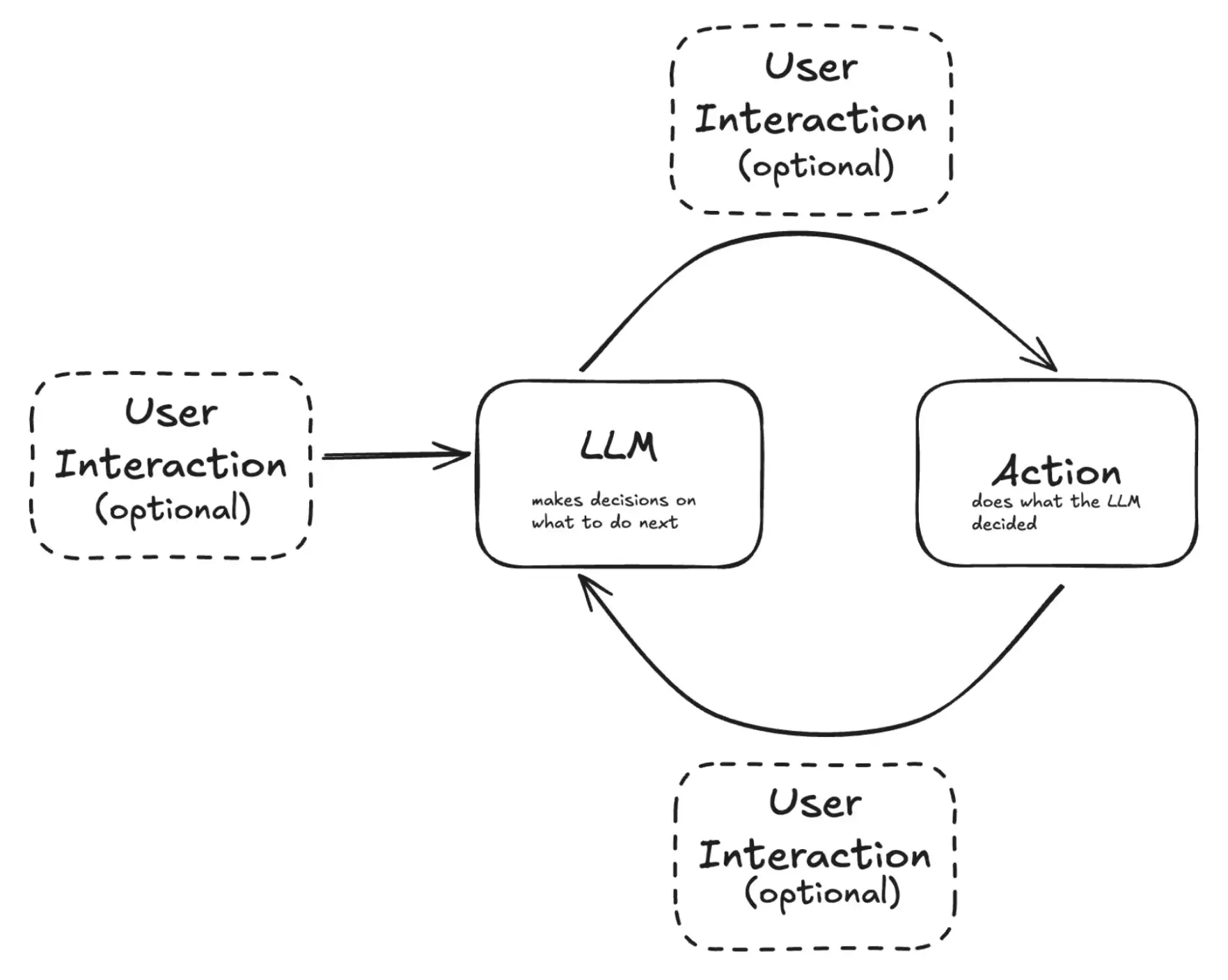

Apart from time-based schedules, event-driven triggers in Temporal usually mean starting a workflow from client code or sending a signal to a running workflow; signal-with-start combines the two. This signal mechanism is how Temporal supports event-driven behavior and human-in-the-loop patterns.

ZenML

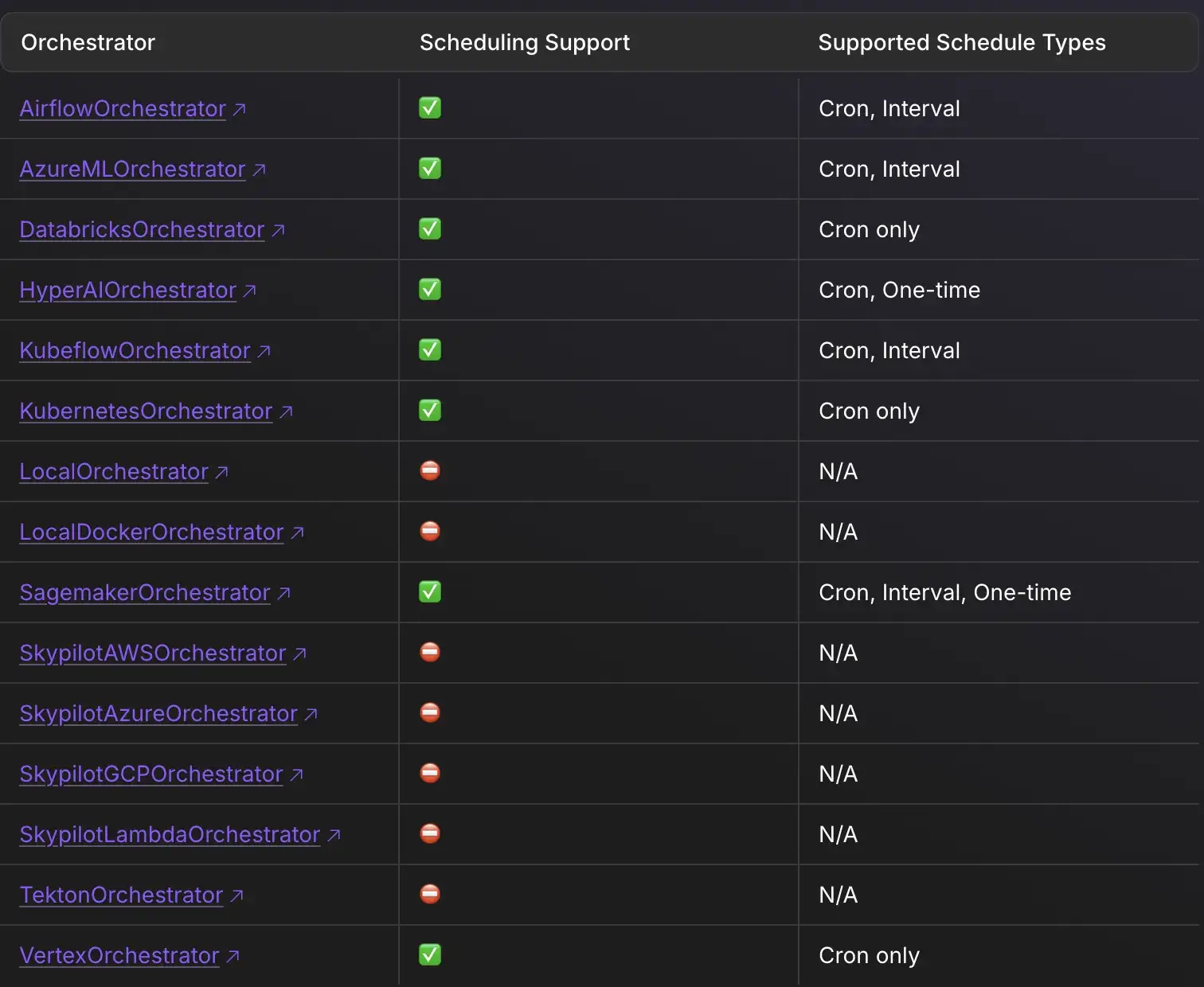

ZenML is an abstraction layer. It does not include a native scheduling engine; it delegates scheduling to the underlying orchestrator.

- If you run ZenML on Airflow, it creates an Airflow DAG with the schedule you defined.

- If you run on Kubeflow, it creates a Recurring Run.

This gives you the flexibility to use the best scheduler for the job while keeping your pipeline logic independent.

Several other orchestrators that ZenML supports can also run scheduled pipelines, each through its own native scheduling mechanism.

This is a conscious design choice to avoid duplicating what orchestrators already do well, and it works in your favor: you get ZenML’s pipeline abstraction and tracking, while triggering runs through whichever mechanism fits, whether that’s a cron schedule, CI, or a manual run.

Feature 3. Human-in-the-Loop

Prefect

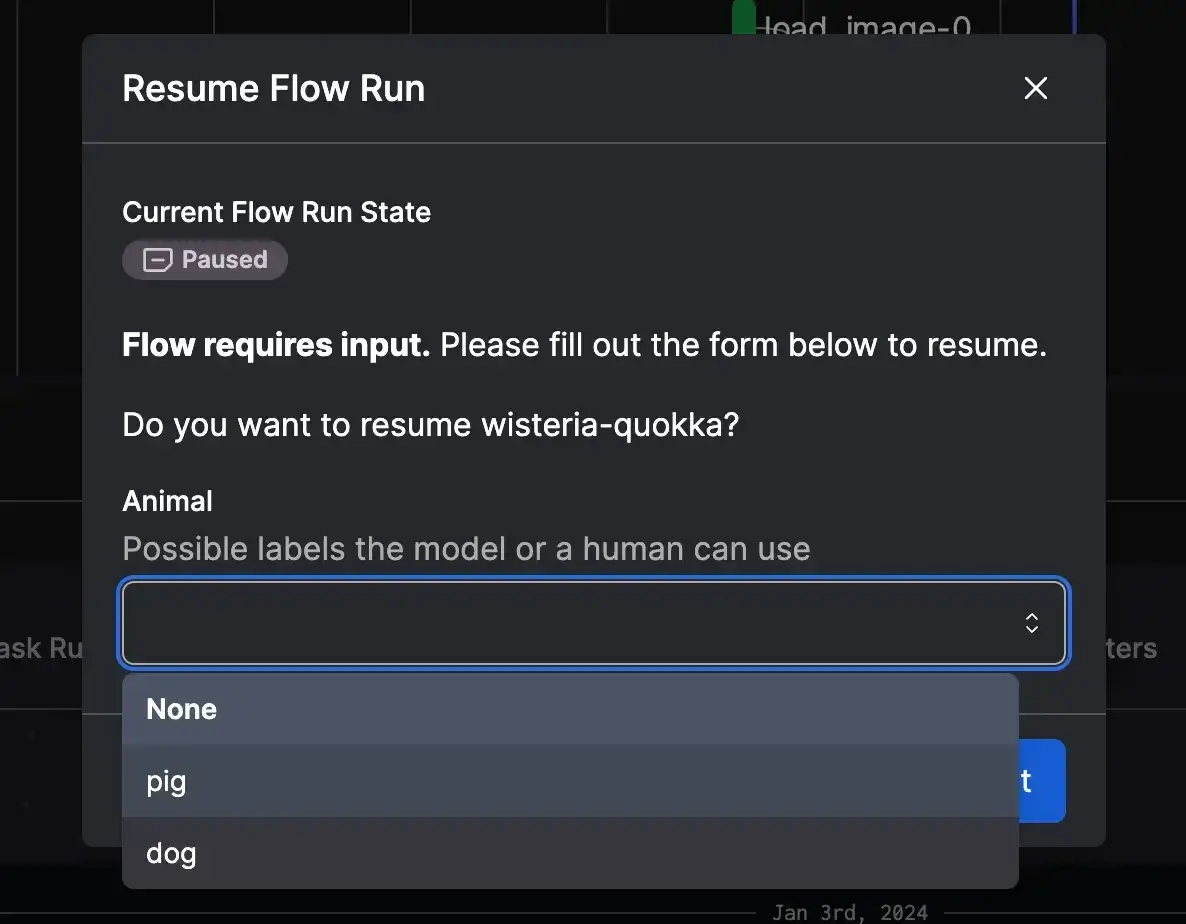

Prefect supports interactive workflows. You can pause a flow run, either explicitly or through state-based logic, and then resume it via the UI or API. In practice, you might implement a human-in-the-loop step by pausing the run until someone approves it, or by having a task check an approval flag. While not as out-of-the-box as specialized BPM tools, Prefect makes human-in-the-loop feasible with a bit of custom logic.

Temporal

Temporal is built for long-running workflows, including those that involve humans. A workflow in Temporal can wait indefinitely for a signal. For example, you can build an approval workflow that sends a notification to the approver, then calls workflow.wait_condition() and patiently waits for a signal.

Because the Temporal server retains the workflow’s state, the workflow can stay dormant for a long time without tying up worker resources. This makes Temporal a good choice for long-running business processes.

It’s less UI-centric than Prefect but extremely robust programmatically.

ZenML

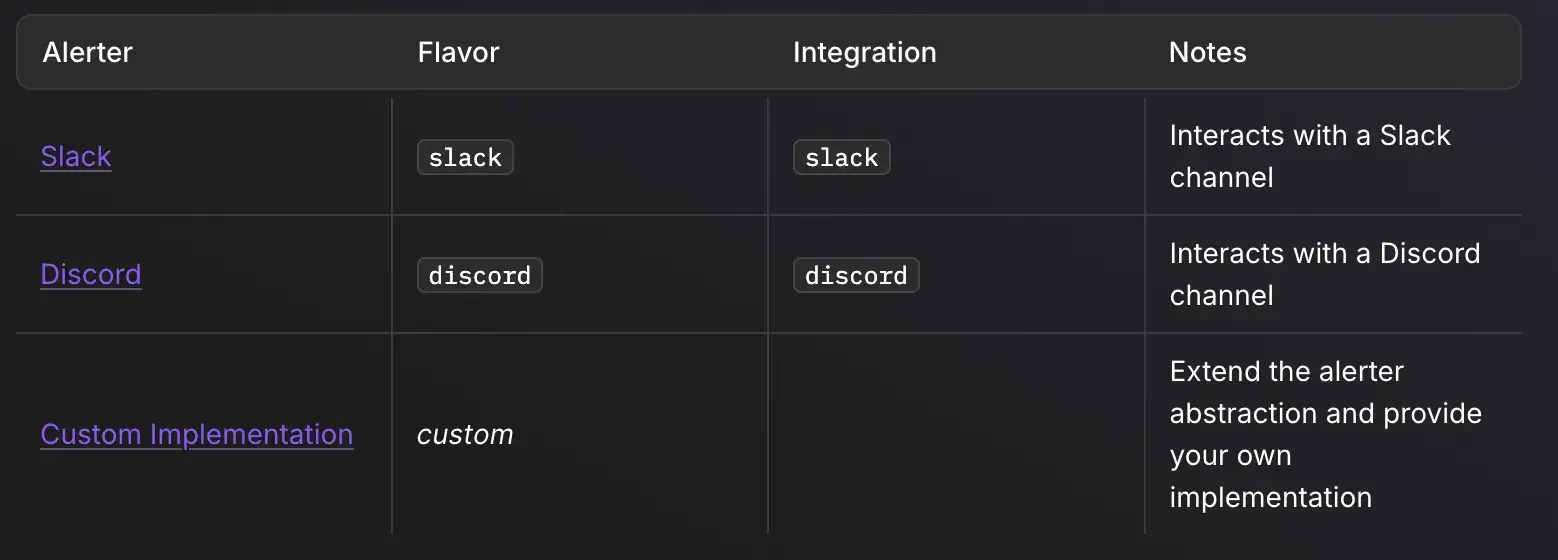

ZenML approaches human-in-the-loop from an integration perspective. A ZenML pipeline won’t natively pause itself for a human unless the underlying orchestrator or an external system provides that capability, but you can still add steps that bring a human into the process.

For example, you can write a step that sends a Slack or Discord message asking for approval and then polls for a response or receives a callback. Because steps are ordinary Python and ZenML focuses on workflow portability, you can add this kind of human interaction at any pipeline stage, using the tools you prefer.

Prefect vs Temporal vs ZenML: Integration Capabilities

An orchestrator is only as good as the tools it connects with. Especially in data/ML workflows, integration with external systems is crucial. This is an area where each of these tools differs significantly:

Prefect

Prefect offers a rich library of integrations with cloud platforms and services like AWS, GCP, Azure, Kubernetes, Snowflake, dbt, Slack, GitHub, and more.

These come with pre-built Prefect tasks or blocks that make it easy to interact with those systems. For example, using prefect-aws, you can quickly integrate an S3 upload or AWS Lambda invocation as a task in your flow.

Prefect’s Blocks provide a way to store configuration for those integrations via the UI or CLI, so you can reuse them securely across flows.

Temporal

Temporal takes a more agnostic, code-first approach to integrations. There is no official, first-party connector catalog in Temporal’s SDK; instead, you integrate by writing Activities that call whatever external service or library you need.

This means Temporal can integrate with anything you can code against, but you have to implement the integration logic.

ZenML

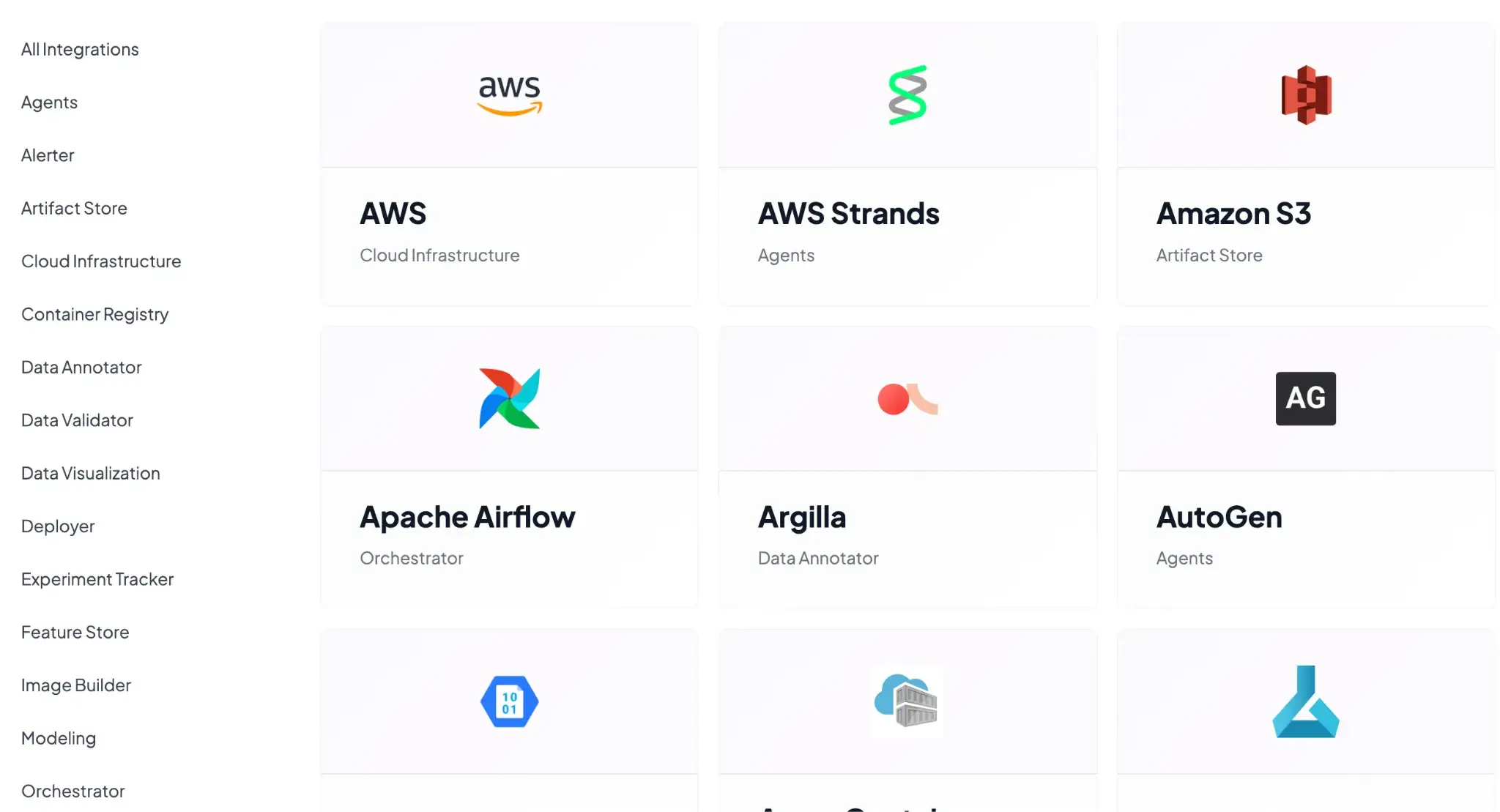

ZenML is built around the concept of a stack: a set of swappable infrastructure components. It integrates with most widely used tools across the MLOps lifecycle and many in the LLMOps space. To name a few:

- Orchestrators: Airflow, Kubeflow, Tekton

- Experiment Trackers: MLflow, Weights & Biases

- Model Deployers: KServe, Seldon

- Step Operators: SageMaker, Vertex AI

You can swap these components with a configuration change instead of a code change. One caveat: the stack configuration covers the component, not your step code. If a step calls a tracker’s API directly (for example, mlflow.log_metric), switching the experiment tracker also means updating that logging code to match the new tracker.

Prefect vs Temporal vs ZenML: Pricing

All three tools are open source. Self-hosting is free, but managed cloud services are available with varied pricing plans.

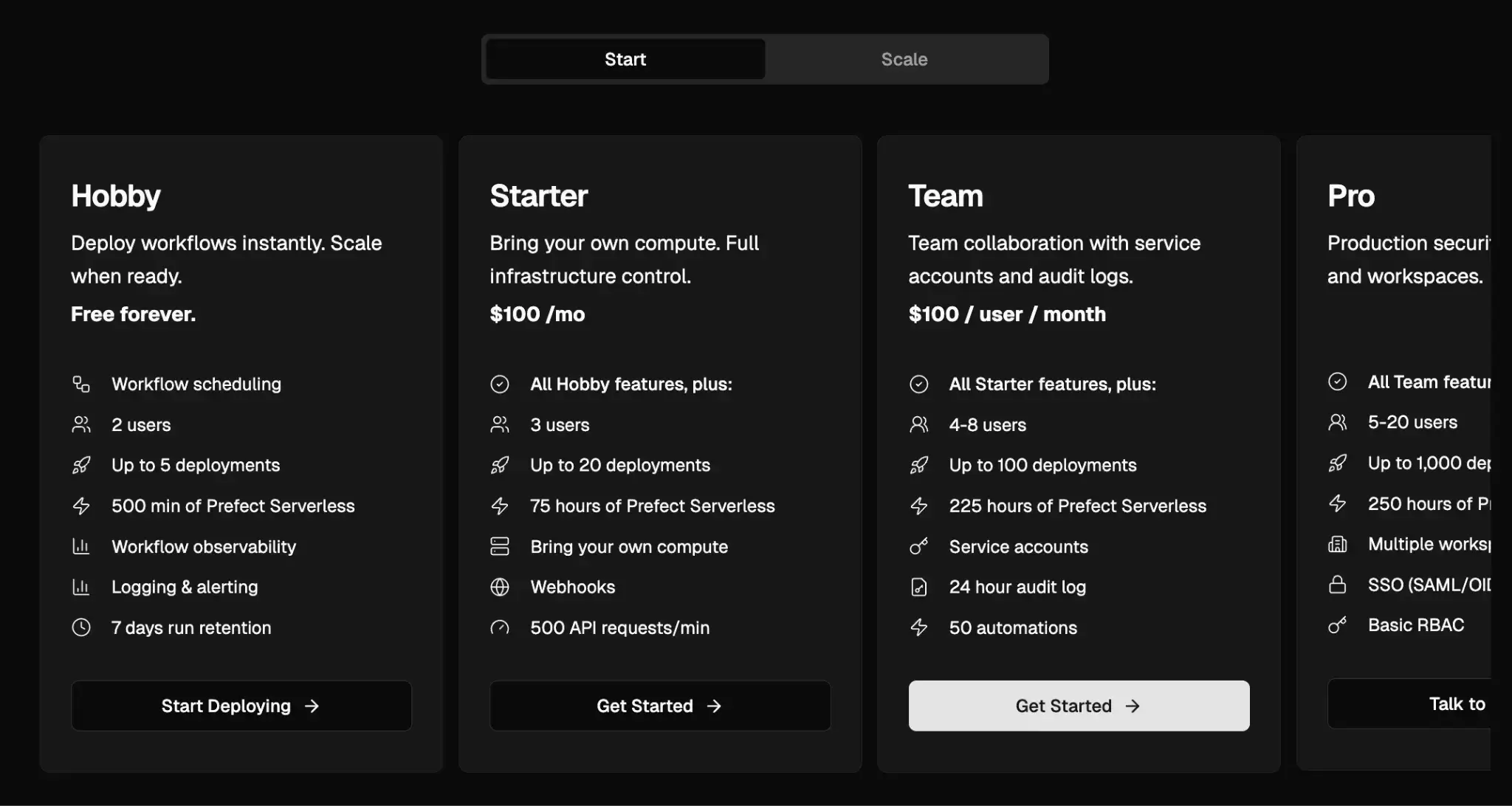

Prefect

Prefect offers a free forever plan for hobbyists and solo developers. Other than that, it has five paid plans:

- Starter: $100 per month (up to 3 users)

- Team: $100/user per month

- Pro: Custom pricing

- Enterprise: Custom pricing

- Managed: Custom pricing

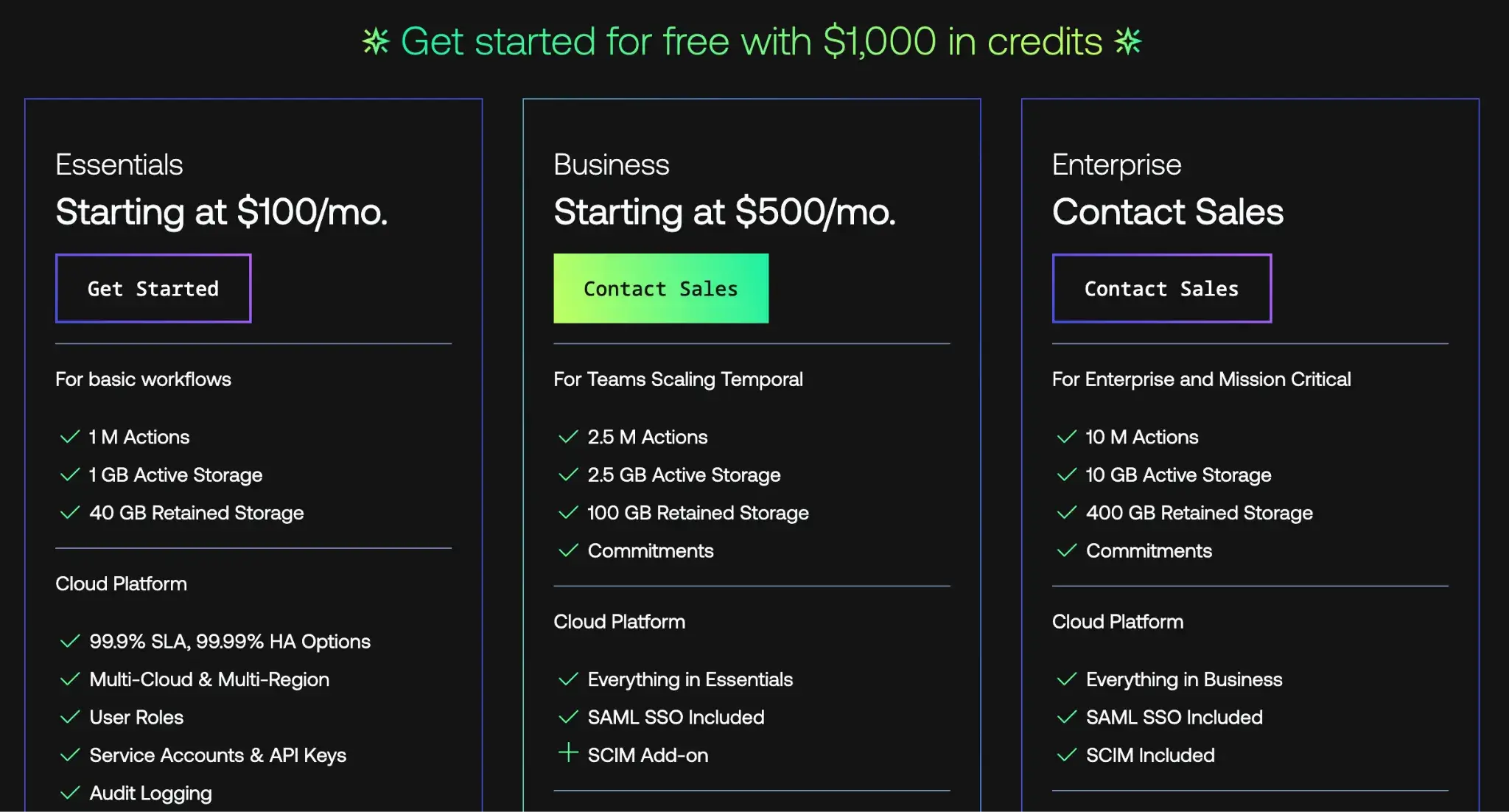

Temporal

Temporal has a free open-source version and three paid plans:

- Essentials: Starting at $100 per month

- Business: Starting at $500 per month

- Enterprise: Custom pricing

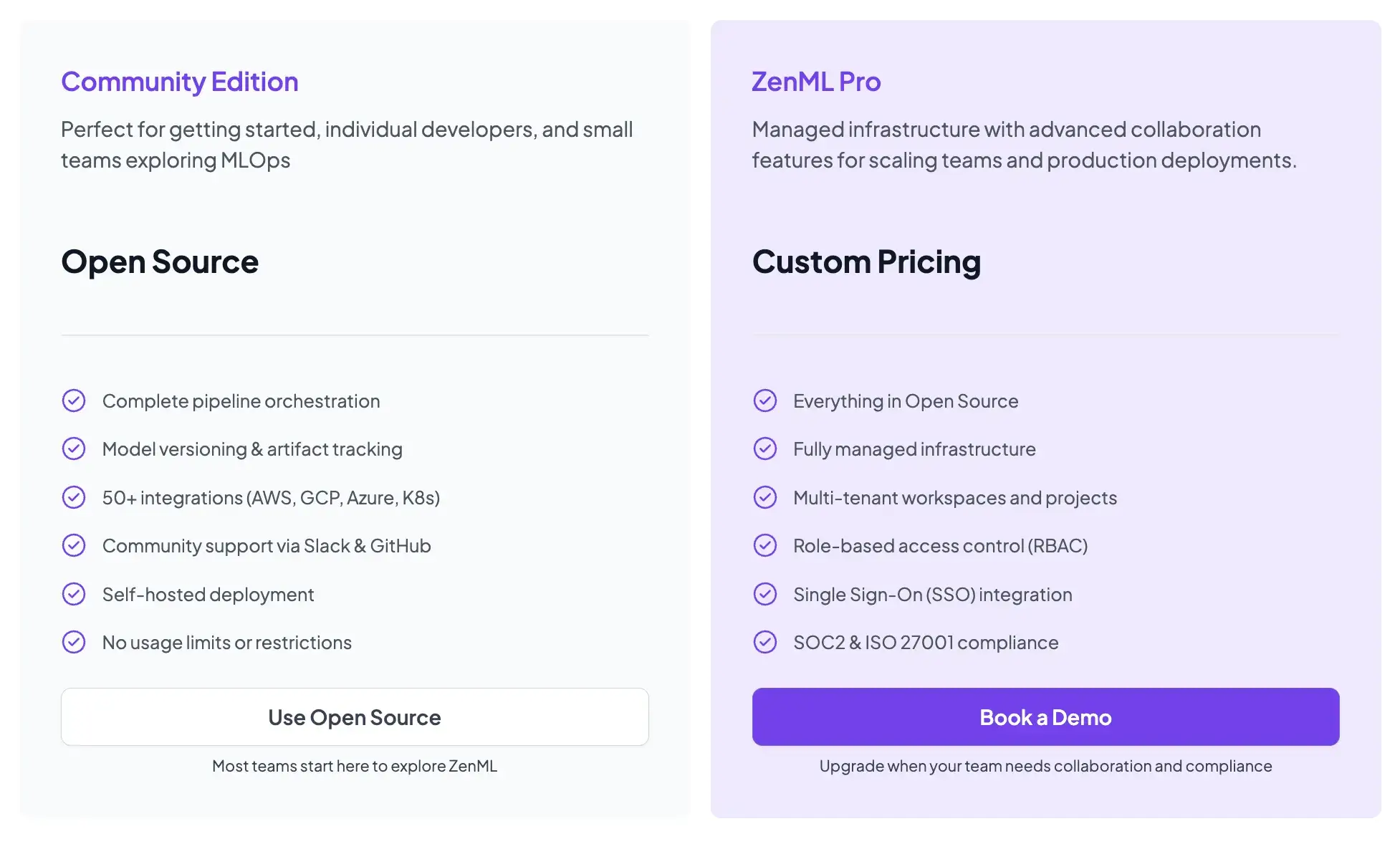

ZenML

ZenML is also open-source and free to start.

- Community (Free): Full open-source framework. You can run it on your own infrastructure for free.

- ZenML Pro (Custom pricing): A managed control plane that provides the dashboard, user management, RBAC, stack configuration, and enterprise features.

How ZenML Helps You Control the MLOps Outer Loop

Moving code from the inner loop (local experimentation) to the outer loop (production deployment and operations) is one of the biggest bottlenecks in MLOps.

It typically requires rewriting Python scripts into glue code. You end up writing Airflow DAGs, Dockerfiles, or Kubernetes manifests. This adds friction and slows down the deployment cycle.

ZenML automates this transition. It gives you control over the Outer Loop. Here’s how:

- Infrastructure as Configuration: You run pipelines using a Local Stack in the inner loop. You switch your configuration to a Production Stack when you are ready for production. ZenML handles the translation logic automatically. You do not rewrite your code.

- Automated Containerization: The Outer Loop demands reproducibility. This usually requires Docker containers. ZenML can automatically build Docker images for pipeline steps, with the option to customize images when needed.

- CI/CD and Event-Driven Deployment: CI/CD systems or event-driven schedulers can trigger ZenML pipelines. You can set up rigorous outer loop policies. For example, you might want to retrain a model every time a new dataset arrives in S3. You might want to promote a model to production only if accuracy exceeds 90%. You define this using standard Python logic rather than complex orchestrator-specific DSLs.

- Auditability via Lineage: The Outer Loop requires strict governance. ZenML tracks the inputs, outputs, and parameters of every step automatically. You get a complete audit trail for your production models. If a production model fails, you check the ZenML dashboard to see exactly which data slice and code version created it.


Which One’s the Best MLOps Framework for Your Business?

The choice between Prefect, Temporal, and ZenML depends on your primary purpose:

- Prefect is the right choice when your problem is orchestration, not ML lifecycle management. If your pipelines move data and trigger training jobs, Prefect will do that cleanly.

- Temporal is the right choice when workflow correctness matters more than developer speed. If losing state is unacceptable and workflows span services and humans, Temporal is hard to beat.

- ZenML is the right choice when the problem is not running code, but understanding ML systems over time. If your team needs to answer which data produced which model, or move pipelines across environments without rewrites, ZenML fits naturally.

ZenML acts as the bridge. It allows you to leverage the orchestration power of tools like Airflow, Kubeflow, and many more, while providing the dedicated experiment tracking and model management interface that ML teams desperately need.