Selecting the right framework for multi-agent AI can determine how effectively your AI agents operate in practice. CrewAI and AutoGen are two top open-source frameworks you can use to build AI agents, each with a distinct philosophy.

Both are powerful in their own right – the best choice depends on whether you prioritize defined workflow control or dynamic emergent collaboration in your AI applications.

In this CrewAI vs AutoGen article, we break down the key differences between the two frameworks across features like orchestration, workflow design, prompting style, and human-in-the-loop support. We’ll also discuss how our platform, ZenML, can help you leverage either framework in production.

CrewAI vs AutoGen: Key Takeaways

🧑‍💻 CrewAI is a role-based orchestration framework designed to make autonomous AI agents collaborate as a structured ‘crew.’ It’s built on the analogy of a human team, where agents have specific roles, goals, and backstories that define their behavior.

🧑‍💻 AutoGen is a flexible, conversation-driven framework from Microsoft that enables multi-agent workflows through automated chat. It treats agent interactions as a dynamic conversation where multiple agents, including proxies for humans and tools, can contribute.

Framework and Maturity

The maturity and development history of CrewAI and AutoGen provide important context for adoption decisions:

👀 Note: The data in the table above is current as of August 2025 (metrics may change over time).

CrewAI is a newcomer. Its first tag landed in November 2023, yet it now averages over 1.3 million installs per month on PyPI and has crossed 35K stars across ∼1.6K commits. That growth pace signals fast production uptake for multi-agent workflows.

AutoGen dates back to October 2019. It has matured under Microsoft stewardship with 48K+ stars, 7.4K forks, and ~3.7K commits. Monthly install volume sits near 100K, suggesting a research-heavy but steady user base.

Before we go feature-by-feature, here’s a quick comparison overview of CrewAI and AutoGen:

CrewAI vs AutoGen: Features Comparison

Here’s a TL;DR of the feature comparison for CrewAI and AutoGen:

To understand how CrewAI and AutoGen differ, we compare them across several core features. Each feature highlights a different facet of building AI agent systems, from how multiple agents are orchestrated to how humans can be kept in the loop.

Feature 1. Multi-Agent Orchestration

Multi-agent orchestration refers to how each framework manages and coordinates a team of agents working together on tasks. CrewAI and AutoGen take fundamentally different approaches to orchestrating agents:

CrewAI

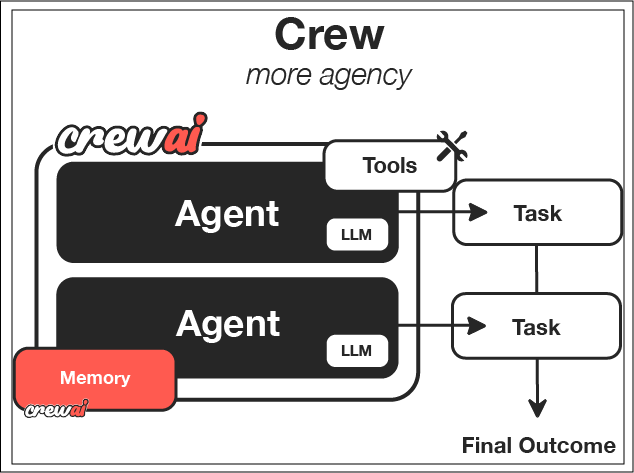

CrewAI organizes agents into groups called ‘crews’ that work together under a defined workflow. The framework uses an event-driven orchestration engine to manage agent interactions.

You start by explicitly defining a sequence or hierarchy of tasks, then assign each task to a specific agent role. CrewAI’s runtime ensures each agent does its part in order. This structured approach is akin to a project manager assigning duties to team members.

Each agent in a crew has a designated role (for example, Researcher, Writer, Validator) and will only handle tasks relevant to that role. CrewAI emphasizes this team analogy – it encourages you to think of agents as collaborative team members working together on a mission.

Because of this design, CrewAI excels at predefined workflows where the steps to solve a problem are known in advance. You get fine-grained control over coordination: you decide when agents should communicate, what information they exchange, and how to handle their outputs.

The event-driven model also allows for complex control flows (conditional branches, parallel tasks, etc.) while keeping the order of execution predictable.

In short, CrewAI’s orchestration is top-down: the developer orchestrates how agents will cooperate via the crew and flow definitions.
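The top-down pattern can be sketched in plain Python. This is a framework-agnostic illustration, not CrewAI’s API: the `Agent`, `Task`, and `run_crew` names are hypothetical, and each agent’s `work` function stands in for an LLM call.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Agent:
    """Hypothetical stand-in for a role-based agent (not the CrewAI class)."""
    role: str
    work: Callable[[str], str]  # placeholder for an LLM call


@dataclass
class Task:
    description: str
    agent: Agent


def run_crew(tasks: List[Task], initial_input: str) -> List[str]:
    """Run tasks in the order the developer defined, feeding each output forward."""
    outputs: List[str] = []
    context = initial_input
    for task in tasks:
        context = task.agent.work(context)
        outputs.append(f"{task.agent.role}: {context}")
    return outputs


researcher = Agent("Researcher", lambda text: f"notes on {text}")
writer = Agent("Writer", lambda text: f"draft from {text}")
validator = Agent("Validator", lambda text: f"approved {text}")

crew_log = run_crew(
    [
        Task("Gather facts", researcher),
        Task("Write article", writer),
        Task("Check article", validator),
    ],
    "multi-agent frameworks",
)
```

The task list fixes the order of execution, so the Validator never runs before the Writer has produced a draft.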

AutoGen

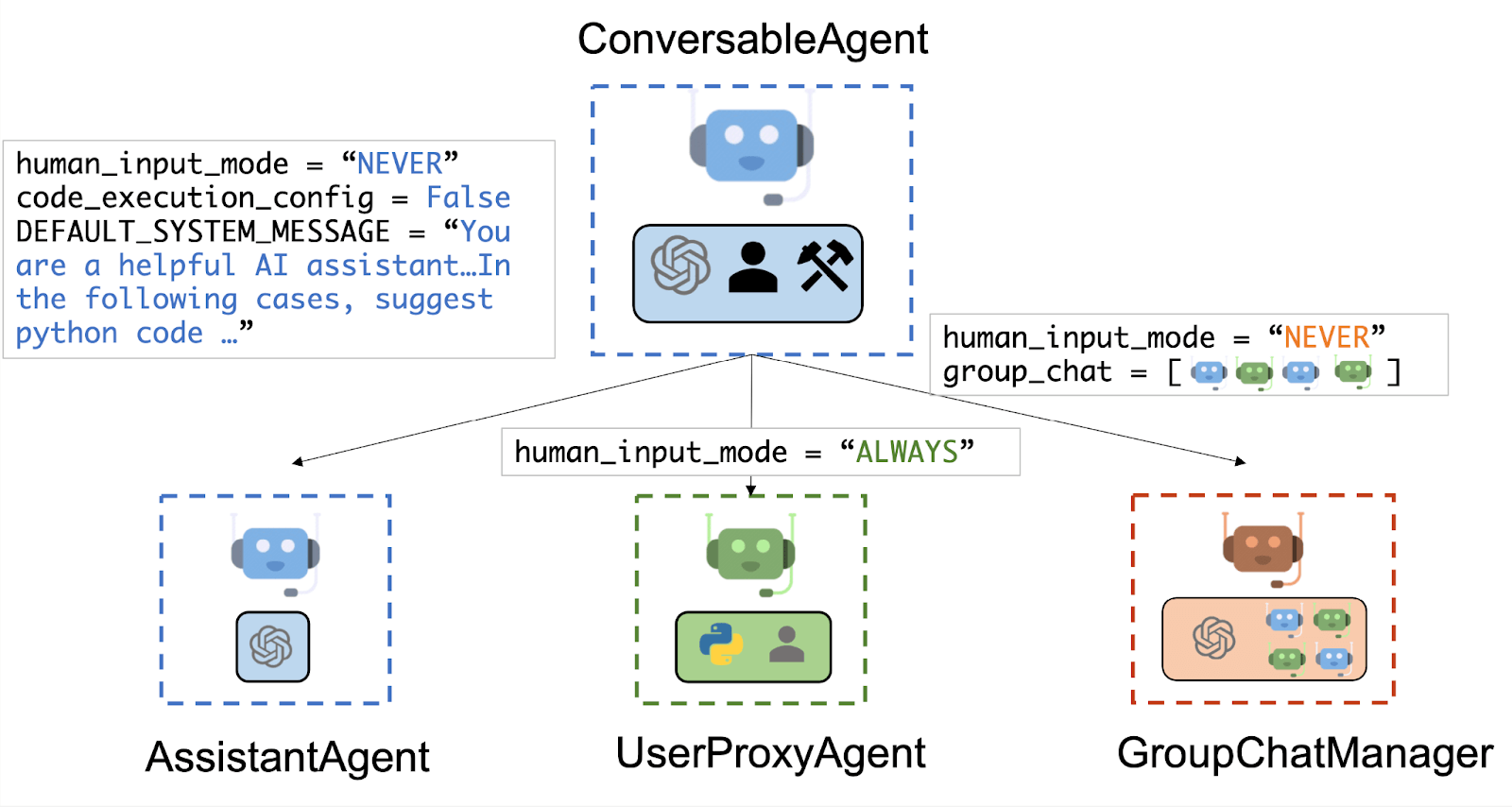

AutoGen approaches orchestration in a more organic, conversational way. It exposes an AgentChat abstraction where any number of agents (and even human users) converse in a shared session to solve a task.

There isn’t a fixed sequence of tasks; instead, each agent decides when to speak (i.e., produce an output) based on the ongoing conversation. One agent might ask another a question, or multiple agents can brainstorm together by exchanging messages.

This is a bottom-up, emergent form of orchestration: the overall solution arises from the dialogue between agents.

With AutoGen, you typically instantiate several agent instances, each possibly with a different role or specialty, and start a chat session with them. The framework handles the message passing and can incorporate rules about turn-taking or termination conditions.

For example, you might have a Solver agent and a Critic agent that talk to each other until they agree on an answer. There is no central ‘manager’ agent by default (though you could include one as part of the chat); coordination happens via the conversation itself.

This makes AutoGen quite flexible – agents can dynamically decide to follow up, ask for clarification, or attempt different solutions all within the chat loop. The trade-off is that you relinquish some direct control over the exact sequence of events.

AutoGen’s orchestration shines in scenarios where the problem is open-ended or the steps to solve it aren’t predetermined.
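As a rough illustration of conversation-driven orchestration, here is a minimal sketch of the Solver and Critic exchange in plain Python. It does not use AutoGen’s API: `run_chat`, the round-robin turn-taking, and the `APPROVE` termination keyword are assumptions made for this example, and the two responder functions stand in for LLM-backed agents.

```python
from typing import Callable, List, Tuple

Message = Tuple[str, str]  # (speaker, text)
Responder = Callable[[List[Message]], str]


def solver(history: List[Message]) -> str:
    """Propose an answer; revise it if the critic objected on the previous turn."""
    if history and history[-1] == ("Critic", "REVISE"):
        return "answer: 4"
    return "answer: 5"


def critic(history: List[Message]) -> str:
    """Approve the correct answer, otherwise ask for a revision."""
    return "APPROVE" if history[-1][1] == "answer: 4" else "REVISE"


def run_chat(agents: List[Tuple[str, Responder]], task: str, max_turns: int = 10) -> List[Message]:
    """Round-robin turn-taking until an agent says APPROVE or turns run out."""
    history: List[Message] = [("User", task)]
    for turn in range(max_turns):
        name, respond = agents[turn % len(agents)]
        text = respond(history)
        history.append((name, text))
        if text == "APPROVE":  # termination condition
            break
    return history


transcript = run_chat([("Solver", solver), ("Critic", critic)], "What is 2 + 2?")
```

No step sequence is declared anywhere: the number of turns depends on what the agents say, and only the termination condition and `max_turns` bound the run.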

Feature 2. Workflow Authoring Interface

This feature addresses how developers create and define the agent workflows or conversations. A good authoring interface can greatly improve productivity and clarity when building multi-agent systems.

CrewAI

CrewAI is a code-first framework. The primary way to define agents and their workflows (called Flows in CrewAI) is through Python code and configuration files.

You either write your own agent classes or use the provided abstractions (Agent, Task, Crew, etc.) to set up roles and tasks. CrewAI also provides tooling to simplify this process: YAML template scaffolding and a CLI tool that generates project templates. This approach suits experienced developers: every step of the workflow is visible in your code, and version control for YAML/py files is straightforward.

There is also a Crew Studio (particularly in the enterprise version), which includes a visual task builder and other UI aids for designing workflows. But generally, expect to interact with CrewAI via code and text-based configs.

The main drawback is a steeper learning curve if you’re not used to coding agent logic; you have to understand CrewAI’s abstractions (agents, tasks, crews, flows) and possibly LangChain integrations.

CrewAI’s philosophy in authoring is “explicit is better than implicit” – you define as much as possible up front (agent roles, tools, workflow structure), which reduces ambiguity when the agents run.

👀 Note: CrewAI’s strong ‘agent as team member’ concept comes through in authoring as well – when writing prompts or defining agents, you’re encouraged to clearly describe the agent’s role, backstory, and goals in the YAML or code, almost like writing a job description for a team member. This can lead to more consistent agent behaviors.

AutoGen

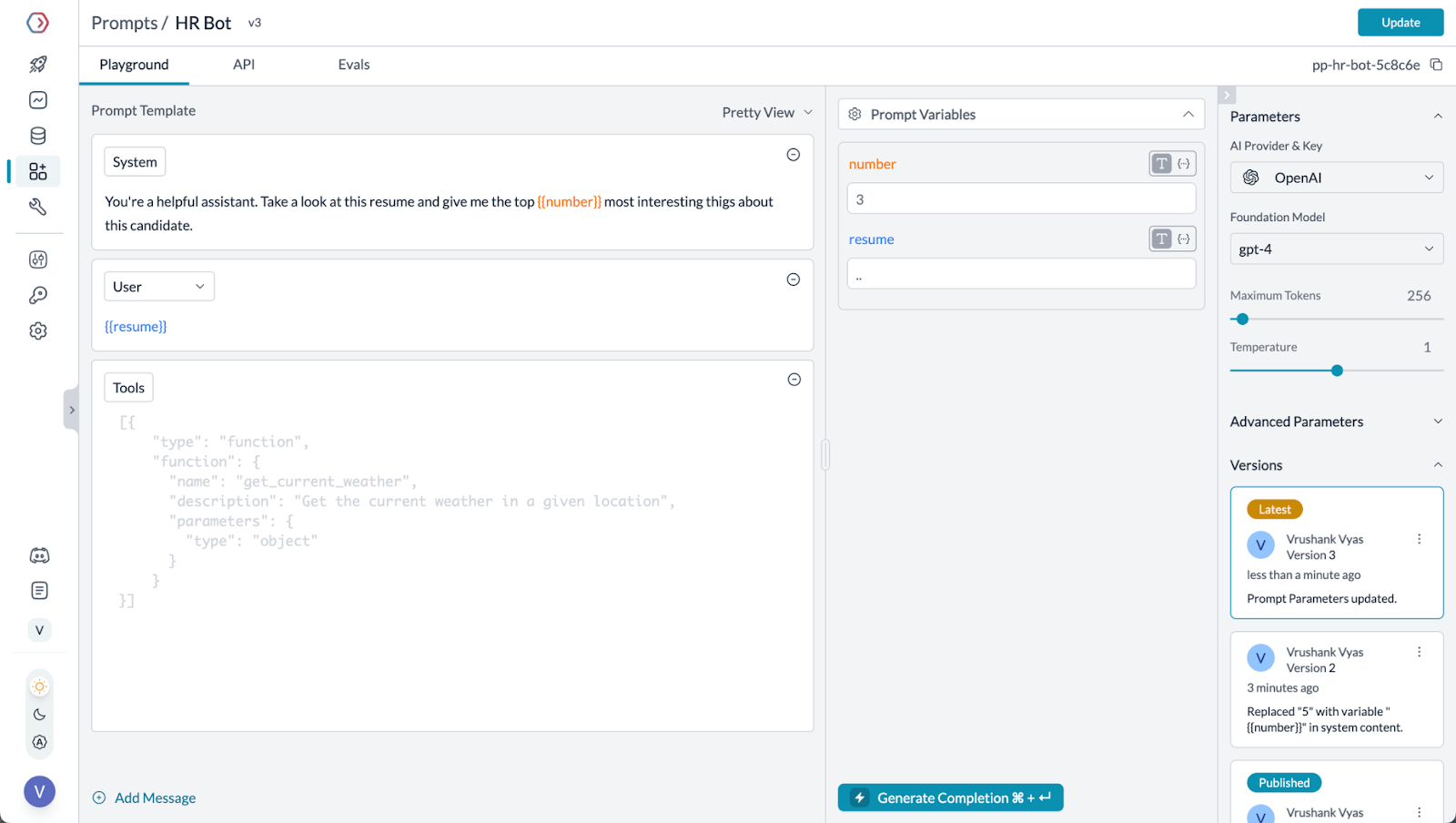

AutoGen provides a more user-friendly, low-code interface for authoring workflows, alongside its programmatic API.

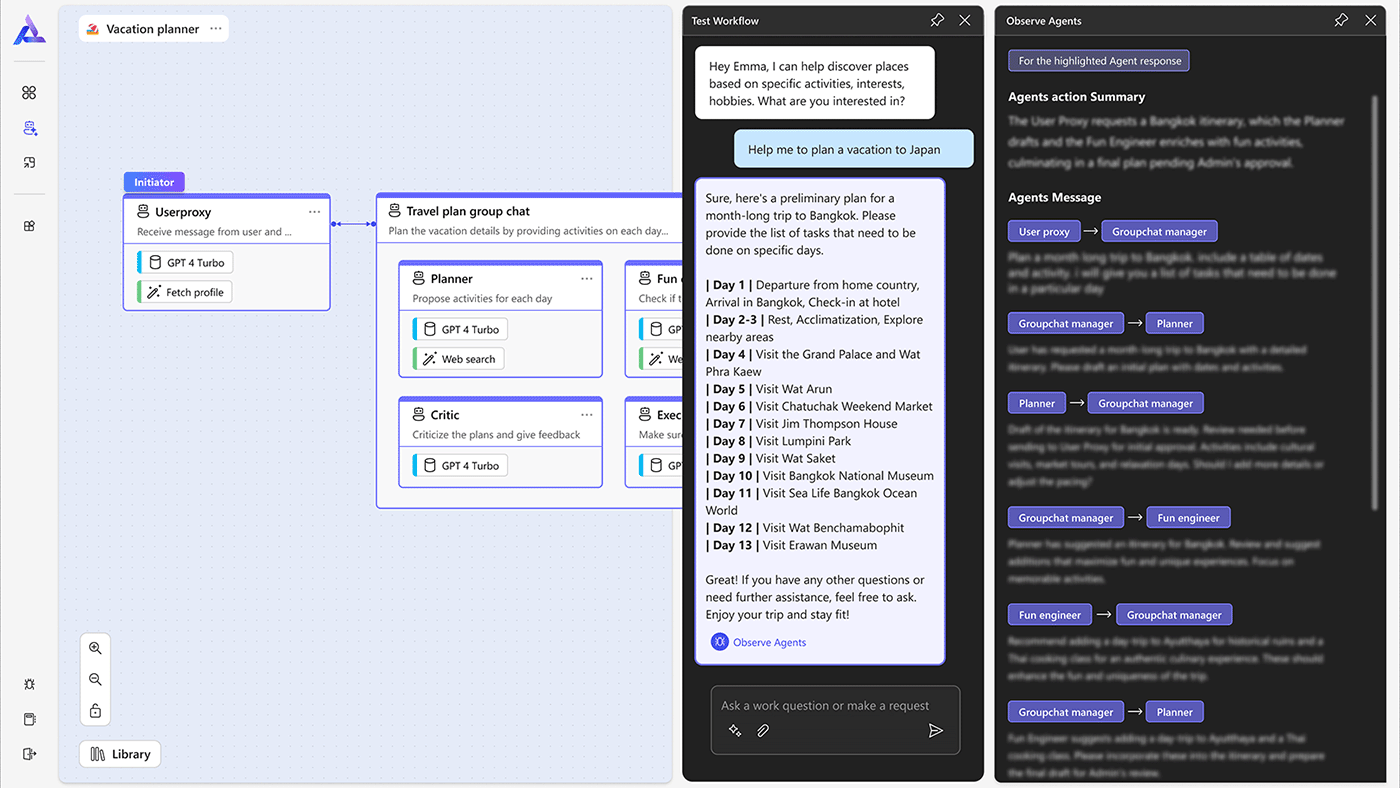

Microsoft introduced AutoGen Studio, a graphical UI that allows you to design multi-agent interactions without heavy coding.

In AutoGen Studio, you can configure agents (their roles, initial prompts, and tools) and then draw out the conversation flow or dependencies between agents in a canvas.

It’s a bit like designing a dialogue tree or flowchart: you can specify triggers for an agent to respond, loop in a human for input, etc., using a point-and-click interface. This is great for rapid prototyping or if you prefer visual design. The Studio can even export the workflow definition as JSON or code, which you could then refine further.

You still have the option to author AutoGen workflows purely in code if you prefer. AutoGen’s Python API lets you instantiate agents and start chats with full control.

But the key difference is that AutoGen’s default tooling aims to lower the barrier with a no-code/low-code approach. And that’s why AutoGen is a good tool for quick experiments – you can tinker with agent setups in the UI, run test conversations, and iterate quickly.

Feature 3. Prompting and Reasoning

This feature compares how each framework handles prompt management and the reasoning process of agents (i.e., how agents generate and refine their outputs).

CrewAI

With CrewAI, you can define per-agent prompt templates and reasoning steps explicitly.

Each agent can be given a carefully crafted prompt (often consisting of instructions that define its role and task), which is used whenever that agent is invoked. CrewAI also enables a workflow to be broken into explicit reasoning or verification steps.

For example, you might configure an agent to first output a draft reasoning or plan, pause for review, and then proceed to execution. These intermediate steps can be surfaced for a human to inspect or for logging purposes before the workflow continues.

This means you can see the chain of thought the agent is following and intervene if something looks off.

CrewAI’s design leans towards transparency and control in prompting: you decide what each agent knows and when it should reflect or stop for confirmation. Additionally, the framework has features for prompt customization and optimization.

Because CrewAI workflows are structured, you might also implement guardrails like ‘if Agent A’s reasoning is too long or contains XYZ, flag it for human review’ relatively easily.
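A guardrail of that kind could look like the following minimal sketch. The function name, the word limit, and the flagged terms are all hypothetical choices for illustration; this is plain Python, not a CrewAI feature.

```python
from typing import Sequence


def needs_human_review(
    reasoning: str,
    max_words: int = 150,
    flagged_terms: Sequence[str] = ("delete", "refund"),
) -> bool:
    """Return True when the reasoning is too long or mentions a flagged term."""
    too_long = len(reasoning.split()) > max_words
    lowered = reasoning.lower()
    has_flagged_term = any(term in lowered for term in flagged_terms)
    return too_long or has_flagged_term
```

A workflow would call this check on an agent’s intermediate output and route the task to a reviewer whenever it returns `True`.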

In essence, CrewAI’s approach to reasoning is scripted but inspectable – you script the general flow of thought, but also get a window into that thought process as it executes.

AutoGen

AutoGen’s prompting approach is rooted in the LLM chat paradigm. Each agent in an AutoGen conversation operates by processing a sequence of messages (some from other agents, some from a system or human).

You typically provide each agent with a system prompt that defines the agent’s role and personality, and then allow the conversation to unfold.

The key feature here is that AutoGen automatically handles the agent’s reasoning as part of the conversation loop. Agents can output not just final answers, but also structured thoughts or reflections that are separate from their answers, thanks to built-in patterns like ReAct and self-reflection.

For example, an AutoGen agent might internally decide:

- Step 1: I should clarify the question.

- Step 2: I should compute an answer.

- Step 3: I should double-check it.

AutoGen can surface parts of this internal decision-making as special messages (sometimes marked as the agent’s ‘thoughts’) which can then be used to improve subsequent responses.

There’s also an automatic reflection mechanism – after an agent produces an answer, it can critique its own output and attempt a revised answer if needed. All of this happens within the chat session, without you explicitly coding each step.
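The reflect-then-revise loop can be sketched as follows. This is an assumption-laden illustration in plain Python rather than AutoGen’s implementation: `reflect_and_revise` and the two stub functions are hypothetical, and the stubs stand in for model calls.

```python
from typing import Callable, List, Optional


def reflect_and_revise(
    question: str,
    generate: Callable[[str, Optional[str]], str],
    critique: Callable[[str, str], Optional[str]],
    max_rounds: int = 3,
) -> List[str]:
    """Draft an answer, critique it, and revise until the critique is empty."""
    attempts: List[str] = []
    feedback: Optional[str] = None
    for _ in range(max_rounds):
        answer = generate(question, feedback)
        attempts.append(answer)
        feedback = critique(question, answer)
        if feedback is None:  # nothing left to fix
            break
    return attempts


# Stub model calls: the first draft is wrong, the revision fixes it.
def fake_generate(question: str, feedback: Optional[str]) -> str:
    return "12" if feedback is None else "15"


def fake_critique(question: str, answer: str) -> Optional[str]:
    return None if answer == "15" else "Re-check the arithmetic."


attempts = reflect_and_revise("What is 3 * 5?", fake_generate, fake_critique)
```

The `max_rounds` cap matters in practice: without it, an agent that never satisfies its own critique would loop indefinitely.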

However, the downside is less direct control: you don’t explicitly insert a pause for reflection at a certain point – the agent decides when to reflect based on the conversation logic. Tuning this can require adjusting the prompts or using AutoGen’s design patterns to guide the agent.

Feature 4. Human‑in‑the‑Loop

Human-in-the-loop (HITL) capabilities determine how a human can intervene or collaborate with the AI agents during execution. Both CrewAI and AutoGen acknowledge the importance of human oversight in different ways.

CrewAI

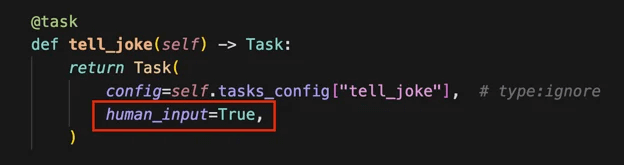

CrewAI includes a dedicated Human-In-The-Loop functionality. This feature lets you insert points in your workflow where a human operator can review or provide input. By setting human_input=True on a specific task, you can turn it into a formal review gate.

CrewAI’s HITL implementation is quite flexible: you can ask your team to verify intermediate results, provide missing information, or even decide which branch of a workflow to take next. Under the hood, CrewAI treats human input as another event in the event-driven flow – e.g., an agent might raise a ‘needs approval’ event, and the system will wait until a human responds with an approval or changes.

Because CrewAI was designed with enterprise use in mind, the HITL features align with real-world needs like compliance checking or expert review cycles.

For example, in an automated data analysis pipeline, CrewAI could generate a summary and then pause for a human analyst to confirm the findings before sending the summary to stakeholders.

From the developer’s perspective, enabling human-in-the-loop is often as simple as marking a task or using a particular task type that requires human confirmation.
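To make the review-gate idea concrete, here is a minimal sketch in plain Python. It models the behavior of a task flag like `human_input` but is not CrewAI code: `GatedTask`, `run_with_review`, and the `approve` keyword are hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class GatedTask:
    """Hypothetical task record; `human_input` mirrors the flag named above."""
    name: str
    produce: Callable[[], str]
    human_input: bool = False


def run_with_review(tasks: List[GatedTask], ask_human: Callable[[str], str]) -> List[str]:
    """Run tasks in order; a gated task pauses for feedback before its result is accepted."""
    results: List[str] = []
    for task in tasks:
        output = task.produce()
        if task.human_input:
            feedback = ask_human(f"Review '{task.name}': {output}")
            if feedback.strip().lower() != "approve":
                output = f"{output} [revised per reviewer: {feedback}]"
        results.append(output)
    return results


tasks = [
    GatedTask("summarize", lambda: "Sales rose 4%", human_input=True),
    GatedTask("send", lambda: "Summary sent"),
]
# In an interactive run `ask_human` could be the built-in `input`;
# a canned reply keeps this sketch non-interactive.
results = run_with_review(tasks, ask_human=lambda prompt: "Cite the source table")
```

Only the task marked with `human_input=True` blocks on the reviewer; the `send` task runs straight through.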

AutoGen

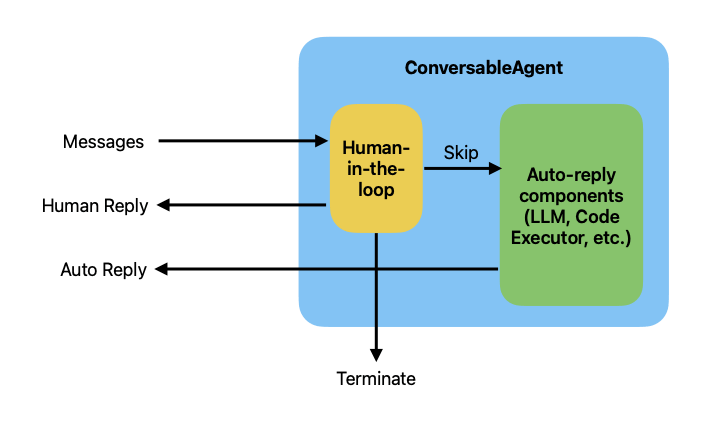

AutoGen also supports human involvement, but it manifests as part of the agent conversation itself.

In AutoGen’s architecture, you can include a special agent, often a UserProxyAgent, that represents a human user in the AgentChat loop.

During the multi-agent chat, this human agent can be invoked at certain times to get input or approval.

For example, you might have two AI agents debating a solution and occasionally call on the human agent to vote or give additional info. AutoGen provides patterns for how a human can be prompted during an ongoing conversation – essentially, one of the agents defers to the human by sending a message like ‘Waiting for human approval,’ and the system pauses until the human responds.

After the human’s message is received, the AI agents continue the conversation with that feedback.

This means inserting a human is as easy as adding another participant to the chat team. The framework can do this either synchronously (halting until you reply) or asynchronously (the conversation ends and could be resumed later with more input).

AutoGen’s human-in-the-loop is quite useful for interactive applications – think of a scenario where agents are helping a user plan a trip: the user (human) might jump into the agent chat to steer preferences with a message like ‘I actually prefer hotels over Airbnbs,’ and then let the agents continue planning with that guidance.

One caveat with AutoGen’s approach is that it requires designing the conversation such that the agents know when to ask the human. You either have to build it into the prompt logic (e.g., an agent’s policy that, if uncertain, consults you) or use AutoGen’s team structures that automatically include a user step.
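The first option, building the hand-off into the agent’s own logic, can be sketched in plain Python. This is not AutoGen’s API: the `ASK_HUMAN:` and `DONE` markers, `planner`, and `run_trip_chat` are hypothetical conventions for this example, and the human proxy is just a callback.

```python
from typing import Callable, List, Tuple

Message = Tuple[str, str]  # (speaker, text)


def planner(history: List[Message]) -> str:
    """Ask the human once, then plan around the stated preference."""
    human_replies = [text for speaker, text in history if speaker == "Human"]
    if not human_replies:
        return "ASK_HUMAN: hotels or Airbnbs?"
    return f"Plan: book {human_replies[-1]} for 3 nights. DONE"


def run_trip_chat(get_human_reply: Callable[[str], str], max_turns: int = 6) -> List[Message]:
    """Loop the planner; route ASK_HUMAN messages to the human proxy and wait for the reply."""
    history: List[Message] = [("User", "Plan a weekend in Lisbon")]
    for _ in range(max_turns):
        text = planner(history)
        history.append(("Planner", text))
        if text.startswith("ASK_HUMAN:"):
            question = text[len("ASK_HUMAN:"):].strip()
            history.append(("Human", get_human_reply(question)))
        elif text.endswith("DONE"):
            break
    return history


chat = run_trip_chat(lambda question: "hotels")
```

The human is just another speaker in the history, which is why the agent must be told, in its prompt or policy, when to emit the hand-off message.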

CrewAI vs AutoGen: Integration Capabilities

Beyond core features, you should consider how each framework integrates with the rest of your tech stack and external services. Integration capabilities include connecting to databases, APIs, third-party tools, or deployment pipelines.

CrewAI

CrewAI comes with its own integrations and also uses external Python libraries. It natively includes a library of 40+ built-in tools and integrations, grouped into categories such as:

- LLMs: Groq, OpenAI, Anthropic

- Services: Revium, RagaAI, StartSE

- Education: PWC, DeepLearning, K2 Consulting

- Applications: Composio, Chroma, Cloudera

- Integrations: Notion, Slack, Replit

- Infrastructure: Microsoft Azure, MongoDB, Nexla

AutoGen

AutoGen, while a Microsoft project, is positioned as a more neutral integration hub. Its layered architecture (Core, AgentChat, Extensions) is designed to be extensible and encourages third-party integrations.

Its ecosystem is broad and diverse, and has integrations for:

- Other Agent Frameworks: LlamaIndex, CrewAI, and LangChain.

- Observability Tools: AgentOps, Weave, and Phoenix/Arize.

- Data and Memory: Numerous vector databases (Chroma, PGVector), data platforms (Databricks), and memory services (Zep, Mem0).

- Prototyping: AutoGen Studio provides a no-code/low-code UI for building and testing agent workflows.

This positions AutoGen as a central orchestrator in a flexible, best-of-breed MLOps stack. The choice is a strategic one for development teams: CrewAI offers a polished, vertically integrated experience, while AutoGen offers maximum flexibility and choice in a horizontally integrated ecosystem.

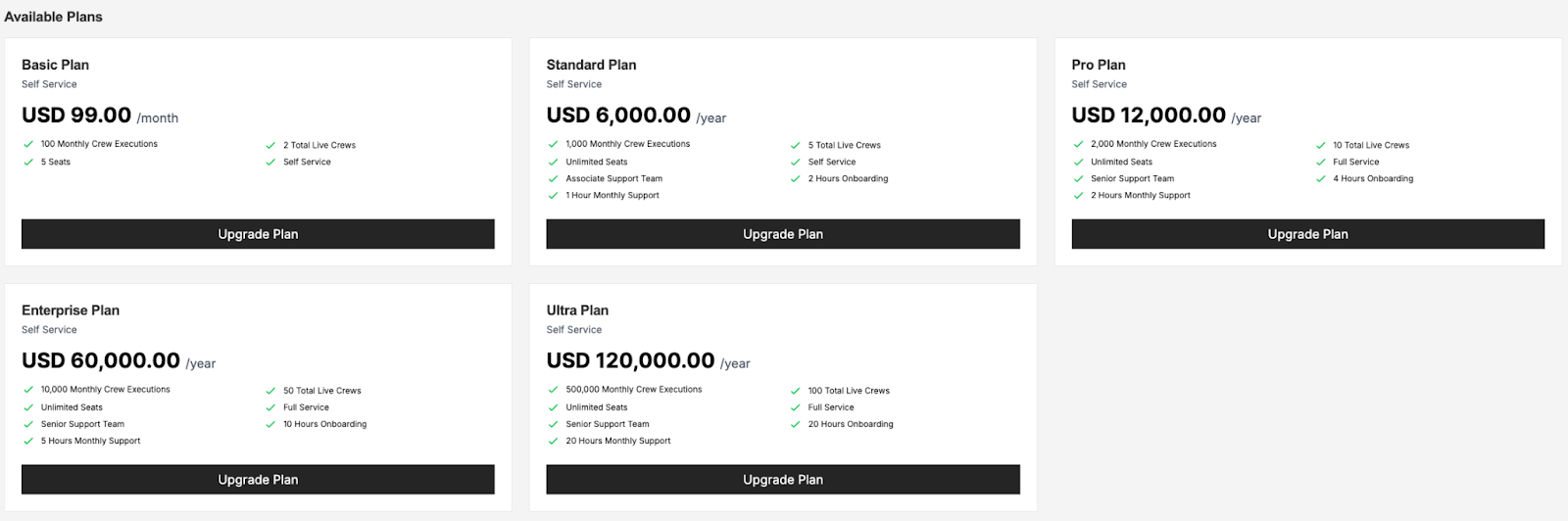

CrewAI vs AutoGen: Pricing

In this section, we discuss pricing for both CrewAI and AutoGen.

CrewAI

CrewAI’s core framework is MIT-licensed and open-source. The hosted platform, however, offers several paid plans to choose from:

- Basic: $99 per month

- Standard: $6,000 per year

- Pro: $12,000 per year

- Enterprise: $60,000 per year

- Ultra: $120,000 per year

📚 Also read: CrewAI pricing

👀 Note: CrewAI’s open-source core is the real asset. It gives you full control, zero licence cost, and no artificial limits when you run it on your own stack. The cloud plans add dashboards, hosting, and support, yet their price jumps fast. The Ultra and Enterprise tiers can reach six figures a year. Unless you face strict compliance needs or lack DevOps bandwidth, you can get more value by self-hosting and paying just for the LLM calls behind the scenes.

AutoGen

The AutoGen framework is also open-source (MIT license) and is completely free to use, with no official paid or managed ‘Pro’ version offered by Microsoft.

The costs associated with using AutoGen are entirely indirect and stem from the infrastructure you must provision and manage yourself. These costs typically include:

- LLM API Calls: Fees for using models from providers like OpenAI, Anthropic, or Azure.

- Cloud Compute: The cost of virtual machines or containers needed to host the agents.

- Third-Party Services: Subscription fees for any managed vector databases, observability platforms, or other tools you integrate into your stack.

While there are no officially managed plans, it’s possible to build a managed AutoGen service on a cloud platform like Microsoft Azure. The framework's free nature is part of a broader strategy to drive adoption of the surrounding Microsoft ecosystem, particularly Azure and Azure OpenAI services.

How ZenML Helps In Closing the Outer Loop Around Your Agents

Frameworks like CrewAI and AutoGen focus on the inner loop of agent development: defining roles, prompts, and interactions.

Deploying these agents into production, monitoring them, retraining models, and ensuring reproducibility constitute the outer loop. That’s where ZenML, our unified MLOps + LLMOps platform, comes in.

Here are the features that will help you with this outer loop:

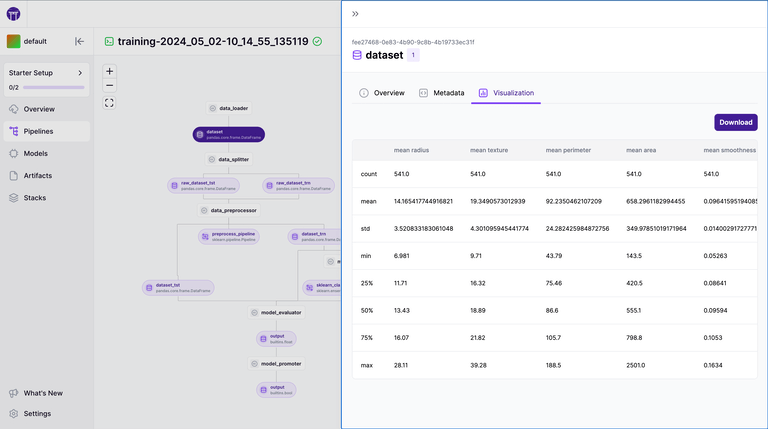

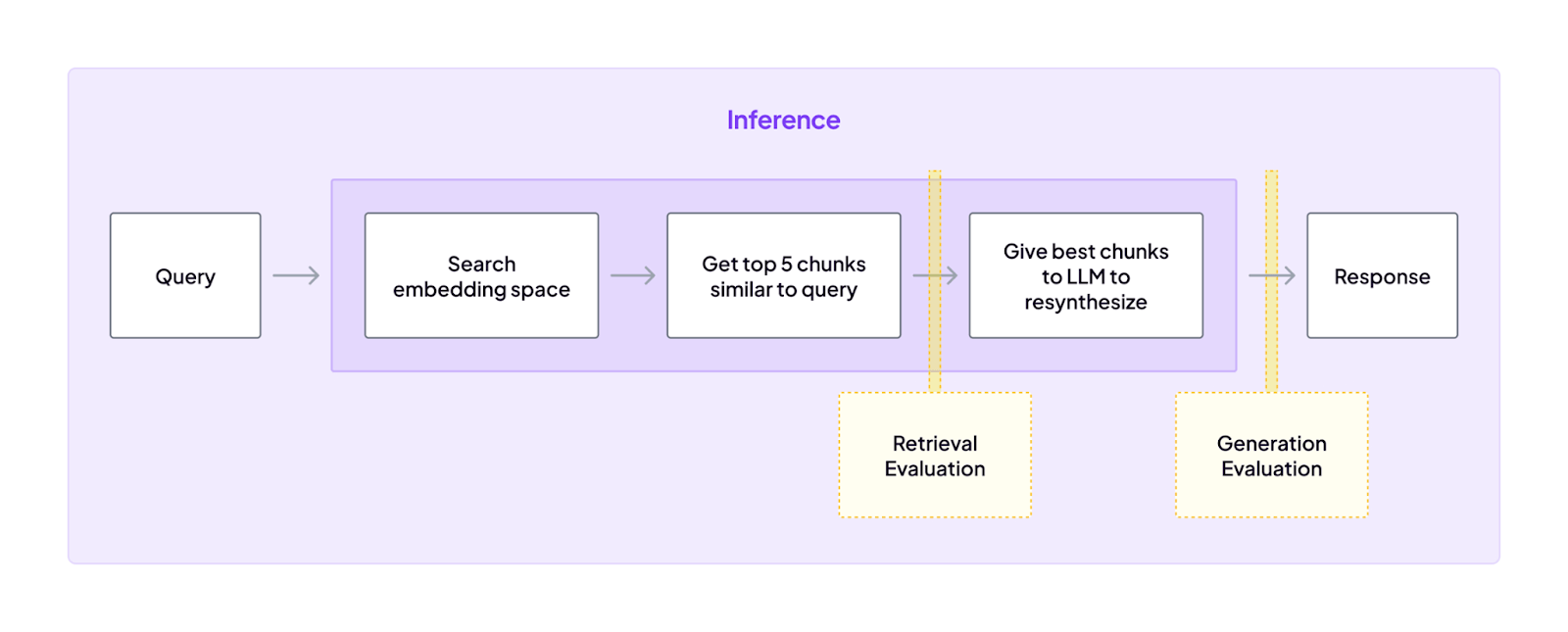

Pipeline‑Centric Orchestration: ZenML pipelines (@pipeline) can wrap an entire crew or AutoGen team. Steps (@step) can prepare data for retrieval‑augmented generation, execute the agent workflow, and evaluate outputs. Each run is versioned, reproducible, and portable across local machines and cloud compute.

Here’s a basic step you can create in ZenML:

And here’s the code for the pipeline:
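A minimal sketch of such steps and a pipeline, assuming a recent ZenML release where `step` and `pipeline` are importable from the top-level `zenml` package. The helper `run_agent_team` is a hypothetical stub standing in for a crew or AutoGen team run, and the `try`/`except` fallback only exists so the sketch still imports without ZenML installed.

```python
try:
    from zenml import pipeline, step
except ImportError:  # fallback so the sketch still runs without ZenML installed
    def step(func):
        return func

    def pipeline(func):
        return func


def build_prompt(topic: str) -> str:
    """Plain helper: turn a topic into the task handed to the agents."""
    return f"Research and summarize: {topic}"


def run_agent_team(prompt: str) -> str:
    """Hypothetical stub; replace with a real crew or AutoGen team invocation."""
    return f"[agent output for] {prompt}"


@step
def prepare_prompt(topic: str) -> str:
    return build_prompt(topic)


@step
def run_agents(prompt: str) -> str:
    return run_agent_team(prompt)


@step
def evaluate_output(output: str) -> bool:
    # Toy check; a real evaluation step would score correctness or hallucination.
    return len(output) > 0


@pipeline
def agent_pipeline(topic: str = "CrewAI vs AutoGen"):
    prompt = prepare_prompt(topic)
    output = run_agents(prompt)
    evaluate_output(output)
```

Calling `agent_pipeline()` would trigger a run on the active stack. Keeping the agent logic in plain helpers like `run_agent_team` means it can be unit-tested without starting a pipeline.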

Unified Visibility and Lineage: ZenML tracks artifacts, prompts, agent outputs, and dependencies. A central dashboard visualizes this lineage, enabling teams to debug failures, identify drift, and reproduce past runs. This is especially valuable for complex multi‑agent chats where state is implicit.

Continuous Evaluation: ZenML pipelines can include evaluation steps to test agent responses for correctness, bias, or hallucination. You can flag and/or re-queue bad runs automatically. CrewAI and AutoGen focus on execution, but ZenML provides the feedback loop for quality improvement.

Flexibility Without Vendor Lock‑In: ZenML’s stack abstraction decouples code from infrastructure. You can mix a CrewAI crew and an AutoGen team in the same pipeline, run it on any supported orchestrator (Kubernetes, Airflow, etc.), and swap out vector databases or LLM providers without rewriting your agent code.

ZenML thus acts as the glue that moves agentic AI projects from notebooks to robust production systems. If you’re building complex workflows or need to meet enterprise compliance requirements, adopting ZenML alongside CrewAI or AutoGen will save you significant engineering effort.

Which Agentic AI Framework Should You Choose?

The choice between CrewAI and AutoGen depends entirely on your project's goals, your team's expertise, and your requirements for control versus flexibility.

✅ Choose CrewAI if your primary goal is to build highly reliable, auditable, and role-based agentic systems. It is the superior choice when you need predictable workflows that mirror human team structures, benefit from an opinionated and intuitive API, and want to deploy agents into structured business processes.

✅ Choose AutoGen if your primary goal is rapid prototyping, research, and exploring the emergent, collaborative capabilities of multi-agent conversations. Its flexibility, low-code Studio, and conversation-driven approach make it excellent for tackling complex problems where the solution path is unknown at the outset.

Bottom line: There is no one-size-fits-all answer. Evaluate the complexity of your task, the tolerance for uncertainty, and the environment in which your agents will operate. Then pick the framework that aligns best – or combine them to leverage the strengths of each.

If you’re interested in taking your AI agent projects to the next level, consider joining the ZenML waitlist. We’re building out first-class support for agentic frameworks (like CrewAI and AutoGen) in ZenML, and we’d love early feedback from users pushing the boundaries of what AI agents can do. With ZenML, you can seamlessly integrate whichever agent framework you choose into robust, production-grade workflows. Join our waitlist to get started. 👇