Vellum AI is an end-to-end LLM orchestration and observability platform designed to help teams build, deploy, and manage AI-powered apps and agentic systems. Vellum offers a free entry point, but the jump to a paid plan is steep: from $0 straight to $500 per month. Which brings us to the question: Is it worth the investment?

In this Vellum AI pricing guide, we break down the platform's pricing structure, examine key cost factors, and evaluate whether the investment makes sense for you.

P.S. We also explore how Vellum AI integrates with broader MLOps + LLMOps workflows and introduce ZenML as a complementary solution for managing the complete agentic AI lifecycle.

Vellum AI Pricing Summary

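Here's a quick summary of Vellum AI's pricing tiers, along with key features:

- Free ($0 per month): up to 5 users, 50 prompt executions and 25 workflow executions per day, up to 10K indexed pages for RAG, no RBAC.

- Pro ($500 per month): up to 5 users, 5,000 prompt executions and 250 workflow executions per day, RBAC, external observability integrations.

- Enterprise (custom pricing): custom user and execution limits, VPC install, SSO, BAA and DPA, audit logs.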

Vellum AI is worth investing in when:

✅ You need an all-in-one LLMOps platform for building AI agents or LLM applications. Vellum provides prompt versioning, workflow orchestration, RAG tools, and evaluation in one package.

✅ Your team requires a collaborative platform for both technical engineers and non-technical members, like product managers and domain experts, to work on AI systems.

✅ Your team values a visual interface for rapid prototyping and iteration on complex AI agents while maintaining the flexibility to work with code.

✅ You value support and enterprise features. For companies in regulated industries or those who need VPC deployment and formal SLAs, Vellum’s enterprise offering supports compliance requirements such as HIPAA (via a BAA) and includes dedicated support.

However, you might look for Vellum alternatives if:

❌ Your team has more than 5 users, but can’t afford a custom Enterprise plan.

❌ You’re a solo developer or hobbyist who only needs a few hundred prompt calls or basic LLM testing.

❌ You need extensive MLOps capabilities beyond AI workflow orchestration. Vellum AI focuses primarily on the inner loop of AI development. It works well for building LLM-driven apps, but the moment you need ‘traditional MLOps,’ you’ll find it too opinionated and too focused on LLM functionality.

❌ You already have a robust MLOps stack or prefer open frameworks. Vellum is a closed-source platform. If your team is comfortable using open-source libraries with your own monitoring and orchestration, you might find Vellum’s features nice-to-have but not worth the price.

Vellum AI Pricing Plans Overview

Vellum AI’s pricing is structured in three tiers: a Free ‘Startup’ tier, a fixed-rate Pro plan, and Enterprise plans with custom pricing.

Unlike many MLOps platforms that charge per user or per compute hour, Vellum AI ties its plans to actual AI operations. The platform uses a combination of execution-based limits and feature tiers to differentiate between its plans.

The platform’s pricing approach makes costs more predictable for small teams that know their expected usage patterns. But at the same time, it requires enterprise upgrades for larger organizations.

The free plan lets you start building and deploying small-scale LLM apps at no cost, while the Pro plan ($500 per month) is the entry point for serious team or production use. Enterprise plans are negotiated for each customer.

👀 Note: Vellum does not publicly list pricing details on its website. You can only see the exact pricing from inside your Vellum account. Here’s Vellum AI’s pricing as shown there:

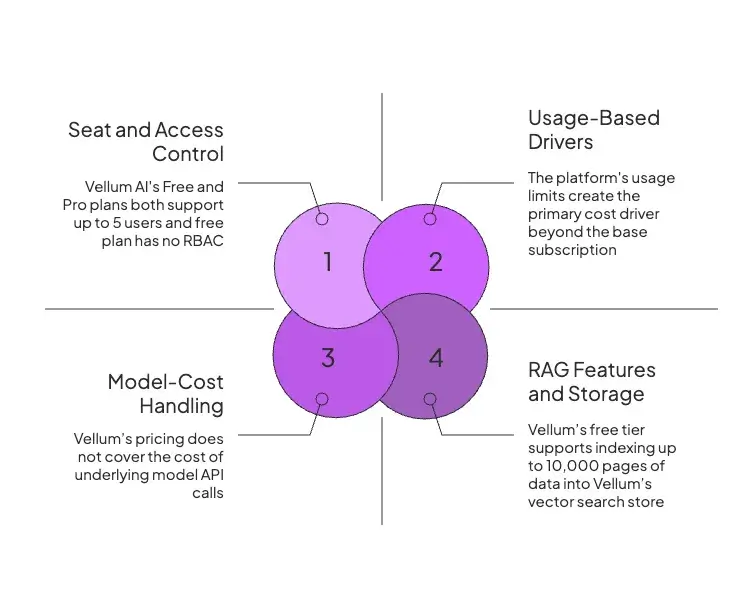

Vellum AI Pricing Factors to Consider

When evaluating Vellum’s pricing for your projects, ensure you are aware of the factors below:

1. Seat and Access Control

Vellum AI's Free and Pro plans both support up to 5 users. While this is economical for small teams, moving from Free to Pro does not add seats; exceeding the limit forces you onto a custom Enterprise plan, which means a significant jump in overall costs.

Role-Based Access Control (RBAC) is another key consideration tied to users. It is unavailable on the Free plan, which means all users share the same permissions.

RBAC is only available on the Pro and Enterprise plans, where you can assign roles like Admin, Editor, or Read-only to team members.

If your project requires strict separation of duties or multi-team collaboration (common in enterprise environments), you’ll need a paid plan.

2. Usage-Based Drivers

The platform's usage limits create the primary cost driver beyond the base subscription.

- Prompt Execution: Vellum limits the number of prompts you can execute per day. The Free plan allows 50 prompt executions per day, and the Pro plan allows 5,000. If your AI app or agent handles dozens of user queries per hour, you’ll exceed the Free limit within the first hour or two. Assuming one prompt execution per query, sustained traffic above roughly 200 queries per hour will exceed the Pro limit too.

- Workflow Execution: Likewise, Vellum caps the number of workflow executions you can run per day. The Free tier allows 25 executions per day, and the Pro tier allows 250.

- Evaluation Runs and Testing: If you A/B test prompts or run automated evaluations using Vellum’s ‘Bulk execution’ feature, factor that into usage. Pro offers 5,000 prompt executions a day (roughly 150,000 per month), but extremely test-heavy workflows can still hit the daily limit quickly. Put simply, the more aggressively you use Vellum’s testing features, the closer you get to the Pro limits.

👀 Note: There isn’t an option to simply pay for extra executions on the fly; hitting the limit means either you upgrade or you’re done for the day.
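
To make the daily limits concrete, here is a minimal Python sketch that checks a workload against the Free and Pro limits quoted above. The function names and the sample workload (40 queries per hour, one prompt execution per query, 100 workflow runs per day) are illustrative assumptions, not anything provided by Vellum.

```python
# Daily execution limits as quoted in this guide (Free and Pro tiers).
DAILY_LIMITS = {
    "free": {"prompts": 50, "workflows": 25},
    "pro": {"prompts": 5_000, "workflows": 250},
}


def daily_utilization(plan: str, prompts_per_day: int, workflows_per_day: int) -> dict:
    """Return the fraction of each daily limit a workload would consume."""
    limits = DAILY_LIMITS[plan]
    return {
        "prompts": prompts_per_day / limits["prompts"],
        "workflows": workflows_per_day / limits["workflows"],
    }


def fits(plan: str, prompts_per_day: int, workflows_per_day: int) -> bool:
    """True when the workload stays at or under both daily limits."""
    usage = daily_utilization(plan, prompts_per_day, workflows_per_day)
    return all(fraction <= 1.0 for fraction in usage.values())


# Hypothetical workload: 40 user queries per hour, around the clock,
# one prompt execution per query, plus 100 workflow runs per day.
prompts = 40 * 24  # 960 prompt executions per day
print(fits("free", prompts, 100))  # False: both Free limits are exceeded
print(fits("pro", prompts, 100))   # True: under both Pro limits
print(DAILY_LIMITS["pro"]["prompts"] * 30)  # 150000, the ~150k monthly ceiling on Pro
```

Because the limits are per day rather than per month, a single traffic spike can exhaust the allowance even when the monthly average looks comfortable, so it is worth running this kind of check against peak days, not averages.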

3. Model-Cost Handling

Vellum’s pricing does not cover the cost of underlying model API calls. You must manage these expenses separately through your relationships with model providers like OpenAI, Anthropic, or Google.

In fact, Vellum allows you to bring your own API keys. This means your $500 per month covers the platform’s features and infrastructure, but you need to budget for model cost on top of that.

The good part is that Vellum doesn’t seem to mark up API calls; you pay exactly what the provider charges. The downside is that if you use expensive models heavily, for example, GPT-5 thinking mode, your total cost could be pretty high.
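
A rough way to budget for this is to add estimated provider spend to the $500 platform fee. The sketch below is only an illustration: the token counts and per-million-token rates are placeholder values, not real prices from any provider, and the function names are hypothetical. Substitute the rates from your provider's current price list.

```python
PRO_PLATFORM_FEE_USD = 500.0  # Pro plan price quoted in this guide


def monthly_model_cost(
    calls_per_day: int,
    input_tokens_per_call: int,
    output_tokens_per_call: int,
    usd_per_million_input: float,
    usd_per_million_output: float,
    days: int = 30,
) -> float:
    """Estimate provider spend billed to your own API keys for one month."""
    per_call = (
        input_tokens_per_call * usd_per_million_input
        + output_tokens_per_call * usd_per_million_output
    ) / 1_000_000
    return calls_per_day * days * per_call


def total_monthly_cost(model_cost_usd: float, platform_fee_usd: float = PRO_PLATFORM_FEE_USD) -> float:
    """Platform fee plus separately billed model usage."""
    return platform_fee_usd + model_cost_usd


# Placeholder workload and rates (assumptions, NOT real provider prices).
model = monthly_model_cost(
    calls_per_day=2_000,
    input_tokens_per_call=1_500,
    output_tokens_per_call=500,
    usd_per_million_input=2.0,
    usd_per_million_output=8.0,
)
print(round(model, 2))                      # 420.0
print(round(total_monthly_cost(model), 2))  # 920.0
```

With these placeholder numbers, model spend is nearly as large as the platform fee, which is why the two should be budgeted together.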

4. RAG Features and Storage

If your goal is to build a RAG-first system, considering how Vellum handles data storage and retrieval queries is a must. As of now, Vellum’s free tier supports indexing up to 10,000 pages of data into Vellum’s vector search store.

Notably, large-scale RAG usage is a factor that might push you to enterprise or even require self-hosting your own vector DB outside Vellum.

To get an estimate, consider how often your AI app will query the index; while Vellum doesn’t explicitly meter search queries, heavy query usage may fall under general ‘workflow executions’ if done via workflows.

All Pricing Plans that Vellum AI Offers

Vellum AI currently offers three pricing tiers. Let’s see what each one has for you:

Free: $0 per Month

The Free plan serves as the entry point to the Vellum ecosystem. It’s designed for individuals and small teams for experimentation, learning, and early-stage development projects, where teams can assess the platform's fit for their needs.

Key Features

- Vellum's core toolset: Includes the prompt engineering playground, the visual workflow builder, document retrieval (RAG) capabilities, and evaluation tools.

- Usage: Get 50 Prompt Executions and 25 Workflow Executions per day. Allows indexing up to 10K pages of documents for RAG context.

- Team size: Up to 5 users within an organization.

- Max workflow runtime: A single workflow can run for at most 180 minutes.

Limitations

- Lacks role-based access control.

- External monitoring capabilities are restricted, which makes integrating with existing observability infrastructure difficult.

- VPC installation is not included.

- Single Sign-On (SSO), on-prem deployment, dedicated support, and SLAs are not included.

- The 5-user limit may quickly become restrictive as AI initiatives expand.

✅ Sign up if: You want to test Vellum’s interface and capabilities on a small project, do a proof-of-concept, or simply develop a prototype agent.

❌ Skip if: You find yourself hitting the daily limits regularly, or you need a feature like RBAC or more users.

Pro: $500 per month

The Pro plan is priced at $500 per month. It substantially raises the prompt and workflow execution limits, and is designed for professional teams that are ready to move their AI app into production.

Key Features

- Much higher usage allowance: Pro users can execute 5,000 prompts per day (100X from the free plan) and 250 workflow runs per day (10X from the free plan).

- Role-Based Access Control (RBAC): Invite team members and assign specific roles, such as Admin, Editor, Member, or Read-only viewer, to control who has access to what.

- Enterprise features: Integrations with external observability platforms like Datadog or custom webhooks, ability to handle image/table data in RAG, enterprise-grade support, and SLAs.

Limitations

- Still limited to 5 users maximum.

- A notable cost jump from free to $500/month.

- You still have limits; custom limits require upgrading to the Enterprise tier.

- VPC installation and advanced compliance features will require Enterprise negotiation.

✅ Sign up if: Your team requires a collaborative workspace with production-grade features and doesn’t have more than five users or strict compliance requirements.

❌ Skip if: You need more than 5 users or significantly more usage. At that point, you’re edging into Enterprise territory.

Enterprise: Custom Pricing

Vellum AI's Enterprise plan uses custom pricing negotiated based on specific organizational requirements. It's typically an annual contract, likely in the tens of thousands of dollars per year.

Key Features

- Unlimited usage: Execution limits and user counts are custom-negotiated to match your scaling demands. You might get ‘unlimited’ usage (within fair use) or very high ceilings that are unlikely to be hit.

- More users and workspaces: User seats are unlimited/custom on Enterprise. You can onboard your entire data science and engineering team, and even folks from other departments if needed.

- VPC Install: Deploy within your own Virtual Private Cloud, either on a public cloud provider or on-premises. This deployment model ensures that all data, including prompts and documents, remains within the customer's network perimeter.

- Enterprise features: Custom Contracts, BAA, DPA, Single Sign-On (SSO) integration, detailed audit logs, and the ability to enforce data retention policies.
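
Taken together, the tier limits described in this guide reduce to a simple decision rule. The following Python sketch encodes it; the `Requirements` class and `recommend_tier` function are hypothetical helpers written for illustration, and the thresholds are the ones quoted in this guide, which may change.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    users: int
    prompts_per_day: int
    workflows_per_day: int
    needs_rbac: bool = False
    needs_vpc: bool = False


def recommend_tier(req: Requirements) -> str:
    """Pick the lowest tier whose limits, as quoted in this guide, cover the requirements."""
    # Enterprise: more than 5 seats, a VPC install, or usage above Pro's daily limits.
    if (
        req.users > 5
        or req.needs_vpc
        or req.prompts_per_day > 5_000
        or req.workflows_per_day > 250
    ):
        return "Enterprise"
    # Pro: RBAC, or usage above the Free daily limits.
    if req.needs_rbac or req.prompts_per_day > 50 or req.workflows_per_day > 25:
        return "Pro"
    return "Free"


print(recommend_tier(Requirements(users=3, prompts_per_day=40, workflows_per_day=10)))   # Free
print(recommend_tier(Requirements(users=4, prompts_per_day=1_000, workflows_per_day=100,
                                  needs_rbac=True)))                                      # Pro
print(recommend_tier(Requirements(users=8, prompts_per_day=40, workflows_per_day=10)))   # Enterprise
```

Note how the third case lands on Enterprise purely because of headcount, even though its usage would fit comfortably on the Free plan.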

Is Vellum AI Expensive?

Vellum AI's pricing is polarizing. It’s not straightforwardly expensive or cheap, although the jump from ‘Free’ to ‘$500 per month’ is admittedly steep. Let’s consider a few angles:

- If you were to replicate Vellum’s capabilities by assembling open-source tools, you would have to set up and maintain separate tooling for prompt versioning, workflow orchestration, RAG, and evaluation.

- For a company paying developers, $500 might equal only a few hours of an engineer’s time per month.
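
The engineer-time comparison is simple arithmetic. Here is a small sketch; the hourly rates are assumptions chosen for illustration, and `hours_equivalent` is a hypothetical helper name.

```python
def hours_equivalent(monthly_fee_usd: float, loaded_hourly_rate_usd: float) -> float:
    """How many engineer-hours per month the subscription fee is worth."""
    if loaded_hourly_rate_usd <= 0:
        raise ValueError("hourly rate must be positive")
    return monthly_fee_usd / loaded_hourly_rate_usd


# Assumed fully loaded engineering rates in USD per hour.
print(hours_equivalent(500, 100))  # 5.0
print(hours_equivalent(500, 125))  # 4.0
```

If the platform saves more engineer-hours per month than this figure, the subscription pays for itself on time alone.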

Key Takeaway: Vellum AI’s pricing is fair for what it offers. The price reflects the value of adopting their specific, collaborative development methodology. But teams that do not align with this model will likely find the platform expensive.

ZenML: An Affordable Alternative to Vellum AI

Really understanding Vellum's price-to-value ratio also requires placing it within the broader MLOps + LLMOps landscape. Think of it as the ‘inner loop’ and ‘outer loop’ of AI agent development.

Vellum is good at handling the inner loop: creating, debugging, and deploying AI agents. It is essentially the environment where the agent's core behavior is born.

However, a production-grade AI agent rarely exists in isolation. It is part of a larger, end-to-end process that requires robust lifecycle management. And this is where ZenML comes in.

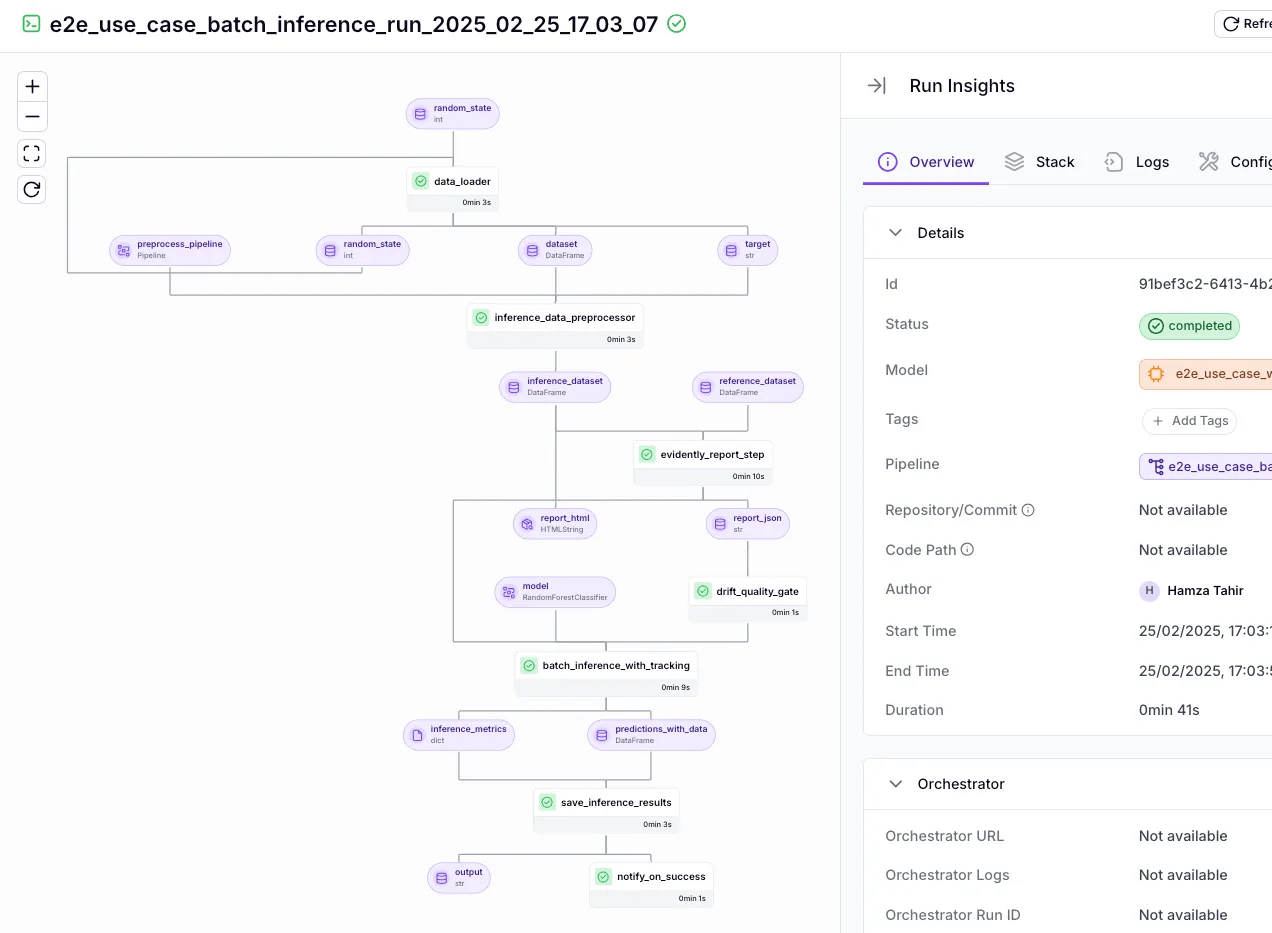

ZenML is an open-source MLOps framework that orchestrates entire machine learning workflows. Paired with Vellum, ZenML takes over the outer-loop responsibilities that Vellum alone doesn’t cover.

In practice, you can use Vellum to build and deploy an AI agent, then embed those agents within larger ZenML processes that handle data ingestion, preprocessing, post-processing, and integration with downstream systems.

Here’s how ZenML does it easily and affordably:

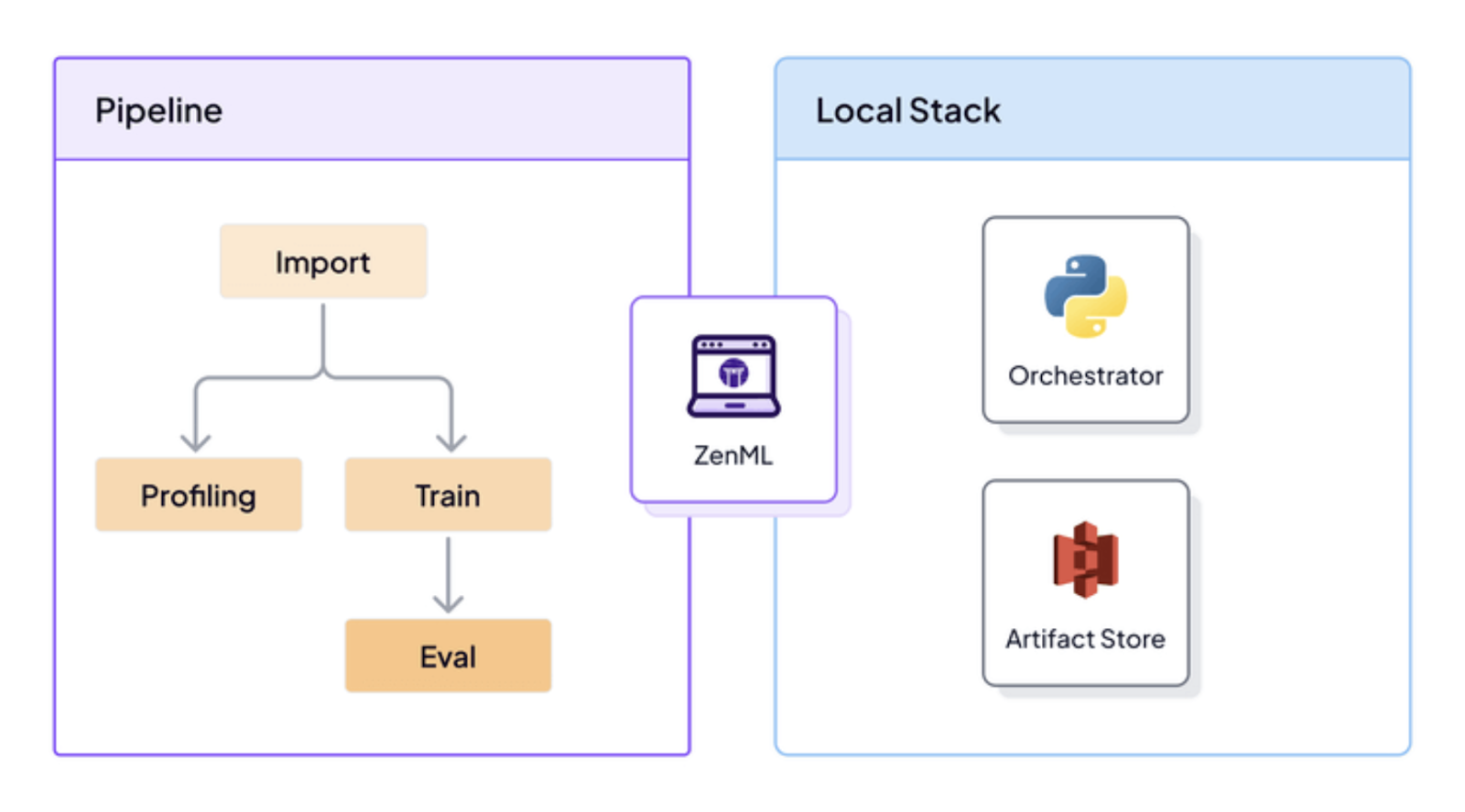

1. Pipeline Orchestration and Integration

ZenML allows you to create orchestrated pipelines that include your Vellum agent as just one step of a bigger process. You can embed Vellum prompts/workflows into larger ZenML pipelines that also handle data prep, post-processing, notifications, and more.

For example, you might have a ZenML pipeline that:

- Fetches and pre-processes some data (say, user queries or support tickets).

- Calls a Vellum-deployed workflow agent to analyze or respond (this is where the agent does its reasoning via Vellum).

- Takes the agent’s output and performs further actions – e.g., updates a database or sends an email.

ZenML's stack-agnostic nature also means you can seamlessly combine your Vellum agent with any other tool, like a specific vector database, a data validator, or a feature store, into a single, cohesive pipeline.
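
The three-stage flow described above can be sketched in plain Python. This is a shape-only illustration under stated assumptions: `stub_vellum_agent` is a stand-in and does not use Vellum's actual API, the tickets are hard-coded, and an in-memory dict stands in for a database. In a real ZenML project, each stage function would typically be decorated with `@step` and the composing function with `@pipeline` (both imported from `zenml`), so that ZenML can track each stage's inputs and outputs.

```python
from typing import Callable


def load_tickets() -> list[str]:
    """Stage 1: fetch and pre-process raw input (hard-coded here)."""
    raw = ["  Where is my invoice? ", "", "Reset my password  "]
    return [ticket.strip() for ticket in raw if ticket.strip()]


def stub_vellum_agent(ticket: str) -> str:
    """Stand-in for a call to a Vellum-deployed workflow; NOT Vellum's API."""
    return f"[draft reply] {ticket}"


def run_agent(tickets: list[str], agent: Callable[[str], str]) -> list[str]:
    """Stage 2: hand each ticket to the agent and collect its output."""
    return [agent(ticket) for ticket in tickets]


def store_replies(replies: list[str]) -> dict[int, str]:
    """Stage 3: post-process and persist (a dict stands in for a database)."""
    return {ticket_id: reply for ticket_id, reply in enumerate(replies, start=1)}


def support_pipeline(agent: Callable[[str], str] = stub_vellum_agent) -> dict[int, str]:
    """Compose the three stages; with ZenML this would be the @pipeline function."""
    tickets = load_tickets()
    replies = run_agent(tickets, agent)
    return store_replies(replies)


print(support_pipeline())
# {1: '[draft reply] Where is my invoice?', 2: '[draft reply] Reset my password'}
```

Passing the agent in as a callable keeps the pipeline independent of how the agent is hosted, so the stub can later be swapped for a real client call without touching the other stages.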

2. Visibility and Tracking

When you inject your Vellum agent as part of a ZenML pipeline, ZenML automatically logs inputs, outputs, and performance metrics.

Vellum’s own interface might show you per-prompt stats, but ZenML monitors the whole pipeline, including your AI app. So you get end-to-end traceability.

For example, you could trace a pipeline run from raw data ingestion, through the Vellum agent’s decision, to the final outcome, all in one lineage. Plus, it’s fully customizable, which means you can choose what metrics you want to track.

3. Artifact Store and Metadata Lineage

With ZenML, every output from a pipeline step becomes a versioned artifact with lineage. ZenML’s artifact versioning is seamlessly integrated into the pipeline. It automatically tracks every version, so you always know which dataset, model, and prompt version produced a given result.

👀 Note: At ZenML, we have built several agent workflow integrations with tools like Semantic Kernel, LangGraph, LlamaIndex, and more. We are actively shipping new integrations that you can find on this GitHub page: ZenML Agent Workflow Integrations.


Is Vellum AI Worth Investing In to Build AI Agents?

No doubt, Vellum AI is a powerful and collaborative platform for building sophisticated AI applications. Its free tier provides an excellent, risk-free way for teams to evaluate its capabilities.

However, its paid plans begin at a high price point and have limitations, most notably the 5-user cap on the Pro plan, which forces a quick decision about committing to a custom Enterprise contract.

The decision ultimately depends on your team's specific requirements. Teams that value rapid iteration and collaboration may find that the investment justifies itself.

For many teams, the optimal approach involves combining Vellum's agent development capabilities with complementary MLOps + LLMOps platforms like ZenML.

If you’re interested in taking your AI agent projects to the next level, consider joining the ZenML waitlist. We’re building out first-class support for agentic frameworks (like LangGraph, CrewAI, and more) inside ZenML, and we’d love early feedback from users pushing the boundaries of what AI agents can do. With ZenML, you can integrate whichever agent framework you choose into robust, production-grade workflows.