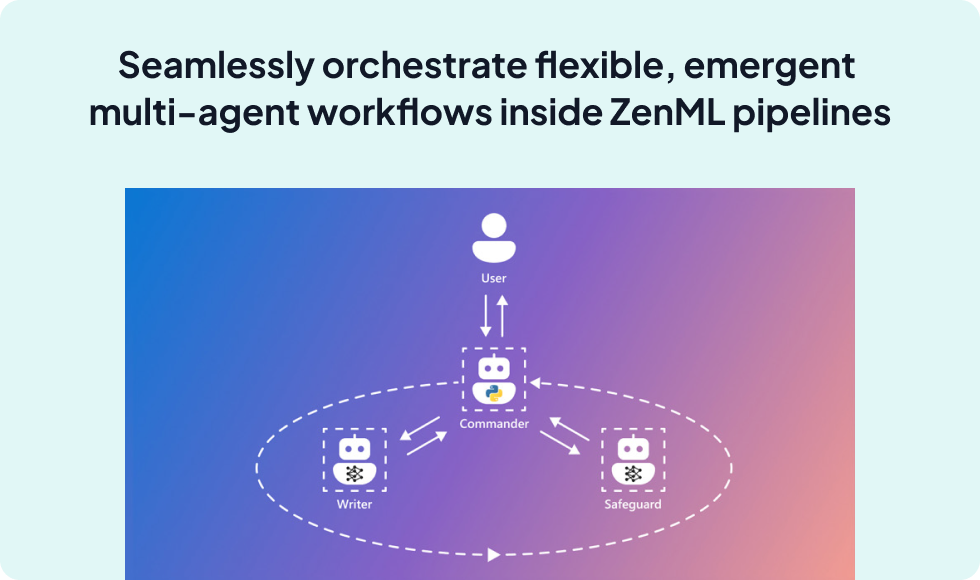

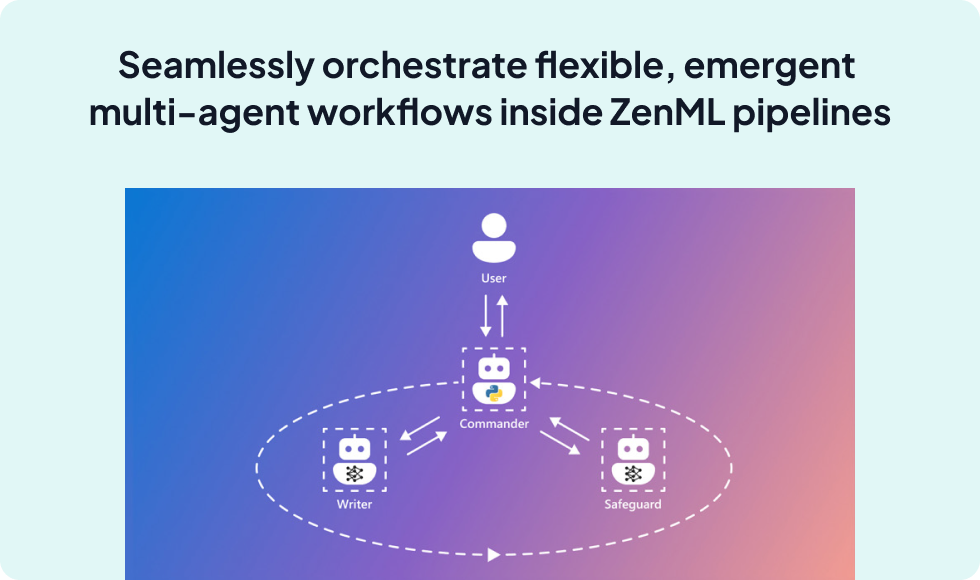

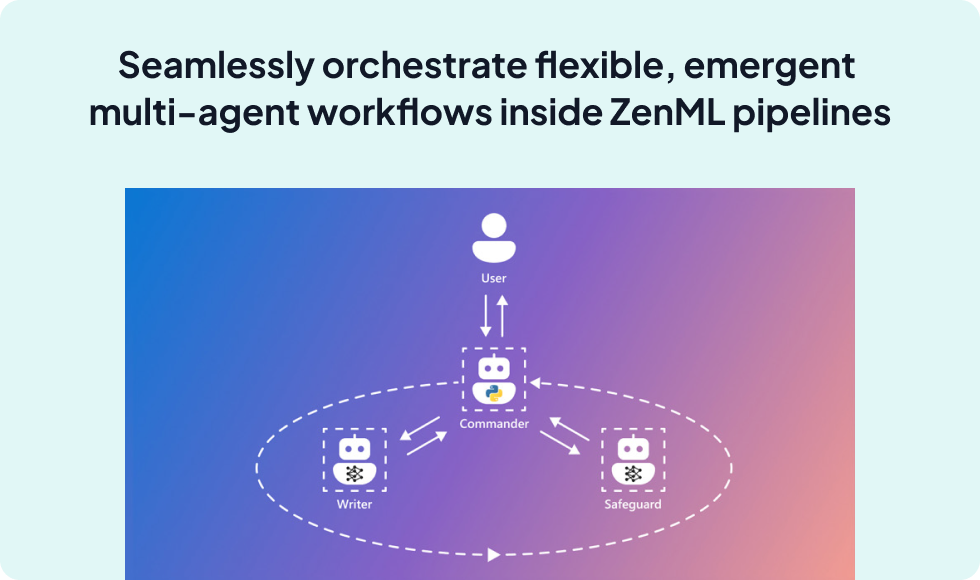

Seamlessly orchestrate flexible, emergent multi-agent workflows inside ZenML pipelines.

AutoGen is an open‑source, MIT‑licensed framework by Microsoft for building AI agents that interact through dynamic, conversational loops—even allowing human participants when needed. It excels at rapid prototyping of complex, emergent multi-agent behaviors through asynchronous messaging, role-based tools, and customizable memory modules.

Integrating AutoGen into ZenML lets you embed these conversational agent workflows in reproducible, versioned pipelines. This adds production-ready orchestration, tracking, continuous evaluation, and deployment flexibility, so your AutoGen agents move from prototype to a scalable, monitored system faster and more reliably.

Features with ZenML

- Embed AutoGen's GroupChat agent workflows as pipeline steps for reproducible orchestration.

- Automatically track prompts, agent interactions, tool calls, and outputs in ZenML’s artifact store for full lineage.

- Add evaluation or validation steps post-agent execution to continuously monitor performance or quality drift.

- Maintain freedom to choose your preferred orchestrator (local, Kubernetes, Airflow, etc.) through ZenML’s stack flexibility.
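As a rough illustration of the lineage bullet above, the sketch below bundles a prompt, the chat turns, tool calls, and the final output into one plain dictionary. ZenML stores step return values as artifacts, so returning a dictionary like this from a step is one way to make explicit what gets tracked. `ChatRecord` and its methods are hypothetical names used only for this example; they are not part of ZenML or AutoGen.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Any, Dict, List


@dataclass
class ChatRecord:
    """Hypothetical container for one agent run (not a ZenML or AutoGen type)."""

    prompt: str
    messages: List[Dict[str, str]] = field(default_factory=list)
    tool_calls: List[Dict[str, Any]] = field(default_factory=list)
    final_output: str = ""

    def add_message(self, source: str, content: str) -> None:
        """Append one chat turn and treat the latest turn as the output."""
        self.messages.append({"source": source, "content": content})
        self.final_output = content

    def add_tool_call(self, name: str, arguments: Dict[str, Any]) -> None:
        """Record a tool invocation with its arguments."""
        self.tool_calls.append({"name": name, "arguments": arguments})

    def to_artifact(self) -> Dict[str, Any]:
        """Return a plain dict, failing early if it is not JSON-serializable."""
        payload = asdict(self)
        json.dumps(payload)  # raises TypeError on non-serializable values
        return payload


record = ChatRecord(prompt="Summarize the release notes.")
record.add_message("writer", "Draft summary.")
record.add_tool_call("fetch_notes", {"version": "1.2.0"})
record.add_message("critic", "Approved summary.")
artifact = record.to_artifact()
print(artifact["final_output"])  # Approved summary.
```

Keeping the record JSON-serializable means the stored artifact can be opened and compared between runs without any AutoGen objects on hand.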

Main Features

- Conversational Agent Execution. Execute multi-agent chat loops (with optional human-in-the-loop) within ZenML steps using AutoGen's async messaging model.

- Modular Tools & Memory. Integrate custom tools (Python functions, APIs) and memory systems with your agents—plugged into a pipeline structure that tracks each interaction step.

- Complete Observability. ZenML captures and versions everything—from conversation history to agent outputs—so artifacts can be inspected, reproduced, and compared later.

- Automated Quality Control. Insert evaluation steps (e.g., checking coherence or correctness of agent outputs) right after execution to flag failures and streamline quality feedback loops.

- Infrastructural Flexibility. Pipelines are portable across infrastructures and can combine AutoGen with other tools (e.g., vector DBs, RAG steps) thanks to ZenML’s provider-agnostic stack approach.
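The quality-control bullet above could be approximated with a few deterministic checks before reaching for anything model-based. The sketch below is only an assumption about what such a check might look like: `quality_report`, the required term `"pipeline"`, and the word threshold are all illustrative choices, and `some_quality_check` is given a body here purely as an example.

```python
from typing import Dict, Iterable


def quality_report(
    response: str, required_terms: Iterable[str], min_words: int = 5
) -> Dict[str, bool]:
    """Run simple, deterministic checks on an agent response."""
    text = response.strip().lower()
    return {
        "non_empty": bool(text),
        "long_enough": len(text.split()) >= min_words,
        "covers_required_terms": all(term.lower() in text for term in required_terms),
    }


def some_quality_check(response: str) -> bool:
    """Pass only if every individual check passes (illustrative thresholds)."""
    report = quality_report(response, required_terms=["pipeline"], min_words=5)
    return all(report.values())


good = "The pipeline ran all three agents and finished cleanly."
bad = "Done."
print(some_quality_check(good))  # True
print(some_quality_check(bad))   # False
```

Returning the per-check report as well as the overall boolean makes it easier to see which check failed when a run is flagged.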

How to use ZenML with AutoGen

    from zenml import pipeline, step


    @step
    def agent_chat_step() -> str:
        # Define and run an AutoGen multi-agent GroupChat.
        # Placeholder: run_autogen_groupchat is not a real function;
        # replace it with actual AutoGen API calls.
        response = run_autogen_groupchat(...)
        return response


    @step
    def evaluate_response(response: str) -> bool:
        # Insert your evaluation logic (e.g., correctness, relevance).
        # Placeholder: some_quality_check stands in for your own check.
        return some_quality_check(response)


    @pipeline
    def autogen_agent_pipeline():
        # Passing one step's output to the next defines the execution order.
        resp = agent_chat_step()
        ok = evaluate_response(resp)


    if __name__ == "__main__":
        # Calling the pipeline function triggers a run.
        autogen_agent_pipeline()
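The `run_autogen_groupchat` placeholder above could be filled in along the following lines. This is a minimal sketch, not the integration's official code. It assumes the AutoGen 0.4+ AgentChat packages (`autogen-agentchat` and `autogen-ext[openai]`), an OpenAI-compatible model with `OPENAI_API_KEY` set in the environment, and a two-agent round-robin team standing in for whatever group chat you actually use. The agent names, system messages, model name, and the `format_transcript` helper are all illustrative.

```python
import asyncio
from typing import Iterable, Tuple


def format_transcript(turns: Iterable[Tuple[str, str]]) -> str:
    """Render (speaker, text) pairs as one string a ZenML step can return."""
    return "\n".join(f"{speaker}: {text}" for speaker, text in turns)


async def _chat(task: str, max_messages: int) -> str:
    # Imported lazily so this module still loads where AutoGen is not installed.
    from autogen_agentchat.agents import AssistantAgent
    from autogen_agentchat.conditions import MaxMessageTermination
    from autogen_agentchat.teams import RoundRobinGroupChat
    from autogen_ext.models.openai import OpenAIChatCompletionClient

    # Assumption: model name is illustrative; the API key comes from the environment.
    client = OpenAIChatCompletionClient(model="gpt-4o-mini")
    writer = AssistantAgent(
        "writer", model_client=client, system_message="Draft a concise answer."
    )
    critic = AssistantAgent(
        "critic", model_client=client, system_message="Review the draft and suggest fixes."
    )
    # Agents take turns until the message limit is reached.
    team = RoundRobinGroupChat(
        [writer, critic], termination_condition=MaxMessageTermination(max_messages)
    )
    result = await team.run(task=task)
    return format_transcript(
        (m.source, str(getattr(m, "content", ""))) for m in result.messages
    )


def run_autogen_groupchat(task: str, max_messages: int = 4) -> str:
    """Synchronous wrapper so a ZenML step can call the async AutoGen team."""
    return asyncio.run(_chat(task, max_messages))
```

Inside `agent_chat_step`, the call would then look like `run_autogen_groupchat("Summarize the release notes.")`. Returning the whole transcript rather than only the last message keeps the full conversation in the step's output artifact.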

Additional Resources

ZenML GitHub Agent Integrations Repo

How to use AutoGen with ZenML

Official AutoGen Documentation