Which framework should you use to build efficient agentic AI – LangGraph or n8n? Both are powerful in their own right, but their building approaches are quite different.

By now, you might know that these LLM-powered agents are no longer in their experimental phase. They sift through documents, handle user queries, and even orchestrate other agents in production systems.

In this LangGraph vs n8n article, we break down the key differences in features, integrations, and pricing for both frameworks. We also discuss how you can leverage both together (with a tool like ZenML) to get the best of both worlds. So let’s get started.

LangGraph vs n8n: Key Takeaways

🧑💻 LangGraph: A code-first framework for building stateful, multi-agent AI applications as explicit graphs. LangGraph gives developers low-level control over agent workflows by representing each step as a node and connecting them with directed edges. The platform has built-in mechanisms for persistent memory and human-in-the-loop interaction, which makes it well-suited for long-running autonomous agents that need oversight or memory recall.

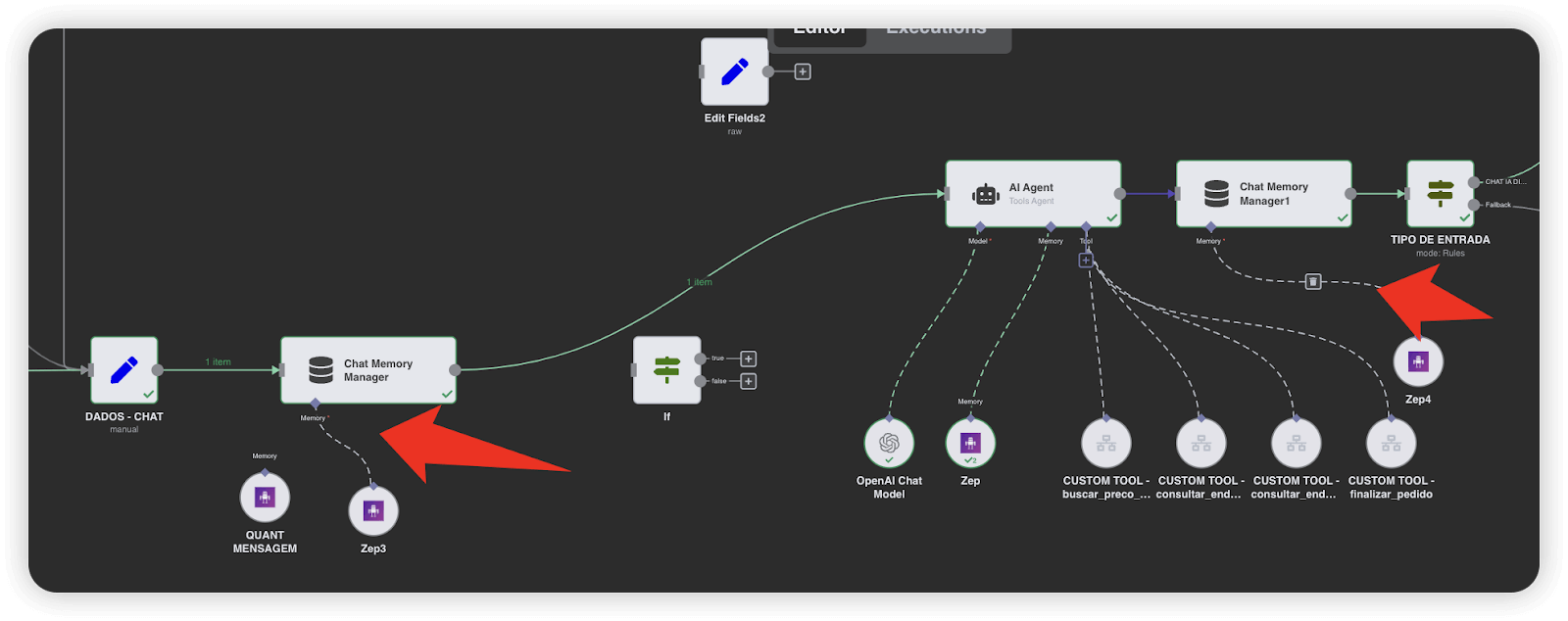

🧑💻 n8n: A visual workflow automation platform (open-source) that now supports AI and LLM agents. n8n lets you orchestrate multi-agent processes through a no-code interface – you create workflows by connecting nodes on a canvas, choosing from 500+ integrations including databases, APIs, and now LangChain-based AI nodes. It excels at quickly combining LLM agents with other tools (Slack, Gmail, databases, etc.) in one pipeline.

Recently Updated (November 2025): This comparison has been refreshed with the latest developments from both frameworks. LangGraph reached its 1.0 stable release in October 2025, marking a major milestone for production-ready agentic AI. n8n introduced a revolutionary new pricing model in August 2025 with unlimited workflows and execution-based billing. All feature comparisons, integration capabilities, and pricing information reflect the current state of both platforms as of November 2025.

Framework Maturity and Lineage

The maturity and development history of LangGraph vs n8n provide important context for adoption decisions:

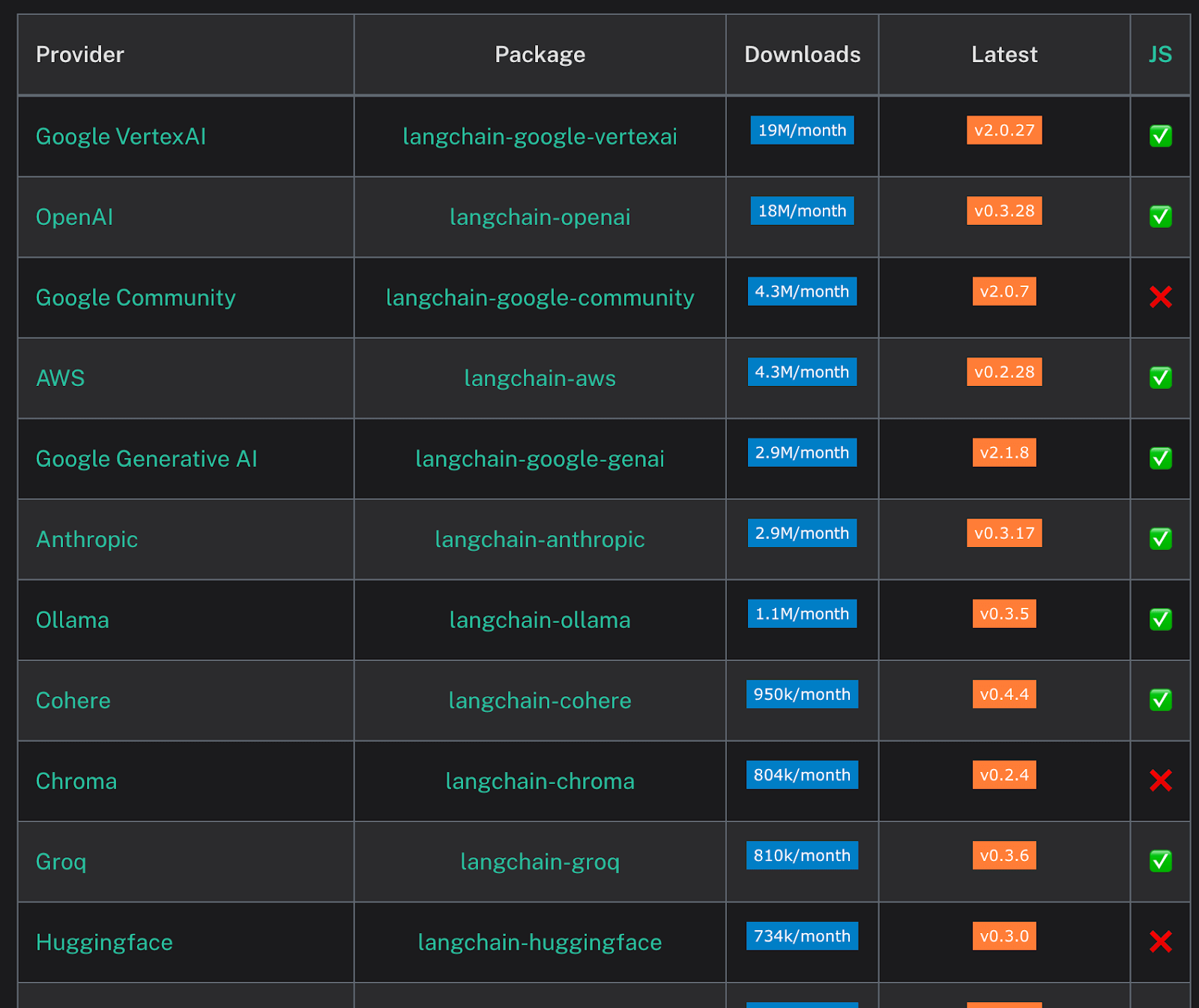

👀 Note: The data in the table above is current as of July 2025 (metrics may change over time).

LangGraph is a relatively new entrant (launched in early 2024) but has demonstrated rapid growth in the LLM developer community. Despite being only about 18 months in the wild, it has seen strong adoption, with over 7 million PyPI downloads in the last month alone – a sign of heavy use in production agent deployments.

n8n, by contrast, has been around since 2019 and matured into one of the most popular automation tools globally. Its GitHub repository has ~124,000 stars, placing it among the top 50 public repos on GitHub and nearly 15,000 commits of development.

LangGraph vs n8n: Features Comparison

The table below provides a high-level summary of how LangGraph and n8n stack up on core features, which we examine in detail in the following sections:

Now let’s compare these features in depth.

Feature 1. Workflow Modelling and Control-Flow Grammar

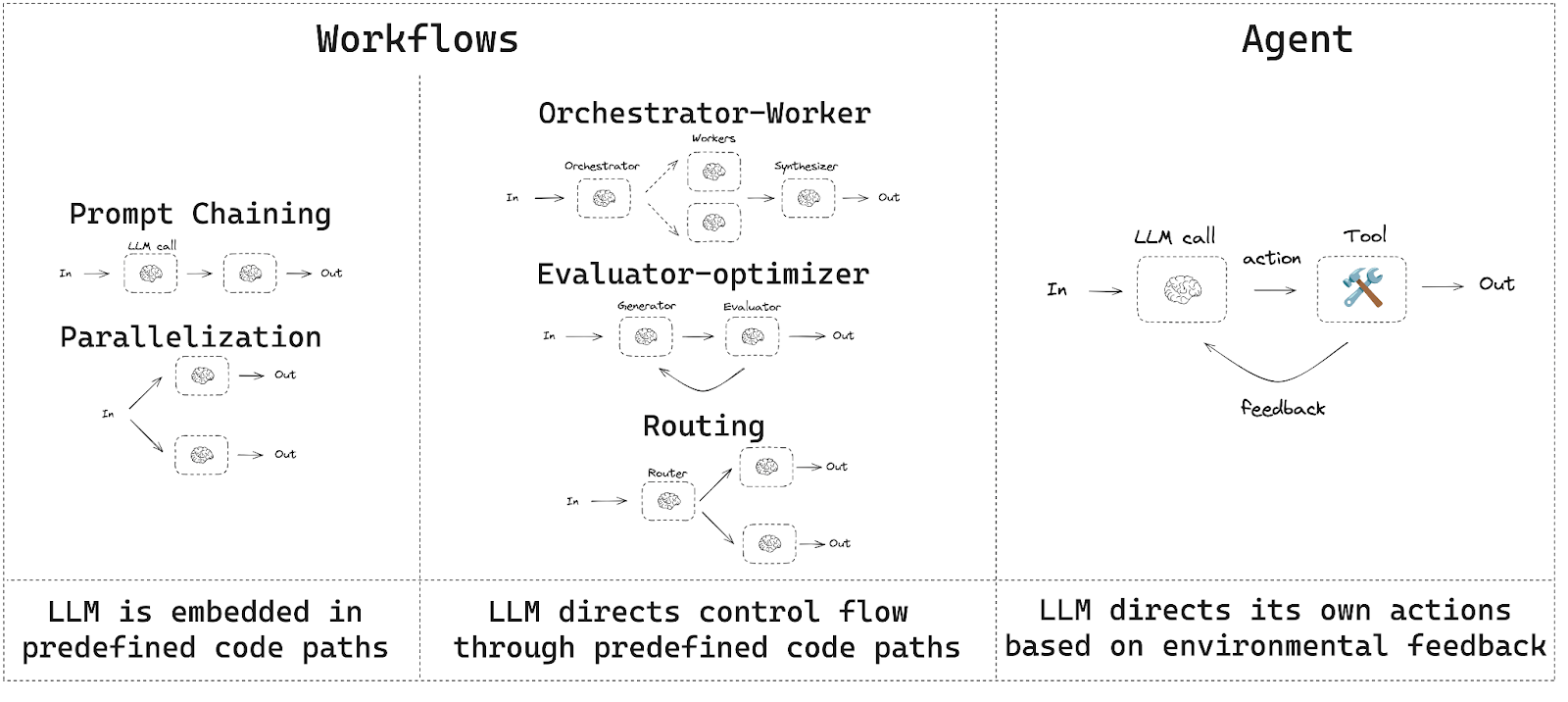

Workflow modeling defines how you structure and represent the logic of your automation processes, while control-flow grammar determines how execution moves through these workflows.

LangGraph

In LangGraph, a workflow is a StateGraph object defined entirely in Python code. Developers assemble graphs programmatically (in Python or TypeScript), connecting nodes with explicit edges that determine how state flows.

Because the graph is code, you can define loops, parallel branches, and conditional logic just as you would in a flowchart. This low‑level representation gives full control over execution order and makes dependencies explicit.

LangGraph’s control-flow grammar consists of two primary components:

- Nodes: Python functions that represent a unit of work, like an LLM call or a tool execution. Each node receives the current state as input and returns updates to that state.

- Edges: Define the path of execution between nodes. A standard

add_edgecreates a fixed, directed connection. The more powerfuladd_conditional_edgesuses a router function to dynamically determine the next node based on the current state.

n8n

In n8n, workflow modeling is visual and declarative. You build workflows using a drag-and-drop canvas UI, placing nodes and drawing connections between them to define the sequence of operations.

Each node performs a task; for example, call an API, run a code snippet, execute an AI prompt, etc., and the directed connections determine execution order and data flow.

n8n provides core nodes for control flow:

- IF Node: Splits the workflow into two distinct paths (true or false) based on a specified condition.

- Switch Node: Routes incoming data to one of several output branches based on the value of a field, which facilitates multi-path conditional logic.

- Merge Node: Combines data streams from different branches back into a single, unified path for subsequent processing.

Bottom line: LangGraph offers a programmatic graph DSL for workflow control, granting unrestricted complexity (at the cost of writing code).

n8n offers a user-friendly visual paradigm with a fixed set of control structures, ideal for quickly assembling workflows, although with some constraints on custom flow logic.

Feature 2. Multi-Agent Orchestration Patterns

Multi-agent orchestration defines how multiple AI agents collaborate, communicate, and coordinate to accomplish complex tasks that require diverse capabilities and expertise.

LangGraph

Multi-agent interactions are LangGraph’s bread and butter. Since LangGraph lets you define an arbitrary graph of LLM-powered nodes, you can explicitly model how multiple agents collaborate or compete within a single system.

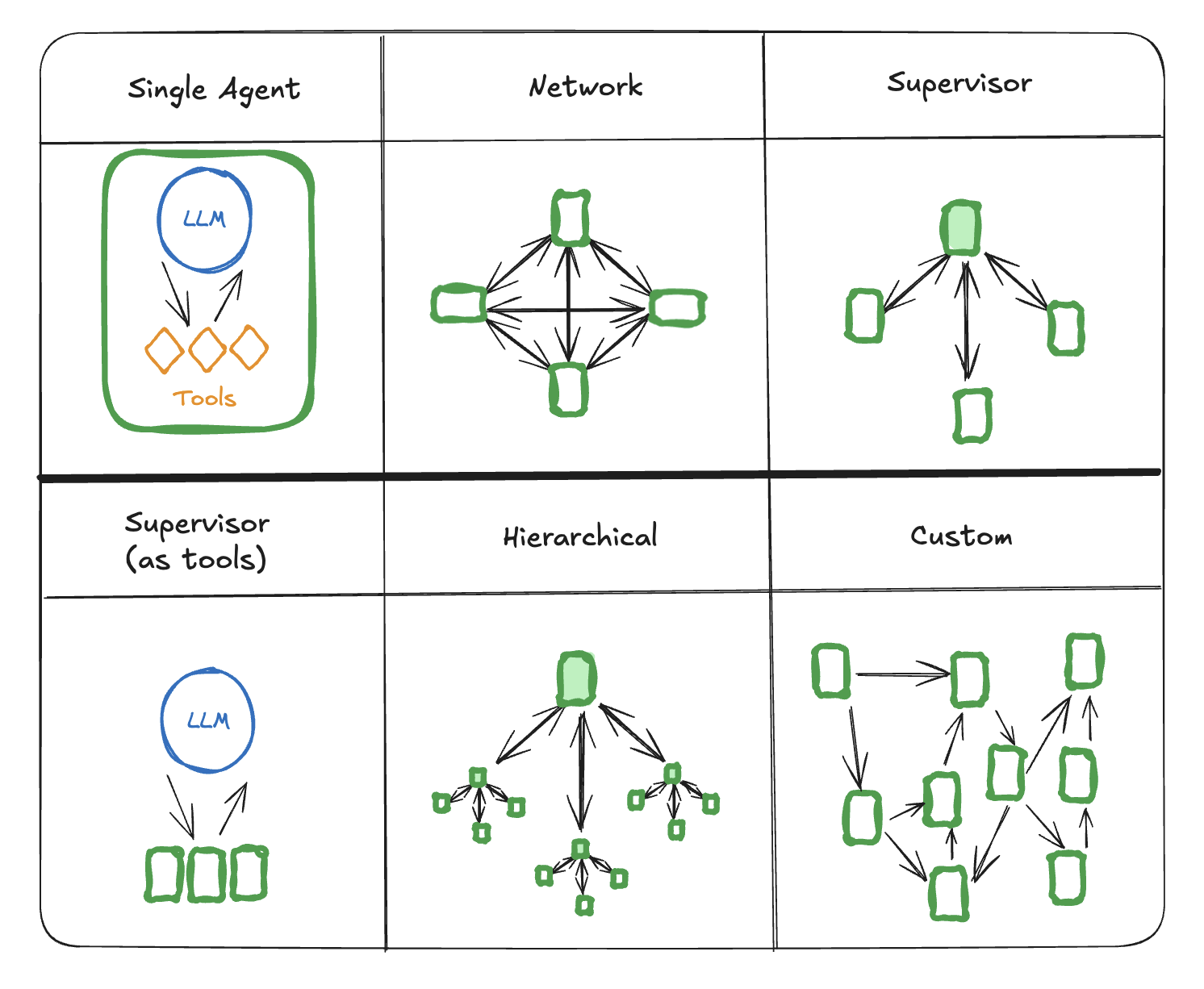

LangGraph has four architectures you can leverage for multi-agent orchestration:

- Network pattern: A set of peer agents connected through a router, passing messages along edges.

- Supervisor pattern: A supervisor agent oversees specialist agents and decides which one should act next. A variation uses tool‑calling models so the supervisor need not be an LLM itself.

- Hierarchical pattern: A top‑level agent decomposes a task into sub‑tasks, delegates to child agents, and integrates their outputs.

- Custom workflows: Developers can compose graphs arbitrarily, embedding sub‑graphs and external tools.

The handoff mechanism uses Command objects. When an agent finishes a step, it returns a Command specifying the next agent and any updated state.

This pattern decouples agents and lets you add new specializations without rewriting the orchestration logic.

The graph includes synchronous or asynchronous nodes, and lets you persist state to a database or vector store so that agents recover their context after a crash or across sessions.

n8n

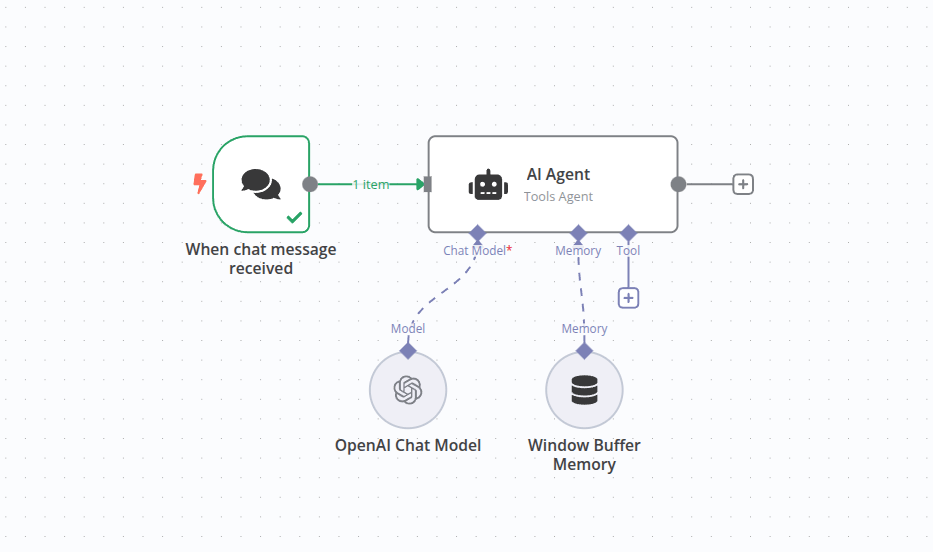

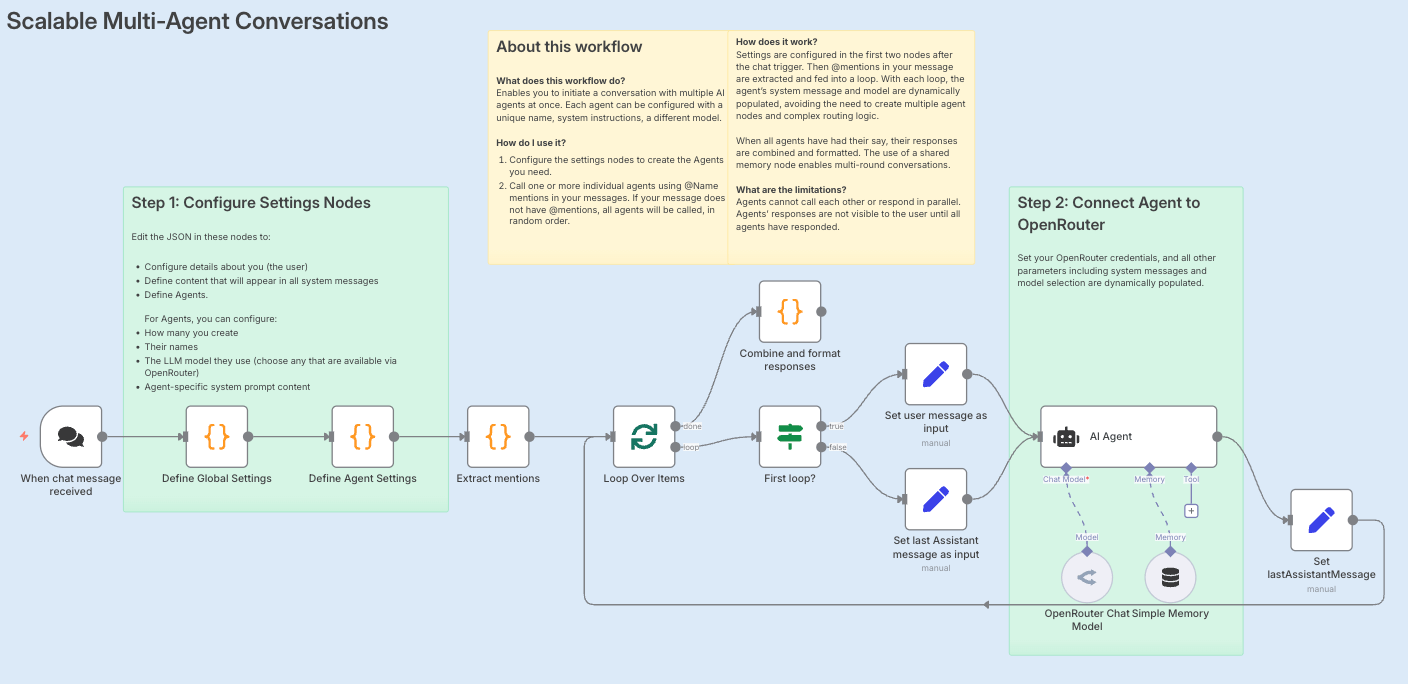

n8n approaches multi-agent orchestration by leveraging its integration with LangChain’s agent framework. Rather than you coding the coordination logic from scratch, n8n provides pre-built AI agent nodes that encapsulate common agent patterns.

For example, n8n has:

- LangChain Agent node: Execute a single-agent chain of thought with tools

- Tools Agent variant: An agent that is equipped with tool-use capabilities

- Plan and Execute Agent node: Implementing LangChain’s Plan-and-Execute multi-step agent paradigm.

So, orchestrating multiple agents in n8n often means chaining these agent nodes together or in parallel. n8n also has a concept of sub-workflows (via the Execute Workflow node), which you can use to spin off separate agent processes.

While n8n can’t match LangGraph’s unconstrained orchestration, it makes common patterns quite accessible.

Want two agents to talk to each other? You might create a loop in n8n where Agent A node runs, then Agent B node runs with A’s output, and repeat – essentially a simple conversation between two agents.

Want a tool-using agent? Use the Tools Agent node. For a planner/executor pair, use the Plan-and-Execute node.

Bottom line: LangGraph gives you full freedom to orchestrate agents in any pattern, but you must design the coordination logic.

n8n provides ready-made agent components that you can chain together, making multi-agent workflows easy to set up if they follow common paradigms.

If you want to build complex, highly tailored agent interactions, use LangGraph; if your goal is to get a quick multi-agent functionality with minimal coding, use n8n.

Feature 3: Short and Long-Term Memory

Memory management in agentic systems determines how agents retain context from previous interactions and accumulate knowledge over time.

LangGraph

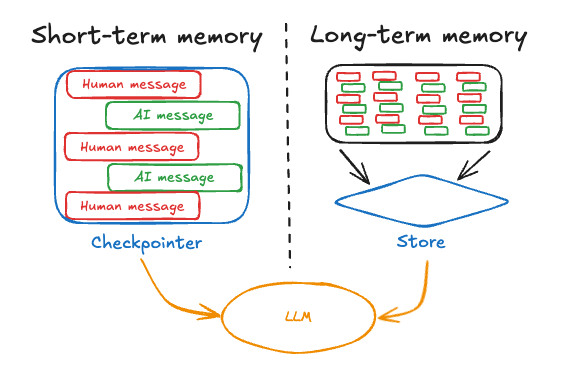

Memory is a first‑class concept in LangGraph. Each node can access and modify the graph’s state, and the runtime checkpoints this state after every execution.

This design makes agent workflows resumable and resilient to failures. LangGraph distinguishes between short‑term and long‑term memory:

- Short‑term memory is thread‑scoped: it stores the running conversation’s history and context. A

checkpointerpersists this memory so that agent workflows can be paused and resumed across sessions. - Long‑term memory includes semantic, episodic, and procedural memory types. Semantic memory stores facts about the user or domain and can be implemented via a vector database. Episodic memory captures experiences or episodes, useful for summarising past interactions. Procedural memory stores instructions or rules that help the agent decide how to act.

You can update memory synchronously on the main path or asynchronously in a background job. Because memory storage is abstracted, you have the option to plug in different databases or vector stores without changing the workflow code.

This flexibility makes LangGraph suitable for complex conversational agents that need persistent knowledge of users and tasks.

n8n

n8n treats memory primarily as chat history. The Simple Memory node persists conversation state by storing recent messages in the workflow.

First, set a session key and a context window length. In queue mode, later calls may reach a different worker because the memory sits in that worker’s process rather than in a shared database.

For more flexible storage, the Chat Memory Manager node provides operations to get, insert, or delete messages in an in‑memory vector store.

You can insert messages as user, system, or AI messages, override them, or remove them entirely. This is useful when you need to trim the conversation or inject context dynamically.

However, there is no built‑in concept of long‑term memory: to persist knowledge across sessions, you must connect n8n to an external database (e.g., via a database node or a vector‑store integration). n8n’s agent nodes do not automatically save their state between runs.

Bottom line: n8n’s memory capabilities are modular; you have to assemble them. Short-term memory is straightforward with the Simple Memory node. Long-term memory requires using static data or external storage.

Compared to LangGraph, n8n’s memory handling is less unified and not as deeply integrated into the execution engine. There’s no checkpoint/resume of an entire agent state mid-flow

Feature 4. Human-in-the-loop

Human-in-the-loop capabilities enable human oversight, validation, and intervention in automated agent workflows, which is critical for sensitive or high-stakes operations.

LangGraph

Real‑world agentic applications often require a human to supervise, approve, or correct an agent’s actions. LangGraph supports this through interrupts.

The LangChain team introduced an interrupt feature specifically to make human-in-the-loop interactions easier.

For example, an agent could raise an InterruptError when it needs clarification from a person, which the LangGraph runtime catches and uses to pause the agent.

A human can then inspect the agent’s state – all intermediate thoughts and variables are accessible – and even modify the state or correct an error, then signal the agent to continue.

Here are the actions a human can take:

- Approve/reject actions: Example - confirm an API call or email before sending it.

- Edit state: Allow a subject matter expert to correct a mistaken assumption in the agent’s context.

- Review tool calls: Inspect tool arguments before they are executed.

- Validate input: Ensure that the user‑submitted data meets constraints before continuing.

👀 Note: Because interrupts are built into the runtime, you don’t have to manage threads or manual pauses. The graph can be suspended and resumed across machines or time intervals, making it practical for long‑running, human‑supervised workflows.

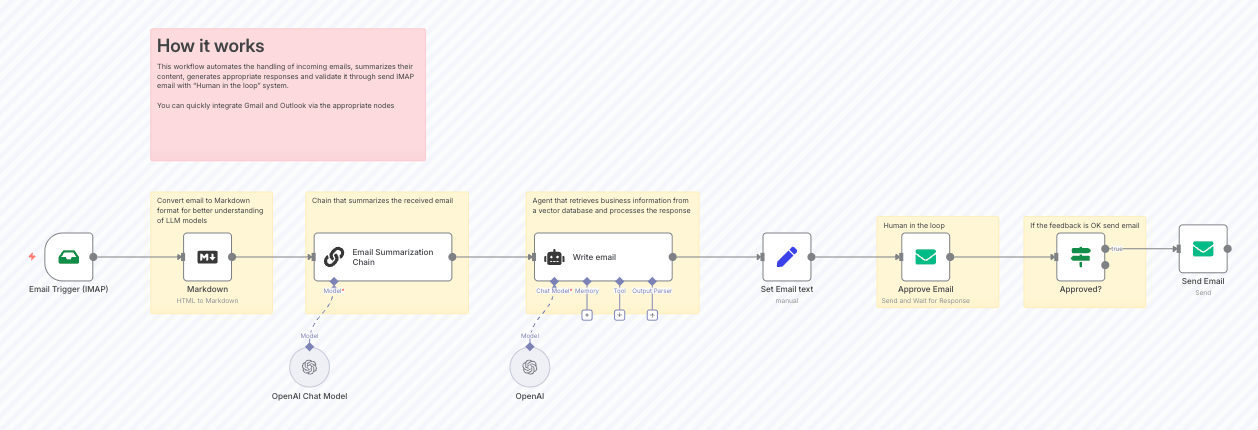

n8n

n8n brings humans into the loop with general‑purpose nodes instead of agent‑level interrupts. A Wait node pauses the workflow until a time you set, a webhook fires, or a form arrives.

When waiting for a webhook, n8n generates a unique resume URL, and you can configure authentication, HTTP method, response code, and timeouts.

The Form node lets you collect input from a user via a simple web form; combining a Form node with a Wait node implements human review loops.

One thing to note is that n8n’s waits are tied to its execution model: if you self-host n8n, you need to make sure the workflow is kept ‘active’ while waiting (n8n has an execution persistence so it can wait indefinitely as long as the process is running).

On n8n cloud, waiting workflows count against your active workflow quota but are fully supported. Essentially, waiting for hours or days for human input is fine in n8n. The workflow will just be in a paused state on the server.

Bottom line: LangGraph gives you built‑in, agent‑level interrupts for granular human checkpoints in long‑running, stateful workflows, while n8n relies on Wait and Form nodes for broader pause‑and‑resume steps, something that’s perfect for straightforward approval loops but without deep visibility into an agent’s internal state.

LangGraph and n8n in Late 2025: Production Maturity Arrives

The agentic AI landscape has shifted dramatically in the latter half of 2025, with both LangGraph and n8n achieving significant production maturity milestones.

LangGraph's Stable 1.0 Release

In October 2025, LangGraph reached version 1.0, becoming the first stable major release in the durable agent framework space. This milestone represents LangChain's commitment to production stability, with a promise of no breaking changes until version 2.0. The release came alongside LangChain 1.0, streamlining the entire ecosystem after three years of community feedback.

Recent additions to LangGraph include:

- Node-level caching: Cache individual node results to eliminate redundant computation and accelerate development cycles

- Deferred node execution: Postpone node execution until all upstream parallel paths complete, enabling more sophisticated flow control

- MCP endpoint support: Every deployed LangGraph agent now exposes its own Model Context Protocol endpoint, enabling seamless integration with any MCP-compatible client

- Direct state updates from tools: Tools can now directly modify graph state, providing unprecedented control over agent behavior

- LangGraph Supervisor library: A lightweight Python library simplifying hierarchical multi-agent system construction

- React integration hook: Single-hook integration for React applications with built-in thread and state management

The LangGraph Platform (renamed to "LangSmith Deployment" as of October 2025) reached general availability in May 2025, with nearly 400 companies deploying agents into production during the beta period.

n8n's Pricing Revolution and AI Enhancements

n8n fundamentally restructured its pricing model in August 2025, removing all limits on active workflows across every plan tier. The new execution-based pricing model charges based on workflow runs rather than individual steps or active workflows, dramatically simplifying cost forecasting for complex automations.

Key 2025 additions to n8n include:

- Evaluations for AI Workflows: A dedicated Evaluation node allows systematic testing of AI logic against datasets, with scoring capabilities using LLMs as judges

- Workflow organization features: Unlimited nested folders with drag-and-drop organization, plus workflow archiving for safe removal without permanent deletion

- Python task runner (beta): Secure Python sandbox built on isolated execution environments, replacing the previous Pyodide implementation

- Partial execution for AI tools: Test individual workflow segments without running the entire automation

- Auto-naming nodes: Automatically generate descriptive node names based on configuration

- Convert to sub-workflow: Extract workflow segments into reusable sub-workflows with a single click

Industry Context: Multi-Agent Adoption Accelerates

According to Gartner projections, 40% of enterprise workflows now include agentic AI components as of late 2025, up from negligible adoption just 18 months prior. Microsoft's May 2025 Build conference emphasized the "age of AI agents," introducing broad support for the Model Context Protocol across Azure AI Foundry, Semantic Kernel, and GitHub Copilot.

The trend toward multi-agent collaboration has intensified, with frameworks like LangGraph, CrewAI, and AutoGen enabling coordinated agent teams that negotiate, delegate, and orchestrate complex tasks end-to-end. Survey data indicates that 90% of respondents in non-tech companies either have deployed or are planning to deploy agents in production, with LangGraph capturing significant market share due to its maturity and comprehensive ecosystem.

Both LangGraph and n8n have positioned themselves as complementary tools in modern AI stacks: LangGraph for sophisticated agent orchestration with fine-grained control, and n8n for rapid deployment of AI-enhanced business automation with visual development. Teams increasingly use both frameworks together, leveraging n8n's integration capabilities to connect LangGraph agents into broader workflow ecosystems.

LangGraph vs n8n: Integration Capabilities

The ability to connect with other models, data sources, and tools is crucial for building real-world applications.

LangGraph

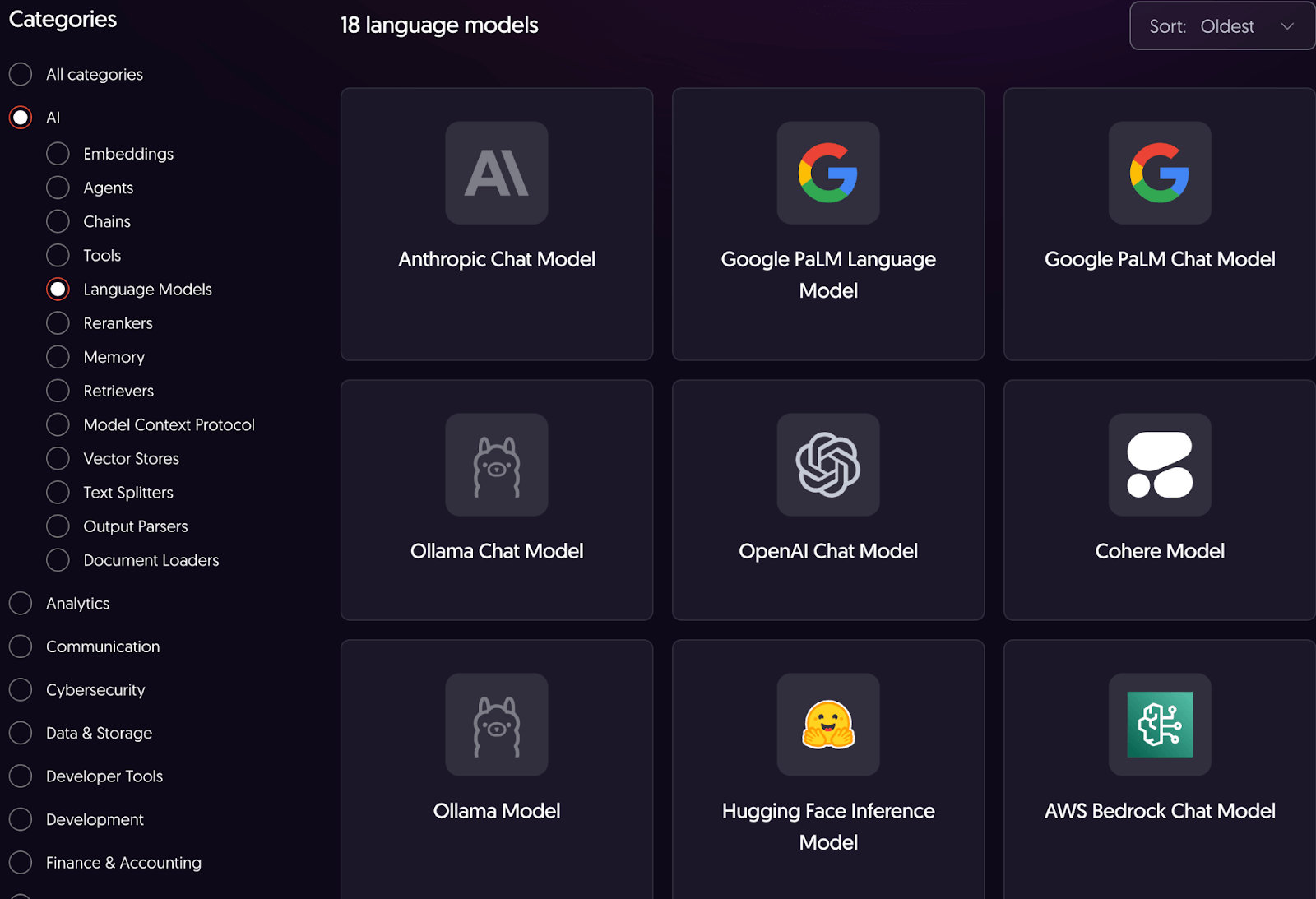

LangGraph’s strength lies in its deep, native integration with the broader LangChain ecosystem. This gives developers immediate access to:

- LangChain Components: The vast library of LangChain integrations for LLMs, document loaders, text splitters, vector stores, and tools can be used directly as nodes within a LangGraph graph.

- LangSmith: A best-in-class platform for observability, tracing, and debugging. Traces from LangGraph are automatically visualized in LangSmith, showing the step-by-step execution of the graph, which is invaluable for understanding and improving complex agent behavior.

- Extensibility: As a Python library, LangGraph can be integrated with any other Python package or API, offering limitless extensibility for developers.

n8n

n8n’s core value proposition is its massive library of over 500 pre-built node integrations, making it a central hub for business process automation.

- Breadth of Connectivity: These integrations cover everything from CRMs (Salesforce, HubSpot) and databases (Postgres, MySQL) to communication platforms (Slack, Discord) and marketing tools.

- LLMOps/MLOps Integrations: n8n provides robust integrations with all major LLM providers (OpenAI, Anthropic, Google Gemini), vector stores (Pinecone, Weaviate), and data loaders. The generic

HTTP Requestnode allows it to connect to any REST API, making it a viable component in a modern MLOps stack.

Bottom line: The integration philosophies of the two frameworks highlight their target audiences. LangGraph's ecosystem is centered on tools for the developer; components for building, debugging, and observing the agent itself.

n8n's ecosystem is centered on tools for the business; connectors for the SaaS applications that a company runs on.

LangGraph vs n8n: Pricing

Both frameworks offer free, open-source options alongside managed commercial services.

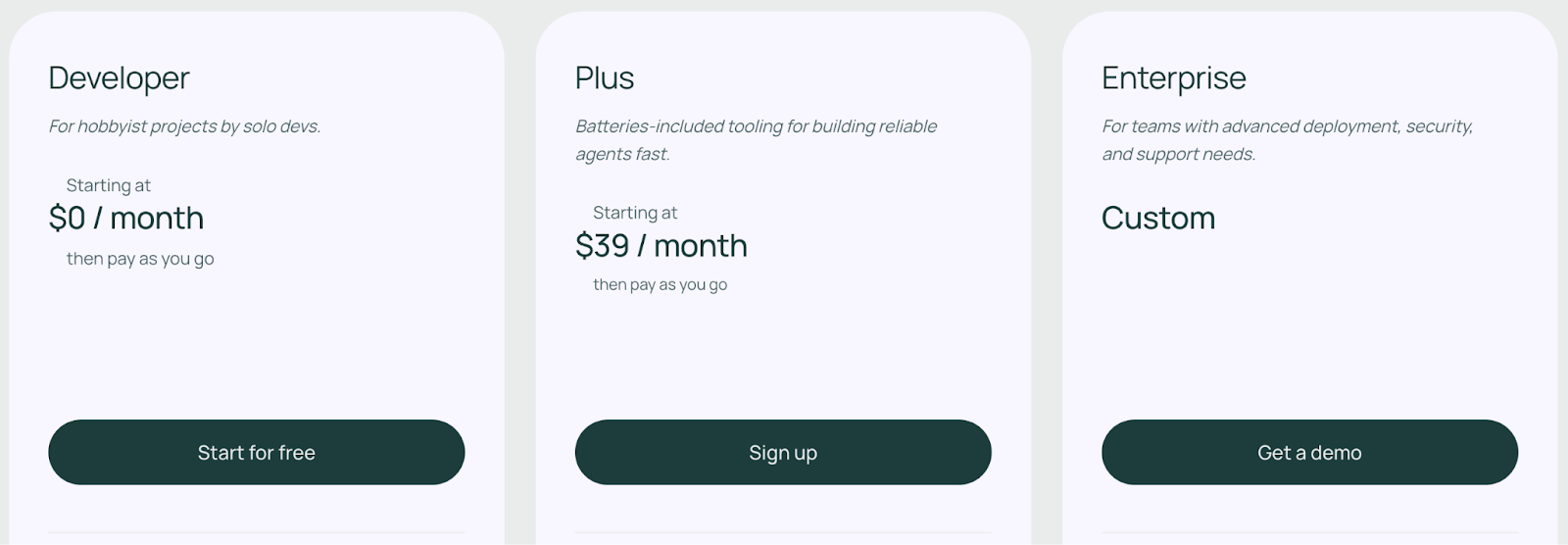

LangGraph

LangGraph employs a freemium model that separates the open-source library from its commercial platform.

The langgraph Python and JavaScript libraries are completely free (MIT license) and can be self-hosted without any usage limits from LangChain.

LangGraph Platform

This is the managed commercial offering with a tiered structure designed to scale with your needs and has three plans to choose from:

- Developer: Includes up to 100K nodes executed per month

- Plus: $0.001 per node executed + standby charges

- Enterprise: Custom-built plan tailored to your business needs

📚 Related article:LangGraph pricing guide

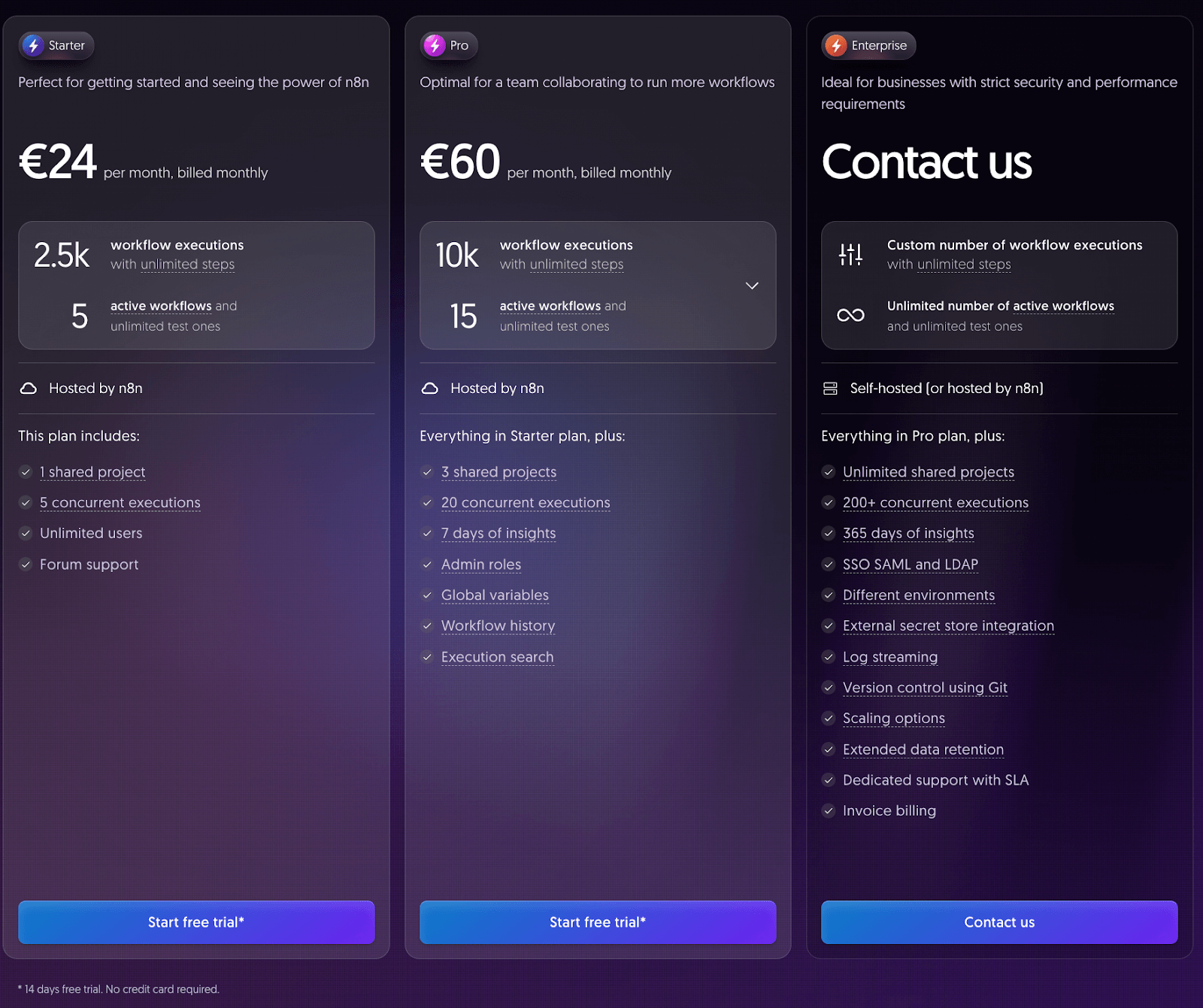

n8n

n8n offers three paid plans to choose from. Each plan comes with a 14-day free trial, no credit card required.

- Starter: €24 per month. 2.5k workflow executions and 5 active workflows.

- Pro: €60 per month. 10k workflow executions and 15 active workflows.

- Enterprise: Custom pricing. Custom number of workflow executions and infinite active workflows.

👀 Note: n8n also has a Community edition - a basic version of n8n that’s available on GitHub.

How ZenML Helps In Closing the Outer Loop Around Your Agents

Building a sophisticated agent is only part of the story. After an agent is deployed, you need to monitor its performance, manage data pipelines, retrain models, and integrate with the rest of your MLOps.

ZenML is an open‑source MLOps + LLMOps framework that focuses on this outer loop. While LangGraph and n8n orchestrate the inner loop of agent logic, ZenML handles the full lifecycle of your data and models.

Here’s how ZenML complements LangGraph and n8n:

- Pipeline management and reproducibility: ZenML lets you define data ingestion, processing, training, and evaluation pipelines as code. These pipelines are versioned and tracked, so they let you reproduce results and roll back to previous versions if something goes wrong. You can wrap a LangGraph graph or an n8n workflow inside a ZenML pipeline step to embed agentic behaviour into a larger ML workflow.

- Experiment tracking and observability: ZenML integrates with tools like MLflow, Weights & Biases, and ClearML for experiment tracking. It also supports custom visualisations and dashboards. When your agent uses LangGraph’s built-in tracing or n8n’s execution logs, ZenML can ingest those logs for cross‑pipeline observability, helping you compare different agent configurations.

- Deployment and serving: ZenML abstracts away infrastructure. You can deploy models and agents to Kubernetes, Vertex AI, or other platforms using uniform interfaces. For example, use LangGraph for an agent’s logic and then deploy it as a microservice via ZenML’s serving stack.

- Evaluation and continuous improvement: ZenML supports evaluation pipelines where you define metrics, evaluation datasets, and acceptance criteria. This is essential for agentic AI because LLM agents may drift or degrade over time.

- Visibility and governance: ZenML provides a central view of all your pipelines, models, and agents. It tracks lineage across data, code, and models, enabling teams to understand how an agent’s outputs were produced.

Because ZenML is composable, it doesn’t lock you into a single agent framework.

Updated for 2025: With LangGraph reaching 1.0 stability and n8n introducing unlimited workflows, production deployments of agentic AI have accelerated dramatically. ZenML's role becomes even more critical as organizations move beyond proof-of-concept to managing hundreds of agent deployments across development, staging, and production environments.

ZenML's pipeline abstraction works seamlessly with both LangGraph's stateful agents and n8n's workflow automation, providing a unified layer for:

- Version control and reproducibility across agent configurations, prompt templates, and workflow definitions

- A/B testing frameworks for comparing LangGraph agent architectures against n8n-based automation strategies

- Cost tracking and observability for execution-based pricing models (like n8n's new 2025 structure) and node-level optimization (like LangGraph's caching)

- Compliance and governance for agent actions, particularly important as enterprises deploy autonomous systems at scale

As the agentic AI market continues its projected doubling to $7.4 billion by end of 2025, having a production-grade MLOps platform like ZenML becomes essential for teams managing complex AI systems beyond simple chatbots.

You can experiment with LangGraph graphs and n8n workflows side by side, evaluate them with the same pipelines, and even combine them (e.g., using n8n to orchestrate data ingestion and LangGraph for the reasoning core).

ZenML thus bridges the gap between agent experimentation and production‑grade ML and LLM systems.

Common Questions About LangGraph vs n8n

Is LangGraph production-ready in 2025? Yes. LangGraph reached version 1.0 in October 2025, marking its first stable production release with a commitment to no breaking changes until 2.0. Nearly 400 companies deployed LangGraph agents during the platform's beta period, and it's now trusted by enterprises including Klarna, Replit, and Elastic for production workloads. The LangSmith Deployment platform (formerly LangGraph Platform) provides managed infrastructure, built-in observability, and one-click GitHub deployment for teams prioritizing operational simplicity.

How has n8n's pricing changed in 2025? n8n fundamentally restructured its pricing in August 2025, eliminating all limits on active workflows. All plans—from Starter to Enterprise—now include unlimited users, unlimited workflows, and unlimited steps. Pricing is based purely on execution volume, meaning you can build and test complex, data-heavy automations without worrying about hitting workflow or step quotas. This makes n8n particularly attractive for teams managing large numbers of automation scenarios.

Can LangGraph and n8n work together? Absolutely. Many teams use both frameworks in complementary ways: LangGraph for complex agent logic requiring stateful workflows and fine-grained control, and n8n for connecting those agents to business tools like Slack, databases, and external APIs. n8n can trigger LangGraph agents via HTTP requests, consume their outputs, and orchestrate multi-step processes that combine traditional automation with AI reasoning. This "best of both worlds" approach is increasingly common in production AI systems.

Which framework has better multi-agent support? LangGraph provides more sophisticated multi-agent orchestration with explicit support for network, supervisor, hierarchical, and custom workflow patterns. You can define complex agent interactions programmatically with full state management and memory persistence. n8n offers multi-agent capabilities through its LangChain agent nodes and workflow chaining, but the orchestration is less granular. If your primary requirement is coordinating multiple specialized agents with complex communication patterns, LangGraph is the stronger choice. For simpler multi-step AI workflows that primarily need to connect with business tools, n8n's approach may be sufficient.

What's changed with LangGraph's memory management? LangGraph expanded its memory capabilities significantly in late 2024 and early 2025, adding semantic search for long-term memory (finding relevant memories based on meaning rather than exact matches), cross-thread memory support allowing agents to share knowledge across conversations, and the LangMem SDK for structured long-term memory management. These enhancements position LangGraph as a leader in building agents that accumulate knowledge and improve over time, a critical requirement for production applications where agents need to remember user preferences, past interactions, and learned procedures.

Is n8n suitable for non-technical teams? Yes. n8n's visual workflow editor makes it accessible to business users and technical teams alike. The August 2025 feature additions—including auto-naming nodes, workflow folders, and improved evaluation tools—further reduce the learning curve. While n8n supports custom JavaScript code for advanced users, many workflows can be built entirely through the drag-and-drop interface using pre-built nodes. For teams without extensive Python experience but needing AI-enhanced automation, n8n provides a significantly lower barrier to entry than code-first frameworks like LangGraph.

What’s the Best Framework to Build Agentic AI?

The choice between LangGraph and n8n depends entirely on your project's goals, your team's expertise, and your requirements for control versus flexibility.

Updated Framework Selection Guide (November 2025)

✅ Choose LangGraph if:

- You need production-grade, stateful AI agents with complex decision-making logic

- Your team is comfortable writing Python or TypeScript code

- Fine-grained control over agent behavior, memory, and state transitions is critical

- You're building hierarchical or networked multi-agent systems

- Deep integration with the LangChain ecosystem (LangSmith, vector stores, retrievers) adds value

- Your use case requires persistent memory, semantic search, or long-running conversational agents

- You need built-in human-in-the-loop capabilities with interrupt-and-resume workflows

- New in 2025: You want to leverage Model Context Protocol endpoints for tool interoperability

- New in 2025: Node-level caching and deferred execution are valuable for your workflows

✅ Choose n8n if:

- Rapid development and visual workflow design are priorities

- Your team prefers low-code/no-code development with optional coding flexibility

- The primary goal is connecting AI agents to business tools (Slack, databases, CRMs, APIs)

- You need to automate processes across 400+ pre-built integrations

- Unlimited workflow creation without cost penalties matters to your use case

- Your organization prefers self-hosting for data privacy and security control

- New in 2025: You want systematic AI workflow evaluation with dedicated testing nodes

- New in 2025: Execution-based pricing with no limits on active workflows better fits your cost model

- New in 2025: Python sandbox capabilities for secure code execution within workflows are valuable

✅ Use both frameworks together when:

- LangGraph handles complex agent reasoning while n8n orchestrates business process integration

- You need to connect sophisticated AI logic to multiple external systems

- Different team members have varying technical skill levels (developers on LangGraph, operations on n8n)

- Your architecture benefits from separation between AI reasoning layers and system integration layers

- New in 2025: You're building production systems that require both MCP-compatible agents and traditional workflow automation

✅ Consider ZenML when:

- Moving from experimentation to production deployment requires reproducibility and versioning

- Multiple agent configurations need systematic evaluation and comparison

- Observability across both agent execution and traditional ML pipelines is required

- Your team needs unified tracking for experiments, deployments, and performance monitoring

- Governance, lineage tracking, and audit trails are compliance requirements

- You're managing the full lifecycle of AI systems beyond agent orchestration alone

📚 Relevant comparison articles to read:

🏆 Bonus: Use ZenML when you're ready to move any agentic application from experimentation to production. ZenML is essential when you need reproducibility, scalability, automated evaluation, and a unified platform to manage the entire lifecycle of your AI systems, regardless of the underlying framework.

ZenML's upcoming platform brings every ML and LLM workflow - data preparation, training, RAG indexing, agent orchestration, and more - into one place for you to run, track, and improve.

Enter your email address below to join the early-access waitlist. Be the first to build on a single, unified stack for reliable AI. 👇