Langfuse is a popular open-source observability tool for LLM applications, but it isn’t a one-size-fits-all framework.

As you move from proofs-of-concept to enterprise-grade systems, you’ll encounter architectural and governance constraints in Langfuse.

That is why teams look for Langfuse alternatives that support high-volume data ingestion, integrated operational layers, and unified orchestration across the broader Machine Learning Operations (MLOps) and Large Language Model Operations (LLMOps) lifecycle.

In this article, we briefly cover why you might seek a Langfuse alternative, what criteria to consider, and then dive into eight of the best alternatives.

TL;DR

- Why Look for Alternatives: Langfuse lacks an integrated 'AI gateway' layer. It handles logging and tracing after the fact, not live routing, caching, or rate-limiting of LLM calls. Self-hosting it at high volume also means operating and scaling its backing data stores yourself (Postgres plus ClickHouse in current versions), which can become a bottleneck.

- Who Should Care: ML engineers and LLMOps teams running production apps that need secure, compliant, or self-hosted solutions capable of handling high volumes of LLM traffic.

- What to Expect: A comparison of 8 top Langfuse alternatives, from open-source options like ZenML and Arize Phoenix to managed platforms like LangSmith and HoneyHive, covering features, pricing, pros, and cons.

Why Look for a Langfuse Alternative?

Langfuse may have jump-started your LLM observability, but as your application matures, your architectural or organizational needs can shift.

Teams often seek alternatives when they require active traffic management, stricter compliance with existing telemetry standards, or more predictable cost models at scale. Here are the three main reasons teams migrate away from Langfuse:

1. Requirement for a Single Control Plane (Gateway + Guardrails)

Some engineering teams expect a single "box" that actively brokers traffic: handling routing, failover, caching, quotas, and guardrails, while simultaneously providing observability.

Langfuse is intentionally designed as an observability, evaluation, and prompt management tool, not a runtime gateway. It excels at analyzing data after the fact but isn't built to be the active proxy governing your live traffic.

- The Driver: Teams often need multi-provider failover, traffic shaping, and runtime policy enforcement in one unified layer.

- The Reality: If you need a control plane at the edge, you are looking for a "true gateway" (like Portkey or Helicone) or a unified platform that includes gateway capabilities, rather than just a passive observer.
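To make the gateway-versus-observer distinction concrete, here is a minimal Python sketch of two jobs a gateway performs on the live request path: response caching and ordered failover across providers. The `MiniGateway` class and the provider functions are hypothetical illustrations; real gateways such as Portkey or Helicone have their own configuration and APIs.

```python
import time
from typing import Callable, Dict, List, Tuple

# Hypothetical provider callable: takes a prompt, returns a completion,
# or raises on failure (timeout, rate limit, outage).
Provider = Callable[[str], str]


class MiniGateway:
    """Toy gateway: exact-match response cache plus ordered provider failover."""

    def __init__(self, providers: List[Tuple[str, Provider]], cache_ttl_s: float = 300.0):
        self.providers = providers          # tried in order until one succeeds
        self.cache_ttl_s = cache_ttl_s
        self._cache: Dict[str, Tuple[float, str]] = {}
        self.log: List[dict] = []           # what a passive observability tool would see

    def complete(self, prompt: str) -> str:
        now = time.monotonic()
        hit = self._cache.get(prompt)
        if hit is not None and now - hit[0] < self.cache_ttl_s:
            self.log.append({"provider": "cache", "ok": True})
            return hit[1]

        for name, call in self.providers:
            try:
                result = call(prompt)
            except Exception as exc:  # any provider error triggers failover
                self.log.append({"provider": name, "ok": False, "error": repr(exc)})
                continue
            self.log.append({"provider": name, "ok": True})
            self._cache[prompt] = (now, result)
            return result
        raise RuntimeError("all providers failed")


def flaky_primary(prompt: str) -> str:
    raise TimeoutError("primary provider timed out")  # simulated outage


def backup(prompt: str) -> str:
    return f"backup answer to: {prompt}"


gw = MiniGateway([("primary", flaky_primary), ("backup", backup)])
print(gw.complete("hello"))  # primary fails, backup answers
print(gw.complete("hello"))  # identical prompt is served from the cache
print([entry["provider"] for entry in gw.log])  # ['primary', 'backup', 'cache']
```

An observability tool only sees the `log` entries after the fact; a gateway owns the decisions that produced them.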

2. Standardization on OpenTelemetry (OTel)

Organizations with a mature, company-wide telemetry stack (using tools like Jaeger, Tempo, or Grafana) often prefer a single tracing model for everything: APIs, ETL pipelines, search, and LLMs.

For these teams, introducing a separate, domain-specific UI like Langfuse for LLMs creates a fragmented workflow.

Such teams often mandate "All tracing via OTel" to ensure LLM spans can be correlated with non-LLM microservices in the same dashboards.

While Langfuse can ingest OTel spans, teams prioritizing a ‘single pane of glass’ often prefer sending LLM traces directly to their existing OTel backends to maintain a unified view of their entire system infrastructure.

3. Cost Predictability at High Volume

As production traffic scales, usage-metered cloud pricing can become unpredictable. High queries per second (QPS) or massive token counts lead to variable monthly bills that are difficult for FinOps teams to forecast.

CFOs and budget holders often require strict budget caps or predictable, fixed-cost line items rather than variable spending based on traffic spikes.

While metered pricing in Langfuse is transparent, high-volume applications often drive teams toward self-hosted solutions (leveraging fixed infrastructure costs) or enterprise plans with flat-rate pricing to ensure the Total Cost of Ownership (TCO) remains within a fixed "budget envelope".
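The budgeting argument comes down to simple arithmetic. The sketch below compares a metered bill (base fee plus linear overage) with a flat monthly cost and computes the break-even volume. Every price in it is a placeholder assumption, not any vendor's actual rate.

```python
import math

# All prices below are placeholder assumptions, not any vendor's real rates.
BASE_FEE = 59.0            # monthly platform fee on the metered plan ($)
INCLUDED_UNITS = 100_000   # traced units (e.g. events or spans) included in the base fee
PRICE_PER_1K = 8.0         # overage price per 1,000 units ($)
FLAT_FEE = 2_000.0         # fixed monthly cost of a flat-rate plan or self-hosted infra ($)


def metered_cost(units: int) -> float:
    """Monthly bill under usage-based pricing: base fee plus linear overage."""
    overage = max(0, units - INCLUDED_UNITS)
    return BASE_FEE + overage * PRICE_PER_1K / 1_000


def break_even_units() -> int:
    """Monthly volume above which the flat option becomes the cheaper one."""
    return math.ceil(INCLUDED_UNITS + (FLAT_FEE - BASE_FEE) * 1_000 / PRICE_PER_1K)


for units in (200_000, 1_000_000, 5_000_000):
    print(f"{units:>9,} units: metered ${metered_cost(units):>10,.2f} | flat ${FLAT_FEE:,.2f}")
print(f"break-even: ~{break_even_units():,} units/month")
```

The exact numbers matter less than the shape: the metered bill grows linearly with traffic, while the flat cost does not, which is what makes the latter easier to fit into a fixed budget envelope.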

Evaluation Criteria

When evaluating Langfuse alternatives, we prioritized the following criteria:

- Deployment and Data Residency: Can you self-host or run the tool on-premises? Does it accommodate your data governance needs? Tools that offer open-source editions or flexible hosting got bonus points.

- Security, Compliance, and Privacy: Enterprise teams require SOC 2 compliance, encryption, and role-based access control. We looked at whether each platform supports SSO/SAML, audit logs, and isolation of sensitive data.

- Instrumentation and Integrations: How easily does the tool integrate with your LLM stack? We checked for OpenTelemetry support, SDKs in multiple languages, and native integrations with frameworks like LangChain or LlamaIndex. Minimal code changes for logging are a plus.

- Data Model and Queryability: Does the platform simply store unstructured logs, or does it provide a queryable store for traces and prompt metadata? We favored tools that make it easy to search, filter traces, and support advanced analytics or custom dashboards on top of the data.

With these criteria in mind, let’s examine the top Langfuse alternatives for LLM observability.

What are the Top Alternatives to Langfuse?

Here’s a quick table comparing the best Langfuse alternatives:

1. ZenML

Best for: Teams that want a unified, open-source MLOps + LLMOps platform with governance, lineage, and evaluation baked into reproducible pipelines, so observability isn’t a sidecar but part of the system.

ZenML is an open-source AI platform that spans pipelines → deployment → agents. Instead of adding tracing after the fact, ZenML treats observability as metadata flowing through versioned pipelines: every run, artifact, and prompt/dataset can be tracked, compared, and gated with human approvals and alerts.

You can self-host the OSS core or use ZenML Pro for a managed control plane with RBAC/SSO and compliance.

Features

- End-to-end lineage and run tracking across pipelines, artifacts, and models to debug regressions and tie outputs back to exact inputs/config (a practical alternative to per-call tracing UIs).

- LLM/RAG evaluation workflows with guides and building blocks for retrieval and generation metrics; integrate evals into pipelines so quality gates run before promotion.

- Centralized model control plane for governance: version control, approval workflows, and audit trails around model moves between dev/stage/prod.

- Alerting and human-in-the-loop approvals (Slack/Discord alerters) to notify on failures, request deploy approvals, or block promotions until reviewers approve.

- Open and pluggable stack (experiment trackers, orchestrators, vector DBs, agent frameworks) so you can capture metadata from the whole LLM stack without vendor lock-in.
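The "quality gates run before promotion" idea is framework-agnostic. Below is a plain-Python sketch of the gating logic only, not ZenML's API; in a ZenML project, logic like this could sit inside a pipeline step, with the scores logged as metadata. The metric names and thresholds are illustrative assumptions.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class GateResult:
    promote: bool
    scores: Dict[str, float]
    failures: List[str]


# Illustrative thresholds; tune these to your own eval suite.
THRESHOLDS = {"answer_relevance": 0.80, "faithfulness": 0.85}


def quality_gate(per_example_scores: List[Dict[str, float]]) -> GateResult:
    """Average each metric over the eval set and block promotion if any falls short."""
    scores = {m: mean(row[m] for row in per_example_scores) for m in THRESHOLDS}
    failures = [m for m, threshold in THRESHOLDS.items() if scores[m] < threshold]
    return GateResult(promote=not failures, scores=scores, failures=failures)


# Hypothetical per-example scores for a candidate model or prompt version.
candidate_eval = [
    {"answer_relevance": 0.9, "faithfulness": 0.95},
    {"answer_relevance": 0.6, "faithfulness": 0.85},
]
result = quality_gate(candidate_eval)
print(result.promote, result.failures)  # False ['answer_relevance']
```

Returning the failing metrics, not just a boolean, is what lets an alerter or a human reviewer see why a promotion was blocked.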

Pricing

ZenML is free and open-source under the Apache 2.0 license. The core framework and dashboard are fully available without cost.

For teams needing enterprise-grade collaboration, managed hosting, and premium support, ZenML offers custom business plans. These are typically usage- or seat-based and are tailored depending on your deployment model (cloud or on-prem).

Pros and Cons

ZenML’s strength is that observability, evaluation, and deployment live in one pipeline graph, improving reproducibility and auditability versus a standalone tracer. Teams get governance-ready features (RBAC/SSO and compliance on Pro) and practical human-in-the-loop controls via alerters, while keeping flexibility through broad integrations to avoid vendor lock-in.

The trade-off is that ZenML isn’t a drop-in, per-request tracing UI like Langfuse; if you want a call-timeline view out of the box, you either need to model it via pipeline lineage/evals or pair ZenML with a dedicated tracer.

2. LangSmith

LangSmith is a managed SaaS by the LangChain team, purpose-built for debugging and monitoring LLM apps built with LangChain or LangGraph. If you’re already in the LangChain ecosystem, LangSmith provides deep integration and lets you trace each step of an agent’s reasoning.

Features

- Log every LLM call and visualize nested chains with token usage, latency, and intermediate outputs to pinpoint failures.

- Test prompts instantly in the playground and track live metrics like latency, cost, and errors with real-time alerts in custom dashboards.

- Run automated evaluations, both LLM-as-judge and custom scorers over curated datasets, to score response quality, detect regressions, and monitor overall app performance.

- Integrate with LangChain or OpenTelemetry to centralize logs across multiple frameworks with minimal setup.

- Collaborate through shared trace links and in-app comments; self-host via enterprise Kubernetes deployment for full data control.

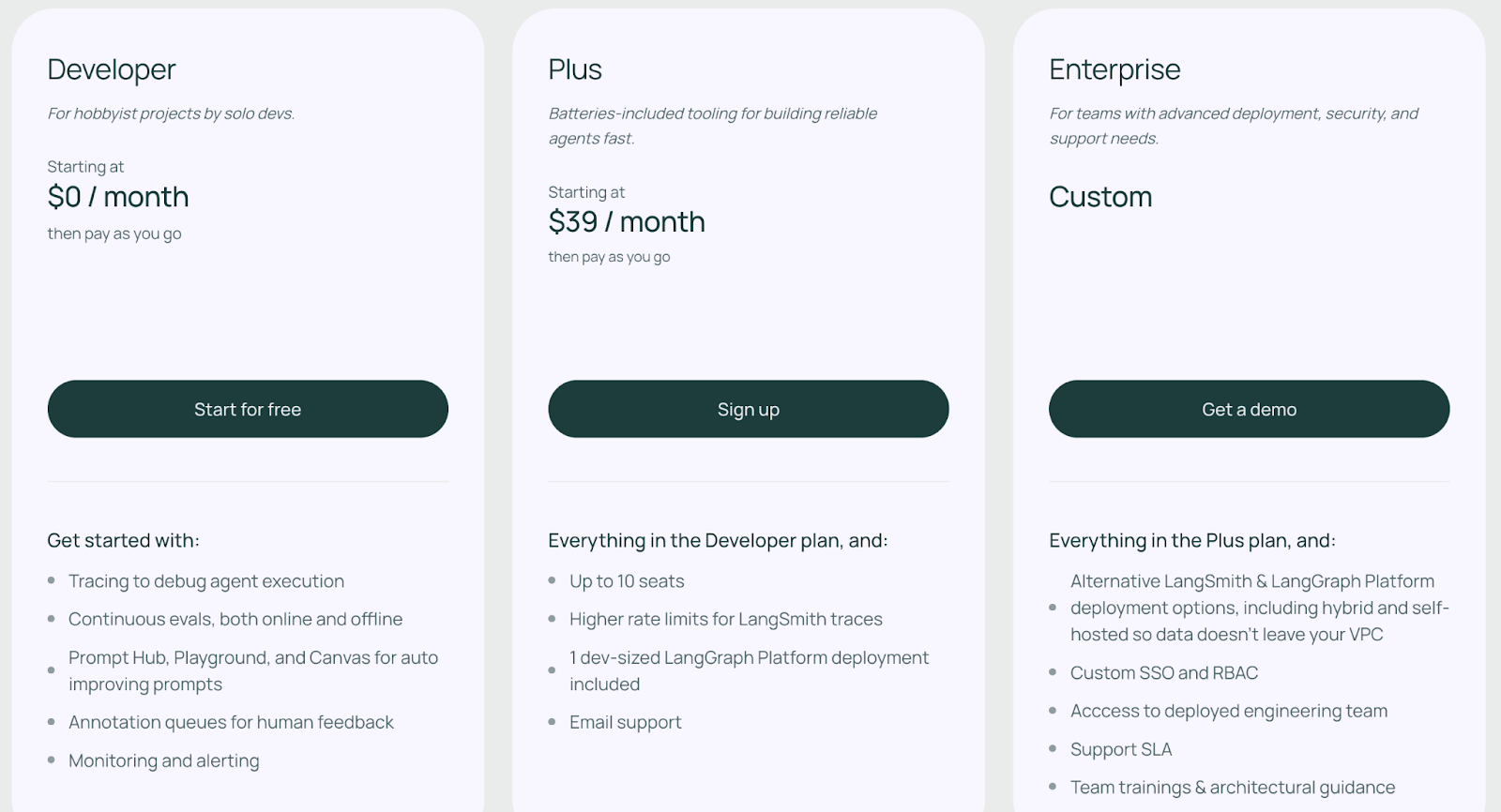
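What "visualize nested chains" means under the hood is a tree of timed spans. The sketch below records such a tree with a decorator and Python's `contextvars`; it is a generic illustration, not the LangSmith SDK. The `retrieve`, `generate`, and `rag_chain` functions are hypothetical stand-ins for real chain steps.

```python
import contextvars
import time
from functools import wraps
from typing import Any, Dict, List

# Holds the span currently open in this execution context (None at the top level).
_current_span = contextvars.ContextVar("current_span", default=None)
ROOT_SPANS: List[Dict[str, Any]] = []


def traced(fn):
    """Record a span (name, inputs, output, latency, children) for every call to fn."""
    @wraps(fn)
    def wrapper(*args, **kwargs):
        span: Dict[str, Any] = {"name": fn.__name__, "inputs": args, "children": []}
        parent = _current_span.get()
        (parent["children"] if parent is not None else ROOT_SPANS).append(span)
        token = _current_span.set(span)  # nested calls now attach to this span
        start = time.perf_counter()
        try:
            span["output"] = fn(*args, **kwargs)
            return span["output"]
        finally:
            span["latency_ms"] = (time.perf_counter() - start) * 1000
            _current_span.reset(token)
    return wrapper


@traced
def retrieve(query: str) -> List[str]:
    return [f"doc about {query}"]


@traced
def generate(query: str, docs: List[str]) -> str:
    return f"answer to {query!r} using {len(docs)} doc(s)"


@traced
def rag_chain(query: str) -> str:
    return generate(query, retrieve(query))


def print_tree(span: Dict[str, Any], depth: int = 0) -> None:
    print("  " * depth + f"{span['name']} ({span['latency_ms']:.2f} ms)")
    for child in span["children"]:
        print_tree(child, depth + 1)


rag_chain("pricing")
print_tree(ROOT_SPANS[0])  # rag_chain at the root, retrieve and generate as children
```

A hosted tracer adds token counts, persistence, and a UI on top, but the parent/child bookkeeping follows this pattern.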

Pricing

LangSmith has a free developer plan that typically includes 1 developer seat and up to 5,000 traces per month. Beyond that, it has two paid plans:

- Plus: $39 per seat per month

- Enterprise: Custom pricing

Pros and Cons

LangSmith’s biggest strength is its deep LangChain integration. It makes debugging intuitive for LangChain or LangGraph apps. Its combined observability and evaluation tools simplify quality tracking, offering clear dashboards, metrics, and insights in one place.

Its major drawbacks stem from its closed-source nature and a usage-based cost structure that can be unpredictable. Using its SaaS means storing prompts and responses on LangChain’s servers, which raises privacy concerns for regulated teams.

📚 Also read: Langfuse vs LangSmith

3. HoneyHive

HoneyHive is a proprietary, full-lifecycle platform for LLM development. Think of it as a modern AI observability platform that emphasizes both monitoring and evaluation.

Features

- Integrate instantly using its OpenTelemetry-based SDK to log prompts, model responses, and tool calls without vendor lock-in.

- Monitor LLM metrics in real-time dashboards with filters for latency, token cost, and request volume by model or user segment.

- Capture user feedback and run automated evaluators to detect PII leaks, schema errors, or factual issues as responses stream in.

- Curate datasets directly from production logs by collecting, labeling, and converting edge cases into eval or fine-tuning sets.

- Connect with LangChain, RAG pipelines, and vector stores like Pinecone to trace every component of your LLM workflow.
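Automated evaluators of the kind listed above are functions that take a response and return a pass/fail result with details. The sketch below uses two deliberately simple heuristics, a regex-based PII check and a required-keys JSON schema check. It is not HoneyHive's evaluator API, and the response schema (`answer`, `sources`) is a hypothetical example.

```python
import json
import re
from typing import Dict, List

# Deliberately simple patterns; production PII detection needs far more than regexes.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")
REQUIRED_KEYS = {"answer", "sources"}  # hypothetical response schema


def eval_pii(text: str) -> Dict[str, object]:
    hits = EMAIL_RE.findall(text) + PHONE_RE.findall(text)
    return {"metric": "pii_leak", "passed": not hits, "details": hits}


def eval_schema(text: str) -> Dict[str, object]:
    try:
        payload = json.loads(text)
    except json.JSONDecodeError as exc:
        return {"metric": "schema", "passed": False, "details": f"invalid JSON: {exc.msg}"}
    if not isinstance(payload, dict):
        return {"metric": "schema", "passed": False, "details": "expected a JSON object"}
    missing = sorted(REQUIRED_KEYS - payload.keys())
    return {"metric": "schema", "passed": not missing, "details": missing}


def run_evaluators(response: str) -> List[Dict[str, object]]:
    """Score one logged response with every evaluator."""
    return [eval_pii(response), eval_schema(response)]


good = '{"answer": "Refunds take 5 days.", "sources": ["policy.md"]}'
bad = '{"answer": "Email jane.doe@example.com or call 555-123-4567."}'
for response in (good, bad):
    print([(res["metric"], res["passed"]) for res in run_evaluators(response)])
```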

Pricing

HoneyHive offers a generous free Developer tier capped at 10,000 events/month and 30-day retention, with core observability features. The Enterprise plan includes optional on-prem deployment for regulated teams.

Pros and Cons

HoneyHive’s agent-centric design and focus on the feedback loop between development and production make it highly effective for teams building sophisticated agentic systems. Its OTLP compatibility ensures flexibility across frameworks.

The limitation is that it remains primarily a proprietary SaaS platform, with self-hosting and the most important governance features restricted to custom-priced Enterprise tiers.

4. Braintrust

Braintrust is a proprietary LLM engineering platform focused on observability, evaluation, and analysis at scale. Its core is Brainstore, a database purpose-built for AI workloads that the company reports as ~80× faster on real-world benchmarks, enabling sub-second queries across terabytes of traces.

Features

- Request-level tracing with spans and sub-spans (inputs/outputs, metadata, metrics, scores) for online logs and offline eval runs.

- Fast trace exploration and diffing: search/filter millions of spans, view trees, bulk-select to datasets, and diff traces across experiments for A/B comparisons.

- Autoevals library with LLM-as-judge, heuristic, and statistical metrics; supports custom scorers and RAG-style checks.

- Datasets and experiments workflow: log production traffic or curated sets, run evaluations, compare experiment results, and promote winners.

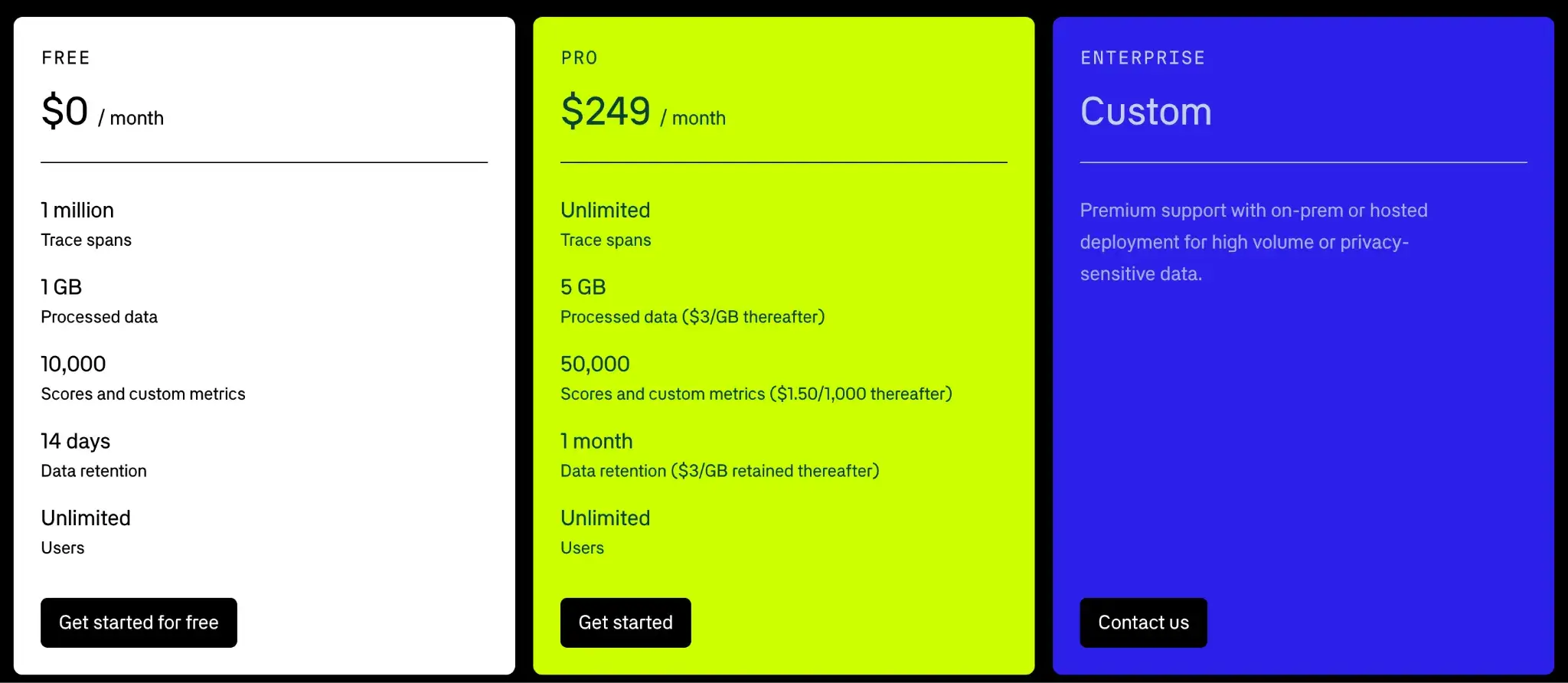

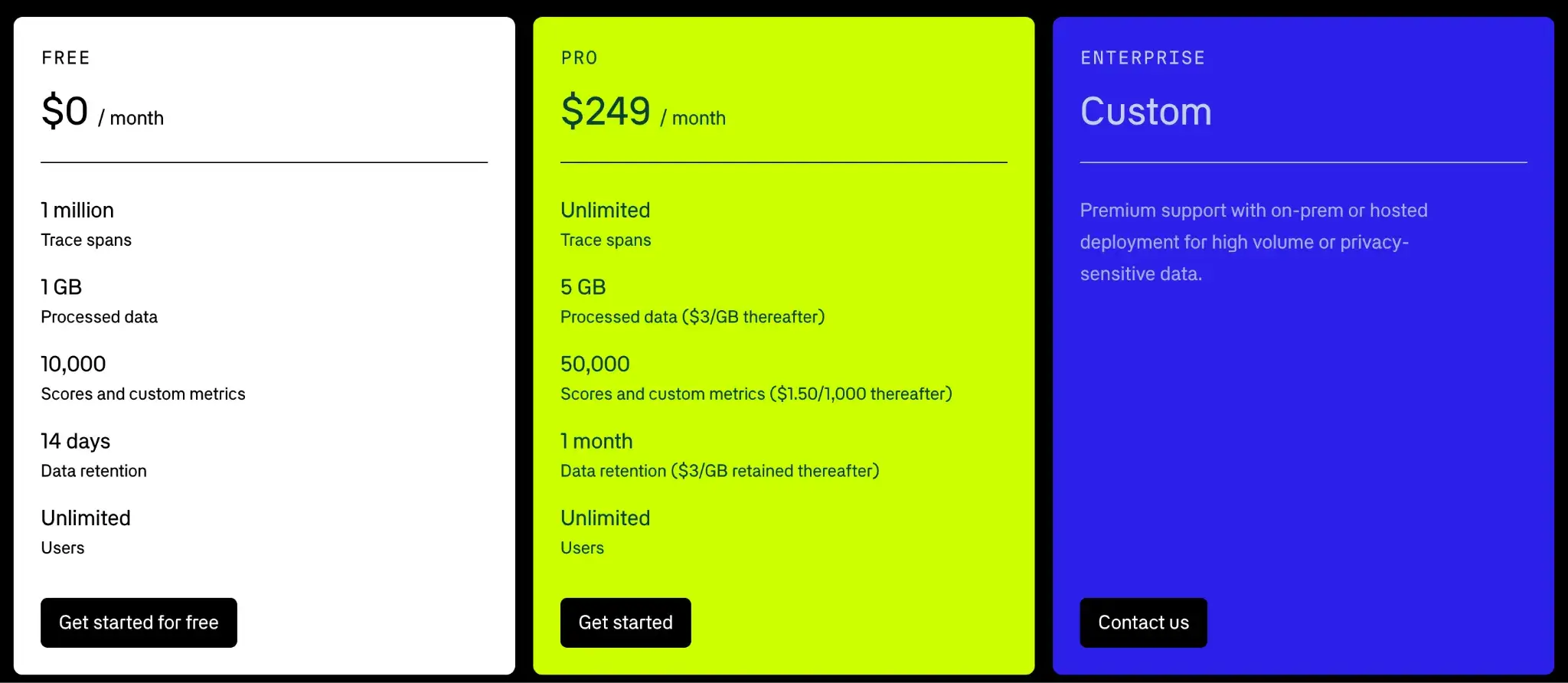
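Diffing experiments for A/B comparisons boils down to joining two runs on a shared example ID and comparing scores. The sketch below assumes each experiment is a mapping from example ID to a score in [0, 1] and uses an arbitrary tolerance of 0.05; it does not use Braintrust's SDK.

```python
from typing import Dict, List, Tuple


def diff_experiments(
    baseline: Dict[str, float], candidate: Dict[str, float], tolerance: float = 0.05
) -> Dict[str, List[Tuple[str, float]]]:
    """Classify each example present in both runs as improved, regressed, or unchanged."""
    report: Dict[str, List[Tuple[str, float]]] = {"improved": [], "regressed": [], "unchanged": []}
    for example_id in sorted(baseline.keys() & candidate.keys()):
        delta = candidate[example_id] - baseline[example_id]
        if delta > tolerance:
            report["improved"].append((example_id, delta))
        elif delta < -tolerance:
            report["regressed"].append((example_id, delta))
        else:
            report["unchanged"].append((example_id, delta))
    return report


baseline = {"q1": 0.60, "q2": 0.90, "q3": 0.75}
candidate = {"q1": 0.85, "q2": 0.70, "q3": 0.76, "q4": 0.50}  # q4 only exists in candidate, so it is skipped
report = diff_experiments(baseline, candidate)
for bucket, items in report.items():
    print(bucket, [(eid, round(delta, 2)) for eid, delta in items])
```

Looking at per-example regressions, rather than only the aggregate score, is what catches a candidate that wins on average but breaks specific cases.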

Pricing

Braintrust comes with a free plan that gives you 1 million spans, 1 GB of processed data, 10,000 scores and custom metrics, and 14 days of data retention.

If you need more, it offers two paid plans:

- Pro: $249 per month

- Enterprise: Custom pricing

Pros and Cons

Braintrust's primary strength is a systematic and quantifiable approach to evaluation. Its Brainstore backend enables instant search and analysis across millions of logs. The eval-first design and hybrid self-hosted mode offer both speed and compliance, appealing to mature, data-heavy teams.

The core drawback is Braintrust’s pricing structure. Its premium price may deter smaller teams, and the pay-per-use model for evaluation scores becomes expensive as testing frequency and evaluation datasets grow. Furthermore, self-hosting is unavailable outside the Enterprise tier.

5. Arize Phoenix

Phoenix (by Arize AI) is an open-source observability tool designed for local-first use. It’s essentially a Python library + web app that you run in a notebook or cloud instance to visualize traces, embeddings, and evaluation metrics for your LLM app.

Features

- Visualize all LLM calls through a self-hosted tracing UI that runs securely within your environment or notebook.

- Inspect model behavior interactively in Jupyter with embedding clusters, similarity plots, and retrieval coverage maps.

- Evaluate outputs using built-in LLM-based evaluators for faithfulness, accuracy, and toxicity, or plug in open libraries like RAGAS.

- Integrate easily via OpenTelemetry to capture traces from LangChain, LangGraph, or any custom pipeline without lock-in.

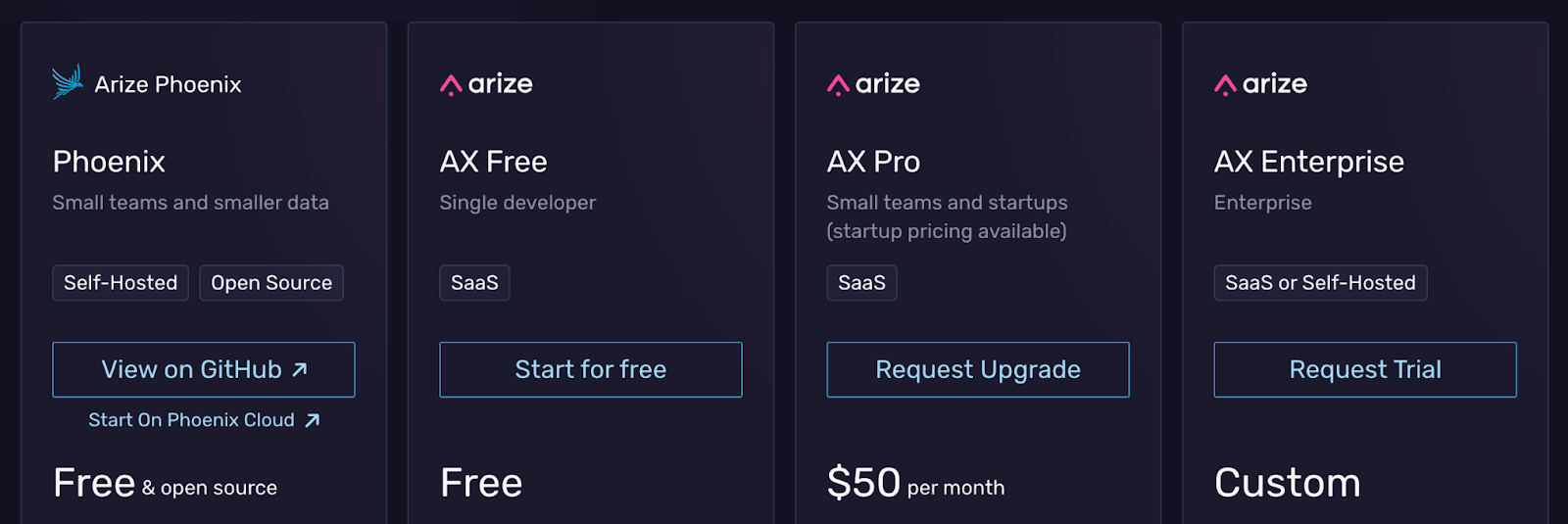

Pricing

Arize Phoenix is completely open-source and free to use as a standalone library. While designed for local use, it can integrate with the hosted ‘Arize AX platform’, which offers the following plans:

- Arize AX Free: Free

- AX Pro: $50 per month

- AX Enterprise: Custom pricing

Pros and Cons

Arize Phoenix stands out for being fully open-source, free, and privacy-first. Its local-first design lets teams trace and evaluate LLM behavior entirely within their own environment, and its OpenTelemetry-based instrumentation feeds embedded visualizations that help debug retrieval and model issues.

However, Phoenix requires manual setup and maintenance, unlike plug-and-play SaaS tools. You must host the UI, manage data storage, and configure scaling if handling large volumes. Collaboration features are limited unless you upgrade to Arize’s managed cloud, which adds cost and operational complexity.

6. Galileo

Galileo is an enterprise-grade LLM observability and governance platform. It’s the best Langfuse alternative if you need strong security and collaboration features while monitoring complex LLM agent systems. It provides prompt and model evaluation, agent monitoring, and guardrail enforcement in a single product.

Features

- Track every agent step and tool call to make complex LLM workflows transparent and fully debuggable.

- Leverage proprietary evaluation models like Luna-2 to score relevance, safety, and factual accuracy.

- Enforce real-time guardrails that detect and block unsafe, PII-rich, or toxic outputs before they reach users.

- Secure sensitive data with SOC 2 compliance, advanced RBAC, and full audit trails across on-prem or cloud deployments.

- Collaborate through shared dashboards, annotations, and reports that connect LLM metrics with existing BI tools.
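The difference between a runtime guardrail and post-hoc evaluation is where the check sits: on the response path, before the user sees anything. The sketch below blocks responses containing hypothetical policy terms and redacts email addresses otherwise. The blocklist, patterns, and `guarded_reply` wrapper are illustrative assumptions, not Galileo's API or its Luna models.

```python
import re
from typing import Callable, List, Tuple

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
BLOCKED_TERMS = ("internal use only", "api_key")  # hypothetical policy terms
FALLBACK = "Sorry, I can't share that."
AUDIT_LOG: List[str] = []  # records which action each response triggered


def apply_guardrails(text: str) -> Tuple[str, str]:
    """Decide what the user sees: block outright, redact, or pass through."""
    if any(term in text.lower() for term in BLOCKED_TERMS):
        return "blocked", FALLBACK
    redacted, n_hits = EMAIL_RE.subn("[REDACTED EMAIL]", text)
    if n_hits:
        return "redacted", redacted
    return "passed", text


def guarded_reply(llm: Callable[[str], str], prompt: str) -> str:
    """Run the model, then enforce guardrails before anything reaches the user."""
    action, safe_text = apply_guardrails(llm(prompt))
    AUDIT_LOG.append(action)  # a real system would ship this to monitoring/audit storage
    return safe_text


def fake_llm(prompt: str) -> str:
    return "Contact ops@example.com for access."


print(guarded_reply(fake_llm, "How do I get access?"))
# -> Contact [REDACTED EMAIL] for access.
```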

Pricing

Galileo offers a Free plan with generous limits: 5,000 traces per month, unlimited users, and unlimited custom evaluation runs. It also has two paid plans:

- Pro: $150 per month

- Enterprise: Custom pricing

Pros and Cons

Galileo’s greatest strength lies in its dedicated focus on proactive governance. Its real-time guardrails, audit logs, and SOC 2 compliance make it ideal for regulated industries. Its runtime protection capability is a key differentiator when compared to post-hoc observability tools.

However, the most robust features, particularly self-hosted deployment, are restricted to the custom Enterprise pricing plan. Also, its newer LLM observability stack isn’t as mature as Langfuse’s, and its closed evaluation models limit transparency. Smaller teams might find its setup and pricing heavy for simpler observability needs.

7. PromptLayer

PromptLayer started as a way to log and version OpenAI API calls and has since grown into a broader platform with prompt observability, version control, A/B testing, and even a visual workflow builder. Its tracing and analytics focus squarely on the prompt lifecycle, which suits teams doing deep prompt optimization.

Features

- Record every LLM prompt through API wrappers and store them in a central Prompt Registry with full version history.

- Analyze prompt performance in real time using dashboards that track latency, cost, error rate, and usage trends.

- Run A/B tests or regression evaluations to compare prompt or model variants and detect regressions early.

- Design multi-step agent workflows visually in the no-code Agent Builder for faster experimentation and iteration.

- Integrate with OpenAI, LiteLLM, and Hugging Face APIs to log, cache, and retrieve prompt executions programmatically.
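A prompt registry with full version history can be modeled as a mapping from prompt name to an append-only list of versions, plus movable labels such as "prod". The sketch below is an in-memory illustration with hypothetical names (`PromptRegistry`, `publish`, `get`), not PromptLayer's SDK.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Optional


@dataclass
class PromptVersion:
    version: int
    template: str
    created_at: datetime
    labels: List[str] = field(default_factory=list)


class PromptRegistry:
    """In-memory registry: each publish appends a new version; old templates are never overwritten."""

    def __init__(self) -> None:
        self._prompts: Dict[str, List[PromptVersion]] = {}

    def publish(self, name: str, template: str, label: Optional[str] = None) -> PromptVersion:
        versions = self._prompts.setdefault(name, [])
        pv = PromptVersion(len(versions) + 1, template, datetime.now(timezone.utc))
        if label:
            for old in versions:  # a label points at exactly one version at a time
                if label in old.labels:
                    old.labels.remove(label)
            pv.labels.append(label)
        versions.append(pv)
        return pv

    def get(self, name: str, version: Optional[int] = None, label: Optional[str] = None) -> PromptVersion:
        versions = self._prompts[name]
        if version is not None:
            return versions[version - 1]
        if label is not None:
            matches = [v for v in versions if label in v.labels]
            if not matches:
                raise KeyError(f"no version of {name!r} has label {label!r}")
            return matches[0]
        return versions[-1]  # latest version by default


registry = PromptRegistry()
registry.publish("support_answer", "Answer politely: {question}", label="prod")
registry.publish("support_answer", "Answer politely and cite sources: {question}")
prod = registry.get("support_answer", label="prod")
print(prod.version, prod.template.format(question="Where is my order?"))
# -> 1 Answer politely: Where is my order?
```

Separating "latest" from "labeled for prod" is what allows a new prompt version to be tested or A/B compared without changing what production serves.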

Pricing

PromptLayer offers a Free plan for individual use, limited to 5,000 requests and 7-day retention. Apart from that, it has two paid plans:

- Pro: $50 per month per user

- Enterprise: Custom pricing

Pros and Cons

PromptLayer is purpose-built for prompt engineering. It’s ideal for both engineers and non-technical collaborators. Features like A/B testing, an agent builder, and API integrations make it a strong choice for teams focused on optimizing prompt quality and iteration speed.

The core limitation is its narrow focus on the LLM call layer. It lacks Langfuse’s trace depth and is less suited to complex orchestration than full-stack platforms. As a closed platform, it also introduces vendor lock-in, since logging depends on its SDK or API proxy.

8. Confident AI

Confident AI is a dedicated cloud platform built on top of the open-source DeepEval framework. If you’re looking for a Langfuse alternative that emphasizes robust evaluation and QA of LLMs, Confident AI is a strong contender.

Features

- Define and run automated LLM tests with DeepEval, using 40+ prebuilt or custom metrics to evaluate factual accuracy, tone, and relevance.

- Compare prompt and model versions through A/B testing and traffic splitting to identify the best-performing configurations in real time.

- Enable one-line tracing in LangChain, LlamaIndex, or custom pipelines to capture the complete prompt, retrieval, and response context.

- Monitor live LLM responses and set alerts for latency spikes or failed quality checks to ensure consistent model performance.

- Collect user feedback and convert it into evaluation labels for continuous prompt, model, and metric refinement.
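Traffic splitting for A/B tests is usually made deterministic so that a given user always sees the same variant. A common approach, sketched below, hashes a stable user ID together with an experiment name into a number in [0, 1] and maps it onto weighted buckets. The variant names and the 50/50 split are illustrative assumptions, and this is not Confident AI's implementation.

```python
import hashlib
from collections import Counter
from typing import Dict


def assign_variant(user_id: str, experiment: str, weights: Dict[str, float]) -> str:
    """Map a user to a variant deterministically, in proportion to `weights`."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    point = int(digest[:8], 16) / 0xFFFFFFFF  # roughly uniform in [0, 1]
    total = sum(weights.values())
    cumulative = 0.0
    for variant, weight in weights.items():
        cumulative += weight / total
        if point <= cumulative:
            return variant
    return list(weights)[-1]  # guard against floating-point rounding on the last bucket


weights = {"prompt_v1": 0.5, "prompt_v2": 0.5}
counts = Counter(assign_variant(f"user-{i}", "tone-test", weights) for i in range(10_000))
print(counts)  # close to a 50/50 split
print(assign_variant("user-42", "tone-test", weights) == assign_variant("user-42", "tone-test", weights))  # True
```

Including the experiment name in the hash keeps assignments independent across experiments, so the same users do not always land in the same arm.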

Pricing

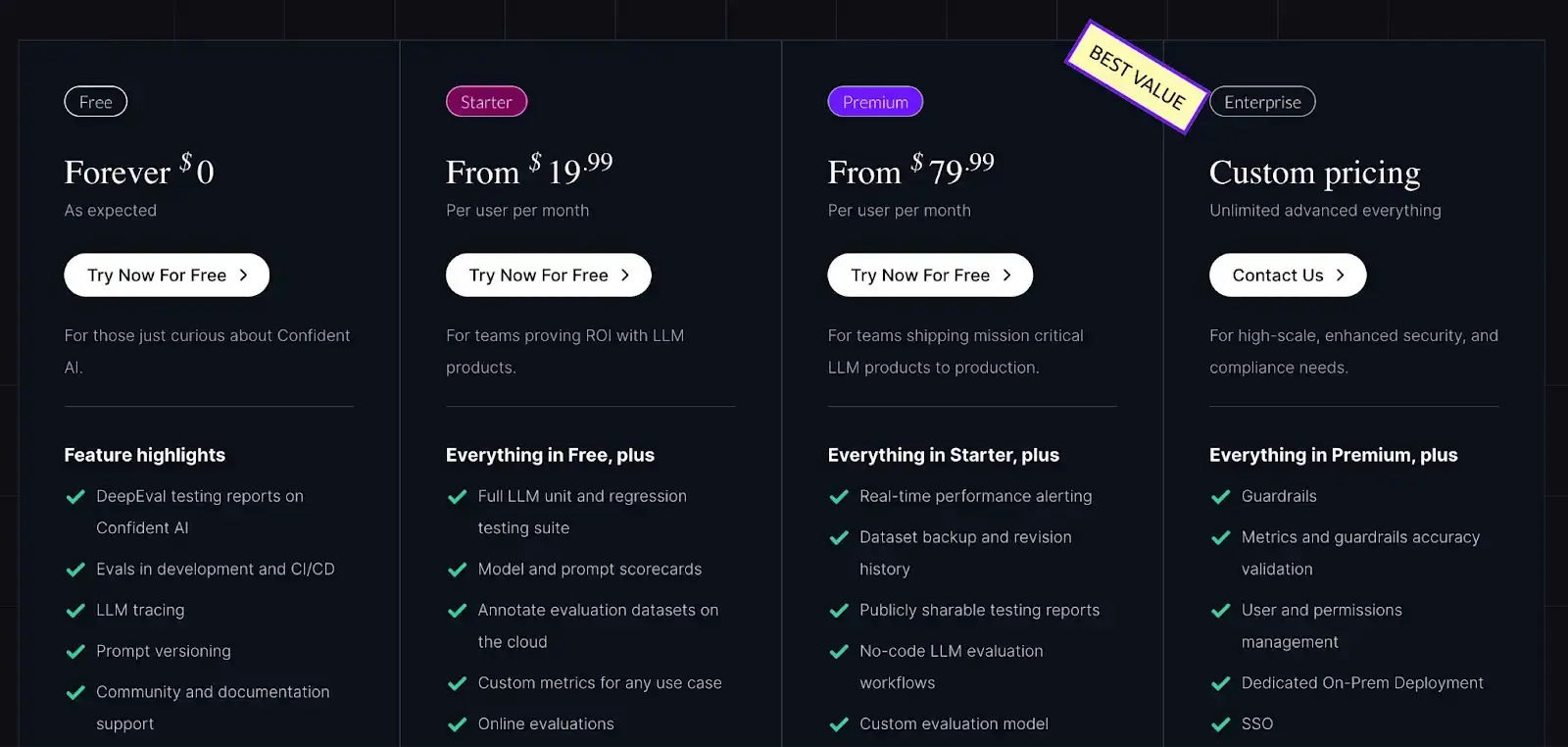

DeepEval, the framework underneath Confident AI, is free and open-source. Confident AI itself offers a free cloud tier for basic use, followed by three paid plans:

- Starter: $19.99 per user per month

- Premium: $79.99 per user per month

- Enterprise: Custom pricing

Pros and Cons

Confident AI’s open-source core (DeepEval) provides transparency and flexibility in metric definition. It brings structure and rigor to LLM development, letting teams test prompts systematically and run quality assurance on LLM outputs, especially for sophisticated RAG systems.

The platform’s primary limitation is its focused scope. It expects users to define their own evaluation logic and generally requires integration with an orchestration tool (like ZenML) for pipeline management and model deployment.

The Best Langfuse Alternatives for LLM Observability

Each of these Langfuse alternatives offers a distinct path to tracing and improving your LLM-driven application. Consider your team’s priorities. Here are some alternatives we recommend:

- Galileo: for mission-critical safety and compliance.

- Arize Phoenix: for fast RAG iteration and local debugging.

- Braintrust and Confident AI: for systematic QA and quality benchmarking.

- ZenML: for unified LLMOps and auditability. It delivers the essential architectural foundation required to link application traces, evaluations, and prompts directly to a versioned, reproducible pipeline.


Take your AI agent projects to the next level with ZenML. We have built first-class support for agentic frameworks (like CrewAI, LangGraph, and more) inside ZenML for users who like pushing the boundaries of what AI agents can do. With ZenML, you can seamlessly integrate whichever agent framework you choose into robust, production-grade workflows.