
Langflow has become a popular choice for visually prototyping AI agents and workflows. It gives you a canvas on which you drag, drop, and connect components from the agent ecosystem, such as models, prompts, and tools.

However, as teams push Langflow into production scenarios, many ML engineers and Python developers run into its limits: performance bottlenecks, operational constraints, and security and data risks.

This has spurred a search for Langflow alternatives that can better handle the demands of automated, agentic AI applications in the real world.

For this article, we tried and tested eight Langflow alternatives against a set of production-grade criteria to help you choose the right framework for building automated AI workflows.

Why Do You Need a Langflow Alternative?

While Langflow is excellent for rapid iteration, it struggles to meet high production demands. These are not isolated bugs but systemic issues that form a pattern. The result is a ‘prototyping trap’: fast initial development that leads to significant technical debt and security risk later on.

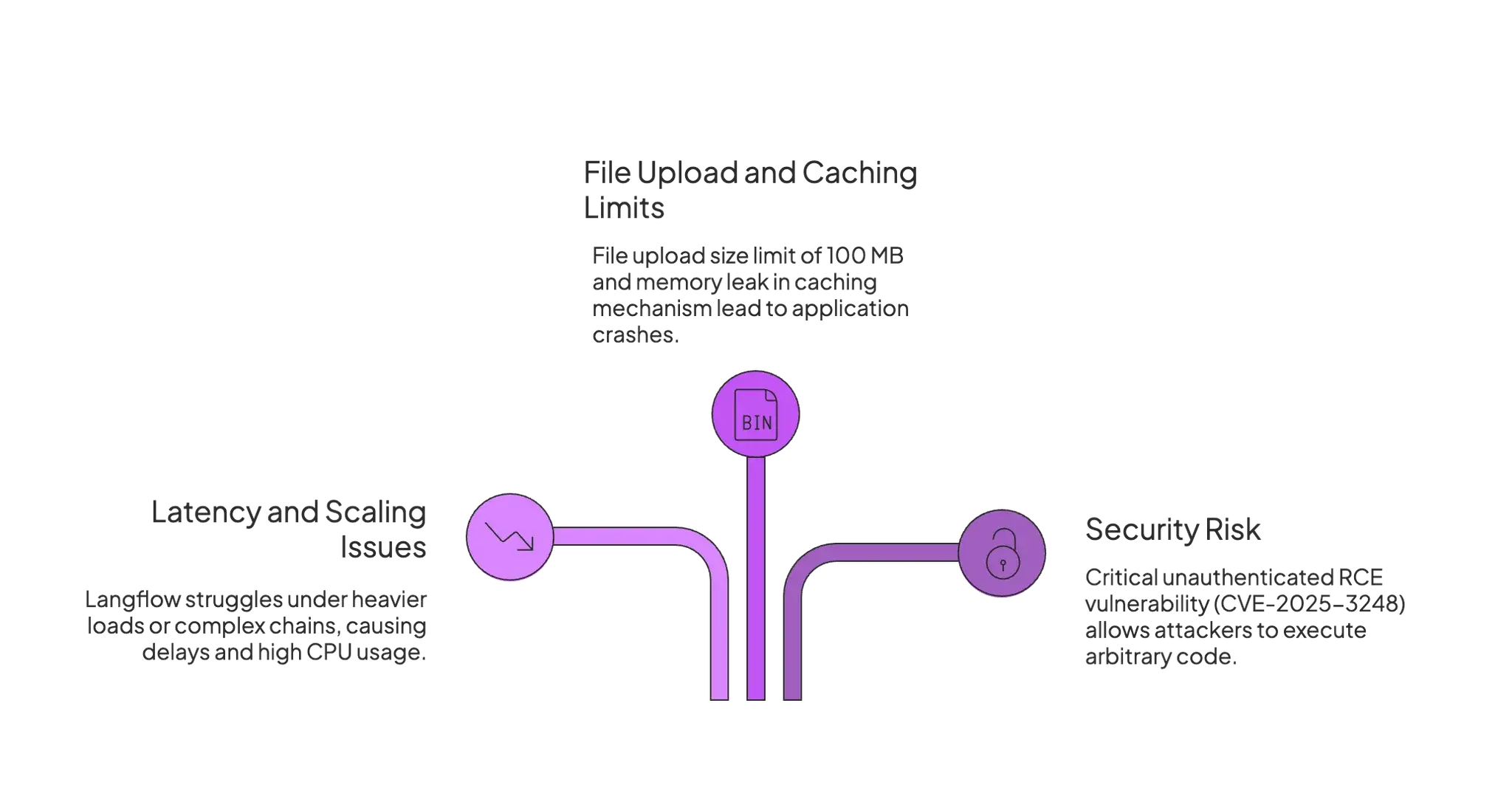

Reason 1. Latency and Scaling Issues

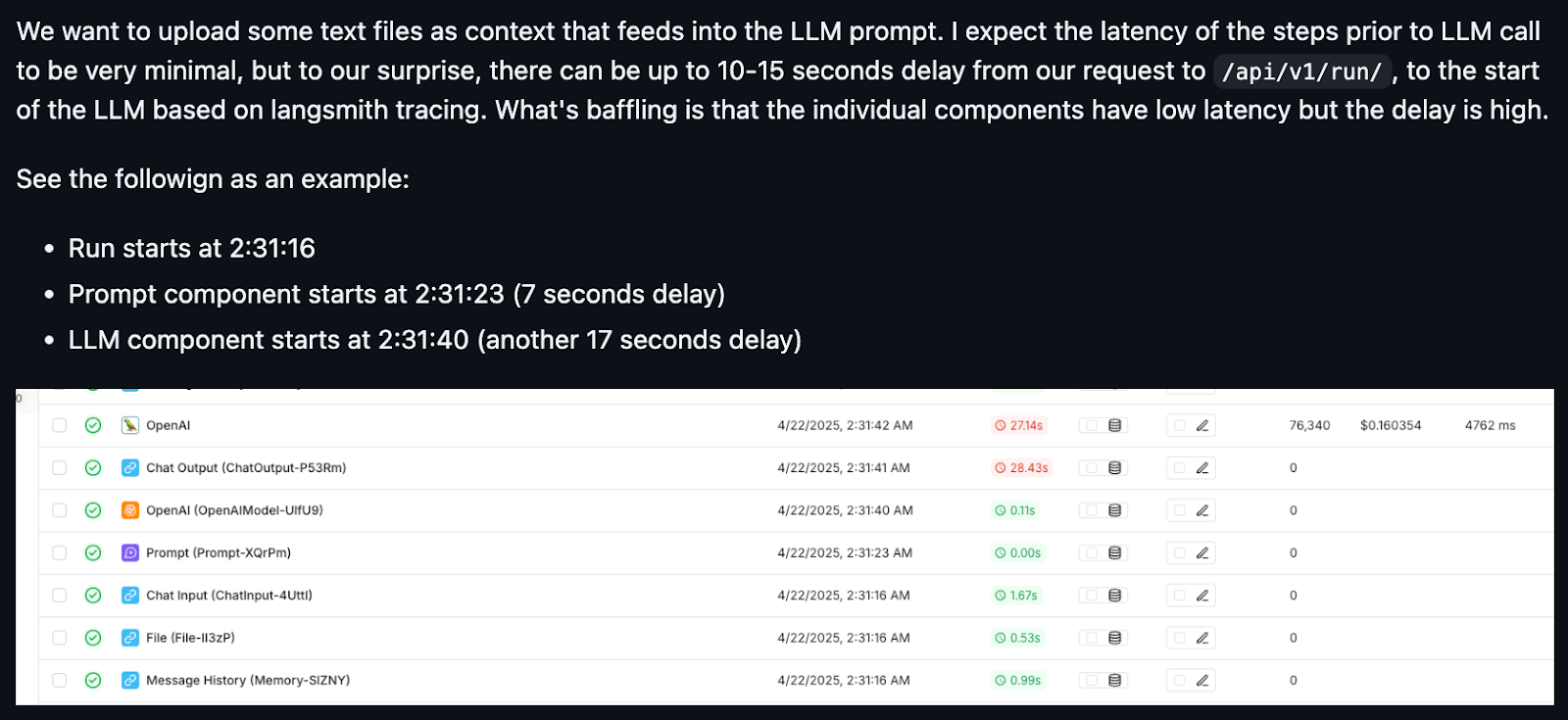

Langflow’s architecture struggles under heavier loads or complex chains. Community-reported issues on GitHub indicate delays of up to 10-15 seconds before an LLM call begins, and instances of CPU usage reaching 100%.

In short, if your application requires snappy responses or high concurrency, you may hit performance ceilings with Langflow.

Reason 2. File Upload and Caching Limits

The maximum file upload size in Langflow is 100 MB, and larger uploads fail with a 413 Request Entity Too Large error. While this limit is configurable using environment variables, raising it requires manual, infrastructure-level changes that add operational overhead.

Besides, Langflow’s caching mechanism has a known memory leak: repeatedly uploading files or rebuilding components keeps increasing memory usage. This leads to frequent application crashes when handling large files, making Langflow an unreliable choice for data-intensive Retrieval-Augmented Generation (RAG) pipelines.

Reason 3. Security Risk/Patch Pressure for Self-Hosting

Langflow being open source means many opt to self-host it. But in 2025, a critical unauthenticated RCE vulnerability (CVE-2025-3248) was discovered in Langflow.

The flaw existed in the /api/v1/validate/code endpoint, which unsafely processed user-supplied Python code using exec() without any authentication checks. This flaw (patched in v1.3.0) allowed attackers to execute arbitrary code by embedding a malicious payload inside Python decorators, which are evaluated as soon as a function definition is executed.
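To see why “only defining” submitted functions is not safe, here is a minimal, harmless Python sketch. It is not Langflow’s code; it only demonstrates the language behavior the exploit relied on: when `exec()` runs a function definition, decorator expressions and default argument values are evaluated immediately, even though the function body never runs. The `record` list is a hypothetical stand-in for an attacker’s payload, and the parenthesized decorator expression requires Python 3.9 or later.

```python
# Harmless illustration of why exec()-ing untrusted function definitions is unsafe.
# This is NOT Langflow's code; appending to `record` stands in for a malicious side effect.
record = []

untrusted_source = '''
@(record.append("decorator evaluated") or (lambda f: f))
def looks_harmless(arg=record.append("default evaluated")):
    return "this body never runs"
'''

namespace = {"record": record}
# Only *defines* the function; looks_harmless() is never called...
exec(compile(untrusted_source, "<submitted>", "exec"), namespace)

# ...yet both the decorator expression and the default value have already run.
print(sorted(record))
```

Because the side effects fire at definition time, parsing the code first or never calling the function does not make `exec()` on untrusted input safe.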

Langflow’s key strength, being open source and easy to self-host, had at that point turned into a significant security liability.

Evaluation Criteria for Langflow Alternatives

We evaluated all Langflow alternatives against a consistent set of criteria that address Langflow’s shortcomings and help identify a production-ready alternative.

1. Deterministic State and Graph Semantics

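Evaluate whether the tool provides predictable and reproducible workflows. Because LLM outputs can vary between calls, a production system should at least guarantee that, given the same inputs and state, it follows the same path through the workflow and records enough to reproduce a result.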

This requires:

- Clear graph semantics with well-defined nodes and edges.

- Support for features like retries, resumable checkpoints, and idempotent operations.

- Stable public APIs and versioned graph definitions.
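To make the retry, checkpoint, and idempotency requirements concrete, here is a framework-agnostic sketch in plain Python. It is not taken from any of the tools reviewed below; `run_with_checkpoints`, the JSON checkpoint format, and the toy `fetch`/`summarize` steps are all hypothetical.

```python
import json
import tempfile
from pathlib import Path

def run_with_checkpoints(steps, state, checkpoint_path, max_retries=3):
    """Run (name, fn) steps in order, retrying failures and checkpointing after each success.

    On a rerun, steps already listed in the checkpoint are skipped, so completed
    work (and its side effects) is never repeated.
    """
    path = Path(checkpoint_path)
    completed = []
    if path.exists():
        saved = json.loads(path.read_text())
        completed, state = saved["completed"], saved["state"]

    for name, fn in steps:
        if name in completed:
            continue  # resume: this step already succeeded in an earlier run
        for attempt in range(1, max_retries + 1):
            try:
                state = fn(dict(state))  # each step gets a copy and returns the new state
                break
            except Exception:
                if attempt == max_retries:
                    raise  # out of retries: surface the error
        completed.append(name)
        path.write_text(json.dumps({"completed": completed, "state": state}))
    return state

calls = {"fetch": 0}

def fetch(state):
    calls["fetch"] += 1
    if calls["fetch"] == 1:
        raise TimeoutError("simulated transient failure")
    state["docs"] = ["doc-a", "doc-b"]
    return state

def summarize(state):
    state["summary"] = f"{len(state['docs'])} docs"
    return state

steps = [("fetch", fetch), ("summarize", summarize)]
ckpt = Path(tempfile.mkdtemp()) / "run.json"
result = run_with_checkpoints(steps, {}, ckpt)
print(result)          # {'docs': ['doc-a', 'doc-b'], 'summary': '2 docs'}
run_with_checkpoints(steps, {}, ckpt)  # rerun: both steps are skipped
print(calls["fetch"])  # 2 (one failed attempt + one success, nothing on the rerun)
```

A production implementation would retry only transient errors, add backoff between attempts, and write checkpoints atomically; the point here is that a rerun skips completed steps instead of repeating their side effects.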

2. Orchestration and Concurrency Control

Assess the ability of Langflow alternatives to manage complex execution flows at scale. This includes:

- Support for parallelism to run tasks concurrently.

- Rate limits to manage API costs and prevent service overload.

- Cancellation and timeouts for long-running jobs.

- Backpressure handling when integrating with other systems.

Essentially, we favored frameworks that allow scheduling agents or tools in parallel and provide hooks for controlling asynchronous behaviors.
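These controls map onto standard async primitives. The sketch below uses only Python’s `asyncio`: a `Semaphore` caps how many calls are in flight (a simple form of backpressure), and `asyncio.wait_for` cancels any call that exceeds its timeout. `call_tool` is a hypothetical stand-in for an LLM or tool call. Note that a semaphore limits concurrency, not requests per second; a true rate limiter would need something like a token bucket.

```python
import asyncio

async def call_tool(name: str, delay: float) -> str:
    """Hypothetical stand-in for an LLM or tool call; `delay` simulates latency."""
    await asyncio.sleep(delay)
    return f"{name}: ok"

async def run_bounded(jobs, max_concurrency: int = 2, timeout_s: float = 0.5):
    """Run jobs in parallel, with at most `max_concurrency` in flight and a per-job timeout."""
    semaphore = asyncio.Semaphore(max_concurrency)  # extra jobs wait here: simple backpressure

    async def guarded(name: str, delay: float) -> str:
        async with semaphore:
            try:
                # wait_for cancels the underlying call once the timeout expires
                return await asyncio.wait_for(call_tool(name, delay), timeout=timeout_s)
            except asyncio.TimeoutError:
                return f"{name}: timed out"

    # gather returns results in the same order as `jobs`
    return await asyncio.gather(*(guarded(name, delay) for name, delay in jobs))

results = asyncio.run(run_bounded([("search", 0.1), ("db", 0.2), ("slow_api", 2.0)]))
print(results)  # ['search: ok', 'db: ok', 'slow_api: timed out']
```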

3. Persistence, Versioning, and Lineage

You need a full record of what ran, with which inputs, and why it produced a given result. This enables audits, rollbacks, and steady improvement. We assessed each tool’s capabilities for auditability and reproducibility. Essentially, we looked for:

- Persistent run history with searchable metadata.

- Versioning for prompts, tools, and workflow graphs.

- Artifact storage for inputs, outputs, and intermediate results.

- Lineage graphs that link code, data, and configurations.

- Easy export of run records for offline analysis.

This is necessary for debugging failures and rolling back to previous versions when needed.
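As a rough illustration of what a minimal run record might contain, the sketch below hashes the workflow version, prompt version, inputs, and config into a lineage key and exports the record as JSON. `RunRecord`, its fields, the version strings, and `example-model` are hypothetical; dedicated tools store far richer metadata.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone

def fingerprint(obj) -> str:
    """Stable content hash: identical inputs/config always map to the same ID."""
    blob = json.dumps(obj, sort_keys=True).encode("utf-8")
    return hashlib.sha256(blob).hexdigest()[:12]

@dataclass
class RunRecord:
    workflow_version: str
    prompt_version: str
    inputs: dict
    config: dict
    outputs: dict = field(default_factory=dict)
    started_at: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())

    @property
    def lineage_key(self) -> str:
        # Ties a result to the exact workflow, prompt, data, and config that produced it
        return fingerprint({
            "workflow": self.workflow_version,
            "prompt": self.prompt_version,
            "inputs": self.inputs,
            "config": self.config,
        })

    def export(self) -> str:
        """One JSON line per run, ready to append to a log for offline analysis."""
        return json.dumps({**asdict(self), "lineage_key": self.lineage_key})

a = RunRecord("graph-v3", "summarize-v7", {"doc_id": 42}, {"model": "example-model", "temperature": 0})
b = RunRecord("graph-v3", "summarize-v7", {"doc_id": 42}, {"temperature": 0, "model": "example-model"})
c = RunRecord("graph-v3", "summarize-v8", {"doc_id": 42}, {"model": "example-model", "temperature": 0})
print(a.lineage_key == b.lineage_key)  # True: key order in the config does not matter
print(a.lineage_key == c.lineage_key)  # False: a new prompt version is a new lineage
```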

What are the Best Alternatives to Langflow?

| Alternative | Best For | Orchestration Style | Deployment Model |
|---|---|---|---|
| LangGraph | Complex, stateful agent workflows with fine-grained control. | Code-first State Machine | Open Source Library / SaaS |
| FlowiseAI | Rapid visual prototyping with a focus on RAG and chatbots. | Visual Graph Builder | Open Source / SaaS |
| Dify | An all-in-one low-code platform for agents, RAG, and app deployment. | Visual Graph Builder | Open Source / SaaS |
| Microsoft AutoGen | Research and development of multi-agent conversational systems. | Conversational (Code) | Open Source Library |
| CrewAI | Building autonomous agent teams with role-based collaboration. | Role-based (Code) | Open Source Library / SaaS |
| LlamaIndex | RAG-first agentic applications with deep data integration. | Data-centric (Code) | Open Source Library |
| Semantic Kernel | Integrating agentic patterns into existing enterprise .NET/Java stacks. | Plugin-based (Code) | Open Source SDK |
| PydanticAI | Building type-safe, testable, and schema-enforced AI agents in Python. | Pythonic Control Flow | Open Source Library |

1. LangGraph

LangGraph, from the LangChain team, is a code-first agent orchestration framework. It’s best for strict, deterministic control over agent behavior and state, which makes it ideal for production scenarios where each step must be traceable.

Features

- Instead of free-form chains, LangGraph uses an explicit graph model. Each node represents an agent action or tool call. Edges define the flow (including conditions or loops). Checkpoints are written at each step, so you can pause, inspect, or retry from any node.

- Supports pausing the graph at any node to await human input or approval before proceeding.

- While not a RAG framework itself, LangGraph is well-suited for orchestrating advanced RAG strategies by defining nodes for retrieval, generation, and validation in a controlled, cyclical manner.

- As part of the LangChain ecosystem, LangGraph has access to a wide range of LangChain connectors and tools, including databases, web searches, and custom APIs.
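In LangGraph itself you would build this with `StateGraph`, `add_node`, `add_conditional_edges`, and a checkpointer. The plain-Python sketch below does not use LangGraph’s API; it only mirrors the idea of explicit nodes, a conditional edge that loops back to retrieval until validation passes, and a snapshot recorded after every step. The node functions are hypothetical stand-ins for a retriever and an LLM call.

```python
from typing import Callable, Dict, List, Tuple, TypedDict

class RAGState(TypedDict):
    question: str
    context: List[str]
    answer: str
    attempts: int

def retrieve(state: RAGState) -> RAGState:
    # Hypothetical retriever: returns more documents on each attempt
    hits = [f"doc about {state['question']}"] * (state["attempts"] + 1)
    return {**state, "context": hits, "attempts": state["attempts"] + 1}

def generate(state: RAGState) -> RAGState:
    # Hypothetical generator standing in for an LLM call
    return {**state, "answer": f"answer using {len(state['context'])} docs"}

def validate(state: RAGState) -> str:
    # Conditional edge: loop back to retrieval until there is enough context (max 3 attempts)
    if len(state["context"]) >= 2 or state["attempts"] >= 3:
        return "END"
    return "retrieve"

NODES: Dict[str, Callable[[RAGState], RAGState]] = {"retrieve": retrieve, "generate": generate}
EDGES: Dict[str, Callable[[RAGState], str]] = {
    "retrieve": lambda s: "generate",  # fixed edge
    "generate": validate,              # conditional edge
}

def run_graph(state: RAGState, start: str = "retrieve") -> Tuple[RAGState, List[Tuple[str, RAGState]]]:
    checkpoints = []  # snapshot after every node, so each intermediate state can be inspected
    node = start
    while node != "END":
        state = NODES[node](state)
        checkpoints.append((node, dict(state)))
        node = EDGES[node](state)
    return state, checkpoints

final, checkpoints = run_graph({"question": "refund policy", "context": [], "answer": "", "attempts": 0})
print([name for name, _ in checkpoints])  # ['retrieve', 'generate', 'retrieve', 'generate']
print(final["answer"])                    # answer using 2 docs
```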

Pros and Cons

LangGraph is praised for workflow transparency and control. You end up with a visual or programmatic blueprint of your entire agent pipeline. With its ability to branch and even loop under a coherent framework, it’s also excellent for workflows involving multiple agents in sequence or in parallel.

The major downside of LangGraph is the learning curve and some boilerplate required for defining graphs, nodes, and state handlers. For simple use cases, this might feel like over-engineering. Also, since it closely tracks LangChain’s evolving codebase, you might encounter version instability.


2. FlowiseAI

FlowiseAI is an open-source visual builder for LLM workflows, often touted as a faster, more enterprise-ready alternative to Langflow. It provides a visual canvas to design AI agents and pipelines, similar to Langflow’s interface, but with an emphasis on observability and quick iteration.

Features

- Offers a visual builder to construct chains of LLM calls, tools, conditions, etc. You can drag nodes for models, prompts, and data sources and connect them to outline the agent logic.

- Every run in Flowise is traceable. The platform provides execution logs and step-by-step traces of agent reasoning.

- Built-in HITL nodes let you pause a workflow and allow a human to review, edit, and approve tasks before the flow continues.

- Integrates evaluation metrics and even dataset-driven tests for your agents. For example, you can feed a set of Q&A pairs to your designed agent and get automatic metrics on accuracy.
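The dataset-driven testing idea is easy to picture in code. The sketch below is framework-agnostic and does not use Flowise’s evaluation API: it runs an agent over (question, expected answer) pairs and reports exact-match accuracy plus the failing cases. `toy_agent` is a hypothetical lookup table standing in for a deployed flow, and exact match is a deliberately simple metric.

```python
from typing import Callable, List, Tuple

def evaluate(agent: Callable[[str], str], dataset: List[Tuple[str, str]]) -> dict:
    """Run the agent on each (question, expected) pair and report exact-match accuracy."""
    failures = []
    for question, expected in dataset:
        got = agent(question)
        if got.strip().lower() != expected.strip().lower():
            failures.append({"question": question, "expected": expected, "got": got})
    accuracy = (len(dataset) - len(failures)) / len(dataset)
    return {"accuracy": accuracy, "failures": failures}

def toy_agent(question: str) -> str:
    # Hypothetical stand-in for a deployed flow
    answers = {"Capital of France?": "Paris", "2 + 2?": "4"}
    return answers.get(question, "I don't know")

report = evaluate(toy_agent, [
    ("Capital of France?", "paris"),
    ("2 + 2?", "4"),
    ("Largest planet?", "Jupiter"),
])
print(f"accuracy={report['accuracy']:.2f}")  # accuracy=0.67
print(report["failures"][0]["question"])     # Largest planet?
```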

Pros and Cons

Flowise is easy to use: its user-friendly UI, combined with advanced features, makes it well suited to rapid prototyping. You have full visibility into the agent’s decision-making process via trace logs and can integrate external monitoring. Plus, being open source, it has an active community and ships new nodes and improvements frequently.

However, being a visual tool brings its own complexity: as workflows grow, the canvas quickly becomes cluttered, and writing custom code nodes becomes necessary. Some deep integrations (and default nodes) are geared towards common APIs like OpenAI; using them with less mainstream models might require custom integration.

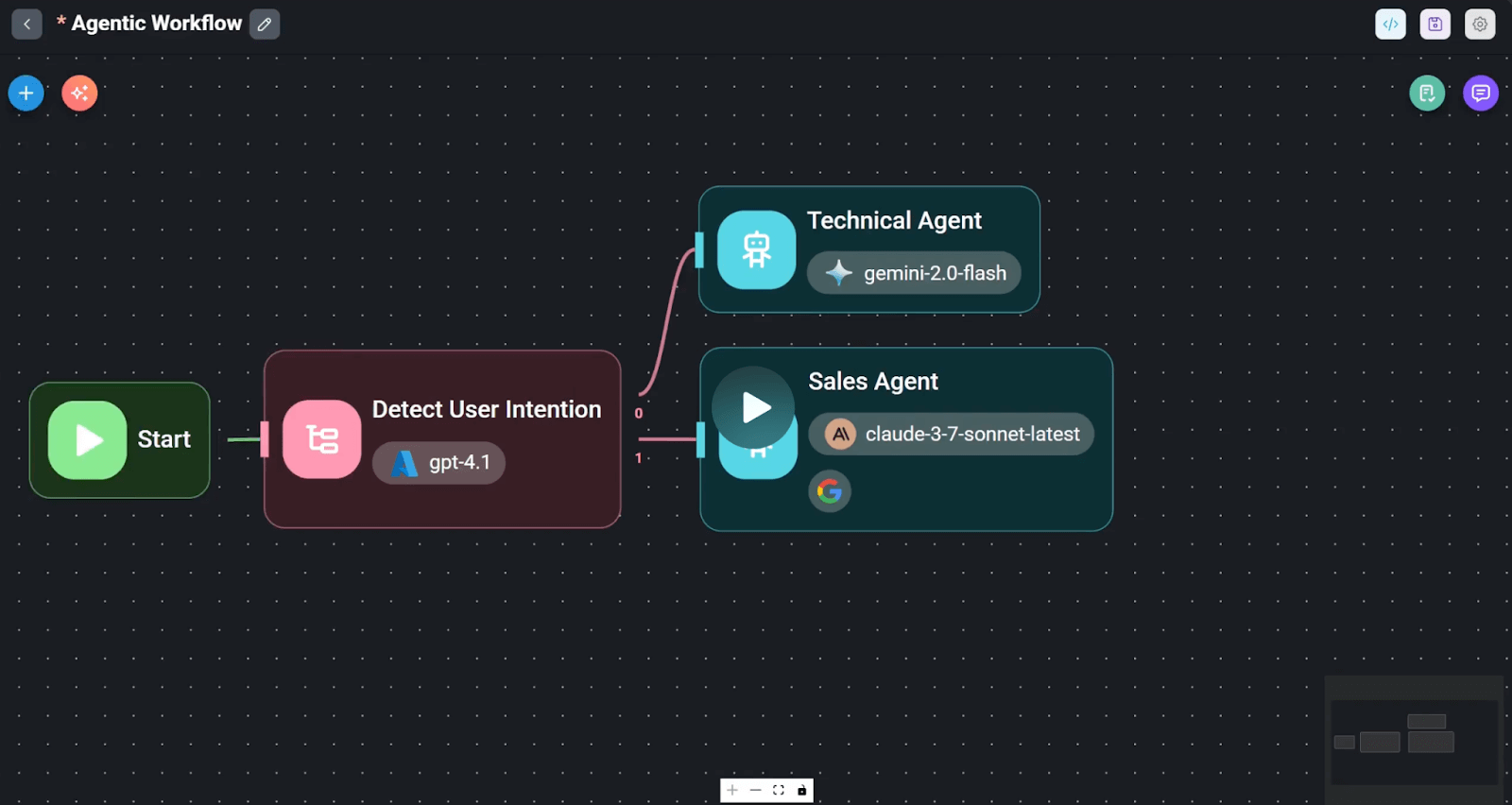

3. Dify

Dify is an all-in-one alternative to Langflow, covering agents, RAG, and MLOps aspects in one product. It’s designed for organizations looking to cover the entire application lifecycle from prompt design and vector database storage to deploying the agent as an app – without stitching together multiple tools.

Features

- Design AI-powered workflows using a drag-and-drop interface. Include branching logic, tool calls, and RAG (retrieval augmented generation) steps.

- A core strength is its robust, built-in RAG engine. You can upload documents or connect data sources, and Dify will handle embedding them and setting up a retrieval-augmented generation pipeline.

- Provides built-in observability tools, including dashboards to monitor application performance, token usage, latency, and the ability to version your workflows and prompts.

- Offers a Backend-as-a-Service (BaaS) model where every workflow is automatically exposed via a secure API. Mind you, Dify supports both a managed cloud service and self-hosted deployments.
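To give a feel for the BaaS model, the sketch below prepares (but does not send) a request to a Dify workflow app using only Python’s standard library. It assumes Dify’s workflow endpoint, `POST /v1/workflows/run`, with a Bearer app API key and a JSON body of `inputs`, `response_mode`, and `user`; check the API reference page of your own app before relying on it. The base URL, key, and the `query` input variable are placeholders.

```python
import json
import urllib.request

def build_dify_workflow_request(base_url: str, api_key: str, inputs: dict, user: str) -> urllib.request.Request:
    """Prepare (but do not send) a blocking call to a Dify workflow app's API."""
    body = {
        "inputs": inputs,              # values for the variables defined in the workflow
        "response_mode": "blocking",   # "streaming" would return server-sent events instead
        "user": user,                  # end-user identifier chosen by your application
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/workflows/run",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )

# Placeholder base URL, key, and input variable: replace with your app's values
req = build_dify_workflow_request("https://dify.example.com", "app-XXXX", {"query": "Summarize Q3 churn"}, "user-123")
print(req.get_method(), req.full_url)  # POST https://dify.example.com/v1/workflows/run
# To actually call it: json.load(urllib.request.urlopen(req))
```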

Pros and Cons

Dify drastically cuts down implementation time by piecing together production and deployment tools under one roof. Its open-source nature makes it attractive for those worried about vendor lock-in.

However, in exchange for these benefits, you trade some flexibility. Dify’s components are not as configurable as dedicated standalone tools. For example, its vector search might not scale as well as a dedicated vector database such as Pinecone.

4. Microsoft AutoGen

AutoGen is an open-source multi-agent framework from Microsoft Research. At its core, AutoGen lets you spin up multiple LLM-based agents that talk to each other and humans in a conversation loop. It’s best for scenarios where you want a group-of-agents approach.

Features

- Offers strong multi-agent or conversation agent orchestration; unlike a single chain, agents can ask each other questions, verify ideas, or split tasks.

- AutoGen doesn’t force full automation; you can configure a `UserProxyAgent` to collect human input before executing code or proceeding with a task.

- Built on Microsoft’s FLAML library, AutoGen uses natural language for orchestration rules. You can specify agent behaviors via prompts (system messages) rather than extensive coding.

- Built-in logging and integrations with partner tools like AgentOps help with detailed multi-agent tracking, metrics, and monitoring of costs and performance.

Pros and Cons

The biggest pro of AutoGen is flexibility in multi-agent conversations. Unlike Langflow, it doesn’t predefine graphs or sequences, which means agents can decide how to approach a problem together. AutoGen is also relatively easy to get started with if you’re familiar with Python: a basic two-agent loop can be set up in a few lines, using the provided agent classes.

However, this freedom comes at the cost of predictability. Because agents keep chatting until they decide to stop, they may hallucinate or produce redundant back-and-forth that adds latency and token cost.
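One way to rein in open-ended conversations is to combine a termination keyword with a hard turn cap. The plain-Python sketch below is not AutoGen code (AutoGen provides its own termination settings); it alternates two hypothetical agents until one replies with `TERMINATE`, a convention common in AutoGen examples, or the cap is reached.

```python
from typing import Callable, Dict, List, Tuple

Message = Tuple[str, str]                  # (speaker, text)
Agent = Callable[[List[Message]], str]     # reads the history, returns a reply

def converse(agents: Dict[str, Agent], first: str, task: str,
             max_turns: int = 6, stop_word: str = "TERMINATE"):
    """Alternate between two agents until one says `stop_word` or `max_turns` is hit."""
    history: List[Message] = [("user", task)]
    names = list(agents)
    speaker = first
    for _ in range(max_turns):
        reply = agents[speaker](history)
        history.append((speaker, reply))
        if stop_word in reply:
            return history, "terminated"
        speaker = names[1 - names.index(speaker)]  # hand the turn to the other agent
    return history, "max_turns reached"

def writer(history: List[Message]) -> str:
    drafts = sum(1 for who, _ in history if who == "writer")
    return f"draft v{drafts + 1}"

def critic(history: List[Message]) -> str:
    return "Looks good. TERMINATE" if history[-1][1] == "draft v2" else "Needs more detail."

history, reason = converse({"writer": writer, "critic": critic}, first="writer", task="Write a release note")
print(reason)        # terminated
print(len(history))  # 5
```

Without the turn cap, a critic that never approves would keep the loop running indefinitely; with it, the worst case is bounded and predictable.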


5. CrewAI

CrewAI is best for lean, Python-first multi-agent apps independent of LangChain. The framework is inspired by human teams (‘crew members’), where you define agents with specific roles and let them work in a turn-based sequence to accomplish a task.

Features

- Supports role-based agent design; you explicitly define each agent’s persona and duties (via a description and prompt). Each agent has a clear purpose and works within those boundaries.

- Agents can be equipped with memory to retain context throughout a task execution. It supports both short-term conversational memory and can be extended with tools for long-term knowledge retrieval.

- While primarily focused on autonomy, you can design workflows to pause and wait for human input. However, this is not a built-in primitive and requires custom implementation.

- CrewAI offers a wide range of integrations with leading observability platforms, including Langfuse, MLflow, Arize Phoenix, and OpenLIT, for comprehensive tracing and monitoring.

- Provides logs of each step an agent takes and the ability to replay or modify steps for debugging. If the outcome wasn’t as expected at, say, Agent 3’s turn, you can tweak that agent’s prompt or logic and rerun from there.
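CrewAI models this with its own `Agent`, `Task`, and `Crew` classes. The plain-Python sketch below does not use them; it only illustrates the sequential hand-off and the replay idea: every member’s output is logged, so after changing the third member you can rerun from that step while reusing the earlier outputs. `Member`, `run_crew`, and the lambda ‘agents’ are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

@dataclass
class Member:
    role: str
    goal: str
    act: Callable[[str], str]  # takes the previous output, returns this member's output

def run_crew(members: List[Member], task: str, start_at: int = 0,
             prior_outputs: Optional[List[str]] = None) -> List[str]:
    """Run members in order; optionally resume at index `start_at` using earlier outputs."""
    outputs = list(prior_outputs or [])[:start_at]
    current = outputs[-1] if outputs else task
    for member in members[start_at:]:
        current = member.act(current)
        outputs.append(current)  # log every hand-off so any step can be replayed
    return outputs

crew = [
    Member("Researcher", "collect facts", lambda t: f"facts({t})"),
    Member("Writer", "draft a post", lambda t: f"draft({t})"),
    Member("Editor", "polish the draft", lambda t: f"edit({t})"),
]
first = run_crew(crew, "topic")
print(first[-1])   # edit(draft(facts(topic)))

# Tweak only the third member, then replay from there, reusing the first two outputs
crew[2] = Member("Editor", "polish the draft", lambda t: f"tight_edit({t})")
second = run_crew(crew, "topic", start_at=2, prior_outputs=first)
print(second[-1])  # tight_edit(draft(facts(topic)))
```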

Pros and Cons

The structured nature of CrewAI is a clear advantage for reliability. Each agent’s contribution is isolated and can be tested and improved independently. CrewAI’s learning curve is fairly gentle for Python developers. You work with normal Python functions and decorators to set things up; no need to learn a new DSL or complex config.

On the other hand, CrewAI’s sequential hand-off can be limiting. Agents can’t spontaneously decide to change the workflow order; they follow the script. If the problem turns out to need a different approach midway, a CrewAI agent might get stuck because the framework is opinionated about the process.

6. LlamaIndex

LlamaIndex is best for building flexible RAG-first agent apps and systems. Recently, LlamaIndex introduced an AgentWorkflow system that extends it into agent orchestration. Its agent capabilities are built on top of this strong data foundation, making it ideal for knowledge-intensive tasks.

Features

- Supports multi-agent systems via its `AgentWorkflow` class. This allows agents to hand off control to one another to complete complex tasks that may require different tools or data sources.

- An agent built with LlamaIndex can leverage vector indices, keyword tables, knowledge graphs, etc., to ground its responses in factual data. The framework handles chunking, embedding, and fetching relevant context for queries.

- Provides high-level abstractions for agents (like `FunctionAgent` and `ReActAgent`) and tools, which can be composed into custom, event-driven workflows.

- LlamaIndex’s agents use a `Context` object that acts as shared memory accessible by all steps in the workflow. This facilitates both short-term (chat history) and long-term (vector store-based) memory.
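The hand-off and shared-context pattern can be sketched without the library. The code below does not use LlamaIndex’s `AgentWorkflow` or `Context` classes; it is a plain-Python illustration in which each hypothetical agent reads and writes a shared `ctx` dict and may name the next agent to take over, with a cap on the number of hand-offs.

```python
from typing import Callable, Dict, Optional, Tuple

# An agent reads/updates the shared context and returns (result, next_agent_or_None)
AgentFn = Callable[[dict], Tuple[str, Optional[str]]]

def run_workflow(agents: Dict[str, AgentFn], root: str, ctx: dict, max_handoffs: int = 5) -> str:
    current = root
    for _ in range(max_handoffs):
        result, next_agent = agents[current](ctx)
        ctx.setdefault("trace", []).append(current)  # shared memory doubles as an audit trail
        if next_agent is None:
            return result
        current = next_agent  # hand off control
    raise RuntimeError("hand-off limit exceeded")

def router(ctx: dict) -> Tuple[str, Optional[str]]:
    next_agent = "retriever" if "policy" in ctx["question"].lower() else "answerer"
    return "", next_agent

def retriever(ctx: dict) -> Tuple[str, Optional[str]]:
    ctx["docs"] = ["Refunds are allowed within 30 days."]  # hypothetical vector-store lookup
    return "", "answerer"

def answerer(ctx: dict) -> Tuple[str, Optional[str]]:
    docs = ctx.get("docs", [])
    return (docs[0] if docs else "No supporting documents found."), None

ctx = {"question": "What is the refund policy?"}
answer = run_workflow({"router": router, "retriever": retriever, "answerer": answerer}, "router", ctx)
print(answer)        # Refunds are allowed within 30 days.
print(ctx["trace"])  # ['router', 'retriever', 'answerer']
```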

Pros and Cons

LlamaIndex provides a more structured and scalable agent workflow. It’s well proven for Q&A over documents, and those strengths carry over into agent workflows that need reading comprehension, data lookup, and similar tasks. Another pro is LlamaIndex’s emphasis on modularity: you can plug in different vector stores and define custom tools fairly easily. It also tends to play well with LangChain if you need to mix components.

However, LlamaIndex was not built as a general agent framework, so its agent orchestration capabilities are somewhat ‘thin’ around areas like multi-agent communication or elaborate branching logic.


7. Semantic Kernel

Semantic Kernel is an open-source SDK from Microsoft designed to integrate LLMs into enterprise applications. It is built on a modular, plugin-based architecture that allows developers to connect LLMs to existing code and services. In 2025, SK introduced multi-agent orchestration features that let multiple ‘skills’ (functions or agents) work together.

Features

- Supports multiple predefined orchestration patterns, including Sequential, Concurrent, Group Chat, and Handoff. This allows developers to model various collaboration scenarios.

- A plug-and-play architecture makes it easy to wrap enterprise APIs or databases. You define skills in C# or Python (e.g., a skill to send an email or query a database). The LLM can plan calls to these functions via natural language.

- Easily integrate a vector database or other storage as the memory store for agents to recall information across sessions. It also supports saving and loading session state, so an agent’s context can be persisted.

- As an enterprise-focused SDK, it is designed to integrate with standard logging and monitoring tools, like Azure Monitor and OpenTelemetry, for robust observability.

Pros and Cons

The major pro is SK’s strong software engineering paradigms. It’s also multi-language (C#, Python, and Java), so you can build your core in .NET while prototyping ideas in Python. SK’s design also makes agents easier to test: since skills are just functions, you can unit-test them and even simulate AI decisions.
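The testability argument is easiest to see in code. In SK’s Python SDK, functions are exposed to the model with the `@kernel_function` decorator; the sketch below instead uses a hypothetical `register_skill` registry in plain Python to show the idea: skills stay ordinary functions you can unit-test, and a model’s plan can be simulated with a fixed list of calls.

```python
from typing import Callable, Dict, List, Tuple

SKILLS: Dict[str, Callable[..., str]] = {}

def register_skill(name: str):
    """Hypothetical registry: expose a plain function to the planner under `name`."""
    def register(fn: Callable[..., str]) -> Callable[..., str]:
        SKILLS[name] = fn
        return fn  # the function stays directly callable, so it can be unit-tested
    return register

@register_skill("orders.status")
def order_status(order_id: str) -> str:
    # Hypothetical enterprise lookup; a real skill would query a database or API
    return "shipped" if order_id.startswith("A") else "processing"

def execute_plan(plan: List[Tuple[str, dict]]) -> List[str]:
    """Run a model-proposed plan: a list of (skill_name, kwargs) pairs."""
    return [SKILLS[name](**kwargs) for name, kwargs in plan]

# 1) Unit-test the skill directly, with no LLM involved
assert order_status("A-100") == "shipped"
# 2) Simulate the AI's decision with a fixed plan instead of a live model
assert execute_plan([("orders.status", {"order_id": "B-7"})]) == ["processing"]
```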

On the flip side, SK can feel heavyweight for simple agent needs. The learning curve is a bit steep if you’re just looking to orchestrate a few API calls with GPT. Compared to Langflow’s simplicity or even LangChain’s Pythonic style, SK is more verbose and formal, which might slow down experimentation.

8. PydanticAI

PydanticAI is a Python agent framework built by the Pydantic team. It uses Pydantic models to bring type safety, validation, and structured outputs to LLM applications. Consider it the best Langflow alternative for typed tools, schema-safe agents, and testable graphs in pure Python.

Features

- Integrates with Pydantic Logfire for real-time debugging, performance monitoring, and tracing of agent runs.

- Uses Python's familiar control flow and agent composition to build your AI-driven projects, making it easy to apply standard Python best practices you'd use in any other (non-AI) project.

- Offers model-agnostic support: whether you use OpenAI APIs, local models, or other providers, you can swap the underlying LLM without changing the rest of your code.

- Use Pydantic Graph to define graphs using type hints. This is useful in complex applications where standard control flow can degrade into spaghetti code.
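PydanticAI enforces structure by validating model output against a Pydantic model you declare as the agent’s output type. As a dependency-free sketch of the underlying idea (not PydanticAI’s API), the code below parses a model’s JSON reply into a dataclass and rejects it if any field is missing or has the wrong type. `TicketTriage` and its fields are hypothetical.

```python
import json
from dataclasses import dataclass, fields

@dataclass
class TicketTriage:
    category: str
    priority: int
    needs_human: bool

def parse_structured(raw: str, schema=TicketTriage):
    """Parse a model's JSON reply; reject it unless every declared field has the declared type."""
    data = json.loads(raw)
    declared = {f.name: f.type for f in fields(schema)}
    missing = declared.keys() - data.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    for name, expected_type in declared.items():
        if type(data[name]) is not expected_type:  # exact check, so JSON true is not accepted as an int
            raise ValueError(f"{name}: expected {expected_type.__name__}, got {type(data[name]).__name__}")
    return schema(**{name: data[name] for name in declared})

ticket = parse_structured('{"category": "billing", "priority": 2, "needs_human": false}')
print(ticket)  # TicketTriage(category='billing', priority=2, needs_human=False)

try:
    parse_structured('{"category": "billing", "priority": "high", "needs_human": false}')
except ValueError as err:
    print(err)  # priority: expected int, got str
```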

Pros and Cons

The biggest pro is that PydanticAI feels natural if you already use FastAPI or Pydantic for APIs. Another advantage is its broad LLM support: you aren’t locked into one provider and can use different models while keeping your high-level logic the same.

However, you might find PydanticAI’s schema-first approach too rigid for creative or open-ended tasks. For example, strict output schemas can get in the way when building a storytelling agent or a free-form conversational chatbot.

How ZenML Helps Close the Outer Loop Around Your Agents

All agentic AI frameworks, including Langflow, LangGraph, CrewAI, and more, tackle the ‘inner loop’ of agent development. This includes designing how agents reason, what tools they use, and how they interact within a workflow.

However, they ignore the ‘outer loop’: the work required to manage an agent as a production asset over its entire lifecycle.

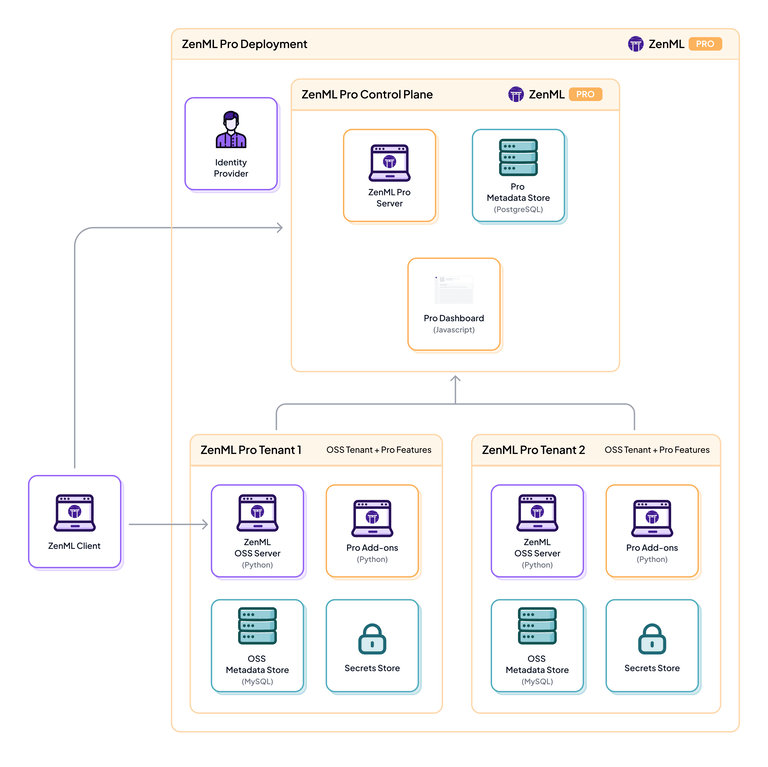

ZenML is an open-source MLOps + LLMOps framework that fills this gap. It provides the structure needed for versioning, deploying, monitoring, and continuously improving agentic systems.

Put simply, it addresses the operational realities of production:

- How is this agent versioned?

- How is it deployed to a staging or production environment?

- How are its performance and cost monitored over time?

ZenML is not an agent framework; it is built to complement Langflow or any of its alternatives by providing end-to-end lifecycle management for your agents. Here are a few ways our platform helps close the outer loop:

1. Pipeline Orchestration Beyond the Agent

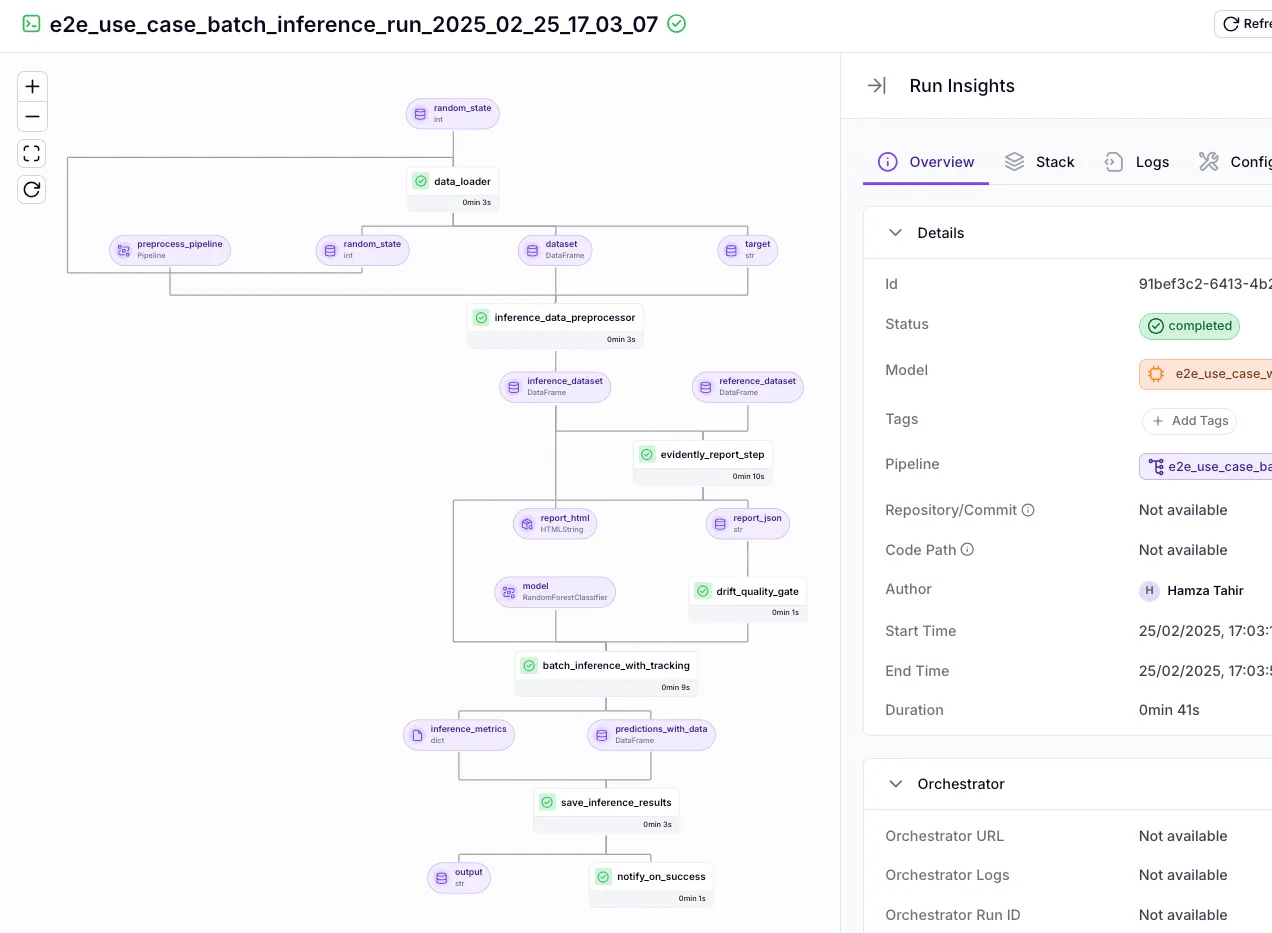

With ZenML, an agent built on any of the above frameworks can be embedded into a larger, end-to-end ZenML pipeline. For example, imagine a workflow where new data is collected daily, an agent analyzes it or responds to queries on it, and then the results are stored or trigger alerts.

ZenML can orchestrate this whole sequence: data prep → agent invocation → post-processing → notification.

This helps your agent become part of a reproducible, schedulable process (see the image above). You develop locally and then run the same pipeline in production on robust infrastructure (Kubernetes, Airflow, etc.) without changing your code.
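In ZenML you would write each stage as a function decorated with `@step` and compose them inside a `@pipeline` function. The plain-Python sketch below does not use ZenML; it only mirrors the data flow described above (data prep → agent invocation → post-processing → notification) and records each step’s output the way a tracking layer would. `chain_steps`, the steps, and the ‘agent’ are hypothetical.

```python
from typing import Callable, List, Tuple

def chain_steps(*steps: Callable):
    """Hypothetical mini-pipeline: each step's output feeds the next, and every output is recorded."""
    def run(initial):
        artifacts: List[Tuple[str, object]] = []
        value = initial
        for step in steps:
            value = step(value)
            artifacts.append((step.__name__, value))  # what a tracking layer would persist
        return value, artifacts
    return run

def prep_data(rows: List[str]) -> List[str]:
    return [r.strip() for r in rows if r.strip()]

def invoke_agent(rows: List[str]) -> List[str]:
    # Hypothetical agent: flags rows that mention an error
    return [r for r in rows if "error" in r.lower()]

def post_process(flagged: List[str]) -> dict:
    return {"count": len(flagged), "examples": flagged[:2]}

def notify(summary: dict) -> str:
    # A real pipeline might post to Slack or open a ticket here
    return f"ALERT: {summary['count']} issues" if summary["count"] else "all clear"

daily_run = chain_steps(prep_data, invoke_agent, post_process, notify)
message, artifacts = daily_run(["ok", " Error: disk full ", "", "timeout ERROR"])
print(message)  # ALERT: 2 issues
```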

2. Unified Visibility and Lineage

ZenML provides a centralized control plane that gives teams visibility over their entire AI system. It automatically tracks every pipeline run, logging all inputs, outputs, and artifacts.

For example, teams can trace a specific output back to the exact agent code, prompt version, and data that produced it. We built this capability deliberately because it’s crucial for debugging, auditing, and ensuring regulatory compliance.

What’s more, ZenML provides a dashboard for inspecting and comparing these runs. This means if an agent made a flawed decision, you don’t have to comb through printouts – you can pinpoint the exact pipeline run and see each step’s details (including the agent’s actions and outputs).

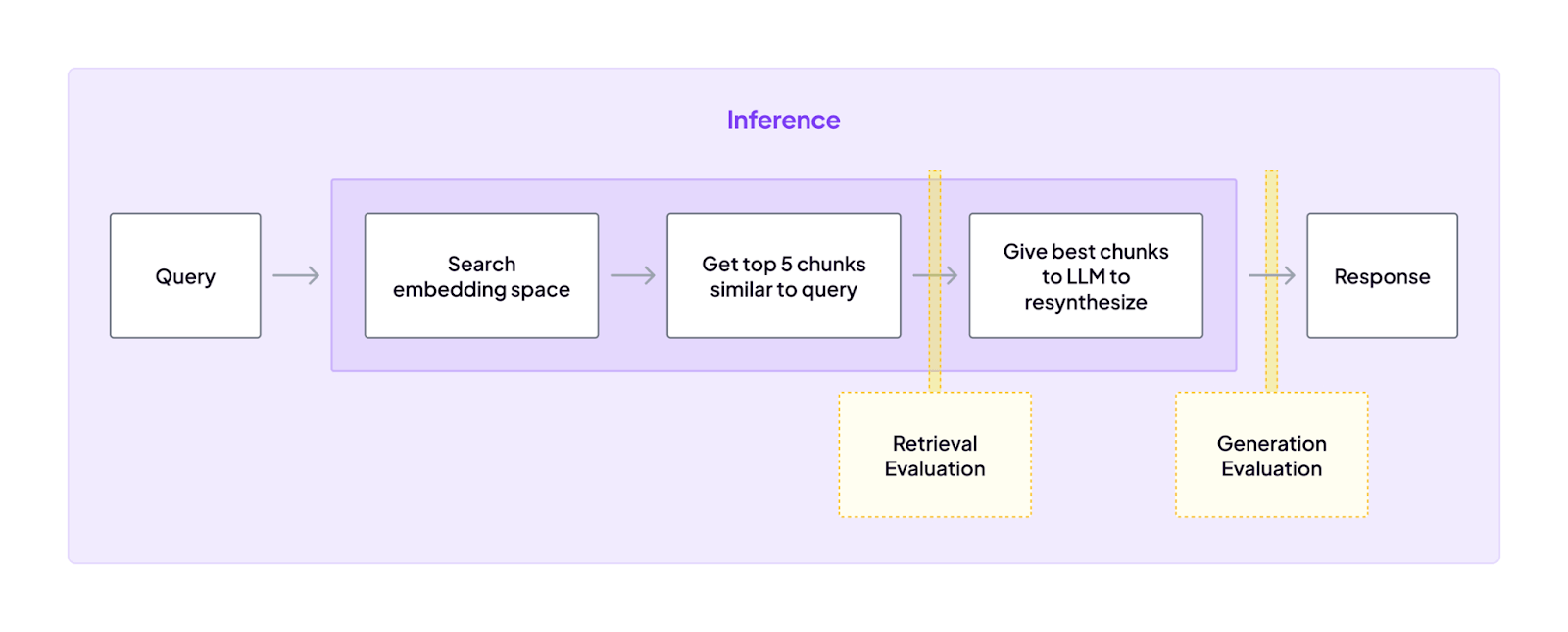

3. Continuous Evaluation and Feedback

Langflow and other AI agent builders help define an agent’s behavior, but they do not provide a systematic way to determine if that behavior is effective or if it degrades over time.

ZenML addresses this by enabling the creation of robust evaluation pipelines. You can configure pipelines such that after the agent produces an output, an evaluation step runs – for instance, scoring the agent’s answer against ground truth or using an LLM judge for quality.

If the evaluation finds the output unsatisfactory (e.g., accuracy below a threshold), ZenML can automatically flag it, trigger an alert, or even initiate a retraining pipeline for the agent’s model.
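The evaluation gate described above can be sketched as a small function. The code below is framework-agnostic: `overlap_judge` is a hypothetical word-overlap scorer standing in for ground-truth metrics or an LLM judge, and `on_fail` is wherever your alert or retraining trigger would go. In a ZenML pipeline, logic like this would live in a step that runs after the agent step.

```python
from typing import Callable, List

def evaluation_gate(answers: List[str], references: List[str],
                    judge: Callable[[str, str], float], threshold: float = 0.9,
                    on_fail: Callable[[float], None] = print) -> bool:
    """Score each answer against its reference; call `on_fail` if the mean drops below `threshold`."""
    scores = [judge(a, r) for a, r in zip(answers, references)]
    mean = sum(scores) / len(scores)
    if mean < threshold:
        on_fail(mean)  # e.g., raise an alert or kick off a re-prompting/retraining pipeline
        return False
    return True

def overlap_judge(answer: str, reference: str) -> float:
    """Hypothetical stand-in for an LLM judge: share of reference words found in the answer."""
    ref_words = set(reference.lower().split())
    return len(ref_words & set(answer.lower().split())) / len(ref_words)

alerts: List[float] = []
passed = evaluation_gate(
    answers=["refunds within 30 days", "ships in 2 days"],
    references=["refunds within 30 days", "ships in 5 days"],
    judge=overlap_judge,
    on_fail=alerts.append,
)
print(passed, alerts)  # False [0.875]
```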

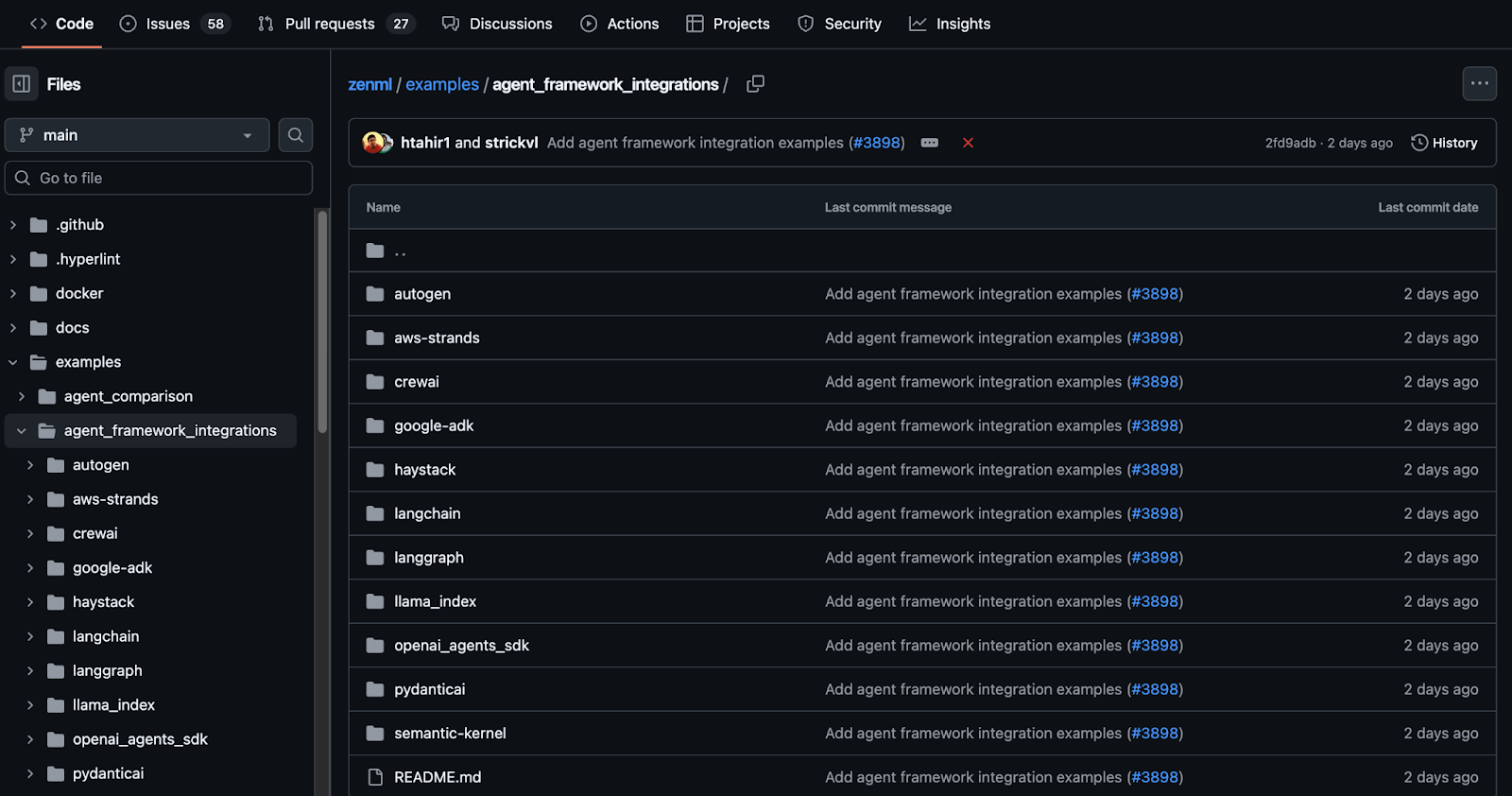

👀 Note: At ZenML, we have built several such integrations with tools like AutoGen, LangGraph, LlamaIndex, and more. We are actively shipping new integrations that you can find on this GitHub page: ZenML Agent Workflow Integrations.

The Best Langflow Alternative to Build Automated AI Workflows

After trying and testing Langflow alternatives thoroughly, one thing becomes clear: the ‘best’ choice isn’t one-size-fits-all. It depends on your priorities:

- For complex agent logic and stability: LangGraph paired with ZenML for orchestration gives you precision and repeatability.

- For fast prototyping with a UI: FlowiseAI plus ZenML is an ideal combo. You design visually and then rely on ZenML to deploy and monitor those designs in production.

- For enterprise and data-centric applications: LlamaIndex or Haystack, wrapped by ZenML pipelines for continual updates and evaluations, works brilliantly to handle knowledge retrieval.

If you’re interested in taking your AI agent projects to the next level, consider joining the ZenML waitlist. We’re building out first-class support for agentic frameworks (like LangGraph, CrewAI, and more) inside ZenML, and we’d love early feedback from users pushing the boundaries of what AI agents can do. With ZenML, you can seamlessly integrate whichever agent framework you choose into robust, production-grade workflows. Join our waitlist to get started.👇