As LLM apps grow in complexity, observability becomes mission-critical. LangSmith leads the category.

But its closed-source model and rising costs have left teams seeking alternatives that offer transparency, control, and predictable pricing.

If you're an ML engineer or Python developer feeling these constraints, this article is for you. We tested and analyzed the 9 best LangSmith alternatives for your LLM observability needs.

TL;DR

- Why look for alternatives: LangSmith’s per-trace pricing and limited retention can trigger huge bills as usage scales. Self-hosting is gated behind Enterprise deals, and many teams balk at sending sensitive data to a closed-source SaaS.

- Who should care: ML engineers and MLOps teams who build LLM agents or production pipelines with LangChain (or other frameworks) and want the same observability as LangSmith under open-source or flexible licensing.

- What to expect: An in-depth comparison of the 9 best LangSmith alternatives for LLM observability. Each tool is evaluated on cost and retention flexibility, deployment options, and tracing and monitoring features.

Why Look for a LangSmith Alternative?

LangSmith is a polished observability suite, but it has several drawbacks in practice:

1. Cost and Retention Increase with Scale

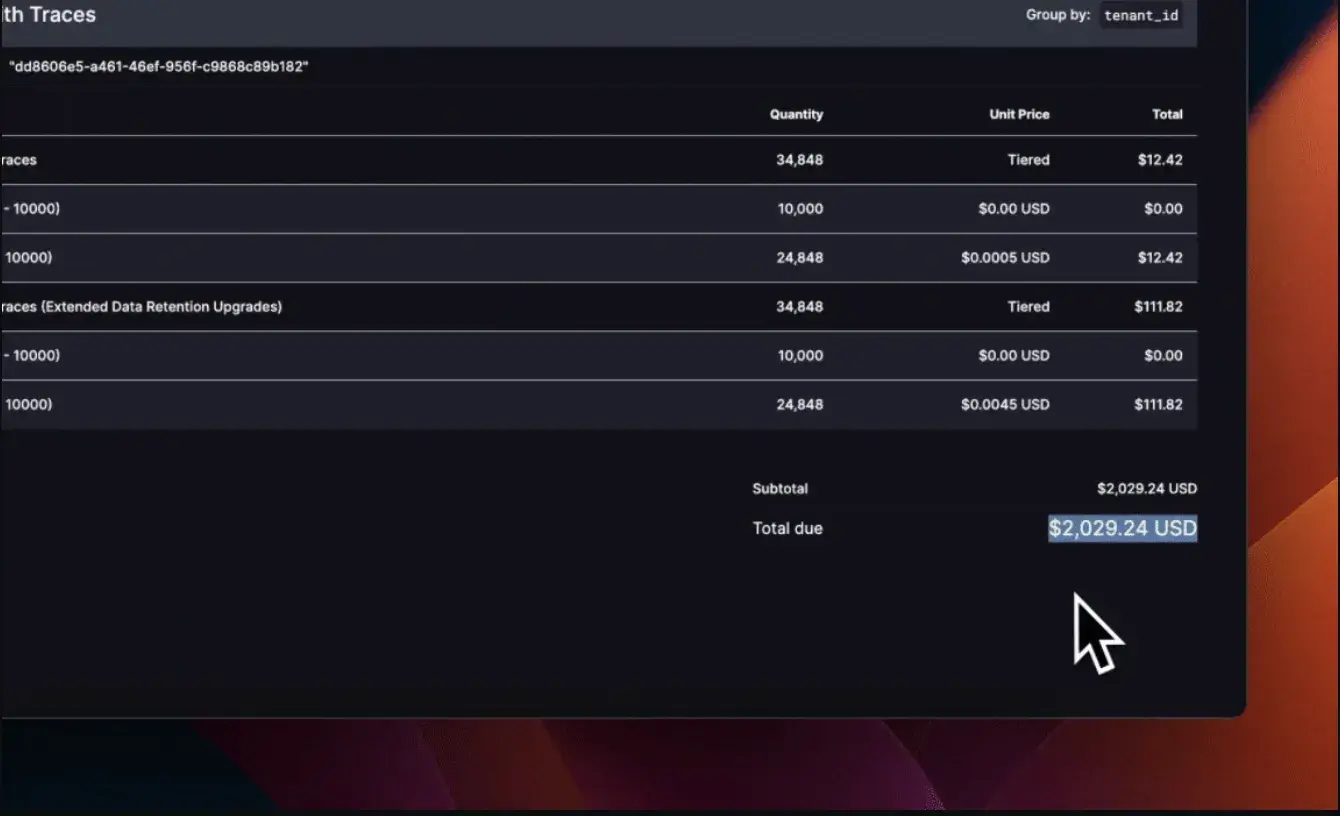

LangSmith's pricing becomes a major operational expense as your application scales. Its free tier is limited, and paid plans are based on data retention and the volume of traces.

By default, any trace that receives feedback or annotation automatically gets upgraded to the expensive extended tier. This means an agent workflow that logs or annotates frequently can easily incur very high charges.

Without strict retention controls, a busy workspace can run up bills of thousands of dollars per month, and high-volume teams can reach the five-figure range.
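A rough cost model makes the scaling problem concrete. The sketch below assumes two retention tiers billed per 1,000 traces and treats every trace that receives feedback as upgraded to the extended tier. The rates are hypothetical placeholders, not LangSmith's published prices; plug in the current numbers from its pricing page.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TierRates:
    base_per_1k: float      # price per 1,000 traces on short (base) retention
    extended_per_1k: float  # price per 1,000 traces on long (extended) retention


def monthly_trace_cost(traces: int, feedback_ratio: float, rates: TierRates) -> float:
    """Estimate monthly spend when every trace with feedback is billed at the extended rate."""
    if not 0.0 <= feedback_ratio <= 1.0:
        raise ValueError("feedback_ratio must be between 0 and 1")
    extended = round(traces * feedback_ratio)  # traces upgraded by feedback/annotations
    base = traces - extended
    return base / 1000 * rates.base_per_1k + extended / 1000 * rates.extended_per_1k


rates = TierRates(base_per_1k=0.50, extended_per_1k=5.00)  # hypothetical placeholder rates
for ratio in (0.0, 0.1, 0.5, 1.0):
    cost = monthly_trace_cost(2_000_000, ratio, rates)
    print(f"feedback on {ratio:.0%} of 2M traces -> ${cost:,.2f}/month")
```

With these placeholder rates, going from no feedback to feedback on every trace multiplies the same 2M-trace bill tenfold, from $1,000 to $10,000 per month.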

2. Self-Hosting is Gated and Closed-Source

Many companies prefer an open model where they can inspect the code, deploy on-prem, and avoid vendor lock-in.

LangSmith is not open-source, and its self-hosted option is only available as an Enterprise add-on. The self-hosted version also requires Kubernetes for production (Docker is supported for development only), which is a high barrier to entry for teams that simply want to run the platform in their own Virtual Private Cloud (VPC).

3. Data Governance Concerns with SaaS Logging

Even for teams that don't require self-hosting, data governance and security are a top priority. For businesses in finance, healthcare, or legal tech in particular, regulations such as GDPR and HIPAA often impose strict requirements on data deletion and on where data is stored.

LangSmith logs every step in your LLM application, and traces on its extended retention tier are kept for up to 400 days. Sending detailed user or customer data to a third-party cloud is a red flag for many enterprises: if your prompts contain PII or proprietary content, would you want them stored off-site?

In short, teams with sensitive data or strict governance needs may prefer a self-hosted or open-source observability tool over a fully hosted SaaS with fixed retention policies.

Evaluation Criteria

To keep the comparison fair, we evaluated each alternative against three key criteria:

- Total Cost and Retention Economics: Does the tool offer predictable pricing and flexible retention for logs and traces? We looked at the full cost of ownership. We prioritized tools that offer a clear and affordable path from a free open-source version to a scalable, self-hosted, or managed-cloud solution without punitive data costs.

- Deployment and Data Governance Fit: Can the tool be self-hosted or run on-prem without an enterprise contract? This was a primary driver. We assessed how each tool handles data privacy and security (SSO, encryption, compliance) and how much control it gives you over your data.

- Observability Depth and Integration Effort: How rich is the tracing and monitoring? We evaluated the depth of its observability features, its compatibility with the OpenTelemetry standard, and its ability to work with various LLM frameworks, not just LangChain.

With a clear picture of each tool, we shortlisted the 9 best LangSmith alternatives below.

What Are the Top Alternatives to LangSmith?

Before we jump in, here’s a quick table listing out the top choices, key features, and pricing details:

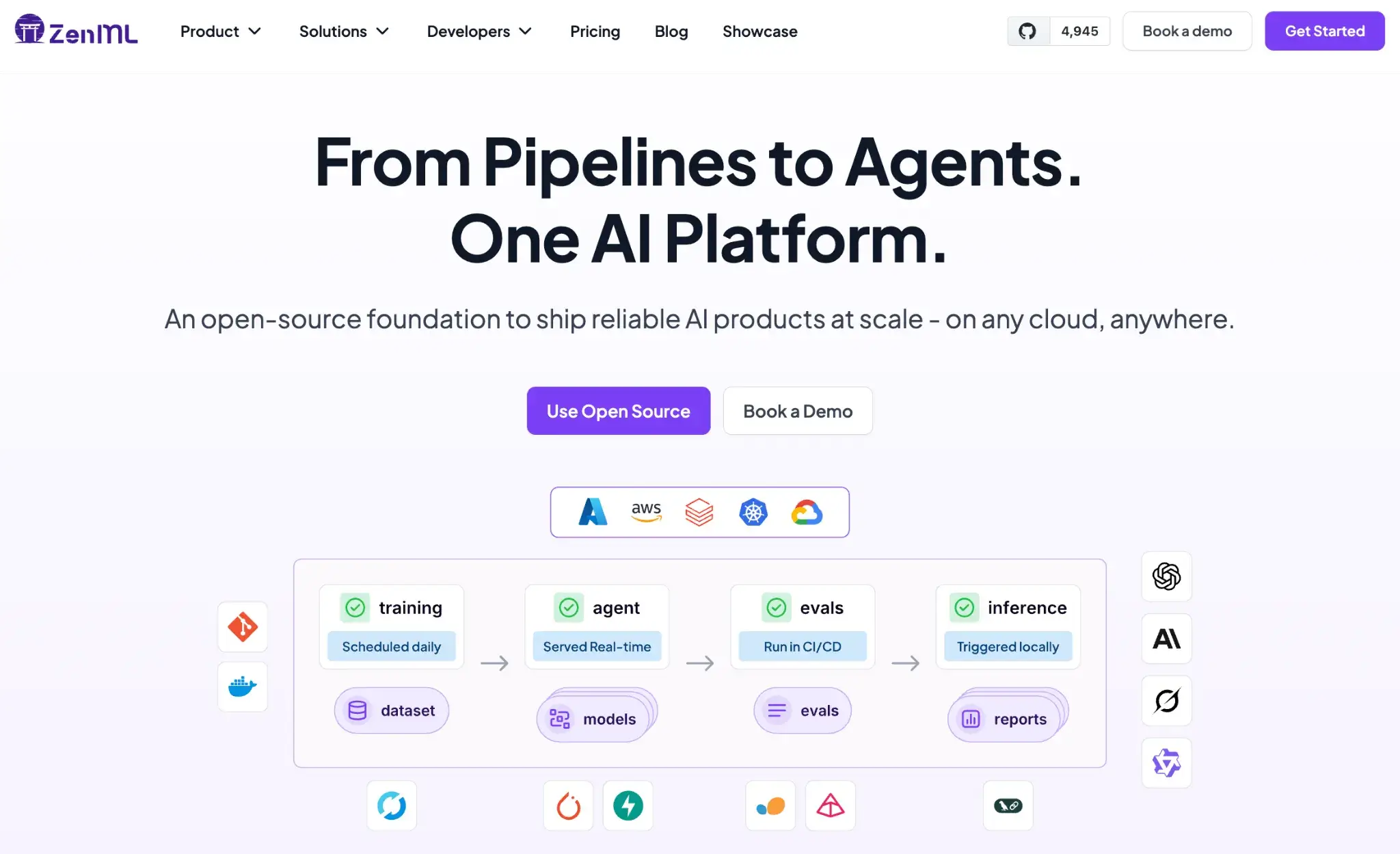

1. ZenML

Best for: Teams that need end-to-end LLM observability tied to reproducibility, lineage, and governance.

ZenML is an open-source MLOps + LLMOps framework that adds production-grade observability to your LLM stack while keeping reproducibility, lineage, and governance at the core.

Where LangSmith focuses on application-level traces, ZenML covers the full lifecycle: data → prompts → models → agents → deployments. That makes it a strong fit for ML teams that need LLM observability tied to versioned datasets, pipelines, and CI/CD.

Features

- Dashboard views: Analyze pipelines with DAG and Timeline views, browse run history, inspect step logs, and see runtime metrics (duration, timestamps, cache hits) along with artifact lineage and previews; integrations such as Evidently, Great Expectations, and whylogs render their results as rich reports.

- Artifact visualizations: Auto and custom visuals (HTML, Image, CSV, Markdown, JSON) in the UI and notebooks; curated visualizations can surface key charts across Models, Deployments, Pipelines, Runs, and Projects.

- Metadata and metrics: Log and explore metrics/params as run or model metadata; programmatic access via the client.

- Prompt, dataset, and eval set versioning: Treat prompts and eval sets like code. Pin a run to a prompt version and rerun the same pipeline weeks later to verify a regression or reproduce a result.

- Pro features: Model Control Plane for model/version views and comparisons; experiment comparison tables and parallel coordinates for multi-run analysis.

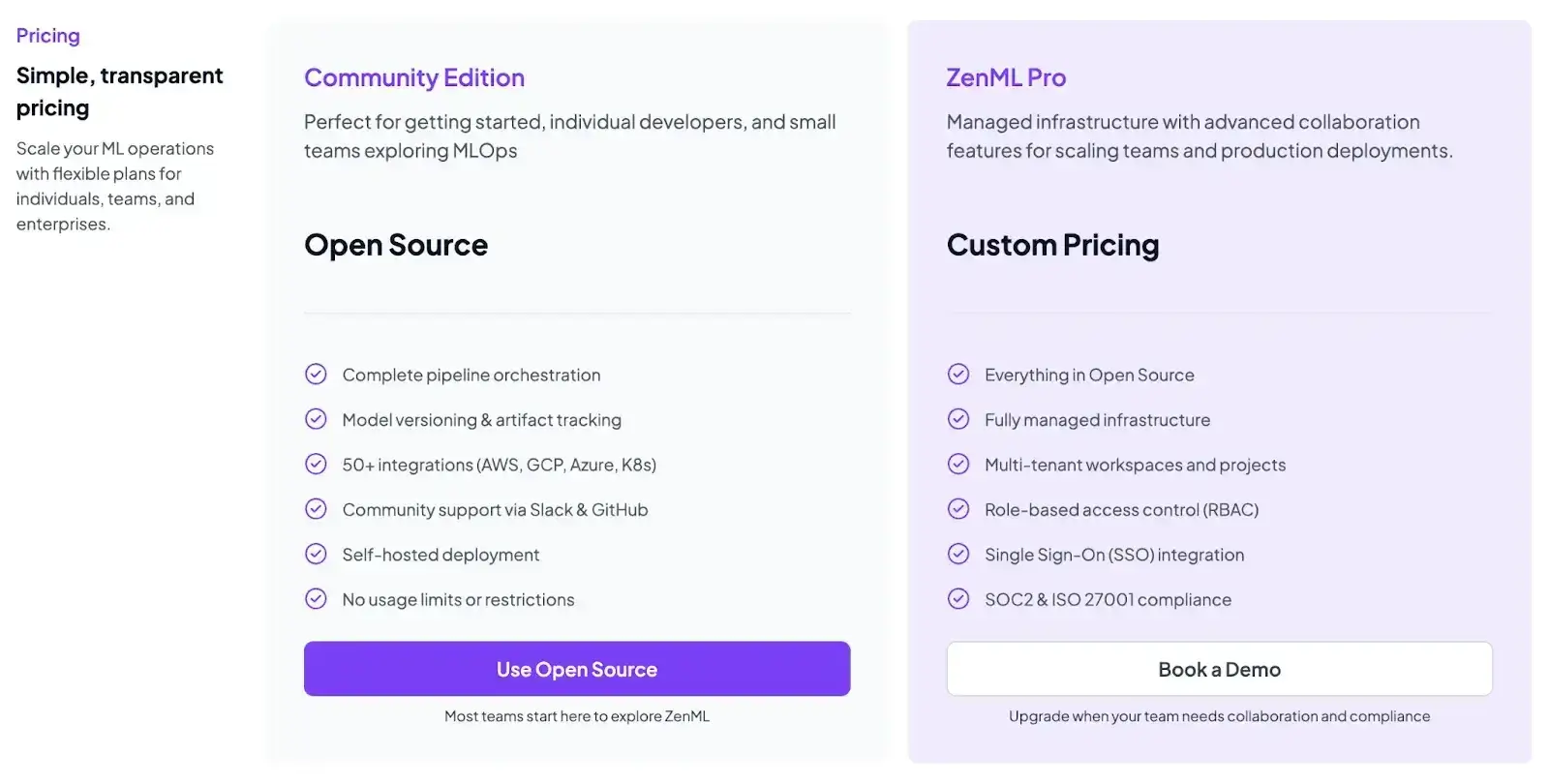
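Pinning a run to a prompt version boils down to recording an immutable identifier for the exact template text that run used. The snippet below is a hypothetical, framework-free illustration of that idea using a content hash; it is not ZenML's API, where versioned artifacts play this role. The template strings, the model name, and the metadata schema are made up.

```python
import hashlib
import json
from typing import Dict


def prompt_version(template: str) -> str:
    """Short, deterministic version ID derived from the exact template text."""
    return hashlib.sha256(template.encode("utf-8")).hexdigest()[:12]


def record_run(run_id: str, template: str, model: str) -> Dict[str, str]:
    """Metadata a pipeline run would store next to its outputs (hypothetical schema)."""
    return {"run_id": run_id, "model": model, "prompt_version": prompt_version(template)}


v1 = "Summarize the ticket in one sentence:\n{ticket}"
v2 = "Summarize the ticket in two sentences:\n{ticket}"

runs = [
    record_run("run-001", v1, "example-model"),
    record_run("run-002", v1, "example-model"),  # rerun weeks later, same prompt
    record_run("run-003", v2, "example-model"),  # edited prompt gets a new version
]
print(json.dumps(runs, indent=2))
```

Because the ID changes whenever the text changes, comparing two runs' prompt_version fields tells you immediately whether a regression could stem from a prompt edit.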

Pricing

ZenML is free and open-source under the Apache 2.0 license. The core framework and dashboard are fully available without cost.

For teams needing enterprise-grade collaboration, managed hosting, and premium support, ZenML offers custom business plans. These are typically usage- or seat-based and are tailored depending on your deployment model (cloud or on-prem).

Pros and Cons

ZenML offers a broader scope than LangSmith, combining LLM observability with pipeline reproducibility, lineage, and release management. It’s built for teams that want full control over their workflows and data, making it especially suitable for compliance-driven environments. Since ZenML can be self-hosted, teams can manage retention policies, apply PII controls, and keep all artifacts within their own storage systems.

On the downside, ZenML’s power comes with structure. It follows a more defined pipeline model, which requires designing pipelines and assets upfront. This setup delivers long-term benefits in control and repeatability but involves a steeper initial setup compared to simpler log-only tools.

2. Langfuse

Langfuse is an open-source LLM engineering platform and a very direct competitor to LangSmith. It’s designed from the ground up to provide detailed tracing, prompt management, and evaluation metrics for LLM applications.

Features

- Capture and visualize complex LLM operations with detailed trace trees for every session, including latency and nested calls.

- Collaborate with teammates by sharing trace URLs, adding comments on spans, and replaying full multi-turn conversations for debugging.

- Manage prompts independently from code using version-controlled templates, allowing fast iteration without redeployment.

- Track real-time token usage, latency, and model costs through built-in dashboards and receive alerts on budget overruns.
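To make the idea of a trace tree concrete, here is a minimal, dependency-free sketch of what a tracing SDK records: spans with parent links, attributes, and latency. It is not the Langfuse SDK; the span() helper, span names, and attributes are hypothetical.

```python
import time
import uuid
from contextlib import contextmanager
from contextvars import ContextVar
from typing import Any, Dict, Iterator, List, Optional

SPANS: List[Dict[str, Any]] = []  # finished spans, in the order they complete
_current: ContextVar = ContextVar("current_span", default=None)  # id of the open span


@contextmanager
def span(name: str, **attrs: Any) -> Iterator[Dict[str, Any]]:
    """Open a span whose parent is whichever span is currently active."""
    record: Dict[str, Any] = {
        "id": uuid.uuid4().hex[:8],
        "parent": _current.get(),
        "name": name,
        "attrs": dict(attrs),
    }
    token = _current.set(record["id"])
    start = time.perf_counter()
    try:
        yield record
    finally:
        record["latency_ms"] = (time.perf_counter() - start) * 1000
        _current.reset(token)
        SPANS.append(record)


def print_tree(parent: Optional[str] = None, depth: int = 0) -> None:
    """Print spans as an indented tree, children under their parent."""
    for s in SPANS:
        if s["parent"] == parent:
            print(f"{'  ' * depth}{s['name']} {s['attrs']} {s['latency_ms']:.1f} ms")
            print_tree(s["id"], depth + 1)


with span("chat-request", user="u-123"):
    with span("retrieve", top_k=3):
        time.sleep(0.01)  # stand-in for a vector-store lookup
    with span("llm-call", model="example-model") as llm:
        time.sleep(0.02)  # stand-in for the model call
        llm["attrs"]["output_tokens"] = 42  # e.g. usage reported by the provider

print_tree()
```

Running it prints the root request with its two child spans indented underneath, each with its measured latency. Real SDKs add cross-service trace IDs, batching, and export, but the parent-child structure is the same.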

Pricing

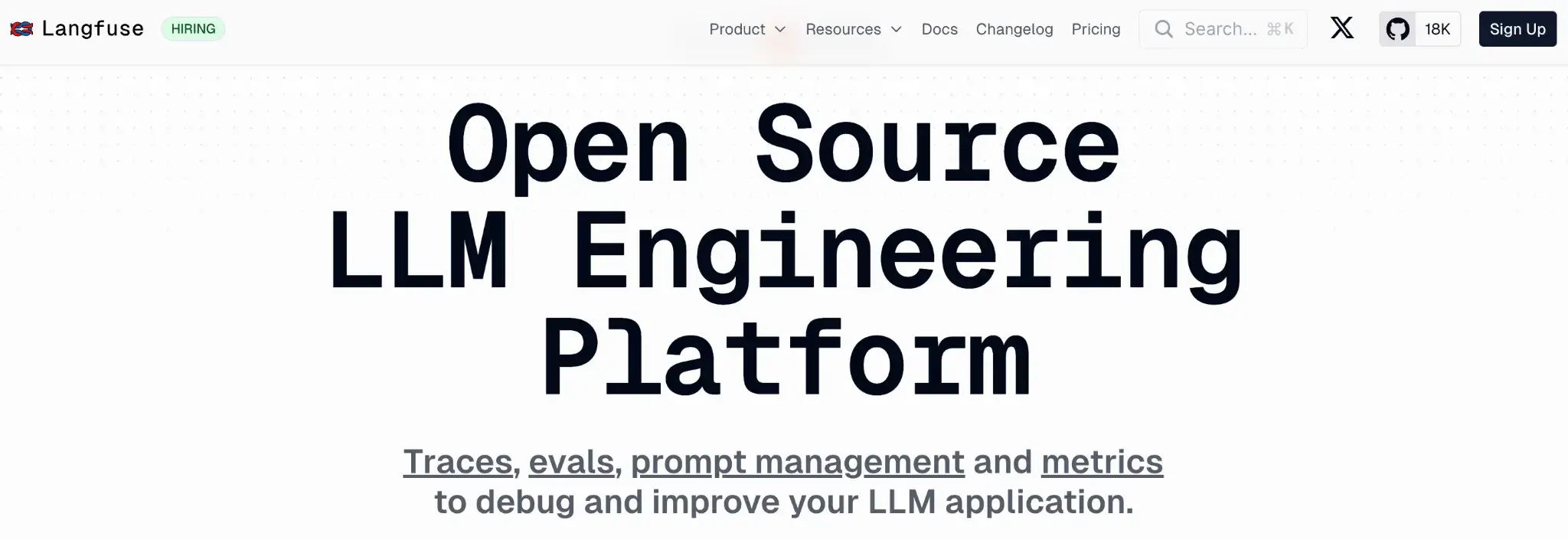

Langfuse is free and open-source to self-host. The company also offers a managed Langfuse Cloud with a generous free ‘Hobby’ tier and three paid plans:

- Core: $29 per month

- Pro: $199 per month

- Enterprise: $2499 per month

Pros and Cons

Langfuse’s strengths are its open-source roots and usability features. Because it’s OSS, you can inspect the code or self-host it. It also strikes a good balance of observability and tracking features.

The main con is that, as a product, it has a similar scope to LangSmith, focusing primarily on the LLM-application layer rather than the entire MLOps pipeline. Its UI, while robust, isn’t as polished as some commercial alternatives to LangSmith.

3. Arize Phoenix

Arize Phoenix is an open-source library from Arize, an ML-observability company. It emphasizes a “local-first” approach: you run Phoenix from your notebook or on your own server. Phoenix is built on OpenTelemetry, so it can ingest traces from any LLM call instrumented with an OTel-compatible library, including Arize's own OpenInference instrumentations.

Features

- Run local or VPC-based traces to keep sensitive LLM data entirely in-house while debugging workflows.

- Instrument LangChain, LangGraph, and custom stacks through OpenTelemetry for standardized trace collection.

- Create and run ‘evals’ that score model outputs for hallucinations, drift, and RAG relevance using built-in or custom metrics.

- Visualize retrieval behavior with embedding maps (UMAP), coverage charts, and threshold-tuning panels for RAG optimization.

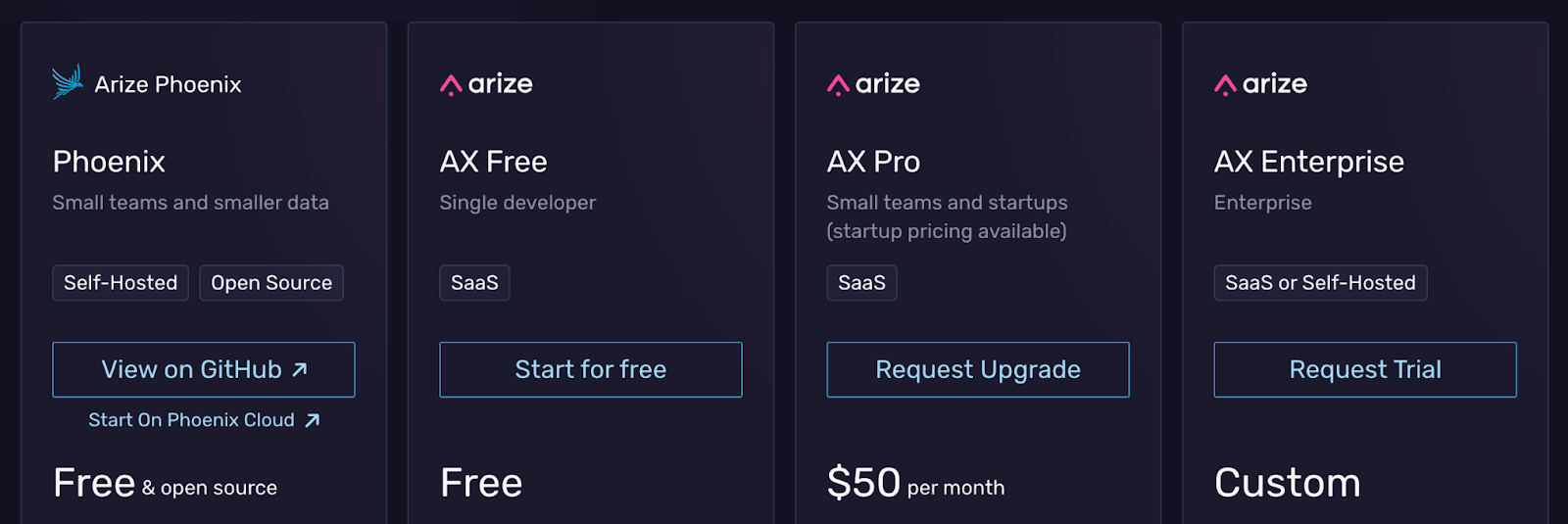

Pricing

Arize Phoenix is open-source and free to use. It’s designed to work as a standalone tool, but it also pairs with Arize's full commercial platform, Arize AX, which has the following pricing plans:

- Arize AX Free: Free

- AX Pro: $50 per month

- AX Enterprise: Custom pricing

Pros and Cons

Phoenix’s local-first nature is a huge pro for data privacy and rapid debugging. Its notebook-integrated UI excels at RAG debugging: you get detailed token-level views and can interactively try out prompts. The focus on RAG and embedding visualization is a key differentiator from LangSmith.

On the flip side, Phoenix is not a persistent production backend out of the box: running it in production means operating its server and storage yourself, and it has a learning curve. Its UI is robust but can feel complex for quick lookups. You would typically use it during development and then push your logs to a production system.

4. Helicone

Helicone is an open-source LLM observability platform that operates as a simple, high-performance proxy. Instead of installing an SDK, you change your client's base URL (for example, base_url in the current OpenAI Python client, or openai.api_base in versions before 1.0) to point to Helicone, and it logs every request and response.
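The proxy approach works because the request body stays in the provider's format; only the host, plus an auth header for the proxy, changes. The sketch below builds, but does not send, the same chat request for OpenAI directly and via Helicone using only the standard library. The gateway URL https://oai.helicone.ai/v1 and the Helicone-Auth header follow Helicone's documentation as we understand it; treat them as assumptions and check the current docs. Keys and the model name are placeholders.

```python
import json
import urllib.request
from typing import Optional

OPENAI_BASE = "https://api.openai.com/v1"
HELICONE_BASE = "https://oai.helicone.ai/v1"  # assumption: Helicone's OpenAI-compatible gateway


def chat_request(base_url: str, openai_key: str, helicone_key: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {"model": "example-model", "messages": [{"role": "user", "content": "Hello"}]}
    headers = {"Authorization": f"Bearer {openai_key}", "Content-Type": "application/json"}
    if helicone_key:
        # Extra header so the proxy can attribute and log the call (assumed header name).
        headers["Helicone-Auth"] = f"Bearer {helicone_key}"
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


direct = chat_request(OPENAI_BASE, "sk-placeholder")
proxied = chat_request(HELICONE_BASE, "sk-placeholder", helicone_key="hc-placeholder")

print(direct.full_url)   # https://api.openai.com/v1/chat/completions
print(proxied.full_url)  # https://oai.helicone.ai/v1/chat/completions
print(direct.data == proxied.data)  # True: the payload itself is unchanged
```

With the OpenAI Python client (v1+), the equivalent change is passing base_url and default_headers when constructing OpenAI(...).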

Features

- Use a single-line code change to integrate logging instantly across OpenAI, Anthropic, LangChain, and any OpenAI-compatible API.

- Replay full user sessions or multi-turn chat flows to debug each LLM call and inspect prompts, responses, and intermediate steps.

- Use real-time dashboards to monitor and break down your LLM costs via user, prompt, or custom properties, or forward data to analytics tools like PostHog for custom dashboards.

- Edit and version prompts directly in an interactive playground to test variations without changing your production code.

- Cache identical requests and enforce rate limits on a per-user or global basis through its built-in API gateway to secure LLM usage.

Pricing

Helicone offers a free plan with up to 10,000 API requests per month. This is generous enough for light usage. Above the free tier, Helicone has paid plans:

- Pro: $20 per seat per month

- Team: $200 per month

- Enterprise: Custom pricing

Pros and Cons

Helicone's biggest pros are its simplicity and open-source flexibility. Its one-line integration means almost zero engineering effort. You can get it running in minutes. It's lightweight and framework-agnostic.

The con is that it is less "deep" than LangSmith or Langfuse for observability. It operates at the request/response level, so it's less suited for visualizing complex, multi-step agent traces and lacks built-in evaluation features.

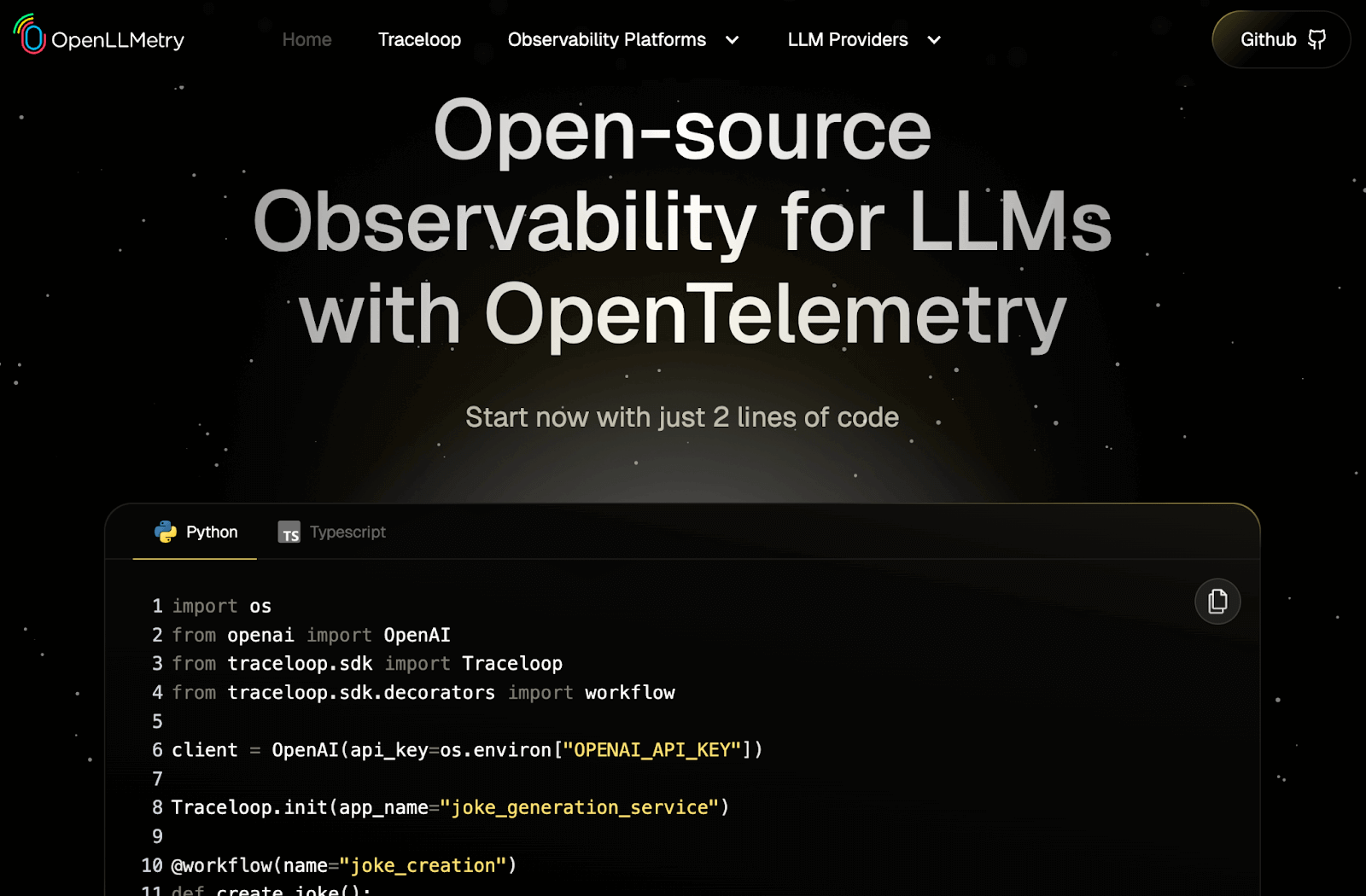

5. Traceloop OpenLLMetry

OpenLLMetry (from Traceloop) is an open-source instrumentation library, not a full-stack platform. Its one and only job is to make it easy to capture traces and metrics from your LLM application and send them to any backend you want, using the OpenTelemetry (OTel) standard.

Features

- Automatically instruments popular libraries (like OpenAI, LangChain, LlamaIndex) to capture prompts, model calls, and tool events with zero manual setup.

- Record every execution detail, including inputs, outputs, intermediate steps, and metadata like token counts or embeddings for full transparency.

- With Traceloop's hosted platform, explore multi-view dashboards such as Trace, Dataset, and Dialogue views to visualize your application flow and debug with precision.

- With the hosted platform, run LLM-based evaluations directly on stored traces to assess correctness, detect hallucinations, and measure response quality over time.

- Send your traces to any OTel-compatible backend, including Jaeger, Grafana, Datadog, or even other tools on this list like Langfuse.

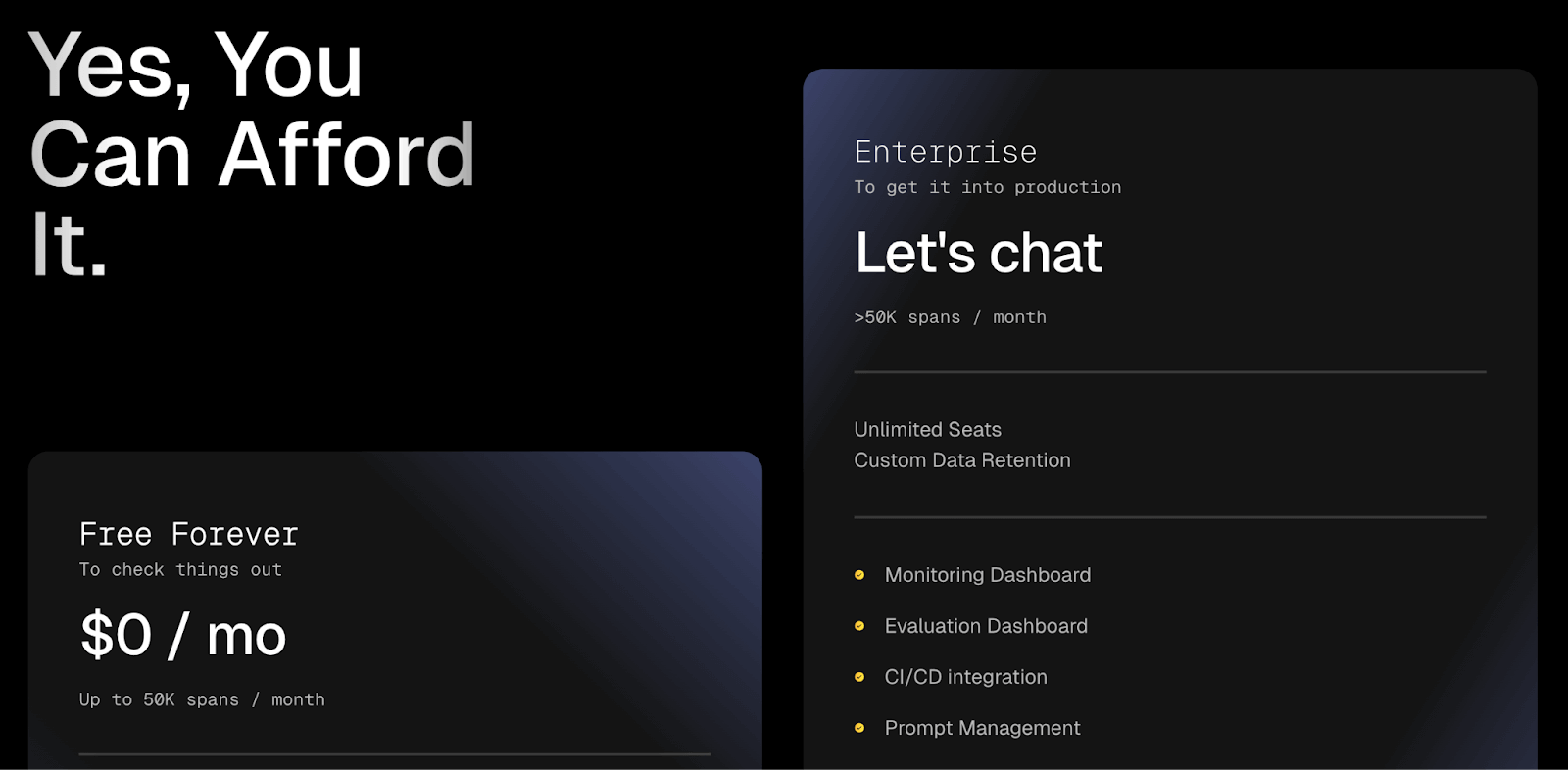
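Vendor neutrality mostly comes down to configuration: OpenTelemetry SDKs read standard environment variables for where to export and which headers to send, so switching backends need not touch application code. The helper below builds those variables. OTEL_EXPORTER_OTLP_ENDPOINT and OTEL_EXPORTER_OTLP_HEADERS come from the OpenTelemetry specification, but whether OpenLLMetry reads them or its own Traceloop-specific settings in your setup is an assumption to verify in its docs. The endpoints and token are placeholders.

```python
from typing import Dict, Optional
from urllib.parse import quote


def otlp_env(endpoint: str, headers: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Return standard OTLP exporter env vars for a given backend."""
    env = {"OTEL_EXPORTER_OTLP_ENDPOINT": endpoint}
    if headers:
        # Spec format: comma-separated key=value pairs with percent-encoded values.
        env["OTEL_EXPORTER_OTLP_HEADERS"] = ",".join(
            f"{key}={quote(value)}" for key, value in headers.items()
        )
    return env


# Same instrumented app, two destinations: only the configuration changes.
local_collector = otlp_env("http://localhost:4318")  # 4318 is the default OTLP/HTTP port
hosted_backend = otlp_env(
    "https://otel.example.com",  # placeholder vendor endpoint
    {"Authorization": "Bearer PLACEHOLDER"},
)

for env in (local_collector, hosted_backend):
    print(env)
```

In practice you would set these in the process environment or a deployment manifest before the instrumented app starts.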

Pricing

OpenLLMetry itself is completely free and open-source. There are no usage fees for the library. If you use Traceloop AI’s cloud offering, they provide a free plan (up to 50,000 spans per month) and then custom enterprise pricing.

Pros and Cons

The major benefit of OpenLLMetry is flexibility and ownership. Because it uses open standards, you can send its traces to any compatible backend. If you want total control, you can host the trace storage yourself.

The con is that the library covers only instrumentation. On its own, it doesn't provide the rich LLM-specific UI, prompt management, or evaluation features of LangSmith or Langfuse, so you must provide your own backend (or use Traceloop's cloud).

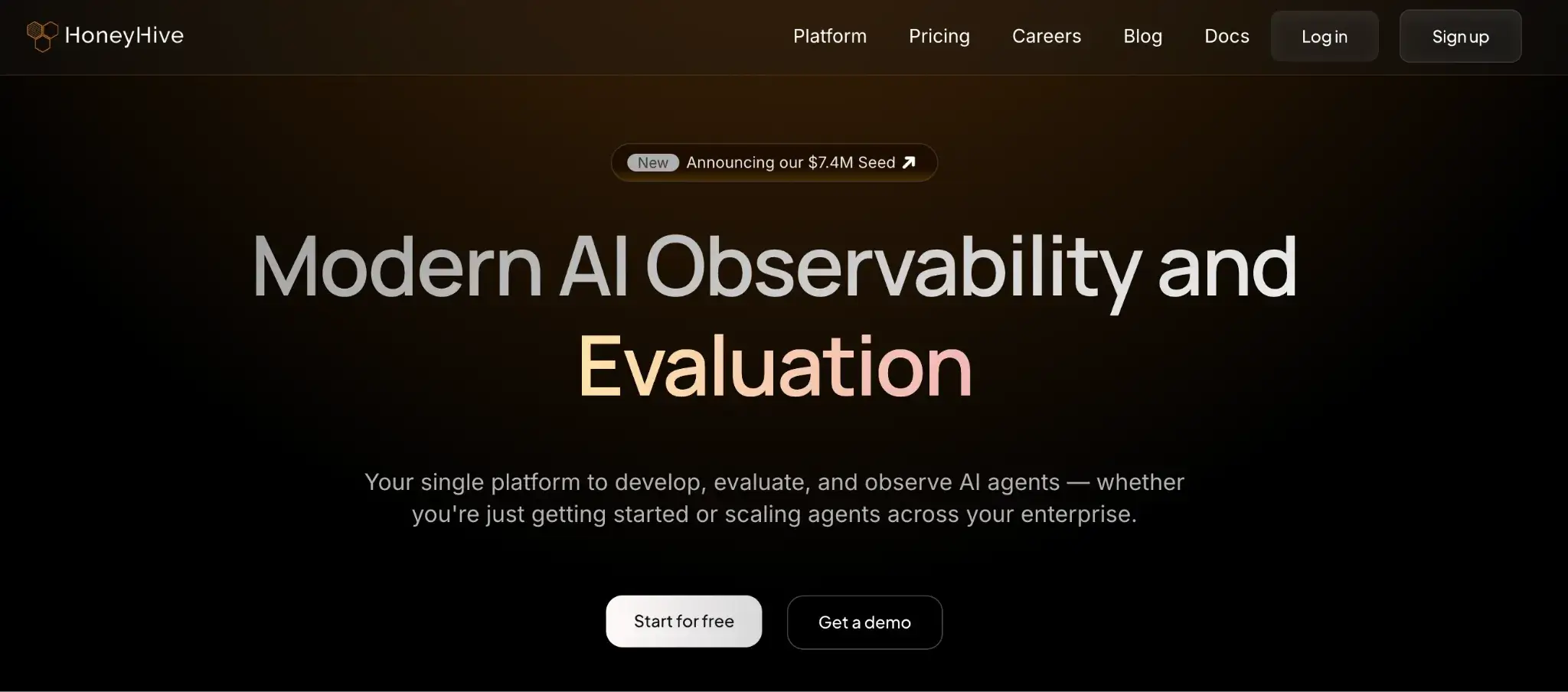

6. HoneyHive

HoneyHive is a comprehensive, proprietary platform focused on the full lifecycle of LLM application development, with a very strong emphasis on evaluation and the dev-prod feedback loop.

Features

- Ingest agent traces through OpenTelemetry (OTLP) to collect structured observability data from any LLM or agent pipeline.

- Replay complete chat and agent sessions to inspect every tool invocation, LLM exchange, and state transition in sequence.

- Visualize execution timelines and dependency graphs to understand how each step interacts and where delays occur.

- Filter logs by prompt, output, or user session to identify recurring issues and error clusters.

- Configure monitors and alerts that detect anomalies in latency, cost, or model errors and notify your team instantly.
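Under the hood, a latency or cost monitor is a threshold check over a window of recent events. The sketch below is a generic illustration, not HoneyHive's implementation: it computes a nearest-rank p95 latency and a window cost total and returns alert messages. The thresholds and sample data are hypothetical.

```python
from typing import List, Sequence


def p95(values: Sequence[float]) -> float:
    """Nearest-rank 95th percentile: the value at rank ceil(0.95 * n)."""
    ordered = sorted(values)
    rank = (95 * len(ordered) + 99) // 100  # integer ceiling avoids float rounding
    return ordered[rank - 1]


def check_window(
    latencies_ms: Sequence[float],
    costs_usd: Sequence[float],
    max_p95_ms: float = 2000.0,
    max_cost_usd: float = 5.0,
) -> List[str]:
    """Return alert messages for one window of recent LLM calls (empty list = healthy)."""
    alerts = []
    latency = p95(latencies_ms)
    if latency > max_p95_ms:
        alerts.append(f"p95 latency {latency:.0f} ms exceeds {max_p95_ms:.0f} ms")
    total_cost = sum(costs_usd)
    if total_cost > max_cost_usd:
        alerts.append(f"window cost ${total_cost:.2f} exceeds ${max_cost_usd:.2f}")
    return alerts


# Hypothetical window: 18 normal calls plus two slow outliers.
latencies = [800.0] * 18 + [2500.0, 3100.0]
costs = [0.02] * 20
print(check_window(latencies, costs))
```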

Pricing

HoneyHive offers a generous free Developer tier capped at 10,000 events/month and 30-day retention, with core observability features. The Enterprise plan includes optional on-prem deployment for regulated teams.

Pros and Cons

HoneyHive’s appeal is its agent-centric focus and ease of use. The dev-prod feedback loop is a killer feature for teams serious about application quality. Since it’s OTLP-compatible, you’re not locked into a proprietary SDK. Compared to LangSmith, HoneyHive is more purpose-built for agents and includes session replays.

The con is that it’s SaaS-first: as with LangSmith, self-hosting is available only on the paid Enterprise plan, so teams that need pure on-prem deployment might hesitate. Also, HoneyHive is a newer platform with a smaller user base, so its ecosystem is still growing. While it offers many features, some teams might find the UI less mature than the incumbents'.

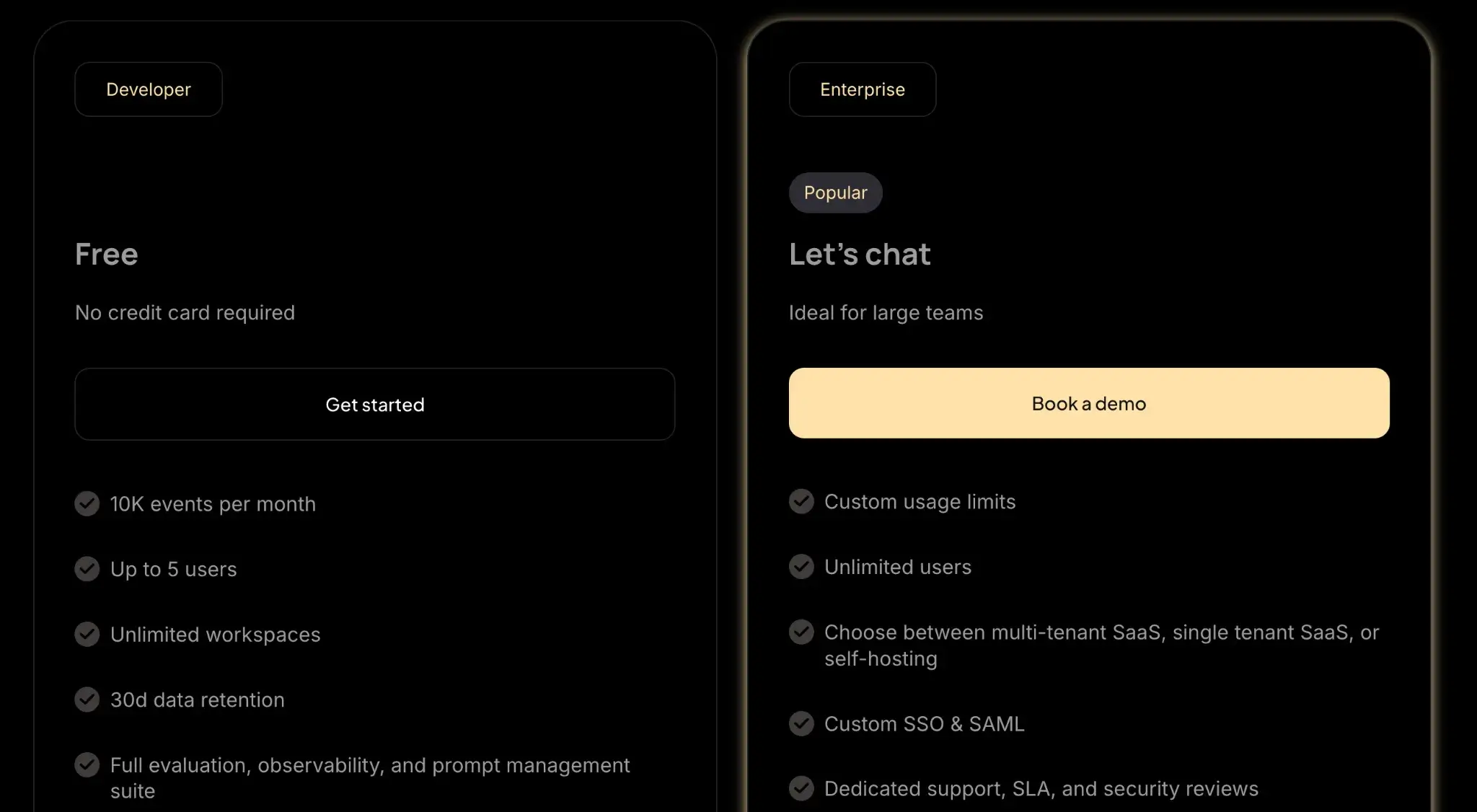

7. Tracely

Evidently AI is well known for its open-source library for ML model evaluation, monitoring, and drift detection. Tracely is their newer open-source library (based on OpenTelemetry) for capturing LLM traces, which then feed into the main Evidently platform for analysis.

Features

- Connect your LLM traces directly to Evidently's powerful evaluation engine, which includes over 100 checks for things like data drift, concept drift, and model quality.

- Explore multi-view dashboards in Evidently Cloud to visualize timelines, conversation turns, and detailed trace spans step-by-step.

- Run automated evaluations on your classical ML models and your new LLM applications in one platform and with the same evaluation tools.
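Drift checks compare a current sample against a reference. The Population Stability Index (PSI), one of the statistics Evidently offers for drift detection, is simple enough to sketch with the standard library. This is an illustrative implementation, not Evidently's code; the bin edges, the epsilon for empty bins, and the sample response lengths are hypothetical.

```python
import math
from typing import List, Sequence


def bin_shares(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Share of values in each [edges[i], edges[i+1]) bin; the last bin also includes edges[-1]."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            is_last = i == len(counts) - 1
            if edges[i] <= v < edges[i + 1] or (is_last and v == edges[-1]):
                counts[i] += 1
                break
    total = sum(counts)
    return [c / total for c in counts]


def psi(reference: Sequence[float], current: Sequence[float],
        edges: Sequence[float], eps: float = 1e-4) -> float:
    """PSI = sum over bins of (cur - ref) * ln(cur / ref)."""
    score = 0.0
    for r, c in zip(bin_shares(reference, edges), bin_shares(current, edges)):
        r, c = max(r, eps), max(c, eps)  # floor empty bins to avoid log(0)
        score += (c - r) * math.log(c / r)
    return score


edges = [0, 50, 100, 200, 400]  # hypothetical bins for response length in tokens
reference = [30, 45, 60, 80, 90, 120, 150, 180, 220, 300]
longer = [150, 180, 220, 250, 300, 320, 350, 380, 390, 399]

print(f"PSI vs. itself:           {psi(reference, reference, edges):.3f}")
print(f"PSI vs. longer responses: {psi(reference, longer, edges):.3f}")
```

A common rule of thumb treats PSI above 0.25 as a significant shift; the longer responses land well above it, while comparing the reference with itself gives 0.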

Pricing

Tracely itself is completely free (MIT-licensed). You can run it on any infrastructure at no cost. If you use Evidently AI’s hosted platform, you can pick from any of the following plans:

- Developer: Free

- Pro: $50 per month

- Expert: $399 per month

- Enterprise: Custom pricing

Pros and Cons

The big advantage of Tracely is that it’s free and OSS. You get fine-grained trace capture without paying per event. It integrates with Evidently (also OSS) for a full stack. It's an excellent choice for teams that want to apply rigorous statistical monitoring to their LLMs.

On the downside, Tracely doesn’t include its own UI, and you rely on a backend viewer. Also, the "full" experience, including the interactive trace viewer, is part of the commercial version.

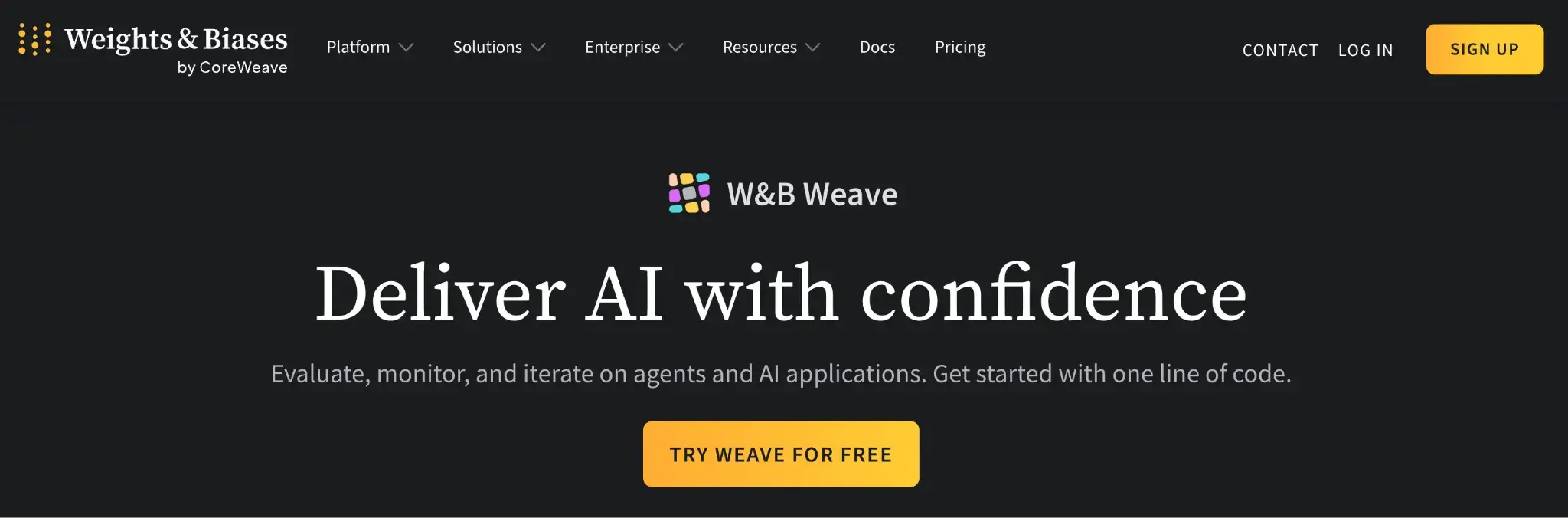

8. Weights & Biases Weave

Weights & Biases (W&B) is a dominant platform in the classical MLOps space for experiment tracking. W&B Weave is their set of tools for extending this capability to LLM applications, making it a natural choice for teams already in the W&B ecosystem.

Features

- Capture every LLM request and response within your W&B runs to create complete, query-level traces.

- Create and run evaluations that compare prompts, models, or datasets side by side to measure consistency and quality.

- Track live model performance for latency, token usage, and error rates, and define custom domain-specific quality metrics in production.

- Integrate traces with your existing W&B workspace to link experiments, visualize metrics, and share dashboards across your team.
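Side-by-side evaluation means running each variant over the same dataset with the same scorer and comparing aggregate scores. The sketch below shows that loop offline; it does not use Weave's evaluation API, and the dataset, the stub_model stand-in for an LLM, and the exact-match scorer are all hypothetical.

```python
from statistics import mean
from typing import Callable, Dict, List

DATASET: List[Dict[str, str]] = [
    {"question": "2 + 2", "expected": "4"},
    {"question": "capital of France", "expected": "Paris"},
    {"question": "3 * 3", "expected": "9"},
]


def stub_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call: answers tersely only when told to."""
    answers = {"2 + 2": "4", "capital of France": "Paris", "3 * 3": "9"}
    question = prompt.split("Q: ")[-1]
    if prompt.startswith("Answer with just the value."):
        return answers.get(question, "")
    return f"The answer is {answers.get(question, 'unknown')}."


def exact_match(output: str, expected: str) -> float:
    return 1.0 if output.strip() == expected else 0.0


def evaluate(template: str, model: Callable[[str], str]) -> float:
    """Mean score of one prompt variant over the whole dataset."""
    scores = [
        exact_match(model(template.format(q=row["question"])), row["expected"])
        for row in DATASET
    ]
    return mean(scores)


variants = {
    "terse": "Answer with just the value. Q: {q}",
    "chatty": "Please help me out. Q: {q}",
}
results = {name: evaluate(template, stub_model) for name, template in variants.items()}
print(results)
```

The chatty variant scores 0.0 despite giving correct answers, a reminder that the scorer shapes the comparison as much as the prompts do.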

Pricing

Weights & Biases is free for personal projects, with a generous 100GB storage limit. Beyond that, it offers two paid plans:

- Pro: $60 per month

- Enterprise: Custom pricing

Pros and Cons

For teams already using W&B to track model training, Weave is a massive pro. It integrates LLM observability directly into their existing MLOps platform. You get the power of W&B’s dashboards and collaboration tools, plus the LLM-specific tracing.

However, Weave is not a standalone tool. It requires a W&B account and familiarity with that system, so non-W&B users may find it heavyweight. Moreover, Weave is still maturing as a product, so some advanced observability features, like multi-step session replay, are less polished than in specialized tools.

9. Opik by Comet

Similar to W&B, Comet is another leading MLOps platform for experiment tracking. Opik is their integrated toolset for LLM application observability and evaluation. At its core, it provides rich tracing as well as built-in evaluation and guardrail testing.

Features

- Offers an interactive UI that lets you record and visualize every trace and span from your LLM workflows, from data retrieval to final model response.

- Create and run evaluations that benchmark prompts, models, and datasets using built-in metrics like BLEU or LLM-judge scores.

- Automate continuous testing by integrating Opik into CI/CD pipelines and running pytest-style unit tests on every deployment.

- Monitor live production runs to detect failing prompts, drift, or degraded model quality, and feed those insights directly back into development.

- Ingest traces from any framework or language through native OpenTelemetry support for complete cross-system visibility.

Pricing

Opik is open-source and can be self-hosted with full tracing features. Comet also offers three hosted plans:

- Free Cloud: Free

- Pro Cloud: $39 per month

- Enterprise: Custom pricing

Pros and Cons

Opik’s strength is that it is open-source with a full feature set. All of its tracing, evaluation, and guardrail functionality is included in the free code. Plus, it also has excellent integrations and is popular among many enterprise teams.

Like W&B, the main con is that it's part of a larger, comprehensive platform. This is a huge benefit if you're already a Comet user, but it might be too much if you're just looking for a simple, standalone trace logger.

The Best LangSmith Alternatives for LLM Observability

Each tool excels in different contexts, from agent monitoring to full-stack observability. Here’s how to choose the right one for your stack.

- If you need an open-source replacement > choose Langfuse. It is the most direct competitor, offering a clean UI, prompt management, and an easy-to-self-host open-source model.

- If you need full MLOps reproducibility > choose ZenML. It helps you build truly reproducible, auditable, and versioned pipelines for your LLM and ML models.

- If you need local-first debugging and RAG evaluation > choose Arize Phoenix. It’s great for debugging and evaluating RAG pipelines during development.


If you’re interested in taking your AI agent projects to the next level, use ZenML. We have built first-class support for agentic frameworks (like CrewAI, LangGraph, and more) inside ZenML for users who like pushing the boundaries of what AI agents can do. With ZenML, you can seamlessly integrate whichever agent framework you choose into robust, production-grade workflows. Join our waitlist to get started.