2025 is shaping up to be the year of Agentic AI. Two emerging open-source libraries in this space are Smolagents (from Hugging Face) and LangGraph.

Both aim to help you build agentic AI workflows that can call tools, remember past steps, and even collaborate with other agents, but they take different approaches under the hood.

In this Smolagents vs LangGraph comparison, we compare both frameworks across their key features, integrations, and pricing to help you choose the right tool for your multi-agent applications.

Smolagents vs LangGraph: Key Takeaways

🧑‍💻 Smolagents: A lightweight library from Hugging Face where agents ‘think in code.’ Its core philosophy centers on a Python-native developer experience. Agents log steps in memory but do not persist state across sessions by default. Safety relies on sandboxing, and the framework is free to use.

🧑‍💻 LangGraph: A low-level framework from the LangChain team for building stateful, multi-agent apps as explicit graphs of nodes and edges, with branching, loops, and hierarchical orchestration. Execution is easier to constrain because the agent follows predefined nodes and edges rather than running model-generated code. It’s ideal for developers building complex, predictable agentic systems within the LangChain ecosystem.

Smolagents vs LangGraph: Framework Maturity and Lineage

The maturity and origins of a framework provide important context for adoption. While both are relatively new, they originate from different backgrounds and show different community traction signals.

As of 27th September 2025

There’s a notable difference in numbers. While both Smolagents and LangGraph were released within weeks of each other, Smolagents' higher GitHub star count reflects strong interest from the developer community, likely driven by its backing from Hugging Face. LangGraph, on the other hand, comes from the well-established LangChain lineage, with a focus on enterprise readiness.

Smolagents vs LangGraph: Features Comparison

Before we dive into a detailed feature-by-feature comparison, here’s a table summarizing the core differentiators:

Feature 1. Agent Types and Templates

The fundamental difference between Smolagents and LangGraph begins with how they abstract the very concept of an agent and its workflow. Their approaches reveal a deep philosophical split.

Smolagents

As the name suggests, Smolagents (Small Agents) provides a framework for writing LLM agents with minimal abstraction, using CodeAct (Code as Action). The framework offers two primary agent types:

- CodeAgent: This is the default and most distinctive type. It uses an LLM to generate executable Python code snippets at each step of its reasoning process.

- ToolCallingAgent: This is a more conventional agent that generates JSON objects to specify which tool to call and with what arguments, similar to OpenAI's function calling.

In Smolagents, the workflow is not predefined and emerges dynamically from the Python script in which the agent is run. The developer writes standard Python logic, and the agent operates within that context.

In either case, you can customize the agent’s system prompt via optional templates or instruction strings. Smolagents also provides PromptTemplates classes for more structured prompt management.

Here’s an example to create and run a Smolagent that uses Python code to solve the task and return the result.
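A minimal sketch of what such an example might look like, assuming a recent smolagents release in which CodeAgent, InferenceClientModel, and the tool decorator are importable from the top-level package (older releases name the model class HfApiModel). The word_count tool and the task string are hypothetical. Running the agent needs a Hugging Face token and network access, so the library import and model call are kept inside a function.

```python
def word_count(text: str) -> int:
    """Count the whitespace-separated words in a piece of text.

    Args:
        text: The text whose words should be counted.
    """
    return len(text.split())


def run_code_agent(task: str):
    """Build a CodeAgent with one custom tool and run it on `task`."""
    # Imported lazily so this file loads even without smolagents installed.
    from smolagents import CodeAgent, InferenceClientModel, tool

    model = InferenceClientModel()  # falls back to the library's default hosted model
    agent = CodeAgent(tools=[tool(word_count)], model=model)
    # The agent writes Python that may call word_count(...), executes it,
    # and returns whatever it passes to its final answer.
    return agent.run(task)


# Example call (requires credentials, so it is left commented out):
# run_code_agent("How many words are in the sentence 'agents think in code'?")
```

The tool itself is an ordinary typed Python function with a docstring; the agent decides when to call it from the code it generates.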

LangGraph

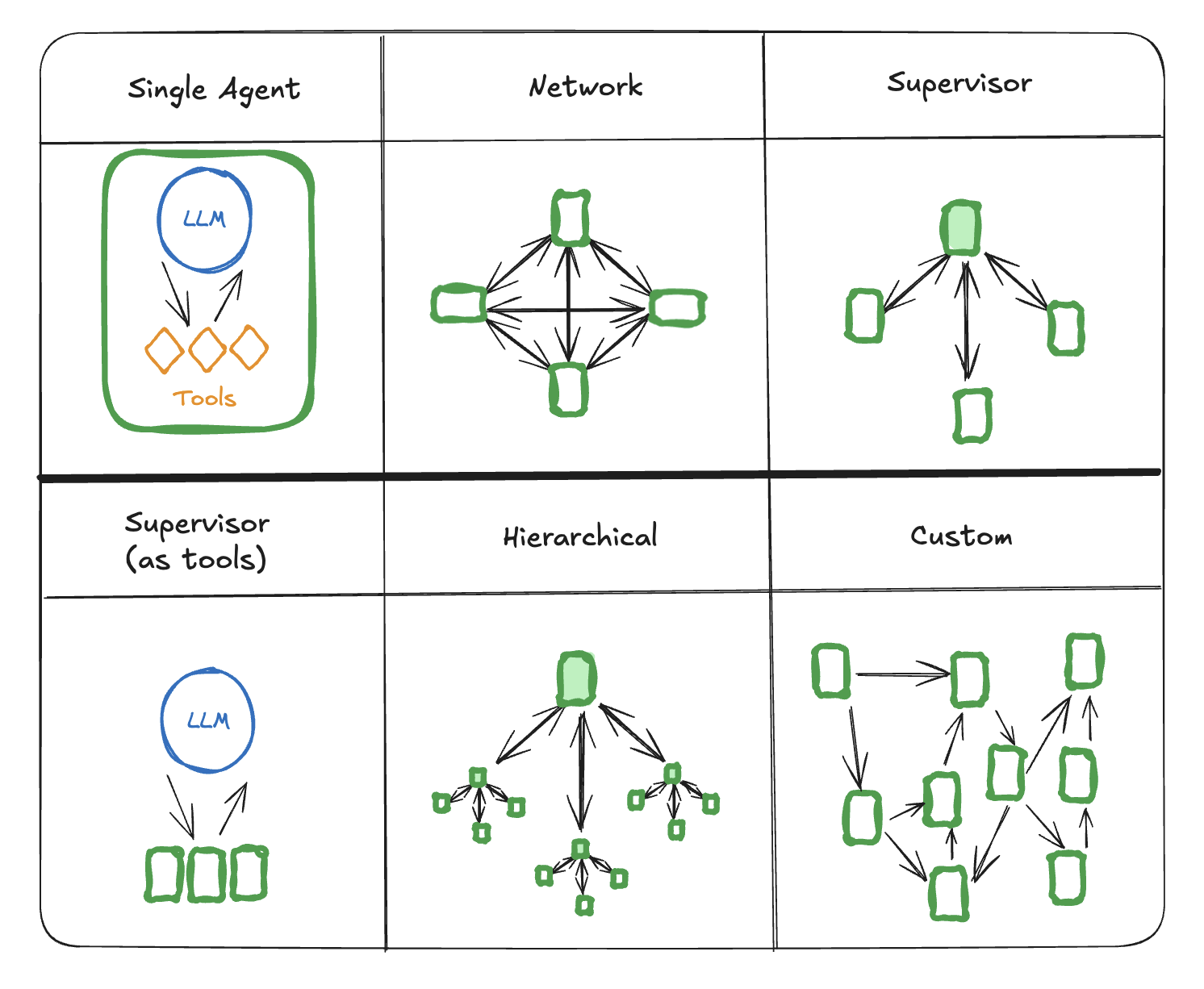

LangGraph does not have ‘agent types’ in the same sense as Smolagents. Instead, it takes a graph-based approach to define agent logic. Here, an ‘agent’ is typically a node or a collection of nodes and edges within a larger StateGraph or GraphBuilder object. Each node in the graph is either an LLM call or a Python function, and edges are rules that explicitly connect them.

Moreover, LangGraph provides architectural patterns, like Network, Supervisor, and Hierarchical, for composing multi-agent systems (as shown in the image at the top).

Unlike Smolagents’ PromptTemplates, prompts in LangGraph are provided as static strings or dynamic functions. They integrate with the underlying LangChain message schema; you supply them to the agent constructor as shown below.
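The sketch below illustrates both prompt styles, assuming a LangGraph version that ships langgraph.prebuilt.create_react_agent with a prompt parameter. The prompt text, the six-message threshold, and the build_agent helper are hypothetical; the model and tools are left as arguments so no specific provider is implied.

```python
STATIC_PROMPT = "You are a concise research assistant."


def dynamic_prompt(state: dict) -> list:
    """Build the model input from the current graph state.

    Long conversations get a brevity instruction; short ones get a request
    for reasoning. Messages are plain role/content dicts.
    """
    messages = list(state["messages"])
    style = "Keep answers brief." if len(messages) > 6 else "Explain your reasoning."
    system = {"role": "system", "content": f"{STATIC_PROMPT} {style}"}
    return [system] + messages


def build_agent(model, tools, dynamic: bool = False):
    """Create a prebuilt ReAct-style agent with a static or dynamic prompt."""
    # Imported lazily so this file loads even without langgraph installed.
    from langgraph.prebuilt import create_react_agent

    prompt = dynamic_prompt if dynamic else STATIC_PROMPT
    return create_react_agent(model, tools, prompt=prompt)
```

A static string is enough when the instructions never change; the function form is useful when the system message should depend on what is already in the state.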

Bottom line: LangGraph does look easy to work with, but there’s a significant tradeoff in flexibility. Your agents can only operate within the patterns and flows you define.

Smolagents treats an agent as a flexible, code-generating entity, and the 'workflow' is simply the Python environment it executes in.

Feature 2. State and Memory

An agent's ability to remember past interactions is what lets it carry context from one step, or one session, to the next. Here again, Smolagents and LangGraph take vastly different approaches. Let’s see how:

Smolagents

Agents in Smolagents inherently remember what happened during a single run. Each step produces memory entries that are stored in the AgentMemory class. This object primarily functions as a detailed log that records the history of a single agent run. You can inspect or replay a run after it finishes.
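As a rough illustration of inspecting that log, the helper below walks agent.memory.steps after a run and lists the type of each recorded step. The summarize_run name is hypothetical, and the sketch assumes only that the agent exposes its step log as agent.memory.steps.

```python
def summarize_run(agent) -> list:
    """Return the class name of every step stored in the agent's memory.

    Works on any object that exposes `memory.steps` as an iterable, which is
    how a smolagents agent is assumed to expose its step log here.
    """
    return [type(step).__name__ for step in agent.memory.steps]


# Typical use after a run (commented out because it needs a real agent):
# agent.run("some task")
# print(summarize_run(agent))
# agent.replay()  # prints the recorded steps of the last run
```

Because the log lives on the agent object, it disappears when the process exits unless you save it yourself.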

LangGraph

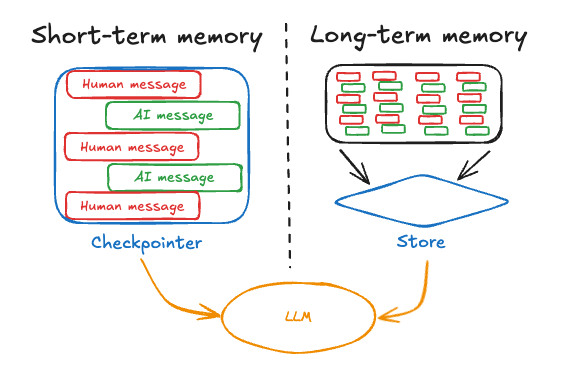

Persistence is a core design principle in LangGraph. You attach a checkpointer, such as an InMemorySaver or a database-backed saver, when compiling a graph. With a checkpointer attached, LangGraph automatically saves the complete state of the graph after every step. If something crashes, LangGraph can resume from the last checkpoint.
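A small sketch of attaching a checkpointer, assuming a LangGraph version that provides InMemorySaver in langgraph.checkpoint.memory. The CounterState schema, the increment node, and the thread ID are hypothetical and only serve to show where the checkpointer and the thread configuration go.

```python
from typing import TypedDict


class CounterState(TypedDict):
    count: int


def increment(state: CounterState) -> dict:
    """Node function: return the state update produced by this step."""
    return {"count": state["count"] + 1}


def build_graph():
    """Compile a one-node graph that checkpoints its state in memory."""
    # Imported lazily so this file loads even without langgraph installed.
    from langgraph.checkpoint.memory import InMemorySaver
    from langgraph.graph import END, START, StateGraph

    builder = StateGraph(CounterState)
    builder.add_node("increment", increment)
    builder.add_edge(START, "increment")
    builder.add_edge("increment", END)
    return builder.compile(checkpointer=InMemorySaver())


def run_once(thread_id: str = "demo-thread"):
    """Run the graph once and read back the latest saved state."""
    graph = build_graph()
    # Checkpoints are grouped per thread, so every call names its thread.
    config = {"configurable": {"thread_id": thread_id}}
    result = graph.invoke({"count": 0}, config)
    snapshot = graph.get_state(config)
    return result, snapshot.values
```

Swapping the in-memory saver for a database-backed one is what lets the saved state survive a process restart.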

To use memory across calls, LangGraph provides constructs for long-term memory, such as:

- Semantic Memory: Facts about the world or a user (e.g., 'The user's name is Alex').

- Episodic Memory: Records of past experiences, often used for few-shot learning (e.g., 'Last time I was asked to write a report, these were the successful steps I took').

- Procedural Memory: Learned rules or processes for how to perform tasks.

In short, LangGraph’s state management is built to be durable and flexible, far beyond the simple in-memory step log of Smolagents. No wonder it’s sought out for production-grade agent building.

Bottom line: Smolagents maintains in-memory logs of each agent’s steps (agent.memory) and can replay a run, but it does not persist state across sessions by default.

LangGraph persists every run’s state via checkpoints, which allows easy debugging and true multi-turn memory. This also positions LangGraph for more sophisticated, enterprise-grade applications like long-term personal assistants or adaptable systems.

Feature 3. Execution Paradigm and Action Format

This is the core differentiator between Smolagents and LangGraph, and by far the most defining feature of both tools.

Smolagents

Smolagents’ flagship is its CodeAgent. Instead of generating structured data like JSON to request a tool execution, the CodeAgent generates and executes Python code snippets at each step to perform actions.

What this means is: A single block of generated Python can write loops, define variables, and call multiple tools sequentially.

In practice, when you call agent.run(prompt), Smolagents passes the prompt to the model, gets back a Python snippet, and immediately runs it.

For instance, instead of calling the model once per tool, the model writes code to call three tools in a loop. The approach greatly reduces the number of LLM calls for multi-step tasks.

Hugging Face’s team reports that CodeAgents often need about 30% fewer LLM steps, and correspondingly lower cost, than classic ReAct-style JSON agents of the kind LangGraph favors.

For example, to find a user's age and comment on it, a CodeAgent can generate and execute a multi-line script in a single step.
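The snippet below shows the kind of script a CodeAgent might produce for that task. It is an illustration only: get_user_age is a hypothetical tool stubbed with fixed data, and final_answer is a plain stand-in for the agent's final-answer tool.

```python
def get_user_age(user_name: str) -> int:
    """Hypothetical tool: look up a user's age (stubbed with fixed data)."""
    ages = {"alex": 34, "sam": 17}
    return ages[user_name.lower()]


def final_answer(answer: str) -> str:
    """Stand-in for the tool an agent calls to return its result."""
    return answer


# --- What the model might generate and run in a single step ---
age = get_user_age("Alex")
if age >= 18:
    comment = f"Alex is {age}, so they are an adult."
else:
    comment = f"Alex is {age}, so they are a minor."
result = final_answer(comment)
```

The lookup, the branch, and the final answer all happen in one generated block, where a JSON-style agent would typically need one model call to request the tool and another to comment on its result.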

LangGraph

LangGraph follows a more traditional and structured approach, often leveraging a model's built-in tool-calling capabilities.

An LLM-powered node outputs either entire messages or structured requests that the graph interprets. This request specifies a single tool and its arguments. The graph then routes the state to a dedicated ToolNode, which executes that specific tool call and returns the result.

The model’s job is to decide which function to call next or what message to send based on the graph definition, rather than writing code.
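To make the contrast concrete, here is a toy version of that request-and-route step in plain Python. It is not LangGraph's ToolNode; the message shapes, the TOOLS registry, and the get_user_age tool are hypothetical and only mirror the general pattern of a structured tool call.

```python
import json


def get_user_age(user_name: str) -> int:
    """Hypothetical tool: look up a user's age (stubbed with fixed data)."""
    return {"alex": 34, "sam": 17}[user_name.lower()]


# Registry mapping tool names to the functions that implement them.
TOOLS = {"get_user_age": get_user_age}


def tool_node(tool_call: dict) -> dict:
    """Execute one structured tool request and wrap the result as a message."""
    fn = TOOLS[tool_call["name"]]
    output = fn(**tool_call["args"])
    return {
        "role": "tool",
        "tool_call_id": tool_call["id"],
        "content": json.dumps(output),
    }


# The model emits data describing the call; it never writes the call itself.
tool_call = {"id": "call_1", "name": "get_user_age", "args": {"user_name": "Alex"}}
message = tool_node(tool_call)
```

The result goes back to the model as a message, and commenting on the age would take a further model call.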

Bottom line: LangGraph trades Smolagents’ code-first flexibility for an explicit, debug-friendly workflow where every possible action is defined in advance.

Feature 4. Safety and HITL

How a framework ensures safe and controlled execution is critical, especially when agents can take real-world actions. The safety models of Smolagents and LangGraph are direct consequences of their core paradigms.

Smolagents

Smolagents' primary safety concern arises from the CodeAgent's ability to write and execute code. To mitigate risks, Smolagents enforces safety by sandboxing the code.

- Restricted imports: By default, imports are disallowed. Only the tools you pass in, along with common print or math functions, are callable. Any attempt to import an unauthorized module or perform a forbidden operation will cause the agent to error out at that step.

- Sandboxed execution: You can further isolate code execution in secure environments by using Smolagents’ integrations with external sandboxed execution solutions like E2B, Modal, Docker, or a Pyodide+Deno WebAssembly sandbox. Hugging Face also offers a hosted sandbox you can leverage directly, removing the need to manage your own setup.

- Human-in-the-Loop (HITL): Smolagents supports step_callbacks for HITL control. For example, you can register a function to run after a PlanningStep. This lets a human operator pause the agent, review the generated plan, and either approve or modify it before execution continues.
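The import restriction in the first bullet can be pictured with a small static check. This is a toy illustration of the allowlist idea, not how Smolagents implements its interpreter; the ALLOWED_IMPORTS set and the find_forbidden_imports helper are hypothetical, and a check like this is no substitute for a real sandbox.

```python
import ast

# Hypothetical allowlist of top-level modules the generated code may import.
ALLOWED_IMPORTS = {"math", "statistics"}


def find_forbidden_imports(source: str, allowed=ALLOWED_IMPORTS) -> list:
    """Return the imported module names in `source` that are not allowlisted."""
    forbidden = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom):
            # Relative imports have no module name; treat them as forbidden.
            names = [node.module or ""]
        else:
            continue
        for name in names:
            # Compare on the top-level package, so "os.path" counts as "os".
            if name.split(".")[0] not in allowed:
                forbidden.append(name)
    return forbidden


generated = "import math\nimport os\nfrom subprocess import run\nprint(math.pi)"
blocked = find_forbidden_imports(generated)
```

A runner built on this idea would refuse to execute the snippet whenever the returned list is non-empty, which corresponds to the agent erroring out at that step.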

LangGraph

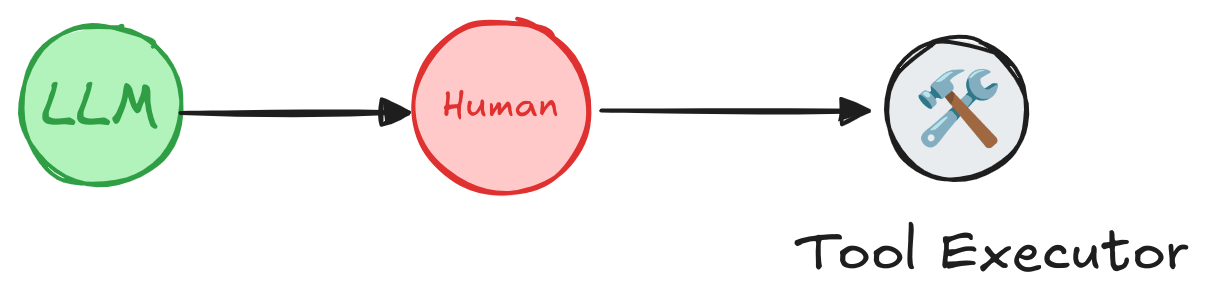

LangGraph's safety model is inherent because of its explicit graph architecture. Since agent logic is defined in nodes and prompts, it can only transition between those actions along the edges that you’ve created.

It also provides human-in-the-loop support as a fundamental feature. You get options to introduce human oversight:

- Static Interrupts: When compiling the graph, you can specify interrupt_before or interrupt_after on any node, forcing the graph to pause at that point every time.

- Dynamic Interrupts: A node's function can call the interrupt() primitive, which will pause execution dynamically based on the current state.

When interrupted, a human can inspect the entire graph state, modify any part of it, and then resume execution. This offers a fine-grained level of control at any point in the workflow.
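The pause, edit, and resume cycle can be sketched with a toy runner in plain Python. This is not LangGraph's API (there, you would pass interrupt_before when compiling and resume through the checkpointer); the node names, the run function, and its resuming flag are hypothetical.

```python
def plan(state: dict) -> dict:
    """Node 1: draft a plan from the request."""
    return {**state, "plan": f"email {state['recipient']}"}


def send(state: dict) -> dict:
    """Node 2: carry out the (possibly edited) plan."""
    return {**state, "sent": True}


NODES = [("plan", plan), ("send", send)]


def run(state: dict, start: int = 0, interrupt_before=(), resuming: bool = False):
    """Run nodes in order, pausing before any node named in interrupt_before.

    Returns (state, next_index). next_index is None once the run has finished.
    """
    for i in range(start, len(NODES)):
        name, fn = NODES[i]
        # When resuming, skip the pause on the node we previously stopped at.
        if name in interrupt_before and not (resuming and i == start):
            return state, i
        state = fn(state)
    return state, None


# First pass stops before "send" so a human can inspect the state.
state, paused_at = run({"recipient": "alex@example.com"}, interrupt_before=("send",))
# The reviewer edits the plan, then execution resumes from the paused node.
state["plan"] = "email alex@example.com (approved by reviewer)"
final_state, next_index = run(
    state, start=paused_at, interrupt_before=("send",), resuming=True
)
```

The key property is that the state is a plain value held between the two calls, so the reviewer can change any part of it before the remaining nodes run.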

Bottom line: Smolagents offers greater expressive power with its code-as-action paradigm, but requires the management of an external sandboxing environment for security.

LangGraph provides intrinsic safety through its structured design, but requires the developer to explicitly define all possible actions and transitions upfront.

The choice depends on whether a developer prefers to constrain the agent's environment (Smolagents) or its behavior (LangGraph).

Smolagents vs LangGraph: Integration Capabilities

No agentic framework operates in isolation. Its ability to connect with LLMs, data sources, and other tools in the MLOps ecosystem plays a crucial role.

Smolagents

Smolagents is tightly integrated with the Hugging Face ecosystem. It supports any HF model and Hugging Face Inference API providers, and it can use LangChain tools, MCP tools, or Hub Spaces as tools.

You can easily share and download tools, and even entire pre-configured agents, directly from the Hub.

For observability, Smolagents offers integrations with third-party platforms like AgentOps to track and analyze agent performance.

LangGraph

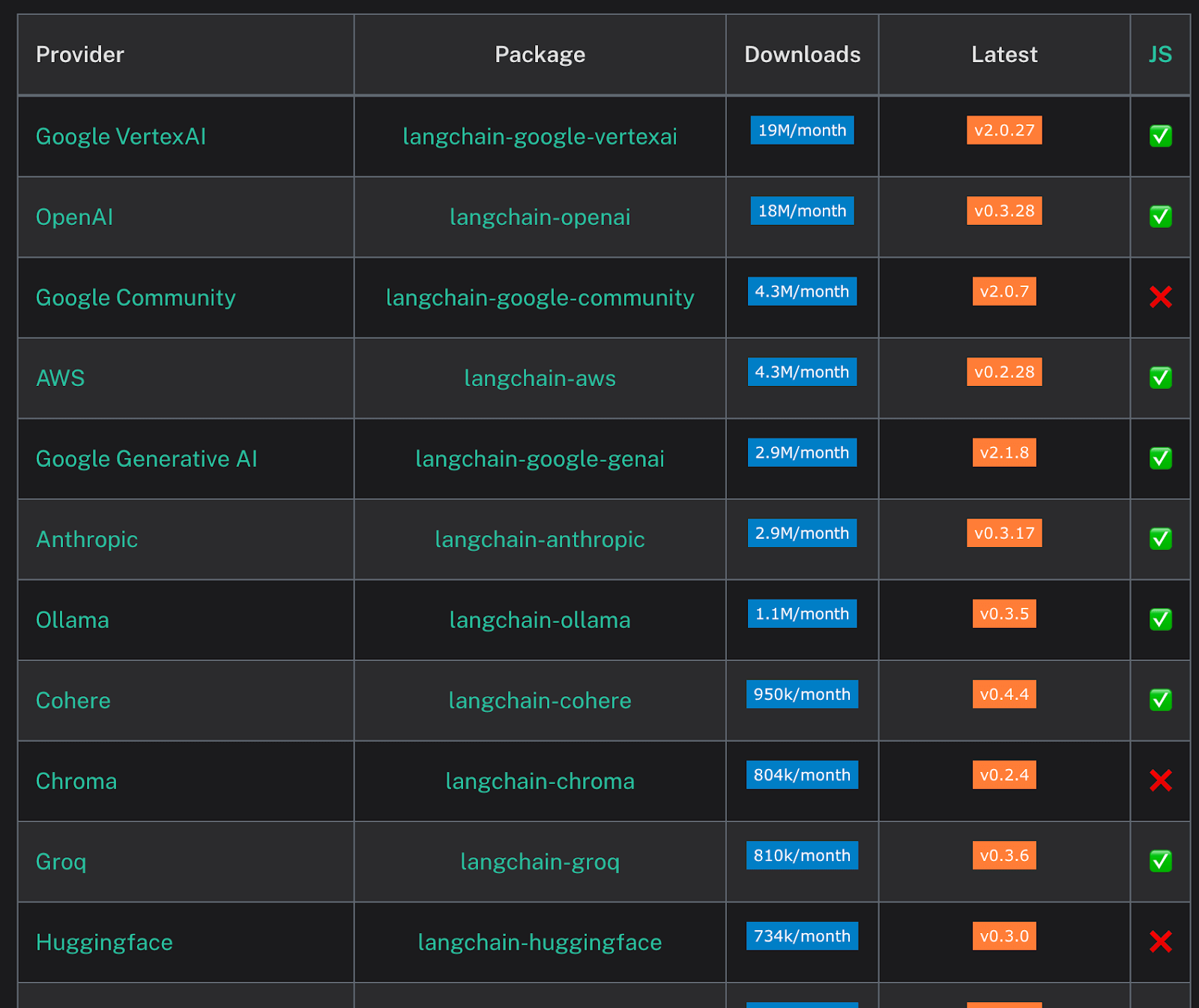

LangGraph sits on top of the LangChain framework, so it has access to everything LangChain supports, including:

- LangChain Components: The extensive library of integrations for LLMs, document loaders, text splitters, vector stores, and tools.

- LangSmith: Traces from LangGraph are automatically visualized in LangSmith, providing a step-by-step view of the graph's execution and the agent's reasoning process.

Smolagents vs LangGraph: Pricing

Both Smolagents and LangGraph are built on open-source cores and are free to use. Separately, the companies behind them have commercial offerings.

Smolagents

The core smolagents library from Hugging Face is open-source and free to use.

LangGraph

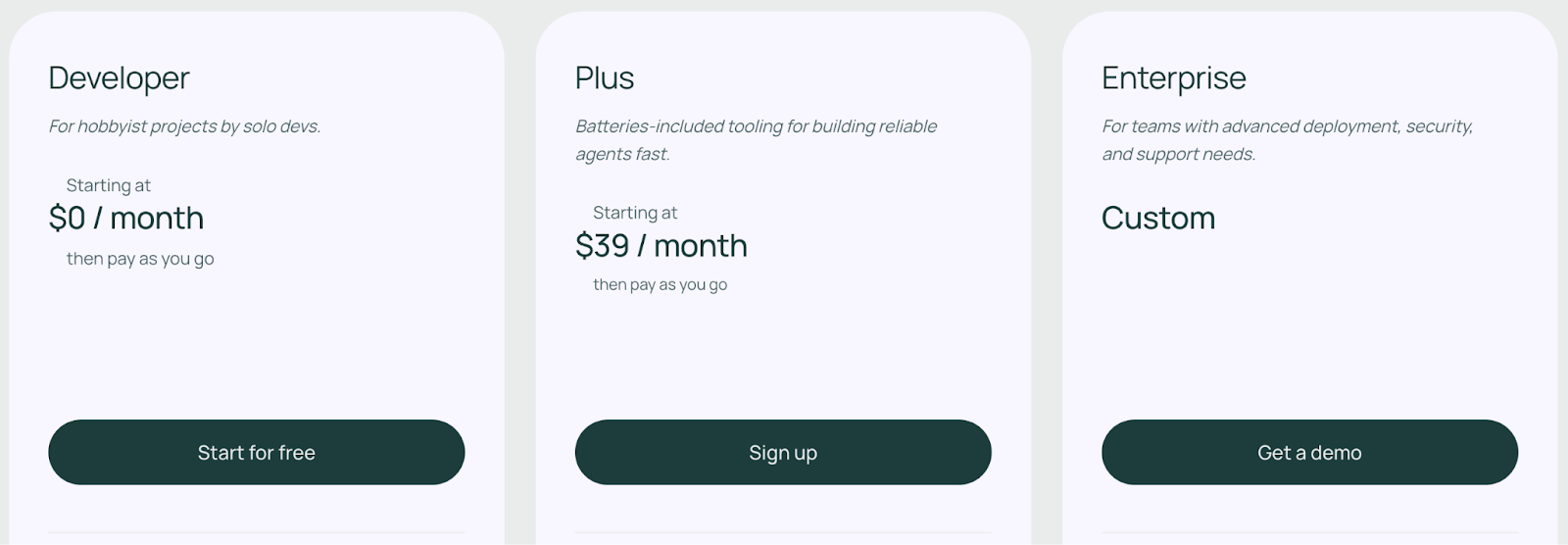

The core LangGraph library is also open-source and free to use. If you just use the LangGraph Python (or JS) library on your own, there’s no cost; it’s MIT licensed.

LangChain, the company behind the framework, also offers a managed service with a tiered pricing model made up of three plans:

- Developer: Free

- Plus: $39 per month

- Enterprise: Custom

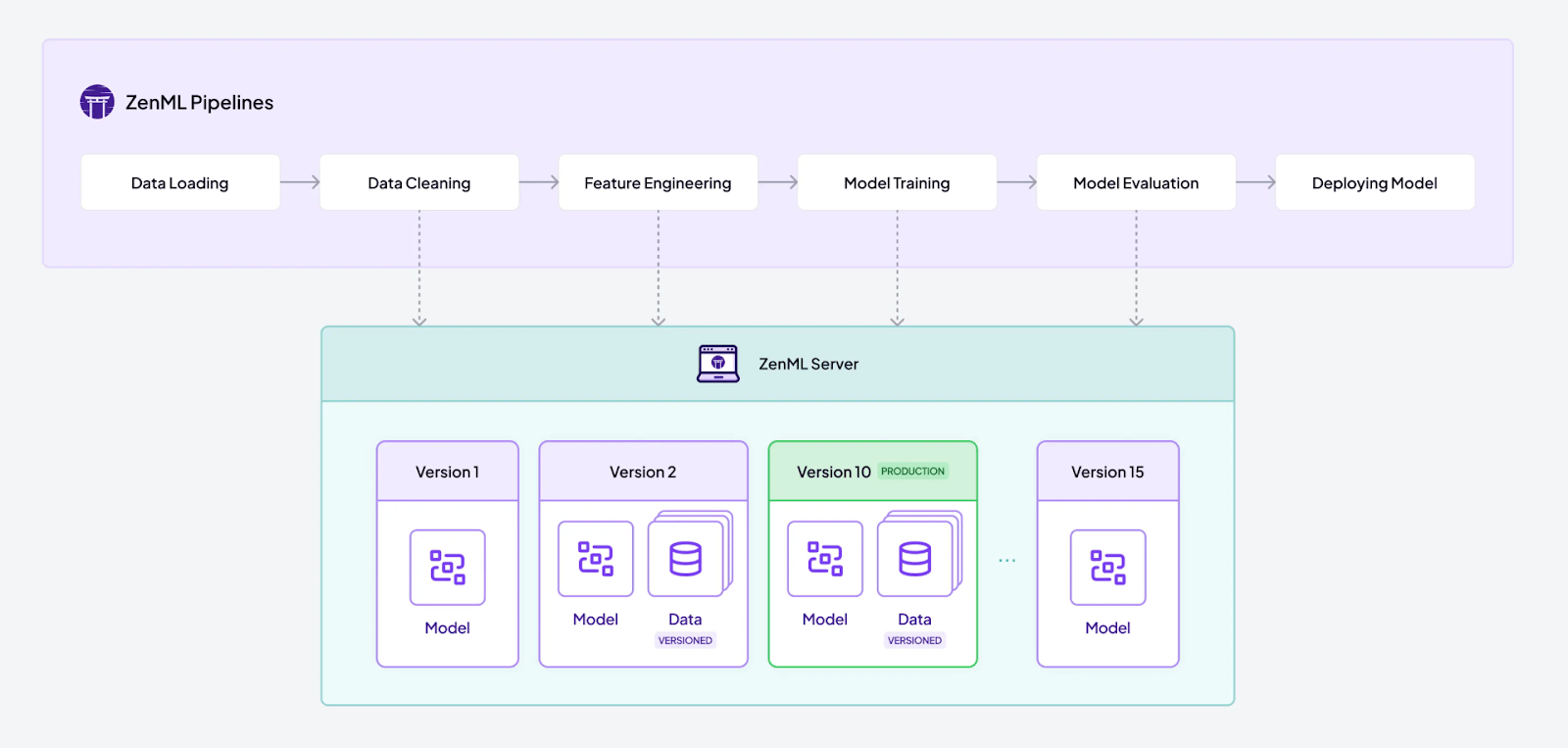

How ZenML Manages the Outer Loop when Deploying Agentic AI Workflows

Both Smolagents and LangGraph provide excellent tools for the 'inner loop' of development. However, building a production-grade agent requires solving the 'outer loop'.

The outer loop involves the entire lifecycle of deployment, monitoring, evaluation, and ensuring reproducibility. This is where ZenML complements both frameworks.

ZenML is an open-source MLOps + LLMOps framework that serves as the unifying outer loop for your AI agents. It complements agents built with Smolagents or LangGraph frameworks by governing the entire production lifecycle.

Here’s how ZenML adds value:

1. Embed Agents in End-To-End Pipelines

In ZenML, you can wrap an entire Smolagents or LangGraph workflow inside a ZenML pipeline step.

Within a single pipeline, you can manage data preparation for RAG, the agent's execution, and the subsequent evaluation of its output. Basically, ZenML connects every step in a defined sequence where outputs from one step can flow as inputs to others.

The end-to-end orchestration makes the entire process versioned, reproducible, and easily browsable on any connected infrastructure.
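A minimal sketch of that structure, assuming ZenML's step and pipeline decorators imported from the top-level zenml package. The three functions are hypothetical placeholders: run_agent stands in for a call to a Smolagents agent or a compiled LangGraph graph, and evaluate_answer is a trivial stand-in for a real quality metric.

```python
def prepare_context(query: str) -> str:
    """Placeholder for RAG data preparation."""
    return f"Context for: {query}"


def run_agent(context: str) -> str:
    """Placeholder for agent.run(...) or graph.invoke(...)."""
    return f"Answer based on [{context}]"


def evaluate_answer(answer: str) -> float:
    """Placeholder metric: 1.0 for a non-empty answer, else 0.0."""
    return 1.0 if answer.strip() else 0.0


def build_pipeline():
    """Wrap the plain functions as ZenML steps and wire them into a pipeline."""
    # Imported lazily so this file loads even without zenml installed.
    from zenml import pipeline, step

    prepare_step = step(prepare_context)
    agent_step = step(run_agent)
    eval_step = step(evaluate_answer)

    @pipeline
    def agent_pipeline(query: str = "What is LangGraph?"):
        # Each step's output flows into the next step as an input artifact.
        context = prepare_step(query)
        answer = agent_step(context)
        eval_step(answer)

    return agent_pipeline


# Example call (needs an initialized ZenML environment, so it is commented out):
# build_pipeline()()
```

Keeping the agent call inside its own step means its inputs and outputs are recorded alongside the data preparation and evaluation steps.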

2. Unified Visibility and Lineage

ZenML automatically tracks and versions every part of your pipeline, including input prompts, agent responses, the LLMs used, and any data artifacts.

If you tweak your Smolagents or LangGraph agent, ZenML can version those changes. This matters because even minor changes can lead to completely different agent behaviour. With ZenML, you know exactly what changed and when.

Our central dashboard provides a complete history of all runs, allowing your team to trace errors, compare outputs across different versions, and systematically debug failures.

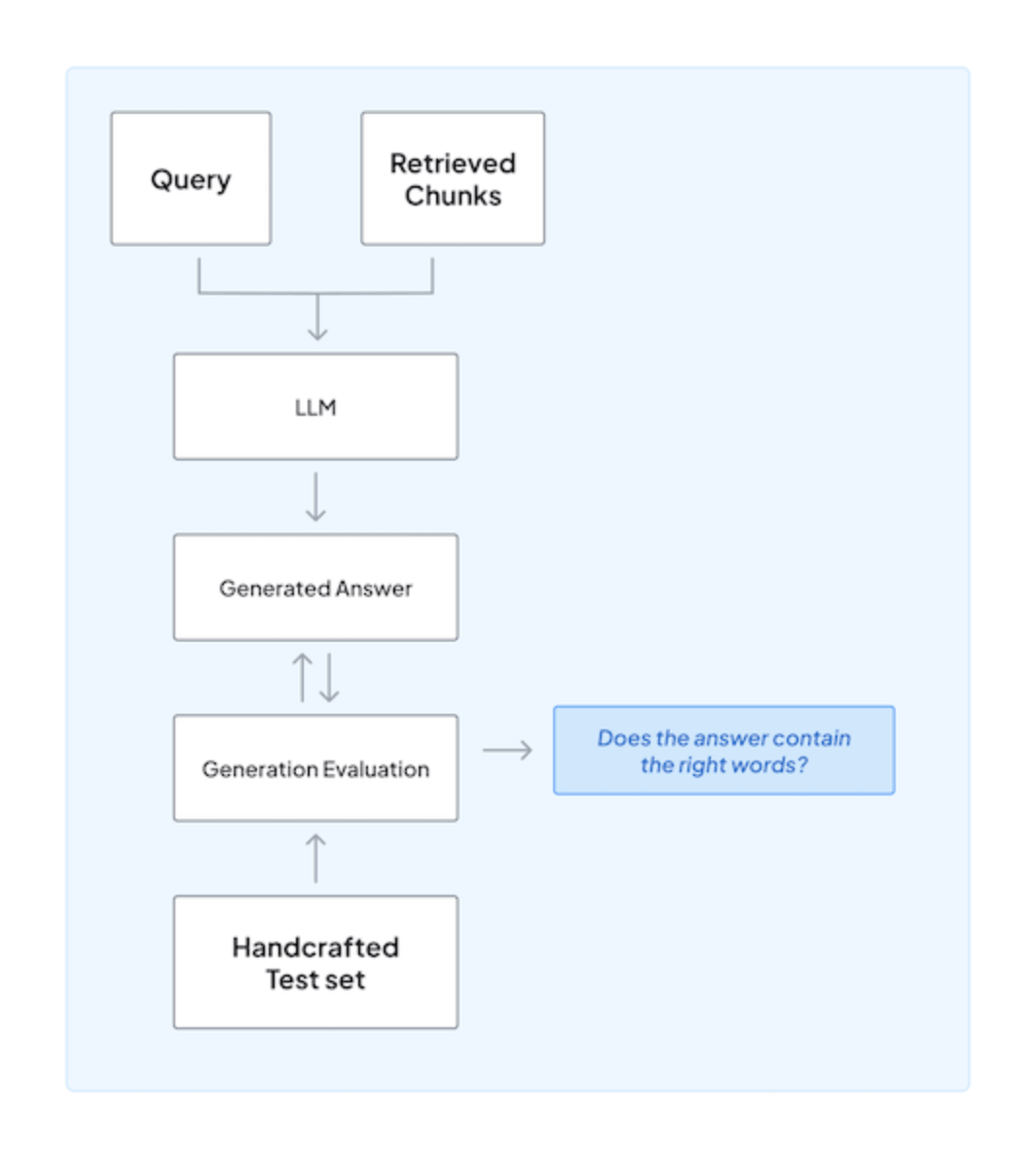

3. Continuous Quality Checks

ZenML pipelines can include dedicated evaluation steps that run automatically after each agent execution. These steps can measure output quality against predefined metrics, flag bad runs, and trigger alerts or fallbacks. This enables continuous quality monitoring and A/B testing of agents in a production setting.

4. Mix and Match Tools (avoid lock-in)

ZenML is framework-agnostic. Its component-and-stack model decouples your code from the underlying infrastructure. This means you can combine Smolagents, LangGraph, and even traditional ML models within a single, coherent pipeline. This approach avoids vendor lock-in and lets you use the best tool for each part of your application.

In short, Smolagents and LangGraph define what the agent does; ZenML governs how that agent lives, scales, and evolves in a production environment.

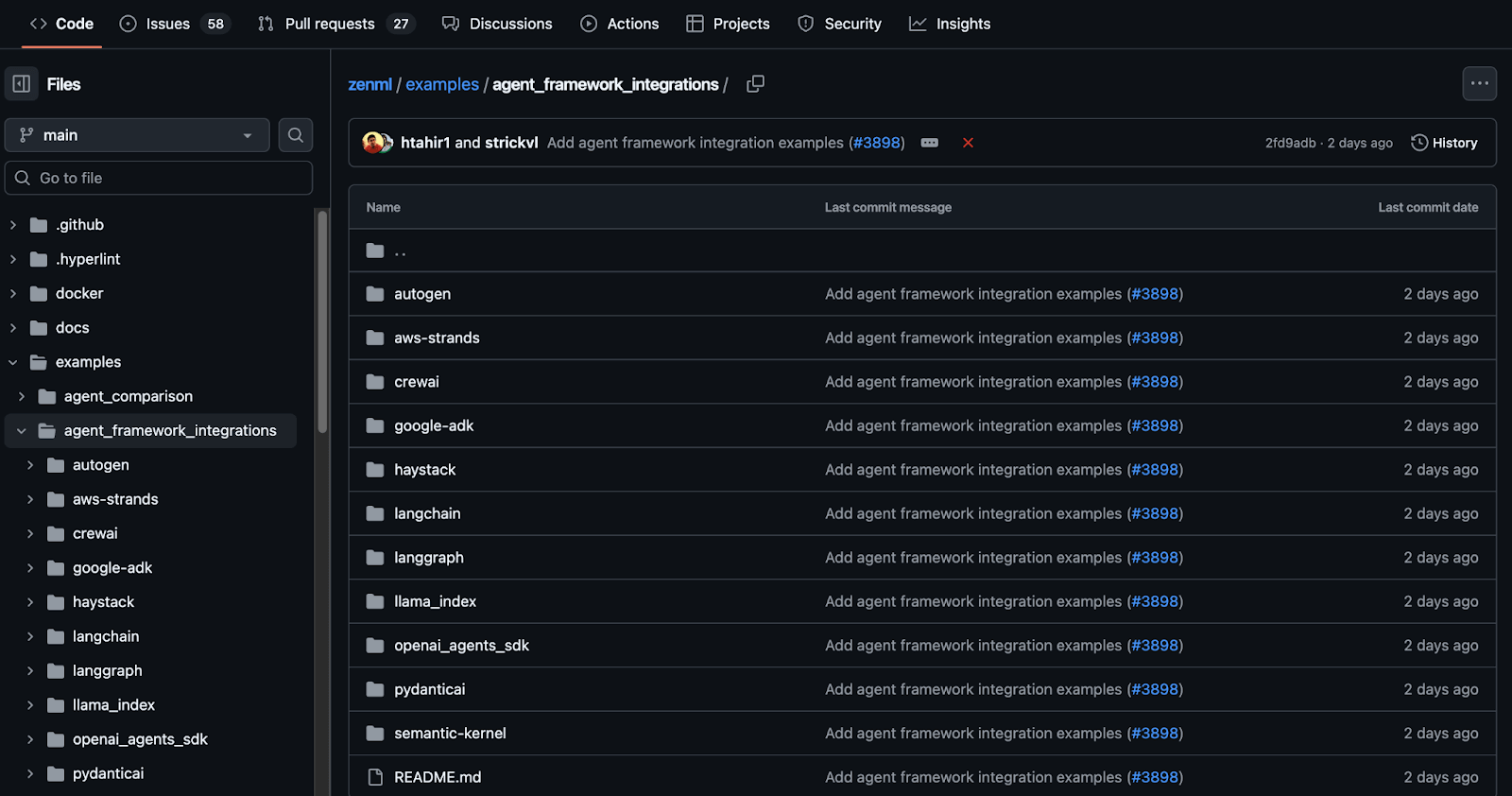

👀 Note: At ZenML, we have built several such integrations with tools like LangChain, LlamaIndex, CrewAI, and more. We are actively shipping new integrations that you can find on this GitHub page: ZenML Agent Workflow Integrations.


Which One’s Easier to Build and Run AI Agents: Smolagents vs LangGraph?

The decision between Smolagents and LangGraph is not about finding a universally ‘better’ framework. It’s about selecting the tool that best aligns with your project's architectural philosophy, your requirements for control versus flexibility, and your team's development workflow.

✅ Choose Smolagents if: You prioritize a ‘pure Python’ developer experience and your agent's logic is best expressed through dynamic, executable code.

✅ Choose LangGraph if: You are already invested in the LangChain ecosystem and want to build predictable systems with cyclical logic, intricate branching, and durable state management. Leverage its extensive integrations and the powerful observability of LangSmith.

Ultimately, both frameworks are pushing the boundaries of what is possible with agentic AI. As you move from building a prototype to deploying a production-grade system, the challenges of the 'outer loop' become critical.

✅ ZenML provides the essential MLOps and LLMOps layer to manage pipelines, evaluation, and scaling, giving you versioned workflows and robust experiment tracking for any agent you build.

If you’re interested in taking your AI agent projects to the next level, consider joining the ZenML waitlist. We’re building out first-class support for agentic frameworks (like LangChain, LlamaIndex, and more) inside ZenML, and we’d love early feedback from users pushing the boundaries of what AI agents can do. With ZenML, you can seamlessly integrate whichever agent framework you choose into robust, production-grade workflows.