
AutoGen uses a free-form approach for building LLM applications. It enables AI agents to communicate with each other in a chat loop – potentially with human oversight – to solve complex tasks.

However, AutoGen’s free-form approach comes with trade-offs. Its flexibility at times leads to unpredictable workflows and even self-sabotage when agents loop or ramble.

Moreover, its lack of transparency and visibility into agents’ functioning makes many developers look for an alternative to AutoGen.

We tested the top 8 AutoGen alternatives so you don’t have to. We’ll briefly explain why you might look beyond AutoGen, define key criteria to evaluate alternatives, and then dive into each tool’s features, pricing, and pros/cons.

TL;DR

- Why Look for Alternatives: AutoGen's conversation-first model can be challenging to manage, resulting in unpredictable behavior and high token costs. Recent breaking changes and a project fork have also created instability for teams deploying agents in production.

- Who Should Care: ML engineers, Python developers, and teams building production AI agents who require more structured, efficient, and maintainable frameworks for building and deploying multi-agent systems.

- What to Expect: Analysis of 8 alternatives to AutoGen, ranging from visual orchestration platforms to code-first frameworks. Each offers unique strengths in comparison to AutoGen for agent development. All solutions are either open-source or have free tiers, with some offering enterprise plans for managed services.

The Need for an AutoGen Alternative?

Let’s face it: AutoGen revolutionized multi-agent development with its conversation-centric approach. However, running it in production exposes several limitations that may have sent you looking for alternatives:

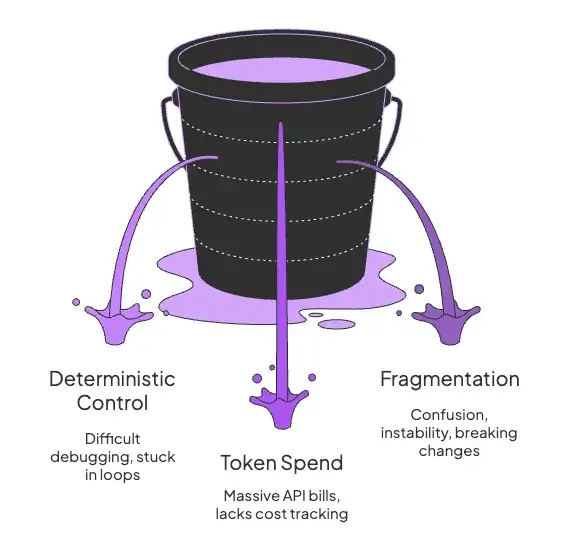

1. Need for Deterministic Control

AutoGen agents determine their own sequence of actions through agent-to-agent conversations. Two AI agents chatting independently feels ‘futuristic’ until they spiral off-topic or get stuck in loops. This lack of determinism makes debugging difficult. Also, you can’t always reproduce a conversation because detailed trace logs aren’t always available.

Many teams seek an alternative that lets them define explicit logic or constraints on agent behavior. Frameworks with graphs or stepwise plans (rather than free-form chats) offer a more predictable and debuggable alternative where every step and transition is clearly defined.
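One simple constraint of this kind is a guard around the chat loop. The sketch below is a framework-free illustration, not AutoGen's API: `run_bounded_chat`, `max_turns`, and `max_repeats` are hypothetical names, and the two agents are stand-ins for LLM-backed callables. It stops a conversation once a turn cap is reached or the same message has been repeated.

```python
from collections import Counter
from typing import Callable, List


def run_bounded_chat(
    agents: List[Callable[[str], str]],
    opening: str,
    max_turns: int = 8,
    max_repeats: int = 2,
) -> List[str]:
    """Alternate between agents; stop on a turn cap or a repeated message."""
    transcript = [opening]
    seen = Counter({opening: 1})
    for turn in range(max_turns):
        speaker = agents[turn % len(agents)]
        reply = speaker(transcript[-1])
        transcript.append(reply)
        seen[reply] += 1
        if seen[reply] >= max_repeats:
            # The same message appeared again: treat it as a loop and stop.
            break
    return transcript


# Two toy agents (placeholders for LLM calls) that fall into an echo loop.
def agent_a(message: str) -> str:
    return "Could you clarify?"


def agent_b(message: str) -> str:
    return "Could you clarify?"


transcript = run_bounded_chat([agent_a, agent_b], "Plan the release.")
print(len(transcript))  # opening message plus two replies before the guard trips
```

A guard like this does not make the conversation deterministic, but it does bound the worst case, which is often the first thing teams want.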

2. Token Spend and Efficiency Concerns

Multi-agent conversations in AutoGen can generate massive API bills. Each turn in a discussion adds to the token count, and with multiple agents contributing, costs can escalate quickly.

Put simply, the framework lacks built-in features for session-level cost accounting and transparency. You might discover expensive conversation patterns only after receiving your API bill.

Teams often switch to alternatives that provide stricter path control and more transparent cost tracking to keep their operational expenses in check.
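To make session-level cost accounting concrete, here is a minimal sketch in plain Python. `SessionBudget` and `BudgetExceeded` are hypothetical names, and the token counts are made-up inputs; in practice they would come from the usage data your LLM provider returns with each response.

```python
from dataclasses import dataclass, field
from typing import Dict


class BudgetExceeded(RuntimeError):
    """Raised when a session goes over its token limit."""


@dataclass
class SessionBudget:
    max_tokens: int
    used_by_agent: Dict[str, int] = field(default_factory=dict)

    @property
    def total(self) -> int:
        return sum(self.used_by_agent.values())

    def record(self, agent: str, prompt_tokens: int, completion_tokens: int) -> None:
        """Add one call's usage to the agent's tally and enforce the limit."""
        spent = prompt_tokens + completion_tokens
        self.used_by_agent[agent] = self.used_by_agent.get(agent, 0) + spent
        if self.total > self.max_tokens:
            raise BudgetExceeded(
                f"session used {self.total} tokens, limit is {self.max_tokens}"
            )


budget = SessionBudget(max_tokens=1000)
budget.record("planner", 300, 120)
budget.record("coder", 400, 100)
try:
    budget.record("planner", 200, 50)  # pushes the session over the limit
    stopped = False
except BudgetExceeded:
    stopped = True
```

Tracking usage per agent, not just per session, makes it easier to spot which participant in a conversation is driving the bill.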

3. Fragmentation and Breaking Changes

AutoGen’s development trajectory has changed significantly: Microsoft shipped a major rewrite (v0.4), and a community-led fork (AG2) continues the original v0.2 line.

This split has caused confusion and instability. It has forced teams to choose between two different codebases with distinct feature sets and development philosophies.

The official migration guide flags breaking changes when upgrading to 0.4, which spooks production users. As a result, engineers sometimes find it more practical to switch to a different framework rather than adapt to the new architecture.

Evaluation Criteria

We evaluated each AutoGen alternative against four key questions:

- How does the framework let you define the agent’s workflow or logic?

- Does the tool support multi-agent collaborations?

- How well does the framework integrate with LLM providers and external tools?

- Can you monitor, debug, and optimize agent behavior?

1. Core Orchestration and Control

We assessed how each framework defines and manages workflows. Does it use a rigid pipeline, a flexible graph, or a conversational model? We looked for features that give developers fine-grained control over agent execution, error handling, and state management.

2. Multi-Agent Patterns

We looked for frameworks that allow complex patterns, like hierarchical, parallel, and turn-taking teams. Essentially, we evaluated each framework’s ability to support different modes of agent collaboration.

3. Model and Tool Backend Coverage

A framework’s utility depends heavily on its ecosystem. We evaluated how easily each alternative integrates with LLM providers like OpenAI and Anthropic, vector databases, and custom Python tools. A strong integration layer reduces boilerplate and accelerates development.

4. Observability and Cost Control

For production systems, understanding what an agent is doing and how much it costs is non-negotiable. We looked for built-in features like logging, tracing, token usage tracking, and human-in-the-loop capabilities for monitoring and intervention.

The more insight and control an alternative gives you, the easier it is to manage an agent’s performance and expense over time.

What Are the Best Alternatives to AutoGen?

| AutoGen Alternative | Best for | Key Features | Pricing |
|---|---|---|---|
| ZenML | Teams seeking a production-grade orchestration framework for multi-agent and LLM workflows | Pipeline-based orchestration; Secrets management; RAG with ZenML | Both free and paid |
| LangGraph | Building cyclical, stateful agent workflows with explicit control flow | Graph-based control flow; Explicit state management; Token streaming | Free; Paid: $39 per month |
| CrewAI | Creating deterministic agent teams that mimic human organizational structures | Role-based agents; Sequential task execution; Hierarchical team structures | Free; Paid: $25 per month |
| LlamaIndex | Knowledge-intensive apps where agents need to reason over large document sets | RAG-focused workflows; Document processing; Knowledge graphs | Free; Paid: $50 per month |
| Smolagents | Developers who want a minimal, opinionated foundation for custom agent systems | Code-first agents; Tight HF integration; Minimal abstractions | Free |
| Agno | Python-native toolkit for building structured, production-grade AI assistants | Supports multi-modal documents; Built-in SQL and data processing tools; Integrations with web frameworks, logging, and monitoring tools | Free; Paid: $150 per month |
| AG2 | Existing AutoGen users looking for stability and backward compatibility | AutoGen v0.2 continuation; Backward compatible; Community-driven | Free |
| Haystack | Building complex, customizable RAG and agentic search applications | Modular pipelines; Production RAG; Extensive components | Free and custom plans |

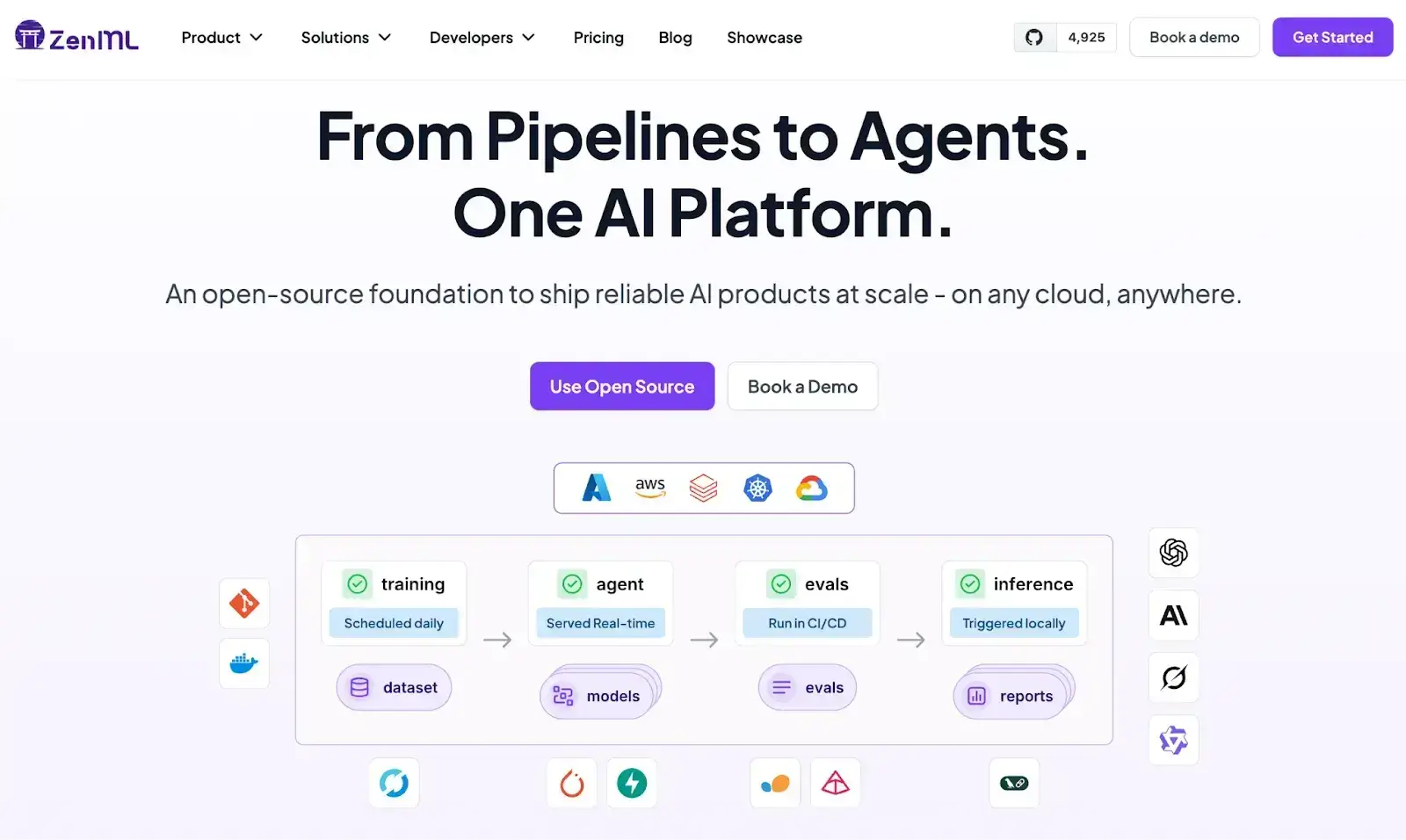

1. ZenML

ZenML is the best AutoGen alternative for teams seeking a production-grade orchestration framework for multi-agent and LLM workflows. Unlike AutoGen’s conversation-first model, ZenML offers deterministic control, built-in observability, and reproducibility across every experiment.

It unifies LLMOps and MLOps under one architecture, making it ideal for building structured, scalable AI agents and RAG pipelines in production.

Key Feature 1. Pipeline-Based Orchestration

ZenML’s quickstart project demonstrates how quickly you can spin up a reproducible AI pipeline. Each step (data ingestion, model inference, evaluation) runs inside a standardized, version-controlled workflow.

These pipelines abstract infrastructure details; you can switch from local runs to cloud environments (AWS, GCP, or Kubernetes) with zero code change. This design solves one of AutoGen’s biggest issues: unpredictability.

By treating every LLM call or agent step as a pipeline node, ZenML guarantees traceability, modularity, and reproducibility, ensuring experiments remain consistent across runs.
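The idea of treating each agent step as a tracked pipeline node can be sketched without any framework. The code below is not ZenML's API: `tracked_step` and `RUN_LOG` are hypothetical stand-ins for a step decorator and an artifact store, and `summarize` is a placeholder for an LLM call. It records a content hash for every step output so that two runs can be compared.

```python
import functools
import hashlib
import json
from typing import Any, Callable, Dict, List

# Hypothetical in-memory stand-in for an artifact store.
RUN_LOG: List[Dict[str, Any]] = []


def tracked_step(func: Callable[..., Any]) -> Callable[..., Any]:
    """Wrap a function so every call logs the step name and an output hash."""

    @functools.wraps(func)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        result = func(*args, **kwargs)
        payload = json.dumps(result, sort_keys=True, default=str).encode()
        RUN_LOG.append(
            {"step": func.__name__, "output_sha256": hashlib.sha256(payload).hexdigest()}
        )
        return result

    return wrapper


@tracked_step
def ingest() -> List[str]:
    return ["AutoGen uses chat loops.", "Pipelines use fixed steps."]


@tracked_step
def summarize(docs: List[str]) -> str:
    # Placeholder for an LLM call: keep the first word of each document.
    return " ".join(doc.split()[0] for doc in docs)


def pipeline() -> str:
    # The step order is fixed in code, not decided by the agents at runtime.
    return summarize(ingest())


first = pipeline()
second = pipeline()
```

Because the step order is fixed and every output is hashed, identical inputs produce identical log entries, which is the property that makes a run reproducible and easy to diff.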

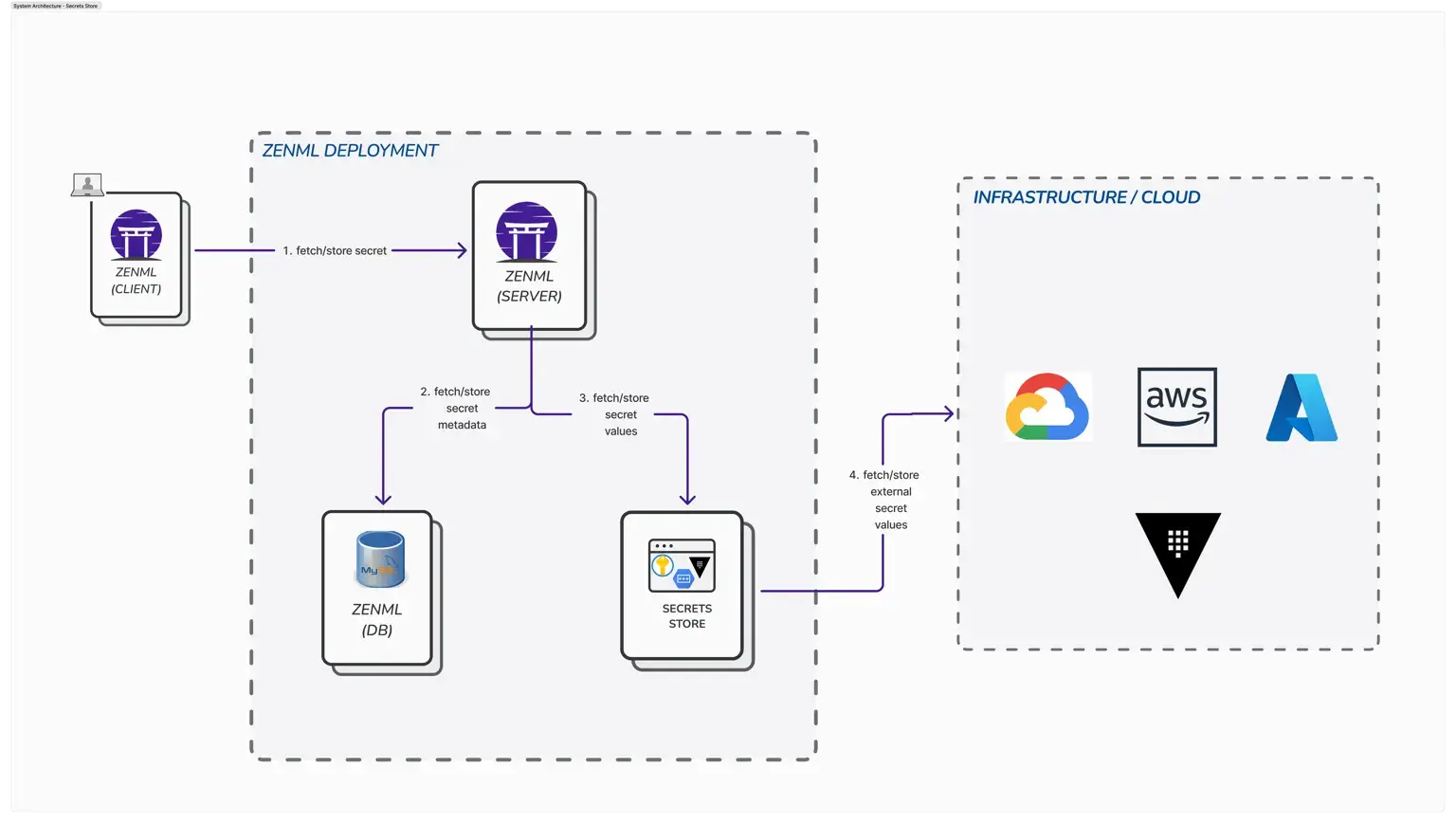

Key Feature 2. Secrets Management

ZenML simplifies credential handling with centralized secrets management that integrates directly into your pipelines. Sensitive API keys, database passwords, or LLM tokens can be stored securely in backends like AWS Secrets Manager, GCP Secret Manager, or Vault.

This lets you build, run, and deploy multi-agent workflows without ever exposing secrets in your code or logs.

Unlike AutoGen, which requires manual environment setup for key management, ZenML’s approach standardizes security across environments, helping enterprises comply with DevSecOps and governance requirements while maintaining developer productivity.
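The underlying principle, keeping credentials out of code and logs, can be shown with the standard library alone. This is a minimal sketch and not ZenML's secrets API: `get_secret`, `redact`, and the `DEMO_LLM_API_KEY` variable are hypothetical, and the key is set inline only so the example runs on its own.

```python
import os


def get_secret(name: str) -> str:
    """Read a secret from the environment instead of hardcoding it."""
    value = os.environ.get(name)
    if not value:
        raise KeyError(f"secret {name!r} is not set")
    return value


def redact(value: str, visible: int = 4) -> str:
    """Mask all but the last few characters so the value is safe to log."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]


# Demo only: in real use the variable is injected by your secrets backend.
os.environ["DEMO_LLM_API_KEY"] = "sk-demo-1234567890"

api_key = get_secret("DEMO_LLM_API_KEY")
masked = redact(api_key)
print(f"Using API key {masked}")
```

A centralized secrets store applies the same rule across every environment, so individual developers do not have to remember it.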

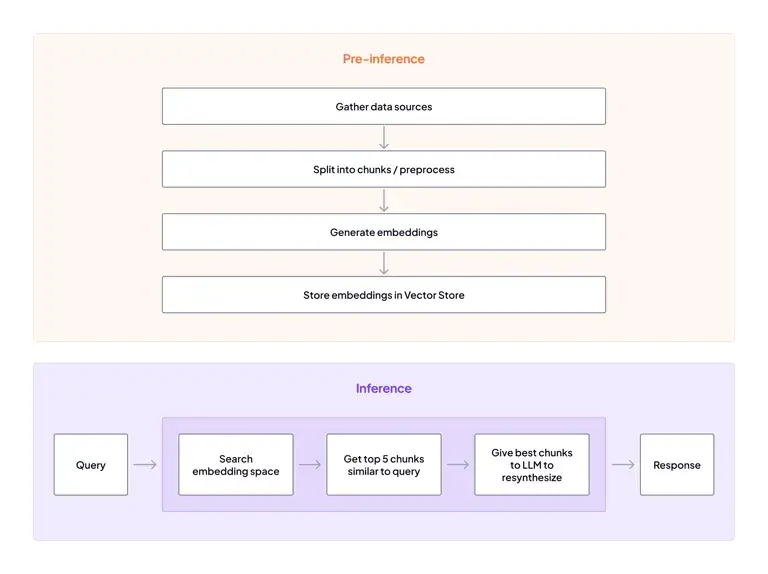

Key Feature 3. RAG with ZenML

ZenML provides an end-to-end Retrieval-Augmented Generation (RAG) template, allowing teams to construct production-ready RAG pipelines with retrievers, vector stores, and evaluators.

You can integrate your preferred vector database (like Weaviate, Pinecone, or FAISS), add data loaders, and monitor retrieval and generation metrics in one place. Every pipeline step, from embedding generation to LLM evaluation, is tracked and versioned.

This makes it easier to benchmark models, debug context drift, and optimize retrieval performance. ZenML transforms RAG experimentation into a scalable, observable production workflow.

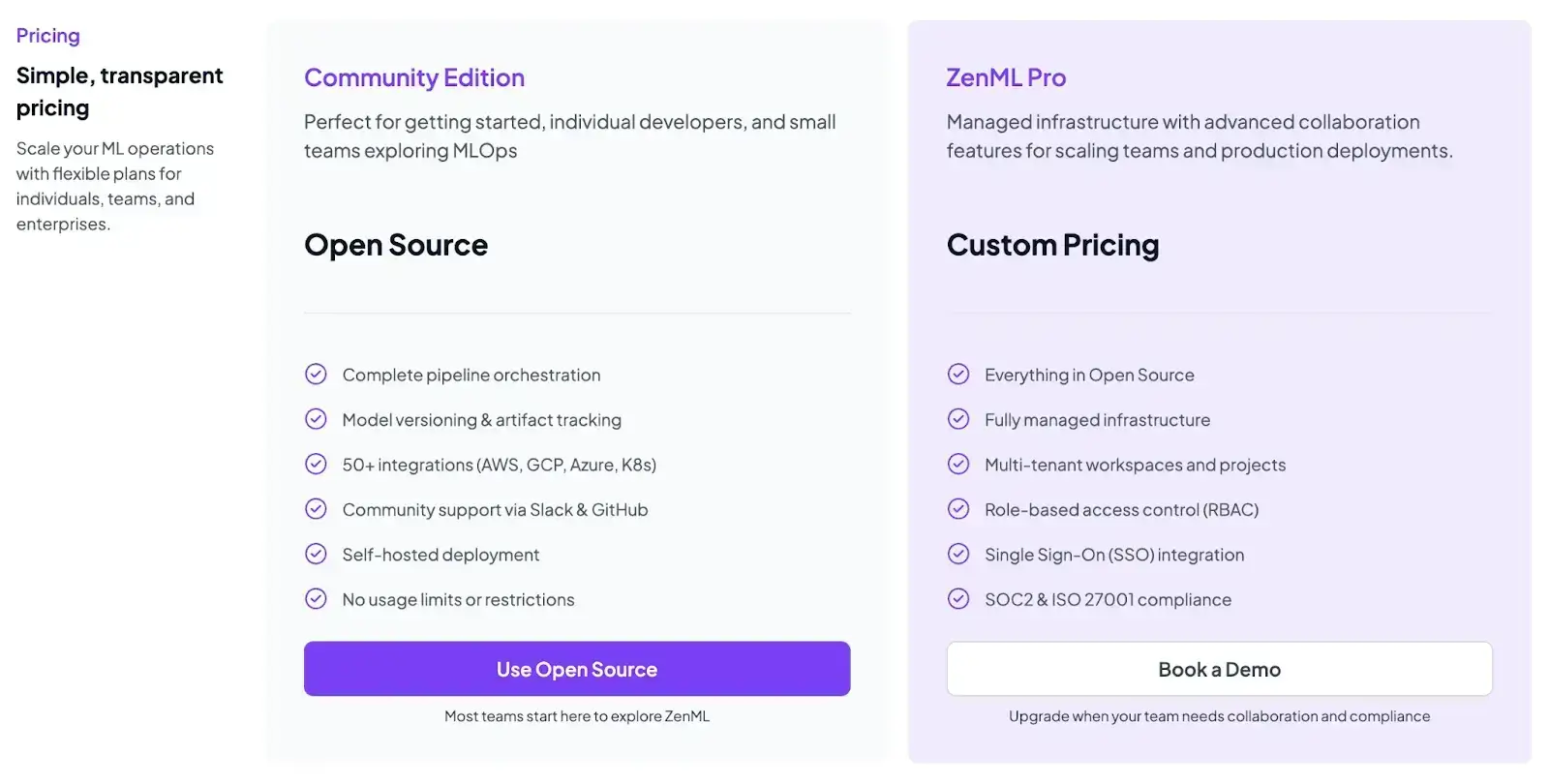

Pricing

We are upgrading our platform to bring every ML and LLM workflow into one place for you to run, track, and improve. Think of processes like data preparation, training, RAG indexing, agent orchestration, and more, all in one place.

Pros and Cons

The main advantage of ZenML is its end-to-end orchestration and reproducibility. It lets teams manage the entire lifecycle of LLM and MLOps workflows with integrated artifact tracking, secrets management, and infrastructure flexibility. With strong integrations (LangChain, MLflow, Hugging Face) and cross-environment portability, ZenML is ideal for enterprise-grade reliability and scalability.

But remember, ZenML is not a specialized LLM observability tool or a one-click QA solution – it’s a framework.

2. LangGraph

LangGraph, from LangChain, is a code-first framework for building stateful, multi-agent workflows with explicit graph structures. It offers a graph-based orchestration model that allows you to visualize your entire LLM workflow using nodes and edges, where a node represents a step (such as an LLM call, a tool use, or a condition), and edges define the flow.

📚 Also read: LangGraph alternatives

Features

- Structure your agent’s logic as a directed graph with explicit nodes and edges, where each node represents an agent action or decision and each edge defines a clear path between them.

- Built-in state management helps agents maintain memory state between nodes for long-term context across the graph.

- Supports real-time token-streaming that enables downstream nodes to start work before an upstream agent finishes its entire output.

- Has built-in mechanisms to pause graph execution for moderation via human approval, correction, checkpoint, etc.

- Conditional routing and cycles allow complex control flow, including loops, branches, and dynamic path selection based on agent outputs.
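The pattern behind these features (nodes that update a shared state, plus routing functions that pick the next node) can be sketched in a few lines of plain Python. This is an illustration of the idea, not LangGraph's API: `NODES`, `EDGES`, `run_graph`, and the `"END"` sentinel are hypothetical names, and the review check is a placeholder for an LLM call or test suite.

```python
from typing import Callable, Dict

State = Dict[str, object]


def draft(state: State) -> State:
    attempts = int(state["attempts"]) + 1
    return {**state, "attempts": attempts, "draft": f"draft v{attempts}"}


def review(state: State) -> State:
    # Placeholder check: approve once at least two drafts have been written.
    return {**state, "approved": int(state["attempts"]) >= 2}


def route_after_review(state: State) -> str:
    # Conditional edge: loop back to drafting until the review passes.
    return "END" if state["approved"] else "draft"


NODES: Dict[str, Callable[[State], State]] = {"draft": draft, "review": review}
EDGES: Dict[str, Callable[[State], str]] = {
    "draft": lambda state: "review",
    "review": route_after_review,
}


def run_graph(entry: str, state: State, max_steps: int = 10) -> State:
    """Walk the graph from the entry node, recording the path taken."""
    current = entry
    path = []
    for _ in range(max_steps):
        if current == "END":
            break
        path.append(current)
        state = NODES[current](state)
        current = EDGES[current](state)
    return {**state, "path": path}


final = run_graph("draft", {"attempts": 0, "approved": False})
print(final["path"])
```

The recorded path is what makes this style easy to debug: when a run fails, you can see exactly which node ran last and what state it received.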

Pricing

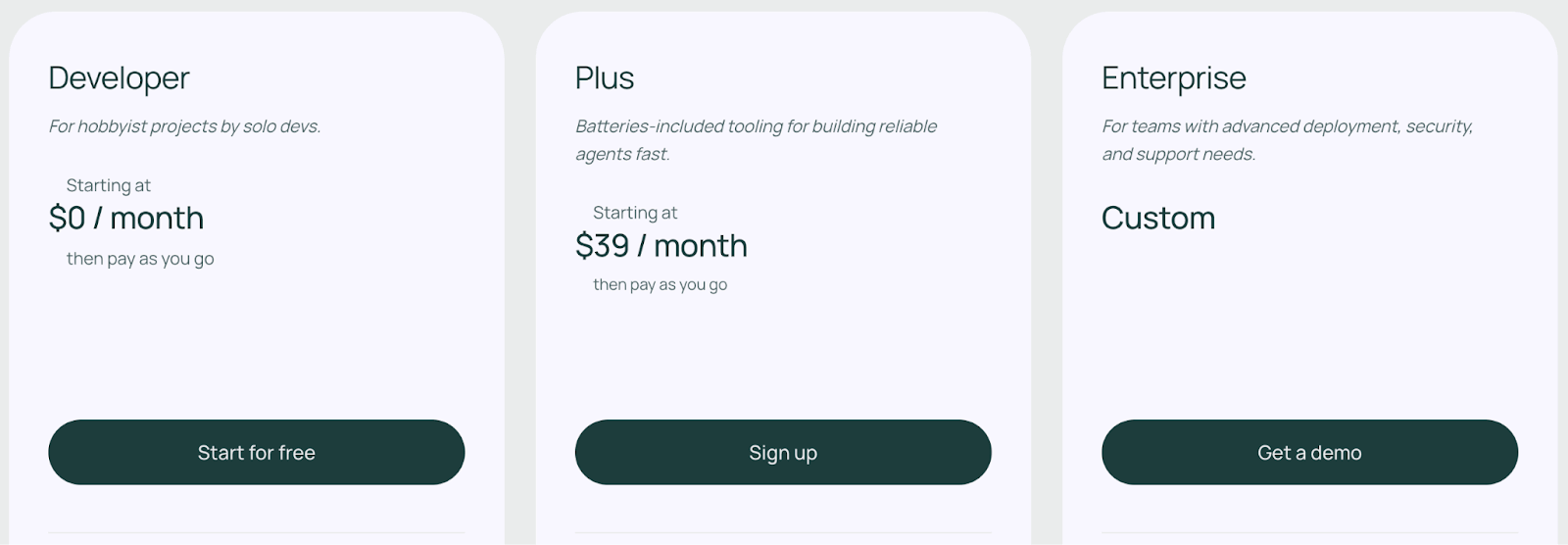

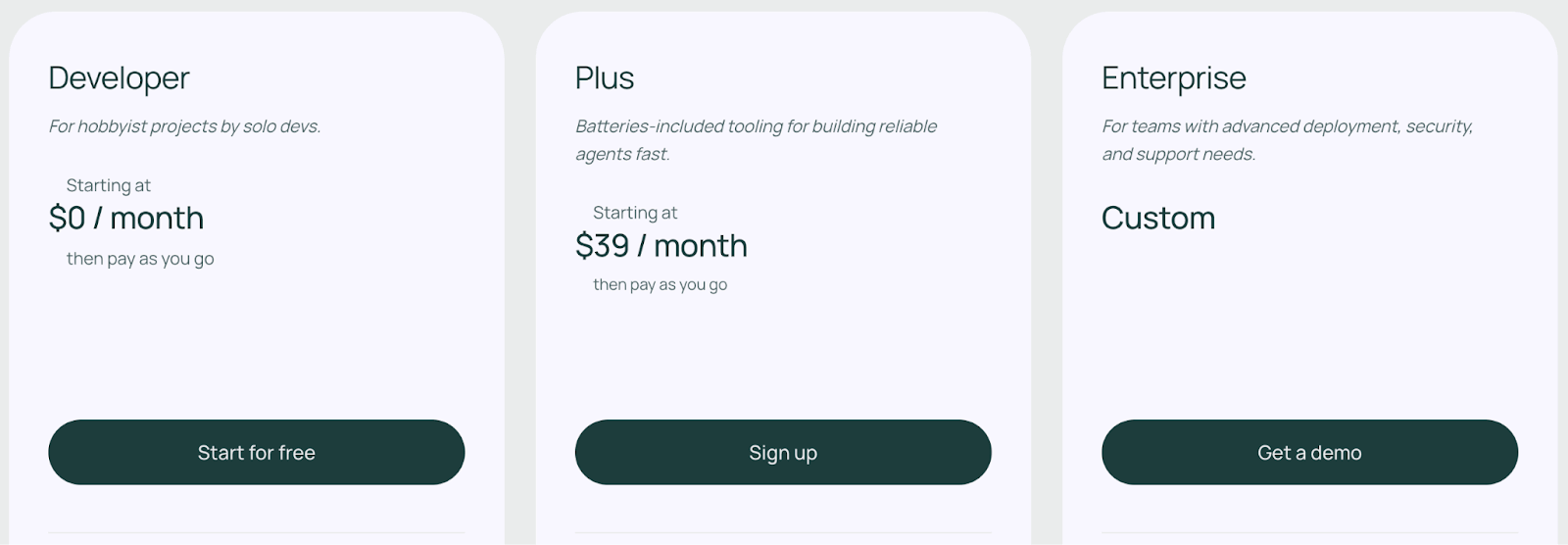

LangGraph is open-source and free to use. Its hosted version offers three plans:

- Developers: Free

- Plus: $39 per month

- Enterprise: Custom pricing

📚 Also read: LangGraph pricing

Pros and Cons

LangGraph’s biggest pro is transparency and control. It’s much easier to pinpoint where an agent’s chain failed with a visual graph than by parsing an AutoGen chat log. Since it comes from LangChain, you also have access to a rich set of tools and the ability to incorporate custom Python code at any node.

The trade-off is that it requires more boilerplate code to define the graph, state, and nodes compared to setting up a simple GroupChat in AutoGen. It’s a Python-first solution, which appeals to developers who found AutoGen or other GUI tools too limiting.

3. CrewAI

CrewAI is a framework inspired by the idea of a human ‘crew’ working together. It enables you to create role-based agents and orchestrate them in a guided sequence to achieve a common goal.

📚 Also read: CrewAI alternatives

Features

- Use different collaboration patterns like sequential, hierarchical, and turn-based to execute tasks in a structured and deterministic manner.

- Built-in memory systems allow agents to recall past interactions and build knowledge over time without manual intervention.

- Record and replay agent runs to inspect each step and even modify a step’s outcome, then resume execution from that point to test fixes.

- Equip agents with pre-built tools, like web search, code execution, web scraping, or add custom tools using Python functions.

- Integrate with third-party observability and ML monitoring tools, like Langfuse, Arize Phoenix, MLflow, and more.
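The role-based, sequential pattern from the feature list can be sketched as follows. This is a framework-free illustration, not CrewAI's API: the `Agent` and `Task` dataclasses and `run_sequential` are hypothetical, and each agent's `work` callable is a stand-in for an LLM call.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Agent:
    role: str
    work: Callable[[str], str]  # placeholder for an LLM-backed callable


@dataclass
class Task:
    description: str
    agent: Agent


def run_sequential(tasks: List[Task]) -> List[str]:
    """Run tasks in a fixed order, passing each output on as context."""
    outputs: List[str] = []
    context = ""
    for task in tasks:
        prompt = f"{task.description}\nContext: {context}".strip()
        context = task.agent.work(prompt)
        outputs.append(f"[{task.agent.role}] {context}")
    return outputs


researcher = Agent("researcher", lambda prompt: "three sources found")
writer = Agent(
    "writer",
    lambda prompt: "summary based on: " + prompt.split("Context: ")[-1],
)

outputs = run_sequential(
    [Task("Find sources", researcher), Task("Write summary", writer)]
)
for line in outputs:
    print(line)
```

Because the task order is declared up front, the same inputs always flow through the same roles in the same sequence.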

Pricing

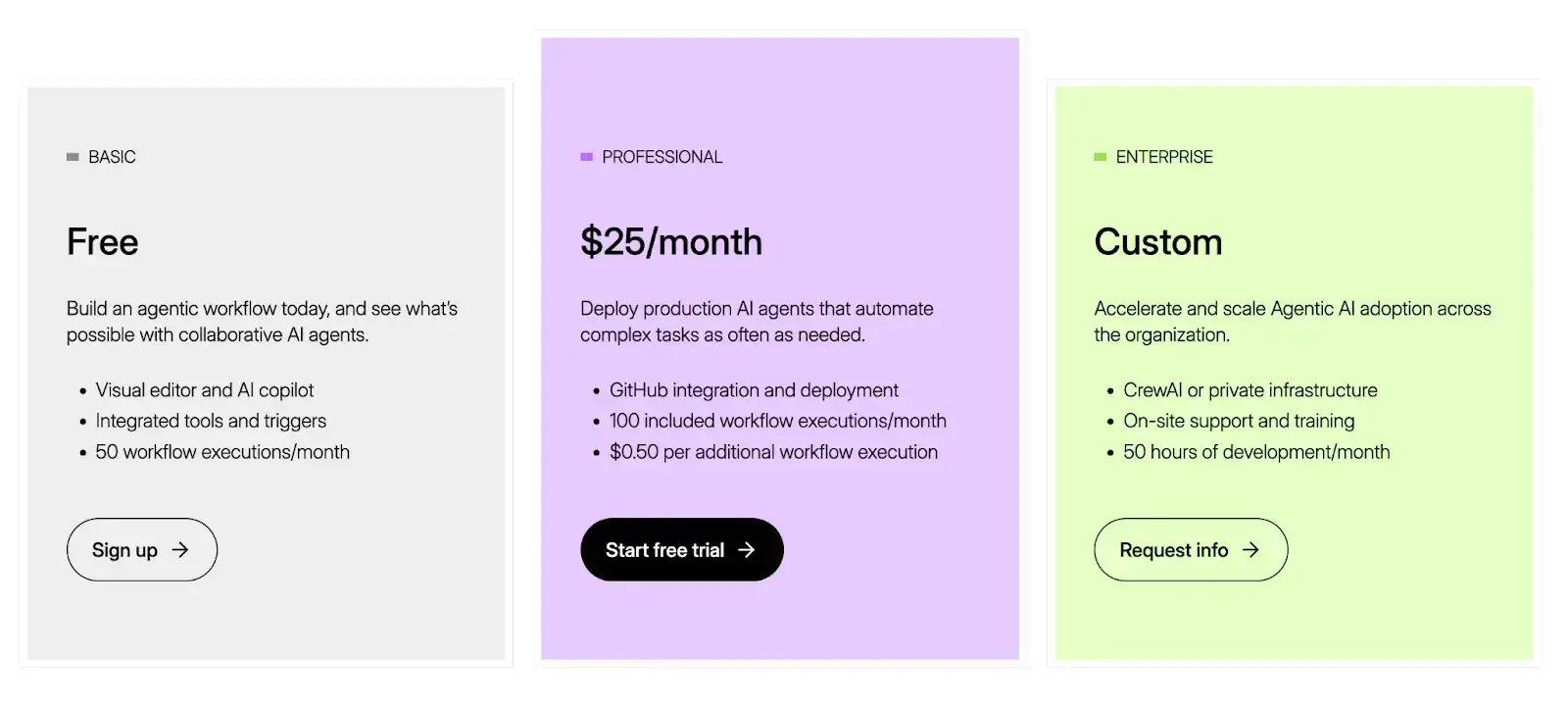

CrewAI’s core framework is licensed under the MIT license and is open-source. Other than that, it offers cloud-hosted plans to choose from:

- Basic: Free

- Professional: $25 per month

- Enterprise: Custom pricing

Pros and Cons

CrewAI’s structured approach makes multi-agent systems more manageable than AutoGen’s conversational chaos. Role specialization creates predictable behavior patterns and clear accountability. Additionally, non-developers can read and write YAML definitions for agents, which improves cross-team collaboration.

The downside is that CrewAI’s rigid sequence is less flexible than AutoGen’s dynamic chat model for open-ended queries. Also, CrewAI doesn’t natively allow concurrent agent execution. While turn-based execution is great for determinism, it can be sub-optimal for speed if some agents could work in parallel.

4. LlamaIndex

LlamaIndex is a popular data framework for building context-aware LLM applications with deep Retrieval-Augmented Generation (RAG) capabilities. If your agent needs to ingest and reason over custom data (documents, databases), LlamaIndex is often a better fit than AutoGen alone.

📚 Also read: LlamaIndex alternatives

Features

- Orchestrate multi-step work with event-driven workflows built from explicit steps.

- Use built-in modules like the `FunctionAgent` and `AgentWorkflow` to orchestrate multi-step tasks that involve using tools or querying data in a structured way.

- Built-in advanced indexing and retrieval tools that help you build agents that reason over your documents, databases, or APIs.

- Handle hundreds of data sources and millions of tokens with features like automatic text chunking, embedding caching, and incremental index updates.

- Agents in a LlamaIndex workflow communicate indirectly through a shared `Context` object. This provides a structured way to pass state and information between agents without relying on parsing conversational history.
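To show what passing state through a shared object looks like, compared with parsing chat history, here is a minimal sketch. It is not LlamaIndex's `Context` class: `SharedContext` is a hypothetical, synchronous key-value store, and both agents are plain functions standing in for LLM-backed steps.

```python
from typing import Any, Dict


class SharedContext:
    """Hypothetical key-value store handed to every agent in a workflow."""

    def __init__(self) -> None:
        self._data: Dict[str, Any] = {}

    def set(self, key: str, value: Any) -> None:
        self._data[key] = value

    def get(self, key: str, default: Any = None) -> Any:
        return self._data.get(key, default)


def research_agent(ctx: SharedContext) -> None:
    # Writes structured findings instead of a free-text chat message.
    ctx.set("notes", ["AutoGen v0.4 is a rewrite", "AG2 continues v0.2"])


def write_agent(ctx: SharedContext) -> None:
    # Reads the findings by key; no transcript parsing required.
    notes = ctx.get("notes", [])
    ctx.set("report", f"{len(notes)} findings: " + "; ".join(notes))


ctx = SharedContext()
for agent in (research_agent, write_agent):
    agent(ctx)

report = ctx.get("report")
print(report)
```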

Pricing

LlamaIndex is free to use (open-source) for its core Python library. LlamaCloud, its managed service, offers a free tier and three premium tiers:

- LlamaIndex Starter: $50 per month - 50K API credits, 5 seats

- LlamaIndex Pro: $500 per month - 500K credits, 10 seats

- LlamaIndex Enterprise: Custom pricing

📚 Also read: LlamaIndex pricing

Pros and Cons

LlamaIndex provides superior document understanding capabilities compared to AutoGen’s generic approach. The framework’s specialized components for retrieval and synthesis produce more accurate responses from knowledge bases. Built-in evaluation metrics help optimize RAG performance systematically.

However, LlamaIndex offers limited multi-agent orchestration features. It excels at single-agent knowledge tasks but lacks AutoGen’s conversational coordination capabilities. Teams often combine LlamaIndex with other frameworks for complete agent systems.

5. Hugging Face Smolagents

Smolagents is a minimalist agent library by Hugging Face that prioritizes simplicity and code-centric agent design. It’s essentially a lightweight alternative to AutoGen for scenarios where you want to spin up an agent in a few lines of Python without heavy frameworks.

📚 Also read: Smolagents vs LangGraph

Features

- The entire `smolagents` logic is only on the order of ~1,000 lines of code; there’s no sprawling class hierarchy or complex schema to learn. You can understand and modify how the agent works more easily than with a larger framework.

- Has both a CodeAgent that writes its actions in Python code and a ToolCallingAgent, which supports usual JSON/text-based tool-calling for scenarios where that paradigm is preferred.

- Supports sandboxed execution environments and integrates with tools like E2B or Docker to run code safely.

- Integrate any large language model (LLM), whether it’s hosted on the Hub via Inference providers, accessed via APIs such as OpenAI, Anthropic, or many others via LiteLLM integration, or run locally using Transformers or Ollama.

- Comes with command-line utilities (smolagent, webagent) for quickly running agents without writing boilerplate code.
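To illustrate the JSON-based tool-calling paradigm mentioned in the list, the sketch below parses a tool call and dispatches it to a registered Python function. It does not use the smolagents library: `TOOLS`, `dispatch`, and the JSON shape with `name` and `arguments` keys are assumptions chosen for the example, and `model_output` is a hardcoded stand-in for what an LLM would return.

```python
import json
from typing import Callable, Dict


def add(a: float, b: float) -> float:
    return a + b


def word_count(text: str) -> int:
    return len(text.split())


# Registry of tools the model is allowed to call.
TOOLS: Dict[str, Callable[..., object]] = {"add": add, "word_count": word_count}


def dispatch(tool_call_json: str) -> object:
    """Parse a JSON tool call and run the matching registered function."""
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))


# Stand-in for model output; a real agent would get this from an LLM.
model_output = '{"name": "add", "arguments": {"a": 2, "b": 3}}'
result = dispatch(model_output)
print(result)
```

A code-writing agent takes the other route: instead of emitting JSON for a dispatcher, the model writes Python that calls the tools directly, which is why sandboxed execution matters for that style.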

Pricing

Smolagents is an open-source library from Hugging Face and is completely free to use. You can install it via pip and use it without any license fees. There is no paid tier for smolagents itself – Hugging Face provides it as part of their open-source ecosystem.

Pros and Cons

The primary advantage of Smolagents is its simplicity and flexibility. It provides a clean slate for experienced developers to build highly customized agentic systems without the overhead of more opinionated frameworks. It is a great choice if you find AutoGen’s abstractions too restrictive or complex.

The downside is that it requires more hands-on coding to achieve what other frameworks provide out of the box. It lacks the advanced orchestration features present in AutoGen, and it has no built-in support for complex multi-agent patterns or conversation management.

6. Agno

Agno (formerly Phidata) is a Python-native toolkit for building structured and production-grade AI assistants. It’s a solid AutoGen alternative if you want code-centric control with a ready runtime and an optional Agent UI for quick testing.

Features

- Offers multi-agent orchestration as a first-class feature. You can build agents with tools, knowledge, and shared state, and organize them as teams so they can collaborate on tasks.

- Supports multi-modal by default, so agents can handle text, images, audio, and video inputs/outputs.

- Built-in SQL and data tools enable agents to query databases, analyze datasets, and generate visualizations without custom tool implementation.

- Built with production in mind, offers easy integrations with web frameworks like FastAPI and tools for logging and monitoring.

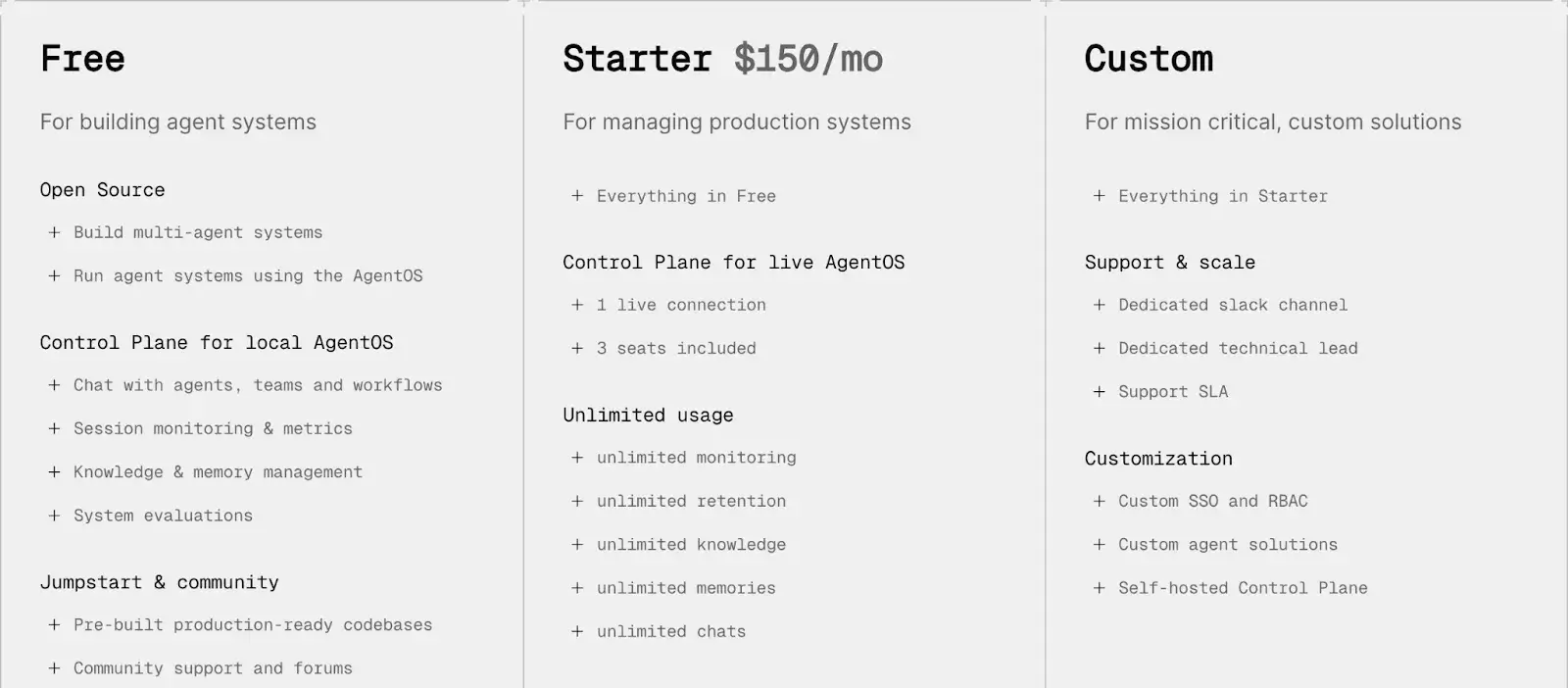

Pricing

Agno is completely free to get started (open source). It offers two paid plans as well:

- Starter: $150 per month

- Custom: Custom pricing

Pros and Cons

Agno’s biggest pro is its high performance and low overhead. It claims extremely fast agent instantiation and a minimal memory footprint in benchmarks. It includes a UI tied into the runtime for testing, management, and monitoring, which reduces ‘plumbing’ work when going from prototype to deployable agent. Built-in session and memory management let agents maintain state across interactions.

As a relatively new project (especially under its rebranded name), the community, third-party extensions, and long-term stability are still evolving. Some features may be experimental or change over time. Also, for small-scale or one-off agents, the built-in FastAPI + UI stack could be overkill. You might end up with unnecessary infrastructure, where a minimal library would suffice.

7. AG2

AG2 (Agent Gen 2) is a community-driven fork of the original AutoGen project, created to preserve the v0.2 API and continue its development line independently of Microsoft’s v0.4 rewrite. It is the go-to choice for users who were comfortable with the original AutoGen and want to avoid breaking changes.

Features

- Get the same orchestration model as AutoGen, where agents message each other and share a chat session. You can have any number of agents (AI or human) in the loop, and define their personas and capabilities.

- Retains all the core concepts that made AutoGen popular, including `AssistantAgent`, `UserProxyAgent`, and the `GroupChat` orchestration model.

- Supports agent-to-agent conversations like AutoGen, but with better guardrails: you can configure each agent’s level of autonomy and add a human to approve or intervene on certain steps.

- Provides better trace logs of the messages exchanged and their order, along with token counting and spend monitoring via OpenTelemetry.
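The human-approval guardrail described above boils down to a gate in front of selected actions. The sketch below is a generic illustration, not AG2's API: `run_with_approval`, `needs_approval`, and `approve` are hypothetical names, and the `deny_all` approver stands in for a real prompt to a person (for example via `input()`).

```python
from typing import Callable, List


def run_with_approval(
    actions: List[str],
    needs_approval: Callable[[str], bool],
    approve: Callable[[str], bool],
) -> List[str]:
    """Run each action, pausing for approval on the ones flagged as risky."""
    log = []
    for action in actions:
        if needs_approval(action) and not approve(action):
            log.append(f"skipped: {action}")
            continue
        log.append(f"ran: {action}")
    return log


def is_risky(action: str) -> bool:
    return action.startswith("delete")


def deny_all(action: str) -> bool:
    # Stand-in for asking a human; always refuses in this demo.
    return False


log = run_with_approval(["read report.csv", "delete report.csv"], is_risky, deny_all)
for line in log:
    print(line)
```

Passing the approver in as a function keeps the gate testable: the same loop works with a console prompt, a chat message to a reviewer, or an automated policy check.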

Pricing

AG2 is a community-led, open-source project and is completely free to use. All source code is available on GitHub, and you can pip install ag2 (also aliased as autogen) to get started.

Pros and Cons

The main advantage of AG2 is stability. It provides a safe harbor for developers who built applications on the original AutoGen API. If you are facing migration challenges with AutoGen v0.4, switching to AG2 is a simple solution. It’s effectively the direct upgrade with better logging and scaling.

AG2 inherits AutoGen’s fundamental architecture limitations. While improvements help, core issues around conversational unpredictability remain. For very structured use cases, AG2 might feel like ‘overkill’ when a simpler pipeline would do.

8. Haystack

Haystack by deepset is a comprehensive, open-source framework for building LLM applications with strong RAG capabilities. It offers a more structured alternative to AutoGen’s conversational approach through composable components.

Features

- Build complex workflows by assembling and chaining reusable components (Nodes) for data processing, retrieval, generation, and more. A modular pipeline can run in sequence, branch, or even loop.

- Support for dense vector search, sparse keyword search, and combinations (hybrid) for finding relevant context.

- Built-in agent patterns that can reason about a query, decide which tools to use, and perform multi-step operations to find an answer.

- Integrates with vector databases, LLM providers, and document stores, as well as built-in support for tracing and evaluation, allowing you to monitor the performance of your pipelines.
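Hybrid search needs a way to merge the keyword ranking with the vector ranking. One common technique is reciprocal rank fusion (RRF), sketched below in plain Python. This is offered as an illustration of the general approach rather than as Haystack's implementation: the function name, the document IDs, and the constant `k = 60` (a conventional default for RRF) are assumptions for the example.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Merge ranked lists: each document scores 1 / (k + rank) per list."""
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest score first; ties are broken alphabetically for stable output.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


keyword_hits = ["doc_a", "doc_b", "doc_c"]  # e.g. from sparse keyword search
vector_hits = ["doc_b", "doc_d", "doc_a"]   # e.g. from dense vector search

fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
print(fused)
```

Documents that appear near the top of both lists rise to the top of the fused result, and no score normalization between the two retrievers is needed because only ranks are used.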

Pricing

Haystack is an open-source framework and is free to use. Deepset, the company behind it, also offers a managed platform called Deepset Cloud with enterprise features and paid plans.

Pros and Cons

Haystack’s greatest strength is its production-ready, modular design for data-intensive applications. It’s well-engineered, with built-in support for things like document formats, databases, caching, retry logic, and model integration. You also benefit from a strong community and good documentation.

The framework requires more setup compared to AutoGen’s simpler conversation model. Haystack might also feel limited for multi-agent collaboration or scenarios that require dynamic agent interactions.

The Best AutoGen Alternatives to Build Agents and Applications

If you’re moving beyond AutoGen’s conversational chaos and want frameworks purpose-built for production-grade agent orchestration, here are the top three alternatives to consider:

✅ ZenML: Best for teams building deterministic, production-ready multi-agent and RAG pipelines with full observability and infrastructure control.

✅ LangGraph: Ideal for developers who prefer explicit graph-based control over agent workflows and want to visualize state transitions clearly.

✅ CrewAI: Perfect for teams that want structured, role-based collaboration between agents and a simpler learning curve.

Each of these frameworks tackles AutoGen’s unpredictability in its own way. ZenML, however, stands out for engineers who want the same rigor they apply to MLOps, now extended to LLMOps.

If you’re interested in taking your AI agent projects to the next level, consider joining the ZenML waitlist. We’re building first-class support for agentic frameworks (like LangGraph, CrewAI, and more) inside ZenML, and we’d love early feedback from users pushing the boundaries of what AI agents can do. With ZenML, you can seamlessly integrate whichever agent framework you choose into robust, production-grade workflows. Join our waitlist to get started.👇