Flowise is a popular open-source platform for building AI agent workflows on a visual interface. But it has some limitations that might push you to look for an alternative.

Some of the most concerning drawbacks we found are scaling challenges, feature gaps, and the lack of essential enterprise features.

In this article, we’ll cover what Flowise is, why you might need an alternative, key criteria for evaluating tools, and a detailed analysis of seven Flowise alternatives.

TL;DR

- Why Look for Alternatives: Flowise faces production challenges, including high RAM usage, memory leaks, and reliability issues under load. Teams often hit breaking changes during updates, and key enterprise features are gated behind paid plans.

- Who Should Care: ML engineers, Python developers, and teams building production-grade AI agents who need better scalability, stability, and enterprise features than what Flowise currently offers.

- What to Expect: An in-depth analysis of 7 alternatives, ranging from code-based frameworks like AutoGen and Griptape to no-code platforms like n8n and Botpress, each with unique strengths for agent orchestration and workflow automation.

What is Flowise?

Flowise is an open-source generative AI platform that lets you build AI agents and LLM workflows through a visual drag-and-drop interface. It provides modular nodes that you can connect on a canvas to create chatbots, Q&A systems, and other AI-powered applications, without code.

The platform is structured around two primary modules: ‘Chatflow,’ which is used for creating single-agent systems, like chatbots; and ‘Agentflow,’ which helps in building multi-agent systems and complex workflow orchestration.

Overall, Flowise is great for quickly whipping up an AI demo or internal tool, but it often falls short of production requirements without significant engineering effort or an upgrade to paid, enterprise-focused tiers.

Why is there a Need for a Flowise Alternative?

Even if Flowise helps you jump-start your agent prototype, you might eventually need an alternative when pushing to production or scaling up.

Here are a few reasons why:

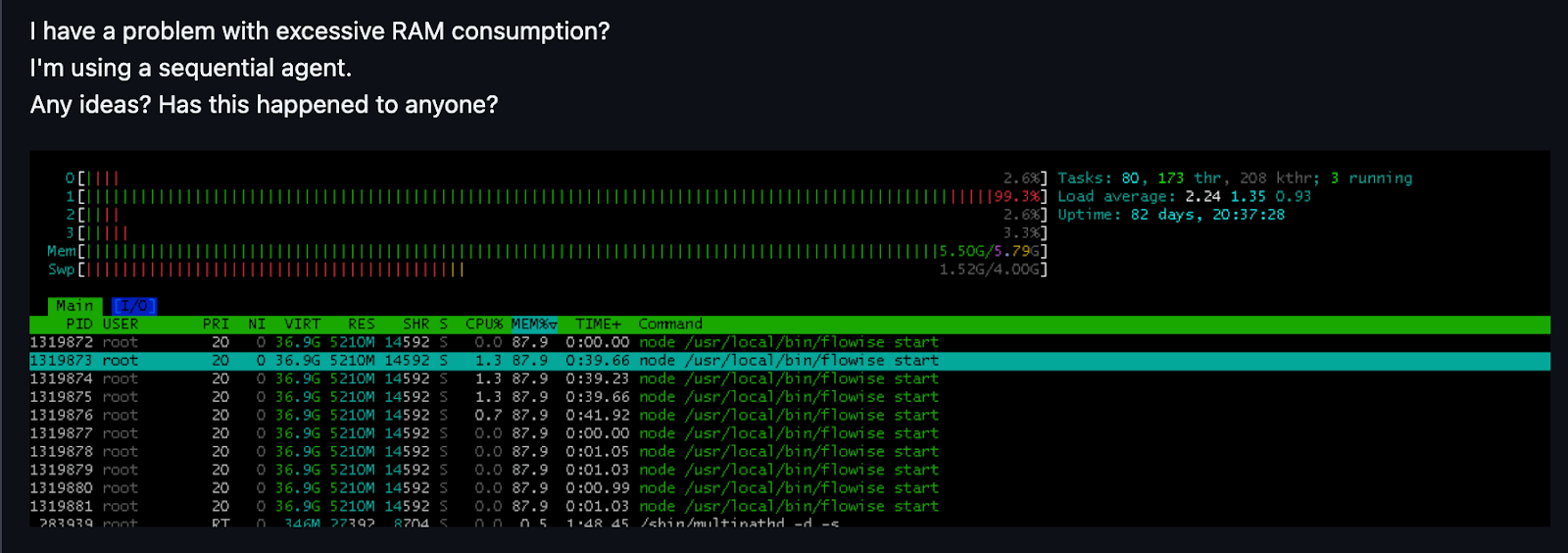

Reason 1. Scaling and Performance Pain in Production

A common issue with Flowise is its performance under load. Community forums and GitHub discussions contain reports of high RAM consumption and memory leaks, particularly during long-running flows or bulk document upserts.

For example, one GitHub user shares how each new request on Flowise builds a graph that never gets freed, consuming more memory until the server goes down, unless you implement a custom caching workaround.

Reliability issues under load have also surfaced. On hosting platforms like Render, users saw their Flowise server restart or flows disappear when using certain memory-intensive modules.

While horizontal scaling is technically possible, Flowise’s official guidance is minimal, leaving teams to work it out by trial and error, which is a risky approach for production systems.

Reason 2. Upgrade Friction and Breaking Changes

Maintaining a production application on Flowise is tricky due to its history of upgrade friction. Flowise’s official documentation acknowledges the potential for breaking changes between versions, a fact that is well-supported by user experiences.

One Reddit user reported that updating their Flowise instance caused the loss of all agents, tools, and chatflows: essentially a complete data wipe.

This high instability forces teams into a difficult position: either they avoid updates and risk falling behind on new features, or they look for an alternative to Flowise.

Reason 3. Enterprise Features Gated or Still Maturing

Multi-user workspaces, role-based access control (RBAC), single sign-on, audit logs, and other security/governance features are gated behind Flowise’s Cloud and Enterprise plans.

Ongoing user requests for finer-grained, file- or folder-level RBAC suggest that teams want tighter controls than the open-source edition currently offers.

For professional teams, these features are non-negotiable. For example, if you want multiple team members collaborating on flows with granular permissions, the OSS edition won’t suffice.

Additionally, some features, like the new workspaces and RBAC model, are still maturing. That leaves you two options: wait for Flowise to become production-ready, or pick a Flowise alternative from our list.

Evaluation Criteria

We evaluated Flowise alternatives through a lens focused on production readiness, essentially using three criteria:

1. Fit For Your Stack (Python-first)

Flowise is a Node/TypeScript app with a web UI. Our first criterion was whether a production-grade tool integrates smoothly into your existing Python-based MLOps stack.

We assessed and evaluated the alternatives for:

- Native Python SDK with first-class types and Pydantic models

- Custom tool development in Python without JavaScript/TypeScript glue layers

- Integration with popular libraries: LangGraph, LlamaIndex, CrewAI, AutoGen, OpenAI Tools, and function calling

- Container images, Helm charts, Terraform support, and CUDA or CPU-only deployment options

2. Orchestration and Runtime Model

We evaluated how the alternatives orchestrate flows or chains:

- Does it support DAG, graph, or event-driven flows for long-running agent patterns?

- Can you checkpoint progress, resume interrupted runs, and track complete lineage?

- What trigger options are available: scheduling, webhooks, queues, or streaming token output?
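To make the checkpoint-and-resume criterion concrete, here is a minimal, framework-agnostic sketch: a run persists its state to a JSON file after every step, so a rerun skips steps that already finished. The file layout and the run_with_checkpoints name are assumptions for illustration, not any specific tool's API.

```python
import json
import os
import tempfile
from typing import Callable

Step = Callable[[dict], dict]


def run_with_checkpoints(steps: list[tuple[str, Step]], state: dict, path: str) -> dict:
    """Run named steps in order, saving state after each so a crashed run can resume."""
    done: list[str] = []
    if os.path.exists(path):
        # Resume: the saved state replaces the initial state passed in.
        with open(path) as f:
            saved = json.load(f)
        state, done = saved["state"], saved["done"]
    for name, fn in steps:
        if name in done:
            continue  # completed in an earlier, interrupted run
        state = fn(state)
        done.append(name)
        # Write to a temp file and rename, so a crash never leaves a half-written checkpoint.
        tmp = path + ".tmp"
        with open(tmp, "w") as f:
            json.dump({"state": state, "done": done}, f)
        os.replace(tmp, path)
    return state


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "run.json")
    flaky = {"failed_once": False}

    def retrieve(state: dict) -> dict:
        return {**state, "docs": ["doc-1", "doc-2"]}

    def summarize(state: dict) -> dict:
        if not flaky["failed_once"]:
            flaky["failed_once"] = True
            raise RuntimeError("simulated crash")
        return {**state, "summary": f"{len(state['docs'])} docs"}

    steps = [("retrieve", retrieve), ("summarize", summarize)]
    try:
        run_with_checkpoints(steps, {}, path)
    except RuntimeError:
        print("crashed; resuming")
    print(run_with_checkpoints(steps, {}, path))
```

In a real system you would delete or archive the checkpoint once the run succeeds; otherwise the next call simply returns the saved result without executing anything.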

3. Agent Pattern and HITL

Finally, we assessed the level of complexity each alternative can handle.

- Does it support multiple agents collaborating?

- Can agents use different tools and switch strategies?

- How are conversation history or intermediate results stored and passed along?

What are the Best Alternatives to Flowise?

Here’s a table to summarize the best Flowise alternatives:

Code-Based Flowise Alternatives

Let’s first look at four code-first frameworks that serve as Flowise alternatives. These require you to write Python (or C#/Java) code to define your agents and workflows, but offer greater flexibility and integration for developers:

1. AutoGen

Microsoft's AutoGen is a framework for developing LLM applications that use multiple, collaborating agents to solve complex tasks. Unlike Flowise's visual node-based approach, AutoGen focuses on dynamic systems where the workflow is not a predefined graph but emerges from the conversation between agents.

Features

- Build autonomous agents using a conversation framework where agents communicate via diverse conversation patterns, including hierarchical chats and dynamic group chat, to complete tasks.

- Use OpenAI function calling or custom Python functions to deploy specialized agents that perform calculations, web searches, code execution, etc.

- Configure how autonomous the agents are. Supports human-in-the-loop via AssistantAgent and UserProxyAgent abstractions, where a human can act as one of the participants or approve steps.

- Supports asynchronous execution using Python asyncio, so you can stream agent messages in real time. This is useful for long responses or when multiple agents are working in parallel.

Pricing

AutoGen is completely free to use under the MIT license, with all source code available on GitHub.

👀 Note: You’ll still pay for any API usage, but the framework itself costs nothing.

Pros and Cons

AutoGen’s biggest advantage is its autonomous, conversation-driven architecture. Unlike Flowise’s single-flow paradigm, AutoGen’s dynamic flows excel in scenarios where the solution path isn't predetermined. Plus, it’s Python-first and integrates well with Python tool stacks.

However, this freedom often leads to unpredictability. Since agents converse freely, there’s a high risk of inefficient API calls and high token costs in a production setting. Designing interactions requires careful prompting and oversight. For instance, if you don’t configure human feedback correctly, agents might get stuck calling the same tool in a loop.
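The usual guardrails against runaway conversations are a turn cap, a termination keyword, and loop detection. Below is a minimal sketch of all three around a round-robin exchange. The agents are plain Python callables standing in for LLM-backed agents; this is not AutoGen's API, and the "same agent repeats the same message" heuristic is an assumption you would tune.

```python
from typing import Callable

Agent = Callable[[list[str]], str]  # takes the transcript, returns the next message


def converse(agents: dict[str, Agent], task: str, max_turns: int = 10,
             stop_word: str = "TERMINATE") -> tuple[list[str], str]:
    """Round-robin chat that stops on a keyword, a turn cap, or a repeated message."""
    transcript = [f"user: {task}"]
    names = list(agents)
    seen: set[tuple[str, str]] = set()
    for turn in range(max_turns):
        name = names[turn % len(names)]
        reply = agents[name](transcript)
        transcript.append(f"{name}: {reply}")
        if stop_word in reply:
            return transcript, "terminated"
        if (name, reply) in seen:
            # Same agent, same message: likely a tool-call loop, so hand off to a human.
            return transcript, "loop_detected"
        seen.add((name, reply))
    return transcript, "max_turns"


if __name__ == "__main__":
    def coder(transcript: list[str]) -> str:
        return "call_tool('search', 'flowise memory leak')"

    def reviewer(transcript: list[str]) -> str:
        return "Not enough detail, try again."

    _, status = converse({"coder": coder, "reviewer": reviewer}, "Find the leak")
    print(status)  # loop_detected
```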


2. Griptape

Griptape is a Python-native framework for building structured, secure, and production-ready AI agents and workflows. It’s a go-to alternative to Flowise for developers who want complete coding control without the unpredictability of conversational frameworks.

Features

- Designed for Python developers, every tool or agent is a Python object you can import and extend, which means better IDE support and type checking compared to Flowise’s JSON-like flows.

- Unlike Flowise’s sequential flows, Griptape supports non-sequential DAGs in its workflows. You can have parallel branches, converging nodes, and complex routing in a single workflow.

- The modular tool system allows chaining multiple tools together, with intelligent routing based on event-based triggers or task requirements.

- Griptape's Task Memory keeps large data volumes or sensitive information out of the prompt sent to the LLM. This enhances security and reduces token costs, directly addressing major production concerns.
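The non-sequential DAG execution described above, with parallel branches that converge, can be sketched with the standard library alone. This is not Griptape's Workflow API; run_dag and the task/parent dictionaries are hypothetical, and graphlib requires Python 3.9+.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from graphlib import TopologicalSorter
from typing import Callable

Task = Callable[[dict[str, str]], str]  # receives the outputs of its parent tasks


def run_dag(tasks: dict[str, Task], parents: dict[str, set[str]], workers: int = 4) -> dict[str, str]:
    """Start each task as soon as its parents finish; independent branches run in parallel."""
    sorter = TopologicalSorter(parents)
    sorter.prepare()  # raises graphlib.CycleError if the graph is not a DAG
    results: dict[str, str] = {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        running = {}
        while sorter.is_active():
            for name in sorter.get_ready():
                inputs = {p: results[p] for p in parents.get(name, set())}
                running[pool.submit(tasks[name], inputs)] = name
            finished, _ = wait(running, return_when=FIRST_COMPLETED)
            for fut in finished:
                name = running.pop(fut)
                results[name] = fut.result()
                sorter.done(name)  # may unlock downstream tasks
    return results


if __name__ == "__main__":
    tasks = {
        "fetch_docs": lambda _: "3 docs",
        "fetch_tickets": lambda _: "5 tickets",
        "summarize": lambda inp: f"summary of {inp['fetch_docs']} and {inp['fetch_tickets']}",
    }
    parents = {"summarize": {"fetch_docs", "fetch_tickets"}}
    print(run_dag(tasks, parents)["summarize"])
```

The two fetch tasks have no parents, so they run concurrently; summarize only starts once both have reported done().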

Pricing

Griptape is completely free to use as an open-source framework. It also offers Griptape Cloud, a managed platform with a free tier that includes 10MB of data ingestion, 1,000 retrieval queries, and one hour of runtime, and a pay-as-you-go Developer plan.

Pros and Cons

Griptape’s strengths are its clean design and performance. Developers often find it more ‘predictable’ and easier to maintain than prompt-centric frameworks. It helps avoid boilerplate while prioritizing production concerns. Plus, its focus on speed and non-blocking execution is great for production usage. You can also integrate custom models or tools using Python functions to extend capabilities.

On the other hand, you might find it ‘too code-centric’: even though Griptape Nodes exists as a visual option, Griptape’s full power is realized in Python. Also, certain high-level features, like one-click deployment or built-in monitoring, may require Griptape Cloud. If you strictly self-host, you’ll need to implement logging and monitoring yourself.

3. Semantic Kernel

Semantic Kernel is Microsoft's open-source SDK for integrating LLMs into new and existing applications through a plugin-based architecture. Rather than building standalone agent systems, it focuses on embedding AI reasoning directly into enterprise applications and services.

Features

- Supports multiple AI model backends, such as OpenAI, Azure OpenAI, Hugging Face, and local models, plus connectors for services like Azure Cognitive Search, so you can swap LLM providers without rewriting your app.

- Built-in planning capabilities that can break complex tasks into executable steps. You expose the available actions as plugins, and the kernel decides which function to invoke to fulfill an objective; newer releases rely on the model's automatic function calling for this rather than the older standalone planners.

- Native support for .NET, Python, and Java, making it accessible to enterprise teams working in diverse technology stacks.

- Built-in memory capabilities for managing conversational context, plus support for RAG patterns that ground model responses in specific, reliable data sources.
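The plugin-plus-function-calling pattern works roughly like this: functions are registered with names and descriptions, that list is sent to the model, and the model's chosen call is dispatched back to Python. The sketch below is a hypothetical, dependency-free illustration of that loop, not Semantic Kernel's actual API; plugin_function, describe_tools, and the "plugin-function" naming convention are assumptions.

```python
import inspect
import json
from typing import Any, Callable

REGISTRY: dict[str, Callable[..., Any]] = {}


def plugin_function(plugin: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Register a function so a model can discover and call it (hypothetical helper)."""
    def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
        REGISTRY[f"{plugin}-{fn.__name__}"] = fn
        return fn
    return decorator


def describe_tools() -> list[dict[str, Any]]:
    """Build the tool list you would send to a function-calling model."""
    return [
        {"name": name, "description": inspect.getdoc(fn) or "",
         "parameters": list(inspect.signature(fn).parameters)}
        for name, fn in REGISTRY.items()
    ]


def dispatch(tool_call: str) -> Any:
    """Execute a model-chosen call given as JSON: {"name": ..., "arguments": {...}}."""
    call = json.loads(tool_call)
    fn = REGISTRY[call["name"]]
    return fn(**call.get("arguments", {}))


@plugin_function("math")
def add(a: float, b: float) -> float:
    """Add two numbers."""
    return a + b


@plugin_function("text")
def shout(text: str) -> str:
    """Upper-case a string."""
    return text.upper()


if __name__ == "__main__":
    print([tool["name"] for tool in describe_tools()])
    print(dispatch('{"name": "math-add", "arguments": {"a": 2, "b": 3}}'))
```

In a real deployment the JSON string would come from the model's tool-call response rather than being written by hand.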

Pricing

Semantic Kernel is free and open-source. The only pricing considerations are the infrastructure you run it on and the API calls to LLMs or other services your agents use.

Pros and Cons

Semantic Kernel offers three big pros for enterprises: enterprise-grade architecture, strong backing from Microsoft, and support for multiple programming languages. The plugin architecture promotes code reuse and makes it easy to share AI capabilities across different applications.

The flip side of being an SDK is that it lacks a visual interface or turnkey UI. It’s not a ready-made chatbot builder. You must write the code to use it, handle deployments, and possibly build any user interface needed on top. This means more initial work compared to a plug-and-play tool like Flowise.


4. Haystack by deepset

Haystack is a production-ready, open-source Python framework for building customizable LLM applications, with a focus on creating advanced RAG and agentic search systems. It’s an ideal Flowise alternative for teams that need to build complex, high-performance data and search pipelines to power their AI agents.

Features

- Build complex workflows by combining components like retrievers, generators, rankers, and processors in flexible pipeline configurations that can be easily modified and tested.

- While not as advanced as dedicated agent frameworks, Haystack supports the creation of agentic pipelines that include branching, looping, and tool use through standard function-calling interfaces.

- Offers pre-built nodes for everything: document indexing, retrieval, generative QA, summarization, etc. All you need to do is assemble these into a linear or DAG Pipeline.

- Supports advanced techniques like hybrid retrieval, re-ranking, and self-correction loops, offering much deeper and more powerful RAG functionality than the basic setups available in Flowise.
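Hybrid retrieval typically runs a keyword retriever (such as BM25) and a dense embedding retriever in parallel, then merges their rankings. A common merge rule is reciprocal rank fusion (RRF), where each document scores the sum of 1 / (k + rank) across lists. Here is a plain-Python sketch of RRF; it is not Haystack code (Haystack 2.x's DocumentJoiner component offers this as a join mode), and k = 60 is the conventional default rather than a tuned value.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Fuse ranked lists of doc ids: score(d) = sum of 1 / (k + rank(d)), ranks starting at 1."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    bm25_hits = ["d1", "d2", "d3"]   # keyword retriever ranking
    dense_hits = ["d3", "d1", "d4"]  # embedding retriever ranking
    for doc_id, score in reciprocal_rank_fusion([bm25_hits, dense_hits]):
        print(doc_id, round(score, 5))
```

RRF only uses ranks, so it sidesteps the problem that BM25 scores and cosine similarities live on incomparable scales.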

Pricing

Haystack’s core version is open-source and completely free under the Apache 2.0 license. Its creator, deepset, also offers commercial products, including a free ‘Studio’ for visual prototyping and a custom-priced ‘Enterprise’ plan that provides managed services and dedicated support.

Pros and Cons

Haystack’s greatest pro is its production-readiness for RAG applications. If your main goal is a ‘ChatGPT over my documents’ or a domain-specific QA system, Haystack provides all the pieces. It’s well-documented and has a strong community.

However, because Haystack is not an agent orchestrator at its core, agent-heavy use cases add complexity. For example, if you need an agent to do more than retrieve knowledge, you’ll find Haystack Agents limiting. And because it’s code-based, building and maintaining pipelines takes more developer effort than Flowise's visual builder.

No-Code Flowise Alternatives

No-code alternatives are platforms where you build and deploy AI agents without heavy programming, often via graphical interfaces. They target a broader audience (including non-engineers) and typically integrate many out-of-the-box features like UI, analytics, and connectors to services. Three notable ones are:

5. n8n

n8n is a low-code workflow automation platform that extends beyond AI agents to general business process automation. While it wasn’t built specifically for LLMs, it’s a popular Flowise alternative for teams that need to embed AI agents in wider automated processes.

For example, instead of building a complex agent in Flowise, you might use n8n to design: trigger -> get input -> call OpenAI completion -> do something with the result.

Features

- Provides a browser-based, visual editor for building complex workflows with IF/ELSE branching, looping, and error handling.

- More than 1,000 pre-built integrations or ‘nodes’ allow connecting n8n workflows with databases, APIs, cloud services, and popular business tools.

- Support for dual-mode development lets you build workflows visually and add custom JavaScript or Python nodes to implement complex logic.

- Allows passing data between nodes. You can also use global variables and temporary storage. While it doesn’t have a sophisticated memory for conversations, you can implement one by storing context in variables or in an external DB via nodes.
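Storing conversation context in an external database is a simple pattern: append each message under a session id, and reload the last few messages before the next model call. Below is a minimal Python sketch using the standard-library sqlite3 module, for example behind a small service your workflow calls over HTTP. The table layout and the ConversationStore class are assumptions for illustration, not an n8n feature.

```python
import sqlite3


class ConversationStore:
    """Keep chat history in SQLite, keyed by session id, so a stateless workflow can reload context."""

    def __init__(self, path: str = ":memory:") -> None:
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS messages ("
            " id INTEGER PRIMARY KEY AUTOINCREMENT,"
            " session TEXT NOT NULL, role TEXT NOT NULL, content TEXT NOT NULL)"
        )

    def append(self, session: str, role: str, content: str) -> None:
        with self.db:  # commits the insert on success, rolls back on error
            self.db.execute(
                "INSERT INTO messages (session, role, content) VALUES (?, ?, ?)",
                (session, role, content),
            )

    def recent(self, session: str, limit: int = 10) -> list[tuple[str, str]]:
        """Return the last `limit` (role, content) pairs in chronological order."""
        rows = self.db.execute(
            "SELECT role, content FROM messages WHERE session = ? ORDER BY id DESC LIMIT ?",
            (session, limit),
        ).fetchall()
        return list(reversed(rows))


if __name__ == "__main__":
    store = ConversationStore()
    store.append("user-42", "user", "What's my order status?")
    store.append("user-42", "assistant", "It shipped yesterday.")
    print(store.recent("user-42"))
```

Capping the history with `limit` keeps the prompt size predictable as conversations grow.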

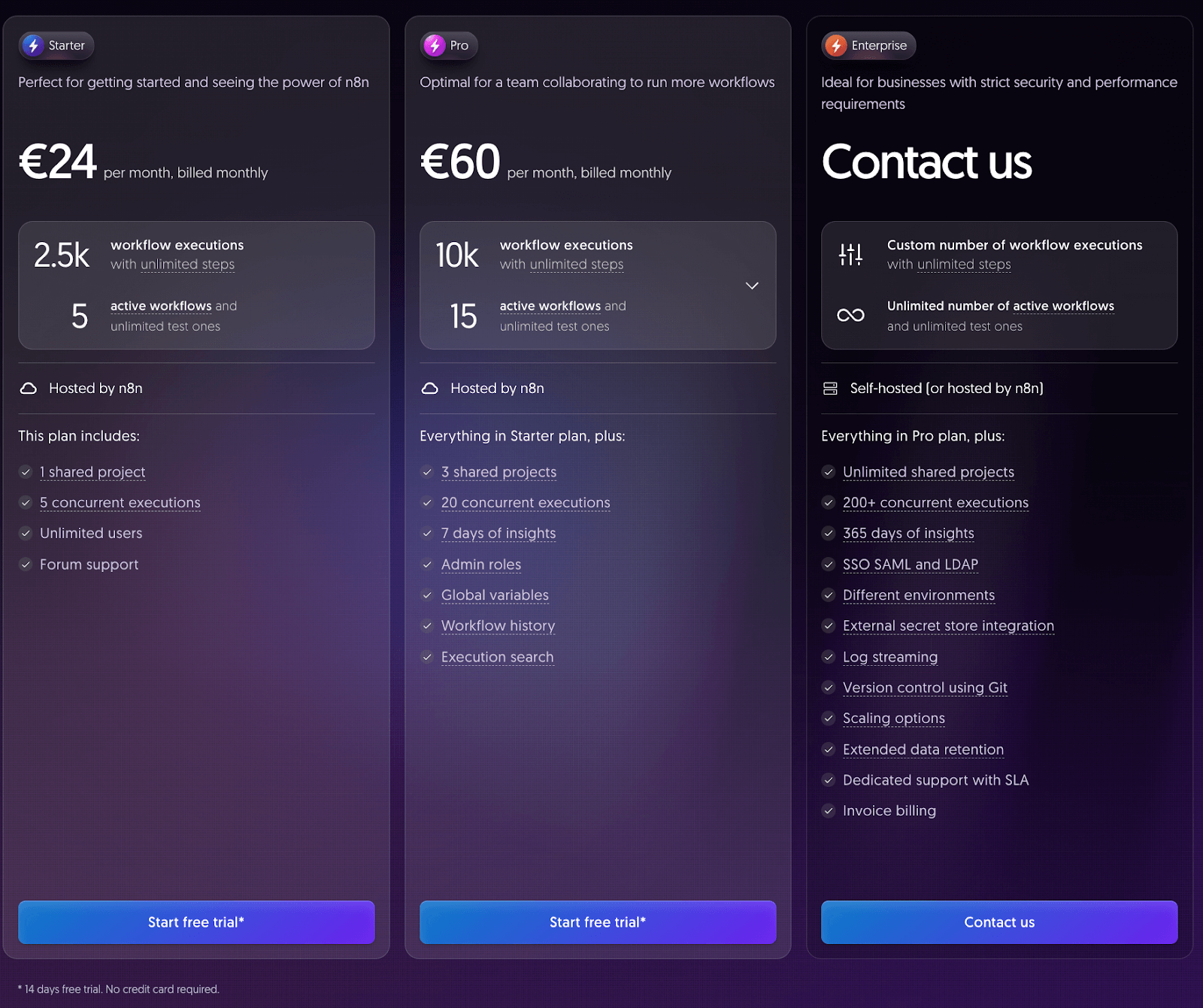

Pricing

n8n offers three paid plans to choose from. Each plan comes with a 14-day free trial, no credit card required.

- Starter: €24 per month with 2.5k workflow executions.

- Pro: €60 per month with 10k workflow executions.

- Enterprise: Custom pricing. Custom number of workflow executions and unlimited active workflows.

Pros and Cons

n8n's core strength is its unique blend of a visual editor with code-level customization. The visual interface is intuitive, and the self-hosted option provides flexibility for teams with specific infrastructure requirements. Error handling and debugging tools are well-developed compared to many visual workflow platforms.

However, n8n lacks the AI-specific features that make Flowise attractive, like built-in LLM integrations and agent-specific patterns. Building complex conversational agents requires more manual configuration, and the platform's general-purpose nature means less optimization for AI workloads specifically.


6. Botpress

Botpress is an end-to-end platform for building LLM-powered chatbots and AI agents that can operate across channels. It provides a more focused alternative to Flowise for teams building primarily conversational agents and customer-facing AI systems.

Features

- Offers a drag-and-drop interface optimized for dialog design, with built-in patterns for common conversational scenarios like slot filling, confirmation dialogs, and context switching.

- Supports omnichannel coverage; build an agent once and deploy it across numerous channels, including web chat, WhatsApp, Messenger, and Slack.

- Built-in NLU and human-in-the-loop capabilities allow for intent recognition, entity extraction, and safe transfer of chat to a human agent.

- Supports custom code through hooks and actions, while keeping the visual development experience for non-technical team members.

- Has dedicated dashboards for real-time workflow stats, like number of interactions, fallback rates, satisfaction ratings, etc., and detailed conversation logs.
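Slot filling, one of the dialog patterns mentioned above, means asking for each required piece of information until all are collected, then confirming. The sketch below is a hypothetical, framework-free illustration of that control flow, not Botpress code. To keep it self-contained it assumes each user reply fills the next empty slot, whereas a real platform would use NLU entity extraction to fill slots in any order.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SlotFiller:
    """Ask for each missing slot in turn, then ask the user to confirm."""
    prompts: dict[str, str]  # slot name -> question to ask
    values: dict[str, str] = field(default_factory=dict)

    def next_prompt(self) -> Optional[str]:
        for slot, prompt in self.prompts.items():
            if slot not in self.values:
                return prompt
        return None  # every slot is filled

    def answer(self, text: str) -> str:
        # Simplification: the reply fills the first empty slot.
        for slot in self.prompts:
            if slot not in self.values:
                self.values[slot] = text.strip()
                break
        prompt = self.next_prompt()
        if prompt is not None:
            return prompt
        summary = ", ".join(f"{k}={v}" for k, v in self.values.items())
        return f"Confirm booking: {summary}?"


if __name__ == "__main__":
    dialog = SlotFiller({"city": "Which city?", "date": "What date?"})
    print(dialog.next_prompt())
    print(dialog.answer("Berlin"))
    print(dialog.answer("2024-07-01"))
```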

Pricing

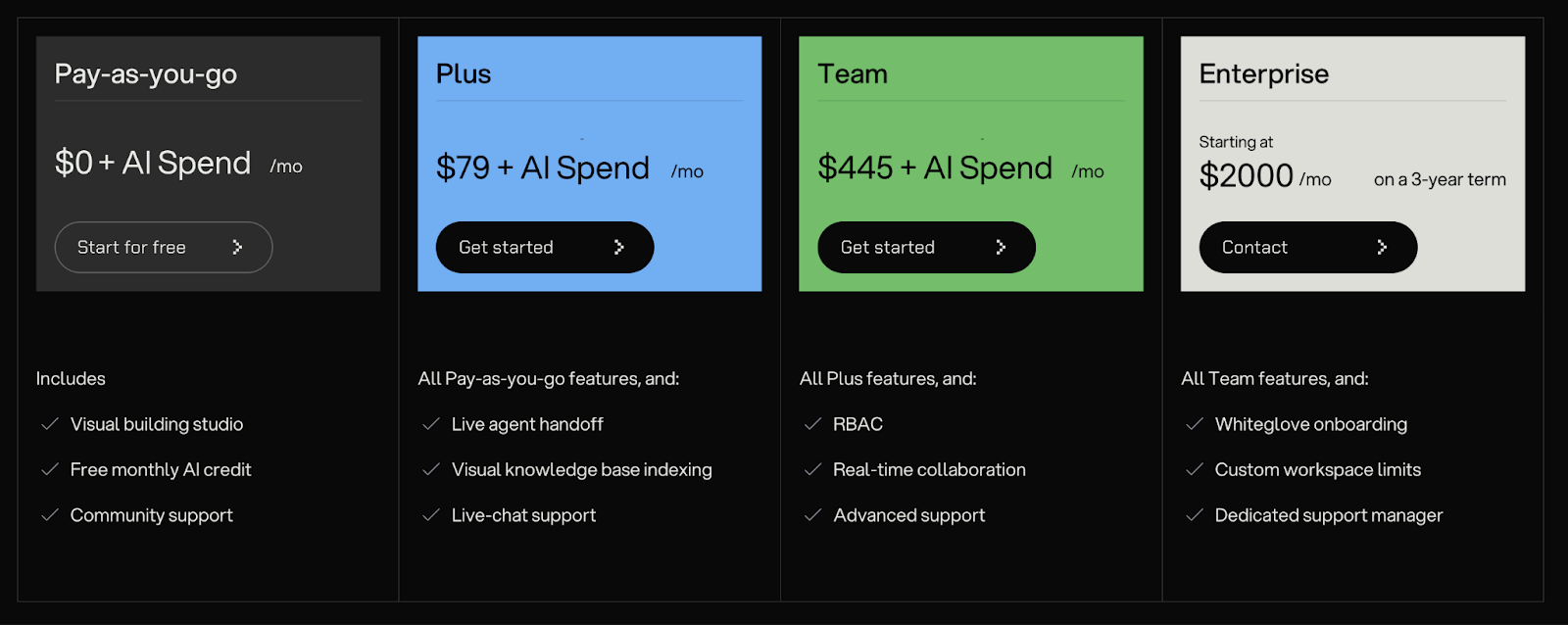

Botpress offers a ‘Pay-as-you-go’ plan that is free to start. Other than that, it has three paid plans:

- Plus: $79 per month

- Team: $445 per month

- Enterprise: $2,000 per month

Pros and Cons

Botpress excels at conversational AI with sophisticated dialog management. Thanks to its polished UI, robust multi-channel support, and useful built-in AI components, it’s generally a more powerful yet easier-to-use alternative to Flowise for non-developers.

The main con is less flexibility in custom agent logic. It’s primarily for conversational interfaces. If you want a multi-step agent that can be used beyond conversation, like an autonomous coding agent, Botpress will not fit well. Also, because Botpress abstracts a lot, you’ll find it less transparent in how the LLM is reasoning.

7. Relevance AI

Relevance AI provides a no-code platform specifically designed for building AI agents and workflows. It allows you to create custom AI agents simply by describing their functions in natural language.

In a way, Relevance AI is tackling a similar space as Flowise, but aiming to be more user-friendly and enterprise-ready.

Features

- Choose from preset agent templates or create agents by specifying what tools or data they have and describing their objective in plain language. Relevance AI automatically generates underlying logic for the agent.

- Supports approval and human-in-the-loop (HITL) steps, plus team features such as sharing, version control, and role-based permissions for managing agent development across an organization.

- Built-in connectors for popular services and the ability to create custom API integrations without coding allow agents to interact with external systems.

- Get complete visibility with a centralized command center dashboard for monitoring agent runs, activities, outcomes, and credit usage.

Pricing

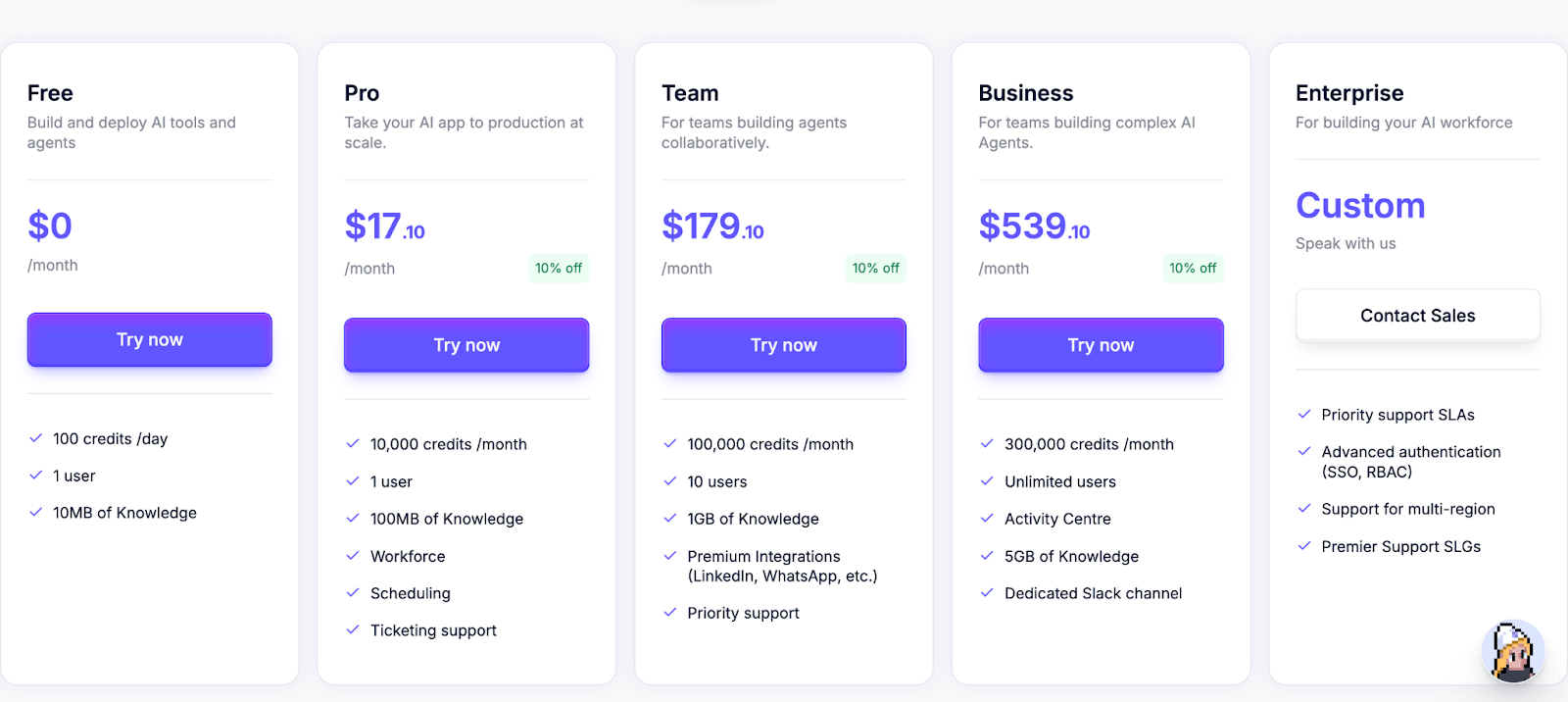

Relevance AI offers a generous free plan along with four paid plans:

- Pro: $17.10 per month

- Team: $179.10 per month

- Business: $539.10 per month

- Enterprise: Custom

Pros and Cons

Relevance AI's key advantage is its accessibility and the speed at which non-technical users can build and deploy powerful agents. Its visual development approach resembles Flowise’s, but with more polished UX and a professional template library.

The main drawback is that, as a proprietary, high-level platform, it offers less transparency and control over the underlying mechanics compared to open-source or code-based frameworks.

Unlike Flowise, Relevance AI is not open source; it’s a proprietary SaaS. If Relevance AI shut down or changed its terms, you’d have to migrate. With Flowise or LangChain, you have the code and can run it yourself.

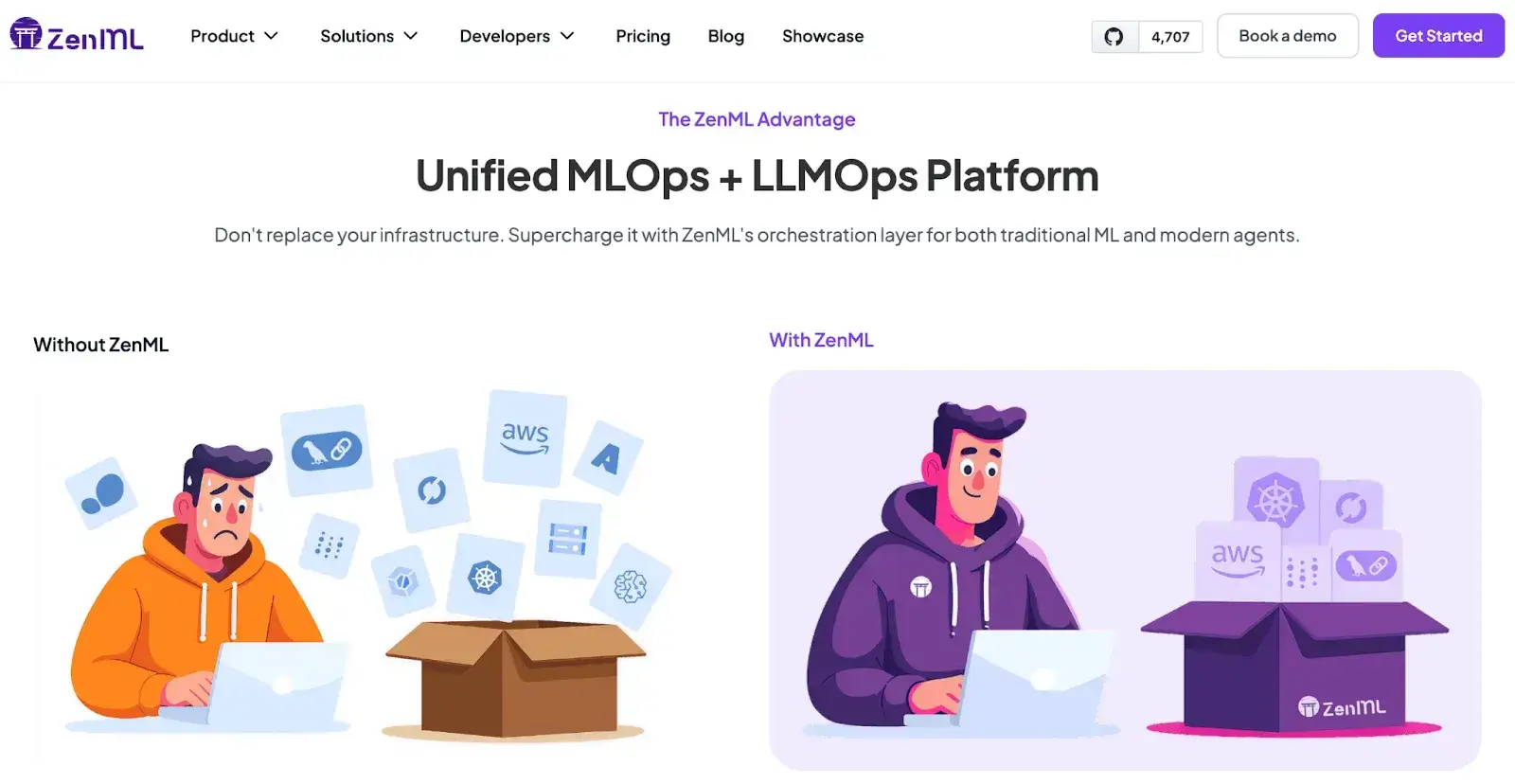

How ZenML Helps In Closing the Outer Loop Around Your Agents

While code-based alternatives for Flowise excel at the ‘inner loop’ of agent development, they often fall short in the ‘outer loop’ of production lifecycle management.

This outer loop involves the entire MLOps + LLMOps lifecycle: deploying, monitoring, evaluating, and iterating on the agent in a systematic and reproducible manner.

Code-first agent frameworks don't solve these MLOps challenges on their own; ZenML was built to fill that gap.

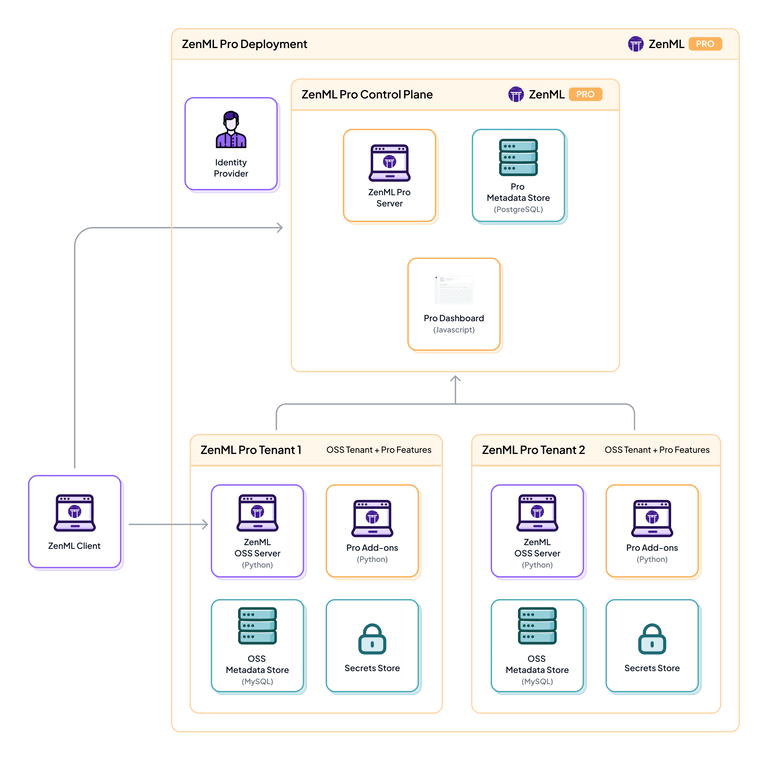

ZenML is an open-source MLOps + LLMOps framework that acts as the glue and guardrails around agents built with Griptape, AutoGen, and other code-first tools. It doesn’t replace their functionality; instead, it complements them by managing the lifecycle and infrastructure of your AI workflows.

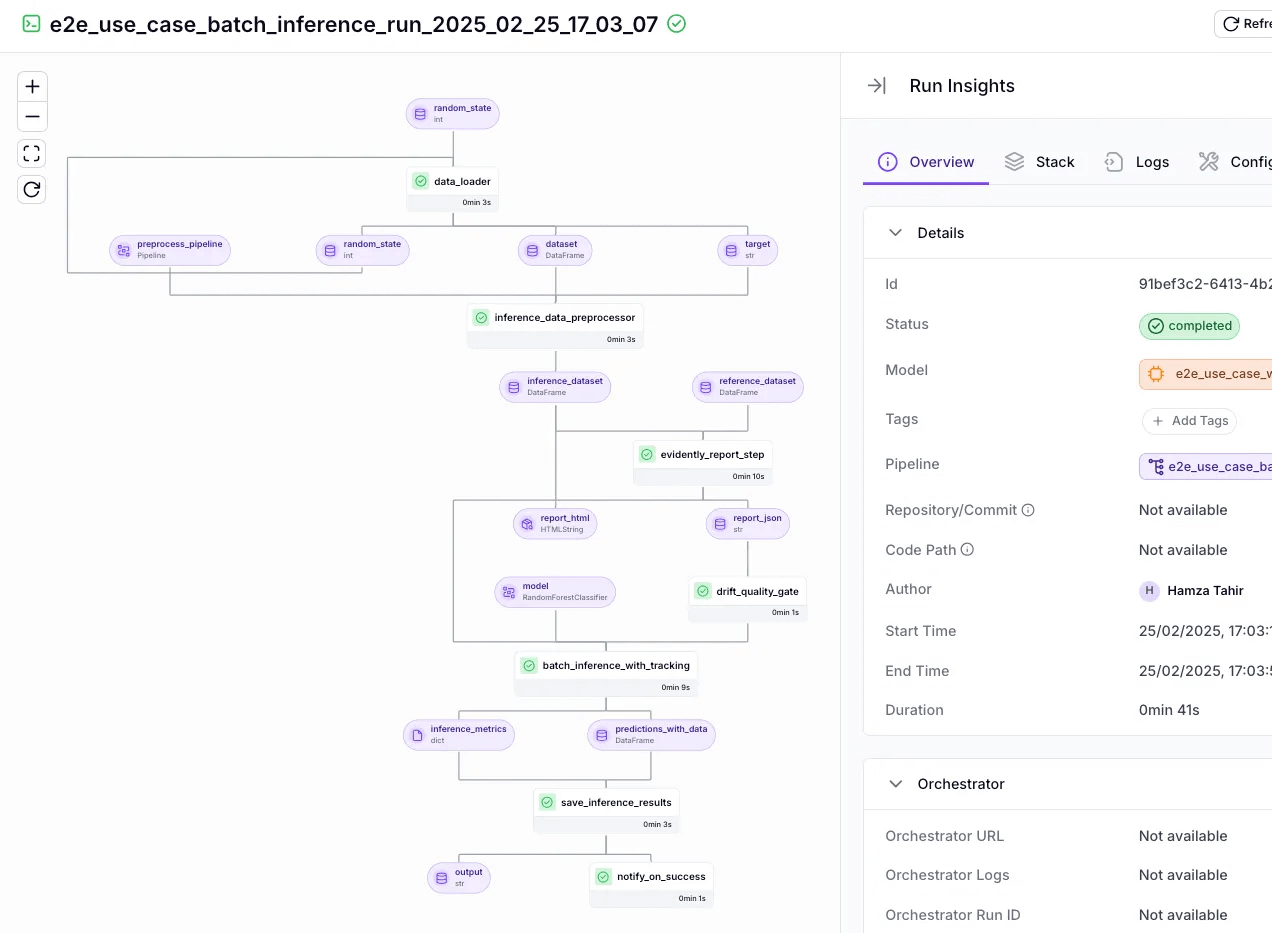

ZenML allows you to embed your agent's code, whether it's built with Griptape, AutoGen, or another framework, inside a larger, versioned pipeline. This pipeline can orchestrate the entire end-to-end process, from data preprocessing and RAG index creation to agent execution and final evaluation.

Here are ways ZenML helps in boosting your agentic AI journey:

1. Unified Pipeline Orchestration

With ZenML, your agent runs as one step in a larger, versioned pipeline, next to data preparation, evaluation, and reporting steps.

For example, you might have a pipeline where one step spins up a Haystack or AutoGen agent to analyze some data and produce an output, and another step takes that output and generates a report.

ZenML handles scheduling and executing these pipelines, so you can run agent steps alongside traditional ML steps. Your agent no longer runs in a silo; it becomes part of a reproducible, automated pipeline.

2. Experiment Tracking and Visibility

A primary challenge with AI agents is their ‘black box’ nature. When an agent's behavior changes, it can be difficult to determine the cause.

With ZenML, you get complete visibility and lineage tracking. It automatically tracks every artifact, parameter, input, output, and metric from your agent runs.

If you change the prompt or swap the model, you’ll have a record of which version performed better and why. You gain visibility over the entire process: not just what the agent decided, but also the surrounding context, including dataset and tool versions.

3. Reproducibility and Versioning

One of ZenML’s core values is making ML workflows reproducible. With ZenML, you can version your pipelines and pipeline runs, including the agent code and configuration used. Later, if your agent starts behaving oddly or performance drifts, you can pinpoint when the change happened by comparing runs.

LLM agents can change behavior due to external factors, and without outer-loop tracking, that’s hard to debug. ZenML fills the gap by recording exactly what code, model, and data went into each agent execution.
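The "compare runs to find what changed" idea is easy to picture in code. The plain-Python sketch below records each run's inputs (prompt, model, dataset and tool versions), fingerprints them, and diffs two runs. It only illustrates the concept: RunRecord, fingerprint, and diff_runs are hypothetical names, not ZenML's API, which tracks this metadata for you.

```python
import hashlib
import json
from dataclasses import dataclass, field


def fingerprint(obj: object) -> str:
    """Stable short hash of any JSON-serializable value (key order does not matter)."""
    blob = json.dumps(obj, sort_keys=True).encode()
    return hashlib.sha256(blob).hexdigest()[:12]


@dataclass
class RunRecord:
    run_id: str
    inputs: dict[str, object]  # e.g. prompt, model, dataset version, tool versions
    outputs: dict[str, object] = field(default_factory=dict)  # e.g. eval scores

    def hashes(self) -> dict[str, str]:
        return {k: fingerprint(v) for k, v in self.inputs.items()}


def diff_runs(old: RunRecord, new: RunRecord) -> dict[str, tuple[object, object]]:
    """Return every input that differs between two runs as (old, new) pairs."""
    old_h, new_h = old.hashes(), new.hashes()
    return {
        k: (old.inputs.get(k), new.inputs.get(k))
        for k in sorted(set(old_h) | set(new_h))
        if old_h.get(k) != new_h.get(k)
    }


if __name__ == "__main__":
    good = RunRecord("r1", {"model": "model-a", "prompt": "v1", "dataset": "2024-06"})
    drifted = RunRecord("r2", {"model": "model-a", "prompt": "v2", "dataset": "2024-06"})
    print(diff_runs(good, drifted))
```

Storing only the fingerprints per run is cheap, and the full inputs can be fetched from the artifact store when a diff flags a change.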

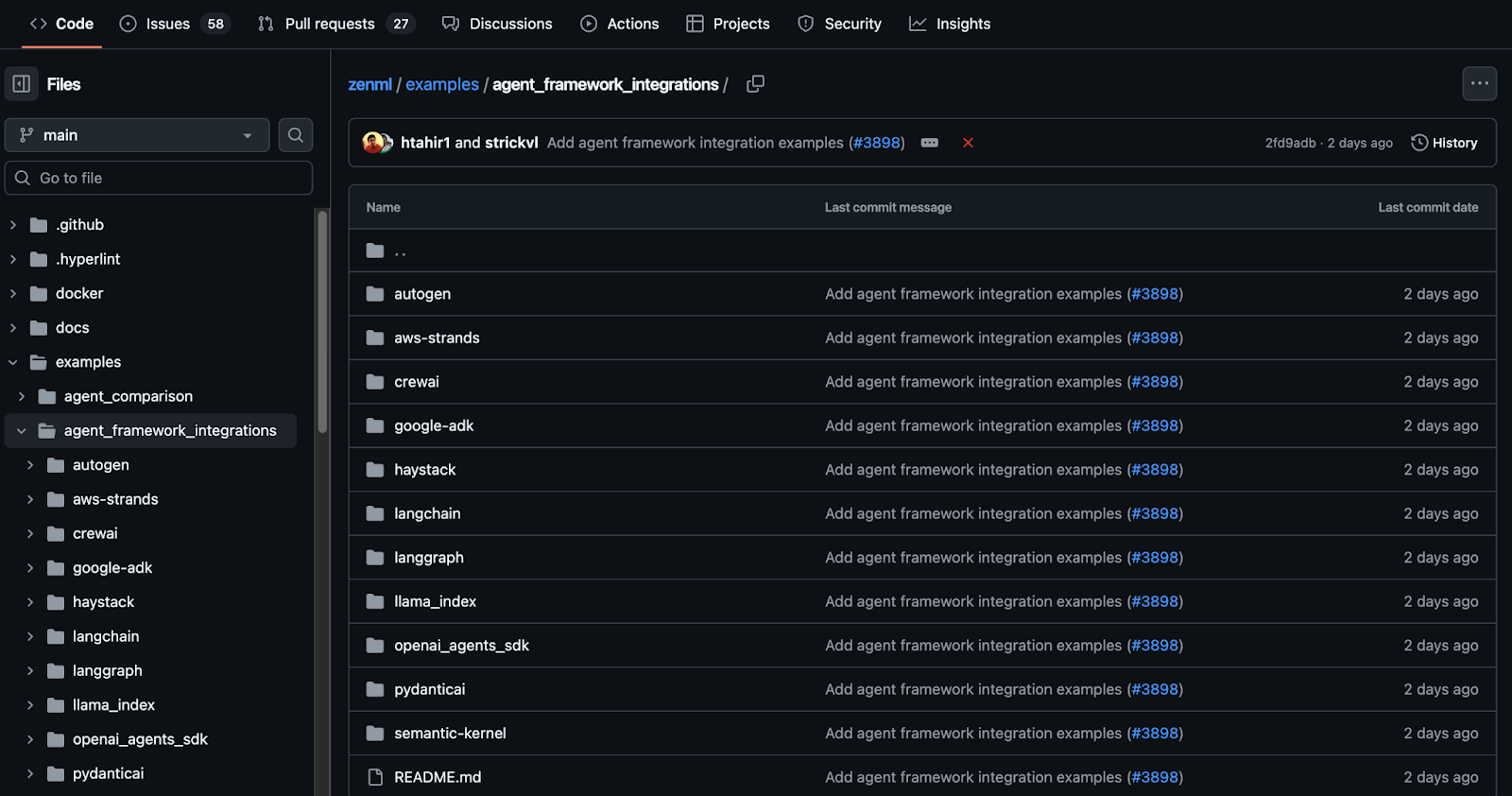

👀 Note: At ZenML, we have built several agent workflow integrations with tools like Semantic Kernel, LangGraph, LlamaIndex, and more. We are actively shipping new integrations that you can find on this GitHub page: ZenML Agent Workflow Integrations.

The Best Flowise Alternatives to Build Automated AI Workflows

While Flowise offers a user-friendly AI agent building experience, scaling up to real-world production demands a different approach and a complete shift in tooling.

The right choice depends entirely on your team's skills and project requirements.

✅ Code-based options like AutoGen and Griptape offer maximum flexibility, control, and customization for Python-first teams.

✅ No-code platforms like n8n and Botpress provide visual development experiences with broader accessibility.

The key is matching tool capabilities to your specific use case: conversational AI applications benefit from Botpress's dialog expertise, while complex multi-agent systems might thrive with AutoGen's flexible conversation patterns.

Regardless of which code-based framework you choose, managing the ‘outer loop’ of deployment, monitoring, and iteration is non-negotiable for production success.

✅ ZenML provides this essential MLOps layer, bringing governance, reproducibility, and visibility to your agentic workflows.

If you’re interested in taking your AI agent projects to the next level, consider joining the ZenML waitlist. We’re building out first-class support for agentic frameworks (like LangGraph, CrewAI, and more) inside ZenML, and we’d love early feedback from users pushing the boundaries of what AI agents can do. With ZenML, you can seamlessly integrate whichever agent framework you choose into robust, production-grade workflows. Join our waitlist to get started.👇