
Semantic Kernel (SK) has rapidly matured as a .NET-based agent framework, but many teams find its Python/Java support, efficiency, and abstraction layers still lacking.

If you are an ML engineer or Python developer building agentic AI systems, it’s worth exploring Semantic Kernel alternatives with powerful orchestration, memory, and human-in-the-loop (HITL) support.

In this article, we introduce 8 leading SK alternatives and compare their core capabilities, costs, and trade-offs, before explaining how ZenML can plug into your agent builder and orchestrate agents in a production-ready pipeline.

TL;DR

- Why Look for Alternatives: Semantic Kernel's limitations in production, like the feature gap between its .NET and Python/Java SDKs, potential for high token consumption in agentic loops, and heavy abstractions that complicate debugging.

- Who Should Care: ML engineers, Python developers, and LLMOps practitioners who are moving beyond simple chatbots to build complex, multi-agent systems and require stable orchestration, observability, and cost management.

- What to Expect: An in-depth, feature-by-feature analysis of 8 Semantic Kernel alternatives (LangGraph, Microsoft AutoGen, LlamaIndex, CrewAI, OpenAI Agents SDK, n8n, Langflow, and Agno) evaluated on their core capabilities for building and managing automated AI workflows, plus a look at how ZenML provides the MLOps layer for any agent.

The Need for a Semantic Kernel Alternative?

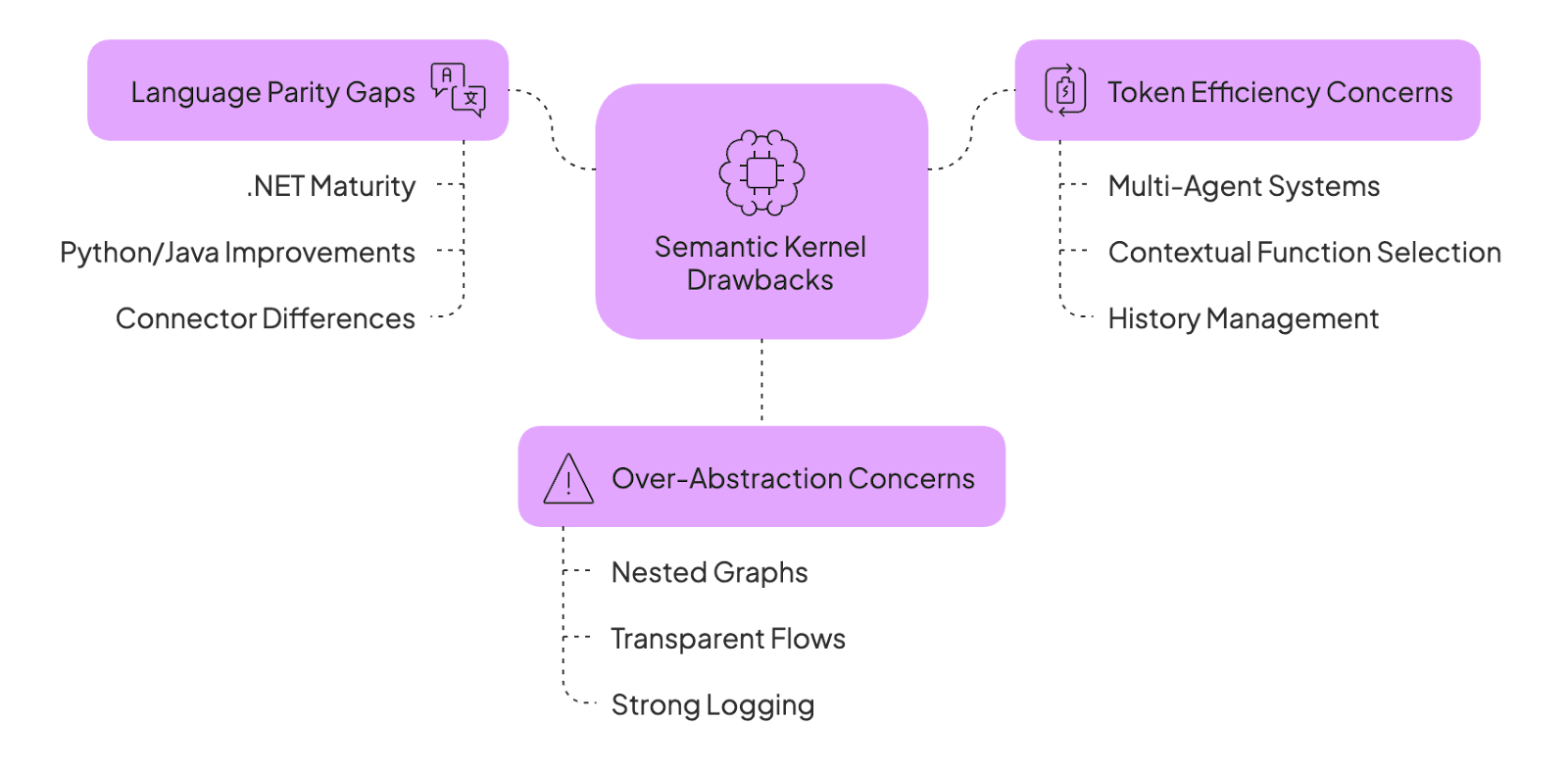

Despite SK’s promise, teams have identified a few drawbacks that force them to look for an alternative:

Reason 1. Language Parity Gaps

SK matured first on .NET, and parity for Python/Java has improved, but isn’t perfect yet. Teams still notice differences in docs, samples, and some connectors.

As one Microsoft blog admits, “many more features are necessary for parity between Python and .NET.”

In practice, Python SK still misses conveniences (like auto-function-calling) and key vector store connectors. Connectors for Ollama and Anthropic (via Bedrock) exist, although several remain experimental, and the freshest samples skew .NET. This forces some teams to limit themselves to C# or tolerate more boilerplate in Python.

Reason 2. Token Efficiency Concerns in Agent Loops

Multi-agent systems involve numerous turns of conversation and tool calls. In each turn, the entire context, which includes chat history and tool outputs, is passed back to the LLM.

Without careful management, this history can grow very large and drive up token consumption. SK’s contextual function selection helps curb tool bloat in prompts, but long multi-turn chains still need deliberate history management to keep token use under control.
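To make the history-management point concrete, here is a minimal, framework-agnostic sketch of trimming chat history to a token budget before each LLM call. It is not Semantic Kernel's API: the `trim_history` and `estimate_tokens` helpers and the four-characters-per-token estimate are assumptions for illustration, and a real system would use the model's tokenizer.

```python
from typing import Dict, List

Message = Dict[str, str]


def estimate_tokens(text: str) -> int:
    """Rough token estimate (about 4 characters per token); an assumption."""
    return max(1, len(text) // 4)


def trim_history(messages: List[Message], budget: int) -> List[Message]:
    """Keep system messages plus the newest turns that fit within `budget` tokens."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]

    used = sum(estimate_tokens(m["content"]) for m in system)
    kept: List[Message] = []
    # Walk backwards so the most recent turns are considered first.
    for msg in reversed(rest):
        cost = estimate_tokens(msg["content"])
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    return system + list(reversed(kept))


history = [
    {"role": "system", "content": "You are a helpful agent."},
    {"role": "user", "content": "x" * 400},
    {"role": "assistant", "content": "y" * 400},
    {"role": "user", "content": "What is the status?"},
]
trimmed = trim_history(history, budget=120)
print([m["role"] for m in trimmed])
```

Dropping the oldest turns is the simplest policy; summarizing them into a single message is a common alternative when earlier context still matters.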

Reason 3. Over-Abstraction Concerns

SK layers prompts behind graph/state abstractions like the Kernel, Plugins, and Services to create an extensible system. When an agent fails, developers must dig through nested graphs, decorators, and state objects to diagnose issues.

While this is an elegant design, many teams prefer transparent or code-driven flows, or at least tools with strong logging/observability, to avoid ‘opaque failure modes.’

Evaluation Criteria

To provide a credible and consistent analysis, we evaluated all Semantic Kernel alternatives against three key criteria:

1. Core Fit and Capabilities

We evaluated how effectively the tool performs fundamental agent-building tasks. We asked questions like:

- Does it support rich orchestration models: graphs, conversational loops, and conditional branches?

- Does it support multi-agent or hierarchical patterns?

- Can it incorporate human-in-the-loop steps or manual review? Does it have built-in state/memory, or integrate with RAG (retrieval-augmented generation)?

2. Performance and Cost

We examined the pricing models (open-source vs. SaaS, usage-based fees) and the framework’s efficiency in managing expensive resources like LLM tokens. This covers both computational performance (latency and scalability) and financial cost.

3. Language and SDK Quality

We evaluated the quality of the primary SDK with a focus on Python, its documentation, community support, and overall developer experience.

- What languages are supported: Python, .NET, TypeScript?

- How mature and well-documented is the SDK?

- Are there production-grade features such as type safety, testing hooks, and a deployable CLI/UI?

We also considered community momentum and integration with existing ML/DevOps stacks.

What are the Best Alternatives to Semantic Kernel

Here’s a table that summarizes all the Semantic Kernel alternatives:

| Semantic Kernel Alternative | Key Features | Best For | Pricing |
|---|---|---|---|
| LangGraph | • Graph-based orchestration with explicit nodes/edges • Supports agent workflows with built-in state and persistence | Teams needing explicit, debuggable, stateful workflows with strong observability | • Free Developer tier • Plus: $39 per seat per month • Enterprise: Custom pricing |
| Microsoft AutoGen | • Open-source Python/.NET framework • Multi-agent chat via message passing • `UserProxyAgent` for HITL • Built-in observability and tracing | Flexible, conversational, research-driven multi-agent systems where agents negotiate dynamically | Free (MIT license). You pay only for your own compute and LLM API usage |
| LlamaIndex | • Advanced RAG-first framework with hierarchical node parsers • Auto-merging retrievers • Hybrid search • Integrations with Langfuse, Arize Phoenix, Weights & Biases • `llama_deploy` for microservices | Teams building data-heavy, retrieval-augmented agents needing strong memory & indexing | • Core OSS (free) • LlamaCloud Starter: $50 per mo • Pro: $500 per mo • Enterprise: Custom pricing |
| CrewAI | • Python-first role-based multi-agent system (Agents–Tasks–Crews) • Flows for sequential/hierarchical orchestration & HITL • Built-in audit logs & time-travel debugging | Structured business workflows & complex multi-agent task orchestration with clear roles/goals | • OSS (MIT, free) • Basic: $99 per mo • Standard: $6K per yr • Pro: $12K per yr • Enterprise: $60K per yr • Ultra: $120K per yr |
| OpenAI Agents SDK | • Python SDK for agent apps in the OpenAI ecosystem • Simple agent loops • Handoffs for delegation • Built-in tracing & evaluation tools | Teams who want a lightweight, Python-first agent framework tightly integrated with OpenAI | Open source; usage billed via OpenAI API pricing |
| n8n | • Low-code visual workflow builder • Conditional logic, triggers, integrations • Manual approvals and HITL supported as nodes | AI automation in business workflows, non-technical users, and fast prototyping | • Starter: €24 per mo • Pro: €60 per mo • Enterprise: Custom pricing • Free OSS edition available |
| Langflow | • Visual interface for LangChain/LLMs • Drag-and-drop components (agents, memory, embeddings) • Export flows as APIs | Rapid prototyping and visual development of agent flows and RAG apps | Free and open-source (MIT). Infra/API costs apply when self-hosted or deployed to the cloud |
| Agno (formerly Phidata) | • Full-stack OSS agent framework • Supports multi-agent teamwork • 23+ LLM providers, multi-modal tools | Teams needing lightweight, high-throughput, multi-agent systems with strong RAG + reasoning | • OSS (MPL 2.0, free) • Agno Pro free for students, educators, and startups with under $2M in funding |

Read about all the above-mentioned AI agent builders in detail below:

1. LangGraph

LangGraph - part of LangChain - is a graph-based agent framework that lets you explicitly define workflows as nodes and edges. It supports both single-agent and complex multi-agent pipelines, and is a strong alternative to Semantic Kernel for developers who like precise control and observability over agent flow.

Features

- Defines workflows as explicit graphs of nodes and edges, supporting single-agent, multi-agent, and hierarchical patterns for precise control.

- Built-in components allow you to pause agents for human feedback or moderation between nodes to inject reviews or approvals.

- Built-in statefulness and persistence layers (checkpointers) help manage short-term and long-term memory across sessions.

- LangGraph’s token streaming allows downstream logic to react to partial outputs. Useful for UI or for triggering follow-up actions as output comes in.

- Tight integration with LangSmith provides trace viewers and logs for every step of the process.
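The node/edge model with checkpoints and a review step can be illustrated with a small, dependency-free sketch. This is deliberately not LangGraph's API: `MiniGraph`, its `add_node` and `run` methods, and the simulated reviewer are hypothetical stand-ins that only show the shape of the idea (nodes transform state, routers choose the next node, and every step is snapshotted).

```python
from typing import Any, Callable, Dict, List, Optional

State = Dict[str, Any]
Node = Callable[[State], State]
Router = Callable[[State], Optional[str]]


class MiniGraph:
    """Toy node/edge runner; illustrates the pattern, not a real library."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Node] = {}
        self.routers: Dict[str, Router] = {}
        self.checkpoints: List[State] = []

    def add_node(self, name: str, fn: Node, router: Router) -> None:
        self.nodes[name] = fn
        self.routers[name] = router

    def run(self, start: str, state: State) -> State:
        current: Optional[str] = start
        while current is not None:
            state = self.nodes[current](dict(state))
            self.checkpoints.append(dict(state))  # snapshot after every node
            current = self.routers[current](state)  # conditional edge
        return state


def draft(state: State) -> State:
    state["attempts"] = state.get("attempts", 0) + 1
    state["text"] = f"draft v{state['attempts']}"
    return state


def review(state: State) -> State:
    # Stand-in for a human approval step: approve from the second draft on.
    state["approved"] = state["attempts"] >= 2
    return state


def publish(state: State) -> State:
    state["published"] = True
    return state


graph = MiniGraph()
graph.add_node("draft", draft, lambda s: "review")
graph.add_node("review", review, lambda s: "publish" if s["approved"] else "draft")
graph.add_node("publish", publish, lambda s: None)
final = graph.run("draft", {})
print(final["text"], len(graph.checkpoints))
```

Because each node's output is snapshotted, a failed or rejected run can be inspected step by step, which is the debugging benefit the explicit-graph approach is meant to provide.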

Pricing

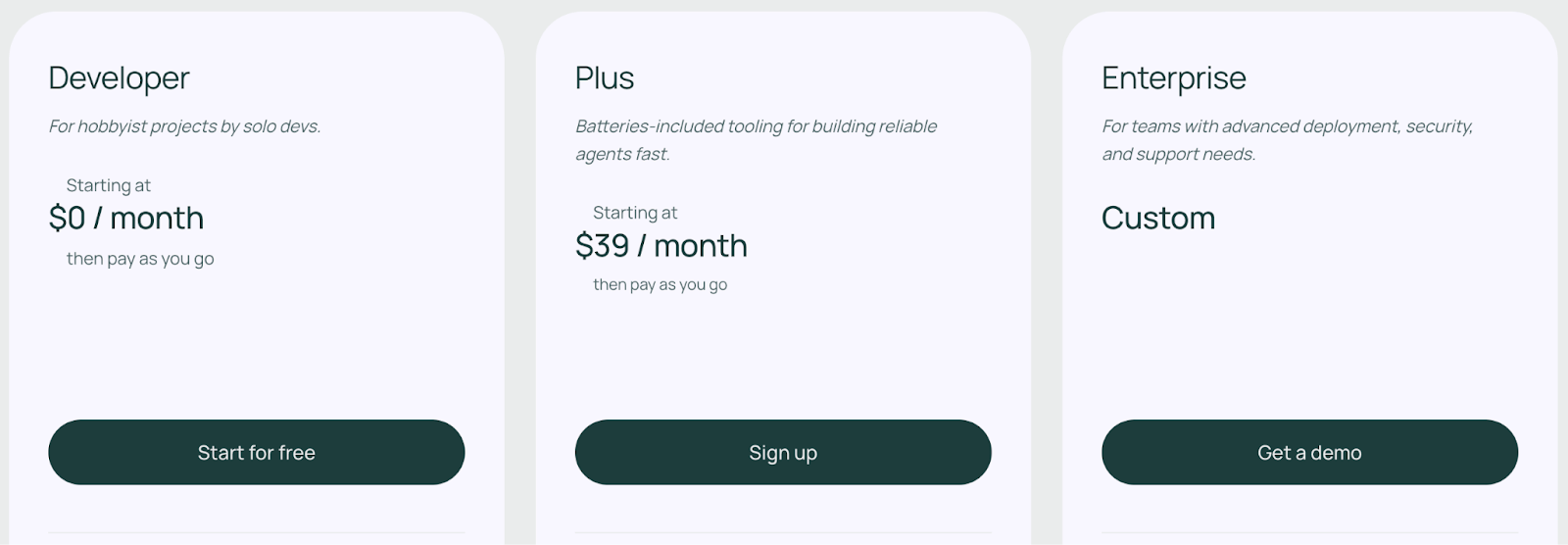

LangGraph is included in LangChain’s products and offers a free Developer tier with 1 seat, up to 5K trace events per month. It also has two pricing plans:

- Plus plan: $39 per seat per month, which comes with 10 seats and 10K trace events.

- Enterprise plan: Custom pricing

Pros and Cons

LangGraph’s core strength is the explicit transparency and control it offers over agent workflows. Mapping logic onto a graph of nodes and edges makes complex flows easier to understand, monitor, and debug.

On the downside, adopting the graph architecture adds boilerplate, and frequent version changes mean existing code often needs updating.


2. Microsoft AutoGen

AutoGen is a Python/.NET-based open-source framework from Microsoft Research that lets you build multi-agent systems via text conversation. It uses a message-passing model where agents, AI or human, form chat-like sessions.

Features

- Multi-agent chat allows any number of agents to communicate via events or messages in a session and dynamically respond.

- Use workflow authoring to define workflows based on agent roles and conversation patterns, rather than rigid graphs.

- Offers `UserProxyAgent`, which lets humans give direct feedback, approval, or code-execution confirmation during runs.

- Supports both Python and .NET agents, with interfaces that enforce type safety on messages and outputs.

- Built-in tools for tracing and debugging agent interactions, with OpenTelemetry support for industry-standard observability.

Pricing

AutoGen is completely open-source (MIT license) and free to use. There are no usage fees beyond your own compute and API costs.

Pros and Cons

AutoGen’s conversational approach offers great flexibility for research and solving open-ended problems where agents can negotiate solutions among themselves.

The downside of this freedom is potential unpredictability. Agents might hallucinate and fail to converge unless guided. Compared to SK’s more deterministic pipelines, AutoGen is powerful but requires caution to avoid runaway agent chats.
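One common way to guard against runaway conversations is to cap the number of turns and stop on an explicit termination phrase. The sketch below shows that pattern in plain Python; it does not use AutoGen's classes, and `run_chat`, the `TERMINATE` stop word, and the toy agents are assumptions for illustration.

```python
from typing import Callable, Dict, List, Tuple

Transcript = List[Tuple[str, str]]
Agent = Callable[[Transcript], str]


def run_chat(
    agents: Dict[str, Agent],
    opening: str,
    max_turns: int = 6,
    stop_word: str = "TERMINATE",
) -> Transcript:
    """Round-robin chat that ends on `stop_word` or after `max_turns` replies."""
    transcript: Transcript = [("user", opening)]
    names = list(agents)
    for turn in range(max_turns):
        name = names[turn % len(names)]
        reply = agents[name](transcript)
        transcript.append((name, reply))
        if stop_word in reply:
            break
    return transcript


def planner(transcript: Transcript) -> str:
    done = sum(1 for who, _ in transcript if who == "planner")
    return f"plan step {done + 1}"


def critic(transcript: Transcript) -> str:
    planner_turns = sum(1 for who, _ in transcript if who == "planner")
    return "looks good, TERMINATE" if planner_turns >= 2 else "needs more detail"


chat = run_chat({"planner": planner, "critic": critic}, "Write a rollout plan")

# Two agents that never agree are still cut off by the turn cap.
stuck = run_chat(
    {"a": lambda t: "I disagree", "b": lambda t: "So do I"},
    "Pick a name",
    max_turns=6,
)
print(len(chat), len(stuck))
```

The turn cap is a blunt instrument, but it bounds cost even when the agents never converge.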

📚 Also read: AutoGen vs LangGraph

3. LlamaIndex

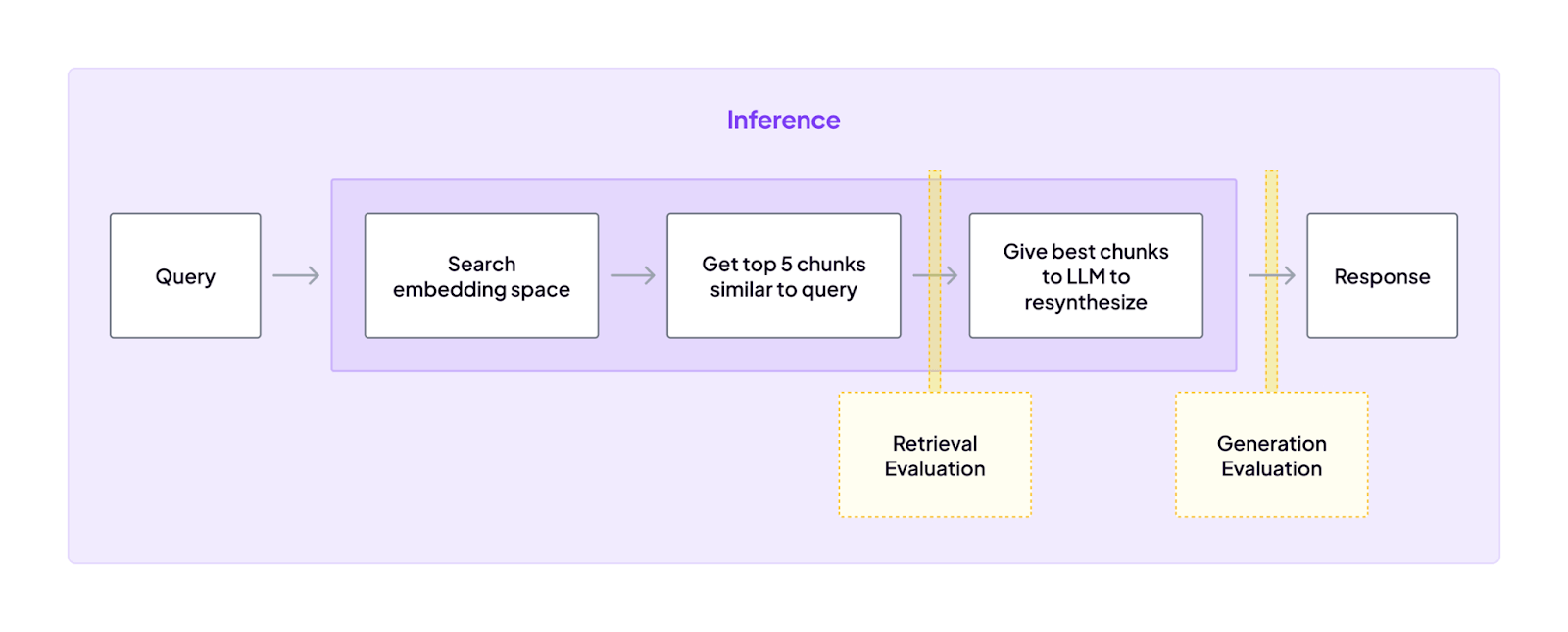

LlamaIndex (formerly GPT Index) is an open-source framework focused on retrieval-augmented agents and knowledge indexing. While not primarily an ‘agent orchestrator,’ it includes an agent interface (FunctionAgent) for building workflows. It excels at connecting large text and data sources to LLMs and provides rich memory and RAG capabilities.

Features

- Offers advanced RAG techniques like hierarchical node parsers, auto-merging retrievers, and hybrid search strategies to provide rich, accurate context to the LLM.

- Provides `Query Engines` for Q&A, `Chat Engines` for conversations, and an `AgentWorkflow` module to orchestrate multi-step tasks that can use its powerful data retrieval tools.

- Uses memory-efficient strategies like data chunking and incremental processing during indexing and structuring data from hundreds of sources.

- Integrates with observability partners like Langfuse, Arize Phoenix, and Weights & Biases for tracing and evaluation.

- Tools like `llama_deploy` help package agentic workflows as production microservices that can be deployed on Kubernetes.
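The idea behind auto-merging retrieval can be sketched without any library: when enough sibling chunks under the same parent are retrieved, they are replaced by the parent so the LLM sees one coherent passage. The `auto_merge` function, the 0.5 threshold, and the sample hierarchy below are illustrative assumptions, not LlamaIndex's implementation.

```python
from collections import defaultdict
from typing import Dict, List


def auto_merge(
    retrieved: List[str],
    children_of: Dict[str, List[str]],
    threshold: float = 0.5,
) -> List[str]:
    """Swap retrieved sibling chunks for their parent when enough of them hit."""
    parent_of = {c: p for p, kids in children_of.items() for c in kids}

    hits: Dict[str, List[str]] = defaultdict(list)
    for chunk in retrieved:
        hits[parent_of[chunk]].append(chunk)

    merged: List[str] = []
    seen = set()
    for chunk in retrieved:
        parent = parent_of[chunk]
        ratio = len(hits[parent]) / len(children_of[parent])
        target = parent if ratio > threshold else chunk
        if target not in seen:  # keep first-seen order, drop duplicates
            seen.add(target)
            merged.append(target)
    return merged


hierarchy = {
    "section_1": ["a", "b", "c"],
    "section_2": ["d", "e", "f", "g"],
}
result = auto_merge(["a", "d", "b"], hierarchy)
print(result)
```

Here two of the three chunks in `section_1` were retrieved, so the whole section is returned, while the single hit in `section_2` stays a leaf chunk.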

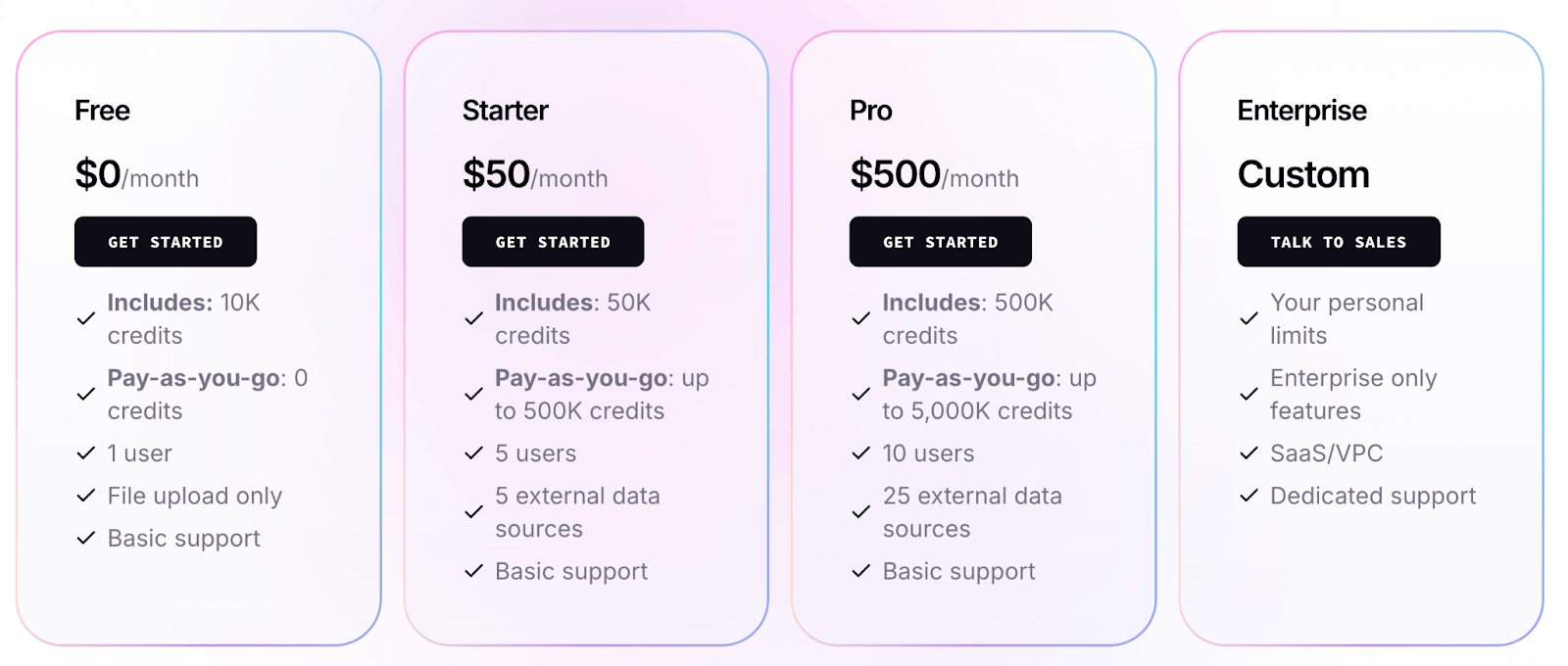

Pricing

LlamaIndex’s core libraries are free to use. It also offers LlamaCloud, a managed hosting service, with a free plan and three premium tiers.

- LlamaIndex Starter: $50 per month - 50K API credits, 5 seats

- LlamaIndex Pro: $500 per month - 500K credits, 10 seats

- LlamaIndex Enterprise: Custom pricing

📚 Read more about LlamaIndex Pricing in this article.

Pros and Cons

LlamaIndex’s strength lies in its ability to build sophisticated, data-driven RAG systems. It’s extremely good at document embeddings, RAG, and maintaining conversational state. It’s ideal when your agents need to query large databases.

However, it is more low-level than SK: you often write code to glue things together. Its agent orchestration capabilities, while improving, are less mature and flexible than specialized frameworks like LangGraph or AutoGen.

4. CrewAI

CrewAI is a Python-based agent framework focused on role-based multi-agent teams. It is built around the concept of a ‘Crew’ where you define Agents with specific roles, goals, and backstories. The framework manages task delegation and collaboration between these agents.

Features

- Agents come with a toolkit (web search, code execution, scraping, etc.), and you can add arbitrary Python functions as new tools.

- Beyond free-form chatter, CrewAI supports Flows, which are sequential or parallel steps with conditional logic, state management, and human handoff.

- Built-in audit logs record every agent’s step. You can replay or debug by modifying an agent’s previous action, like time-travel debugging.

- Integrates with numerous third-party observability tools like Langfuse, Arize Phoenix, and MLflow to track performance, quality, and cost metrics.

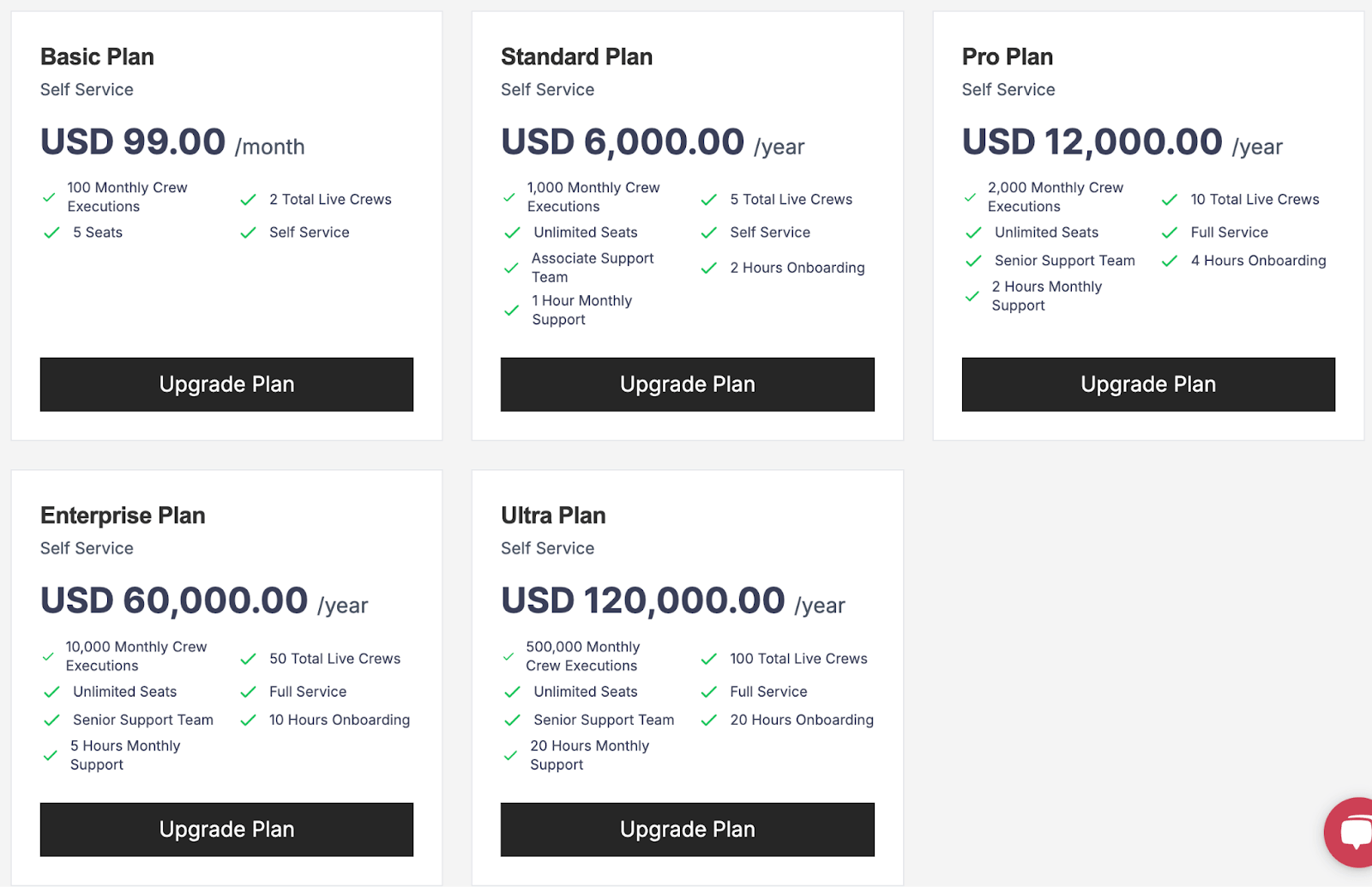

Pricing

CrewAI’s core framework is also MIT-licensed and open-source. But the platform offers several paid plans to choose from:

- Basic: $99 per month

- Standard: $6,000 per year

- Pro: $12,000 per year

- Enterprise: $60,000 per year

- Ultra: $120,000 per year

📚 Is CrewAI worth investing in? Read the CrewAI pricing guide to know.

Pros and Cons

CrewAI’s main advantage is clarity and structure for multi-step tasks. The role-based abstraction makes it easy to design complex collaborative workflows. YAML-based configuration helps teams set up agents without deep coding.

On the flip side, it’s less suited for completely open-ended agent discussions. In practice, CrewAI is best for complex business workflows with clearly defined subtasks, e.g., report generation and structured decision-making.

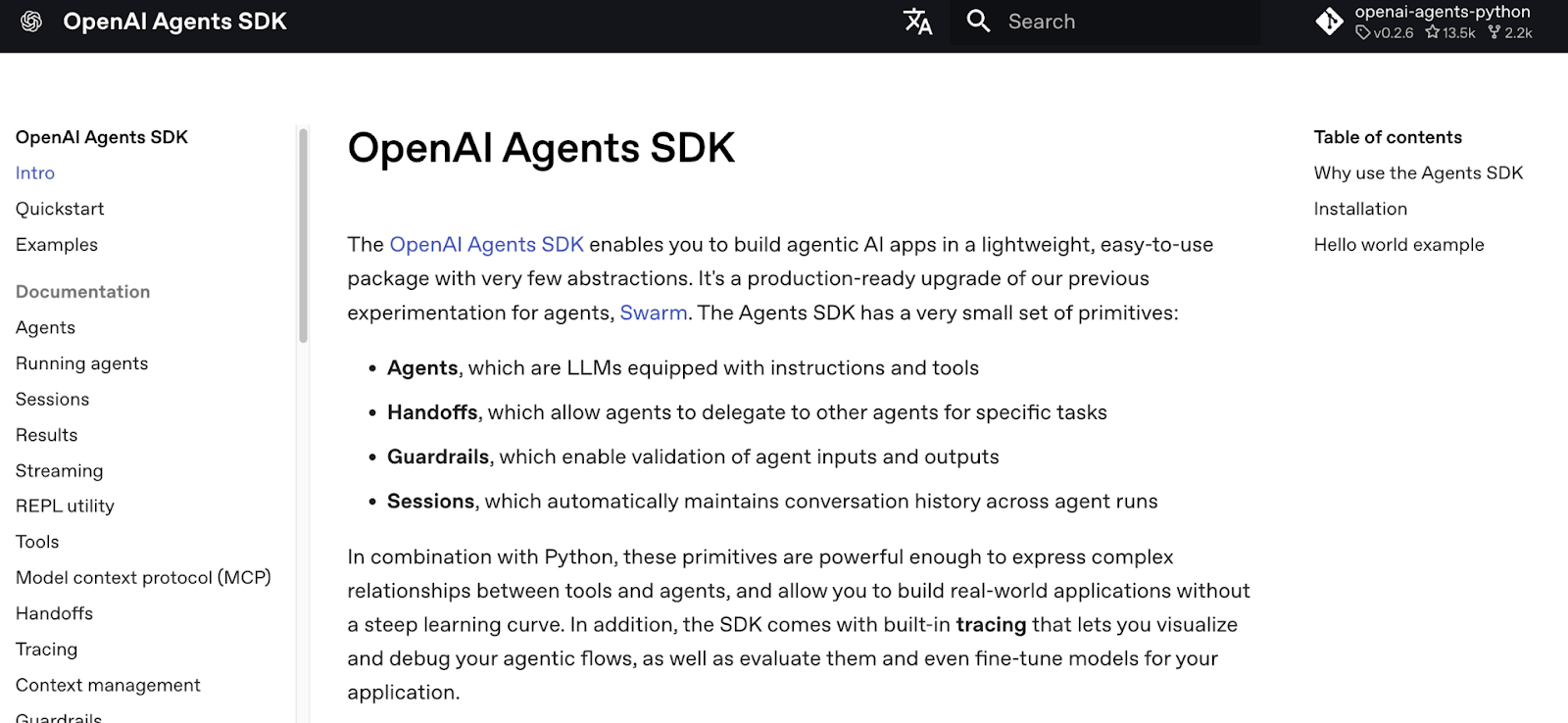

5. OpenAI Agents SDK

The OpenAI Agents SDK is OpenAI’s new Python library for building agentic applications. It provides simple primitives - Agents, Handoffs, Guardrails, Sessions - to chain LLMs with tools in lightweight workflows.

It is an excellent alternative to Semantic Kernel for developers who want to stay close to the OpenAI ecosystem and prefer a Python-first orchestration approach.

Features

- Use vanilla Python for control flow. You create agents (LLMs with instructions and tools) and call them directly. There’s an agent loop that manages calling tools and LLMs until termination.

- Uses a powerful Handoffs feature that allows one agent to delegate tasks to another and create a coordinated workflow.

- Attach guardrails that run in parallel. If a guardrail fails, it can stop the agent early.

- Supports session objects to automatically handle chat history between runs and give each agent a persistent conversation memory with minimal work.

- Built-in tracing to visualize, debug, and monitor workflows, which integrates with OpenAI's evaluation and fine-tuning tools.
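The agent loop and handoff concepts can be pictured with a small, dependency-free sketch. It does not use the OpenAI Agents SDK: `ToyAgent`, `Step`, `run`, and the hard-coded policies (standing in for LLM decisions) are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Step:
    kind: str   # "tool", "handoff", or "final"
    value: str  # tool name, target agent name, or final answer
    arg: str = ""


@dataclass
class ToyAgent:
    name: str
    policy: Callable[[List[str]], Step]  # stand-in for the LLM's decision
    tools: Dict[str, Callable[[str], str]] = field(default_factory=dict)


def run(
    agents: Dict[str, ToyAgent], start: str, user_input: str, max_steps: int = 10
) -> Tuple[str, List[str]]:
    """Loop until the active agent returns a final answer or steps run out."""
    agent = agents[start]
    log = [f"user: {user_input}"]
    for _ in range(max_steps):
        step = agent.policy(log)
        if step.kind == "final":
            return step.value, log
        if step.kind == "handoff":
            log.append(f"handoff: {agent.name} -> {step.value}")
            agent = agents[step.value]
        elif step.kind == "tool":
            result = agent.tools[step.value](step.arg)
            log.append(f"tool {step.value}: {result}")
    raise RuntimeError("agent loop did not terminate")


def triage_policy(log: List[str]) -> Step:
    return Step("handoff", "billing")


def billing_policy(log: List[str]) -> Step:
    tool_lines = [line for line in log if line.startswith("tool ")]
    if not tool_lines:
        return Step("tool", "lookup_invoice", "INV-1")
    return Step("final", f"Done: {tool_lines[-1]}")


agents = {
    "triage": ToyAgent("triage", triage_policy),
    "billing": ToyAgent(
        "billing", billing_policy, {"lookup_invoice": lambda inv: f"{inv} is paid"}
    ),
}
answer, log = run(agents, "triage", "Was my invoice paid?")
print(answer)
```

The `max_steps` cap plays the role of a safety limit: without it, an agent that keeps calling tools would loop indefinitely.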

Pricing

The SDK is open source; usage is billed according to OpenAI pricing for models and any built-in tools you enable.

Pros and Cons

The major advantage of OpenAI Agents SDK is its simplicity and integration. For teams already using OpenAI, it plugs into existing workflows naturally. It also has strong built-in tracing and ties into OpenAI’s evaluation suite.

However, it’s built primarily around OpenAI’s models and platform (other LLM providers are reachable through compatible endpoints or adapters, but they are not the focus) and lacks advanced orchestration controls.

That being said, it’s great for Python developers who want a quick agent setup with OpenAI models, but larger applications may outgrow it.

6. n8n

n8n is a low-code workflow automation platform that uses a visual, node-based interface to connect applications and services. It’s an ideal Semantic Kernel alternative for use cases where AI agents need to be embedded within broader business process workflows.

Features

- Provides a visual drag-and-drop canvas where each node represents an action, for example, calling an LLM or reading a database. Logic is controlled through conditional branches and loops.

- Connect 1000+ apps and services into your LLM workflows. You can pull data from a PostgreSQL DB, feed it to an LLM, then post the result to Slack, all in one flow.

- Natively supports creating if/else branches, loops, triggers, and manual approval steps where the workflow pauses and waits for human input.

- n8n can schedule workflows or run them on-demand with detailed logging for each node's execution history.

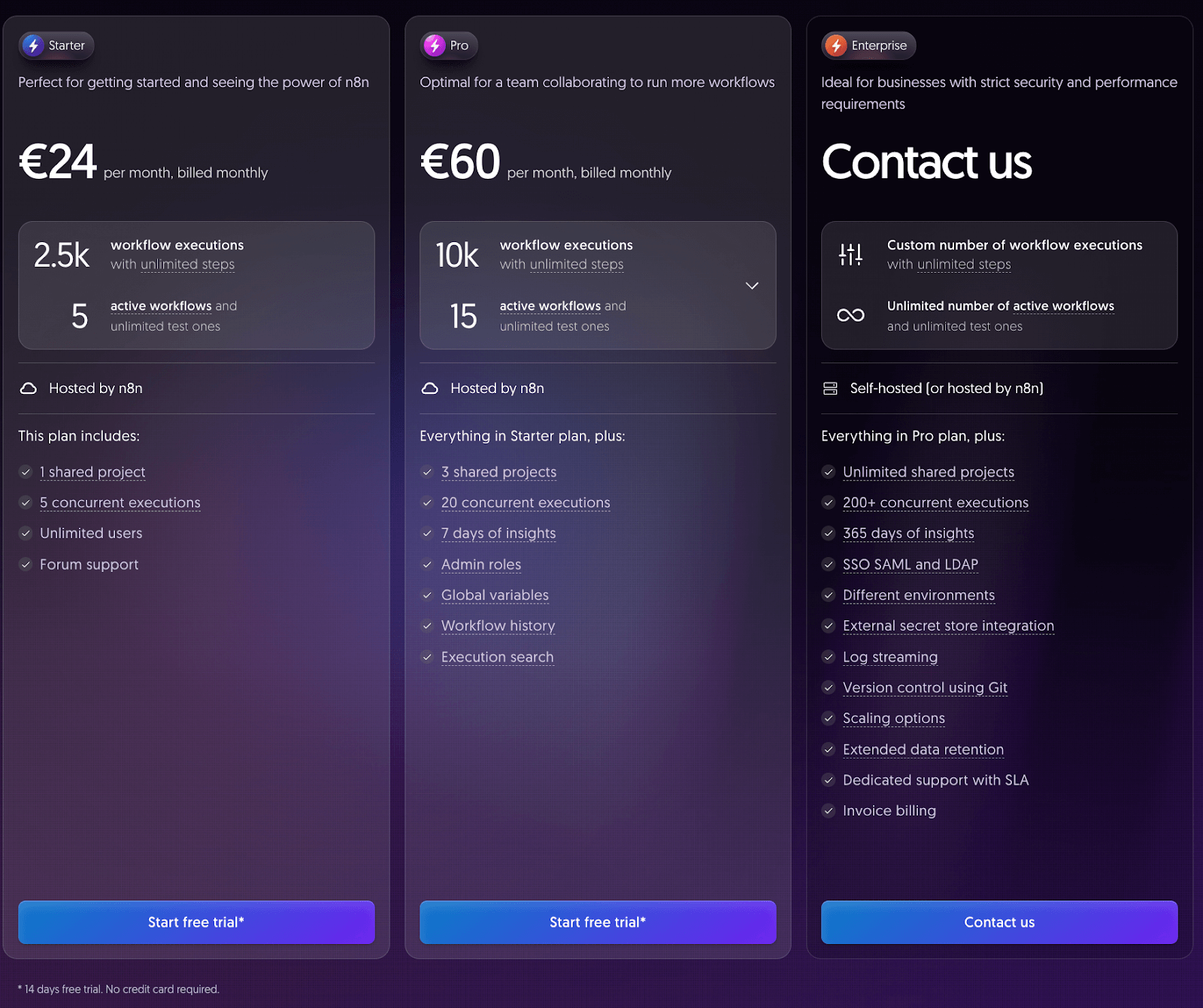

Pricing

n8n offers three paid plans to choose from. Each plan comes with a 14-day free trial, no credit card required.

- Starter: €24 per month. 2.5k workflow executions and 5 active workflows.

- Pro: €60 per month. 10k workflow executions and 15 active workflows.

- Enterprise: Custom pricing. Custom number of workflow executions and infinite active workflows.

👀 Note: n8n also has a Community edition - a basic version of n8n that’s available on GitHub.

Pros and Cons

n8n’s visual interface makes it highly accessible for both technical and non-technical users. Its vast library of connectors lets teams quickly prototype AI-enabled automations without heavy coding. Also, the pay-per-execution pricing is cost-efficient if agents run infrequently.

On the downside, n8n is not an agent-specific framework: it has no built-in ‘intent’ or multi-agent support. Complex agent logic must be manually coded into flows. Also, as a workflow engine, it does not provide native RAG memory – you’d have to manage that yourself.

7. Langflow

Langflow is an open-source visual interface built on LangChain. It provides a drag-and-drop IDE for connecting LangChain pipelines with LLMs, tools, and prompts.

It supports all major LLMs and vector DBs, which makes it a strong alternative for teams that prioritize rapid prototyping and visual development of agentic flows.

Features

- Langflow’s UI lets you create and modify AI workflows on a visual canvas. You can drag and drop components like agents, embeddings, tools, etc., onto the canvas to build complex chains and agentic systems without writing extensive code.

- Pre-built nodes for LLMs, vector stores, and data sources - S3, YouTube, and databases. If you don’t find what you need, you can drop in custom Python code.

- Flows can be easily exported and deployed as APIs, with both self-hosting and a free cloud platform available for deployment.

- Integrates with various vector stores and supports building RAG applications visually, allowing agents to access external knowledge bases.

Pricing

The Langflow software is free and open-source under an MIT license. There are no direct licensing fees or subscriptions.

The costs associated with using Langflow are indirect and stem from the infrastructure you provision to run it:

- API Usage: You pay your chosen providers for any LLM API calls or other external services your flows use.

- Cloud Hosting: When deploying Langflow from a cloud marketplace (e.g., AWS, Azure), you are billed for the underlying compute, memory, and storage resources.

The total cost of ownership is therefore a function of the infrastructure and API services you choose to manage yourself.

Pros and Cons

Langflow’s appeal is in visual development. It lowers the barrier to entry for LangChain workflows. The library of nodes and templates helps with RAG setups and agent flows.

However, since it sits on LangChain, it inherits LangChain’s complexity and instability. For production, you still need to test and possibly tune the underlying code.

8. Agno

Agno (formerly Phidata) is an open-source, full-stack framework for building AI agent systems. It comes with a web UI for chatting with and observing agents, and supports multi-agent systems with solid reasoning and shared context.

Features

- Supports ‘Agent Teams’ that can collaborate using chain-of-thought reasoning models or specialized reasoning tools.

- Integrates with over 23 LLM providers, like OpenAI, Anthropic, Mistral, etc., and is multi-modal.

- Built-in storage drivers and connectors to 20+ vector DBs for fast retrieval at runtime. Agents remember past interactions in structured form.

- Supports asynchronous execution for throughput, with agents initializing in microseconds and using very little memory for simple tasks.

Pricing

Agno is completely open-source (licensed under MPL 2.0) and free to use. You install the Python package and run it on your own hardware or cloud.

The platform also offers an ‘Agno Pro’ plan free of charge for students, educators, and startups with less than $2 million in funding. For more information or to access this plan, contact the Agno team.

Pros and Cons

Agno packs sophisticated features while remaining lightweight. It’s well-suited for teams needing a production-ready, high-throughput agent system with rich capabilities. The built-in observability and FastAPI deployment are bonuses.

As for its drawback, Agno is relatively new and has a smaller community than LangChain and other SK alternatives.

How ZenML Helps In Closing the Outer Loop Around Your Agents

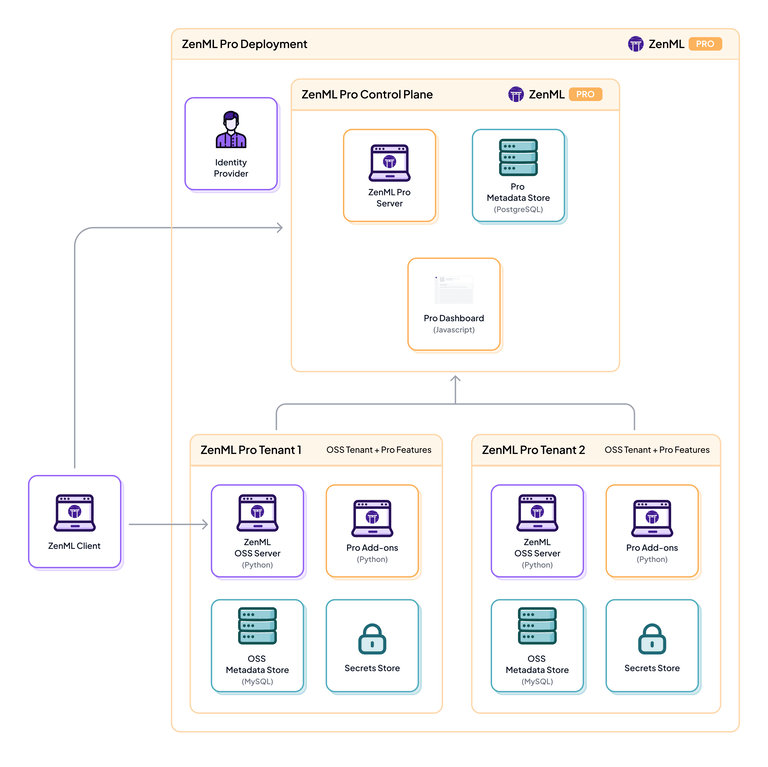

ZenML complements each of these Semantic Kernel alternatives by managing the outer loop of AI pipelines, rather than the inner agent logic.

The outer loop deploys, monitors, and manages the agent’s entire lifecycle. This is where an MLOps + LLMOps framework like ZenML provides the critical missing piece.

ZenML orchestrates entire pipelines end-to-end. In practice, that means ZenML can run your data ingestion, model fine-tuning, agent execution, evaluation, and deployment steps in a single reproducible workflow.

Think of it as a bridge combining agent-authoring tools with the rest of the MLOps stack.

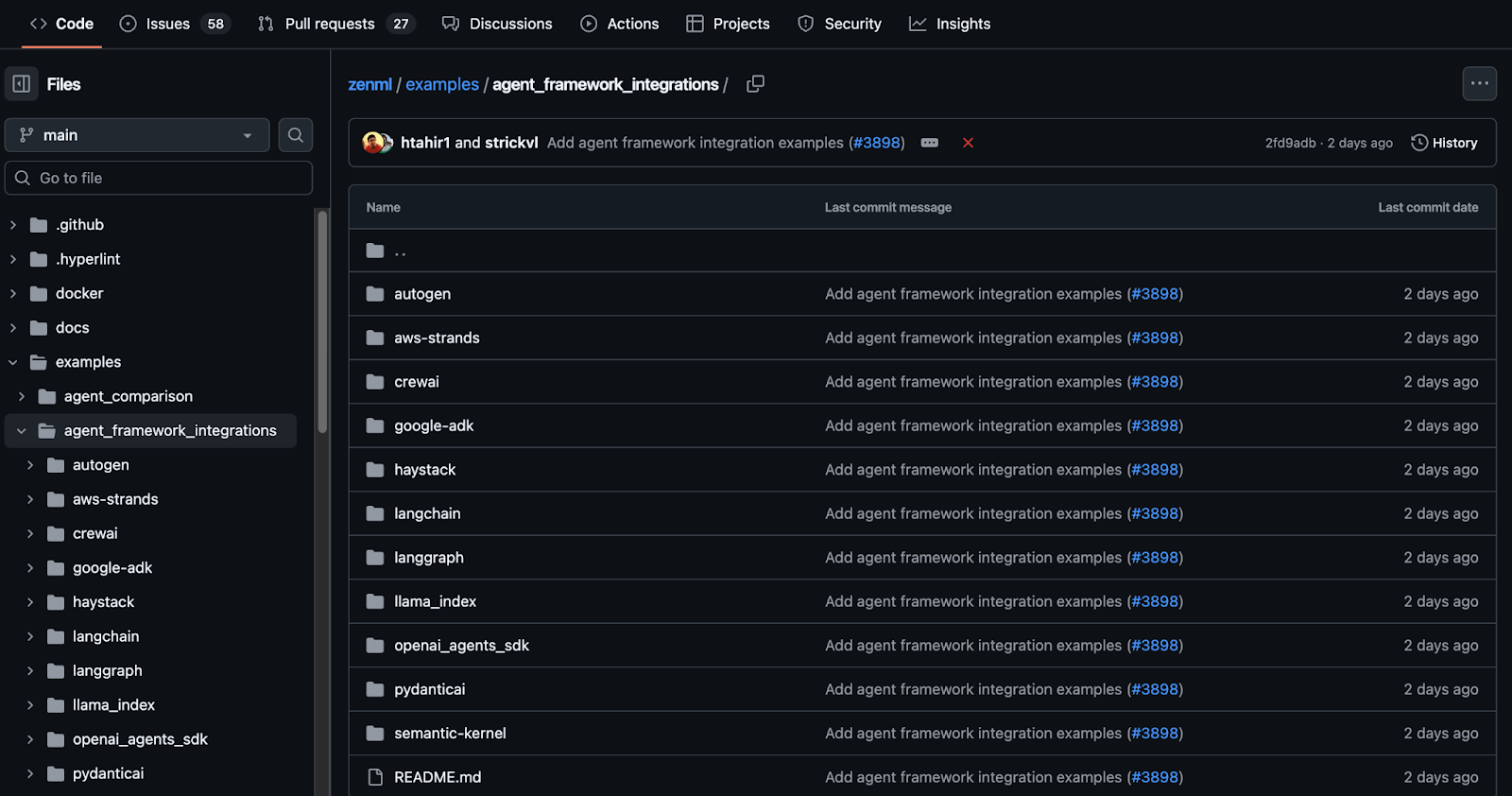

A single ZenML pipeline can use LlamaIndex for RAG in one step, execute a CrewAI agent in the next, and use MLflow for tracking, all running on a Kubernetes cluster. This prevents vendor lock-in and allows teams to use the best tool for each part of the job.

ZenML is not an agent framework, but it is built to complement Semantic Kernel or any of its alternatives by providing end-to-end lifecycle management for your agents. Here are a few ways our platform helps close the outer loop:

1. Pipeline Orchestration Beyond the Agent

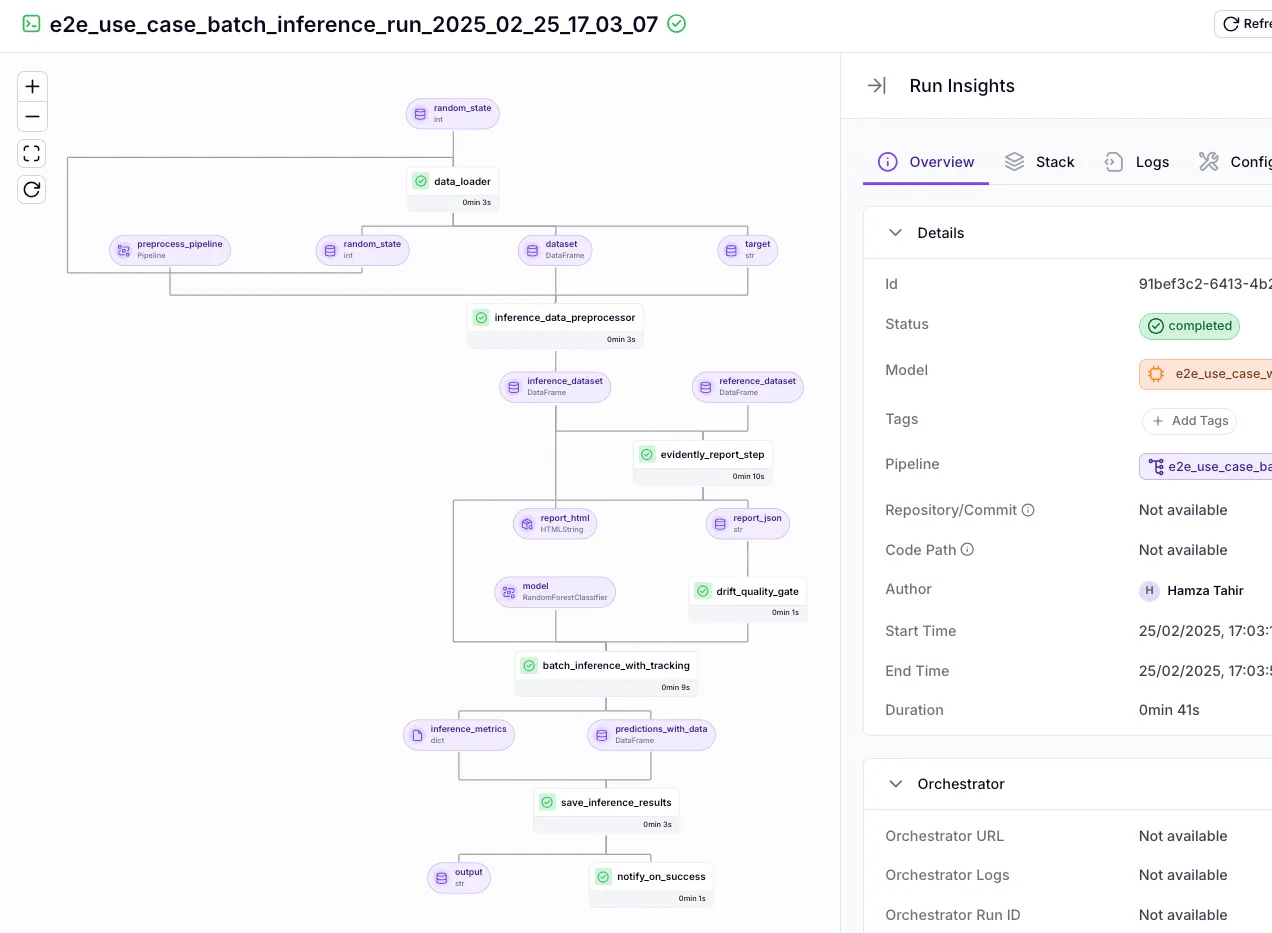

With ZenML, an SK-based agent becomes one step in a reproducible pipeline. You define the full workflow: data prep → agent invocation → post-processing → notifications or handoffs.

Pipelines run locally for development, then execute the same code on production backends like Kubernetes, Airflow, or cloud runners without rewriting steps. Scheduling, retries, caching, and alerts are first-class, so your agent isn’t a one-off script but part of an owned system.
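To show what retries and caching around an agent step amount to, here is a plain-Python sketch. It is a conceptual illustration only and does not use ZenML's API: `run_step`, the in-memory `_cache`, and the simulated flaky agent are all assumptions.

```python
import hashlib
import json
from typing import Any, Callable, Dict

_cache: Dict[str, Any] = {}


def run_step(fn: Callable[..., Any], *, retries: int = 2, **inputs: Any) -> Any:
    """Run `fn` with retries, reusing a cached result for identical inputs."""
    payload = json.dumps([fn.__name__, inputs], sort_keys=True)
    key = hashlib.sha256(payload.encode("utf-8")).hexdigest()
    if key in _cache:
        return _cache[key]

    last_error = None
    for _ in range(retries + 1):
        try:
            result = fn(**inputs)
        except Exception as exc:  # a real system would catch narrower errors
            last_error = exc
            continue
        _cache[key] = result
        return result
    raise RuntimeError(f"{fn.__name__} failed after {retries + 1} attempts") from last_error


calls = {"agent": 0}


def prepare(question: str) -> str:
    return question.strip().lower()


def call_agent(prompt: str) -> str:
    calls["agent"] += 1
    if calls["agent"] == 1:
        raise TimeoutError("simulated transient failure")
    return f"answer to: {prompt}"


def pipeline(question: str) -> str:
    prompt = run_step(prepare, question=question)
    return run_step(call_agent, prompt=prompt)


first = pipeline("  What changed?  ")
second = pipeline("  What changed?  ")
print(first, calls["agent"])
```

The first run survives one transient failure, and the second run never calls the agent at all because both steps are served from the cache.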

2. Unified Visibility and Lineage

ZenML records every run: inputs, outputs, artifacts, and configurations across steps. You can trace a result back to the exact agent code, prompt version, model, and data that produced it.

This lineage helps with debugging, audits, and incident reviews. A side-by-side run comparison makes it clear when a new prompt or model version changes behavior, and where to roll back.
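As a rough picture of what run lineage captures, the sketch below fingerprints the prompt and data behind each run and reports which inputs changed between two runs. The `RunRecord` fields and the `diff_runs` helper are hypothetical and much simpler than what a tracking system stores.

```python
import hashlib
from dataclasses import asdict, dataclass
from typing import Dict, Tuple


def fingerprint(text: str) -> str:
    """Short, stable content hash used to identify a prompt or dataset."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()[:12]


@dataclass(frozen=True)
class RunRecord:
    run_id: str
    model: str
    prompt_hash: str
    data_hash: str
    output: str


def diff_runs(a: RunRecord, b: RunRecord) -> Dict[str, Tuple[str, str]]:
    """Return the lineage fields that differ between two runs."""
    da, db = asdict(a), asdict(b)
    fields = ("model", "prompt_hash", "data_hash")
    return {k: (da[k], db[k]) for k in fields if da[k] != db[k]}


data = "ticket-1, ticket-2"
run_a = RunRecord(
    "run-a", "model-x", fingerprint("Summarize the tickets."), fingerprint(data), "ok"
)
run_b = RunRecord(
    "run-b", "model-x", fingerprint("Summarize briefly."), fingerprint(data), "short"
)
changed = diff_runs(run_a, run_b)
print(list(changed))
```

Here only the prompt differs between the two runs, so a behavior change can be attributed to the prompt edit rather than to the model or the data.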

3. Continuous Evaluation and Feedback

Agent behavior drifts. ZenML lets you bake evaluations into the pipeline so each run gets scored against test sets, rules, or an LLM judge.

If quality drops or token costs spike, the pipeline can flag, alert, or trigger a follow-up job like prompt rollback or retraining.
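A quality-and-cost gate of this kind can be sketched in a few lines. The thresholds, the `RunMetrics` fields, and the alert names are assumptions for illustration; in a real pipeline the returned alerts would feed whatever alerting or rollback step you have.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RunMetrics:
    accuracy: float   # share of test cases passed, 0.0 to 1.0
    tokens_used: int  # total tokens consumed by the run


def evaluate_gate(
    current: RunMetrics,
    baseline: RunMetrics,
    max_accuracy_drop: float = 0.05,
    max_token_growth: float = 1.5,
) -> List[str]:
    """Compare a run against a baseline and list any triggered alerts."""
    alerts: List[str] = []
    if baseline.accuracy - current.accuracy > max_accuracy_drop:
        alerts.append("quality_drop")
    if current.tokens_used > baseline.tokens_used * max_token_growth:
        alerts.append("token_spike")
    return alerts


baseline = RunMetrics(accuracy=0.90, tokens_used=10_000)
degraded = evaluate_gate(RunMetrics(accuracy=0.82, tokens_used=18_000), baseline)
healthy = evaluate_gate(RunMetrics(accuracy=0.89, tokens_used=11_000), baseline)
print(degraded, healthy)
```

An empty list means the run passes; any alert can be used to block promotion or trigger a follow-up job.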

Over time, this closes the loop: you don’t just ship an agent, you measure it, improve it, and keep it accountable inside a governed workflow.

👀 Note: At ZenML, we have built several agent workflow integrations with tools like Semantic Kernel, LangGraph, LlamaIndex, and more. We are actively shipping new integrations that you can find on this GitHub page: ZenML Agent Workflow Integrations.

Bottom line: Use your preferred agent framework for inner-loop logic, and pair it with ZenML to own the outer loop - pipelines, lineage, evaluations, and dependable deployment.

Which Semantic Kernel Alternative is Right for You?

There is no single best alternative to Semantic Kernel; the right choice depends entirely on your specific use case and technical requirements. The landscape of agentic AI frameworks is diverse, with each tool offering a unique philosophy and feature set.

Based on our analysis, here are our top recommendations:

- For teams that need explicit, debuggable control over complex, stateful workflows, LangGraph is the ideal choice due to its transparent graph-based architecture.

- For applications that require deep, data-driven reasoning and advanced RAG capabilities, LlamaIndex provides an unparalleled data framework.

- For flexible, conversational research and open-ended problem-solving where agents must collaborate dynamically, Microsoft AutoGen offers a powerful conversation-driven model.

- For building agent teams with intuitive, role-based collaboration that mirrors human team dynamics, CrewAI provides a highly effective and structured approach.

If you’re interested in taking your AI agent projects to the next level, consider joining the ZenML waitlist. We’re building out first-class support for agentic frameworks (like LangGraph, CrewAI, and more) inside ZenML, and we’d love early feedback from users pushing the boundaries of what AI agents can do. With ZenML, you can seamlessly integrate whichever agent framework you choose into robust, production-grade workflows. Join our waitlist to get started.👇