Weights & Biases has established itself as a widely adopted platform for ML experiment tracking. It empowers ML engineers and data scientists to log, visualize, and compare their model training runs, hyperparameters, and metrics.

However, despite its modern features, many teams run into specific challenges that lead them to look for a Weights & Biases alternative.

In this post, we explore 7 Weights & Biases alternatives designed to address the pain points ML teams face.

TL;DR

- Why Look for Alternatives: Many teams seek WandB alternatives due to concerns over escalating costs, performance overhead, and UI latency, as well as issues with unreliable data uploads, syncs, or rate limits.

- Who Should Care: ML teams growing beyond basic experimentation, especially those training many models in parallel or managing sensitive data on-prem, need predictable pricing and scalability.

- What to Expect: We’ll evaluate Total Cost of Ownership (TCO), deployment flexibility, and scalability. Then we compare 7 alternatives (open-source and managed) with intros, feature lists, pricing, and pros/cons.

The Need for a Weights & Biases Alternative

Even with its popularity, Weights & Biases presents several challenges for machine learning teams, particularly as operations scale and demands intensify.

Reason 1. Cost and Licensing Plan

W&B charges users by ‘tracked hours’ of training, meaning running multiple GPUs in parallel quickly multiplies billed time. In our research, we found that 5,000 ‘tracked hours’ (the default Teams plan) can be burned in a day on a small GPU cluster.

Each extra GPU-hour costs $1 under the Teams plan, so running concurrent experiments can double or triple costs relative to real time.
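To make the arithmetic concrete, here is a minimal sketch of how tracked hours could add up. It assumes each concurrently tracked run accrues one tracked hour per wall-clock hour, and it reuses the figures quoted above (5,000 included hours, $1 per extra hour). The function names are illustrative, and the actual metering rules and prices should be checked against W&B's current plans.

```python
def tracked_hours(concurrent_runs: int, wall_clock_hours: float) -> float:
    """Tracked hours accrued when parallel runs are each metered (assumed model)."""
    return concurrent_runs * wall_clock_hours


def overage_cost(total_tracked: float, included: float = 5_000, rate: float = 1.0) -> float:
    """Cost of the hours beyond the included quota, using the figures quoted in this post."""
    return max(0.0, total_tracked - included) * rate


def days_until_quota_spent(concurrent_runs: int, included: float = 5_000) -> float:
    """Days of round-the-clock training before the included quota is used up."""
    return included / tracked_hours(concurrent_runs, 24)


if __name__ == "__main__":
    # Hypothetical workload: 16 runs training in parallel for 30 days.
    month = tracked_hours(concurrent_runs=16, wall_clock_hours=24 * 30)
    print(f"tracked hours in 30 days: {month:,.0f}")
    print(f"overage at $1 per hour: ${overage_cost(month):,.0f}")
    print(f"quota lasts {days_until_quota_spent(16):.1f} days")
```

The point of the sketch is that billed time scales with parallelism, not with calendar time, so doubling the number of concurrent runs halves how long the quota lasts.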

For companies with larger teams, even the Enterprise plan (billed per user) will cost you $200 to $400 per user per month, which grows expensive if many team members only occasionally need tracking access.

Reason 2. Performance Overhead & UI Latency

W&B’s UI and APIs struggle with very large experiments. Logging heavy data can slow down the training process, with uploads often stalling for tens of seconds after each run.

The web UI can become sluggish when displaying large numbers of runs or metrics. Retrieving data via the W&B API can also be extremely slow on large experiment sets. These delays frustrate teams running many parallel jobs or monitoring long-running jobs.

Reason 3. Unreliable Uploads, Syncs & Rate-Limits

With WandB, you might frequently encounter issues with data synchronization, like ‘Timeout while syncing’ and ‘Failed to upload file’ errors. These problems are often attributed to large file uploads, slow or unstable network connections, or server-side issues.

A significant concern for ML engineers is upload threads blocking the training process for hours. This is especially costly in hyperparameter searches run with sweeps on cloud GPUs, where every stalled hour is still billed, making it both an operational and a financial problem.

Evaluation Criteria

When evaluating alternatives to Weights & Biases, ML engineers and data scientists must look beyond basic feature parity. The following criteria are crucial for selecting a solution that truly supports production-grade MLOps.

1. Total Cost of Ownership (TCO) & Pricing Predictability

Total Cost of Ownership (TCO) encompasses more than just the initial licensing or subscription fees. It includes ongoing operational costs like infrastructure, maintenance, support, training for teams, and potential costs associated with downtime or inefficiencies.

This means that a seemingly inexpensive upfront solution could become costly due to high operational overhead or integration challenges, directly impacting the overall return on investment for an MLOps platform.
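As a rough illustration, TCO can be sketched as license fees plus infrastructure plus the engineer time spent operating the tool, summed over the planning horizon. The class, the field names, and every number below are made-up placeholders, not vendor figures; plug in your own estimates.

```python
from dataclasses import dataclass


@dataclass
class ToolCosts:
    """Cost inputs for one tool; every figure is a placeholder you supply."""
    license_per_year: float            # subscription or seat fees
    infrastructure_per_year: float     # servers, storage, network
    maintenance_hours_per_year: float  # engineer time spent operating the tool
    engineer_hourly_rate: float
    onboarding_one_off: float = 0.0    # training and migration, paid once


def total_cost_of_ownership(costs: ToolCosts, years: int) -> float:
    """One-off onboarding plus recurring yearly costs over the given horizon."""
    yearly = (
        costs.license_per_year
        + costs.infrastructure_per_year
        + costs.maintenance_hours_per_year * costs.engineer_hourly_rate
    )
    return costs.onboarding_one_off + yearly * years


if __name__ == "__main__":
    # Hypothetical inputs: a managed service versus a "free" self-hosted tool.
    managed = ToolCosts(24_000, 0, 20, 100, onboarding_one_off=2_000)
    self_hosted = ToolCosts(0, 6_000, 200, 100, onboarding_one_off=8_000)
    print("managed, 3 years:", total_cost_of_ownership(managed, 3))
    print("self-hosted, 3 years:", total_cost_of_ownership(self_hosted, 3))
```

With these invented inputs the license-free option ends up costing more over three years, which is exactly the kind of effect a license-only comparison hides.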

Pricing predictability is vital for budgeting and long-term financial planning. Solutions with transparent, usage-based models or clear tier structures are preferable to those with opaque or escalating costs.

2. Data Governance and Deployment Flexibility

Robust data governance features, including data lineage tracking, strict access controls (Role-Based Access Control), and compliance certifications (e.g., SOC2, GDPR), are paramount for enterprise-level ML.

The increasing regulatory landscape and enterprise security demands make data governance and flexible deployment non-negotiable for MLOps tools.

3. Scalability and Reliability Under Heavy Load

An effective experiment tracking solution must scale effortlessly to handle growing data volumes, increasing numbers of experiments, and a high concurrency of users without degrading performance.

This means that beyond merely handling large data, true scalability and reliability in experiment tracking involve maintaining performance under concurrent, high-volume logging and ensuring zero data loss or blocking during critical operations.
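One common way to keep logging from blocking training is to hand records to a background thread. The sketch below is a generic illustration of that pattern using only the standard library; `BackgroundLogger` and its `upload` callable are hypothetical names and do not describe how any tool in this post is implemented.

```python
import queue
import threading


class BackgroundLogger:
    """Hypothetical logger: log() only enqueues; a worker thread does the slow upload."""

    _STOP = object()  # sentinel that tells the worker to exit

    def __init__(self, upload):
        self._upload = upload        # callable that sends one record somewhere
        self._queue = queue.Queue()  # unbounded, so log() never blocks the training loop
        self._worker = threading.Thread(target=self._drain, daemon=True)
        self._worker.start()

    def log(self, record: dict) -> None:
        self._queue.put(record)

    def _drain(self) -> None:
        while True:
            item = self._queue.get()
            if item is self._STOP:
                break
            try:
                self._upload(item)
            except Exception as exc:
                # A failed upload must not kill the worker. A real system would
                # retry or spool to disk here instead of skipping the record.
                print(f"upload failed, record skipped: {exc}")

    def close(self) -> None:
        """Flush everything still queued, then stop the worker."""
        self._queue.put(self._STOP)
        self._worker.join()


if __name__ == "__main__":
    sent = []
    logger = BackgroundLogger(upload=sent.append)
    for step in range(100):
        logger.log({"step": step, "loss": 1.0 / (step + 1)})
    logger.close()
    print(f"uploaded {len(sent)} records")
```

The unbounded queue trades memory for the guarantee that `log()` returns immediately; a bounded queue would cap memory but force a choice between blocking and dropping records.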

What are the Best Alternatives to Weights & Biases?

ML engineers and data scientists are often time-constrained and need to quickly assess multiple options. The following comparative table provides a rapid overview and side-by-side comparison of specific features, pricing models, deployment options, and target use cases across all alternatives.

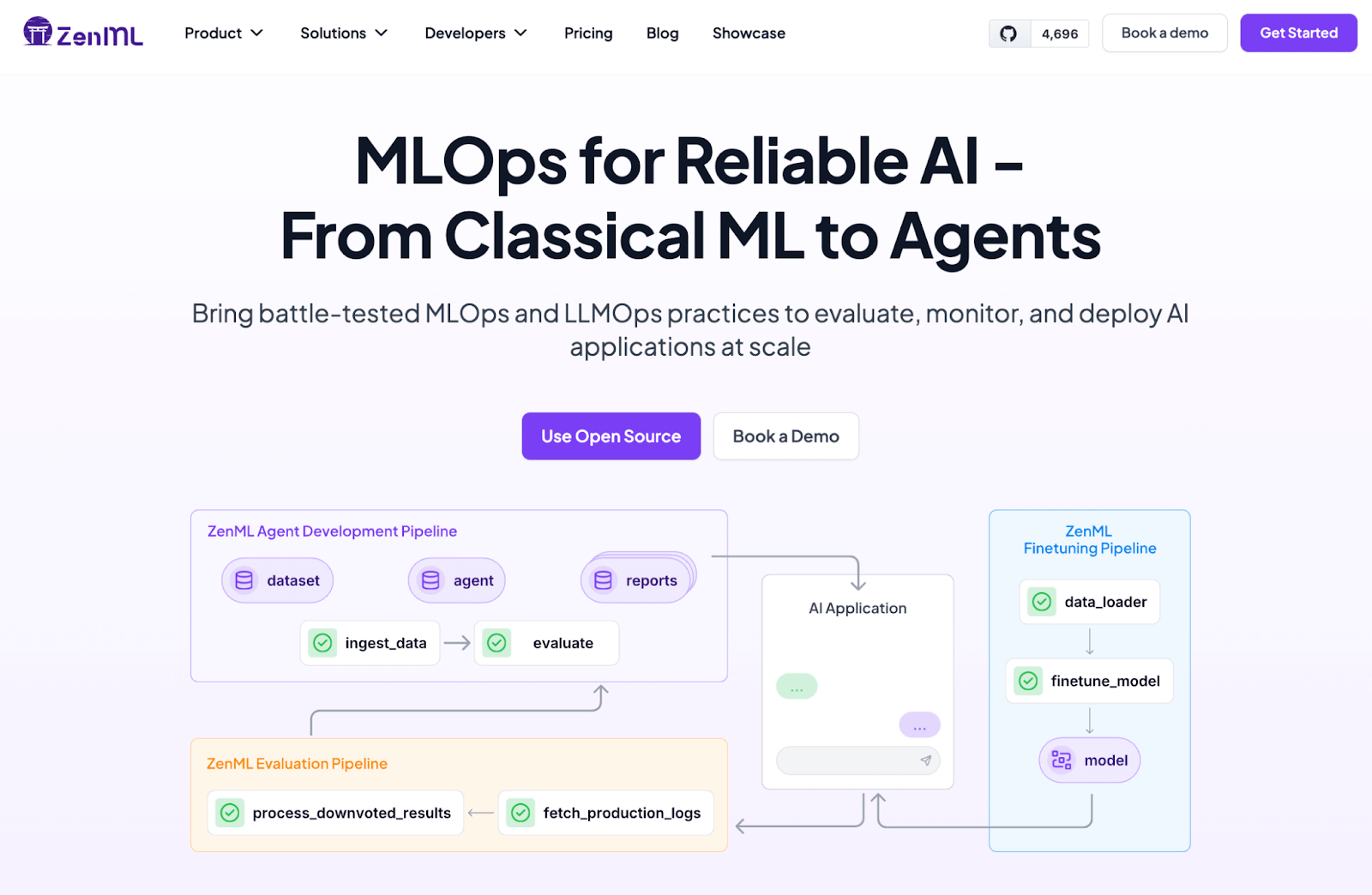

1. ZenML

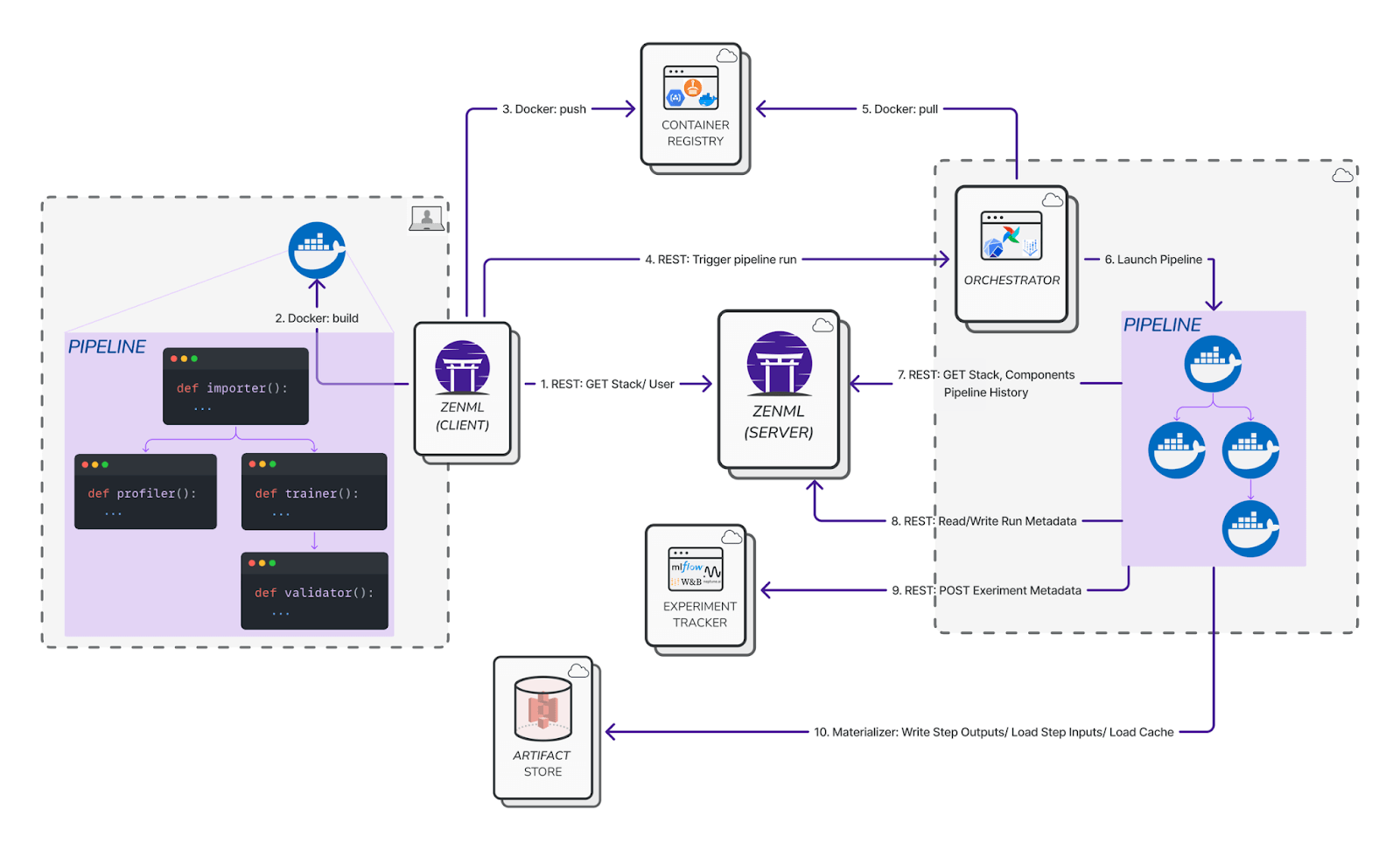

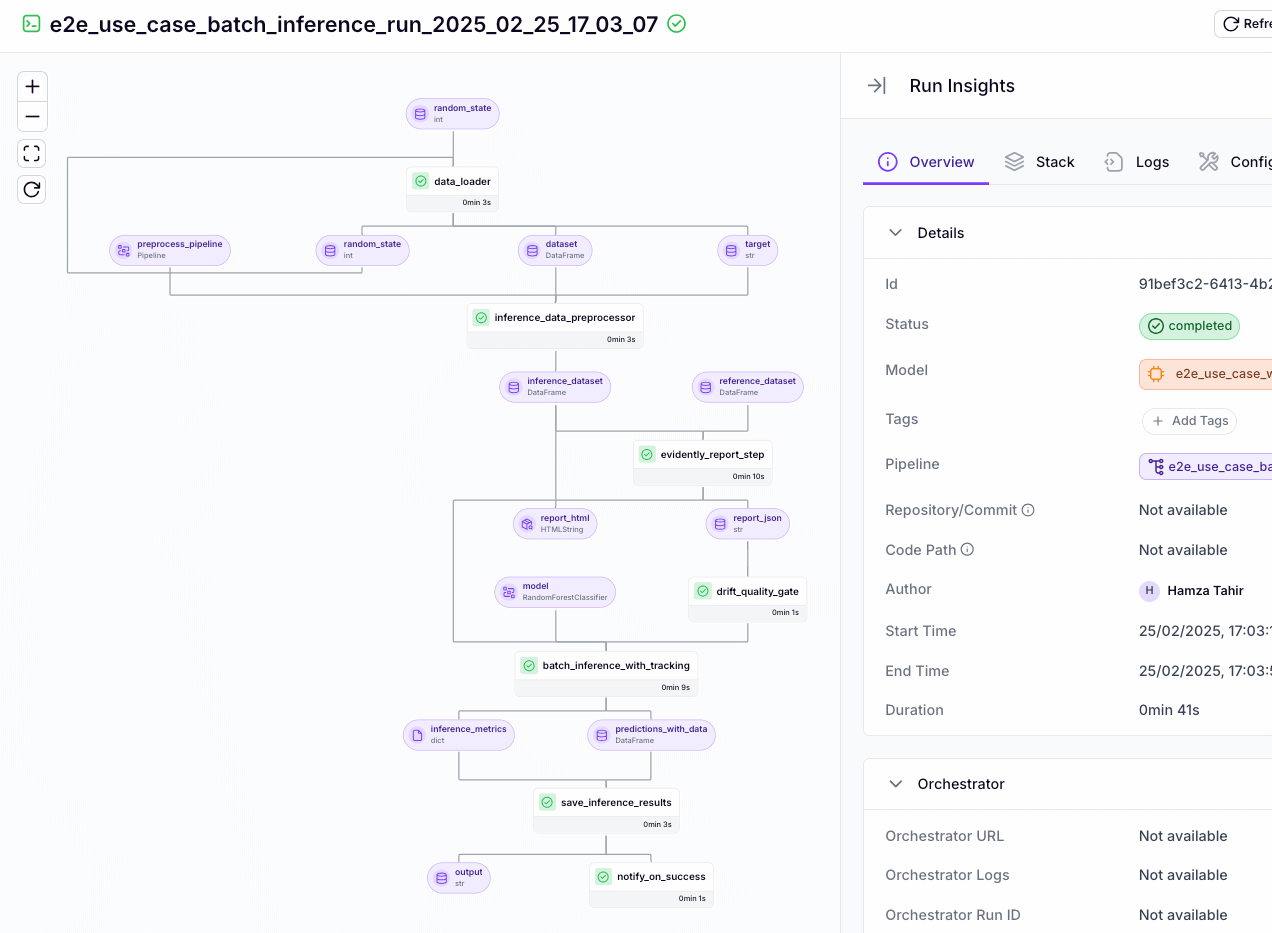

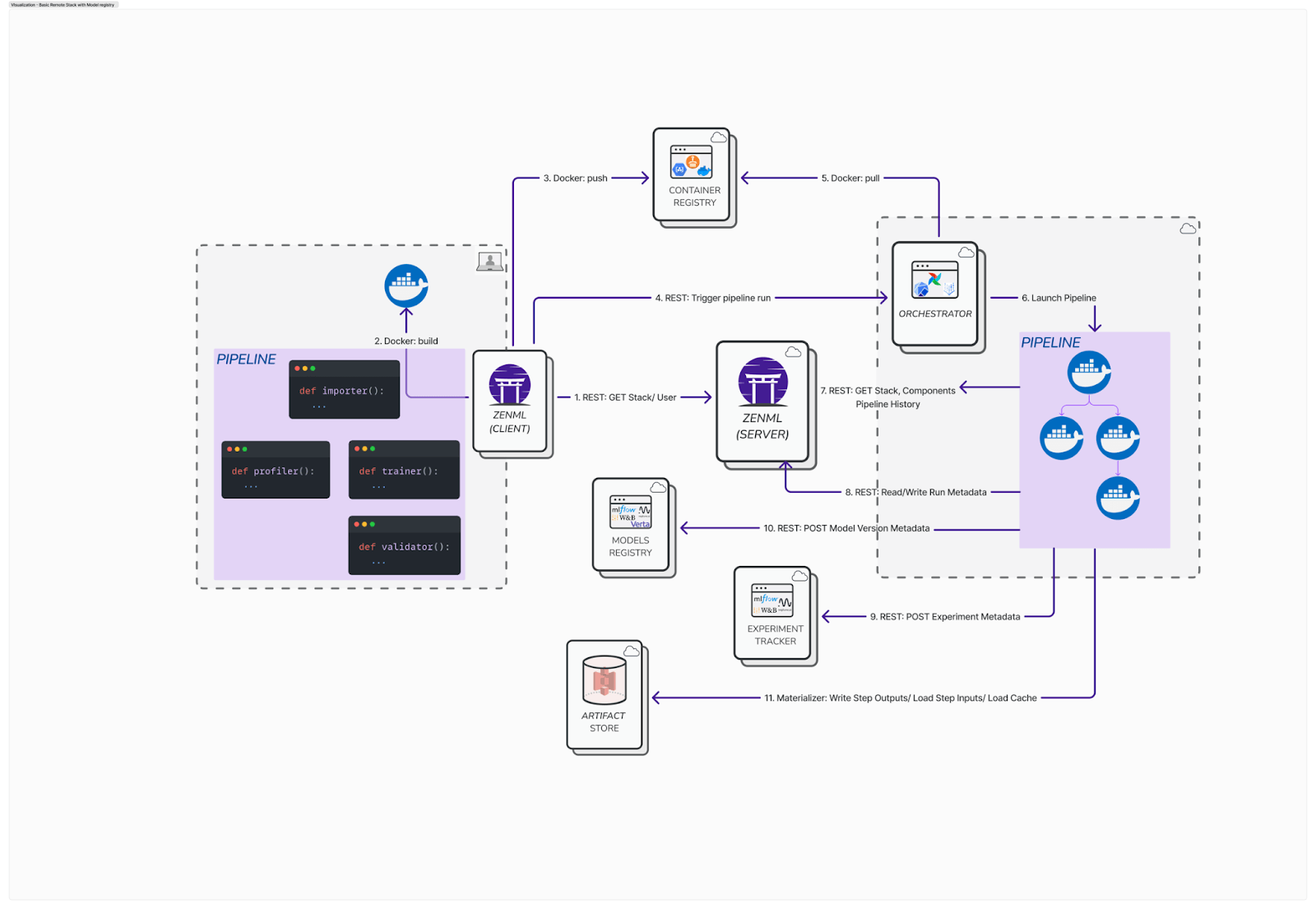

ZenML is an open-source MLOps framework that unifies pipeline orchestration with experiment tracking. Unlike W&B (which is model-centric), ZenML treats each pipeline run as an experiment and tracks metadata at every pipeline step.

Key Feature 1. Experiment Tracking and Run Comparison

ZenML integrates seamlessly with various experiment trackers, including WandB, MLflow, and TensorBoard, allowing users to log metrics, hyperparameters, and artifacts from any step within their ML pipelines.

This means users can leverage their preferred tracking tool while ZenML orchestrates the end-to-end workflow. This approach combines the benefits of specialized tracking tools with ZenML’s pipeline management capabilities.

The ZenML Pro dashboard offers advanced tools for comparing experiments, including Table View Comparisons for side-by-side analysis of metadata, configurations, and outcomes, and Parallel Coordinates Visualization for understanding complex relationships between parameters and results.

It supports comprehensive cross-pipeline run analysis, tracking numerical metadata, and sharing visualizations for collaborative insights. This lets your team analyze processing times, resource utilization, and data preprocessing statistics directly within the pipeline orchestration workflow.

Key Feature 2. Artifact Versioning and Lineage

ZenML automatically versions every artifact produced as an output from a pipeline step, whether it is a dataset, a model, or an evaluation report. This inherent versioning ensures full reproducibility and traceability across all ML workflows without requiring additional manual effort.

Creating an artifact in ZenML is straightforward: whatever a pipeline step returns is stored and versioned as an artifact.

This systematic tracking creates a comprehensive lineage for every artifact: which step and pipeline run produced it, what it depends on, and which subsequent steps consumed it. You can trace an artifact's origin, transformations, and usage across your ML workflows. This lineage is also fundamental to ZenML's caching capabilities, which avoid redundant computations when inputs remain unchanged.

If a step has been run previously with identical inputs, code, and configuration, ZenML reuses the outputs from that cached run, saving computation time and ensuring consistent results.
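The caching rule described above can be illustrated with a small, generic sketch: derive a key from the step's code, inputs, and configuration, and reuse the stored output when the key has been seen before. This is not ZenML's implementation; `cache_key` and `run_step` are hypothetical helpers, and hashing only the function's bytecode is a deliberate simplification.

```python
import hashlib
import json

_cache: dict[str, object] = {}


def cache_key(step_fn, inputs: dict, config: dict) -> str:
    """Hash the step's code, inputs, and config into one key."""
    digest = hashlib.sha256()
    digest.update(step_fn.__code__.co_code)  # bytecode only; ignores constants and dependencies
    digest.update(json.dumps(inputs, sort_keys=True).encode())
    digest.update(json.dumps(config, sort_keys=True).encode())
    return digest.hexdigest()


def run_step(step_fn, inputs: dict, config: dict):
    """Return (output, cache_hit); recompute only when the key is new."""
    key = cache_key(step_fn, inputs, config)
    if key in _cache:
        return _cache[key], True
    output = step_fn(**inputs, **config)
    _cache[key] = output
    return output, False


if __name__ == "__main__":
    def scale(values, factor):
        return [v * factor for v in values]

    print(run_step(scale, {"values": [1, 2]}, {"factor": 2}))  # computed
    print(run_step(scale, {"values": [1, 2]}, {"factor": 2}))  # served from cache
    print(run_step(scale, {"values": [1, 2]}, {"factor": 3}))  # config changed, recomputed
```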

Key Feature 3. Model Registry and Lifecycle Management

ZenML's Model Control Plane, available in ZenML Pro, provides a unified approach to model management, bringing together pipeline lineage, artifacts, and business context.

A ZenML Model is treated as a first-class entity, grouping relevant pipelines, artifacts, and metadata for a given ML problem. This goes beyond traditional model registries by unifying various aspects of the model lifecycle.

It offers built-in versioning and stage management, like staging, production, and archived stages, allowing each training run to produce a new Model Version automatically tracked with lineage to its data and code. This facilitates convenient model promotion triggers and provides a centralized view of all models, their training, deployments, and endpoints.
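To show what versioning with stage management means as a data structure, here is a minimal, generic sketch. It is not the ZenML Model Control Plane API: the `Model` and `ModelVersion` classes are hypothetical, and the rule that promoting a version archives the previous holder of that stage is an assumption made for the example.

```python
from dataclasses import dataclass, field

STAGES = ("none", "staging", "production", "archived")


@dataclass
class ModelVersion:
    number: int
    run_id: str          # the training run that produced this version
    stage: str = "none"


@dataclass
class Model:
    name: str
    versions: list[ModelVersion] = field(default_factory=list)

    def register(self, run_id: str) -> ModelVersion:
        """Each training run yields a new, sequentially numbered version."""
        version = ModelVersion(number=len(self.versions) + 1, run_id=run_id)
        self.versions.append(version)
        return version

    def promote(self, number: int, stage: str) -> None:
        """Move a version to a stage; assumed rule: one version per live stage."""
        if stage not in STAGES:
            raise ValueError(f"unknown stage: {stage}")
        matches = [v for v in self.versions if v.number == number]
        if not matches:
            raise KeyError(f"no version {number} for model {self.name}")
        target = matches[0]
        if stage in ("staging", "production"):
            for other in self.versions:
                if other is not target and other.stage == stage:
                    other.stage = "archived"
        target.stage = stage


if __name__ == "__main__":
    model = Model("churn-classifier")
    model.register("run-001")
    model.register("run-002")
    model.promote(1, "production")
    model.promote(2, "production")  # version 1 is archived under the assumed rule
    for v in model.versions:
        print(v.number, v.run_id, v.stage)
```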

The Model Control Plane ensures transparency and governance throughout the model's lifecycle, from experimentation to production.

How Does ZenML Compare to WandB

ZenML and WandB serve different primary purposes, yet can complement each other effectively. While WandB is a specialized, cloud-based platform for experiment tracking and visualization, ZenML is fundamentally a pipeline orchestrator and MLOps framework designed to manage the entire ML lifecycle.

ZenML offers stronger foundational MLOps capabilities, which include end-to-end lineage tracking, robust artifact and model versioning, and a flexible architecture for integrating diverse tools.

Its controlled release cycle prioritizes backward compatibility, offering more stability compared to WandB's rapid API changes. WandB excels in interactive visualization, hyperparameter sweeping, and rich reporting features. However, ZenML's design allows it to integrate with WandB, enabling you to leverage WandB's powerful tracking and visualization within ZenML's reproducible pipelines.

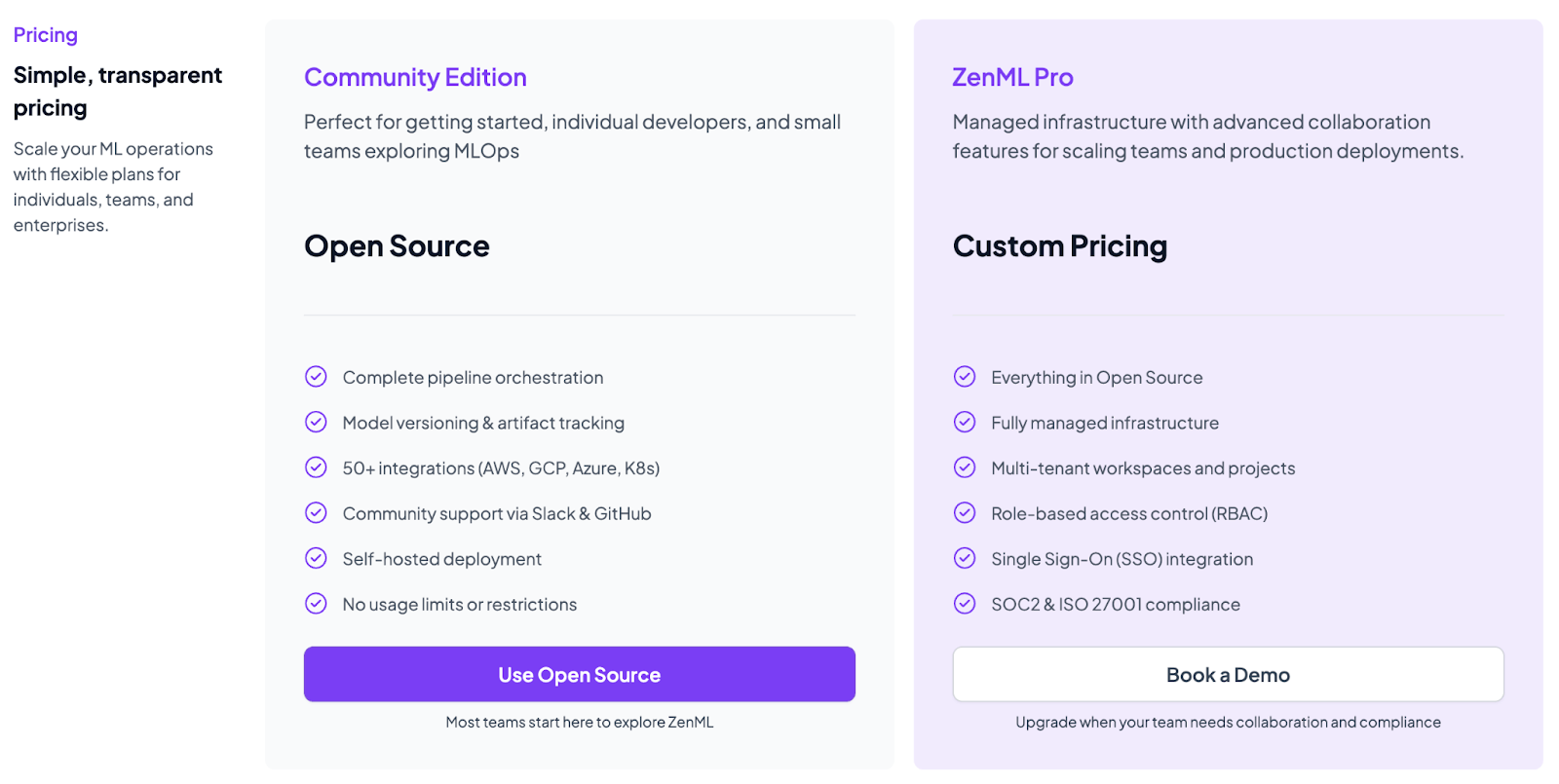

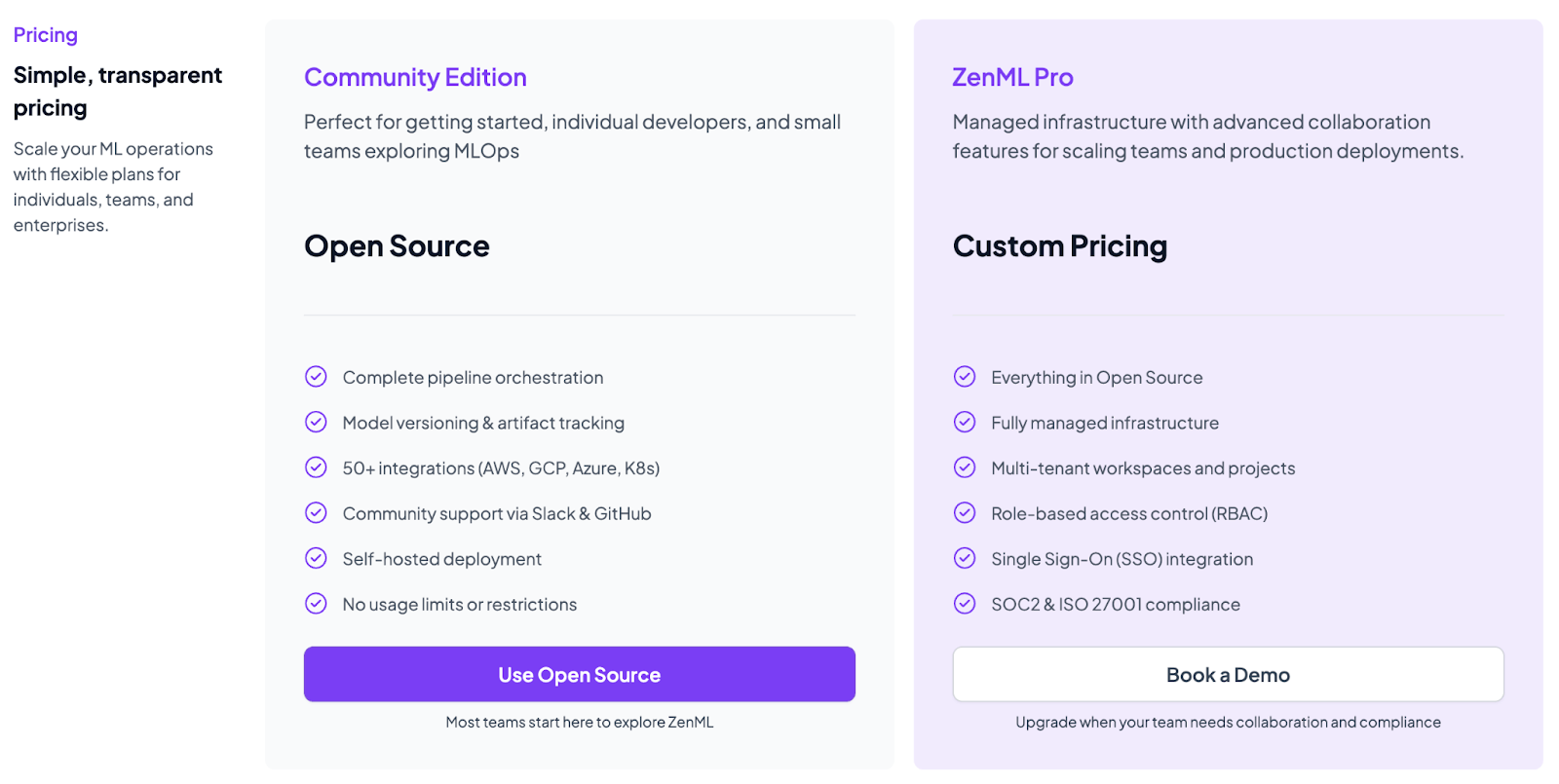

Pricing

ZenML is free, open source (Community Edition) for self-hosted use. For teams needing managed infrastructure, ZenML Pro offers a hosted control plane, multi-tenant workspaces, RBAC, SSO, and compliance features (SOC2/ISO27001) under a custom pricing plan.

Pros and Cons

ZenML is open-source and fully self-hostable with no usage or vendor fees. It comes with rich MLOps capabilities in a single framework: pipelines, tracking, artifact management, and more. Lastly, the platform integrates with multiple experiment trackers (and other MLOps tools), giving you a better overall experience building, running, and tracking ML pipelines.

ZenML does not have a native Spark/Ray runner; you must wire these frameworks yourself.

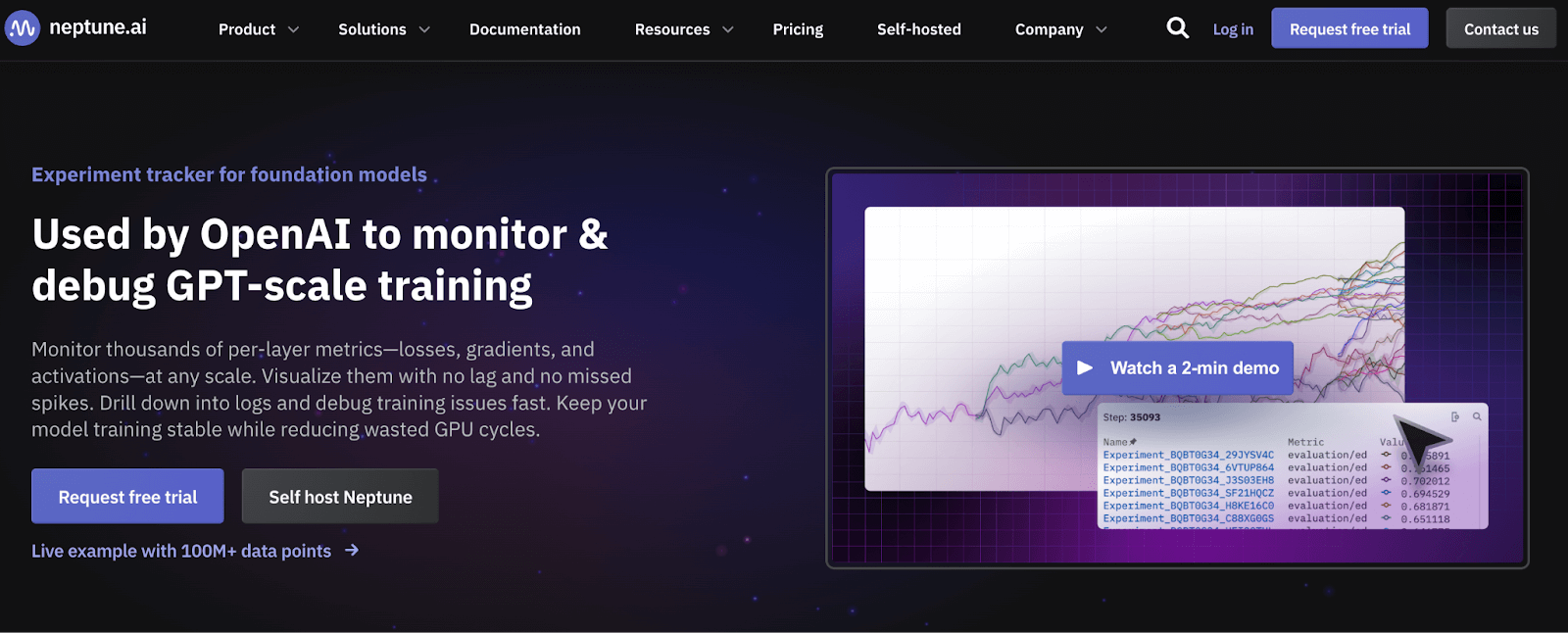

2. Neptune AI

Neptune is a managed experiment tracking platform designed for large-scale use and collaboration. The Neptune UI is optimized for large-volume experiments: it can render hundreds of thousands of runs and billions of data points faster than many alternatives in this list.

Features

- Built to monitor large experiments (e.g., foundation models). The UI handles up to 100,000 runs with millions of data points.

- Comes with a central dashboard for all run metadata - metrics, params, tags, artifacts, with flexible structure.

- Powerful fork/run branch capability lets you restart or branch an experiment from any checkpoint, which is helpful for long or failed runs.

- The platform allows real-time monitoring of massive training jobs, enabling you to quickly identify and terminate diverging runs as they occur.

Pricing

Neptune lists a free trial as coming soon, which will let you get to know the platform. Apart from that, you have three paid plans to choose from:

- Startup: $150 per user per month

- Lab: $250 per user per month

- Self-hosted: Custom pricing

Pros and Cons

Neptune AI features an excellent, fast, and responsive UI, even with large datasets. It boasts an easy-to-use Python SDK and is actively maintained with frequent new features and strong backward compatibility.

The platform is more expensive for small teams as it has no cheap per-use option aside from the free tier (the free tier is limited to academics working on foundation models).

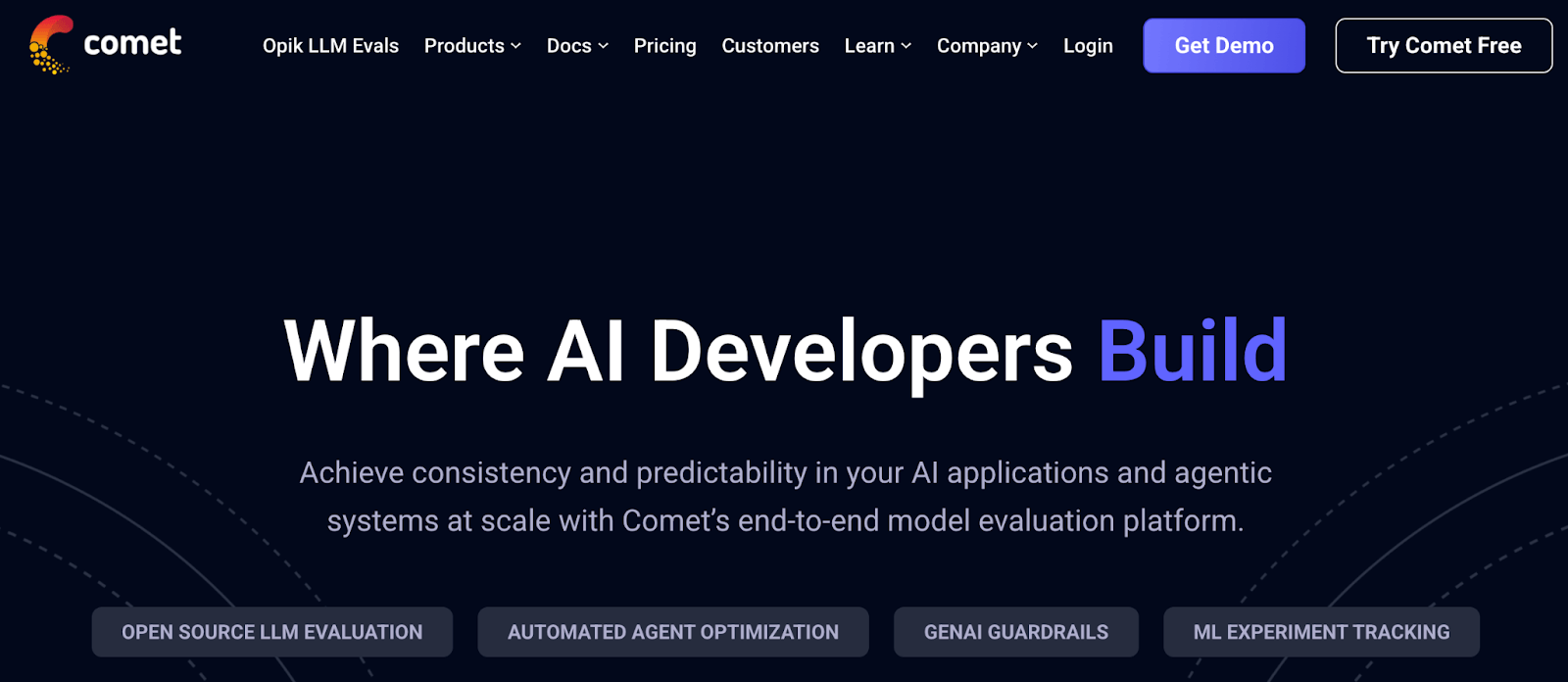

3. Comet

Comet is an AI developer platform that provides an end-to-end model evaluation platform. It aims to bring consistency and predictability to AI applications and agentic systems at scale, covering the entire ML lifecycle from experiment management to production monitoring.

Features

- Simply import `comet_ml` and every experiment automatically logs parameters, metrics, and even code environments.

- Has a built-in model registry that centralizes model versions; the platform lets you link any model to its training runs and datasets.

- Comet comes with custom visualization. It has live charts for metrics and predictions, plus free-form Code Panels for custom Matplotlib/Plotly plots.

- Comes with collaboration tools like team workspaces with role-based permissions, lets you annotate experiments and share them with collaborators.

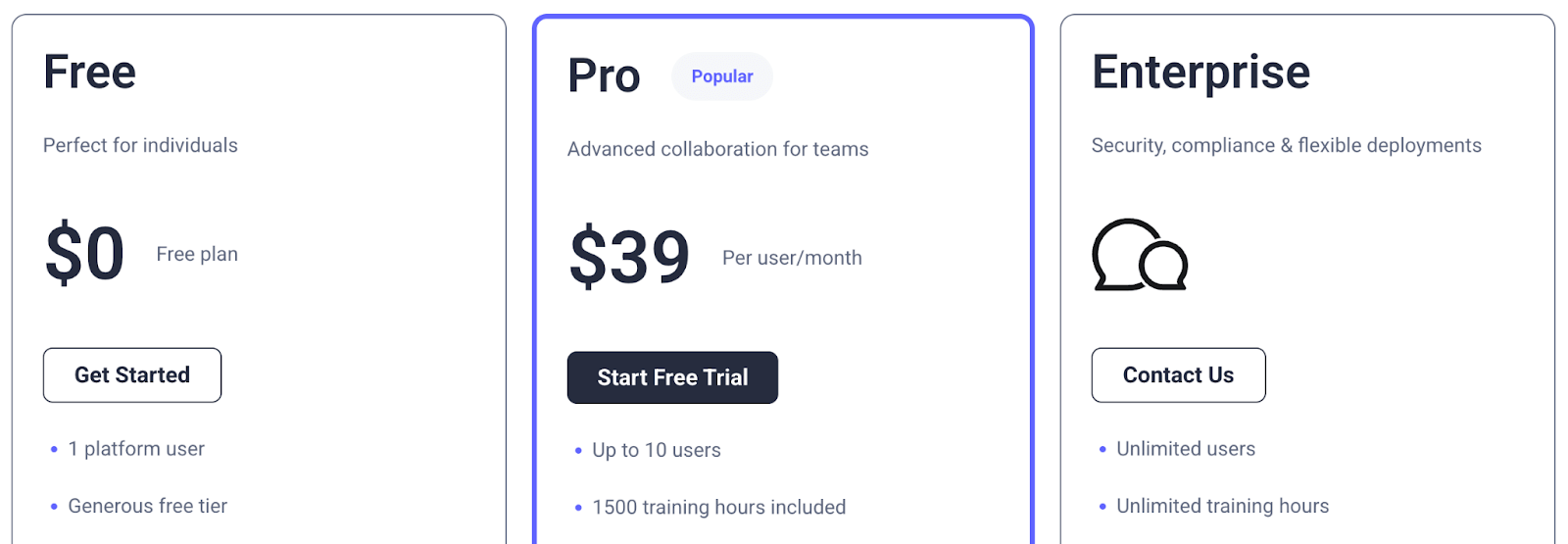

Pricing

Comet offers a free plan for a single platform user, and it has 2 paid plans to choose from:

- Pro: $39 per user per month (up to 10 users)

- Enterprise: Custom pricing (unlimited users)

Pros and Cons

Comet offers fast integration with minimal code changes and provides a comprehensive end-to-end platform for the entire ML lifecycle. It features strong support for LLM evaluation and includes robust experiment comparison and hyperparameter optimization capabilities.

However, the free tier has strict limits, and the per-user seat model can add up significantly.
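A quick way to see how per-seat pricing adds up is to multiply it out over a year. The sketch below uses the per-user monthly prices quoted in this post for Comet Pro and for Neptune's Startup and Lab plans; treat them as a snapshot and check the vendors' pricing pages for current figures. The helper name is illustrative.

```python
# Per-user monthly list prices as quoted in this post (may be out of date).
SEAT_PRICES = {
    "Comet Pro": 39,
    "Neptune Startup": 150,
    "Neptune Lab": 250,
}


def yearly_seat_cost(price_per_user_month: float, users: int) -> float:
    """Yearly bill for a per-seat plan, before any usage-based charges."""
    return price_per_user_month * users * 12


if __name__ == "__main__":
    for users in (5, 10):
        for plan, price in SEAT_PRICES.items():
            print(f"{plan}, {users} users: ${yearly_seat_cost(price, users):,.0f} per year")
```

Because the cost grows linearly with headcount, occasional users who only view dashboards cost as much as engineers who log runs every day.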

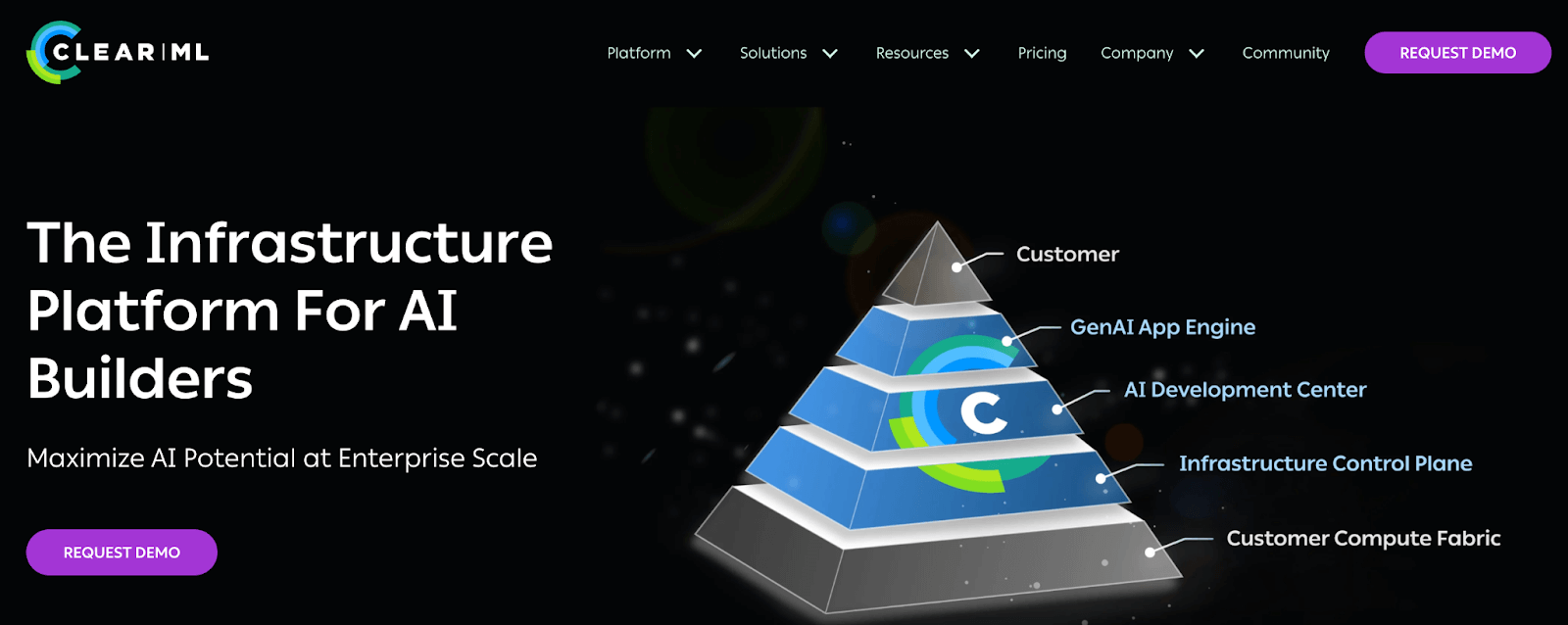

4. ClearML

ClearML is an AI Infrastructure Platform designed to maximize AI performance and scalability for enterprises. It offers a three-layer solution that streamlines the entire AI workflow, from initial development to production deployment.

Features

- ClearML features automatic logging, which lets you track code, notebooks, datasets, hyperparameters, and metrics without manual instrumentation.

- Each ‘Task’ (experiment) can be associated with a project, and then you can compare runs by tags or names.

- Beyond tracking, ClearML provides workflow orchestration through ClearML Pipelines and data management on a single platform.

- Provides a model registry to catalog and share models with full traceability and provenance.

Pricing

ClearML offers a free community plan for up to 3 members. Apart from that, ClearML has three paid plans to choose from:

- Pro: $15 per user per month + usage

- Scale: Custom pricing (Pay for What You Use)

- Enterprise: Custom pricing

📚 Related reading: ClearML pricing guide

Pros and Cons

ClearML’s tracking server (ClearML Server) and SDK are fully open source with no license fees. You can self-host it on Linux, Mac, or Kubernetes environments and use it indefinitely at no cost.

But also keep this in mind: ClearML has a steep learning curve for new users. The platform’s user interface can be challenging to navigate efficiently, and integrations with certain tools may require significant effort to set up and maintain.

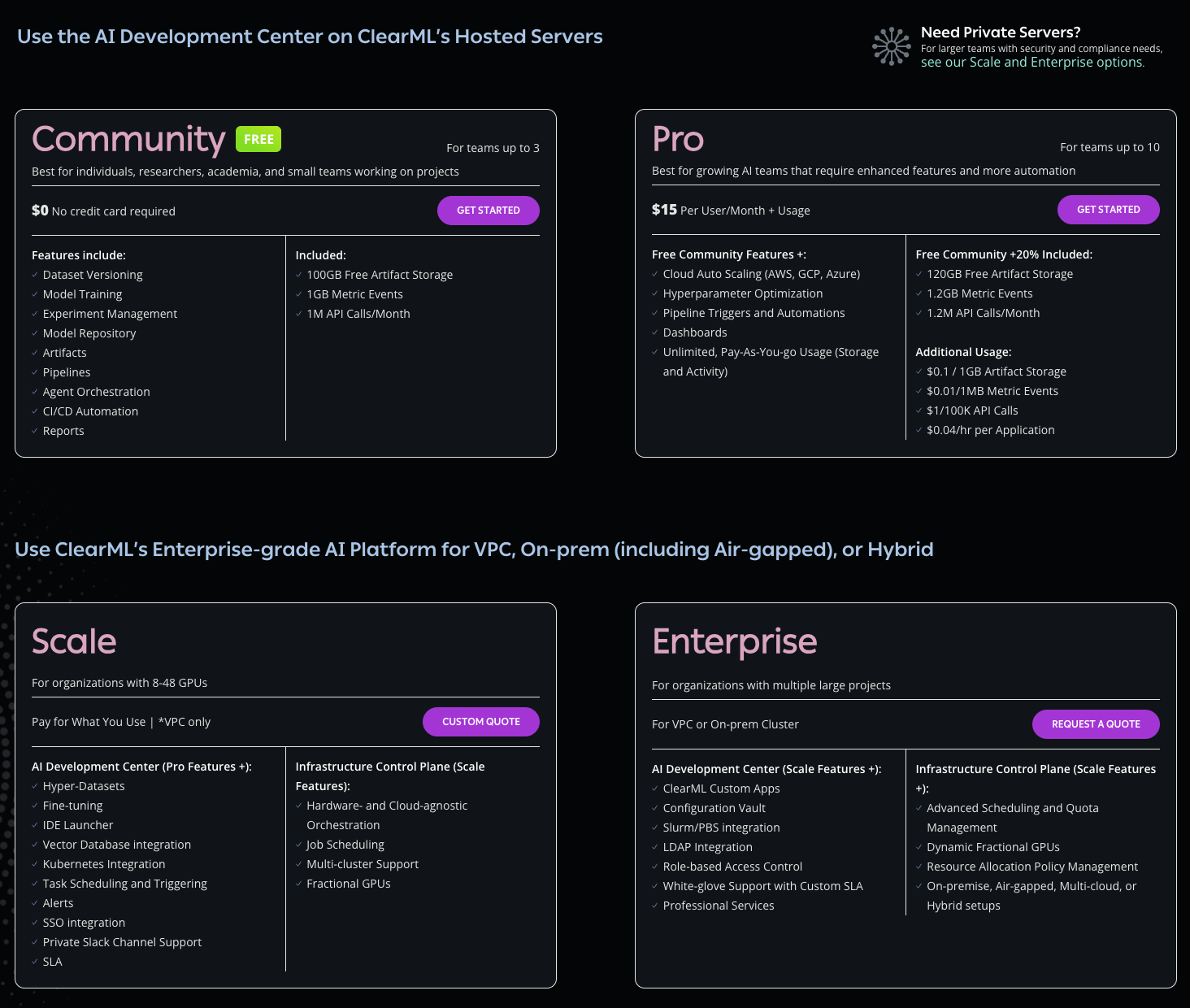

5. MLflow

MLflow is an open-source developer platform designed to build AI applications and models with confidence, offering end-to-end tracking, observability, and evaluations. It helps streamline machine learning workflows, including experiment tracking, model management, and deployment, providing a flexible and widely adopted solution for various ML needs.

Features

- Logs parameters, metrics, tags, and artifacts through a lightweight REST API that any language binding can call.

- The web app loads from the same tracking server and supports metric scatter plots, parallel run comparison, and CSV export for offline analysis.

- Tracks model versions, stage transitions, and approval comments, then exposes REST hooks that deployment tools can call.

- Encapsulates code, environment, and entry points in a declarative YAML that can be run on Docker, Conda, or Kubernetes.

Pricing

MLflow is completely open-source and free for self-deployment on any infrastructure, giving teams full control over their experiment tracking and model registry without licensing costs.

Managed MLflow services include:

- Databricks MLflow: Fully integrated MLflow experience within Databricks, with pricing based on Databricks compute units and storage consumption rather than separate MLflow fees.

- AWS SageMaker MLflow: Managed MLflow tracking server starting at $0.642/hour for ml.t3.medium instances, plus separate charges for artifact storage in S3.

- Azure Machine Learning with MLflow: Built-in MLflow integration with pricing based on Azure ML compute instances and storage usage.

- Nebius Managed MLflow: Dedicated MLflow clusters starting at approximately $0.36/hour for 6 vCPUs and 24 GiB RAM configurations.
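Hourly rates are easier to compare as a monthly bill. This small sketch converts the two hourly figures quoted above into the cost of an always-on tracking server, assuming roughly 730 hours per month and ignoring storage and data transfer; the rates are the ones stated in this post and may have changed.

```python
HOURS_PER_MONTH = 730  # average hours in a month (8,760 / 12)

# Hourly rates as quoted in this post; artifact storage is billed separately.
HOURLY_RATES = {
    "AWS SageMaker MLflow (ml.t3.medium)": 0.642,
    "Nebius Managed MLflow (6 vCPUs, 24 GiB RAM)": 0.36,
}


def monthly_cost(hourly_rate: float, hours: float = HOURS_PER_MONTH) -> float:
    """Cost of keeping one tracking server running for the given number of hours."""
    return hourly_rate * hours


if __name__ == "__main__":
    for service, rate in HOURLY_RATES.items():
        print(f"{service}: about ${monthly_cost(rate):,.2f} per month")
```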

Pros and Cons

MLflow is an open-source, flexible platform with a wide range of MLOps capabilities, making it suitable for basic experiment tracking, model management, and deployment. It is well-regarded for streamlining the machine learning lifecycle and simplifying experiment tracking.

However, MLflow lacks advanced collaboration features, like discussions or team workspaces. Its UI has limitations, being less configurable and often storing plots as artifacts rather than interactive widgets.

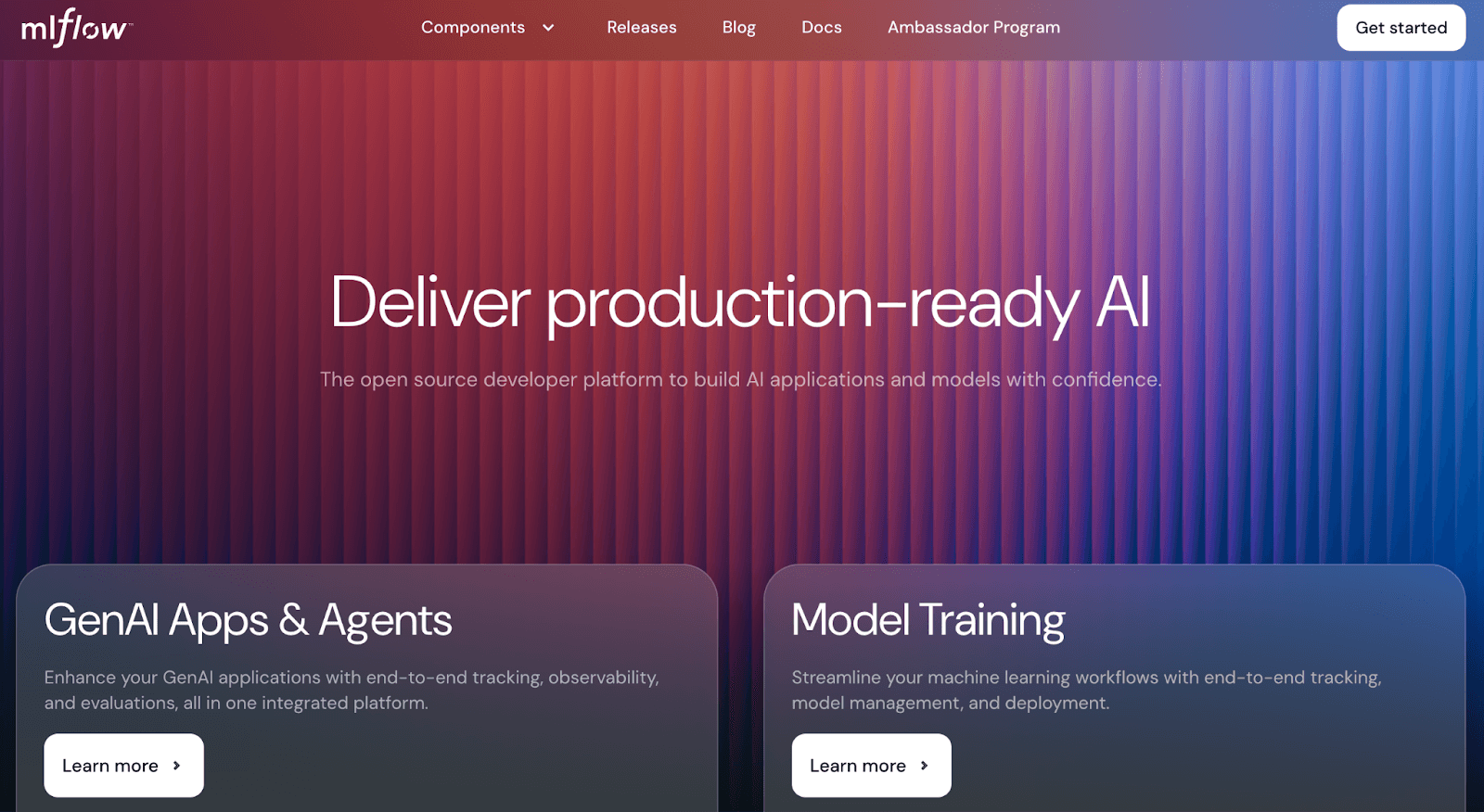

6. Google Vertex AI Experiments

Vertex AI is Google Cloud’s managed ML platform, and Vertex Experiments (built on ML Metadata) is its tracking component. In practice, any training you do via Vertex (Notebooks, AutoML, pipelines) can log metrics and artifacts into Vertex. Internally, this uses Google’s ML Metadata (MLMD) library. For visualization, Vertex AI integrates with TensorBoard as the analysis UI.

Features

- Metrics and artifact lineage for training runs are captured by Vertex ML Metadata. You don’t write special code; using Vertex’s services (e.g., Training Pipelines) automatically records metadata.

- Vertex provides a built-in TensorBoard viewer for your experiment logs. (You can launch TensorBoard on any logs stored in Google Cloud Storage.)

- Tight integration with BigQuery, Dataflow, AI Platform Pipelines, and Vertex Pipelines. Permissions are controlled via GCP IAM.

- Because it’s based on ML Metadata, it can track experiments from any framework (TF, PyTorch, sklearn, etc.).

Pricing

Vertex Experiments charges per GB of metadata storage. You pay a fixed rate (e.g. $0.05–$0.20 per GB-month) for the size of your experiment logs.

There is no separate compute charge for using the tracking service itself; data is stored in BigQuery or GCS. All Vertex AI services are pay-as-you-go (with Google’s free tier credits for new users).

Pros and Cons

Vertex AI is a seamless platform for you if you’re fully in Google Cloud: managed notebooks, Pipelines, BigQuery integration, etc. The platform comes with enterprise security and global infrastructure with automated scaling.

But remember, it’s not a standalone product: there’s no sophisticated multi-run comparison UI built into Vertex (beyond TensorBoard and BigQuery queries).

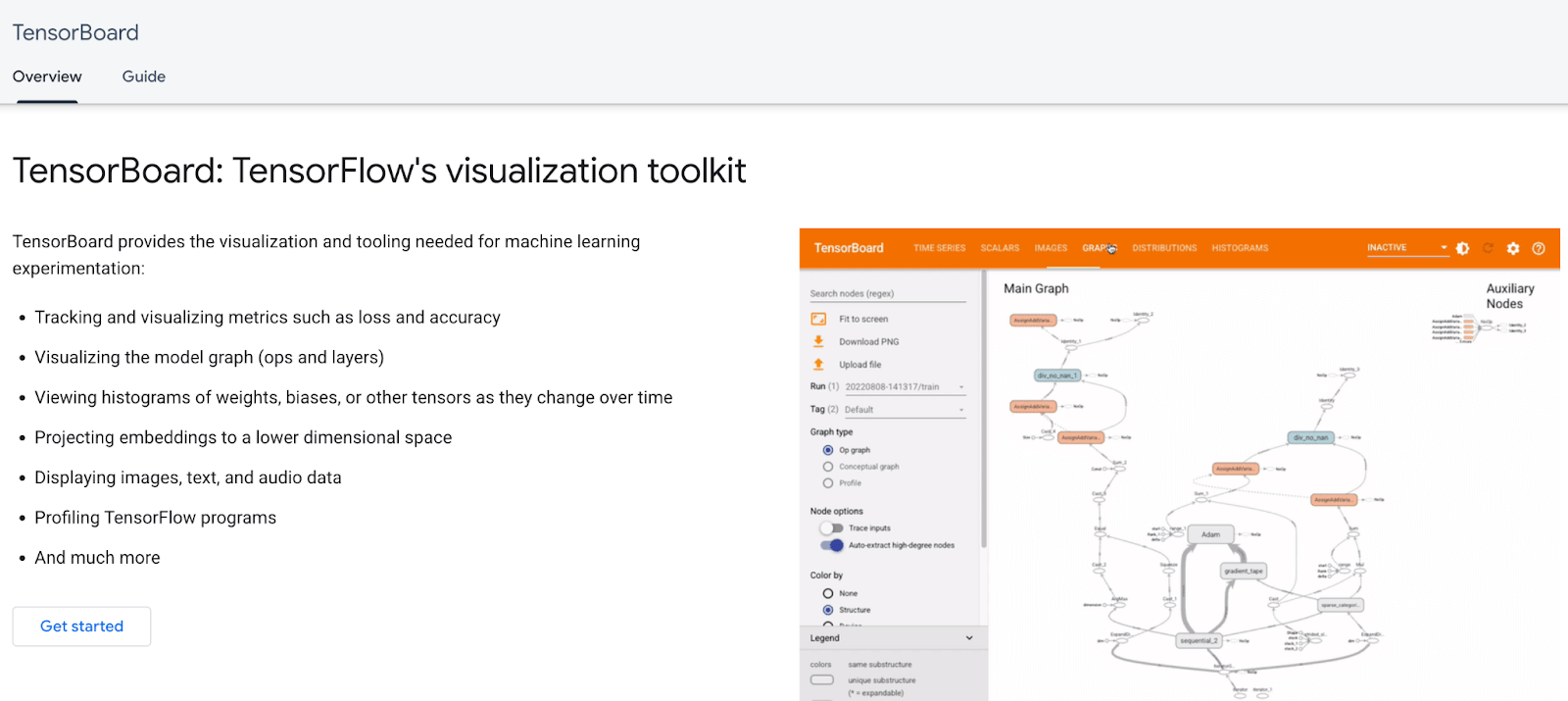

7. TensorBoard

TensorBoard is a suite of visualization tools designed to help you understand, debug, and optimize TensorFlow programs for machine learning experimentation. It provides the necessary visualization and tooling for various aspects of ML experimentation, making it an essential tool for deep learning researchers and developers.

Features

- Streams real-time data from event files, applies optional smoothing, and overlays multiple runs for side-by-side trend checks.

- Lets you zoom into subgraphs, inspect tensor shapes, and track weight histograms to catch exploding gradients.

- Performs PCA or t-SNE on high-dimensional vectors and lets you color-code points by label or any logged metadata.

- Plays recorded audio or shows generated text samples at each training step, which is useful for speech and NLP models.

Pricing

The local/self-hosted TensorBoard is completely free. The code is released under the Apache 2.0 license, so you can pip-install it and run it anywhere without paying license fees.

Pros and Cons

TensorBoard is an excellent visualization tool for deep learning, offering powerful features for understanding, debugging, and optimizing neural networks. It is free and open-source, supports multiple client languages (Python, C++, JavaScript, Go, Java, Swift), and facilitates easy sharing of trained models.

One disadvantage of TensorBoard, though, is that it’s primarily focused on TensorFlow and Keras, which limits its applicability for projects using other ML frameworks.

Which Weights & Biases Alternative is the Best for You?

No single tool is a one-size-fits-all replacement for W&B. You must choose based on your team’s priorities:

- If cost and open source matter: ZenML offers free, self-hostable tracking focusing on pipelines. MLflow is also free, but it requires more manual effort.

- If you need rich UI and collaboration: Comet offers polished dashboards and team features out-of-the-box (at a cost).

Still unsure which Weights & Biases alternative is best for you? Sign up for ZenML open-source and discover how it can smooth out your ML orchestration process.

If you think ZenML is the right MLOps framework for you, book a demo call with our team to learn how we can build a plan tailored to your needs.
