On this page

Every week brings a new framework for building LLM-powered agents. The hype is real, but this question remains unanswered: How do we build real, reliable, and production-ready applications?

Simply calling an LLM API isn’t it. To build LLM agents and multi-step RAG workflows that can reason, plan, recall, and use tools, you need a solid orchestration framework that can coordinate all the disparate components into one cohesive workflow.

In this guide, we break down 9 of the best LLM orchestration frameworks for agents and RAG, discussing their features, pricing, and pros and cons.

Factors to Consider when Selecting the Best LLM Orchestration Tools to Use

Before we jump in, let’s examine the three key factors to look for in an LLM orchestration framework.

1. Workflow Model and Developer Experience

How do you build and orchestrate your LLM workflow in the tool? Frameworks vary from declarative approaches to code-first SDKs and even no-code visual builders.

The choice, however, depends on your style: do you prefer a drag-and-drop UI or writing Python code?

For example, a graph-based model lets you design the agent’s reasoning as nodes and edges in a graph. Others use flow-based or pipeline models that execute steps sequentially with possible branching.

LangGraph lets you build graph-like flows using stateful nodes, while Langflow provides a low-code canvas for assembling components visually.

A good framework should be easy to start with, yet still allow for deep customization in complex projects.

2. State and Memory

Intelligent agents, especially RAG-first agents, need to remember context and past results. Check how each framework manages state and memory across an agent’s workflow.

Some frameworks provide ephemeral in-memory contexts or message histories, which are good for real-time conversational agents. Others integrate with persistent stores to give agents long-term memory.

Consider the framework that supports the type of memory your use case needs and how easy it is to store and retrieve state.

3. Reproducibility and Lineage

Tools with reproducibility features allow you to track which prompt, model version, or data led to a given result. Put simply, they allow you to store and reproduce successful experiments.

A strong orchestration tool will capture the lineage of each step: for example, storing every intermediate output and linking it to the code/config that produced it. This lets you trace an agent’s decision back to its inputs.

Ideally, look for LLM orchestrations frameworks that support experiment trackers or built-in logging dashboards. Version control for prompts and the ability to replay agent sessions are big plus points.

What are the Best LLM Orchestration Platforms

| Tool | Best for | Key features | Pricing |

|---|---|---|---|

| ZenML | For teams that need unified ML and LLM pipelines | - Robust pipeline orchestration - Infrastructure agnostic - Artifact and code versioning | Both free and paid |

| LangGraph | Teams that need stateful, controllable agent graphs | - Graph-based orchestration - Shared state/memory - Human-in-the-loop checkpoints | - Free - Paid: $39 per month |

| LlamaIndex | Data-centric orchestration where RAG is core | - Vector, tree, knowledge-graph indices - Advanced retrieval - Agent/tool abstractions via LlamaHub | - Free - Paid: $50 per month |

| Flowise | Low-code teams that want a visual builder for agents/RAG | - Drag-and-drop node canvas - Memory and vector-DB nodes - Basic tracing and deploy/embed widget | - Free - Paid: $35 per month |

| Langflow | Visual LangChain workflows with broad connectors | - Visual canvas editor - Multi-agent support - Reusable components and custom Python blocks | Free (open-source) |

| Prefect + Controlflow | Production-grade agent orchestration in Python | - Task/Agent/Flow abstraction - Dynamic runtime branching - Scheduling/retries - Rich logs and observability | - Free - Paid: $100 per month |

| Microsoft Agent Framework | Enterprise multi-agent apps with workflows + agents | - Unified agents and graph workflows - Pluggable memory with telemetry observability and Azure integrations - Python & .NET SDKs | Free (open-source) |

| Haystack | Pipeline-first RAG with optional agent tool-use | - DAG pipelines (retriever/reader/tools) - Function-calling tools, hybrid search, evaluation & monitoring, on-prem or cloud | - Free - Has paid custom plans |

| CrewAI | Role-based multi-agent teams with deterministic turns | - Explicit roles/goals, sequential turn-taking - 40+ built-in tools, full trace & replay, cloud control plane | - Free - Paid: starts at $25 per month |

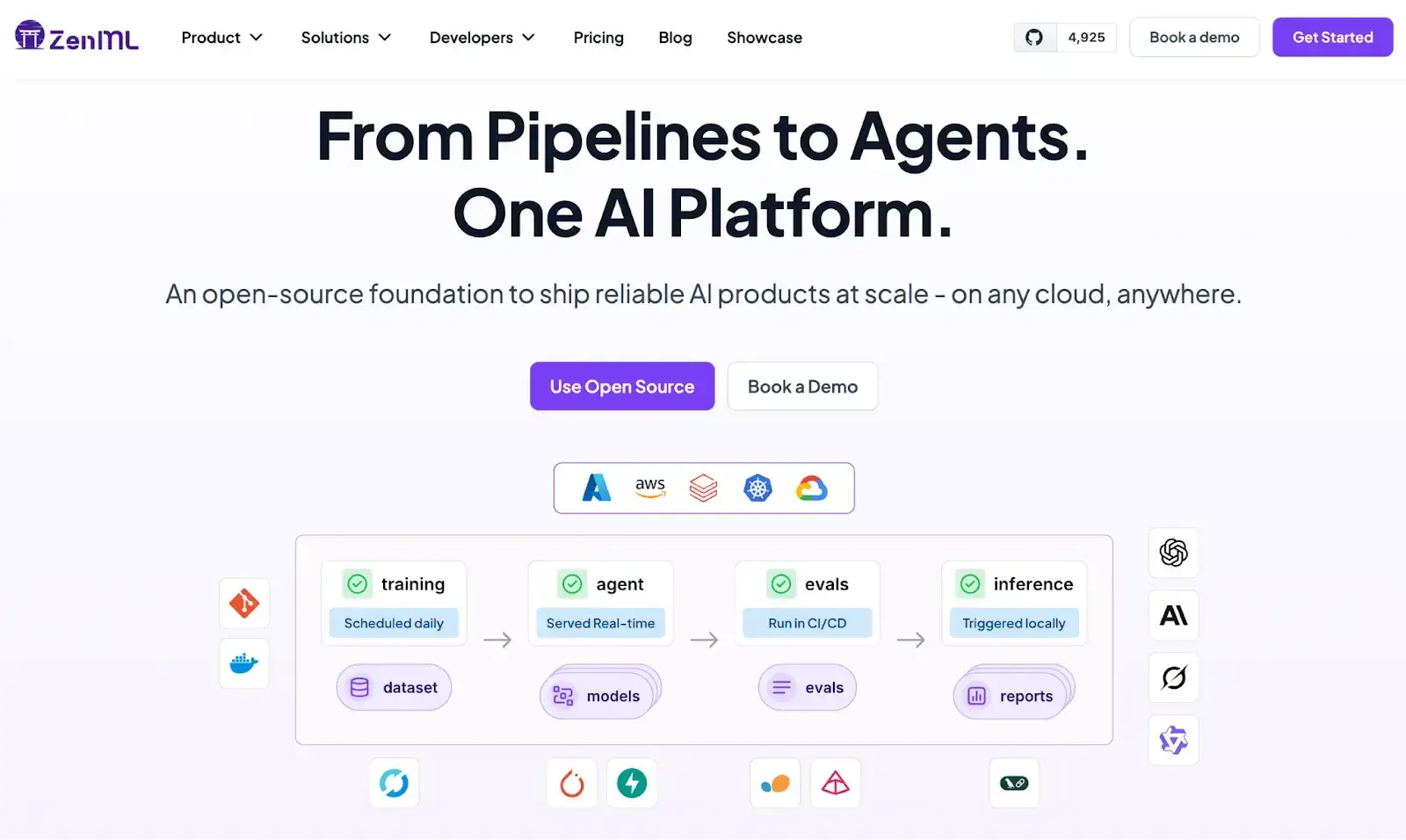

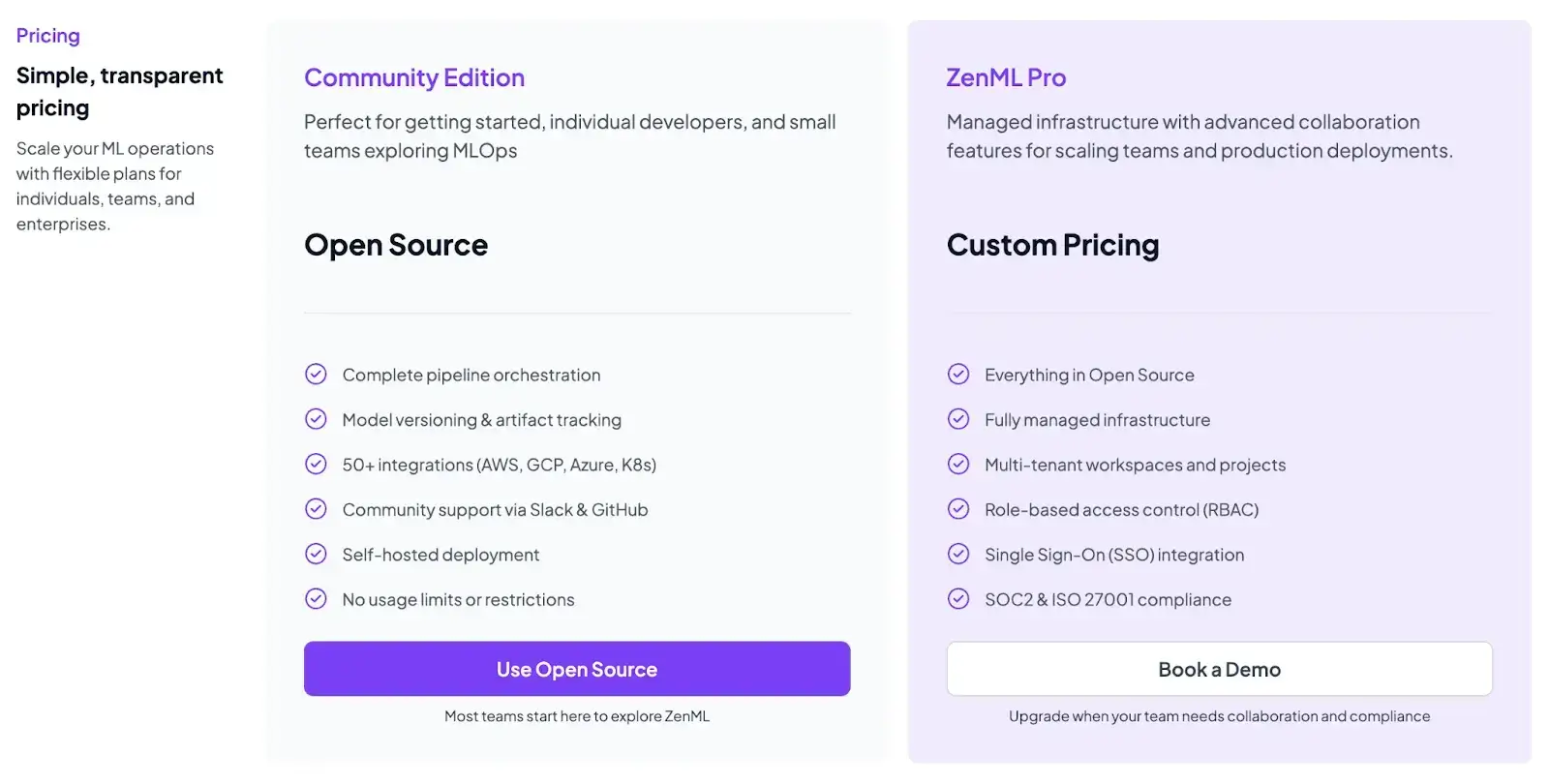

1. ZenML

ZenML is an open-source MLOps and LLMOps framework designed to help teams build reliable, reproducible, and production-grade AI systems. For LLM orchestration, ZenML provides the same battle-tested orchestration backbone that powers ML pipelines, now extended to manage the entire LLM lifecycle, from data preparation to model evaluation and deployment.

📚 Also read: ZenML documentation

Features

- Robust Pipeline Orchestration: ZenML provides a production-grade orchestration engine for LLM workflows, handling retraining, evaluation, RAG pipelines, and agent orchestration with retries, caching, and version control built in.

- Infrastructure Agnostic: Run your LLM pipelines anywhere; locally, on Kubernetes, or across clouds, without changing a single line of code. ZenML abstracts away infrastructure differences, letting you switch backends easily.

- Artifact and Code Versioning: Every model, prompt, dataset, and component in a ZenML pipeline is automatically versioned, ensuring full reproducibility and traceability of your LLM experiments.

- Metadata Tracking: Capture metadata for every LLM workflow step: models, datasets, metrics, prompts, and outputs are all logged and linked for lineage tracking and auditability.

- Automatic Tracing (Coming Soon): ZenML will soon include built-in automatic tracing for LLM orchestration, providing complete visibility into prompt flows, responses, and context propagation across pipelines.

Pricing

We are upgrading our platform to bring every ML and LLM workflow into one place for you to run, track, and improve. Think of processes like data preparation, training, RAG indexing, agent orchestration, and more, all in one place.

Pros and Cons

ZenML offers pipeline-first visibility with an interactive DAG, run history, artifact lineage, and step logs, making debugging simple. It integrates cleanly with experiment trackers and supports autologging.

Automatic tracing and some LLM-specific observability features are still in development

2. LangGraph

LangGraph is an extension of the popular LangChain library. It provides a cyclical, graph-based architecture for building stateful LLM agents. You define each step or tool invocation as a node in a directed graph, and edges control the flow of information.

📚 Also read: LangGraph alternatives

Features

- Orchestrate LLM workflows using a visual, graph-based architecture where you place key actions or tools as nodes and connect them using branches (edges).

- Implements a cyclical architecture with shared memory, allowing the system to revisit steps for context-aware interactions during long processes.

- Create multi-agent systems using common architectural patterns, like Network, Supervisor, Hierarchical, and custom architectures.

- Built-in Human-in-the-Loop Integration as checkpoints where the workflow can be paused to allow for human input, review, or approval before continuing.

- Supports token-wise streaming of LLM outputs, so downstream nodes can start work before an LLM finishes its response.

Pricing

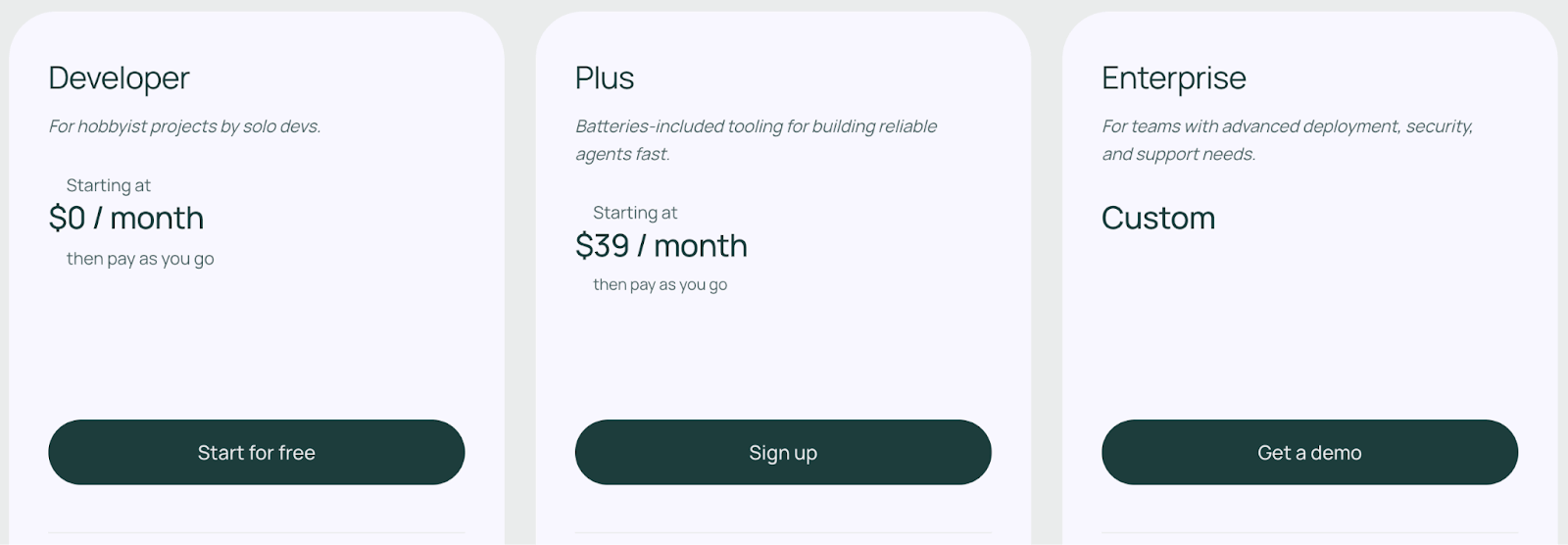

LangGraph’s core library is open-source and free to use. While the cloud platform has three plans:

- Developer: Free

- Plus: $39 per month

- Enterprise: Custom pricing

📚 Also read: LangGraph pricing

Pros and Cons

LangGraph is a library that extends LangChain for building stateful, multi-actor applications. By defining logic as a graph of nodes and edges in Python, it offers granular control ideal for complex, production-grade workflows that require loops, branching, and persistent state.

This code-first approach, while powerful, results in a steeper learning curve. Lacking a visual interface, it requires comfort with Python and graph-based concepts, which can make debugging more intricate than troubleshooting a visual representation.

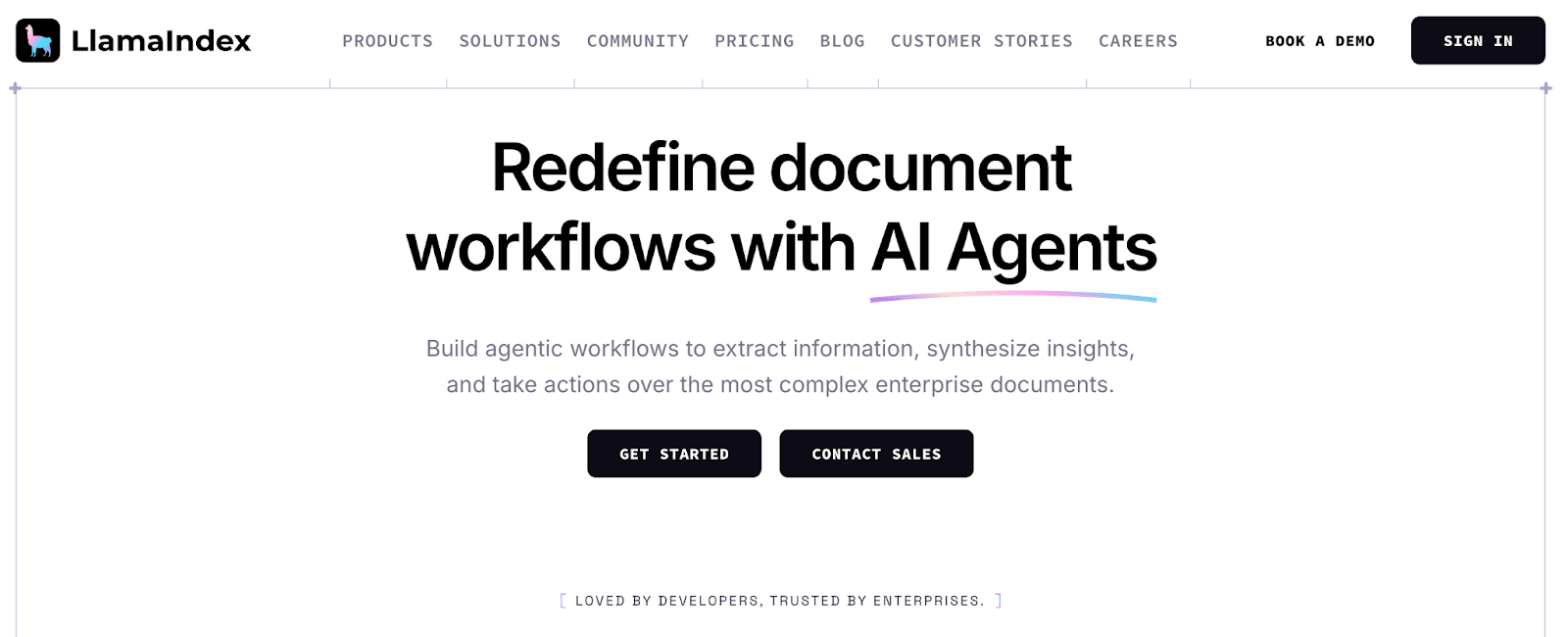

3. LlamaIndex

LlamaIndex is a data framework built to connect custom data sources to LLMs. It’s best suited for creating RAG applications and also provides tools for building LLM agents that can plan and act based on that data.

📚 Also read: LlamaIndex alternatives

Features

- Use an event-driven framework to orchestrate multi-step workflows using Python code. You can write custom, asynchronous Python functions and trigger them by events.

- Supports various indexing structures, including vector, tree, and knowledge graphs, to optimize data for diverse retrieval needs.

- Offers hundreds of pre-built connectors for APIs, databases, documents, and applications for quick and easy data ingestion.

- Provides powerful query engines and a QueryPipeline feature to orchestrate complex retrieval and synthesis workflows by routing queries to multiple indexes and intelligently combining the results.

- Deploy data agents that can intelligently interact and automatically search data, make API calls, and use tools to augment the RAG process.

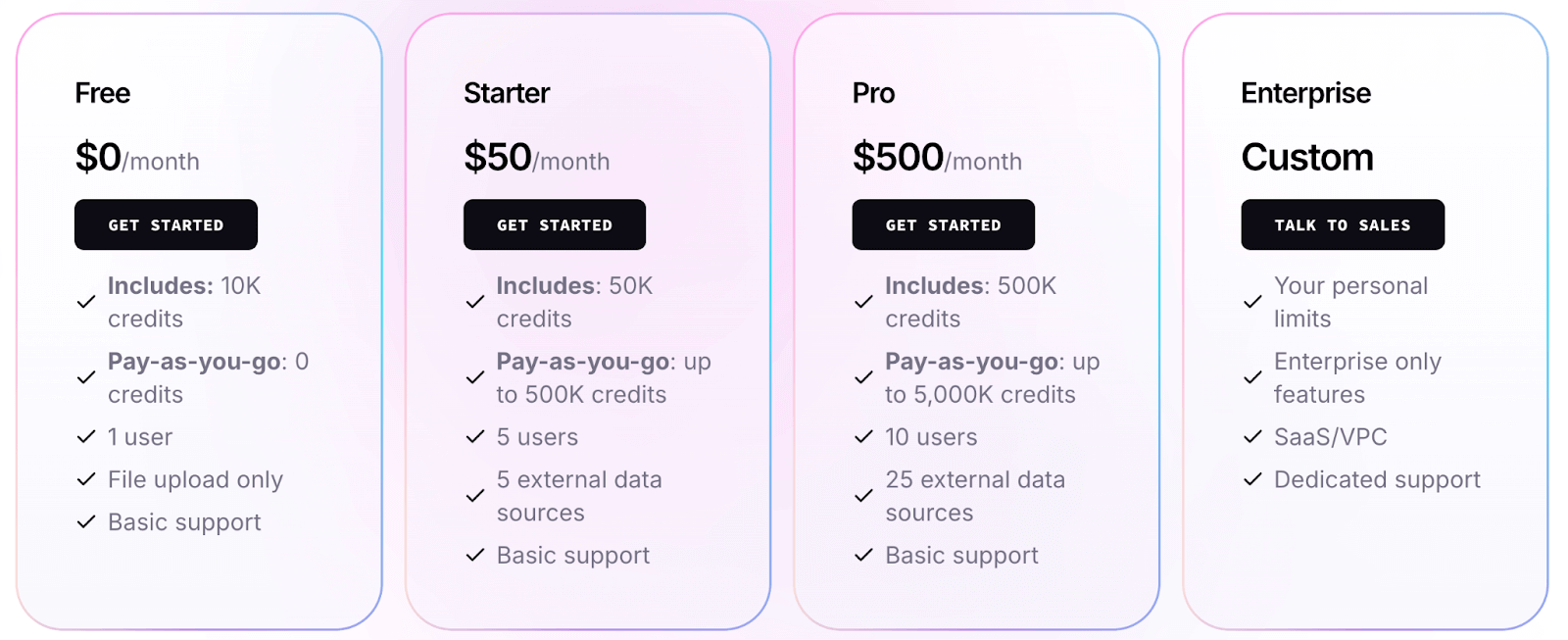

Pricing

The open-source LlamaIndex library is licensed under the MIT license and is free to use. LlamaIndex also provides a hosted platform, which offers more and better features. Their pricing (as of 2025) is credit-based:

- Free tier: $0, includes 10k credits (sufficient to try out with smaller workloads).

- Starter plan: $50 per month, includes 50k credits

- Pro plan: $500 per month, includes 500k credits

- Enterprise plan: Custom pricing

📚 Also read: LlamaIndex pricing

Pros and Cons

The upside is LlamaIndex depth and flexibility for building custom RAG systems. It has best-in-class tools for data ingestion, indexing, and querying. Being code-first, you get direct control over logic, data access, and tool use. A top choice for developers who need granular control over how an LLM interacts with data.

The trade-off is more responsibility for guardrails like step limits, termination, and error handling. Since there is no built-in pipeline serialization, LlamaIndex could be resource-intensive. Meaning, there’s a risk of high operational costs for data handling and optimizing query latency across billions of vectors.

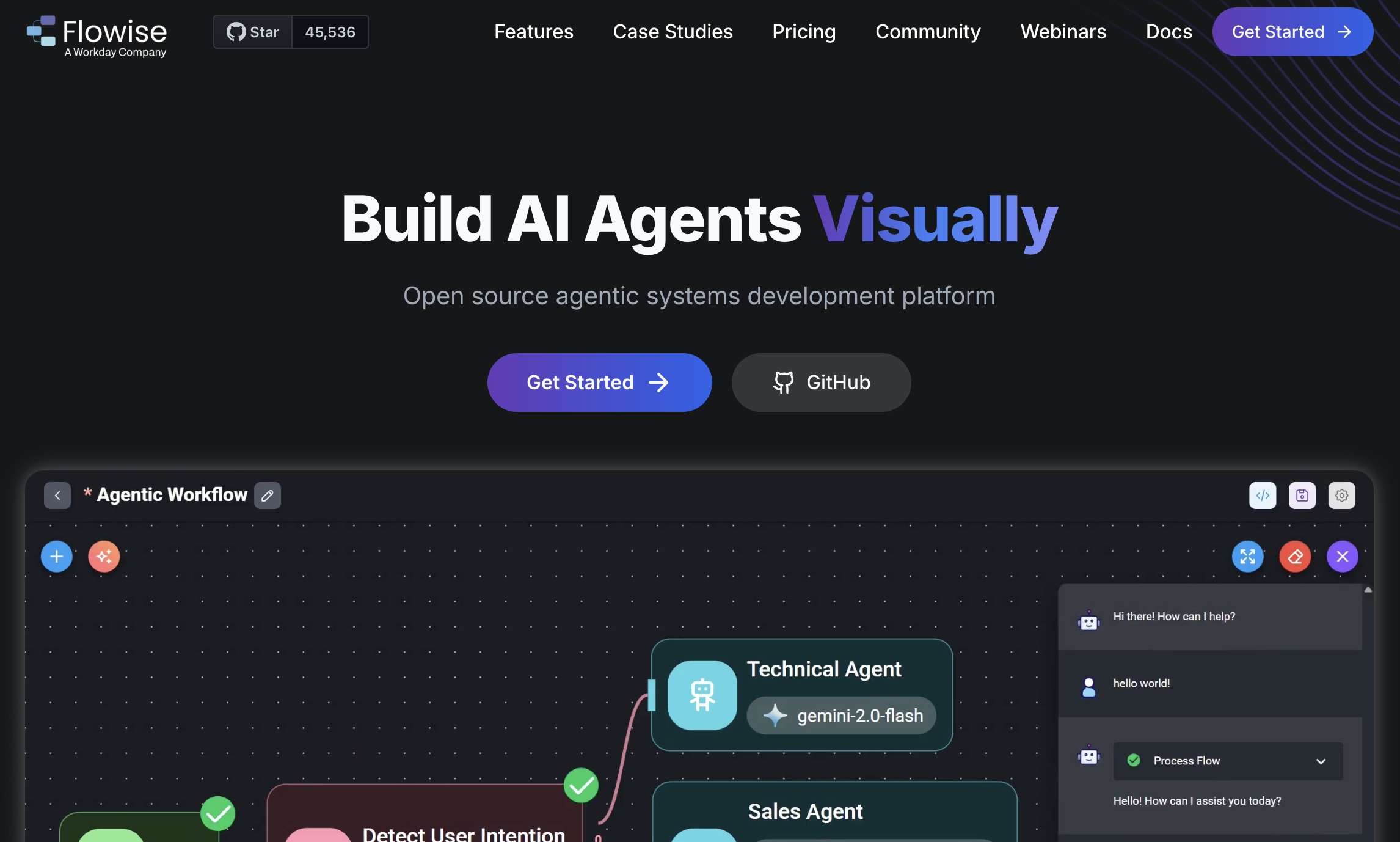

4. Flowise

Flowise is an open-source, low-code platform for building LLM applications. It provides a drag-and-drop node-based editor where you can chain together LLMs, prompts, tools, memory modules, and data sources on a canvas.

📚 Also read: Flowise alternatives

Features

- Offers a drag-and-drop editor where you can add nodes and functions and draw connections between them to define the LLM application's logic.

- Supports over 100 integrations with popular AI frameworks like LangChain and LlamaIndex, as well as a wide range of LLMs, vector databases, and data sources.

- Get pre-built nodes for common needs, like prompts, various LLM providers, vector store queries, tools like web search, or Python code execution.

- Specialized visual builders, such as Agentflow, are natively designed for creating multi-agent systems with memory and HITL nodes.

- Built-in dashboard for metrics and logs, and supports integration with monitoring tools like OpenTelemetry and Prometheus.

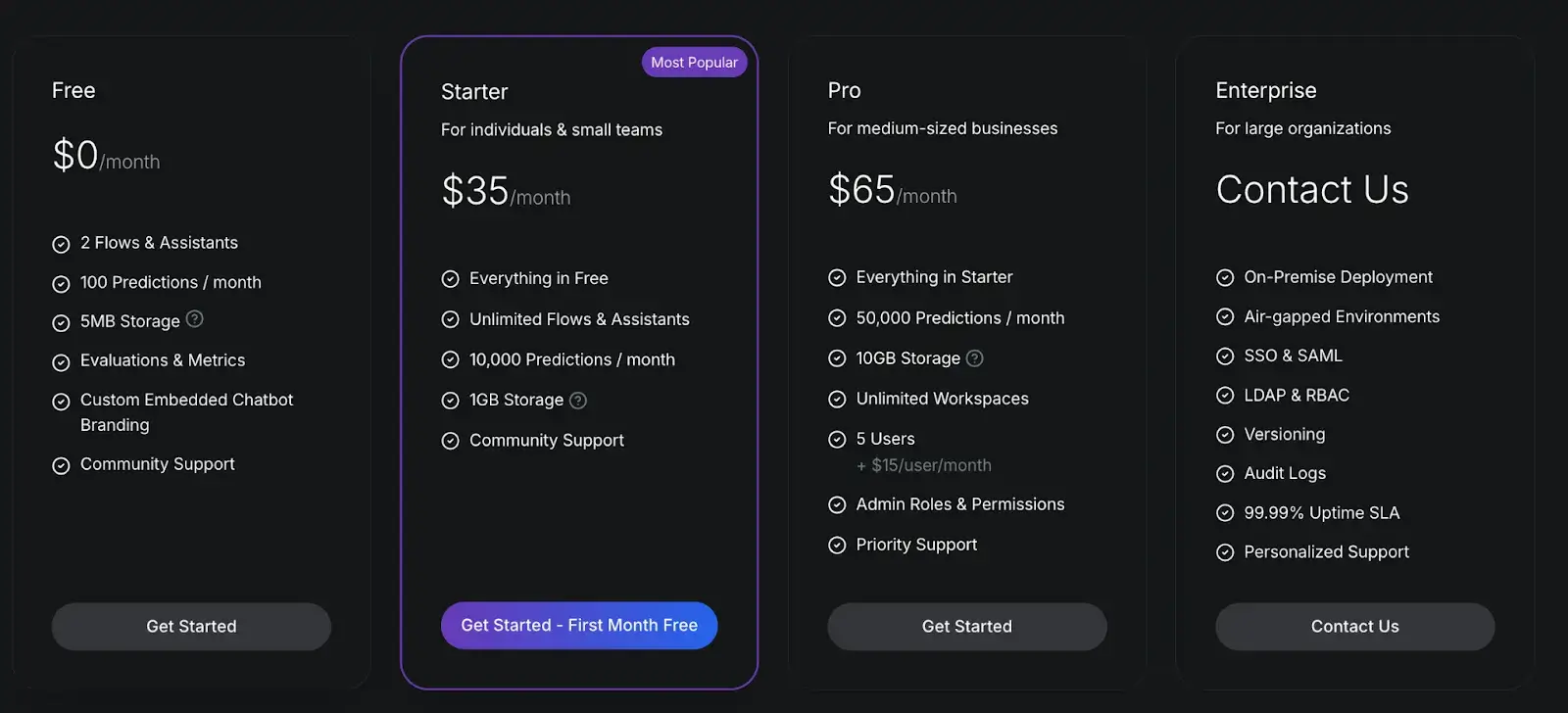

Pricing

Flowise is an open-source project and can be self-hosted for free. For users who prefer a managed solution, the commercial FlowiseAI cloud platform offers a tiered pricing structure:

- Free: $0 per month; limited to 2 flows and 100 predictions per month.

- Starter: $35 per month

- Pro: $65 per month

- Enterprise: Custom pricing

Pros and Cons

The obvious pro of Flowise is its user-friendly, drag-and-drop canvas. It enables engineers (and even non-engineers) to design LLM-powered workflows much like drawing a diagram – no heavy coding required. This can dramatically speed up prototyping and iteration.

However, this visual abstraction can be limiting for advanced logic and presents challenges in production. Users report performance issues like high RAM consumption and memory leaks under load, and overly complex flows can become unmanageable on the canvas compared to more robust code-based alternatives.

5. Langflow

Langflow is an open-source visual orchestration tool for LLMs. It’s a graphical user interface built on top of the LangChain ecosystem that allows you to drag and drop, as well as connect building blocks such as models, prompts, tools, and memory into a flowchart.

📚 Also read: Langflow alternatives

Features

- Orchestrate workflows using a node-based workspace where you drag components onto a canvas and connect them to form a pipeline.

- Use the ‘Agents at your service’ feature to run single or multi-agent systems with different roles, all of which are accessible to use the defined tools/components.

- Supports hundreds of data sources, tools, models, and vector stores by default, and allows the addition of custom tools or functions using Python.

- Export a visually designed flow in Langflow as a JSON file embeddable into a Python application or into a larger, code-based project.

Pricing

Langflow is open-source and free to use. For teams that prefer not to self-host, Langflow Cloud also offers a free account to get started. There is no subscription fee for the software itself. Instead, the total cost of running Langflow comes from the underlying infrastructure and services you connect to it.

Pros and Cons

Langflow bridges the gap between prototyping and deployment by offering an accessible UI that still allows for deep code integration. Its ‘batteries included’ approach provides a wide range of connectors for most data sources, while its connection to the LangChain ecosystem ensures it receives continual updates.

However, Langflow faces challenges common to visual builders, including latency and scaling issues under heavy loads. Customization can be limited compared to code-first frameworks, and its capabilities are ultimately constrained by the abstractions and limitations of LangChain itself.

6. Prefect + ControlFlow

Prefect is a popular general-purpose workflow orchestration tool, and ControlFlow is an open-source extension from the Prefect team focused on orchestrating AI agent workflows. Together, Prefect and ControlFlow let you orchestrate LLM agents with the same rigor as traditional ETL pipelines.

Features

- Structure AI workflows into manageable, observable tasks with clear objectives and measurable outcomes. You can create discrete tasks, assign multiple AI agents to each task, and combine multiple tasks into a flow.

- Return structured, Type-safe results supported by Pydantic that bridge the gap between the probabilistic nature of LLMs and the deterministic needs of traditional software.

- Create dynamic agent flows that support spawning new tasks or adjusting the path based on immediate results.

- Inherit Prefect’s production-grade orchestration features like advanced scheduling, automatic retries, detailed logging, and a rich observability dashboard.

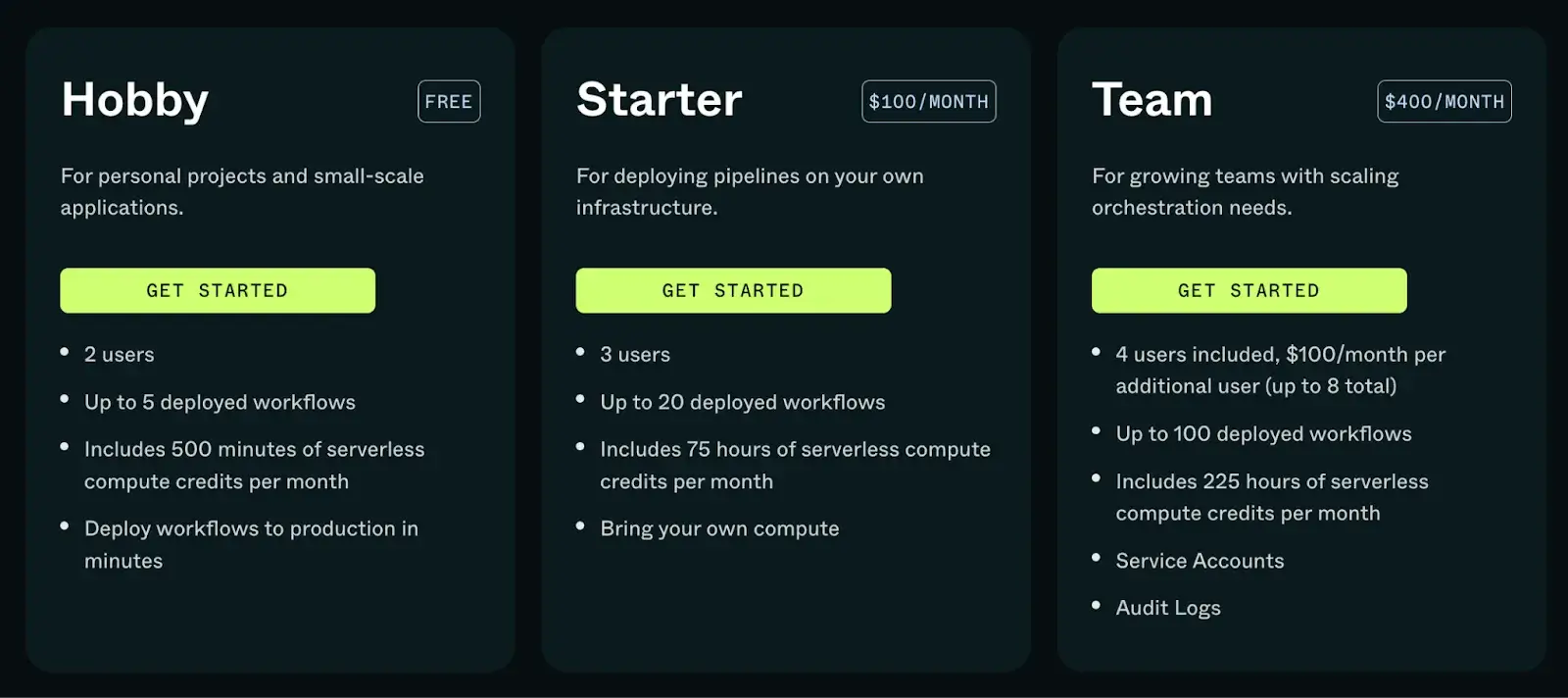

Pricing

The ControlFlow framework is part of the open-source Prefect library and is free to use. The Prefect cloud platform offers a generous free plan and four paid plans:

- Starter: $100 per month

- Team: $400 per month

- Pro and Enterprise: Custom pricing

📚 Also read: Prefect pricing

Pros and Cons

The combination of Prefect and ControlFlow brings production-grade orchestration to LLM agents. Its task-centric approach enforces good software engineering practices. Also, because it’s code-first, everything is version-controllable and testable, which appeals to software engineers.

On the downside, using Prefect + ControlFlow can feel heavier-weight for simple use cases. Also, there’s no fancy GUI for designing agent logic; you are writing Python code, albeit with powerful libraries. Some users have reported a steep learning curve due to the platform’s code-first terminology and concepts.

7. Microsoft Agent Framework

Microsoft Agent Framework is an open-source SDK and runtime from Microsoft for building sophisticated multi-agent AI systems. Announced in late 2025, it actually combines ideas from two earlier AI projects: the enterprise-ready features of Semantic Kernel and the experimental multi-agent orchestration ideas from AutoGen.

Features

- Use the latest multi-agent patterns like Magnetic One and orchestrate agents into Workflows.

- Provides a graph-based workflow to orchestrate multi-step processes with explicit control, including features like persistent state, checkpointing for long-running tasks, and built-in error handling.

- Has native support for OpenTelemetry for monitoring, integration with Microsoft Entra ID for security, and a suite of responsible AI tools like prompt shields and task adherence monitoring.

- Uses Model Context Protocol (MCP) and Agent-to-Agent (A2A) communication to dynamically discover and use tools across different platforms.

- Offers pluggable memory and knowledge with built-in connectors for various vector stores, knowledge bases, and a unified abstraction for memory.

Pricing

The Microsoft Agent Framework itself is open-source and free to use. Since Microsoft backs it, they may eventually offer it as part of Azure services, but the framework itself is MIT-licensed.

Pros and Cons

The key strength of the Microsoft Agent Framework is its enterprise readiness, especially for organizations already invested in the Microsoft and Azure ecosystem. It’s one of the first frameworks to support both LLM-driven orchestration and deterministic orchestration in the same system. The built-in compliance and observability features address many concerns companies have with autonomous agents.

On the con side, because it’s new, the framework may not be as battle-tested by the community as some others. There’s a steep learning curve to understand all the moving parts. Additionally, while it’s open-source, it’s obviously geared to work best with Azure services; if you’re not in the Microsoft ecosystem, some features (like connector libraries or deployment pipelines) might be less applicable.

8. Haystack

Haystack is an open-source Python framework from deepset for building production-grade LLM applications. It has a strong focus on semantic search and advanced RAG. Its orchestration capabilities are centered around composable and modular pipelines that allow for the construction of highly customized and scalable AI workflows.

Features

- Assembles components like LLMs and tools into a directed acyclic graph (DAG) that supports branches and loops to orchestrate complex workflows.

- Leverages a standardized function-calling interface for LLMs to invoke tools, enabling advanced strategies like hybrid retrieval and self-correction loops.

- Provides a rich library of pre-built components for tasks like retrieval, generation, ranking, routing, and tool invocation.

- Offers robust retrieval and indexing by supporting multiple vector databases (FAISS, Weaviate, Pinecone), document stores, and hybrid search capabilities.

- Supports serialization of the entire pipeline into a YAML file, which simplifies version control and team collaboration.

- Includes a built-in evaluation framework to automate QA testing on sample queries, helping you measure and improve your pipeline's accuracy.

Pricing

Haystack is an open-source framework and is free to use. Deepset also offers a commercial ‘deepset AI Platform,’ which includes a free ‘Studio’ tier for prototyping and custom-priced Enterprise plans./

Pros and Cons

Haystack’s production-first design makes it a strong choice for building reliable and scalable search and RAG applications. For agentic workflows, having a solid retrieval foundation is a significant advantage. It’s also very modular and customizable: you can easily swap out components, making experimentation straightforward.

On the con side, Haystack’s approach can be somewhat rigid for highly dynamic agent behaviors. It’s great when you can map out the pipeline of steps, but if you want a free-form agent that decides an arbitrary sequence of actions, Haystack may present a steep learning curve.

9. CrewAI

CrewAI is a Python framework designed for orchestrating role-playing, autonomous AI agents. Its core orchestration philosophy is to model workflows as a ‘crew’ of collaborating agents. Each agent is given a specific role, a goal, and a set of tools, and they work together to accomplish complex tasks.

📚 Also read: CrewAI alternatives

Features

- Orchestrate multi-agent LLM workflows using role-based configurations where an agent is assigned a specific role, goal, and expertise, and they autonomously collaborate with other agents to reach a common output.

- Features a library of over 40 built-in tools that agents can utilize, including web search, code execution, web scraping, and math calculators.

- Supports different process models, like sequential, round-robin, and hierarchical, allowing for the creation of complex and adaptive workflows.

- Create detailed trace logs for every step, message, and tool used in a multi-agent session, with the option to replay a session with modifications.

Pricing

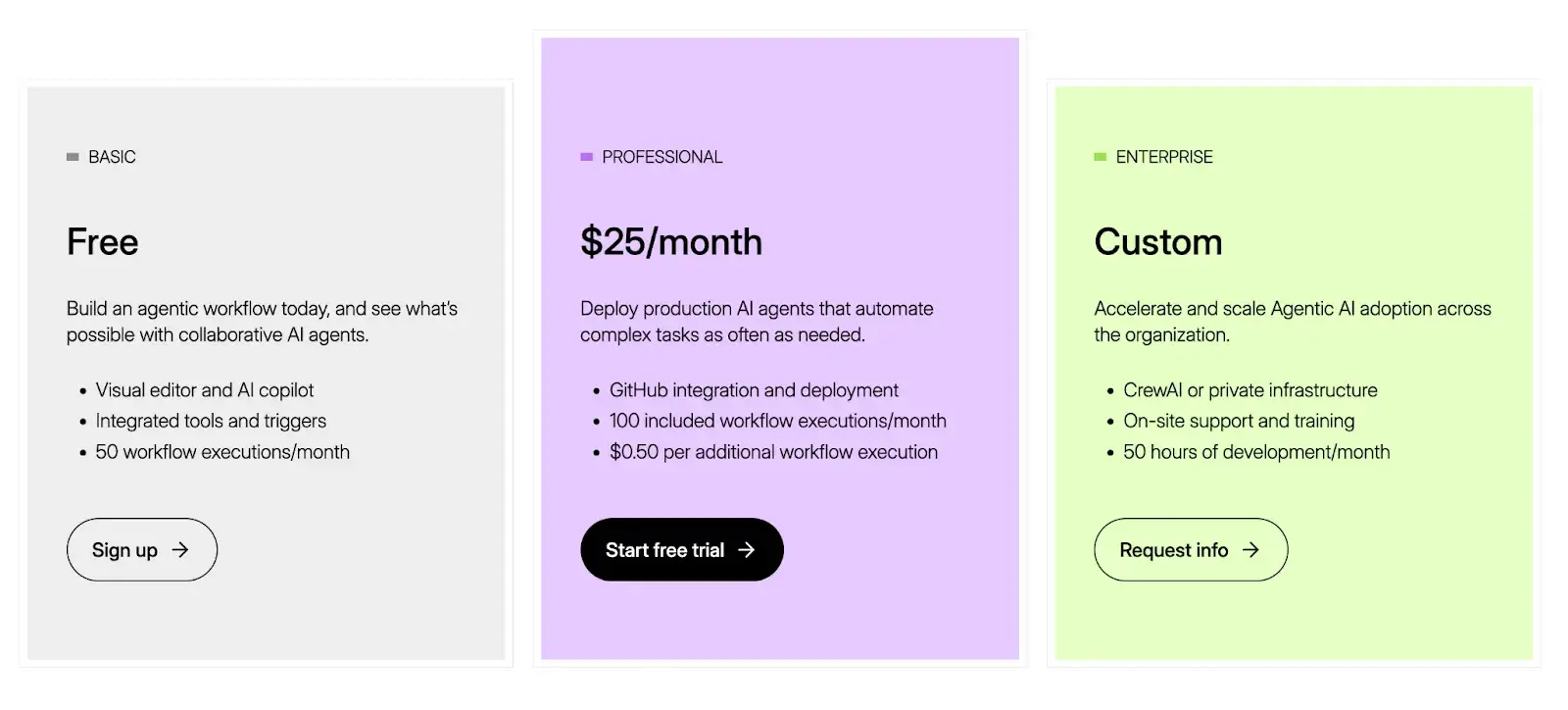

CrewAI offers a free, open-source framework. For a managed experience, it provides three pricing plans:

- Basic: A free tier that includes 50 workflow executions per month.

- Professional: $25 per month, which includes 100 executions, with additional executions available on a pay-as-you-go basis.

- Enterprise: Custom pricing for large-scale deployments that require advanced support, security, and infrastructure options.

Pros and Cons

The biggest pro is CrewAI’s structured approach. Its high-level, role-based abstraction makes it easy to set up multi-agent systems and avoid the unpredictability that plagues multi-agent interactions. The built-in replay and trace functionality is also a big plus; few frameworks make debugging multi-agent reasoning this convenient.

The main drawback is that CrewAI is optimized for workflows that naturally fit a sequential pipeline of roles. If your use case requires more free-form dialogue between agents or non-linear interactions, CrewAI might feel too constrained. Also, it’s a relatively newer player, might not have as large a community or as many integrations as something like LangChain-based tools.

Which LLM Orchestration Tool is the Right Choice for You?

Choosing an orchestration framework is a critical decision that will shape your development process and the capabilities of your final application.

Here is a decision framework to guide your selection:

✅ For Unified ML and LLM Pipelines: ZenML is best suited for teams that want to orchestrate both traditional ML and LLM workflows within the same production framework. Its infrastructure-agnostic pipelines, artifact versioning, and upcoming tracing features make it ideal for scaling RAG and agentic workflows without losing reproducibility.

✅ For Complex, State-Driven Agents: LangGraph and Prefect ControlFlow are top contenders. They provide the low-level control necessary for building resilient, task-oriented agentic workflows.

✅ For Collaborative, Role-Playing Automation: CrewAI offers a high-level, intuitive abstraction that is ideally suited for this paradigm.

If you’re interested in taking your AI agent projects to the next level, consider joining the ZenML waitlist. We’re building our first-class support for agentic frameworks (like LangGraph, CrewAI, and more) within ZenML, and we’d love early feedback from users pushing the boundaries of what AI agents can do. With ZenML, you can seamlessly integrate whichever agent framework you choose into robust, production-grade workflows. Join our waitlist to get started.👇