Temporal has long been the gold standard for durable execution in microservices. However, for machine learning and data teams, Temporal’s developer-centric and infrastructure-heavy approach often feels like a mismatch.

If you are hitting walls with Temporal’s strict determinism or operational complexity, this guide is for you.

We analyze the 9 best Temporal alternatives, ranging from data-first orchestrators to MLOps-native platforms, to help you ship efficient AI agents at scale.

Overview

- Why Look for Alternatives: Temporal optimizes for deterministic workflows, which can feel restrictive for probabilistic ML systems. Its strict determinism requirement and heavy infrastructure may slow iteration when building fast-moving AI workflows.

- Who Should Care: ML engineers, Data Scientists, and AI teams who need to orchestrate LLM agents but want a Python-first experience with built-in experiment tracking and data lineage.

- What to Expect: A detailed breakdown of 9 Temporal alternatives, from MLOps platforms like ZenML to data orchestrators like Airflow and Prefect, evaluated on their ability to handle AI agent workloads.

The Need for a Temporal Alternative

While Temporal excels at ensuring code runs to completion, it often creates friction for AI development lifecycles.

Here are three reasons why teams look elsewhere:

1. Heavy Operational and Infrastructure Overhead

If you self-host Temporal, you operate the Temporal Server plus Persistence and Visibility stores (Visibility can be backed by SQL databases or Elasticsearch, depending on your setup). If you use Temporal Cloud, Temporal manages that backend infrastructure for you.

For an ML team that simply wants to chain an LLM call with a database lookup, maintaining this infrastructure is overkill. It shifts focus from building intelligent agents to managing distributed systems.

2. Steep Learning Curve and Strict Determinism

Temporal requires workflow code to be deterministic. You should not call non-deterministic functions (such as unseeded random or UUID generators, or system-time APIs) directly. Instead, use Temporal-provided mechanisms such as Activities or Side Effects (depending on SDK and use case) so that results are recorded and replays stay deterministic.

This works well for microservices, but less so for scripting AI agents, where iteration speed is key. The rigid distinction between Workflows and Activities forces you into a specific coding style that can feel alien if you are used to writing plain Python scripts.
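To make the replay constraint concrete, here is a toy sketch in plain Python. It is not Temporal's SDK: the `History` class and its `record_or_replay` method are hypothetical names invented for this illustration. It only shows why non-deterministic values have to be recorded once and read back on replay.

```python
import random
import uuid


class History:
    """Toy event history: records results on first run, replays them afterwards."""

    def __init__(self):
        self.events = []  # results recorded during the first execution
        self.cursor = 0   # position of the next event to replay

    def record_or_replay(self, fn):
        # On replay, return the recorded result instead of calling fn again.
        if self.cursor < len(self.events):
            result = self.events[self.cursor]
        else:
            result = fn()
            self.events.append(result)
        self.cursor += 1
        return result

    def rewind(self):
        # Start a replay from the beginning of the recorded history.
        self.cursor = 0


def workflow(history):
    # Non-deterministic values go through the history rather than being
    # called directly in the workflow body.
    request_id = history.record_or_replay(lambda: str(uuid.uuid4()))
    sample = history.record_or_replay(lambda: random.random())
    return request_id, sample


history = History()
first_run = workflow(history)
history.rewind()
replayed_run = workflow(history)  # same values as first_run, read from history
```

If `workflow` called `uuid.uuid4()` directly, the replayed run would produce a different ID than the first run, which is the kind of divergence the determinism rule exists to prevent.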

3. Not Built as a Data/ML-First Orchestrator

Temporal is designed for service orchestration, not data flow. As a result, it has no native concepts for datasets, models, experiment tracking, or other MLOps processes.

Temporal doesn’t provide first-class concepts or UI for prompt/dataset/model lineage or experiment tracking. You can record identifiers yourself, but you typically need an additional MLOps or lineage system to make that traceability easy and standardized.

It misses the lineage and versioning crucial for debugging probabilistic AI systems.
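As a rough idea of what recording identifiers yourself can look like, the sketch below hashes a prompt and a dataset snapshot and bundles them with a run ID. All names here (`fingerprint`, `lineage_record`, the run ID, and the model name) are hypothetical and chosen for illustration only. A real setup would also need somewhere durable to store and query these records.

```python
import hashlib
import json


def fingerprint(text: str) -> str:
    """Return a short, stable content hash for a prompt or dataset snapshot."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()[:12]


def lineage_record(run_id: str, prompt: str, dataset: str, model_name: str) -> dict:
    """Bundle the identifiers a later debugging session would need."""
    return {
        "run_id": run_id,
        "prompt_hash": fingerprint(prompt),
        "dataset_hash": fingerprint(dataset),
        "model": model_name,
    }


record = lineage_record(
    run_id="run-001",  # hypothetical run identifier
    prompt="Summarize the ticket: {text}",
    dataset="ticket_id,text\n1,Printer offline\n",
    model_name="example-llm-v1",  # hypothetical model name
)
print(json.dumps(record, indent=2))
```

Doing this by hand for every workflow is the standardization burden that a dedicated MLOps or lineage system is meant to remove.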

A top Reddit comment describes Temporal as feeling more like a Celery/queue framework than a traditional data orchestrator.

Evaluation Criteria

We evaluated these alternatives based on the specific needs of AI agent orchestration:

- Operational Overhead and Deployment Model: We looked for tools that are easy to deploy and maintain. We prioritized platforms that offer a simple pip install experience or managed cloud options, allowing teams to start small and scale without a dedicated DevOps team.

- Performance, Scalability, and Workload Fit: We assessed how well each tool handles agent workload patterns, including support for async execution, parallelism, and event-driven triggers.

- Observability, UI, and Debugging: We evaluated each tool's UI and logging capabilities. Does it provide visual DAGs? Can you trace the inputs and outputs of every LLM call? Can you compare different runs to identify regression?

- Governance, Access Control, and Audit: We checked for features like Role-Based Access Control (RBAC), audit logs, and the ability to secure credentials and sensitive customer data.

What are the Top Alternatives to Temporal?

Here are the top 9 alternatives to Temporal for shipping AI agents:

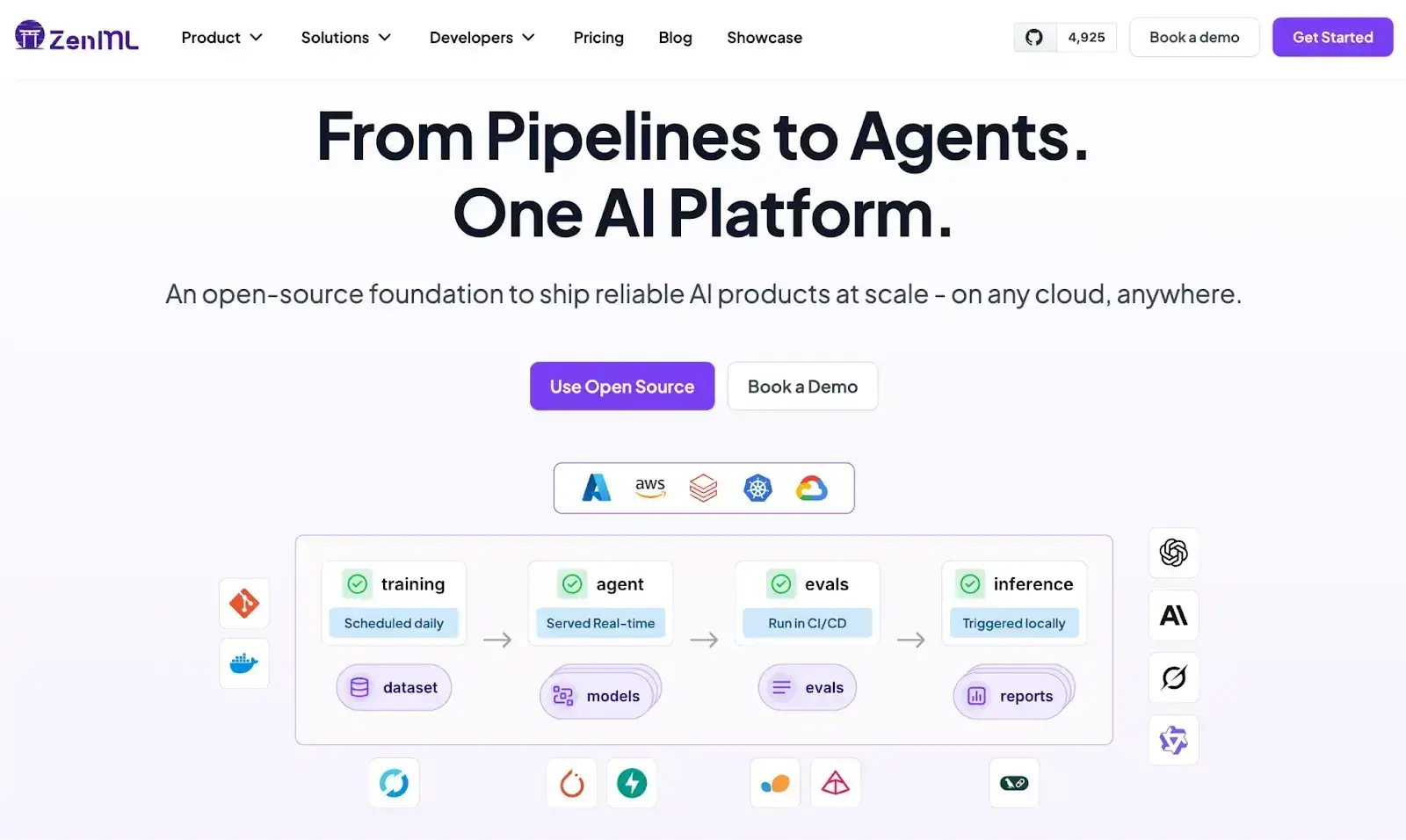

1. ZenML

ZenML is an open-source MLOps framework built to run and manage machine learning workflows with full context. It is a strong alternative to Temporal when workflows are tied to models, data, and experiments rather than generic service orchestration.

Features

- ML-native pipelines and steps are designed around training, evaluation, and inference workflows.

- Artifact tracking for datasets, models, prompts, and outputs with end-to-end lineage.

- Run and experiment metadata to compare executions and trace regressions.

- Pluggable infrastructure stacks that let the same pipeline run locally, on Kubernetes, or in the cloud.

- Versioned promotion of ML assets from experimentation to production.

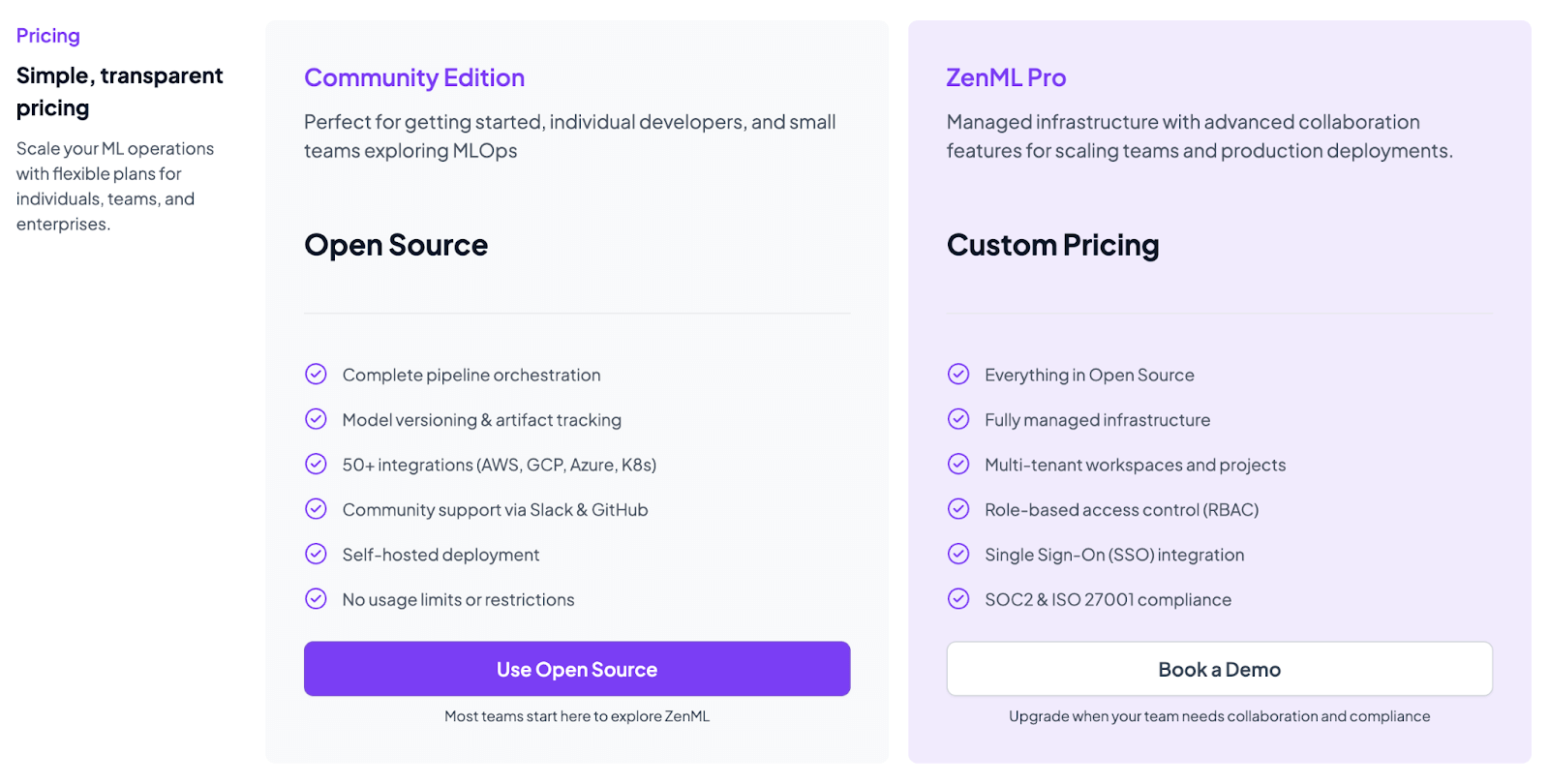

Pricing

ZenML is free and open-source under the Apache 2.0 License. The core framework, including tracking, orchestration, and ZenML dashboard, can be fully self-hosted at no cost.

For teams that want a managed setup or enterprise features, ZenML offers paid plans through ZenML Pro with pricing based on deployment and scale. The plan adds SSO, role-based access control, premium support, and hosted infrastructure, while all core MLOps functionality remains available in the open-source version.

You can start without paying and move to a paid plan only when collaboration or operational ownership becomes a bottleneck.

Pros and Cons

ZenML’s main strength is that orchestration and MLOps live in the same system. Every pipeline run carries information about the data, models, and artifacts involved, which makes debugging and reviewing changes possible without stitching together multiple tools.

Another advantage is standardization across ML teams. Pipelines follow a consistent structure while still allowing teams to choose where and how they run them.

The downside is focus. ZenML is built for ML workflows, so teams looking for a general-purpose workflow engine for non-ML services may find Temporal better aligned with that use case.

2. Apache Airflow

Airflow is the industry standard for programmatic workflow authoring. While traditionally batch-oriented, its massive ecosystem makes it a viable choice for teams who want to integrate AI agents into larger data processing pipelines without adopting a new tool.

Features

- Define Python-based DAGs that let you model tasks, branches, and retries cleanly for versioned, testable workflows.

- Scale workloads across Celery or Kubernetes executors to run many tasks in parallel with minimal overhead.

- Monitor pipelines through a visual UI that surfaces DAG graphs, logs, statuses, and retry controls in one place.

- Connect to cloud services and data systems using a broad library of operators spanning AWS, GCP, Azure, Spark, and more.

- Schedule workflows with cron rules, sensors, or backfills to automate recurring runs or recover missed executions.

Pricing

Apache Airflow is fully open-source (Apache 2.0 license) and free to use.

Pros and Cons

Airflow’s pros are its maturity and community. It has proven stability, a huge ecosystem of connectors, and a familiar Python-centric workflow model for data teams. The built-in UI and monitoring are well-developed, and many engineers have experience with it.

However, Airflow was designed for scheduled batch jobs, not the low-latency, event-driven patterns typical of conversational agents. It requires setting up and maintaining its scheduler, database, and worker components – a nontrivial DevOps effort. Airflow’s static DAG paradigm also makes it less well-suited to highly dynamic or streaming agent flows.
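To illustrate what a static DAG means in practice, here is a minimal sketch that uses Python's standard-library `graphlib` rather than Airflow's own API. The task names are hypothetical. The point is that the full graph is declared before anything runs, so the execution order is fixed up front.

```python
from graphlib import TopologicalSorter

# Each key depends on the tasks in its value set; the graph is fixed up front.
dag = {
    "fetch_documents": set(),
    "embed_documents": {"fetch_documents"},
    "call_llm": {"embed_documents"},
    "store_answer": {"call_llm"},
}

# The order is derived from the declared dependencies before any task executes.
execution_order = list(TopologicalSorter(dag).static_order())
print(execution_order)
```

An agent that decides at runtime how many tool calls to make, or which step comes next, does not map as naturally onto a graph that has to be known in advance.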

📚 Read about how Airflow compares to Temporal.

3. Prefect

Prefect is a Python-native workflow platform that is highly effective for AI agents that require dynamic loops, conditional logic, and responsiveness. It keeps things simple: you define workflows as regular Python functions with @flow and @task, and Prefect handles orchestration, retries, and scheduling in the background.

Features

- Execute asynchronous Python code natively to handle IO-bound LLM calls efficiently without blocking resources.

- Trigger agent responses instantly via webhooks or event streams to support real-time user interactions.

- Inspect task outputs and logged artifacts, including markdown, tables, or JSON, directly in the UI.

- Route agent tasks to specific infrastructure, such as GPU instances, using simple work pool configurations.

- Coordinate LLM or multi-agent steps using Prefect’s Agent model, including optional human-approval gates.
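The async point in the list above is easiest to see with a small example. The sketch below uses only the standard-library `asyncio` module, not Prefect's API, and `fake_llm_call` is a hypothetical stand-in for a real LLM request. It shows the underlying pattern: IO-bound calls wait concurrently instead of one after another.

```python
import asyncio


async def fake_llm_call(prompt: str, delay: float = 0.1) -> str:
    # Stand-in for an IO-bound LLM request; the sleep yields to the event loop.
    await asyncio.sleep(delay)
    return f"response to: {prompt}"


async def run_agent_step(prompts: list[str]) -> list[str]:
    # gather() runs the calls concurrently and returns results in input order.
    return await asyncio.gather(*(fake_llm_call(p) for p in prompts))


responses = asyncio.run(run_agent_step(["plan", "search", "summarize"]))
print(responses)
```

With three calls of 0.1 seconds each, the total wait is close to 0.1 seconds rather than 0.3, because the calls overlap.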

Pricing

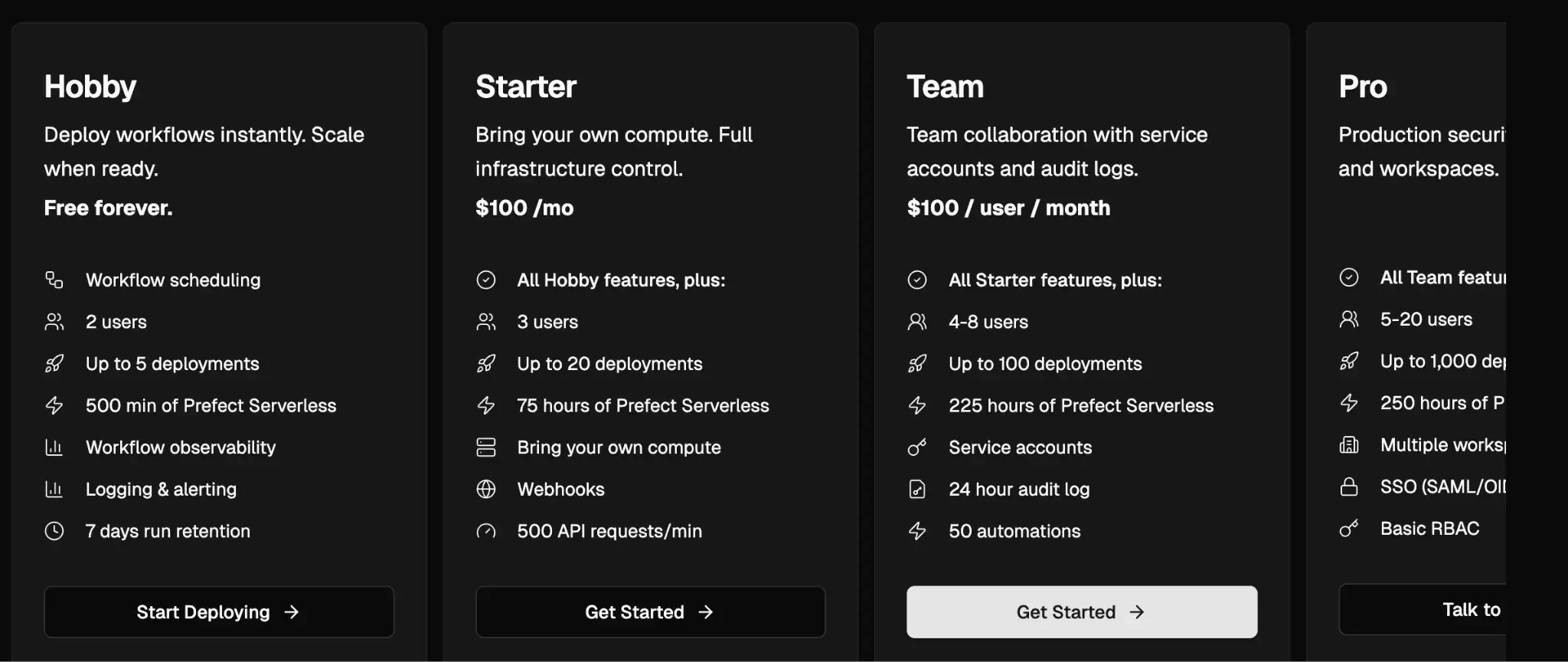

Prefect is open-source (Apache 2.0) for self-hosting. Prefect Cloud offers a free tier for individuals, with paid plans:

- Starter: $100 per month (Up to 3 users)

- Team: $100 per user per month

- Pro: Custom pricing

- Enterprise: Custom pricing

Pros and Cons

Prefect shines when developer speed and workflow control matter. Workflows are written as regular Python code, which makes branching logic, retries, and event-driven execution easy to express and reason about. It also provides a clear UI for inspecting task-level execution, which helps during development and operations.

The limitation is MLOps depth. Prefect runs workflows reliably, but it does not track models, datasets, or lineage as first-class concepts, so ML teams usually need an additional system to understand how outputs were produced.

📚 Relevant read: Prefect vs Airflow vs ZenML

4. Dagster

Dagster is best for data teams that treat their AI agents as producers and consumers of data assets. It introduces the idea of ‘assets’, like data outputs, tables, or models, as first-class citizens in workflows. It is a popular alternative to Temporal because it bundles observability and data lineage into the core experience.

Features

- Run pipelines locally or on isolated branch deployments to test changes before promoting them to production.

- Model agent knowledge bases and outputs as software-defined assets to track data freshness and quality.

- Trigger agent runs based on upstream data updates rather than rigid schedules to ensure context relevance.

- Configure and test agent runs manually with varying parameters using the built-in Launchpad UI.

- Abstract storage logic for complex inputs and outputs automatically with pluggable IO managers.

- Connect to cloud services and data systems through integrations spanning AWS, GCP, Snowflake, Spark, and more.

Pricing

Dagster itself is free and open-source. For managed offerings, Dagster Cloud offers paid plans:

- Solo: $10 per month

- Starter: $100 per month

- Pro: Custom pricing

Pros and Cons

Dagster’s pros include its strong developer ergonomics and data-centric features. Its type system and local testing support lead to fewer runtime surprises. And a polished visual UI makes monitoring and debugging straightforward. For ML teams, the built-in data quality and lineage tools are a major plus.

However, its asset-centric mental model comes with a learning curve for engineers who just want to run a simple script. It is also less suited to purely conversational, stateless agents that don't produce persistent data artifacts. Some advanced features, like certain connectors or team collaboration features, are only available in Dagster Cloud, not the OSS version.

5. Kestra

Kestra is an event-driven orchestrator that uses YAML to define workflows. It is particularly powerful for stitching together heterogeneous systems, like a Python agent, a SQL query, and a Node.js API, without managing complex worker environments.

Features

- Define complex agent logic, including retries and conditionals, using declarative YAML files accessible to non-engineers.

- Run Python or Node.js scripts directly within the worker to avoid complex packaging and deployment steps.

- Initiate agent workflows instantly upon external events using built-in webhook and Kafka triggers.

- Compose modular multi-agent systems by calling and nesting distinct flows as reusable sub-processes.

Pricing

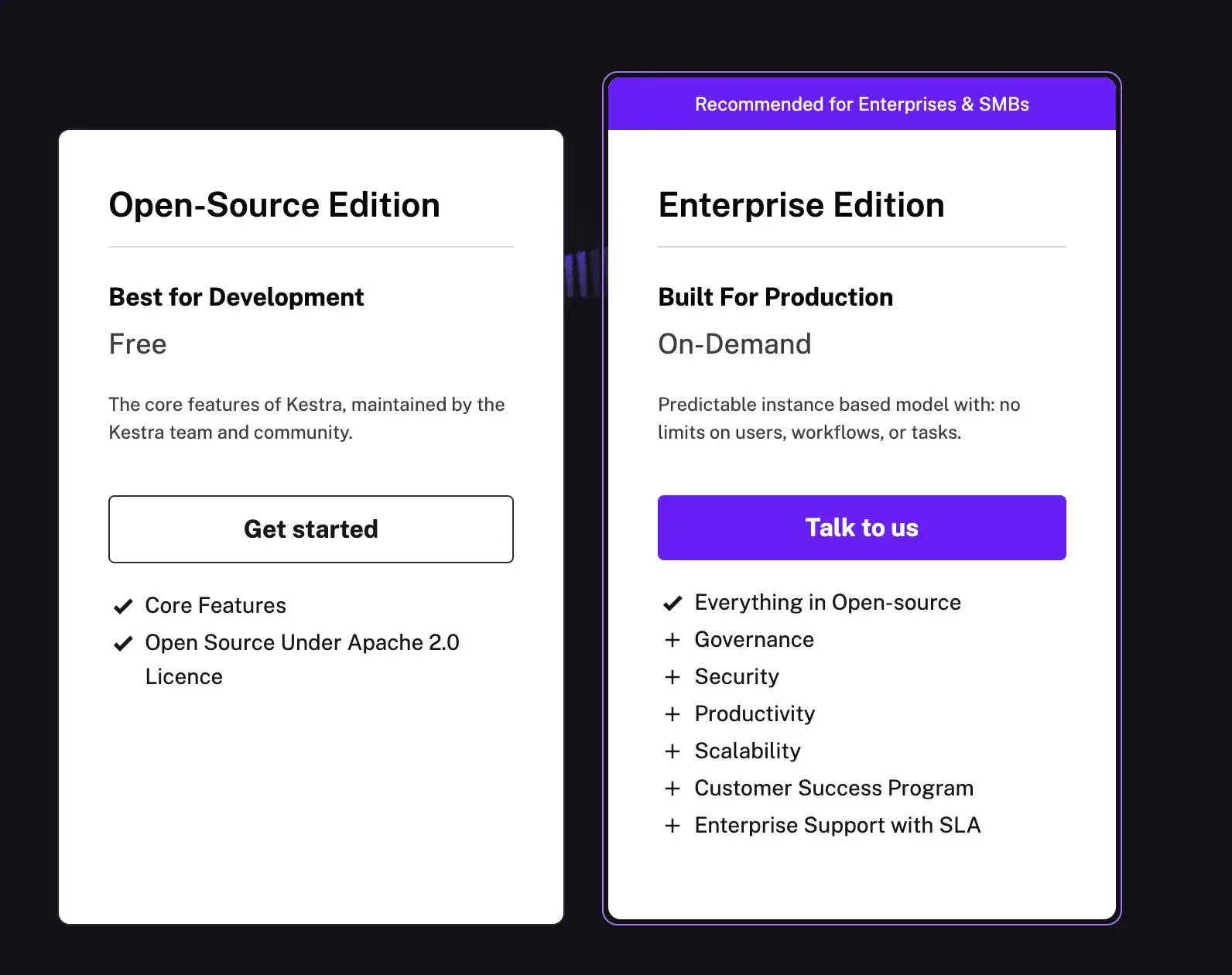

Kestra is entirely open-source (Apache 2.0) and free to use. There is an enterprise edition that adds features like advanced governance, security, and support; this is sold on an on-demand, per-instance basis.

Pros and Cons

Kestra’s strengths are its lightweight deployment model and flexibility. Teams find it easy to adapt with a zero vendor lock-in promise. The event-driven design is particularly good for streaming or API-triggered pipelines. Compared to building custom Airflow DAGs, defining a Kestra workflow in YAML can be much simpler.

On the downside, Kestra is less well-known than Airflow or Prefect, so the community and ecosystem are smaller. Its UI is more basic, and features like complex cron dependencies may require more manual setup. Additionally, if you prefer code for complex logic, the YAML approach may feel limiting.

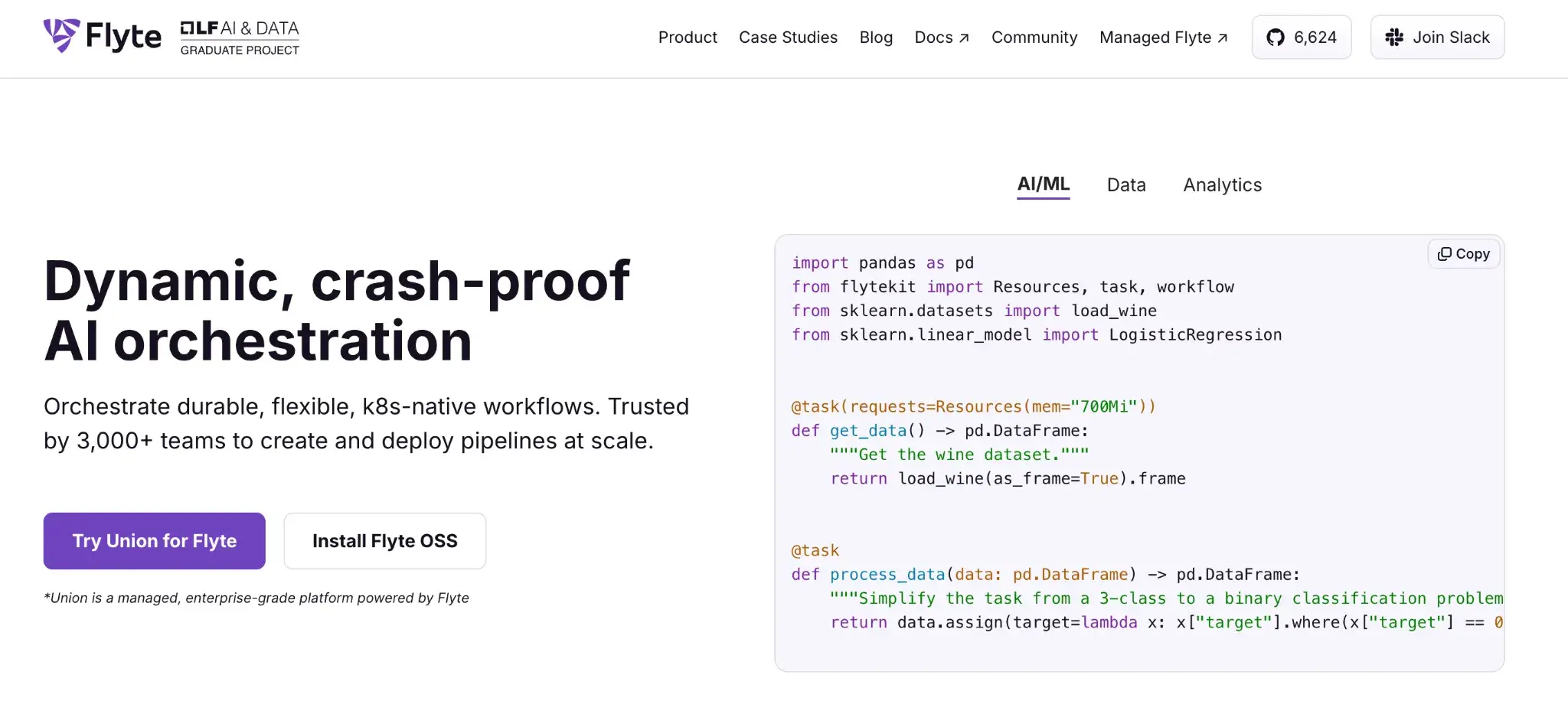

6. Flyte

Flyte is a Kubernetes-native orchestrator originally built at Lyft. It is designed for massive scale and reproducibility, which makes it a popular Temporal alternative for teams treating AI agents as mission-critical production software.

Features

- Build pipelines in pure Python and use loops, maps, and branches to create dynamic, data-driven workflows.

- Trigger runtime decisions with native conditional logic that adapts the workflow to intermediate results.

- Recover from failures automatically through state persistence that restarts workflows from the last successful step.

- Run tasks locally or on Kubernetes with identical code so development and production behave the same way.

- Reduce execution latency with warmed container reuse and live debugging in the Union AI edition.
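The recovery behavior described in the list above can be sketched in a few lines of plain Python. This is a toy model, not Flyte's implementation or API: `run_with_checkpoints` is a hypothetical helper, and an in-memory dict stands in for durable storage.

```python
def run_with_checkpoints(steps, checkpoint):
    """Run steps in order, skipping any whose result is already checkpointed."""
    for name, fn in steps:
        if name in checkpoint:
            continue  # finished in an earlier attempt; do not redo the work
        checkpoint[name] = fn()
    return checkpoint


attempts = {"count": 0}
calls = []


def load_context():
    calls.append("load_context")
    return "context"


def flaky_llm_step():
    # Fails on the first attempt to simulate a transient error.
    attempts["count"] += 1
    if attempts["count"] == 1:
        raise RuntimeError("transient failure")
    return "answer"


steps = [("load_context", load_context), ("call_llm", flaky_llm_step)]
checkpoint = {}  # stands in for durable storage

try:
    run_with_checkpoints(steps, checkpoint)
except RuntimeError:
    pass  # first attempt fails after load_context has been checkpointed

run_with_checkpoints(steps, checkpoint)  # retry resumes at call_llm
```

On the retry, `load_context` is not executed again because its result is already stored; only the failed step reruns.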

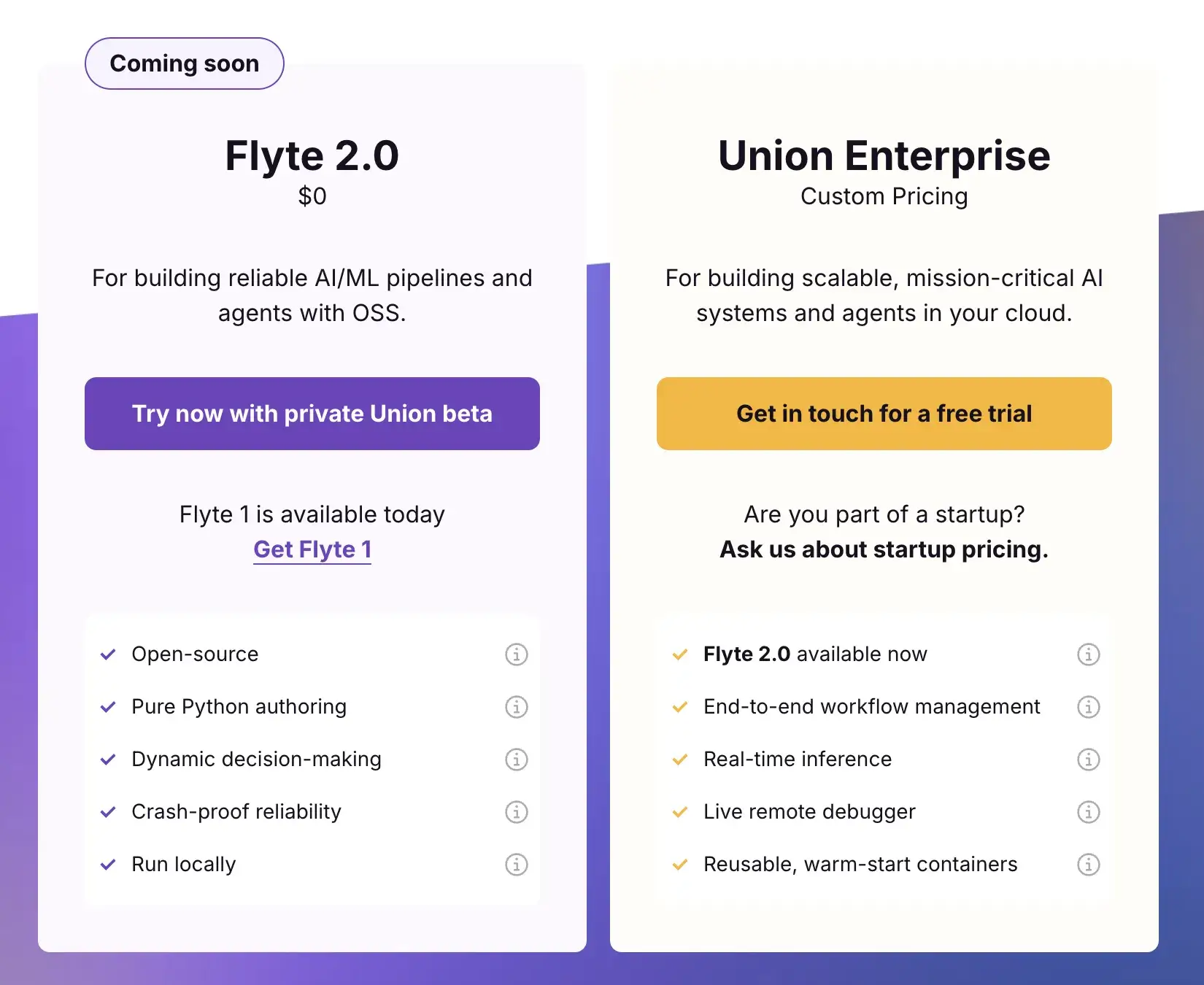

Pricing

Flyte is free and open-source at its core. Union.ai offers Flyte Enterprise, a fully-managed service for large deployments, but pricing for that is custom.

Pros and Cons

Flyte offers arguably the best reproducibility and scalability guarantees of any Temporal alternative on this list. Its type system prevents many common data bugs in agent pipelines. It also handles both batch and streaming ML workflows well, and many teams praise its data lineage and strong typing.

However, it requires Kubernetes and its own control plane, so there’s a higher operational cost compared to simple Python schedulers. If your team is not comfortable managing K8s clusters, Flyte is probably not the right fit, and lighter tools like Prefect or ZenML may suit you better.

📚 Relevant read: Flyte vs Airflow vs ZenML

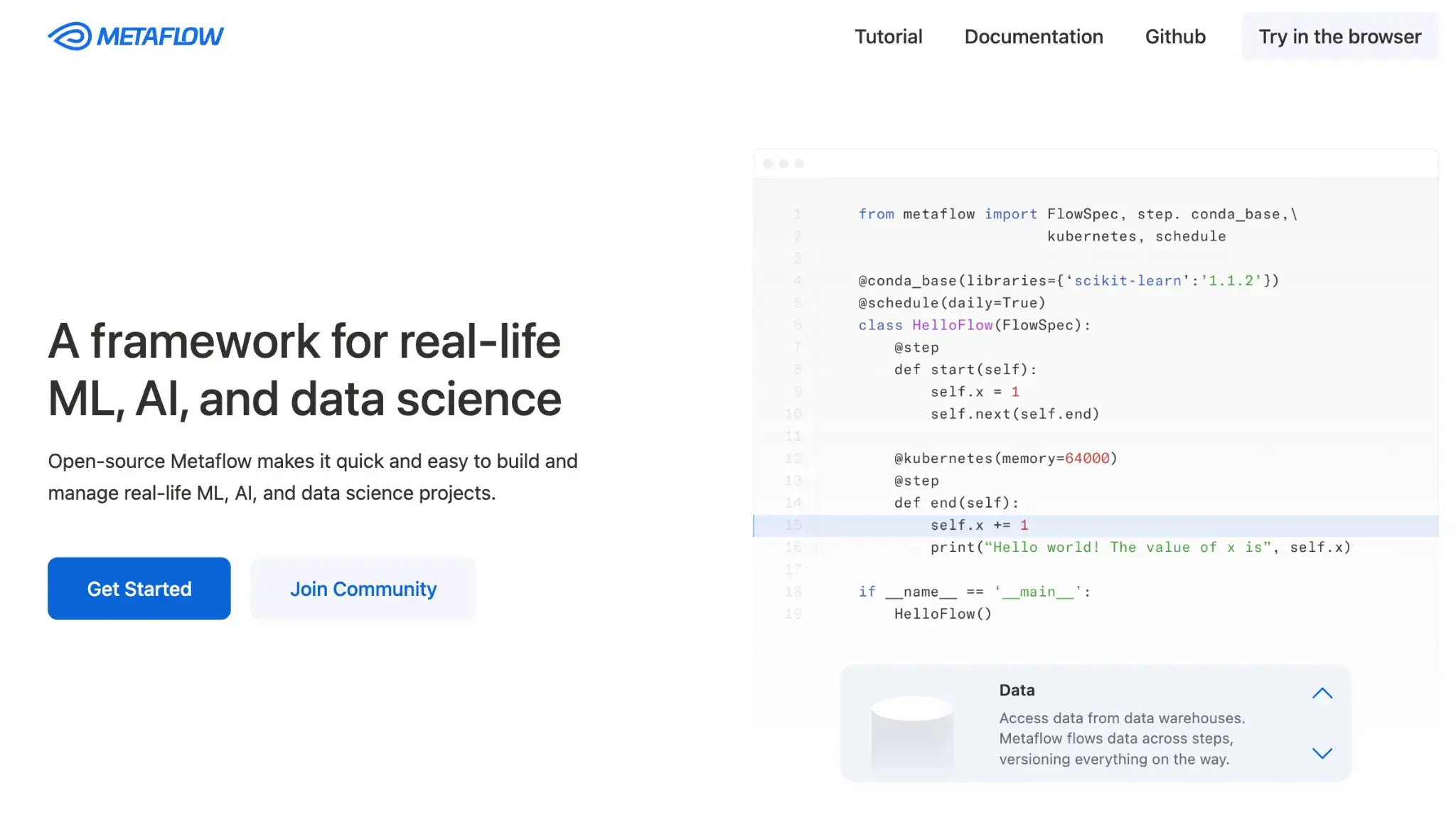

7. Metaflow

Metaflow is an open-source framework (originally from Netflix) for managing real-world data science projects and ML workflows. It lets you write workflows as pure Python code, then handles scaling, versioning, and execution for you.

Features

- Version every input, parameter, and output so each run is reproducible and easy to debug.

- Scale workflow steps to AWS Batch or Kubernetes with minimal code changes.

- Load data from large stores and keep datasets versioned alongside models for consistent lineage.

- Track runs, lineage, and comparisons through the Outerbounds UI when you need visual experiment management.

- Isolate agent runs in separate namespaces to manage production and development environments safely.

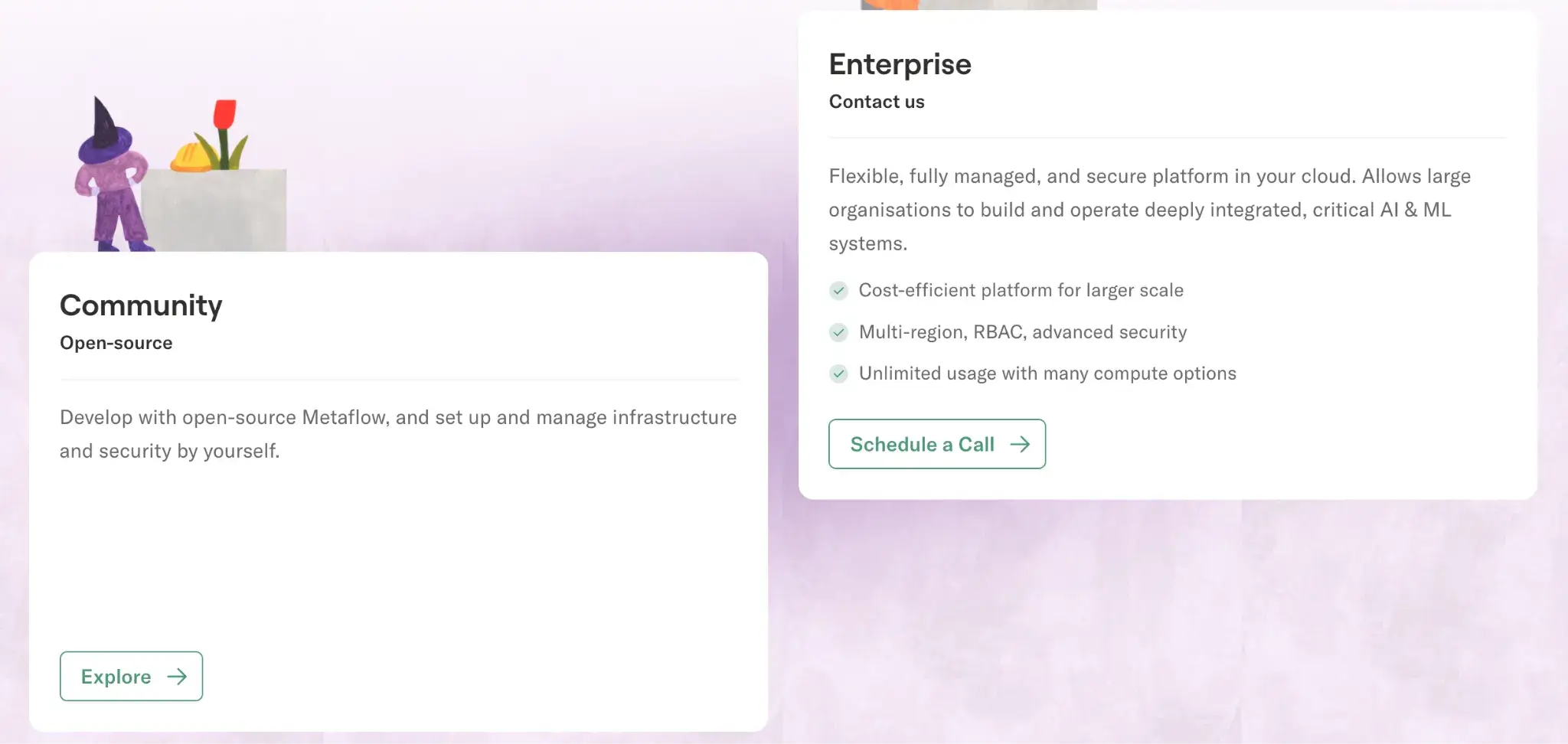

Pricing

Metaflow is open-source (Apache 2.0). Outerbounds offers a managed platform that provides a fully hosted control plane and enhanced security features.

Pros and Cons

Metaflow’s pros lie in its simplicity for data scientists. You can take a local notebook or script and scale it up with one command, without container specs or extra code. Automatic versioning of results is excellent for provenance. Since it was designed at Netflix, it works smoothly with AWS (Step Functions/EKS) and now Azure/GCP too.

Its cons include the fact that core Metaflow historically leaned AWS-specific, so multi-cloud and on-prem setups are newer. The out-of-the-box OSS offering has minimal UI. Also, advanced workflow patterns (dynamic DAGs, streaming triggers) are limited compared to tools like Prefect or Flyte. Finally, the managed service is relatively expensive, which may deter smaller teams.

8. Kubeflow

Kubeflow is an open-source ML platform built for Kubernetes, with modular tools for model training, hyperparameter tuning, and model serving at scale. Its Pipelines component lets you define container-based workflows in Python or YAML and run them on any K8s cluster.

Features

- Assemble container-based ML pipelines with the Kubeflow Pipelines SDK and reuse components across training, prep, or evaluation steps.

- Pass parameters and artifacts automatically as Kubeflow handles serialization and storage across workflow steps.

- Run tasks in parallel for large workloads and skip unchanged steps through built-in caching.

- Compare runs in the Pipelines UI to view logs, metrics, and resource usage for each execution.

- Deploy pipelines on any Kubernetes cluster and move them across environments with a portable YAML-based IR.
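Step caching, mentioned in the list above, generally comes down to keying a result on the step and its inputs. The sketch below is a generic illustration in plain Python, not Kubeflow Pipelines' actual caching logic. `cache_key`, `run_step`, and the in-memory `cache` dict are hypothetical names used only here.

```python
import hashlib
import json

cache = {}       # stands in for a persistent cache store
executions = []  # records which steps actually ran


def cache_key(step_name, params):
    # Identical step name and parameters always produce the same key.
    payload = json.dumps({"step": step_name, "params": params}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def run_step(step_name, params, fn):
    key = cache_key(step_name, params)
    if key not in cache:
        executions.append(step_name)
        cache[key] = fn(**params)
    return cache[key]


def clean(text):
    return text.strip().lower()


first = run_step("clean", {"text": "  Hello  "}, clean)
second = run_step("clean", {"text": "  Hello  "}, clean)  # cache hit, fn not called
third = run_step("clean", {"text": "  World  "}, clean)   # new inputs, fn runs again
```

Here the step body executes twice across three calls: once per distinct input.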

Pricing

Kubeflow is free and open-source (Apache 2.0). Google offers a fully managed option via Vertex AI Pipelines. On AWS, Kubeflow is commonly deployed via the open-source ‘Kubeflow on AWS’ distribution (typically on EKS), and you can integrate with managed services like SageMaker to run heavy jobs.

Pros and Cons

Kubeflow’s biggest advantage is its end-to-end Kubernetes-native ML stack. It covers your entire workflow within one ecosystem. Teams already on Kubernetes benefit from massive scalability and can leverage cloud credits or cluster autoscaling.

However, it is notoriously difficult to install and maintain. The operational burden is high, making it suitable mostly for large enterprises with dedicated platform engineering teams.

📚 Relevant read: Kubeflow vs MLflow vs ZenML

9. Argo Workflows

Argo Workflows is an open-source, container-native workflow engine for Kubernetes. Kubeflow Pipelines traditionally used it as its default workflow engine, though other backends have existed (for example, Tekton), and newer KFP versions emphasize a more backend-agnostic intermediate representation.

Features

- Define each workflow step as a container to run tasks in isolated pods with precise scaling across your cluster.

- Run workflows on any Kubernetes setup and rely on native autoscaling to push thousands of parallel tasks when needed.

- View workflow graphs, logs, and statuses in a clean web UI or interact through the CLI or Python client.

- Move artifacts through S3, GCS, HTTP, or Git so steps can pull inputs or push outputs with minimal setup.

- Reuse workflow templates and CronWorkflows to standardize patterns and automate recurring ML or CI tasks.

Pricing

Argo Workflows is fully open-source (Apache 2.0) and free to use. As a CNCF project, there are no licensing costs. You only pay for the Kubernetes infrastructure it runs on.

Pros and Cons

Argo is incredibly robust and fits perfectly into a Kubernetes-centric DevOps culture. It is lightweight compared to Kubeflow and highly reliable.

The downside is the developer experience. Writing lengthy YAML files to define simple Python agent loops can be tedious, and debugging YAML errors can be annoying. It lacks the data awareness of tools like Dagster or the experiment tracking of ZenML, placing it firmly in the 'infrastructure' category rather than 'ML platform.'

The Best Temporal Alternatives for ML and Data Teams

Choosing the right alternative depends on where your friction with Temporal lies:

- For Data and ML Teams: ZenML allows you to orchestrate agents while maintaining full visibility into the models and data that power them, closing the loop between development and production.

- For Python Developers: Prefect offers the closest experience to 'pure Python' coding while handling the resilience and scheduling logic you need for agents.

- For RAG and Data Assets: Dagster is the best choice if your agents are heavily dependent on data pipelines and asset freshness.

- For Kubernetes Shops: Flyte provides the type safety and scalability needed for large-scale enterprise agent deployment.

If you are ready to move beyond just running code and want to build agents that are reproducible, tracked, and integrated into your data stack, check out ZenML’s open-source plan.