ClearML positions itself as an end-to-end MLOps platform. It handles experiment tracking, manages your datasets, and orchestrates training pipelines, all from a single dashboard.

The problem? That unified approach often creates more friction than it solves.

We've seen ML teams struggle with ClearML's enterprise limitations, run into conflicts with their existing tools, and hit walls trying to customize workflows for their specific needs. The platform's governance features can feel like a black box, and its opinionated architecture doesn't always play well with the rest of your stack.

If you've run into these issues, you're not alone. Many teams eventually reach a point where ClearML's constraints outweigh its convenience, and that's when they start looking elsewhere.

This article breaks down 10 solid alternatives to ClearML: tools that give you better experiment tracking along with more flexible pipeline orchestration. Whether you need something lightweight for tracking runs or a full-featured platform that actually integrates with your workflow, we've covered the options that real ML engineers are switching to.

Too busy today? Here's a quick overview👇

Quick Overview of ClearML Alternatives

- Why Look for Alternatives: ClearML’s ‘do-it-all’ architecture often conflicts with modern, modular stacks. Self-hosting the full stack with API, web server, DB, and cache is a maintenance burden, and teams frequently report UI failures and version incompatibilities.

- Who Should Care: ML engineers and platform leads who need a modular stack where they can swap out components (like the orchestrator or registry) without breaking the entire workflow.

- What to Expect: A breakdown of 10 tools ranging from lightweight trackers like MLflow and Comet to heavy-duty orchestrators like Flyte and Kubeflow.

Why You Might Need a ClearML Alternative

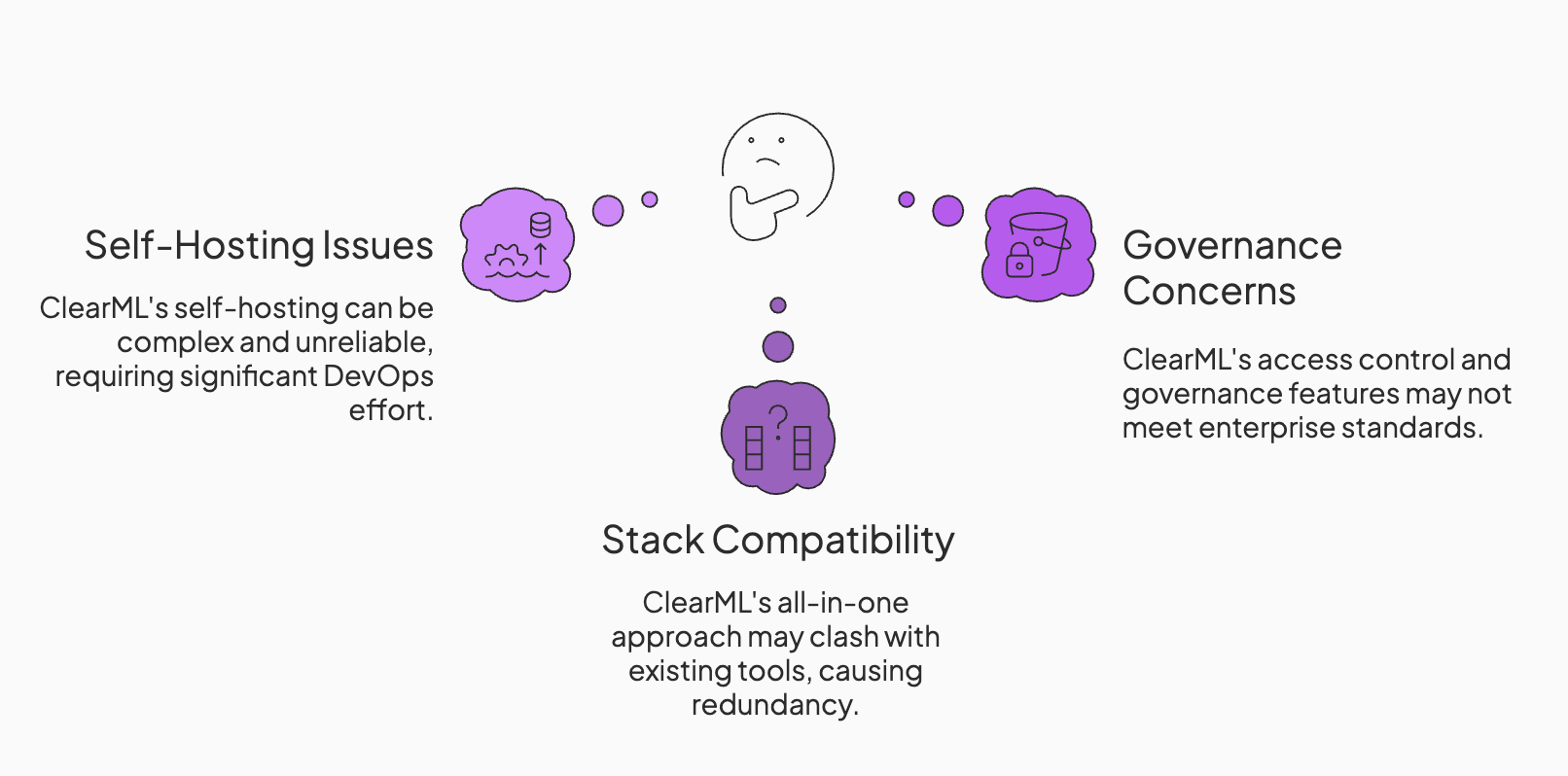

ClearML is a powerful suite, but its monolithic design creates bottlenecks in production. The main ones are:

1. Self-Hosting Friction and Reliability Issues

ClearML is a self-hostable, multi-service server stack comprising an API server, web app, file store, database, and cache, typically deployed via Docker.

This works fine for a POC. But when you need high availability, security patching, or upgrades, this ‘simple’ self-hosting turns into a major DevOps task. Common symptoms that push teams to alternatives include:

- Inadequate support for Redis deployment

- Users report UI failures where important tabs, such as Scalars and Debug Samples, suddenly stop loading

- Upgrades can break login and authentication mechanisms in self-hosted instances.

2. An All-in-One Approach Clashes with Existing Stacks

ClearML markets itself as a unified platform for tracking, pipelines, agents, and datasets.

But most mature engineering teams already have a preferred stack. You likely use Airflow or Dagster for orchestration, S3 for storage, and maybe a separate tool for labeling. You don't want a platform that tries to replace all of them poorly.

We see many teams using ClearML strictly for tracking while forcing it to work alongside tools like Metaflow or DVC. This overlap creates confusion and redundancy.

3. Governance and Enterprise Controls Become a Deciding Factor

As your AI team and data grow, ‘good enough’ access control is not acceptable. You need Role-Based Access Control (RBAC) that actually works.

ClearML’s enterprise release notes frequently mention fixes for RBAC behavior. That frequency raises questions about the stability of its access controls.

For regulated industries or large enterprises, you need SSO, strict multi-tenancy, and audit logs that are robust by default. Alternatives often treat these governance features as core requirements, not add-ons.

Evaluation Criteria

To help you choose the right tool, we evaluated each alternative against four criteria critical to deploying AI agents and models.

- Core tracking capability: Can it capture what we debug every day? We looked for the ability to log metrics, parameters, and artifacts, capture code and environments, and compare runs across branches.

- Artifact and data handling: How does the tool manage large datasets and model weights over time? A strong ClearML alternative should either provide its own storage layer or integrate cleanly with cloud object storage and Git-like data versioning systems. Beyond storage, it should support dataset and artifact versioning with clear lineage tracking so teams can trace which data, code, and parameters produced a given model and reproduce results as pipelines evolve.

- Model registry and lineage: Can you trace a deployed agent back to the exact code and data that trained it? This means tracking model versions, associating them with the experiments that produced them, and recording lineage.

- Orchestration and execution model: Does it have a built-in pipeline scheduler or execution engine? If not, can it integrate with external orchestrators or cloud workflow engines?

What are the Top Alternatives to ClearML?

Here are the 10 best tools to replace or augment your ClearML stack.

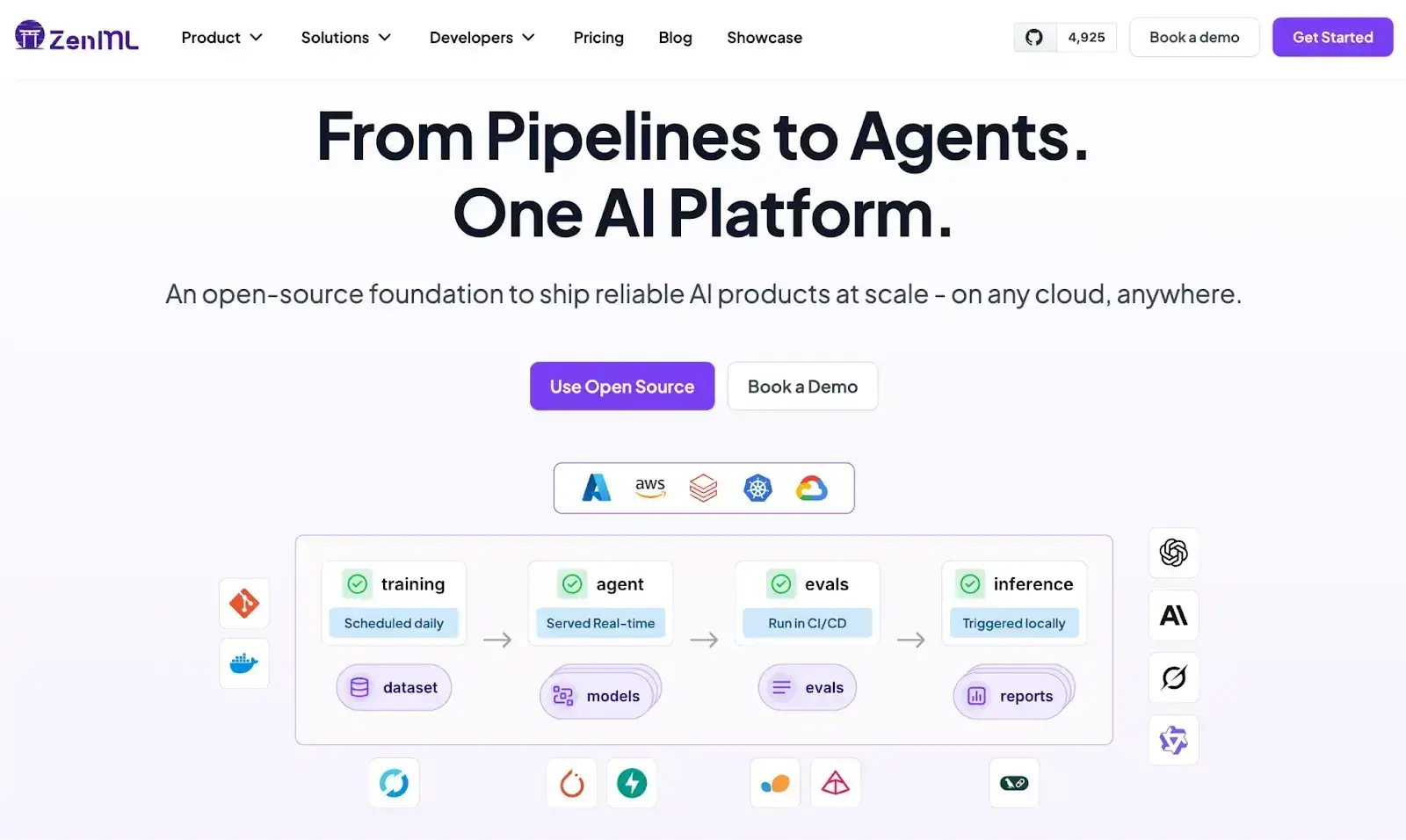

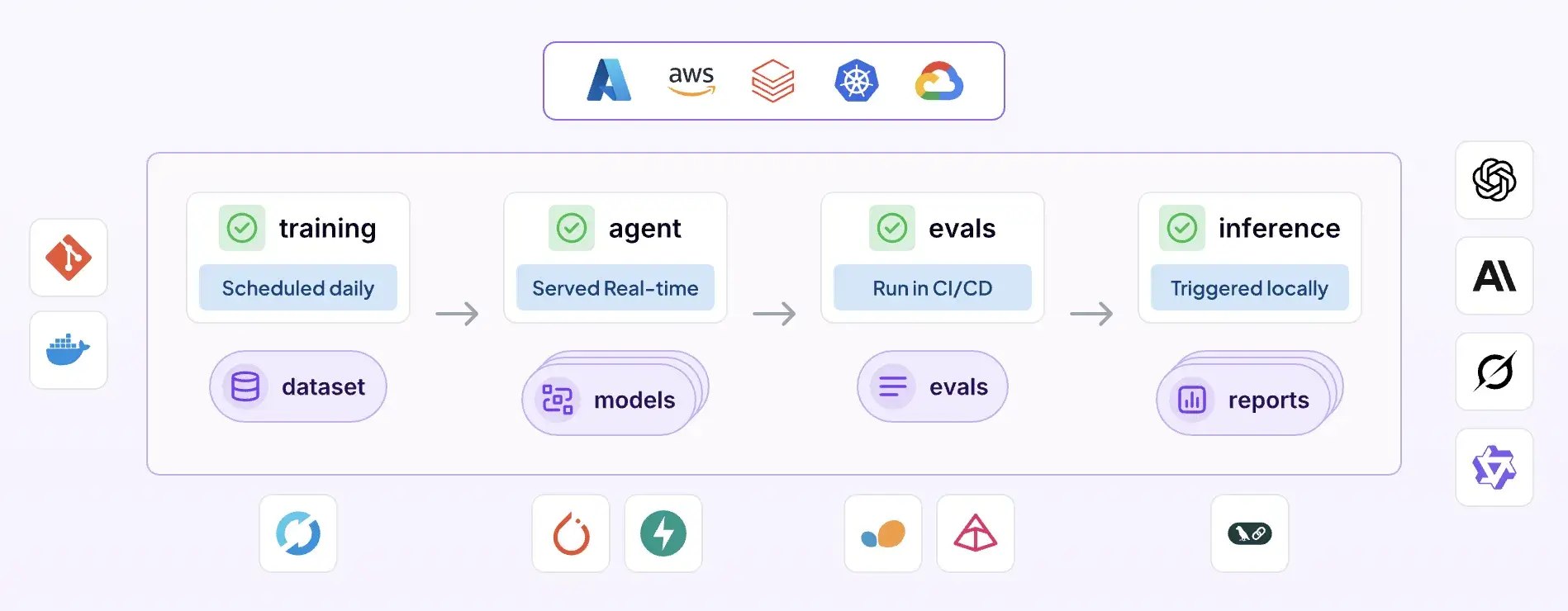

1. ZenML

ZenML is a strong ClearML alternative when you want the benefits of an MLOps platform without being locked into a single ‘do-it-all’ stack that fights your existing tools. ClearML’s unified approach can create friction in mature setups and can become a self-hosting and upgrade burden over time.

ZenML flips that model. It gives you one consistent way to build and run pipelines while letting you plug in best-in-class tools for tracking, orchestration, storage, and deployment as your needs evolve.

Key Feature 1. Stack Decoupling (Swap Components without Rewiring Everything)

ZenML uses a “stack” concept where each capability (orchestrator, experiment tracker, artifact store, model deployer, and more) is a replaceable component. That means you can keep your current tracker, or move from one orchestrator to another, without rebuilding your workflow around a new platform UI.

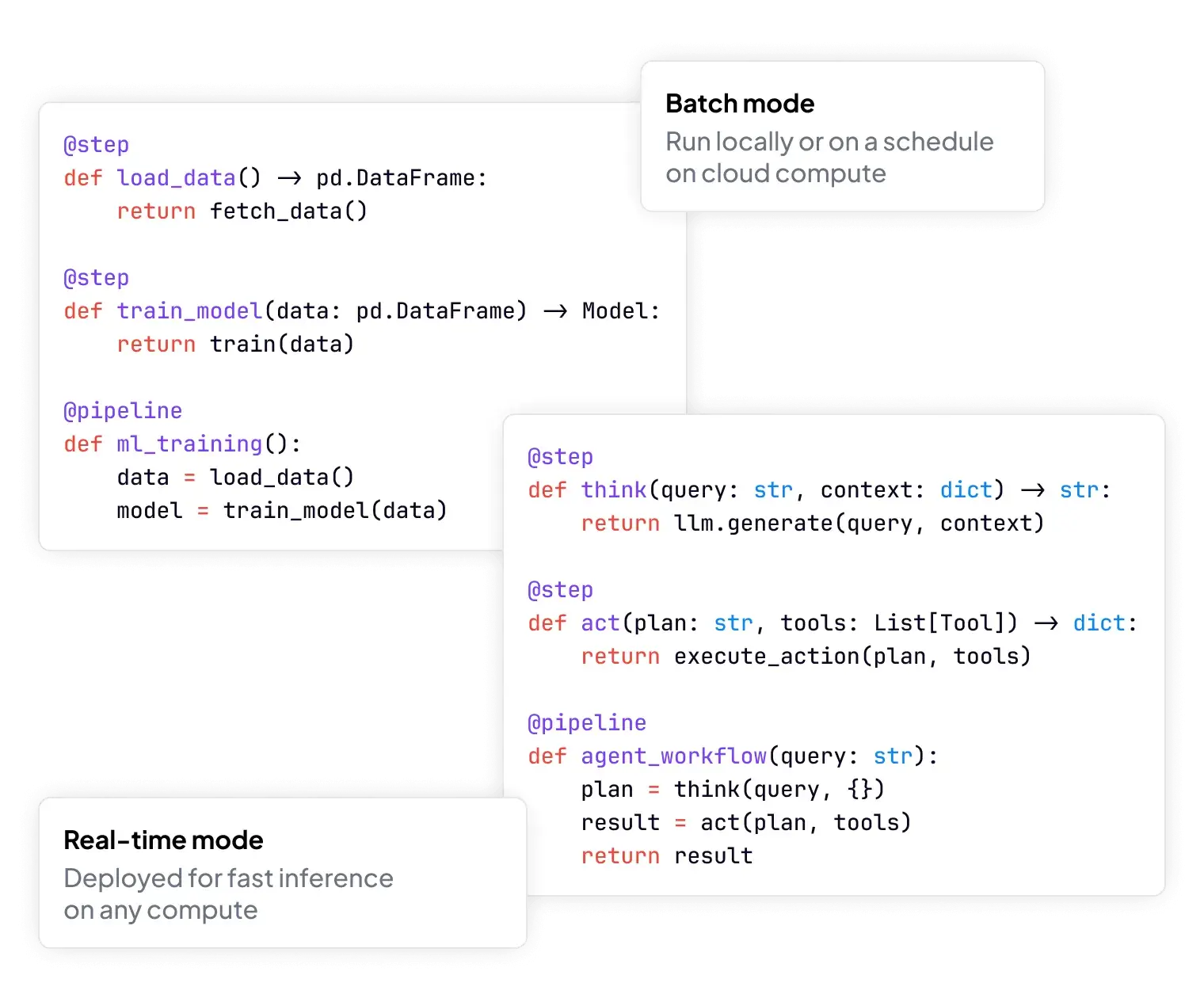
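To make the stack idea concrete, here is a minimal sketch in plain Python. It is not ZenML's API: the `Tracker` protocol, the two tracker classes, the `Stack` dataclass, and `train` are all hypothetical names invented for illustration. The point it shows is that pipeline code depends only on an interface, so swapping one component does not touch the training logic.

```python
from dataclasses import dataclass, replace
from typing import Protocol


class Tracker(Protocol):
    """Interface every experiment tracker in this sketch must satisfy."""

    def log_metric(self, name: str, value: float) -> None: ...


class PrintTracker:
    """Hypothetical tracker that prints metrics to stdout."""

    def log_metric(self, name: str, value: float) -> None:
        print(f"{name}={value}")


class MemoryTracker:
    """Hypothetical tracker that keeps metrics in a dict."""

    def __init__(self) -> None:
        self.metrics: dict[str, float] = {}

    def log_metric(self, name: str, value: float) -> None:
        self.metrics[name] = value


@dataclass(frozen=True)
class Stack:
    """A named bundle of swappable components (only a tracker here)."""

    name: str
    tracker: Tracker


def train(stack: Stack) -> float:
    # Pipeline code talks to the Tracker interface, never to a concrete tool.
    accuracy = 0.9  # placeholder value, not a real training result
    stack.tracker.log_metric("accuracy", accuracy)
    return accuracy


local_stack = Stack(name="local", tracker=PrintTracker())
memory_tracker = MemoryTracker()
# Swap a single component; train() itself is unchanged.
test_stack = replace(local_stack, name="test", tracker=memory_tracker)

train(local_stack)
train(test_stack)
```

In a real setup the components would be actual tools such as an orchestrator or artifact store, but the design choice is the same: the workflow binds to roles, not to vendors.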

Key Feature 2. Pipeline Orchestration with Reproducibility and Caching Built In

ZenML turns normal Python into reproducible pipelines with a clean step and pipeline abstraction. You get a smooth local development loop, then the same pipeline can run on remote backends when you need scale. ZenML also supports caching, so repeated work can be skipped when inputs do not change.

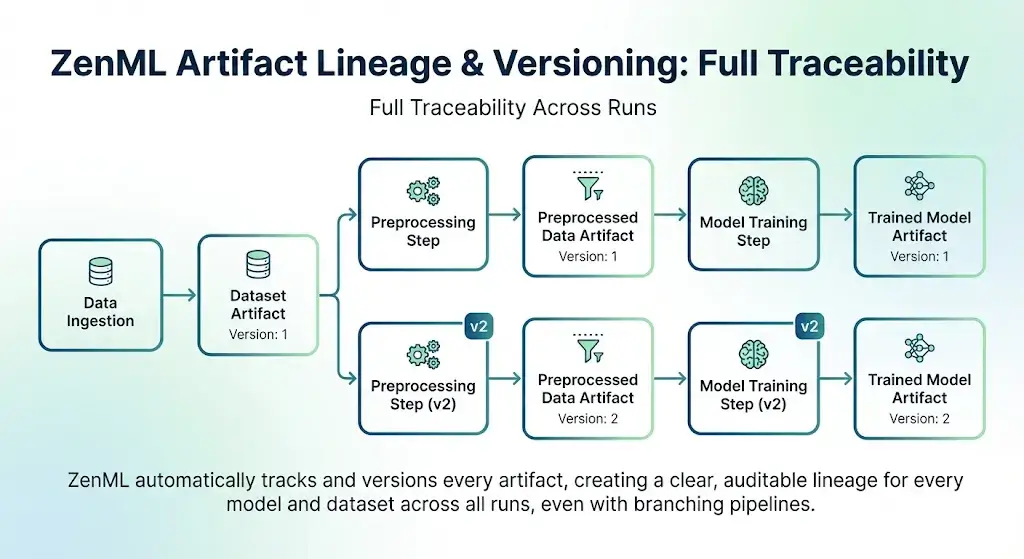
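The caching behavior described above can be sketched with the standard library alone. This is an illustration of the general technique, not ZenML's implementation: the `cached_step` decorator, the in-memory `_cache`, and the choice to key on the function's bytecode plus its JSON-serialized inputs are all assumptions made for this example.

```python
import hashlib
import json
from typing import Any, Callable

_cache: dict[str, Any] = {}
run_counter = {"preprocess": 0}  # counts real executions, for demonstration


def cached_step(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Skip re-running fn when its code and keyword inputs are unchanged."""

    def wrapper(**inputs: Any) -> Any:
        key_material = json.dumps(
            {
                "step": fn.__qualname__,
                "code": fn.__code__.co_code.hex(),
                "inputs": inputs,
            },
            sort_keys=True,
        )
        key = hashlib.sha256(key_material.encode()).hexdigest()
        if key not in _cache:
            _cache[key] = fn(**inputs)  # cache miss: actually run the step
        return _cache[key]

    return wrapper


@cached_step
def preprocess(rows: list[int], scale: int) -> list[int]:
    run_counter["preprocess"] += 1
    return [r * scale for r in rows]


first = preprocess(rows=[1, 2, 3], scale=2)   # runs the step
second = preprocess(rows=[1, 2, 3], scale=2)  # same inputs: served from cache
third = preprocess(rows=[1, 2, 3], scale=3)   # changed input: runs again
```

A production system would persist the cache and also account for upstream artifacts and configuration, but the core decision is the same: hash what went in, and reuse the output if nothing changed.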

Key Feature 3. Artifact Lineage and Versioning as a Default Behavior

ZenML tracks artifacts produced by each step and versions them, along with rich metadata about inputs and outputs. This gives you traceability across datasets, models, and evaluation results, with less manual glue code.

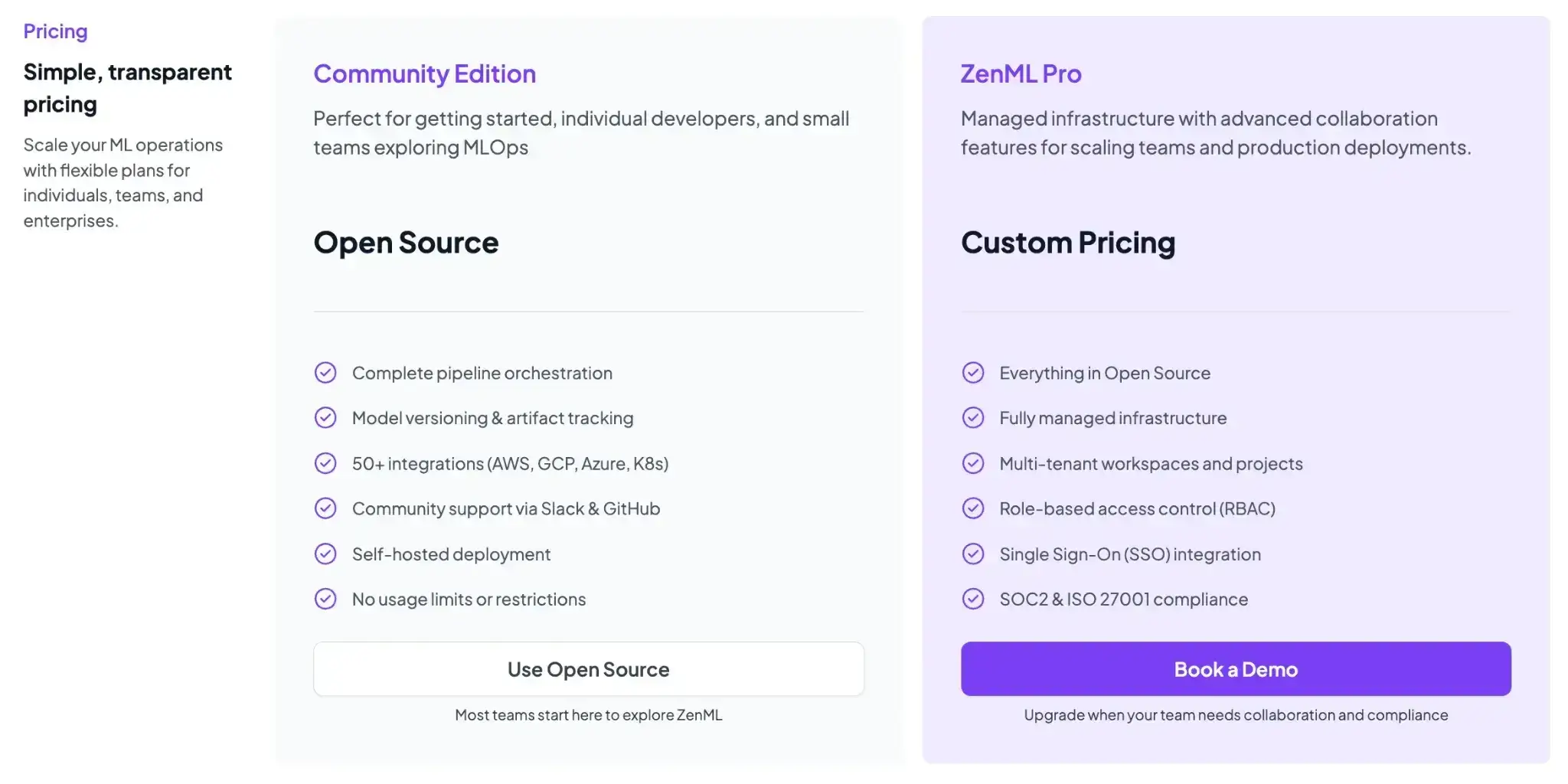
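As a rough mental model for artifact lineage, consider the sketch below. It is a toy, not ZenML's data model: `ArtifactVersion`, `LineageStore`, and the step names are hypothetical. Each recorded artifact gets an incrementing version and remembers which step produced it and which artifacts it was built from, so any model can be traced back to its inputs.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class ArtifactVersion:
    name: str
    version: int
    produced_by: str
    parents: tuple["ArtifactVersion", ...] = ()


class LineageStore:
    """Toy store that versions artifacts and remembers their inputs."""

    def __init__(self) -> None:
        self._versions: dict[str, list[ArtifactVersion]] = {}

    def record(
        self,
        name: str,
        produced_by: str,
        parents: Iterable[ArtifactVersion] = (),
    ) -> ArtifactVersion:
        history = self._versions.setdefault(name, [])
        artifact = ArtifactVersion(
            name, len(history) + 1, produced_by, tuple(parents)
        )
        history.append(artifact)
        return artifact

    def trace(self, artifact: ArtifactVersion) -> list[str]:
        """List every upstream artifact as 'name:vN', nearest first."""
        lineage: list[str] = []
        queue = list(artifact.parents)
        while queue:
            parent = queue.pop(0)
            lineage.append(f"{parent.name}:v{parent.version}")
            queue.extend(parent.parents)
        return lineage


store = LineageStore()
raw = store.record("raw_data", produced_by="load_data")
features = store.record("features", produced_by="preprocess", parents=[raw])
model = store.record("model", produced_by="train", parents=[features])
retrained = store.record("model", produced_by="train", parents=[features])

print(store.trace(model))
```

The value of having this as default behavior is that nobody has to remember to call `record`; in the sketch it is explicit only to keep the mechanics visible.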

Pricing

ZenML is free and open-source (Apache 2.0). You can self-host the core framework at no cost. For teams that want a managed control plane or advanced enterprise features, ZenML offers paid plans with custom pricing.

Pros and Cons

ZenML is a better fit than ClearML when you want orchestration plus lineage, but you do not want a monolithic platform dictating your tracker, storage, or runtime choices. Its stack approach also makes tool swaps and platform changes far less painful as your team grows.

If you want one vendor UI that does everything, ZenML’s modular approach can feel like “more decisions,” since you choose which stack components you standardize on.

2. MLflow

MLflow is the industry standard for experiment tracking and model lifecycle management. It doesn’t aim for full pipeline orchestration like ClearML; instead, MLflow focuses on core tracking, model registry, and reproducibility in a lightweight manner.

Features

- Log LLM and agent traces (spans, steps, inputs/outputs) and related metrics using MLflow’s GenAI tracing features, then browse and compare traces in the MLflow UI.

- Manage model versions through a centralized Model Registry that handles staging, production, and archiving transitions.

- Package agents for deployment using standardized formats (like the PyFunc flavor), ensuring models run consistently across Docker, Conda, or Databricks.

- Compare experiment runs with a simple UI that highlights differences in hyperparameters and performance metrics.
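Run comparison is easy to picture with a small example. The sketch below does not use MLflow; `diff_params` and the two parameter dictionaries are hypothetical, and they only illustrate the kind of "what changed between these runs" view a tracking UI gives you.

```python
from typing import Any


def diff_params(
    run_a: dict[str, Any], run_b: dict[str, Any]
) -> dict[str, tuple[Any, Any]]:
    """Return only the parameters whose values differ between two runs."""
    keys = sorted(set(run_a) | set(run_b))
    return {
        key: (run_a.get(key), run_b.get(key))
        for key in keys
        if run_a.get(key) != run_b.get(key)
    }


baseline = {"lr": 0.01, "batch_size": 32, "optimizer": "adam"}
candidate = {"lr": 0.001, "batch_size": 32, "optimizer": "adam", "warmup_steps": 500}

changed = diff_params(baseline, candidate)
for name, (old, new) in changed.items():
    print(f"{name}: {old} -> {new}")
```

Parameters missing from one run show up as `None` on that side, which is usually what you want when a newer run introduces a setting the baseline never had.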

Pricing

MLflow is open-source and free to use. The platform lets you host it on your own infrastructure at no cost. Many cloud providers and MLOps services incorporate MLflow; for example, Databricks offers MLflow as a managed service.

Pros and Cons

MLflow’s ubiquity is its major pro. It has a massive community, endless tutorials, and integrates with almost everything MLOps-related. If you just need a solid place to dump logs and artifacts, it’s the safest bet. The new LLM evaluation features also make it viable for agent work.

On the downside, MLflow’s UI feels dated compared to newer tools. Setting up a highly available, secure MLflow server on-premises requires significant DevOps effort (database, artifact store, and auth). It also lacks built-in pipeline orchestration; it tracks runs but doesn't manage the execution order of complex workflows.

3. Weights & Biases (W&B)

Weights & Biases is a cloud-first experiment tracking platform. It has a slick, visually rich interface for tracking machine learning experiments in real time. As a ClearML alternative, W&B excels at experiment tracking and artifact management.

Features

- Visualize agent performance with interactive charts, system hardware metrics (GPU/CPU), and custom dashboards that update in real-time.

- Debug GenAI flows using W&B Weave, which traces LLM calls, providing a tree-view of agent reasoning, inputs, and outputs.

- Collaborate on reports by turning experiment data into shareable documents, allowing teams to discuss agent failures and improvements directly on the data.

- Sweep hyperparameters to automatically optimize your agent’s configuration across distributed infrastructure.

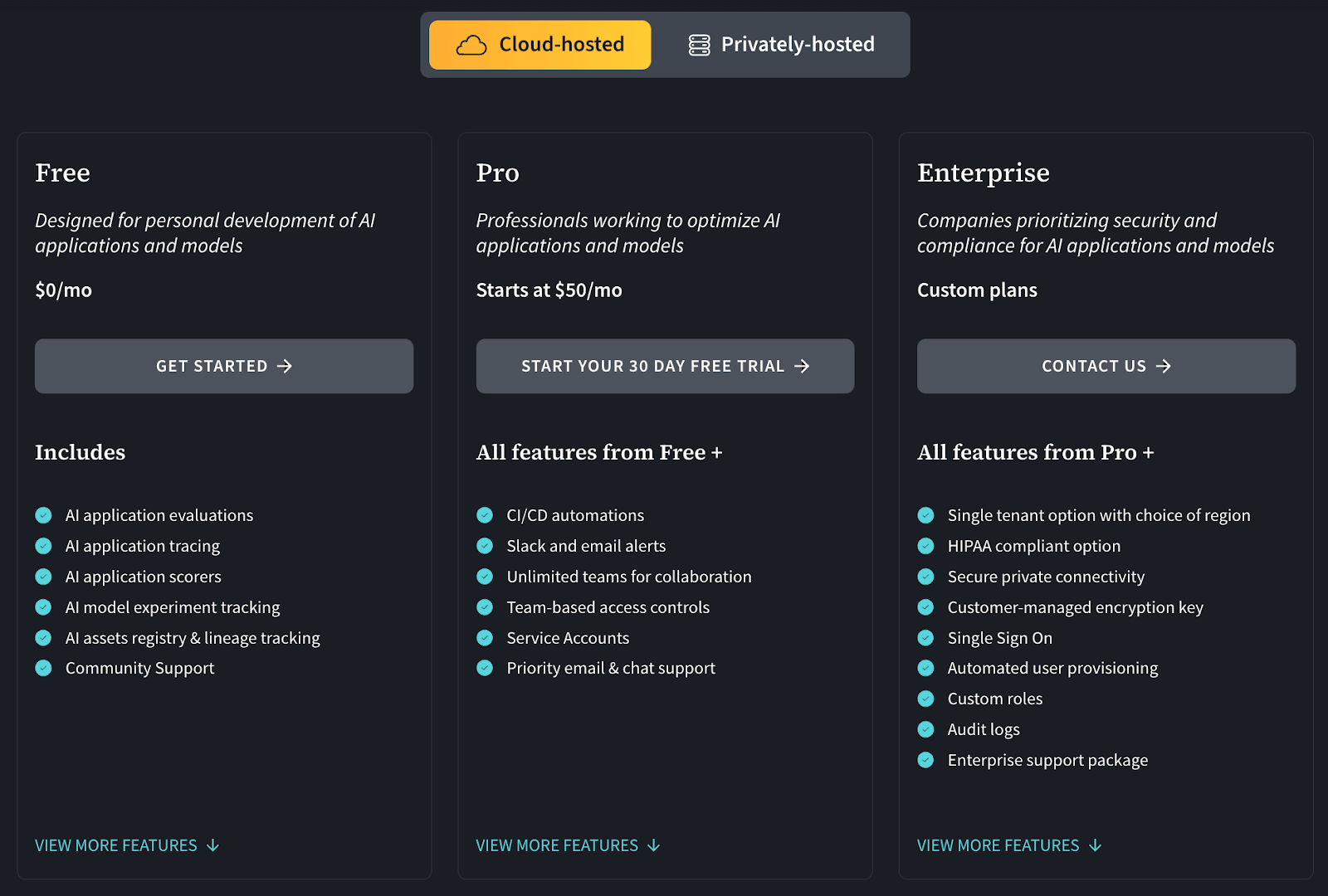
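If you have not used a sweep before, the idea is simply to try many configurations and keep the best one. The sketch below shows random search with the standard library. It is not the W&B Sweeps API: the `objective` function is a made-up stand-in for a validation loss, and the search ranges are arbitrary assumptions.

```python
import random
from typing import Any


def objective(lr: float, dropout: float) -> float:
    """Hypothetical stand-in for a validation loss; lower is better."""
    return (lr - 0.01) ** 2 + (dropout - 0.3) ** 2


def random_search(n_trials: int, seed: int = 0) -> list[tuple[float, dict[str, Any]]]:
    """Sample configurations at random and score each one."""
    rng = random.Random(seed)  # seeded so the search is repeatable
    trials = []
    for _ in range(n_trials):
        config = {
            "lr": 10 ** rng.uniform(-4, -1),   # log-uniform learning rate
            "dropout": rng.uniform(0.0, 0.5),
        }
        trials.append((objective(**config), config))
    return trials


trials = random_search(n_trials=20)
best_loss, best_config = min(trials, key=lambda trial: trial[0])
print(best_config, best_loss)
```

A hosted sweep adds what this sketch leaves out: distributing trials across machines, smarter search strategies, and a dashboard for the results.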

Pricing

W&B offers a free plan for personal AI app development projects, and two premium plans:

- Pro: $60/user per month

- Enterprise: Custom pricing

Pros and Cons

WandB offers the best developer experience in the market. The UI is beautiful, fast, and incredibly intuitive for comparing thousands of runs. For shipping agents, the Weave integration is a standout feature for debugging complex chains.

The catch is that WandB is primarily a SaaS product. While powerful, the cost escalates quickly for large teams. W&B offers self-managed (private/on-prem or your cloud) deployment options, but access and pricing are typically sales-led and depend on your plan and requirements.

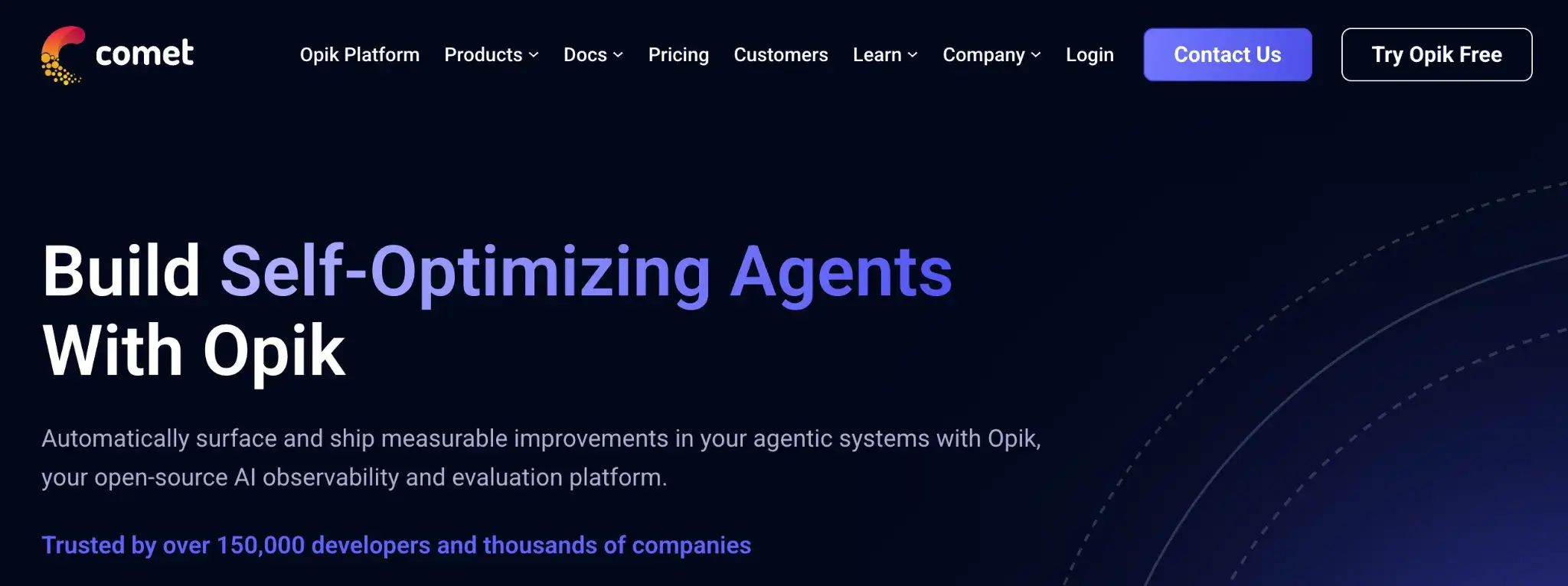

4. Comet

Comet is a direct competitor to WandB and ClearML, offering deep experiment tracking and a model registry. It differentiates itself with "Opik," a specialized module for evaluating and tracing LLM agents.

Features

- Log metrics, hyperparameters, stdout, and artifacts with a small comet_ml snippet; support offline runs for later sync.

- Monitor experiments in real time with live metric plots, system stats, and alerts when runs fail or hit certain thresholds.

- Compare runs side by side by overlaying metrics, checking parameter differences, and reviewing visual summaries like confusion matrices.

- Record code snapshots and notebooks for every run so you can audit, rerun, or debug the exact version that produced the results.

- Organize models and experiments using a built-in registry and workspace structure that links model versions to their originating runs.

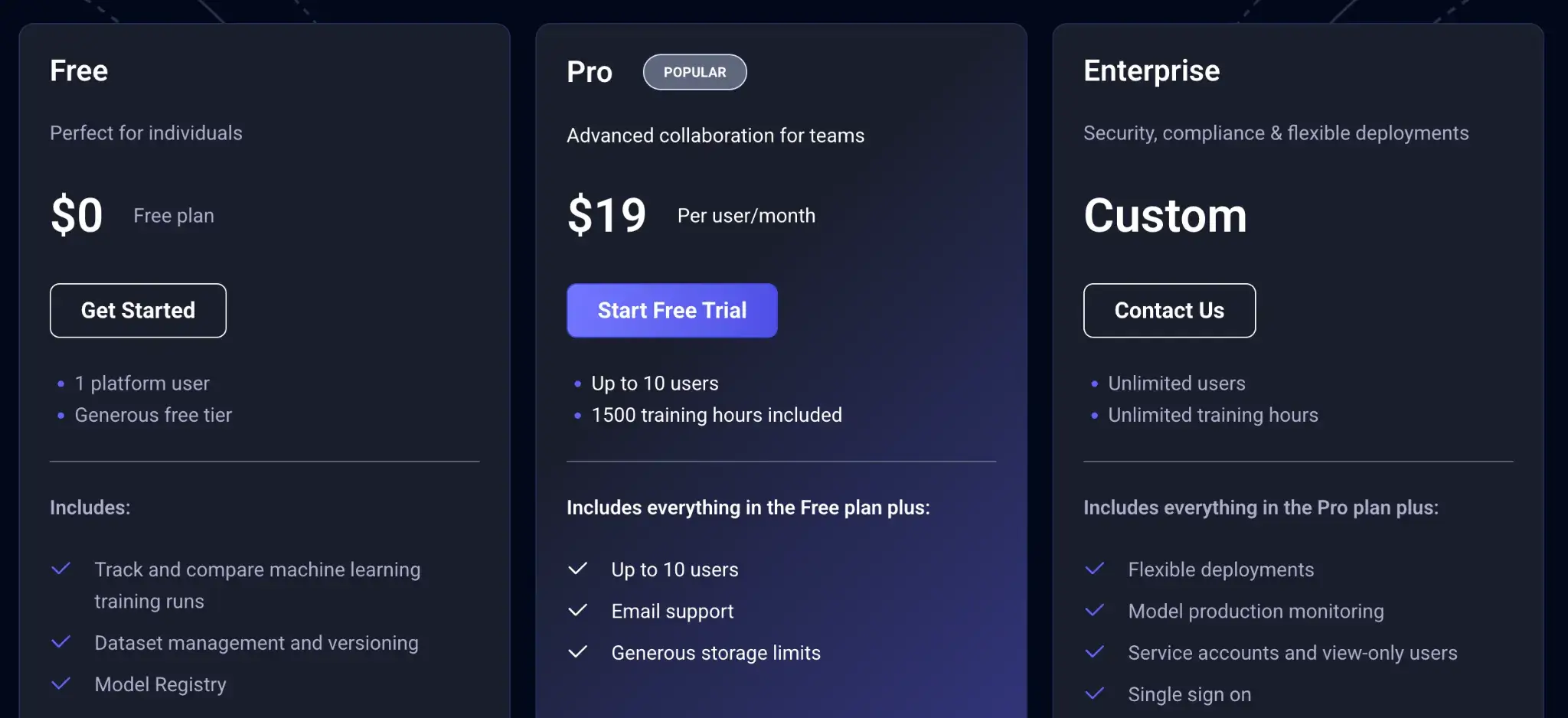

Pricing

For MLOps, Comet offers a free plan for individual use. Then it expands the offering for teams with two premium plans:

- Pro: $19/user per month

- Enterprise: Custom pricing

Pros and Cons

Comet is an enterprise-friendly alternative to ClearML. The UI is robust, and many users appreciate the rich comparisons, the level of detail, and the ability to customize dashboards. The addition of Opik makes it a strong contender for teams specifically focused on GenAI and agents.

However, the UI is dense and less intuitive than WandB’s. Some users find the configuration options overwhelming. It also suffers from the same limitation as other trackers: it monitors your work but doesn't orchestrate the compute or pipelines required to do the work.

5. DVC

DVC (Data Version Control) is an open-source tool that brings Git-like version control to machine learning assets and also provides simple pipeline management. It replaces ClearML’s dataset management with a command-line tool that feels exactly like Git, making it a favorite among engineers who work in the terminal.

Features

- Version control large datasets and agent models directly in Git, storing the actual files in S3, GCS, or Azure Blob Storage while keeping lightweight metadata in your repo.

- Reproduce experiments by tracking the exact combination of code, data, and hyperparameters used to train an agent.

- Create lightweight pipelines using a simple dvc.yaml file to define dependencies and outputs for your training stages.

- Share data securely across the team without setting up a complex database server; access is handled via your cloud provider’s IAM.
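The "lightweight metadata in your repo, real files elsewhere" idea from the first bullet can be sketched as content-addressed storage. The code below is an illustration of the general technique, not DVC's actual file format or cache layout: the `track` function, the `.pointer.json` suffix, and the use of SHA-256 are assumptions made for this example.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def track(data_file: Path, cache_dir: Path) -> Path:
    """Copy data_file into a hash-addressed cache and write a small pointer file."""
    content = data_file.read_bytes()
    digest = hashlib.sha256(content).hexdigest()
    cached = cache_dir / digest[:2] / digest[2:]
    cached.parent.mkdir(parents=True, exist_ok=True)
    if not cached.exists():
        cached.write_bytes(content)  # identical content is stored only once
    # The pointer is tiny, so it is the part you would commit to Git.
    pointer = data_file.with_name(data_file.name + ".pointer.json")
    pointer.write_text(json.dumps({"path": data_file.name, "sha256": digest}))
    return pointer


with tempfile.TemporaryDirectory() as tmp:
    workdir = Path(tmp)
    cache = workdir / "cache"
    dataset = workdir / "train.csv"

    dataset.write_text("id,label\n1,cat\n2,dog\n")
    pointer_v1 = json.loads(track(dataset, cache).read_text())

    dataset.write_text("id,label\n1,cat\n2,dog\n3,bird\n")
    pointer_v2 = json.loads(track(dataset, cache).read_text())

    cached_objects = sorted(p.name for p in cache.rglob("*") if p.is_file())

print(pointer_v1["sha256"][:8], pointer_v2["sha256"][:8], len(cached_objects))
```

Because each dataset version is stored under its own hash, checking out an older pointer file is enough to know exactly which bytes to fetch from the cache or remote storage.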

Pricing

DVC is completely free and open source (MIT License). You can use all its features without any license fees. The only potential costs are the storage for your data, since you’ll likely use cloud storage to store your versioned datasets/models.

Pros and Cons

The primary strength of DVC is robust version control for data and models. It addresses a problem that ClearML also tries to handle, but does so in a highly transparent, storage-agnostic way. It fits perfectly into a GitOps workflow. If you want to "Git checkout" your entire model and dataset state, DVC is the tool.

On the flip side, DVC lacks the built-in UI and real-time monitoring that ClearML provides. You won’t get a fancy dashboard without an add-on like DVC’s Studio or manually visualizing logs. It’s also more hands-on; you need to structure your project for DVC, write the pipeline stages, manage remote storage, etc. That overhead pays off in rigorous reproducibility, but it’s a bit of a learning curve.

6. DagsHub

DagsHub is like a ‘GitHub for Machine Learning.’ It wraps DVC, MLflow, and Git into a single platform so an ML team can see all project components together, with a zero-setup backend.

Features

- Host MLflow and DVC remotes automatically for every project, allowing you to log agent metrics and version data without configuring your own servers.

- Visualize data and models directly in the browser, including diffing notebooks, images, and datasets to understand changes in agent behavior.

- Collaborate on experiments with a social-coding interface that includes pull requests, issue tracking, and discussions around specific model versions.

- Annotate data using the built-in Label Studio integration to create ground truth datasets for evaluating your agents.

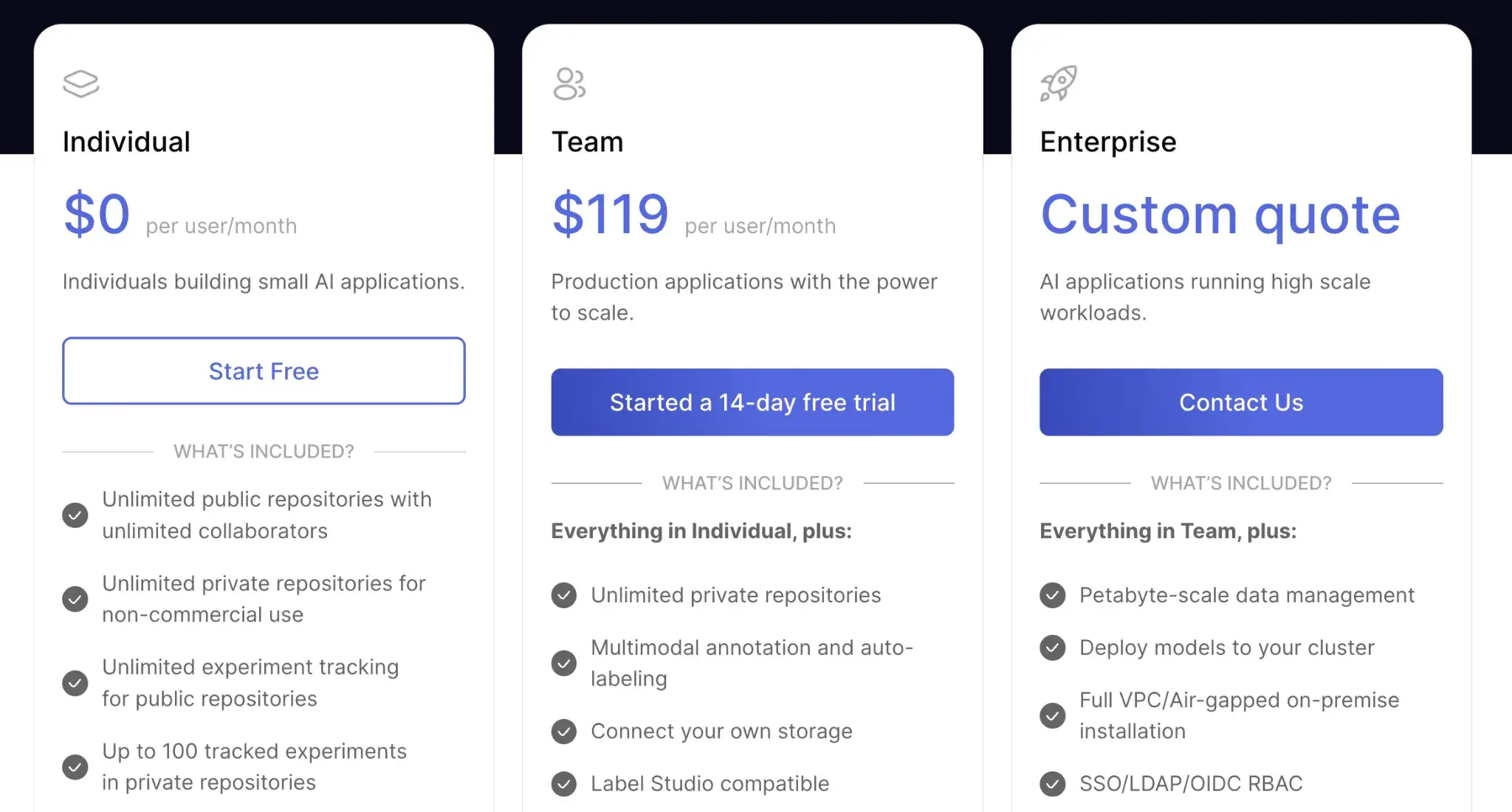

Pricing

DagsHub offers a generous free plan with unlimited public repositories and collaborators. Other than that, it has two paid plans:

- Team: $119 per user per month

- Enterprise: Custom pricing

Pros and Cons

DagsHub’s main strength is convenience through unification. It’s one of the few platforms that cover the whole "Modern Data Stack." You get remote DVC storage and an MLflow server instantly. This is a massive time-saver for small to mid-sized teams who don't want to manage infrastructure.

On the flip side, because DagsHub does a bit of everything, it might not be as specialized or powerful in each domain. It is also an aggregator of tools rather than a standalone framework, so you are still reliant on the underlying mechanics of DVC and MLflow.

And because it’s cloud-first, cost could become an issue if you use a lot of storage or have a big team.

7. Flyte

Flyte is a Kubernetes-native workflow orchestrator designed for machine learning and data processing pipelines. Think of it as a more specialized alternative to something like Airflow or Kubeflow Pipelines.

Features

- Define workflows in Python using typed tasks that make data dependencies clear and enforce structure.

- Run each task in an isolated Kubernetes container that scales automatically and parallelizes heavy compute.

- Pass data and artifacts between tasks without manual handling through automatic storage and versioning.

- Reproduce any pipeline by using versioned workflows and stored execution metadata for parameters and outputs.

- Inspect runs through a web console or CLI to view statuses, logs, and outputs for each task.
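To see why typed tasks matter, here is a small sketch of checking task wiring from type hints before anything runs. It does not use Flyte's SDK: `check_wiring` and the three example functions are hypothetical, and a real orchestrator performs far richer checks. The idea it illustrates is that mismatched inputs and outputs can be caught when the workflow is assembled rather than hours into a run.

```python
from typing import Callable, get_type_hints


def check_wiring(upstream: Callable, downstream: Callable, param: str) -> None:
    """Raise TypeError if upstream's return type differs from downstream's input type."""
    produced = get_type_hints(upstream).get("return")
    expected = get_type_hints(downstream).get(param)
    if produced != expected:
        raise TypeError(
            f"{upstream.__name__} returns {produced}, "
            f"but {downstream.__name__}({param}) expects {expected}"
        )


def load_rows() -> list[float]:
    return [1.0, 2.0, 3.0]


def mean(values: list[float]) -> float:
    return sum(values) / len(values)


def describe(label: str) -> str:
    return label.upper()


check_wiring(load_rows, mean, "values")  # types line up, so no error
result = mean(load_rows())

try:
    check_wiring(load_rows, describe, "label")  # list[float] into str: rejected
    wiring_error = None
except TypeError as exc:
    wiring_error = str(exc)

print(result, wiring_error)
```

The check here is a strict equality on annotations, which is deliberately simplistic; it is enough to show how declared types turn a runtime surprise into an upfront error.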

Pricing

Flyte is fully open-source under Apache 2.0. You can deploy Flyte on your Kubernetes cluster at no cost. The primary cost comes after that, when you need to actually run and maintain the framework.

Pros and Cons

Flyte is engineering-heavy. It’s among the most advanced orchestration systems specifically tailored for ML workflows. For production-grade agent systems running at scale, the strong typing and versioning bring confidence in reproducibility.

The con is the barrier to entry. Flyte is complex to set up and manage if you don't have a dedicated platform team. It requires a Kubernetes cluster and strong DevOps knowledge. If you don’t need that level of complexity, it might be too heavyweight a replacement.

8. Kubeflow

Kubeflow is a Kubernetes-native ML platform that integrates pipelines, distributed training, hyperparameter tuning, and model serving into a single system. It’s powerful for teams already invested in Kubernetes and looking for a full-stack, open-source alternative to ClearML that scales across the entire ML lifecycle.

Features

- Orchestrate pipelines as containerized steps that run on Argo and track artifacts, logs, and metrics across each run.

- Launch Jupyter notebooks on cluster resources so users can request GPUs and work inside the same environment as production workloads.

- Scale training jobs with built-in operators like TFJob and PyTorchJob that handle distributed workers and parameter servers.

- Tune hyperparameters through Katib, which explores parameter search spaces across many training runs using multiple optimization strategies.

- Serve models with KServe, deploying them as autoscaling endpoints that support canary rollouts and multiple ML frameworks.

Pricing

Kubeflow is open source and free to use. There’s no license cost. Typically, you’d run Kubeflow on a Kubernetes cluster, either on-prem or on cloud, so you’ll pay for the VMs/containers and any managed K8s fees.

Pros and Cons

Kubeflow is the standard for cloud-native MLOps. If your organization is already 100% on Kubernetes, Kubeflow fits naturally into your environment. It offers a standardized way to do ML across hybrid and multi-cloud environments.

However, Kubeflow is notoriously difficult to install and maintain. It often feels like a collection of loosely coupled tools rather than a cohesive platform. The overall user experience is disjointed compared to ClearML.

9. Metaflow

Metaflow is an open-source framework. It was initially developed at Netflix to help scientists build and manage real-world data science workflows. It allows you to define workflows in a Python script using a few decorators and handles the execution of each step either locally or on the cloud.

Features

- Define flows in plain Python so you express branches, loops, and joins without YAML or container plumbing.

- Automatically store data artifacts at every step, allowing you to resume runs or inspect past outputs without additional tracking code.

- Scale heavy steps to AWS Batch or Kubernetes with a single decorator that packages, submits, and returns results for you.

- Query run metadata through the client API to inspect parameters, artifacts, and history directly from notebooks or scripts.

- Capture Git commits and environment details automatically so every flow run is reproducible and fits cleanly into CI/CD.

Pricing

Metaflow is open source and free to use. Netflix open-sourced it under the Apache 2.0 license.

Pros and Cons

Metaflow’s key advantage is developer friendliness. Compared to ClearML, which provides components you assemble, Metaflow is built to make it easy for data scientists to move from prototype to production.

Its weakness is that it is primarily an orchestrator. It relies on other tools for experiment tracking (though it has some built-in metadata) and model registry features. You will likely need to pair it with a tool like WandB or ZenML to provide a complete ClearML alternative.

10. Dagster

Dagster is a modern orchestrator built to run data and ML pipelines with clear structure, strong observability, and versioned assets. Its Python-first design and rich UI make it easy to debug, track lineage, and manage complex workflows across teams.

Features

- Define pipelines and assets in Python with typed inputs and outputs that make dependencies clear and maintainable.

- Inspect runs in the Dagster UI (formerly Dagit) using real-time logs, graphs, and lineage views to quickly debug failures.

- Schedule pipelines with sensors, cron-like triggers, and partitions to keep retraining or data-refresh jobs on track.

- Connect to external systems through resources that centralize configuration for databases, storage, and ML tooling.

- Deploy workflows through Dagster Cloud if you prefer a hosted control plane that simplifies team operations.
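The asset-centric model is easier to grasp with a toy version. The sketch below is not Dagster's implementation: the `asset` decorator, the `ASSETS` registry, and `materialize_all` are hypothetical stand-ins written with the standard library. It mirrors one idea, that an asset's upstream dependencies can be inferred from its function parameter names and then executed in dependency order.

```python
import inspect
from graphlib import TopologicalSorter
from typing import Any, Callable

ASSETS: dict[str, Callable[..., Any]] = {}


def asset(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register fn as an asset; its parameter names are its upstream assets."""
    ASSETS[fn.__name__] = fn
    return fn


@asset
def raw_rows() -> list[int]:
    return [3, 1, 2]


@asset
def cleaned_rows(raw_rows: list[int]) -> list[int]:
    return sorted(raw_rows)


@asset
def row_count(cleaned_rows: list[int]) -> int:
    return len(cleaned_rows)


def materialize_all() -> dict[str, Any]:
    """Compute every registered asset, upstream assets first."""
    graph = {
        name: set(inspect.signature(fn).parameters)
        for name, fn in ASSETS.items()
    }
    values: dict[str, Any] = {}
    for name in TopologicalSorter(graph).static_order():
        inputs = {dep: values[dep] for dep in graph[name]}
        values[name] = ASSETS[name](**inputs)
    return values


materialized = materialize_all()
print(materialized)
```

Thinking in assets rather than tasks is what makes lineage views natural: the graph describes what exists and what it was derived from, not just what ran.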

Pricing

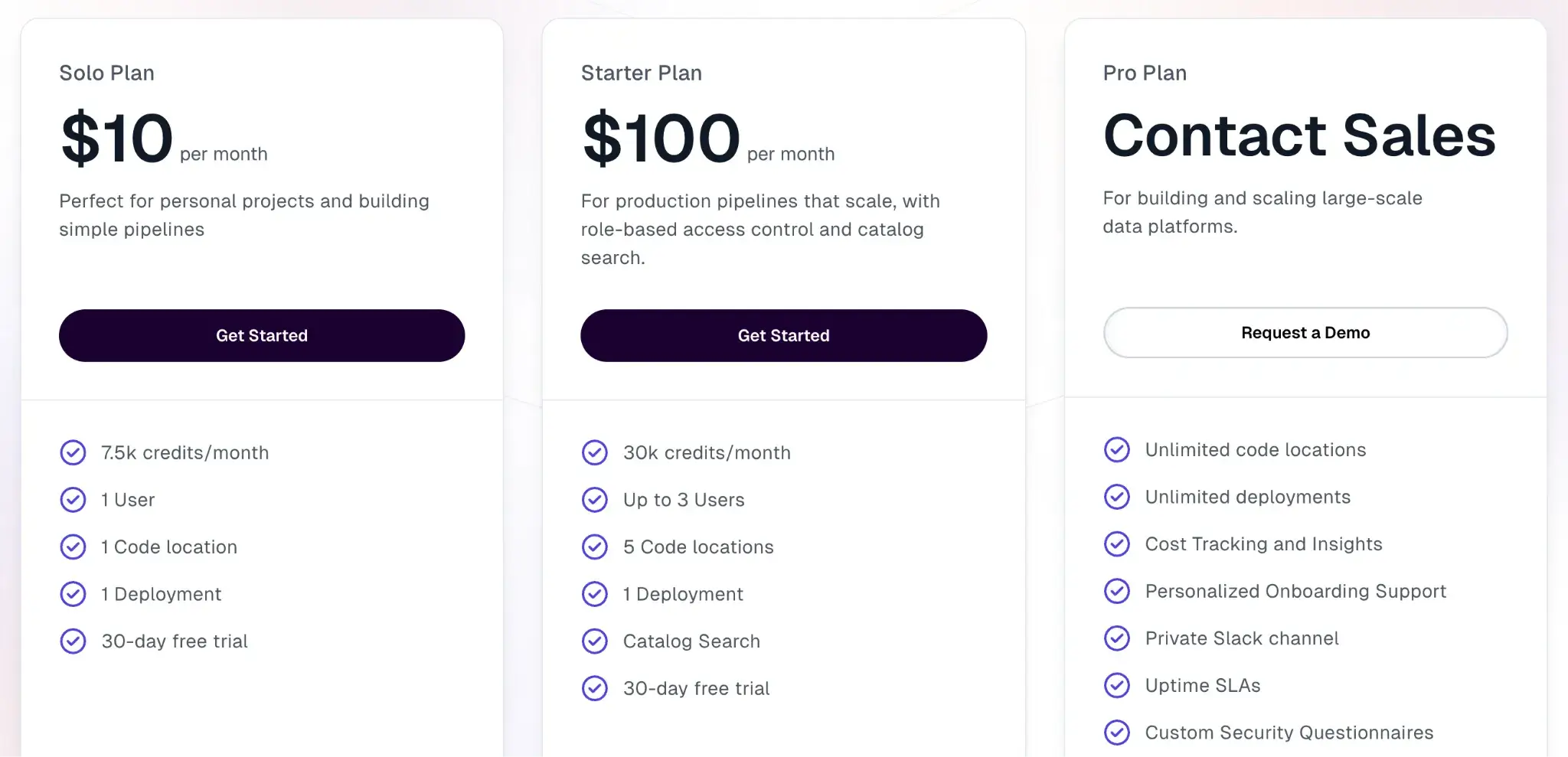

Dagster offers a 30-day free trial. It has three pricing models:

- Solo plan: $10 per month

- Starter plan: $100 per month

- Pro plan: Custom pricing

Pros and Cons

Dagster brings a modern, refreshing approach to orchestration. Its focus on "assets" aligns well with ML, where the model and the dataset are the products. The UI is excellent, and the developer experience is top-tier.

However, Dagster is not an MLOps-first platform. It lacks native experiment-tracking visualizations (e.g., loss curves) available in ClearML. You would use Dagster to run the pipeline, but still need a separate tool to track the specific metrics of your AI agents.

The Best ClearML Alternatives to Track Experiments and Build ML Pipelines

Choosing a ClearML alternative depends on which part of ClearML’s ‘all-in-one’ promise failed you.

- For Experiment Tracking: Choose WandB or Comet. They offer superior visualization and debugging for agents without the overhead of a full platform.

- For Data Versioning: Choose DVC. It separates data concerns from code, perfect for teams managing large datasets for agents.

- For Robust Orchestration: Choose ZenML or Dagster. They provide the scalability and reliability needed for production pipelines.

Choose ZenML if you want a single MLOps framework that you can layer on top of ClearML and combine with the other MLOps tools you already use.

ZenML’s architecture is built to integrate ClearML's UI with the freedom to use best-in-class tools like Airflow for orchestration and MLflow for tracking. With ZenML, you own your stack, your data, and your workflow.